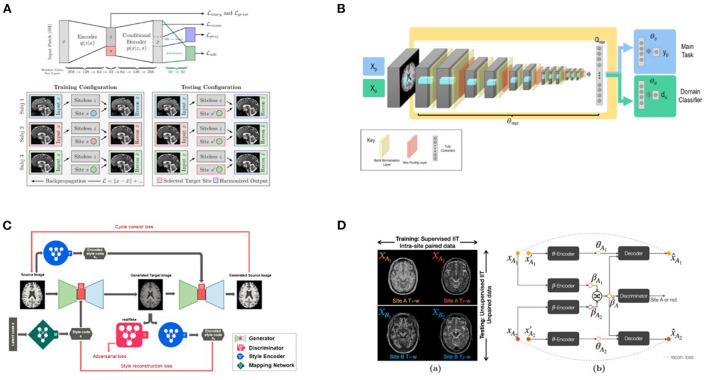

Figure 4.

Four GAN-based deep learning harmonization models. (A) The network architecture in Moyer et al. (41). Invariant representations are learned with an encoder/decoder architecture that maps to and from the images, with a one-hot vector representing the protocol identifier. (B) General network architecture in (67). The network is formed of three sections, each with its own parameters: the feature extractor, the label predictor, and the domain classifier. The domain-invariant features produced by the feature extractor, which are used for domain-invariant label prediction, are learned by confusing the domain classifier. One set of input data, with its task labels, is used to train the main task; a second set, with scanner labels d, is used to train the steps involved in unlearning the scanner. (C) The architecture of the style-encoding GAN (44). Generators learn to generate images from a source image and a style code. The anatomy of the brain MRI is preserved using a cycle-GAN architecture, and harmonization is achieved by inserting a style code into the images. Reproduced with permission. (D) (a) Given T1-w and T2-w images from Sites A and B, the method from Zuo et al. (69) learns the site-invariant anatomy from supervised image-to-image translation (T1–T2 synthesis) and the site-variant contrast from unsupervised image-to-image translation (harmonization); one learned representation controls the image contrast after harmonization, and another preserves the anatomical information.
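
As an illustration of what "confusing the domain classifier" in panel (B) can mean in practice, the following minimal sketch scores a domain classifier's output against a uniform distribution over scanners. The caption does not specify the loss, so the cross-entropy-to-uniform form used here is an assumption, and the names `softmax` and `confusion_loss` are hypothetical helpers, not taken from (67).

```python
import math


def softmax(logits):
    """Convert raw domain-classifier scores into probabilities."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confusion_loss(domain_logits):
    """Cross-entropy between the classifier output and a uniform target.

    Assumption: confusion is measured against a uniform distribution
    over the K scanners. The loss is smallest (log K) when the
    classifier cannot tell the scanners apart.
    """
    k = len(domain_logits)
    probs = softmax(domain_logits)
    return -sum(math.log(p) for p in probs) / k


# A classifier that confidently identifies scanner 0 gives a high loss,
# while a classifier that is fully confused reaches the minimum, log K.
confident = confusion_loss([8.0, 0.0, 0.0])
confused = confusion_loss([0.0, 0.0, 0.0])
```

In a training loop of this kind, the feature extractor would be updated to lower this loss, so that its features carry as little scanner information as possible, while the label predictor is trained on the main task as usual.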