Abstract

In this study, we aimed to develop an artificial intelligence clinical decision support solution to mitigate operator-dependent complications, for example, bleeding and perforation, during complex endoscopic procedures such as endoscopic submucosal dissection and peroral endoscopic myotomy. A DeepLabv3-based model was trained to delineate vessels, tissue structures and instruments on endoscopic still images from such procedures. The mean cross-validated Intersection over Union and Dice Score were 63% and 76%, respectively. Applied to standardised video clips from third-space endoscopic procedures, the algorithm showed a mean vessel detection rate of 85% with a false-positive rate of 0.75/min. These performance statistics suggest a potential clinical benefit for procedure safety, procedure time and training.

Keywords: ENDOSCOPIC PROCEDURES, ENDOSCOPY, SURGICAL ONCOLOGY

What is already known on this topic

Recently, artificial intelligence (AI) tools have been developed for clinical decision support in diagnostic endoscopy, but so far, no algorithm has been introduced for therapeutic interventions.

What this study adds

Considering the elevated risk of bleeding and perforation during endoscopic submucosal dissection and peroral endoscopic myotomy, there is an apparent need for innovation and research into AI guidance in order to minimise operator-dependent complications. In this study, we developed a deep learning algorithm for the real-time detection and delineation of relevant structures during third-space endoscopy.

How this study might affect research, practice or policy

This new technology shows great promise for achieving higher procedure safety and speed. Future research may further expand the scope of AI applications in GI endoscopy.

In more detail

Endoscopic submucosal dissection (ESD) is an established organ-sparing curative endoscopic resection technique for premalignant and superficially invasive neoplasms of the GI tract [1, 2]. However, ESD and peroral endoscopic myotomy (POEM) are complex procedures with an elevated risk of operator-dependent adverse events, specifically intraprocedural bleeding and perforation. These events result from inadvertent transection of submucosal vessels or cutting into the muscularis propria, as the visualisation and cutting trajectory within the expanding resection defect are not always apparent [3, 4]. An effective mitigating strategy for intraprocedural adverse events has yet to be developed.

Artificial intelligence clinical decision support solutions (AI-CDSS) have rapidly proliferated throughout diagnostic endoscopy [5–7]. We therefore sought to develop a novel AI-CDSS for real-time intraprocedural detection and delineation of vessels, tissue structures and instruments during ESD and POEM [8].

Sixteen full-length videos of 12 ESD and 4 POEM procedures using Olympus EVIS X1 series endoscopes (Olympus, Tokyo, Japan) were extracted from the Augsburg University Hospital database. A total of 2012 still images from these videos were annotated by minimally invasive tissue resection experts (ESD experience ≥500 procedures) using the computer vision annotation tool for the categories electrosurgical knife, endoscopic instrument, submucosal layer, muscle layer and blood vessel. A DeepLabv3+ neural network architecture with KSAC [9] and a 101-layer ResNeSt backbone [10] (online supplemental methods) was trained with these data. The performance of the algorithm was measured in an internal fivefold cross validation, as well as in a test on 453 annotated images from 11 separate videos, using the parameters Intersection over Union (IoU), Dice Score and pixel accuracy (online supplemental methods). The IoU and Dice Score measure the percentage overlap between the algorithm’s delineation and the gold standard. The pixel accuracy measures the percentage of correctly predicted pixels per image and over all classes. The validation metrics were calculated by accumulating the per-fold outputs. The cross validation was done without hyperparameter tuning.
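As an illustration of the three parameters, the following minimal Python sketch computes IoU, Dice Score and pixel accuracy from pixel counts that are accumulated over several images (or folds) before the scores are formed. It is not the authors' implementation, which is described in the online supplemental methods: the function names, the integer class labels and the tiny 2×3 example are assumptions made for illustration, and the pixel accuracy is pooled over all accumulated pixels rather than averaged per image.

```python
from collections import Counter


def accumulate(counts, prediction, reference):
    """Add one image's (reference, prediction) label pairs to a shared Counter."""
    for ref_row, pred_row in zip(reference, prediction):
        counts.update(zip(ref_row, pred_row))
    return counts


def class_scores(counts, class_id):
    """Return (IoU, Dice) for one class from the accumulated pixel counts."""
    tp = counts[(class_id, class_id)]
    fp = sum(n for (ref, pred), n in counts.items()
             if pred == class_id and ref != class_id)
    fn = sum(n for (ref, pred), n in counts.items()
             if ref == class_id and pred != class_id)
    union = tp + fp + fn
    if union == 0:  # class absent from both reference and prediction
        return float("nan"), float("nan")
    return tp / union, 2 * tp / (2 * tp + fp + fn)


def pixel_accuracy(counts):
    """Share of pixels whose predicted label equals the reference label."""
    total = sum(counts.values())
    correct = sum(n for (ref, pred), n in counts.items() if ref == pred)
    return correct / total


# Hypothetical 2x3 label maps: 0 = background, 1 = vessel (labels are invented).
reference = [[0, 0, 1],
             [0, 1, 1]]
prediction = [[0, 1, 1],
              [0, 1, 0]]

counts = accumulate(Counter(), prediction, reference)
vessel_iou, vessel_dice = class_scores(counts, 1)
print(vessel_iou, vessel_dice, pixel_accuracy(counts))
```

Calling `accumulate` once per image with the same `Counter` pools the counts, so the scores are computed once at the end rather than averaged per image or per fold.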

gutjnl-2021-326470supp001.pdf (95.1KB, pdf)

Three further full-length videos (1× POEM, 1× rectal ESD and 1× oesophageal ESD) were used for an evaluation of the algorithm on video. Thirty-one clips with 52 predefined vessels (online supplemental methods) were evaluated frame by frame with artificial intelligence (AI) overlay for true and false vessel detection, and a vessel detection rate (VDR) was determined.
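The two video-level statistics can be sketched as follows. The exact criteria for a true or false detection are given in the online supplemental methods; this sketch assumes that each predefined vessel is simply scored as detected or not detected, and that false positives are counted over the total duration of the evaluated clips. The function names and all numbers in the example are hypothetical and are not study data.

```python
def vessel_detection_rate(detected_flags):
    """Fraction of predefined vessels that were scored as detected."""
    flags = list(detected_flags)
    if not flags:
        raise ValueError("at least one predefined vessel is required")
    return sum(1 for flag in flags if flag) / len(flags)


def false_positives_per_minute(false_positive_count, clip_seconds):
    """False-positive detections divided by the total clip duration in minutes."""
    total_seconds = sum(clip_seconds)
    if total_seconds <= 0:
        raise ValueError("total clip duration must be positive")
    return false_positive_count / (total_seconds / 60.0)


# Invented example: 4 predefined vessels, 3 detected; 2 false positives in 240 s.
example_vdr = vessel_detection_rate([True, True, False, True])
example_fp_rate = false_positives_per_minute(2, [60, 120, 60])
print(example_vdr, example_fp_rate)
```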

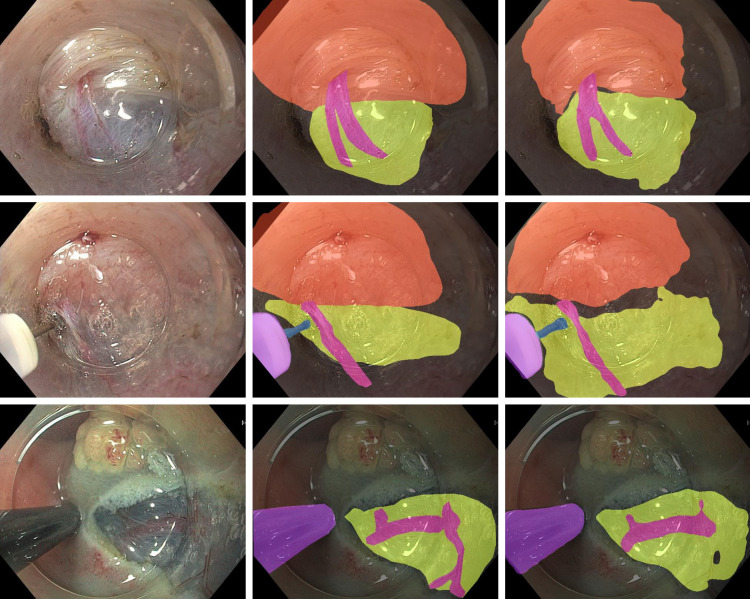

The cross-validated mean IoU, mean Dice Score and pixel accuracy were 63%, 76% and 81%, respectively. On the test set, the AI-CDSS achieved scores of 68%, 80% and 87% for the same parameters. The individual per class values and 95% CIs are shown in table 1. Examples of the original frames, expert annotations and AI segmentations are shown in figure 1.

Table 1.

Performance results of the AI-CDSS in the internal cross validation and the test data set: IoU and Dice Score for all categories as well as their means across all categories, pixel accuracy for complete frames and 95% CI in brackets

| Data set | Metric | Vessel | Submucosa | Muscularis | Background | Instrument | Knife | Mean |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Internal cross validation | Dice Score | 55.15 (54.10 to 56.18) | 75.51 (74.88 to 76.12) | 70.64 (69.32 to 71.88) | 86.49 (85.99 to 86.99) | 88.69 (87.57 to 89.83) | 80.60 (79.61 to 81.49) | 76.18 (75.73 to 76.57) |
| Internal cross validation | IoU | 38.07 (37.08 to 39.07) | 60.65 (59.85 to 61.44) | 54.60 (53.05 to 56.10) | 76.19 (75.43 to 76.98) | 79.68 (77.89 to 81.54) | 67.51 (66.13 to 68.77) | 62.78 (62.18 to 63.31) |
| Test | Dice Score | 62.77 (60.08 to 65.12) | 80.71 (79.50 to 81.82) | 72.48 (69.40 to 74.99) | 91.39 (90.45 to 92.10) | 89.69 (87.09 to 91.96) | 83.50 (82.06 to 84.87) | 80.09 (79.14 to 80.92) |
| Test | IoU | 45.74 (42.94 to 48.28) | 67.65 (65.97 to 69.24) | 56.84 (53.14 to 59.99) | 84.14 (82.56 to 85.36) | 81.30 (77.14 to 85.11) | 71.67 (69.58 to 73.72) | 67.89 (66.61 to 69.04) |

Pixel accuracy (complete frames): internal cross validation 80.99 (80.52 to 81.47); test 86.89 (85.86 to 87.70).

Values are percentages. Column groups in the original table: vessel detection, tissue differentiation and instrument detection.

AI-CDSS, artificial intelligence clinical decision support solution.
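The per-class values in table 1 can be cross-checked with the standard definitions of the two overlap measures, written here in terms of true-positive (TP), false-positive (FP) and false-negative (FN) pixel counts. The definitions are the usual textbook ones and are assumed to match those in the online supplemental methods; when both measures are formed from the same counts, the Dice Score is a fixed function of the IoU, which the reported vessel values reproduce. The reported means also agree with a plain arithmetic mean over the six categories, background included.

```latex
\mathrm{IoU} = \frac{TP}{TP + FP + FN}, \qquad
\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} = \frac{2\,\mathrm{IoU}}{1 + \mathrm{IoU}}

% Vessel class, internal cross validation (IoU = 38.07%):
\frac{2 \times 0.3807}{1 + 0.3807} \approx 0.5515

% Mean Dice Score over the six categories, internal cross validation:
\frac{55.15 + 75.51 + 70.64 + 86.49 + 88.69 + 80.60}{6} \approx 76.18
```

Because the mean is taken over categories, the mean Dice Score (76.18%) is not obtained by applying the identity to the mean IoU (62.78%); the identity only holds class by class.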

Figure 1.

Examples of original images (left column) with corresponding expert annotations (middle column) and AI segmentations (right column). The muscle layer, submucosa, vessels and knife are segmented with a coloured overlay.

The mean VDR was 85%. The VDRs for rectal ESD, oesophageal ESD and POEM were 70%, 95% and 92%, respectively. The mean false-positive rate was 0.75/min. The algorithm spotted seven of the nine vessels that caused intraprocedural bleeding. It also recognised the two vessels that required specific haemostasis by haemostatic forceps for major bleeding.

To illustrate the performance of the AI-CDSS qualitatively, without quantitative performance measures, we show an example of an internal POEM procedure with AI overlay. To visualise the video experiment, the same video also shows six of the clips that were used for the evaluation of the VDR (2× POEM, 2× rectal ESD and 2× oesophageal ESD; online supplemental video 1). As a test of robustness, the algorithm was also applied to a randomly selected, highly compressed YouTube video of a gastric per-oral endoscopic myotomy procedure (ENDOCLUNORD 2020, https://www.youtube.com/watch?v=VKFHWOzYDGM; online supplemental video 2). Each displayed output is an exponential moving average of the current and past predictions, which smooths the predictions and is a simple way to include temporal information.
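The temporal smoothing can be sketched as a running exponential moving average over per-pixel score maps. The smoothing factor used in the study is not stated here, so `alpha` below is a hypothetical parameter, as are the function name and the single-pixel example; the sketch only illustrates the general technique.

```python
def smooth_stream(frames, alpha=0.5):
    """Yield the exponential moving average of a sequence of per-pixel score maps.

    frames: iterable of 2D lists of floats (for example, vessel probabilities).
    alpha:  weight of the current frame (hypothetical value); the remaining
            weight, 1 - alpha, goes to the running average of past frames.
    """
    state = None
    for frame in frames:
        if state is None:
            # The first frame initialises the running average.
            state = [list(row) for row in frame]
        else:
            state = [
                [alpha * new + (1 - alpha) * old
                 for new, old in zip(new_row, old_row)]
                for new_row, old_row in zip(frame, state)
            ]
        # Yield a copy so callers cannot modify the internal state.
        yield [list(row) for row in state]


# Invented single-pixel example: a detection that flickers off for one frame.
flickering = [[[1.0]], [[0.0]], [[1.0]]]
smoothed = [frame[0][0] for frame in smooth_stream(flickering, alpha=0.5)]
print(smoothed)  # [1.0, 0.5, 0.75]
```

A smaller `alpha` gives past frames more weight, which suppresses flicker more strongly but makes the overlay respond more slowly to changes in the scene.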

gutjnl-2021-326470supp002.mp4 (313.4MB, mp4)

gutjnl-2021-326470supp003.mp4 (14.4MB, mp4)

Comments

This preliminary study aimed to investigate the potential role of AI during therapeutic endoscopic procedures such as ESD or POEM. The algorithm delineated tissue structures, vessels and instruments in frames taken from endoscopic videos with a high overlap with the gold standard provided by expert endoscopists. Analogous technology has been demonstrated in laparoscopic cholecystectomy to differentiate between safe and dangerous zones of dissection, with mean IoUs of 53% and 71%, respectively [11].

On video clips with standardised and predefined vessels, the algorithm showed a VDR of 85%. The lower performance of 70% in rectal ESD compared with the excellent detection of over 90% in oesophageal ESD and POEM might be explained by poorer visualisation of the structures and more intraprocedural bleeding, which is in agreement with clinical experience.

Numerous preclinical and clinical studies on AI in GI endoscopy have been published, but until now, the application of AI has been limited largely to diagnostic procedures such as the detection of polyps or the characterisation of unclear lesions. In abdominal surgery, AI has been applied with promising results for various tasks, including the detection of surgical instruments, image guidance, navigation and skill assessment (‘smart surgery’) [12]. The results of this study suggest that AI may have the potential to optimise complex endoscopic procedures such as ESD or POEM in analogy to the mentioned research (‘smart ESD’). By highlighting submucosal vessels and other tissue structures, such as the submucosal cutting plane, AI guidance could make therapeutic procedures faster and reduce adverse events such as intraprocedural or postprocedural bleeding and perforation. In the future, AI assistance may also have the potential to accelerate the learning curve of trainees in endoscopy.

The major limitation of this study is the small number of videos used for training and validation; however, every video contained a complete therapeutic ESD procedure with a full range of procedural situations. The study is further limited by the fact that the algorithm was not yet tested in a real-life setting. However, the AI model was tested on externally generated video sequences and was able to recognise submucosal vessels and the cutting plane. Furthermore, surrogate parameters such as the detection of vessels, which bled later during the procedures, give rise to the conclusion that these complications might have been preventable by the application of the AI-CDSS. This is a first preclinical report on a novel technology; further research is needed to evaluate a potential clinical benefit of this AI-CDSS in detail.

Footnotes

Twitter: @papa_joaopaulo, @ReMIC_OTH

AE and RM contributed equally.

Contributors: AE and MWS: study concept and design, acquisition of data, analysis and interpretation of data, drafting of the manuscript and critical revision of the manuscript. RM: study concept and design, software implementation, analysis and interpretation of data, drafting of the manuscript and critical revision of the manuscript. AP: study concept and design, acquisition of data and critical revision of the manuscript. NS: analysis and interpretation of data, drafting of the manuscript and critical revision of the manuscript. FP, CF, CR, SKG and GB: acquisition of data and critical revision of the manuscript. DR and TR: software implementation and experimental evaluation and critical revision of the manuscript. LAdS: statistical analysis and critical revision of the manuscript. JP: statistical analysis, critical revision of the manuscript and study supervision. MB: study concept and design, analysis and interpretation of data, drafting of the manuscript and critical revision of the manuscript. CP: study concept and design, analysis and interpretation of data, statistical analysis, critical revision of the manuscript, administrative and technical support and study supervision. HM: study concept and design, acquisition of data, critical revision of the manuscript, administrative and technical support, and study supervision. AE: guarantor. All authors: had access to the study data and reviewed and approved the final manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: NS: speaker honorarium, Boston Scientific and Pharmascience. MB: CEO and founder, Satisfai Health. HM: consulting fees, Olympus.

Patient and public involvement: Patients and/or the public were not involved in the design, conduct, reporting or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

All data relevant to the study are included in the article or uploaded as supplementary information.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

Ethics approval was obtained from the ethics committee of Ludwig-Maximilians-Universität, Munich (project number 21–1216).

References

- 1. Bourke MJ, Neuhaus H, Bergman JJ. Endoscopic submucosal dissection: indications and application in Western endoscopy practice. Gastroenterology 2018;154:1887–900. doi:10.1053/j.gastro.2018.01.068

- 2. Shahidi N, Rex DK, Kaltenbach T, et al. Use of endoscopic impression, artificial intelligence, and pathologist interpretation to resolve discrepancies between endoscopy and pathology analyses of diminutive colorectal polyps. Gastroenterology 2020;158:783–5. doi:10.1053/j.gastro.2019.10.024

- 3. Draganov PV, Aihara H, Karasik MS, et al. Endoscopic submucosal dissection in North America: a large prospective multicenter study. Gastroenterology 2021;160:2317–27. doi:10.1053/j.gastro.2021.02.036

- 4. Fleischmann C, Probst A, Ebigbo A, et al. Endoscopic submucosal dissection in Europe: results of 1000 neoplastic lesions from the German endoscopic submucosal dissection registry. Gastroenterology 2021;161:1168–78. doi:10.1053/j.gastro.2021.06.049

- 5. Byrne MF, Chapados N, Soudan F, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut 2019;68:94–100. doi:10.1136/gutjnl-2017-314547

- 6. Ebigbo A, Mendel R, Probst A, et al. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut 2019;68:1143–5. doi:10.1136/gutjnl-2018-317573

- 7. Shahidi N, Bourke MJ. How to manage the large nonpedunculated colorectal polyp. Gastroenterology 2021;160:2239–43. doi:10.1053/j.gastro.2021.04.029

- 8. Khashab MA, Benias PC, Swanstrom LL. Endoscopic myotomy for foregut motility disorders. Gastroenterology 2018;154:1901–10. doi:10.1053/j.gastro.2017.11.294

- 9. Huang Y, Wang Q, Jia W. See More Than Once - Kernel-Sharing Atrous Convolution for Semantic Segmentation. arXiv 2019;1908.09443. doi:10.48550/arXiv.1908.09443

- 10. Zhang H, Wu C, Zhang Z. ResNeSt: Split-Attention networks. arXiv 2020;2004.08955. doi:10.48550/arXiv.2004.08955

- 11. Madani A, Namazi B, Altieri MS, et al. Artificial intelligence for intraoperative guidance: using semantic segmentation to identify surgical anatomy during laparoscopic cholecystectomy. Ann Surg 2020 [Epub ahead of print: 13 Nov 2020]. doi:10.1097/SLA.0000000000004594

- 12. Hashimoto DA, Rosman G, Rus D, et al. Artificial intelligence in surgery: promises and perils. Ann Surg 2018;268:70–6. doi:10.1097/SLA.0000000000002693
