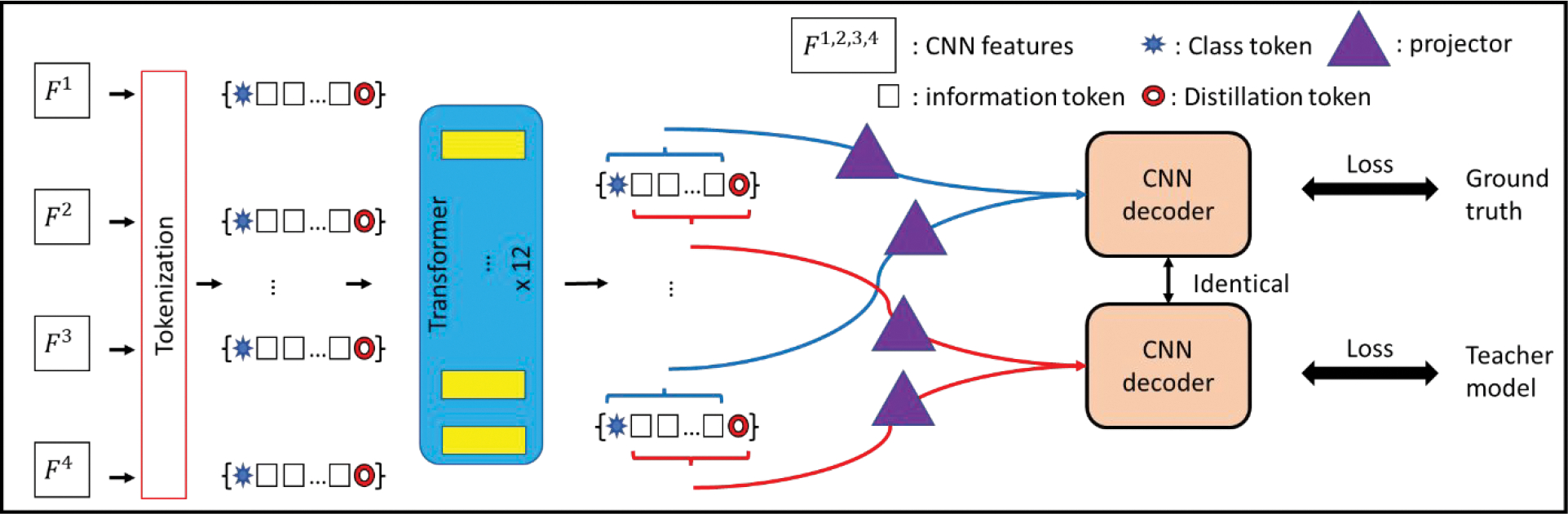

Figure 2: Tokenization with knowledge distillation. The CNN decoder first takes the class token and the information tokens as input and generates a segmentation that is trained to match the ground truth. The decoder then takes the distillation token together with the information tokens and generates a second segmentation that is trained to match the output of the teacher models.
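The two decoder passes described in the caption can be sketched as follows. This is a minimal illustration, not the authors' implementation: the linear projection in `decode` is a stand-in for the CNN decoder, the token shapes, the cross-entropy losses, and the weighting factor `alpha` are all assumptions, and the encoder outputs and teacher predictions are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: token dimension, patch grid, number of classes.
D, H, W, C = 8, 4, 4, 3
N = H * W  # one information token per patch (assumption)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def decode(special_token, info_tokens, weight):
    """Stand-in for the CNN decoder (assumption): condition every
    information token on the special token, then project to class logits."""
    fused = info_tokens + special_token      # (N, D) + (D,) broadcasts per token
    logits = fused @ weight                  # (N, C)
    return logits.reshape(H, W, C)           # per-patch segmentation logits


def cross_entropy(logits, target_probs):
    """Mean cross-entropy against hard (one-hot) or soft targets."""
    log_p = np.log(softmax(logits) + 1e-12)
    return float(-(target_probs * log_p).sum(axis=-1).mean())


# Placeholder encoder outputs (random values, for illustration only).
class_token = rng.normal(size=D)
distill_token = rng.normal(size=D)
info_tokens = rng.normal(size=(N, D))
weight = rng.normal(size=(D, C))             # decoder weights shared by both passes

# Placeholder targets: one-hot ground truth and soft teacher predictions.
ground_truth = np.eye(C)[rng.integers(0, C, size=(H, W))]   # (H, W, C)
teacher_probs = softmax(rng.normal(size=(H, W, C)))         # (H, W, C)

# Pass 1: class token + information tokens, supervised by the ground truth.
logits_cls = decode(class_token, info_tokens, weight)
loss_gt = cross_entropy(logits_cls, ground_truth)

# Pass 2: distillation token + information tokens, supervised by the teacher.
logits_dist = decode(distill_token, info_tokens, weight)
loss_kd = cross_entropy(logits_dist, teacher_probs)

alpha = 0.5  # hypothetical weighting between the two objectives
total_loss = (1 - alpha) * loss_gt + alpha * loss_kd
```

Both passes reuse the same information tokens and decoder weights; only the special token differs, so the class token is driven by the ground-truth labels while the distillation token is driven by the teacher's predictions.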