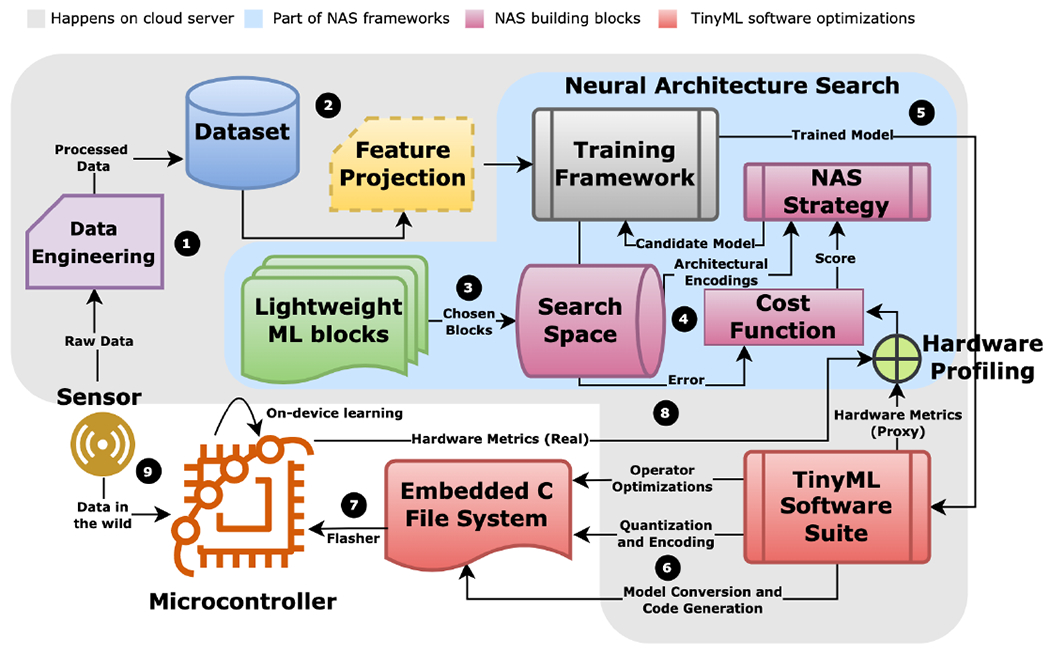

Fig. 1. Closed-loop workflow for porting machine learning models onto microcontrollers. Steps (3) to (8) are repeated until the desired performance is achieved. (1) Data engineering performs acquisition, analytics and storage of raw sensor streams (Section III). (2) Optional feature projection directly reduces the dimensionality of the input data (Section IV). (3) Models are chosen from a lightweight ML zoo based on the application and hardware specifications (Section VI and Section X). (4) A neural architecture search strategy builds candidate models from the search space for training and evaluates them with a cost function (Section VII). (5) The trained candidate model is ported to a TinyML software suite. (6) The TinyML software suite performs inference engine optimizations, deep compression and code generation, and also reports approximate hardware metrics (e.g., SRAM, Flash and latency) (Sections V, VII, and VIII). (7) The embedded C file system is ported onto the microcontroller via a command-line interface. (8) The microcontroller optionally reports real runtime hardware metrics back to the neural architecture search strategy (Section VII). (9) On-device training or federated learning is used occasionally to account for shifts in the incoming data distribution (Section IX).
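The feedback loop formed by Steps (3) to (8) can be illustrated with a minimal Python sketch. It assumes a hypothetical candidate space (`width`, `depth`), a hypothetical `estimate` function standing in for the metrics reported in Step (6) or Step (8), and illustrative SRAM/Flash budgets. Candidates that exceed the memory budgets are rejected, the best feasible one is kept, and the loop stops once a target accuracy is reached. It is a toy illustration of the closed loop, not the search strategy of any particular TinyML framework.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Candidate:
    width: int  # hypothetical: channels per layer
    depth: int  # hypothetical: number of layers


@dataclass
class Metrics:
    sram_kb: float   # peak working memory
    flash_kb: float  # model + code storage
    accuracy: float  # validation accuracy (or a proxy for it)


def closed_loop_search(
    candidates: Iterable[Candidate],
    estimate: Callable[[Candidate], Metrics],
    sram_budget_kb: float,
    flash_budget_kb: float,
    target_accuracy: float,
) -> Tuple[Optional[Candidate], Optional[Metrics]]:
    best: Optional[Candidate] = None
    best_metrics: Optional[Metrics] = None
    for cand in candidates:          # Steps (3)-(4): propose a candidate model
        m = estimate(cand)           # Steps (5)-(6) or (8): obtain hardware metrics
        if m.sram_kb > sram_budget_kb or m.flash_kb > flash_budget_kb:
            continue                 # does not fit on the target microcontroller
        if best_metrics is None or m.accuracy > best_metrics.accuracy:
            best, best_metrics = cand, m
        if best_metrics.accuracy >= target_accuracy:
            break                    # desired performance achieved: exit the loop
    return best, best_metrics


def toy_estimate(c: Candidate) -> Metrics:
    # Hypothetical cost model: memory grows linearly with model size,
    # and accuracy saturates as the model grows.
    size = c.width * c.depth
    return Metrics(sram_kb=2.0 * size, flash_kb=4.0 * size, accuracy=size / (size + 32))


space = [Candidate(w, d) for w in (8, 16, 32) for d in (2, 4, 8)]
best, metrics = closed_loop_search(space, toy_estimate,
                                   sram_budget_kb=128, flash_budget_kb=256,
                                   target_accuracy=0.6)
print(best, metrics)
```

With these illustrative numbers, the loop stops at `Candidate(width=8, depth=8)`, the first feasible candidate whose proxy accuracy (2/3) meets the 0.6 target. In practice, Step (8) would replace the analytical `toy_estimate` with measurements from the device.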