Abstract

In the era of Coronavirus disease 2019 (COVID-19), with new variants emerging from time to time, an accurate diagnostic method with shorter diagnosis time and lower cost can effectively help control the spread of the disease. To this end, a two-dimensional (2D) tunable Q-wavelet transform (TQWT) based on a memristive crossbar array (MCA) is introduced in this work for the decomposition of chest X-ray images from two different datasets. TQWT yields promising values of peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) at the optimum values of its parameters, namely a quality factor (Q) of 4 and an oversampling rate (r) of 3, at a decomposition level (J) of 2. The MCA-based model is used to process the decomposed images for subsequent classification with efficient storage. These images are then classified as COVID-19 or non-COVID-19 using the ResNet50 and AlexNet convolutional neural network (CNN) models. The average accuracies achieved for the classification of the processed chest X-ray images in the small and large datasets are 98.82% and 94.64%, respectively, which are higher than those of most reported conventional methods based on different deep learning models. The proposed classification approach also detects COVID-19 with lower complexity, energy, power, and area consumption, and at a lower estimated cost, compared with CMOS-based technology.

Keywords: COVID-19, Chest X-ray images, Image decomposition and classification, Memristive crossbar array (MCA) based model, TQWT method

1. Introduction

COVID-19, caused by the novel SARS-CoV-2 virus, can be understood as a type of pneumonia [1]. Patients diagnosed with COVID-19 suffer from dry cough, sore throat, and fever, which may lead to organ failure [2]. The most prevalent diagnostic method, the real-time reverse transcription-polymerase chain reaction (RT-PCR) test, takes around 10–15 h to produce a result, which makes the diagnosis process very slow [1]. Another way to diagnose COVID-19 is the rapid diagnostic test (RDT), which gives a result in 30 min; although faster, the RDT is less reliable [3]. Other methods for COVID-19 diagnosis therefore need to be explored, especially in populous countries such as India and many others in the Asian subcontinent. Various studies have shown that COVID-19 affects the lungs of the patient. Hence, chest X-ray imaging of suspected patients is the most feasible method to detect COVID-19 at an early stage [4]. Among all COVID-19 diagnostic data, clinical imaging data are one of the most crucial diagnostic bases. Unfortunately, manually delineating the target area in medical images is a time-consuming and laborious task, and, given its complexity, it increases the burden on clinicians. Computer technology can therefore be used to diagnose the disease from medical images [5]. Deep learning techniques, a subset of machine learning techniques, have been explored to diagnose COVID-19 automatically from chest X-ray images [6]. Convolutional neural networks (CNNs), which are specially designed for images, are a class of deep neural networks [7]. The residual neural network (ResNet) is a deep CNN used for feature extraction and classification [8]. ResNet50 has been applied in various image recognition and classification applications, such as metastatic cancer recognition [9], hyperspectral image classification [10], and chromosome classification [11].
AlexNet, on the other hand, is an 8-layer model with 5 convolutional layers and 3 fully connected layers [12], which has various applications in image processing, such as the identification of maize leaf disease [13], COVID-19 virus detection, power equipment classification [14], and scene image classification [15]. ResNet50 and AlexNet are the two CNN models explored in this work for the classification of chest X-ray images that are preprocessed by a wavelet decomposition technique called the tunable Q-wavelet transform (TQWT) [16]. The images are decomposed with the TQWT parameters, namely the quality factor (Q), the oversampling rate (r), and the number of decomposition levels (J), set to their optimized values. TQWT is described in detail in later sections. To the best of the authors' knowledge, the use of TQWT to decompose input chest X-ray images for classification with an MCA-based model is novel and has not been reported elsewhere. The performance of the proposed model is evaluated for the two-class (COVID-19 and normal) classification of chest X-ray image databases.

Current deep learning technologies are based on complementary metal oxide semiconductor (CMOS) circuits, which require more computational operations [17] and incur higher area consumption, energy consumption [18], processing time, and power consumption [19]. These technological limitations can be overcome using the memristive crossbar array (MCA), which significantly reduces power consumption compared with conventional CMOS-based systems [20]. MCAs are gaining popularity in various image processing domains, such as pattern recognition and edge detection [21].

In some applications, such as pattern processing, an MCA is more efficient in terms of both energy and processing time than traditional von Neumann circuits [22]. The low energy consumption of memristor-based resistive random-access memory has attracted considerable attention to in-memory computation for various applications [17]. Various studies on memristor-based accelerator architectures [22] and memristor-based architectures for neuromorphic applications [23] have been published previously [24]. In conventional CMOS-based neural networks [25], neurons are represented by capacitors, which are bulky and occupy a large area [26], making the integration of a large number of neurons on a chip extremely challenging [27]. In contrast, by representing the neural parameters with the resistance states of memristor cells [25], an MCA can work as a dot-product engine and eliminate the data transfer overhead of numerous neural weights [28]. In this work, our objectives are to address the following problems, which have not been addressed in prior state-of-the-art work:

- Qualitative and quantitative investigations on the selection of optimum TQWT parameters for image decomposition.
- Extended mathematical investigation for MCA model-based two-dimensional (2D) TQWT to decompose the chest X-ray images for further image classification.
- Computational diagnosis of COVID-19 by using chest X-ray images through pre-trained CNN models.
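The dot-product-engine behavior of an MCA mentioned above can be illustrated with a minimal sketch, assuming an ideal crossbar: linear (ohmic) memristor conductances, zero wire resistance, and no sneak-path currents. The conductance and voltage values are hypothetical and are not taken from the Y2O3 devices used in this work; the function name `crossbar_read` is likewise illustrative.

```python
import numpy as np

def crossbar_read(G, v):
    """Column currents of an ideal memristive crossbar.

    G: (rows, cols) conductances in siemens, one memristor per cross point.
    v: (rows,) voltages applied along the rows, in volts.
    Each device passes I = G * V (Ohm's law) and the currents on each column
    add up (Kirchhoff's current law), so the column currents equal v @ G.
    """
    G = np.asarray(G, dtype=float)
    v = np.asarray(v, dtype=float)
    if G.ndim != 2 or v.shape != (G.shape[0],):
        raise ValueError("v must provide one voltage per crossbar row")
    return v @ G

# Hypothetical 3 x 2 conductance matrix (illustrative values only).
G = np.array([[1e-6, 2e-6],
              [3e-6, 4e-6],
              [5e-6, 6e-6]])
v = np.array([0.1, 0.2, 0.3])
I = crossbar_read(G, v)   # all multiply-accumulates happen "in memory" in one read
```

Every column performs a full weighted sum in a single read step, which is why the stored weights never have to be moved to a separate processor.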

2. Proposed methodology for diagnosis of COVID-19

2.1. Related work

In the proposed work, chest X-ray images are used to diagnose COVID-19 using the MCA model based on the TQWT image decomposition technique and pre-trained CNN models [12]. Chest X-ray images from two different datasets have been used: a small dataset [29] with a total of 2193 chest X-ray images (852 COVID-19 and 1341 normal) and a large dataset [30] with a total of 5275 chest X-ray images (2409 COVID-19 and 2866 normal). In addition to the RT-PCR test [31], chest X-ray images can be utilized as an assistive tool to diagnose COVID-19 with the help of image processing techniques and machine learning algorithms. Many models have been proposed worldwide for the diagnosis of COVID-19 using chest X-ray images, and the best performance so far has been achieved by the ResNet50 model [4]. An accuracy of 98.82% has been achieved using the proposed methodology. Wang and Wong [32] proposed a model named COVID-Net that utilizes chest X-ray images for COVID-19 diagnosis; this model achieved an accuracy of 83.5%. Li et al. [33] proposed COVNet to detect COVID-19 using chest X-ray images. This model uses ResNet50 as the backbone network [3], and its sensitivity and specificity are 90% and 96%, respectively [33].

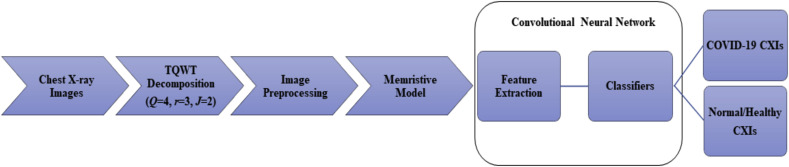

The schematic of the proposed methodology is shown in Fig. 1. First, the optimum values of the TQWT parameters are determined, and the chest X-ray images are then decomposed (pre-processed) by TQWT with its parameters set to these optimized values. The sub-bands obtained at each level of decomposition contain both low- and high-frequency components. For higher levels of decomposition, further decomposition is performed iteratively on the approximation (low-pass) component only. The classification performance is observed to degrade at higher decomposition levels, since these components contain the noise present in the image.

Fig. 1.

Schematic of the image decomposition and classification of the small and large datasets using the MCA-based model.

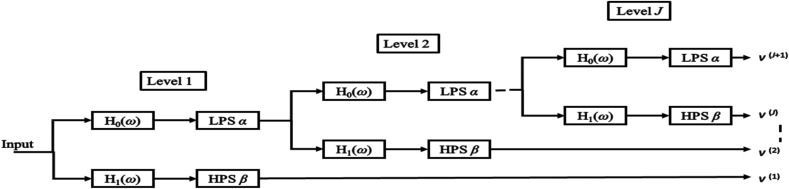

The chest X-ray images of the large and small datasets are decomposed using TQWT at the optimized values of Q, r, and J [16]. The decomposed image coefficients are then stored in an MCA, as shown in Fig. 2 [34], where each cross point in the MCA holds one coefficient value. The input image coefficient values are converted into voltages and fed to the MCA-based model along the rows. The currents along the columns are collected, and image retrieval is performed using these current values, as can be observed in Fig. 2. The block diagram of TQWT for J-level decomposition is given in Fig. 3. In the proposed work, image decomposition is performed using TQWT, where the sub-band coefficients are represented by ‘ω’; these are further considered as the input to the memristive device-based model, represented by ‘v’.

Fig. 2.

MCA for image storage and retrieval. The memristor placed at each cross point of the crossbar architecture is shown magnified by its circuit symbol.

Fig. 3.

Block diagram of TQWT for J-level decomposition.

The retrieved chest X-ray images are used for the automatic classification of COVID-19 via pre-trained CNN models in MATLAB version R2021a. Here, the ResNet50 and AlexNet CNN models are used to classify chest X-ray images into COVID-19 and normal cases. Pre-trained versions of ResNet50 and AlexNet are used separately in the proposed model, and all chest X-ray images from the datasets are resized according to the input size requirement of each CNN model. The proposed method achieves high accuracy and correctly identifies COVID-19-positive chest X-ray images as COVID-19 and COVID-19-negative chest X-ray images as healthy/normal, as shown in Fig. 1.
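The storage-and-retrieval step of Fig. 2 can be sketched as follows, under stated assumptions: each coefficient is written as a conductance through a linear mapping onto a hypothetical device window [G_OFF, G_ON], and the array is read one row at a time by biasing that row with a hypothetical read voltage V_READ while the other rows are held at 0 V. The mapping, the row-by-row read scheme, and all names and values are illustrative assumptions (ideal devices, no sneak paths), not the analytical Y2O3 model of Equations (3)-(5).

```python
import numpy as np

# Hypothetical device window and read voltage (assumed, not from this work).
G_OFF, G_ON = 1e-6, 1e-4   # siemens
V_READ = 0.1               # volts

def write_coefficients(C):
    """Map a 2D coefficient array linearly onto conductances in [G_OFF, G_ON]."""
    C = np.asarray(C, dtype=float)
    c_min = C.min()
    span = C.max() - c_min
    if span == 0:
        span = 1.0  # constant input: every cell is written to G_OFF
    G = G_OFF + (C - c_min) / span * (G_ON - G_OFF)
    return G, (c_min, span)

def read_coefficients(G, scale):
    """Read the crossbar one row at a time and undo the linear mapping."""
    c_min, span = scale
    n_rows = G.shape[0]
    out = np.empty_like(G)
    for i in range(n_rows):
        v = np.zeros(n_rows)
        v[i] = V_READ             # bias row i only; all other rows at 0 V
        currents = v @ G          # column currents: I_j = V_READ * G[i, j]
        g_row = currents / V_READ
        out[i] = c_min + (g_row - G_OFF) / (G_ON - G_OFF) * span
    return out

rng = np.random.default_rng(0)
coeffs = rng.normal(size=(4, 5))      # stand-in for TQWT sub-band coefficients
G, scale = write_coefficients(coeffs)
recovered = read_coefficients(G, scale)
```

With ideal devices the round trip is lossless; in practice, device variability and the finite number of conductance levels would limit the precision of the retrieved coefficients.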

In Fig. 4, the simulation flow represents the algorithm used in the proposed methodology: the program starts with an input image for the optimization of Q, r, and J in TQWT processing. Under the optimized conditions, both datasets are processed, and the resulting sub-band image coefficients pass through the memristive model before further processing by the CNN model. In the proposed methodology, 30% of the dataset is used for training and 70% for testing the classification model. With the transfer learning approach, fewer images are needed to train a model, as shown by Hu et al., who used 30% of the input data for training [35]. Transfer learning can be applied provided that the input images are resized according to the pre-trained network being used [35]. To achieve better performance and to reduce the computational complexity through fewer operations, 30% of the data is used for training in this work instead of 70%. Two pre-trained networks, namely ResNet50 and AlexNet, have been applied for classification, as shown in Fig. 4. ResNet50 comprises five stages. The first stage consists of a convolution layer, a batch normalization layer, a rectified linear unit (ReLU) activation layer, and a maximum pooling layer. The subsequent stages comprise convolution blocks and identity blocks, each block having three convolutional layers. The output layer comprises an average pooling layer, a fully connected layer, and a softmax layer. Similarly, AlexNet consists of five convolutional and three fully connected layers, as shown in Fig. 4. Both networks output two classes: COVID-19-positive and normal (healthy) chest X-ray images.
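The 30%/70% training/testing split described above can be sketched as a per-class (stratified) random split. Stratification, the rounding rule, the fixed seed, and the helper name `stratified_split` are assumptions for illustration; the text does not state how the split was drawn. The class sizes are those of the small dataset [29].

```python
import random

def stratified_split(labels, train_fraction=0.3, seed=0):
    """Split sample indices into (train, test), preserving each class's proportion."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        n_train = round(train_fraction * len(shuffled))
        train.extend(shuffled[:n_train])
        test.extend(shuffled[n_train:])
    return sorted(train), sorted(test)

# Class sizes of the small dataset [29]: 852 COVID-19 and 1341 normal images.
labels = ["covid"] * 852 + ["normal"] * 1341
train_idx, test_idx = stratified_split(labels, train_fraction=0.3)
print(len(train_idx), len(test_idx))
```

Splitting each class separately keeps the COVID-19/normal ratio identical in the training and test sets, which matters when only 30% of the images are used for training.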

Fig. 4.

Simulation flow defining the algorithm of the proposed methodology.

2.2. Tunable Q-wavelet transform image decomposition techniques

Various wavelets are available for image processing; however, many wavelet transforms are limited by their constant quality factor [16]: once the basis function and the number of decomposition levels are determined, the quality factor (Q) is fixed [36].

The Q of a wavelet transform should match the oscillatory behavior of the image to which it is applied [16]. In most wavelet transforms, such as the discrete wavelet transform (DWT), the Q of the wavelet cannot be tuned, which affects the quality of the reconstructed output images [36]. To overcome this drawback of constant Q in traditional wavelet transforms, Selesnick [16] proposed the TQWT, a signal decomposition technique that allows a suitable Q of the wavelet basis function to be chosen according to the signal being decomposed [36]. TQWT is efficient in processing one-dimensional signals such as speech, cardiac sounds [36], and electroencephalogram (EEG) signals [37]. Similarly, TQWT could also be suitable for 2D signals, such as images with texture variations. The TQWT parameters are Q, the oversampling rate or redundancy (r), and the number of decomposition levels (J) [36]. A J-level decomposition yields J+1 sub-bands, which are represented by v.

The transfer functions of the low-pass and high-pass filters are represented by H0(ω) and H1(ω), respectively. The low-pass coefficients obtained after one stage of decomposition are used as the input to the succeeding stage. At each stage, the low-pass and high-pass filters are followed by scaling. The parameters Q and r are related to the low-pass scaling factor (LPS) α and the high-pass scaling factor (HPS) β, as shown in Equations (1) and (2).

$$\beta = \frac{2}{Q+1} \tag{1}$$

$$\alpha = 1 - \frac{\beta}{r} \tag{2}$$

The optimum values of Q, r, and J are determined so that all chest X-ray images can be preprocessed through TQWT; the determination of the optimum TQWT parameters is described in Section 3. The decomposed chest X-ray images are stored in a memristive system developed from an analytical model, as described in the following subsection.
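For the optimum values used in this work (Q = 4, r = 3), the standard TQWT relations from [16], β = 2/(Q + 1) and α = 1 − β/r, give β = 0.4 and α ≈ 0.867. The short sketch below computes both factors and inverts the relations; the function names are illustrative only.

```python
def tqwt_scaling(Q, r):
    """Return (alpha, beta) from beta = 2/(Q+1) and alpha = 1 - beta/r."""
    if Q < 1 or r <= 1:
        raise ValueError("TQWT needs Q >= 1 and r > 1")
    beta = 2.0 / (Q + 1.0)
    alpha = 1.0 - beta / r
    return alpha, beta

def tqwt_q_r(alpha, beta):
    """Invert the relations: Q = (2 - beta)/beta and r = beta/(1 - alpha)."""
    return (2.0 - beta) / beta, beta / (1.0 - alpha)

alpha, beta = tqwt_scaling(Q=4, r=3)    # optimum values reported in this work
# H0 and H1 overlap only in the transition band (1 - beta)*pi < |w| < alpha*pi,
# which is non-empty because alpha + beta = 1 + beta*(1 - 1/r) > 1 for r > 1.
transition_band = (1.0 - beta, alpha)   # band edges in units of pi
```

A larger Q narrows β (sharper, more oscillatory wavelets), while a larger r pushes α toward 1, increasing redundancy and the number of levels needed to cover the low frequencies.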

2.3. MCA-based analytical model

An MCA can be understood as a three-dimensional (3D) structure analogous to the human brain [38]. The MCA offers remarkable downscaling to the nanoscale, which leads to high-density storage, ultrahigh switching speed, and long operational cyclability, all of which help in designing efficient systems for image processing applications [38]. The ability to change synaptic weights is a crucial mechanism in the learning process of the human brain [34]. A neuromorphic MCA can be introduced to emulate this brain mechanism for image and speech recognition. Because memristor-based systems are nonvolatile, low-power, and nanoscale, they are highly suitable for in-memory computing and for implementing computing systems [28] such as CNNs.

The analytical model [34] used to develop the neuromorphic MCA-based model has been validated against experimental results for Y2O3-based memristive systems. This nonlinear model describes the synaptic learning of Y2O3-based devices in detail. The analytical model used in this paper is represented by Equations (3), (4), and (5). The flux-controlled nature of the MCA is expressed by the first term on the right-hand side of the I–V relationship in Equation (3).

| (3) |

The parameters a1 and a2 indicate the impact of the state variable on the device current for positive and negative applied programming voltages, respectively. The parameters b1 and b2 set the slope of the conductance in the I–V characteristics. The parameters α1 and α2 control the hysteresis loop area, and w denotes the state variable.

| (4) |

| (5) |

The parameters χ and γ describe the net electronic barrier of the MCA. f(w) is the piecewise window function shown in Equation (4), which ensures that the state variable is confined between 0 and 1. Equation (5) gives the time derivative of the state variable, where A and m determine the impact of the input voltage on the state variable. The analytical model proposed here can be applied to either unipolar or bipolar systems. The parameter p restricts the window function between 0 and 1.

The developed MCA-based model exhibits various synaptic functionalities, such as learning and forgetting behavior and synaptic plasticity [39]. Furthermore, the design of the analytical model [34] is inspired by an experimentally fabricated crossbar architecture that has successfully captured various synaptic and resistive memory characteristics.

3. Results and discussion

3.1. Tunable Q-wavelet transform image decomposition techniques

In this work, the sub-bands formed by TQWT-based image decomposition are used for deep feature extraction. Three major experiments are carried out in the current study. The first identifies the best level of image decomposition and the optimized values of Q, r, and J. The second processes the input images through the MCA-based model with the TQWT parameters set to their optimized values, for the subsequent diagnosis of COVID-19 with efficient image storage. In the third experiment, the proposed model is studied for the classification of two-class chest X-ray image databases using the best CNN model, optimizer, and classifier reported in [3].

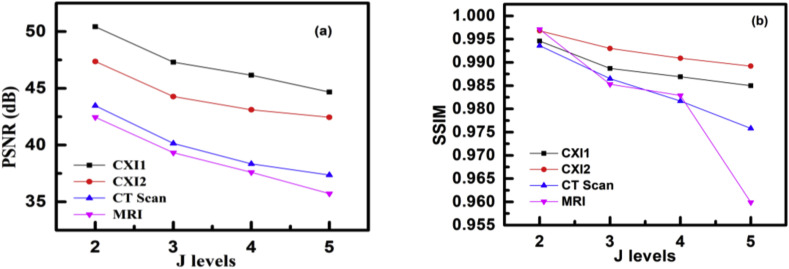

Decomposition has been performed on the images mentioned earlier at various values of the TQWT parameters to obtain their optimum values. The decomposition is accomplished through parallel low-pass and high-pass filtering [16]. The sub-band coefficients obtained at the optimized parameters are used to reconstruct the output images. The filter banks for the decomposition levels applied in this work are shown in Fig. 5: panels (a), (b), (c), and (d) show the magnitude versus frequency plots of the TQWT filters for the second, third, fourth, and fifth decomposition levels, respectively. A J-level decomposition requires J+1 filters, each of which is drawn in a different color in Fig. 5 for easy differentiation. The PSNR and SSIM between the input image and the reconstructed image are evaluated at different values of Q, r, and J and are tabulated in Table 1. The variation in PSNR and SSIM is shown in Fig. 6(a) and (b), respectively. As the decomposition level increases, the PSNR and SSIM values decline, since the degradation in image quality increases with the decomposition level. A similar decline is observed for Q larger than 4 at J = 2, as seen in Table 1, which may be because changing the Q of the wavelet used for decomposition changes the compatibility of the wavelet with the corresponding image.
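The two quality measures can be sketched as follows, assuming 8-bit images (peak value 255): PSNR = 10·log10(peak²/MSE). For brevity, `ssim_global` evaluates the SSIM expression over a single window covering the whole image; the standard SSIM (as in MATLAB's `ssim`) averages the same expression over local windows, so its values will differ from those in Table 1. The synthetic image is a placeholder, not data from this work.

```python
import numpy as np

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    tst = np.asarray(test, dtype=float)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak**2 / mse)

def ssim_global(x, y, peak=255.0, k1=0.01, k2=0.03):
    """SSIM evaluated over one window covering the whole image (a simplification)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

rng = np.random.default_rng(1)
image = rng.integers(0, 256, size=(64, 64)).astype(float)   # synthetic stand-in
reconstructed = image + rng.normal(0.0, 1.0, size=image.shape)
print(f"PSNR = {psnr(image, reconstructed):.2f} dB, SSIM = {ssim_global(image, reconstructed):.4f}")
```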

Fig. 5.

Filter-bank for TQWT decomposition at (a) J = 2, (b) J = 3, (c) J = 4, and (d) J = 5.
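The filter-bank view of Fig. 5 can be reproduced in outline with the sketch below. It assumes the frequency responses given by Selesnick [16]: H0 and H1 are flat outside the transition band (1 − β)π < |ω| < απ and use the Daubechies-type transition function θ(ω) = 0.5(1 + cos ω)√(2 − cos ω) inside it. The equivalent response of the j-th high-pass sub-band is H1(ω/α^(j−1)) multiplied by the low-pass responses of all earlier stages, and the last sub-band is the product of all J low-pass responses, giving the J + 1 sub-bands plotted in Fig. 5. The function names are illustrative.

```python
import numpy as np

def theta(w):
    """Transition function of the TQWT filters: 0.5(1 + cos w) sqrt(2 - cos w)."""
    return 0.5 * (1.0 + np.cos(w)) * np.sqrt(2.0 - np.cos(w))

def h0_h1(w, alpha, beta):
    """Low-pass H0 and high-pass H1 frequency responses evaluated at |w|.

    |w| above pi is treated as stop band for H0 and pass band for H1, so
    |H0|^2 + |H1|^2 = 1 holds at every frequency.
    """
    w = np.abs(np.asarray(w, dtype=float))
    lo, hi = (1.0 - beta) * np.pi, alpha * np.pi
    width = alpha + beta - 1.0
    H0 = np.where(w <= lo, 1.0, 0.0)
    H1 = np.where(w >= hi, 1.0, 0.0)
    band = (w > lo) & (w < hi)
    H0[band] = theta((w[band] + (beta - 1.0) * np.pi) / width)
    H1[band] = theta((alpha * np.pi - w[band]) / width)
    return H0, H1

def subband_responses(Q, r, J, w):
    """Equivalent frequency responses of the J + 1 TQWT sub-bands."""
    beta = 2.0 / (Q + 1.0)
    alpha = 1.0 - beta / r
    w = np.asarray(w, dtype=float)
    bands = []
    lowpass = np.ones_like(w)
    for j in range(J):
        H0, H1 = h0_h1(w / alpha**j, alpha, beta)
        bands.append(lowpass * H1)   # high-pass sub-band of level j + 1
        lowpass = lowpass * H0       # approximation passed on to the next level
    bands.append(lowpass)            # final low-pass (approximation) sub-band
    return bands

w = np.linspace(0.0, np.pi, 2001)
bands = subband_responses(Q=4, r=3, J=2, w=w)
energy = sum(b**2 for b in bands)    # sum of squared responses at each frequency
```

Because |H1|² = 1 − |H0|² at every frequency, the squared sub-band responses telescope to one, i.e., the filter bank preserves energy and permits perfect reconstruction.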

Table 1.

Optimizing quality measurements for TQWT decomposed images.

| Q | r | J | Chest X-ray image 1 PSNR (dB) | Chest X-ray image 1 SSIM | Chest X-ray image 2 PSNR (dB) | Chest X-ray image 2 SSIM | CT scan PSNR (dB) | CT scan SSIM | MRI PSNR (dB) | MRI SSIM |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 | 3 | 2 | 47.9173 | 0.9901 | 45.7127 | 0.9949 | 42.4208 | 0.9904 | 41.7225 | 0.9958 |
| 4 | 3 | 2 | 50.4271 | 0.9946 | 47.3663 | 0.9968 | 43.4716 | 0.9936 | 42.4547 | 0.9971 |
| 5 | 3 | 2 | 49.1728 | 0.9924 | 46.4849 | 0.9957 | 41.9729 | 0.9922 | 40.7496 | 0.9952 |
| 6 | 3 | 2 | 49.0592 | 0.9922 | 46.1085 | 0.9952 | 40.9478 | 0.9913 | 39.7352 | 0.9931 |
| 7 | 3 | 2 | 48.5168 | 0.9921 | 45.7690 | 0.9946 | 40.1148 | 0.9900 | 39.1375 | 0.9902 |
| 3 | 3 | 3 | 46.5160 | 0.9873 | 43.9821 | 0.9923 | 39.5144 | 0.9843 | 38.6477 | 0.9853 |
| 4 | 3 | 3 | 47.2999 | 0.9887 | 44.2713 | 0.9930 | 40.1446 | 0.9865 | 39.3283 | 0.9924 |
| 5 | 3 | 3 | 48.7629 | 0.9922 | 45.8647 | 0.9952 | 41.5023 | 0.9912 | 40.2830 | 0.9946 |
| 6 | 3 | 3 | 48.0599 | 0.9902 | 45.2835 | 0.9941 | 40.6298 | 0.9900 | 39.4280 | 0.9922 |
| 7 | 3 | 3 | 48.3067 | 0.9917 | 45.5364 | 0.9945 | 40.0446 | 0.9897 | 39.0282 | 0.9900 |
| 3 | 3 | 4 | 44.2128 | 0.9850 | 41.7548 | 0.9878 | 36.5341 | 0.9764 | 35.6735 | 0.9569 |
| 4 | 3 | 4 | 46.1557 | 0.9869 | 43.1073 | 0.9909 | 38.3391 | 0.9817 | 37.5975 | 0.9829 |
| 5 | 3 | 4 | 46.4327 | 0.9870 | 43.5358 | 0.9915 | 38.9386 | 0.9840 | 38.0069 | 0.9889 |
| 6 | 3 | 4 | 47.6471 | 0.9900 | 44.7899 | 0.9936 | 40.1210 | 0.9823 | 38.9252 | 0.9913 |
| 7 | 3 | 4 | 47.6001 | 0.9904 | 44.9237 | 0.9935 | 39.6900 | 0.9883 | 38.6860 | 0.9889 |
| 3 | 3 | 5 | 42.2357 | 0.9809 | 39.1245 | 0.9807 | 33.8711 | 0.9704 | 33.6807 | 0.9295 |
| 4 | 3 | 5 | 44.6694 | 0.9850 | 41.8046 | 0.9875 | 36.5913 | 0.9758 | 35.7242 | 0.9599 |
| 5 | 3 | 5 | 45.3264 | 0.9846 | 42.4492 | 0.9892 | 37.3568 | 0.9789 | 36.5811 | 0.9786 |
| 6 | 3 | 5 | 45.9121 | 0.9854 | 42.9670 | 0.9901 | 38.1996 | 0.9823 | 37.0857 | 0.9844 |
| 7 | 3 | 5 | 47.2194 | 0.9901 | 44.3838 | 0.9929 | 39.1981 | 0.9869 | 38.2265 | 0.9877 |

Fig. 6.

Variation in (a) PSNR and (b) SSIM of images for different J levels.

The parameter values Q = 4, r = 3, and J = 2 produce the best results in terms of PSNR and SSIM. This may be due to the compatibility of the wavelet with Q = 4 and r = 3 with the corresponding images, together with the fact that a lower decomposition level yields better reconstructed image quality. The implementation of TQWT can also be understood easily in terms of low-pass and high-pass filters; Fig. 5 shows the filter bank used to implement TQWT. In this study, the best decomposition level is chosen among levels 2, 3, 4, and 5, as shown in Table 1, and the best-tuned parameter values are J = 2, Q = 4, and r = 3.
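The selection step can be expressed as a small grid search over the Table 1 results. The sketch below uses the chest X-ray image 1 values for J = 2 and J = 3 only, to stay short; the rule "highest PSNR, with SSIM breaking ties" and the helper name `best_parameters` are assumptions, since the text only states that the chosen setting is best in both measures.

```python
# PSNR (dB) and SSIM of chest X-ray image 1 copied from Table 1 (r = 3; J = 2 and 3 only).
table1_cxr1 = {
    (3, 3, 2): (47.9173, 0.9901), (4, 3, 2): (50.4271, 0.9946),
    (5, 3, 2): (49.1728, 0.9924), (6, 3, 2): (49.0592, 0.9922),
    (7, 3, 2): (48.5168, 0.9921),
    (3, 3, 3): (46.5160, 0.9873), (4, 3, 3): (47.2999, 0.9887),
    (5, 3, 3): (48.7629, 0.9922), (6, 3, 3): (48.0599, 0.9902),
    (7, 3, 3): (48.3067, 0.9917),
}

def best_parameters(results):
    """(Q, r, J) with the highest PSNR; SSIM breaks ties (hypothetical selection rule)."""
    return max(results, key=lambda qrj: results[qrj])

best = best_parameters(table1_cxr1)
psnr_db, ssim = table1_cxr1[best]
print(f"Q = {best[0]}, r = {best[1]}, J = {best[2]}: PSNR = {psnr_db} dB, SSIM = {ssim}")
```

Ranking by SSIM alone selects the same setting here, so the choice does not depend on which measure is prioritized.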

3.2. Image classification using CNN with MCA-based model

The classification performance for COVID-19 on the chest X-ray image databases is studied. Earlier studies have shown that ResNet50 gives better performance out of the maximum, minimum, average, and fusion operations [3]. There are two output classes in the classification: COVID-19 and normal. The input images for the parameter study are designated as chest X-ray image 1, chest X-ray image 2, computed tomography (CT) scan, and magnetic resonance imaging (MRI), where chest X-ray image 1 is a COVID-19 image taken from the large dataset and chest X-ray image 2 is a normal image taken from the small dataset. The CT scan and MRI images are taken from Refs. [40,41], respectively. These images are considered to check whether the optimum TQWT decomposition parameters differ between images. Table 2 shows the input and output images decomposed using the optimized values of Q, r, and J, along with the corresponding quality measures. The range of the quality measures differs between images because of differences in image size and resolution. These TQWT parameter values are used to decompose all chest X-ray images from the datasets in the next stage, image classification. In this phase, each filter bank has a frequency coefficient represented by ‘ω’ in Fig. 3, and the sub-band (v) coefficients of the decomposed chest X-ray images at the optimized level are fed as input voltages (v) to the MCA-based model described earlier. The images retrieved from the MCA are then used to train the CNN models mentioned earlier. The parameters used for performance evaluation in this application are accuracy, sensitivity, specificity, and precision [3], which are defined by Equations (6), (7), (8), and (9), respectively.

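$$\text{Accuracy} = \frac{\mathrm{NTP} + \mathrm{NTN}}{\mathrm{NTP} + \mathrm{NTN} + \mathrm{NFP} + \mathrm{NFN}} \tag{6}$$

$$\text{Sensitivity} = \frac{\mathrm{NTP}}{\mathrm{NTP} + \mathrm{NFN}} \tag{7}$$

$$\text{Specificity} = \frac{\mathrm{NTN}}{\mathrm{NTN} + \mathrm{NFP}} \tag{8}$$

$$\text{Precision} = \frac{\mathrm{NTP}}{\mathrm{NTP} + \mathrm{NFP}} \tag{9}$$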

Table 2.

Input and output images obtained after decomposition using optimized TQWT parameters.

| Images | Chest X-ray image 1 | Chest X-ray image 2 | CT scan | MRI |
|---|---|---|---|---|
| Input | | | | |
| Reconstructed output (Q = 4, r = 3, and J = 2) | | | | |
| Quality measures | PSNR = 50.4271 dB, SSIM = 0.9946 | PSNR = 47.3663 dB, SSIM = 0.9968 | PSNR = 43.4716 dB, SSIM = 0.9936 | PSNR = 42.4547 dB, SSIM = 0.9971 |

In the above equations, NTP, NTN, NFP, and NFN represent the numbers of true positives, true negatives, false positives, and false negatives, respectively. Fig. 7 shows the variation in accuracy with the number of training iterations for the two CNN models, for both the small and large chest X-ray image datasets. The training accuracy, though low at the beginning, reaches nearly 90% within very few iterations, as the CNN model learns the features better with each iteration and improves its ability to classify the chest X-ray images. After a few more iterations, the accuracy is always observed to remain above 80%.

Fig. 7.

Training and validation plots for CNN models deploying (a) ResNet50 for a small dataset, (b) AlexNet for a small dataset, (c) ResNet50 for a large dataset, and (d) AlexNet for a large dataset.

During training, additional data are used to validate the model. The accuracy versus iterations during validation is also shown in Fig. 7. Fig. 7(a) and (b) show the training and validation accuracy when building the CNN with the small dataset for the ResNet50 and AlexNet models, respectively, and Fig. 7(c) and (d) show the same for the large dataset. The training and validation accuracy is better for the small dataset than for the large dataset, since the small dataset has fewer chest X-ray images to validate and test. For the classification of COVID-19 chest X-ray images in both datasets, higher values of the assessment parameters are achieved than those reported in [40] by using TQWT-decomposed chest X-ray images through the MCA-based model. The chest X-ray images from the two datasets are classified as COVID-19 or normal using ResNet50 and AlexNet, and the corresponding confusion matrices are obtained. The size of the deep feature vector from the last fully connected layer depends on the type of pre-trained network. A support vector machine (SVM) classifier is used in the proposed work, as this classifier gives better performance than other reported classifiers for image classification [3].

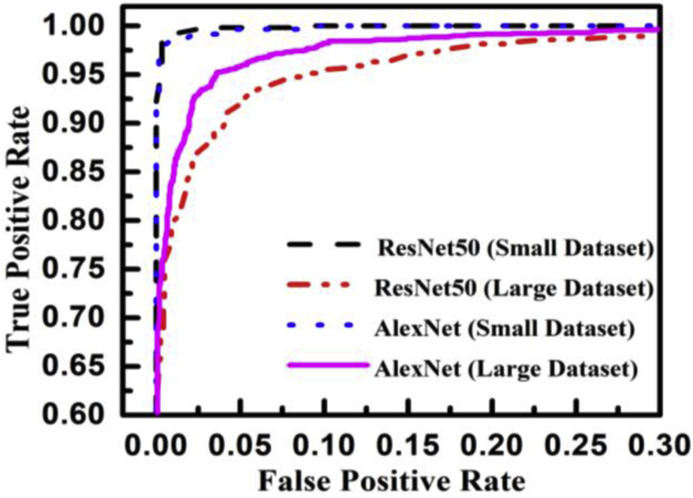

In the confusion matrix, the true class is represented along the rows and the predicted class along the columns. The diagonal elements give NTP and NTN, and the off-diagonal elements give NFP and NFN; these values are listed in Table 3 for the chest X-ray images of the two datasets classified as COVID-19 or normal by ResNet50 and AlexNet. The evaluation parameters calculated from the confusion matrix values, namely accuracy, sensitivity, specificity, and precision, are given in Table 4. The receiver operating characteristic (ROC) curve, which indicates the diagnostic capability of the classifier and the relation between clinical sensitivity and specificity for every possible cut-off, is plotted for both datasets in Fig. 8. To obtain the ROC curve, only the true positive rate (TPR) and the false positive rate (FPR) are needed as functions of a classifier parameter, such as the decision threshold. The TPR, plotted on the y-axis, shows how many correct positive results occur among all positive samples available during the test, and the FPR, calculated as FPR = 1 − specificity, is plotted on the x-axis. Classifiers whose curves lie closer to the top-left corner perform better. Apart from the quality measurement parameters, the ROC curve is another useful way to visualize the performance of a classifier [42]. Fig. 8 shows that the small dataset has a better ROC, indicating that the classifier distinguishes clearly between the two classes. The ROC can be understood as a probability curve that shows how well the model differentiates chest X-ray images with COVID-19 from those without. A good classification model is expected to have a large area under its ROC curve, so when multiple models are compared, one can be selected by examining the corresponding ROC curves.
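An ROC curve and the area under it can be computed from classifier scores by sweeping the decision threshold, as in the plain-Python sketch below. The labels and scores are made-up toy values; in the proposed pipeline they would presumably be the true classes and the SVM decision scores of the test images, but the score used for Fig. 8 is not stated, so that pairing is an assumption. The function names are illustrative.

```python
def roc_points(labels, scores):
    """Return (fpr, tpr) lists for every distinct decision threshold.

    labels: 1 = COVID-19 (positive class), 0 = normal.
    scores: larger values mean "more likely COVID-19".
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with equal scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        tpr.append(tp / n_pos)   # sensitivity at this threshold
        fpr.append(fp / n_neg)   # 1 - specificity at this threshold
    return fpr, tpr

def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2.0
               for k in range(1, len(fpr)))

# Toy example (made-up scores): 1 = COVID-19, 0 = normal.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
fpr, tpr = roc_points(labels, scores)
area = auc_trapezoid(fpr, tpr)
```

For this toy data, one normal image outscores one COVID-19 image, so the area is 8/9 rather than 1; a perfect separation would trace the top-left corner and give an area of 1.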

Table 3.

Output images after classification using the MCA-based model, with confusion-matrix-based parameters.

| Chest X-ray images | Small dataset, ResNet50 | Small dataset, AlexNet | Large dataset, ResNet50 | Large dataset, AlexNet |
|---|---|---|---|---|
| COVID-19 positive | | | | |
| Normal/healthy | | | | |
| NTP | 588 | 591 | 1608 | 1584 |
| NFP | 8 | 5 | 78 | 102 |
| NFN | 6 | 9 | 68 | 113 |
| NTN | 590 | 587 | 1618 | 1573 |

Table 4.

Image classification quality measurement parameters.

| Chest X-ray images | Small dataset, ResNet50 | Small dataset, AlexNet | Large dataset, ResNet50 | Large dataset, AlexNet |
|---|---|---|---|---|
| Confusion matrix | | | | |
| Accuracy (%) | 98.82 | 98.82 | 95.67 | 93.62 |
| Precision (%) | 98.65 | 99.16 | 95.37 | 93.95 |
| Sensitivity (%) | 98.98 | 98.50 | 95.94 | 93.34 |
| Specificity (%) | 98.66 | 99.15 | 95.40 | 93.91 |
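As a consistency check, the Table 4 percentages can be recomputed from the Table 3 counts using the definitions of accuracy, sensitivity, specificity, and precision in Equations (6)-(9). The sketch below does this; the recomputed values agree with Table 4 to within 0.01 percentage points (some table entries appear truncated rather than rounded in the last digit). The helper name is illustrative.

```python
def classification_metrics(ntp, nfp, nfn, ntn):
    """Accuracy, sensitivity, specificity and precision (Eqs. (6)-(9)) in percent."""
    return {
        "accuracy": 100.0 * (ntp + ntn) / (ntp + ntn + nfp + nfn),
        "sensitivity": 100.0 * ntp / (ntp + nfn),
        "specificity": 100.0 * ntn / (ntn + nfp),
        "precision": 100.0 * ntp / (ntp + nfp),
    }

# (NTP, NFP, NFN, NTN) as listed in Table 3.
table3 = {
    ("small", "ResNet50"): (588, 8, 6, 590),
    ("small", "AlexNet"): (591, 5, 9, 587),
    ("large", "ResNet50"): (1608, 78, 68, 1618),
    ("large", "AlexNet"): (1584, 102, 113, 1573),
}
results = {key: classification_metrics(*counts) for key, counts in table3.items()}
for key, m in results.items():
    print(key, {name: f"{value:.2f}" for name, value in m.items()})
```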

Fig. 8.

ROC plot for small and large datasets for AlexNet and ResNet50 models.

Table 5 compares the performance of the proposed method with methods available in the literature for diagnosing COVID-19 from chest X-ray image databases. Accuracy, precision, specificity, and sensitivity values of 98.82%, 98.65%, 98.66%, and 98.98%, respectively, have been achieved for the classification of images in the small dataset using ResNet50 in the proposed model. For AlexNet, the corresponding values for the small dataset are 98.82%, 99.16%, 99.15%, and 98.50%, as shown in Table 5. For the small and large datasets, mostly lower values of the assessment parameters have been reported with other CNN models, as shown in Table 5 [43–47]. For the classification of images in the large dataset with our model, the accuracy, precision, specificity, and sensitivity are 95.67%, 95.37%, 95.40%, and 95.94%, respectively, with ResNet50, and 93.62%, 93.95%, 93.91%, and 93.34%, respectively, with AlexNet, as given in Table 5 [43,44,48–54].

Table 5.

Performance comparison of the proposed method with others for the identification of COVID-19 using chest X-ray image databases.

| Dataset | Ref. | Model | Accuracy (%) | Precision (%) | Specificity (%) | Sensitivity (%) |
|---|---|---|---|---|---|---|
| Small | [46] | AlexNet | 99.00 | 98.00 | 99.00 | 99.00 |
| Small | [43] | Covid-Net | 93.30 | 98.90 | – | 91.00 |
| Small | [47] | Modified MobileNet | 95.00 | 99.00 | – | 96.00 |
| Small | Our work | ResNet50 | 98.82 | 98.65 | 98.66 | 98.98 |
| Small | Our work | AlexNet | 98.82 | 99.16 | 99.15 | 98.50 |
| Large | [44] | COVID-Net | 90.10 | 84.00 | – | 98.20 |
| Large | [44] | DenseNet-201 | 91.75 | 94.24 | 78.00 | – |
| Large | [48] | ResNet50+SVM | 95.38 | – | 93.47 | 97.29 |
| Large | [49] | ResNet-101 | 71.90 | – | 71.80 | 77.30 |
| Large | [50] | XCOVNet | 98.44 | 99.29 | – | 99.48 |
| Large | [51] | Xception | 91.00 | 92.00 | – | 87.00 |
| Large | [52] | ResNet-50 | 98.00 | 94.81 | 98.44 | 87.29 |
| Large | [53] | DenseNet-121 | 88.00 | – | 90.00 | 87.00 |
| Large | [43] | Modified ResNet | 99.30 | – | – | 99.10 |
| Large | [54] | XCOVNet | 88.90 | 83.40 | 96.40 | 85.90 |
| Large | Our work | ResNet50 | 95.67 | 95.37 | 95.40 | 95.94 |
| Large | Our work | AlexNet | 93.62 | 93.95 | 93.91 | 93.34 |

On the small dataset, the accuracy of the proposed method with both CNN models is 5.92% and 4.02% higher, relative to the reference values, than that of COVID-Net [43] and Modified MobileNet [47], respectively. On the large dataset, the proposed method with ResNet50 achieves 33.06%, 8.71%, 7.61%, 6.18%, 5.13%, and 4.27% higher accuracy than ResNet-101 [49], DenseNet-121 [53], XCOVNet [54], COVID-Net [44], Xception [51], and DenseNet-201 [44], respectively.

This comparison with the reported literature for both datasets shows that conventional approaches mostly yield lower accuracy, precision, specificity, and sensitivity than the proposed work. A few models report higher accuracy than the proposed work: [46] on the small dataset and [43,50,52] on the large dataset. Except for [52], these models also report higher sensitivity. However, these image classification technologies run on conventional CMOS-based systems. Such systems require more computational operations, chip area, energy, and processing time, all of which directly increase the cost of the overall system [19,20]. These limitations can be overcome with an MCA, which reduces total energy consumption, the number of computational operations, area, processing time, and power consumption compared to the conventional system [44]. An MCA also helps circumvent the von Neumann bottleneck.

As listed in Table 6, Halawani et al. [19] and Khalid et al. [20] have demonstrated image processing and digital logic circuits, respectively, that need fewer devices and operations in the MCA-based model than in their conventional CMOS-based counterparts. We also compare compression of a 512 × 512 image using our MCA-based TQWT model with a conventional CMOS-based model, taking the values of the various parameters from Ref. [19]. As Table 6 shows, the MCA-based model outperforms the conventional CMOS-based models on every listed parameter. This makes the memristor-based approach attractive for image processing applications. This component-level comparison can also guide circuit design for image classification with MCA-based architectures [45].
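The percentages quoted above are relative improvements, (A_ours − A_ref)/A_ref, rather than percentage-point differences. For example, 98.82% versus 93.30% is a 5.52-point gap but about a 5.92% relative gain. The short sketch below recomputes the figures from the accuracy values in Table 5. The function name is illustrative. Rounding gives 8.72% and 7.62% for DenseNet-121 and XCOVNet [54], where the text lists 8.71% and 7.61%; the text appears to truncate rather than round.

```python
def relative_gain(ours: float, reference: float) -> float:
    """Improvement of `ours` over `reference`, in percent of `reference`."""
    return 100.0 * (ours - reference) / reference


# Accuracy values (%) taken from Table 5.
small_ours = 98.82  # ResNet50 and AlexNet give the same accuracy on the small dataset
small_refs = {"COVID-Net [43]": 93.30, "Modified MobileNet [47]": 95.00}

large_ours = 95.67  # ResNet50 on the large dataset
large_refs = {
    "ResNet-101 [49]": 71.90,
    "DenseNet-121 [53]": 88.00,
    "XCOVNet [54]": 88.90,
    "COVID-Net [44]": 90.10,
    "Xception [51]": 91.00,
    "DenseNet-201 [44]": 91.75,
}

for label, ours, refs in (("small", small_ours, small_refs),
                          ("large", large_ours, large_refs)):
    for name, acc in refs.items():
        print(f"{label} dataset vs {name}: {relative_gain(ours, acc):.2f}% higher")
```

Reporting relative rather than absolute gains inflates comparisons against weak baselines such as ResNet-101 [49]. Stating both the relative and the absolute difference keeps the comparison transparent.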

Table 6.

Comparison of conventional digital CMOS-based computing with MCA-based in-memory computing.

| Parameter | CMOS | Memristor (MCA) | Improvement |
|---|---|---|---|
| Compression of a 128 × 128 image [19] | | | |
| Number of operations | 128² × 4 × 5 | 128² × 2 | 10 times |
| Area (μm²) | 327000 | 7864.2 | ≈42 times |
| Processing time (μs) | 19.2 | 15 | 1.28 times |
| Energy consumption (nJ) | 70.9080 | 6.4398 | 11 times |
| 3-bit full adder circuit [20] | | | |
| Number of transistors | 34 | 24 | 10 fewer transistors |
| Processing time (ps) | 75.3 | 62.4 | 14.84% |
| Power consumption (μW) | 117.3 | 53.08 | 54.74% |
| Our work: compression of a 512 × 512 image | | | |
| Number of operations [19,20] | 512³ + (512² × 511) | 512² | 1023 times |
| Area (μm²) [19] | 1585446.912 | 41943.04 | 37.8 times |
| Energy consumption (pJ) [19] | 1115.4 | 1.484 | 752 times |
| Processing time (μs) [19,20] | 0.15 | 0.06 | 2.5 times |
| Power consumption (μW) [19] | 7436 | 24.733 | 300 times |
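The improvement factors for the 512 × 512 case can be checked directly from the tabulated values. The sketch below recomputes them. It assumes that average power is energy divided by processing time; that assumption is ours and is not stated in the table. With energy in pJ and time in μs, the quotient is in μW (10⁻¹² J / 10⁻⁶ s = 10⁻⁶ W). This gives 7436 μW for CMOS and about 24.73 μW for the MCA, matching the magnitudes in the power row. The operation count ratio simplifies to (N³ + N²(N − 1))/N² = 2N − 1 = 1023 for N = 512.

```python
# Values for the 512 x 512 compression case, taken from Table 6.
N = 512

ops_cmos = N**3 + N**2 * (N - 1)  # 512^3 + (512^2 x 511)
ops_mca = N**2                    # 512^2
print("operations ratio:", ops_cmos // ops_mca)  # equals 2N - 1 = 1023

area_cmos_um2, area_mca_um2 = 1585446.912, 41943.04
energy_cmos_pj, energy_mca_pj = 1115.4, 1.484
time_cmos_us, time_mca_us = 0.15, 0.06

print(f"area ratio: {area_cmos_um2 / area_mca_um2:.1f}")
print(f"energy ratio: {energy_cmos_pj / energy_mca_pj:.0f}")
print(f"time ratio: {time_cmos_us / time_mca_us:.2f}")

# Assumed: average power = energy / time; pJ / us = 1e-12 J / 1e-6 s = 1 uW.
p_cmos_uw = energy_cmos_pj / time_cmos_us
p_mca_uw = energy_mca_pj / time_mca_us
print(f"power: CMOS {p_cmos_uw:.1f} uW, MCA {p_mca_uw:.2f} uW, "
      f"ratio {p_cmos_uw / p_mca_uw:.0f}")
```

The power ratio rounds to 301 here (7436/24.733 ≈ 300.6), consistent with the roughly 300-fold improvement listed in the table.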

At the hardware level, an MCA can be used specifically to accelerate artificial neural network computation. Unlike conventional computer processors [55], an MCA processes the data stored in it in parallel. This increases both computational speed and fault tolerance and significantly reduces system power consumption [56]. In this work, the performance of the proposed MCA-based method is compared with reported deep learning models based on conventional technology. The comparison indicates that an MCA can deliver high processing speed with savings in energy, power, area, and cost, without compromising image processing and classification performance.
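The parallelism described above comes from computing a matrix-vector product in the analog domain in a single read step. Each memristor passes a current I = G·V (Ohm's law), and each column wire sums the currents of its devices (Kirchhoff's current law). The column currents therefore equal the input voltage vector multiplied by the conductance matrix.

The minimal sketch below is an idealized textbook model, not the authors' MCA circuit. It ignores wire resistance, sneak paths, device nonlinearity and variability, and the DAC/ADC stages. It maps signed weights onto a differential pair of crossbars, which is one common scheme among several. The helper names, the 100 μS maximum conductance, and the random weights and voltages are hypothetical.

```python
import numpy as np


def crossbar_mvm(conductances: np.ndarray, voltages: np.ndarray) -> np.ndarray:
    """Column currents of an ideal crossbar.

    conductances: (rows, cols) array; G[i, j] is the device at row i, column j (S).
    voltages: (rows,) read voltages applied to the rows (V).
    Ohm's law gives each device current G[i, j] * V[i]; Kirchhoff's current law
    sums them along each column, so I[j] = sum_i V[i] * G[i, j].
    """
    return voltages @ conductances


def to_differential_conductances(weights: np.ndarray, g_max: float):
    """Split signed weights into two non-negative conductance arrays.

    Returns (g_pos, g_neg, scale) with g_pos - g_neg == weights * scale.
    """
    scale = g_max / np.max(np.abs(weights))
    g_pos = np.clip(weights, 0, None) * scale   # positive weights
    g_neg = np.clip(-weights, 0, None) * scale  # magnitudes of negative weights
    return g_pos, g_neg, scale


rng = np.random.default_rng(0)
W = rng.normal(size=(4, 3))          # hypothetical layer weights
x = rng.uniform(0.0, 0.2, size=4)    # hypothetical inputs encoded as read voltages (V)

g_pos, g_neg, scale = to_differential_conductances(W, g_max=100e-6)  # assumed 100 uS
i_out = crossbar_mvm(g_pos, x) - crossbar_mvm(g_neg, x)  # subtract paired column currents
print(np.allclose(i_out / scale, x @ W))  # matches the digital product
```

Because every column current forms simultaneously, the cost of the product no longer grows with the number of multiply-accumulate operations, as it does on a sequential digital processor. This is the property the operation counts in Table 6 reflect. In real arrays, the non-idealities ignored here reduce the precision of the result.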

4. Conclusion

This work explores the merits of combining TQWT- and MCA-based techniques for effective detection of COVID-19 from chest X-ray images. The study first determines the optimum TQWT parameters for decomposing chest X-ray images. With Q = 4, r = 3, and J = 2, the highest peak signal-to-noise ratio and structural similarity index measure obtained are 50.4271 dB and 0.9946, respectively. Features are then extracted from the decomposed images, which are stored in the MCA, using two convolutional neural network models: ResNet50 and AlexNet. The MCA-based model achieves high average accuracies of 98.82% and 94.64% on the small and large datasets, which contain 2193 and 5275 chest X-ray images, respectively. The approach can overcome the limitations of complementary metal oxide semiconductor (CMOS)-based technology. Compared with current technology, it offers lower complexity, shorter processing time, lower energy, power, and area consumption, and lower estimated cost. The image processing capability of the MCA-based model improves the operational efficiency of the neural network and reduces system energy consumption compared with other reported convolutional neural network models. In addition, MCA-based image processing can enhance processing speed and accuracy while reducing the number of operations, area, and energy consumption. This work can be extended to identify different stages of coronavirus disease 2019 and to build an on-chip architecture based on an MCA. It can also be adapted to diagnose other diseases, such as influenza and tuberculosis, from chest X-ray images, CT scans, and other imaging techniques.

Declaration of competing interest

The authors, Kumari Jyoti, Sai Sushma, Saurabh Yadav, Pawan Kumar, Ram Bilas Pachori, and Shaibal Mukherjee, declare no conflict of interest.

Acknowledgment

This work is partially supported by the CRDT project (No. IITI/CRDT/2022-23/04), and CSIR (No. 22(0841)/20/EMR-II).

Biographies

Kumari Jyoti (Member, IEEE) received the B.E. degree with honors in Electronics and Communication Engineering from TIT College, Rajiv Gandhi Technological University, Bhopal, India, in 2014. She received the M.Tech. degree in nanotechnology from the National Institute of Technology, Kurukshetra, India, in 2017. She is currently pursuing the Ph.D. degree with the Department of Electrical Engineering, Indian Institute of Technology Indore, India. She has authored and co-authored several research papers in reputed international journals and conferences. Her current research interests include analytical modelling of metal oxide-based non-volatile memories. They also include applying memristive crossbar arrays (MCAs) to biomedical image processing, machine learning, deep neural networks, artificial intelligence, and the Internet of Things (IoT) in the healthcare and agriculture domains using convolutional neural networks.

Sai Sushma Sarvisetti (Member, IEEE) received her Bachelor's degree in Electronics and Communication Engineering from Gayatri Vidya Parishad College of Engineering, India, in 2020. She is currently pursuing a Master's degree in VLSI Design and Nanotechnology at the Indian Institute of Technology Indore, India. Her research interests include emerging technologies such as memristors, CNNs, and VLSI design.

Saurabh Yadav (Member, IEEE) student member (M′19) received the B.Sc. degree (non-medical) from Kurukshetra University, Kurukshetra, India, in 2015. He received the M.Sc. degree in Applied Physics from Kurukshetra University, Kurukshetra, Haryana, India, in 2017, and the M.Sc. degree in Mathematics from Kurukshetra University (DDE), Kurukshetra, Haryana, India, in 2021. He is currently pursuing the Ph.D. degree in the Centre for Advanced Electronics at the Indian Institute of Technology Indore, India. His current research interests include analytical model development and fabrication of memristors and memtransistors. Mr. Yadav's awards and honours include a JRF under the UGC fellowship and qualifying GATE (Graduate Aptitude Test in Engineering) in 2020.

Pawan Kumar (Member, IEEE) student member (M′19) received the B.Tech. degree in electronics and communication engineering from Rajasthan Technical University Kota, India, in 2013. He received the M.Tech. degree in Material Science and Nanotechnology from the National Institute of Technology, Kurukshetra, Haryana, India, in 2016. He is currently pursuing the Ph.D. degree in electrical engineering at the Indian Institute of Technology Indore, India. He has authored and co-authored 7 articles. His current research interests include analytical model development and fabrication of MgZnO/ZnO or CdZnO heterostructure field effect transistors (HFETs). Mr. Kumar's awards and honors include a JRF under the DST-SERB fellowship and qualifying GATE (Graduate Aptitude Test in Engineering) in 2016, 2014, and 2013.

Ram Bilas Pachori (Senior Member, IEEE) received the B.E. degree with honours in Electronics and Communication Engineering from Rajiv Gandhi Technological University, Bhopal, India, in 2001. He received the M.Tech. and Ph.D. degrees in Electrical Engineering from the Indian Institute of Technology (IIT) Kanpur, Kanpur, India, in 2003 and 2008, respectively. He worked as a Postdoctoral Fellow at the Charles Delaunay Institute, University of Technology of Troyes, Troyes, France, during 2007–2008. He served as an Assistant Professor at the Communication Research Center, International Institute of Information Technology, Hyderabad, India, during 2008–2009. He then served as an Assistant Professor in the Department of Electrical Engineering, IIT Indore, Indore, India, during 2009–2013. He worked as an Associate Professor in the same department during 2013–2017 and has been a Professor there since 2017. He is also associated with the Center for Advanced Electronics at IIT Indore. He was a Visiting Professor at the Neural Dynamics of Visual Cognition Lab, Free University of Berlin, Germany, during July–September 2022. He was also a Visiting Professor at the School of Medicine, Faculty of Health and Medical Sciences, Taylor's University, Subang Jaya, Malaysia, during 2018–2019. Earlier, he worked as a Visiting Scholar at the Intelligent Systems Research Center, Ulster University, Londonderry, UK, in December 2014. His research interests include signal and image processing, biomedical signal processing, non-stationary signal processing, speech signal processing, brain-computer interfacing, machine learning, artificial intelligence, and the Internet of Things (IoT) in healthcare. He is an Associate Editor of Electronics Letters, IEEE Transactions on Neural Systems and Rehabilitation Engineering, and Biomedical Signal Processing and Control, and an Editor of the IETE Technical Review journal.
He is a Senior Member of IEEE and a Fellow of IETE, IEI, and IET. He has 268 publications, including 166 journal papers, 72 conference papers, 8 books, and 22 book chapters. He also has three patents: one Australian patent (granted) and two Indian patents (filed). His publications have been cited approximately 12000 times, with an h-index of 57 according to Google Scholar. He has worked on various research projects funded by SERB, DST, DBT, CSIR, and ICMR.

Shaibal Mukherjee (Senior Member, IEEE) received his Ph.D. in Electrical and Computer Engineering from the University of Oklahoma, USA, in 2009. He then carried out postdoctoral research in the Center for Quantum Devices, Electrical Engineering and Computer Science, Northwestern University, USA. In September 2010, he joined IIT Indore, where he is currently a Professor in the Department of Electrical Engineering. The Hybrid Nanodevice Research Group (HNRG), which he leads at IIT Indore, explores the physics of micro- and nano-structured materials. The group applies this knowledge to realize advanced tools and devices for chemical, biological, optical, electronic, and energy applications. He has published 105+ research articles in peer-reviewed journals, 102+ international conference proceedings, 11 books/book chapters, and 9 patents (granted: 1, filed: 8). He has received various prestigious awards, including:
- “2022 IETE Fellow”
- “2021 Japan Society for the Promotion of Science (JSPS) Invitational Fellowship Award”
- “2020 DUO-India Professor Fellowship Award”
- “2019 DAAD Fellowship Award”
- “2018 Materials Research Society of India (MRSI) Medal”
- “2016 Young Faculty Research Fellowship (YFRF) under Visvesvaraya PhD Scheme for Electronics and IT”

He was recently appointed as Adjunct Faculty at RMIT University, Melbourne, Australia. He is an Associate Editor for IEEE Sensors Journal, a senior member of IEEE, a regular member of the Optical Society of America, and a Life Fellow of MRSI and the Optical Society of India. He is the founding Chair of the IEEE Madhya Pradesh (MP) Section Electron Devices Society (EDS) chapter.

References

1. Ouyang X., et al. Dual-sampling attention network for diagnosis of COVID-19 from community acquired pneumonia. IEEE Trans. Med. Imag. Aug. 2020;39(8):2595–2605. doi: 10.1109/TMI.2020.2995508.

2. Laguarta J., Hueto F., Subirana B. COVID-19 artificial intelligence diagnosis using only cough recordings. IEEE Open J. Eng. Med. Biol. Sep. 2020;1:275–281. doi: 10.1109/OJEMB.2020.3026928.

3. Chaudhary P.K., Pachori R.B. FBSED based automatic diagnosis of COVID-19 using X-ray and CT images. Comput. Biol. Med. Jul. 2021;134:104454. doi: 10.1016/j.compbiomed.2021.104454.

4. Qi A., et al. Directional mutation and crossover boosted ant colony optimization with application to COVID-19 X-ray image segmentation. Comput. Biol. Med. Sep. 2022;148. doi: 10.1016/j.compbiomed.2022.105810.

5. Su H., et al. Multilevel threshold image segmentation for COVID-19 chest radiography: a framework using horizontal and vertical multiverse optimization. Comput. Biol. Med. Jul. 2022;146. doi: 10.1016/j.compbiomed.2022.105618.

6. Chaudhary P.K., Pachori R.B. Automatic diagnosis of glaucoma using two-dimensional Fourier-Bessel series expansion based empirical wavelet transform. Biomed. Signal Process Control. Feb. 2021;64.

7. Shin H.C., et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imag. May 2016;35(5):1285–1298. doi: 10.1109/TMI.2016.2528162.

8. Tian X., Chen C. Modulation pattern recognition based on Resnet50 neural network. 2nd IEEE Inter. Conf. On Infor. Commu. Signal Process. (ICICSP); 2019. pp. 34–38.

9. Wang M., Gong X. Metastatic cancer image binary classification based on resnet model. 2020 IEEE 20th Inter. Conf. On Commu. Techn. (ICCT); Dec. 2020. pp. 1356–1359.

10. Jiang Y., Li Y., Zhang H. Hyperspectral image classification based on 3-D separable ResNet and transfer learning. Geosci. Rem. Sens. Lett. IEEE. Dec. 2019;16(12):1949–1953.

11. Wang C., Yu L., Zhu X., Su J., Ma F. Extended ResNet and label feature vector based chromosome classification. IEEE Access. Oct. 2020;8:201098–201108.

12. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM. Jun. 2017;60(6):84–90.

13. Lv M., Zhou G., He M., Chen A., Zhang W., Hu Y. Maize leaf disease identification based on feature enhancement and DMS-robust alexnet. IEEE Access. Mar. 2020;8:57952–57966.

14. Davari N., Akbarizadeh G., Mashhour E. Corona detection and power equipment classification based on GoogleNet-AlexNet: an accurate and intelligent defect detection model based on deep learning for power distribution lines. IEEE Trans. Power Deliv. Sep. 2021;37(4):2766–2774.

15. Sun J., Cai X., Sun F., Zhang J. Scene image classification method based on Alex-Net model. 2016 3rd Inter. Conf. Inform. And Cybern. for Comput. Social Syst. (ICCSS); Jan. 2016. pp. 363–367.

16. Selesnick I.W. Wavelet transform with tunable Q-factor. IEEE Trans. Signal Process. Aug. 2011;59(8):3560–3575.

17. Dong Z., et al. Convolutional neural networks based on RRAM devices for image recognition and online learning tasks. IEEE Trans. Electron. Dev. Jan. 2019;66(1):793–801.

18. Truong S.N., Shin S.H., Byeon S.D., Song J.S., Min K.S. New twin crossbar architecture of binary memristors for low-power image recognition with discrete cosine transform. IEEE Trans. Nanotechnol. Nov. 2015;14(6):1104–1111.

19. Halawani Y., Mohammad B., Al-Qutayri M., Al-Sarawi S.F. Memristor-based hardware accelerator for image compression. IEEE Trans. Very Large Scale Integr. Syst. Dec. 2018;26(12):2749–2758.

20. Khalid M., Mukhtar S., Siddique M.J., Ahmed S.F. Memristor based full adder circuit for better performance. Trans. Elec. Electro. Materials. 2019;20(5):403–410.

21. Pannu J.S., et al. Design and fabrication of flow-based edge detection memristor crossbar circuits. IEEE Trans. on Circuits and Systems II. May 2020;67(5):961–965.

22. Yin S., Sun X., Yu S., Seo J.-S. High-throughput in-memory computing for binary deep neural networks with monolithically integrated RRAM and 90-nm CMOS. IEEE Trans. Electron. Dev. Oct. 2020;67(10):4185–4192.

23. Xia L., et al. MNSIM: simulation platform for memristor-based neuromorphic computing system. IEEE Trans. Comput. Aided Des. Integrated Circ. Syst. May 2018;37(5):1009–1022.

24. Cai Y., Tang T., Xia L., Li B., Wang Y., Yang H. Low bit-width convolutional neural network on RRAM. IEEE Trans. Comput. Aided Des. Integrated Circ. Syst. Jul. 2020;39(7):1414–1427.

25. Ambrogio S., et al. Neuromorphic learning and recognition with one-transistor-one-resistor synapses and bistable metal oxide RRAM. IEEE Trans. Electron. Dev. Apr. 2016;63(4):1508–1515.

26. Garbin D., et al. HfO2-based OxRAM devices as synapses for convolutional neural networks. IEEE Trans. Electron. Dev. Aug. 2015;62(8):2494–2501.

27. Wang J.J., et al. Handwritten-digit recognition by hybrid convolutional neural network based on HfO2 memristive spiking-neuron. Sci. Rep. Aug. 2018;8. doi: 10.1038/s41598-018-30768-0.

28. Duan S., et al. Memristor-based cellular nonlinear/neural network: design, analysis, and applications. IEEE Transact. Neural Networks Learn. Syst. Jun. 2015;26(6):1202–1213. doi: 10.1109/TNNLS.2014.2334701.

29. Wang L., Lin Z.Q., Wong A. COVID-net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. Dec. 2020;10(1). doi: 10.1038/s41598-020-76550-z.

30. Haghanifar A., Majdabadi M.M., Ko S. COVID-CXNet: Detecting COVID-19 in frontal chest X-ray images using deep learning. arXiv:2006.13807; 2020.

31. Wang S.H., et al. COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Inf. Fusion. Apr. 2021;68:131–148. doi: 10.1016/j.inffus.2020.11.005.

32. Wang L., Lin Z.Q., Wong A. Covid-net: a tailored deep convolutional neural network design for detection of covid-19 cases from chest X-ray images. Sci. Rep. May 2020;10:1–12. doi: 10.1038/s41598-020-76550-z.

33. Li L., et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy. Radiology. Mar. 2020;296(2). doi: 10.1148/radiol.2020200905.

34. Kumar S., Agrawal R., Das M., Jyoti K., Kumar P., Mukherjee S. Analytical model for memristive systems for neuromorphic computation. J. Phys. D Appl. Phys. Jun. 2021;54(35).

35. Hu G., Liu L., Tao D., Song J., Tse K.T., Kwok K.C.S. Deep learning-based investigation of wind pressures on tall building under interference effects. J. Wind Eng. Ind. Aerod. Jun. 2020;201:104138.

36. Kumar T.S., Kanhangad V. Face recognition using two-dimensional tunable-Q wavelet transform. Proc. Int. Conf. Digit. Image Comput., Techn. Appl. (DICTA); 2015. pp. 1–7.

37. Bhattacharyya A., et al. A multi-channel approach for cortical stimulation artefact suppression in depth EEG signals using time-frequency and spatial filtering. IEEE Trans. Biomed. Eng. Jul. 2018;66(7):1915–1926. doi: 10.1109/TBME.2018.2881051.

38. Li H., et al. 3-D resistive memory arrays: from intrinsic switching behaviors to optimization guidelines. IEEE Trans. Electron. Dev. Oct. 2015;62(10):3160–3167.

39. Das M., Kumar A., Singh R., Htay M.T., Mukherjee S. Realization of synaptic learning and memory functions in Y2O3 based memristive device fabricated by dual ion beam sputtering. Nanotechnology. 2018;29:1–9. doi: 10.1088/1361-6528/aaa0eb.

40. Johnson K.A., Becker J.A. The Whole Brain Atlas. 1999. http://www.med.harvard.edu/aanlib/home.html

41. Soares E., Angelov P., Biaso S., Froes M.H., Abe D.K. SARS-CoV-2 Identification. doi: 10.1101/2020.04.24.20078584v3.

42. Dalton L.A. Optimal ROC-based classification and performance analysis under Bayesian uncertainty models. IEEE ACM Trans. Comput. Biol. Bioinf. Jul. 2016;13(4):719–729.

43. Jia G., Lam H.-K., Xu Y. Classification of COVID-19 chest X-ray and CT images using a type of dynamic CNN modification method. Comput. Biol. Med. Jul. 2021;134. doi: 10.1016/j.compbiomed.2021.104425.

44. Badawi A., Elgazzar K. Detecting coronavirus from chest X-rays using transfer learning. COVID. Sep. 2021;1(1):403–415.

45. Oh Y., Park S., Ye J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imag. Aug. 2020;39(8):2688–2700. doi: 10.1109/TMI.2020.2993291.

46. Haghanifar A., et al. COVID-CXNet: Detecting COVID-19 in frontal chest X-ray images using deep learning. Multimed. Tools Appl. 2022.

47. Pham D.T. Classification of COVID-19 chest X-rays with deep learning: new model or fine tuning? Health Inf. Sci. Syst. 22 Nov. 2020;9(12). doi: 10.1007/s13755-020-00135-3.

48. Sethy P.K., Behera S.K., Ratha P.K., Biswas P. Detection of coronavirus disease (COVID-19) based on deep feature and support vector machine. Int. J. Math. Eng. Manag. Sci. 2020;5(4):643–651.

49. Azemin M.Z.C., Hassan R., Tamrin M.I.M., Ali M.A.M. COVID-19 deep learning prediction model using publicly available radiologist-adjudicated chest X-ray images as training data: preliminary findings. Int. J. Biomed. Imag. 2020. doi: 10.1155/2020/8828855.

50. Madaan V., et al. XCOVNet: chest X-ray image classification for COVID-19 early detection using convolutional neural networks. New Generat. Comput. 2021;39:583–597. doi: 10.1007/s00354-021-00121-7.

51. Lujan-García J.E., Moreno-Ibarra M.A., Villuendas-Rey Y., Yáñez-Márquez C. Fast COVID-19 and pneumonia classification using chest X-ray images. Mathematics. 2020;8(1423).

52. Imani M. Automatic diagnosis of coronavirus (COVID-19) using shape and texture characteristics extracted from X-Ray and CT-Scan images. Biomed. Signal Process Control. Jul. 2021;68:102602. doi: 10.1016/j.bspc.2021.102602.

53. Kim G.Y., Kim J.Y., Kim C.H., Kim S.M. Evaluation of deep learning for COVID-19 diagnosis: impact of image dataset organization. J. Appl. Clin. Med. Phys. Jul. 2021;22(7):297–305. doi: 10.1002/acm2.13320.

54. Oh Y., Park S., Ye J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imag. May 2020;39(8):2688–2700. doi: 10.1109/TMI.2020.2993291.

55. Hu K., et al. Colorectal polyp region extraction using saliency detection network with neutrosophic enhancement. Comput. Biol. Med. Aug. 2022;147. doi: 10.1016/j.compbiomed.2022.105760.

56. Ji X., Dong Z., Zhou G., Lai C.S., Yan Y., Qi D. Memristive system based image processing technology: a review and perspective. Electronics. Dec. 2021;10(24):3176.