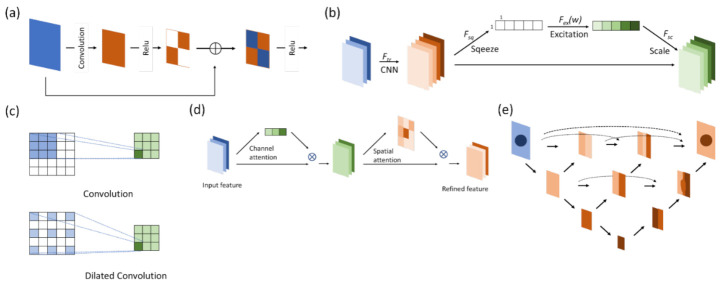

Figure 4.

Blocks and modules used in different deep neural networks for computer vision. (a) The residual block applies a skip connection to keep the gradients from vanishing during backpropagation. (b) The squeeze-and-excitation (SE) module assigns a weight to each channel and multiplies it with the corresponding feature map to increase robustness. (c) Dilated convolution expands the receptive field to enhance the network's ability to process objects with blurred borders. (d) The attention module identifies the relationships between regional features so that object borders are detected more effectively. (e) The U-Net++ block fills the space between the encoder and the decoder with convolutional blocks to improve performance.
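
As a rough illustration of panels (a) to (c), the sketch below reduces each idea to a few lines of plain Python on 1-D lists. It is not the implementation used in any of the networks shown: the function names (`residual`, `se_scale`, `dilated_conv1d`) are hypothetical, and the SE excitation step, which normally consists of two learned fully connected layers, is replaced here by a bare sigmoid purely to keep the example self-contained.

```python
import math

def residual(x, f):
    """(a) Residual block: add the input back onto the transformed signal."""
    return [fx + xi for fx, xi in zip(f(x), x)]

def se_scale(channels):
    """(b) SE-style channel reweighting on a list of flattened channels.

    Squeeze: average each channel to one number.
    Excite:  map that number to a weight in (0, 1). ASSUMPTION: a plain
             sigmoid stands in for the learned fully connected layers.
    Scale:   multiply every value in the channel by its weight.
    """
    squeezed = [sum(c) / len(c) for c in channels]
    weights = [1.0 / (1.0 + math.exp(-s)) for s in squeezed]
    return [[w * v for v in c] for w, c in zip(weights, channels)]

def dilated_conv1d(signal, kernel, dilation=1):
    """(c) 1-D dilated convolution without padding (cross-correlation form).

    Each output sees (len(kernel) - 1) * dilation + 1 input samples, so a
    larger dilation widens the receptive field without adding weights.
    """
    span = (len(kernel) - 1) * dilation + 1
    return [
        sum(k * signal[start + i * dilation] for i, k in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]

signal = [1, 2, 3, 4, 5, 6, 7]
dense = dilated_conv1d(signal, [1, 1, 1], dilation=1)    # each output spans 3 samples
dilated = dilated_conv1d(signal, [1, 1, 1], dilation=2)  # each output spans 5 samples
```

With the same three-tap kernel, the dilated call covers five input samples per output instead of three, which is the receptive-field expansion described in (c). In `residual`, a transformation `f` that outputs zeros leaves the input unchanged, which is why the skip connection gives gradients a direct path through the block.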