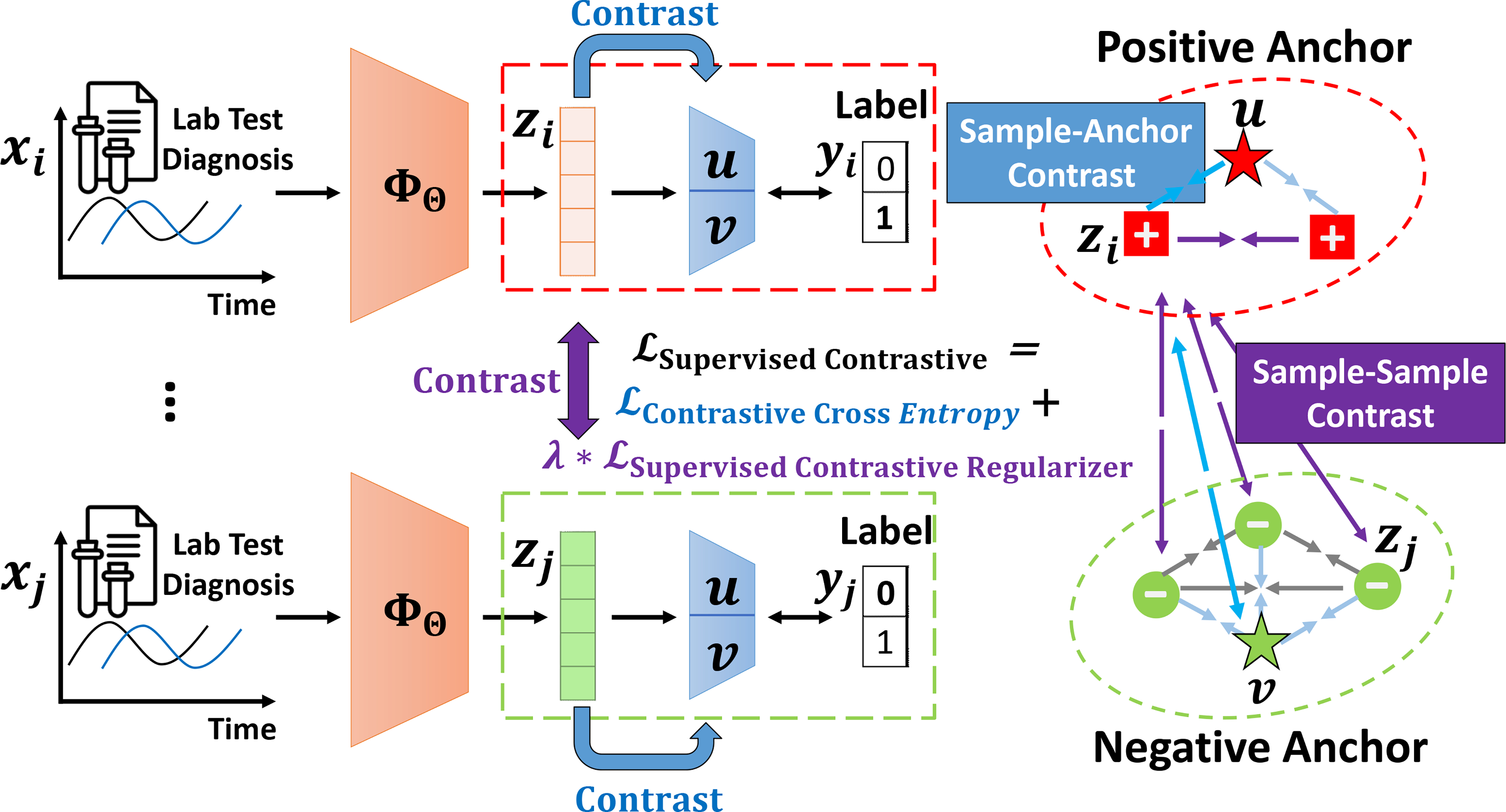

Fig. 1.

An illustration of SCEHR. We propose a general supervised contrastive learning loss for clinical risk prediction problems using longitudinal electronic health records. The overall goal is to improve the performance of binary classification (e.g., in-hospital mortality prediction) and multi-label classification (e.g., phenotyping) by pulling (→←) similar samples closer together and pushing (←→) dissimilar samples apart. One loss term contrasts sample representations with learned positive and negative anchors, and the other contrasts sample representations with others in a mini-batch according to their labels. For brevity, we highlight only the contrastive pulling and pushing forces associated with sample i in a mini-batch consisting of two positive samples and three negative samples.