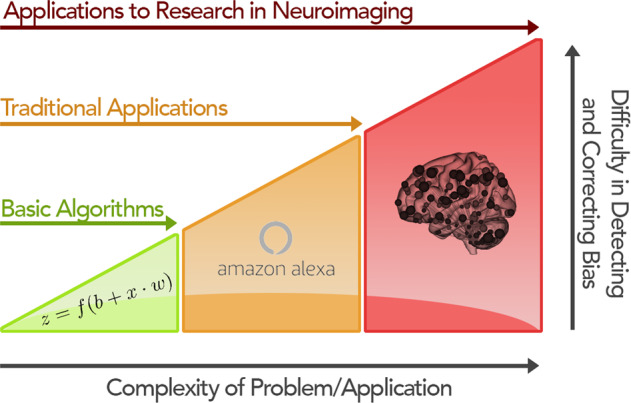

Fig. 1. The increasing difficulty of understanding biases as application complexity increases.

In theoretical work, such as algorithmic proofs, bias is putatively low because such work typically does not rely on real-world data. However, biases emerge quickly in well-established machine learning applications such as language and image processing. These biases may be missed during initial product development yet become apparent once the product is in widespread use. Finally, in emerging applications of machine learning such as psychiatry, potential biases are often hard to observe, understand, and prevent. This is partly because (1) our knowledge of mental health disorders is still limited compared with traditional application domains like image processing, and (2) the available data may not be comprehensive enough to fully capture the complexity of mental health.