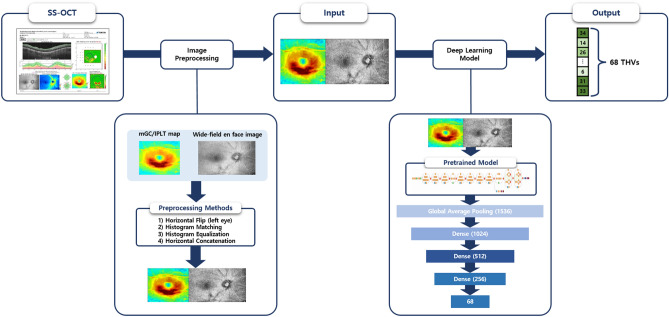

Figure 1.

Flow diagram of the deep learning model (Google’s convolutional neural network architecture). Input images (a wide-field en face image with an mGC/IPLT map, or a wide-field RNFLT map with an mGC/IPLT map) were extracted from a wide-field SS-OCT scan. The extracted images were then preprocessed, which included consistency and contrast enhancement and image concatenation. Inception-ResNet-V2 served as the backbone at the start of the architecture to extract global features. The global average pooling layer reduced the backbone output to a vector of 1536 features by averaging each feature map. Three dense layers then gradually condensed these features into 68 final output values, corresponding to the 68 threshold values of the 10-2 visual field test. mGC/IPLT, macular ganglion cell/inner plexiform layer thickness; RNFLT, retinal nerve fiber layer thickness; SS-OCT, swept-source optical coherence tomography.
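The pooling and dense stages described in the caption can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. Only the 1536 pooled features, the three dense layers, and the 68 outputs come from the caption. The 8 × 8 spatial grid of the backbone output, the hidden widths (512 and 128), the ReLU activations, and the random weights are assumptions made purely for illustration, and the helper names are hypothetical.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average each channel over its spatial grid: (H, W, C) -> (C,)."""
    return feature_maps.mean(axis=(0, 1))


def dense(x, weights, bias, relu=True):
    """Fully connected layer; ReLU is an assumed activation, not from the source."""
    y = x @ weights + bias
    return np.maximum(y, 0.0) if relu else y


def init_head(rng, sizes):
    """Create random (weights, bias) pairs for consecutive layer sizes."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((w, np.zeros(n_out)))
    return params


def regression_head(feature_maps, params):
    """Pool the backbone output, then condense it through the dense layers."""
    x = global_average_pool(feature_maps)
    for i, (w, b) in enumerate(params):
        # The last layer is left linear so it can emit unbounded regression values.
        x = dense(x, w, b, relu=(i < len(params) - 1))
    return x


rng = np.random.default_rng(0)

# 1536 inputs and 68 outputs follow the caption; 512 and 128 are hypothetical.
HEAD_SIZES = (1536, 512, 128, 68)
params = init_head(rng, HEAD_SIZES)

# Stand-in for the backbone output; the 8 x 8 spatial size is an assumption.
backbone_output = rng.normal(size=(8, 8, 1536))

thresholds = regression_head(backbone_output, params)
print(thresholds.shape)  # (68,)
```

In a trained model the weights would be learned, so that each of the 68 outputs estimates one 10-2 visual field threshold value. Here they are random and the output values carry no meaning beyond their shape.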