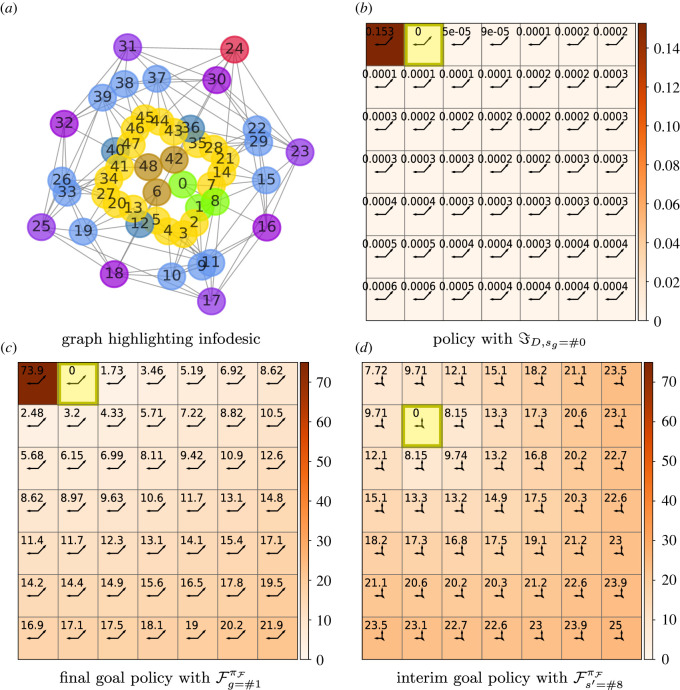

Figure 6.

Approaching open-loop policies with β = 0.01. A 7 × 7 Moore gridworld with near-minimal information processing (β = 0.01). The agent starts in corner state #0 and aims to reach the adjacent state #1. (a) Graph plot of the -infodesic $\psi_{0 \to 1} = \langle \#0, \#8, \#1 \rangle$, highlighted in green. (b) The policy and decision information for the final goal state #1, highlighted in yellow. (c) The same gridworld policy and goal, with free energies shown both as annotations and as a heatmap. (d) The policy and free energies for the interim goal #8, highlighted in yellow.
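The caption does not say how the states are numbered. The sketch below is a minimal, hypothetical illustration that assumes row-major numbering (state = row × 7 + column) and 8-connected Moore moves with no "stay" action. It checks that under these assumptions both #1 and #8 neighbor the corner state #0, and that the infodesic ⟨#0, #8, #1⟩ is a sequence of legal moves. The helper names (`moore_neighbors`, `is_valid_path`) are illustrative and not taken from the source.

```python
# Hypothetical sketch: a 7x7 Moore gridworld with ASSUMED row-major state numbering.
N = 7  # grid side length, from the caption


def coords(s, n=N):
    """Map a state index to (row, col), assuming row-major numbering."""
    return divmod(s, n)


def state(r, c, n=N):
    """Map (row, col) back to a state index."""
    return r * n + c


def moore_neighbors(s, n=N):
    """States reachable in one Moore (8-connected) move; no 'stay' action assumed."""
    r, c = coords(s, n)
    out = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # skip the current cell itself
            rr, cc = r + dr, c + dc
            if 0 <= rr < n and 0 <= cc < n:  # moves off the grid are disallowed
                out.append(state(rr, cc, n))
    return out


def is_valid_path(path, n=N):
    """True if every consecutive pair of states is one legal Moore move apart."""
    return all(b in moore_neighbors(a, n) for a, b in zip(path, path[1:]))


print(moore_neighbors(0))        # corner state #0 has three neighbors: [1, 7, 8]
print(is_valid_path([0, 8, 1]))  # the infodesic <#0, #8, #1> is legal: True
```

Under this numbering, #8 is the cell diagonal to the corner and, unlike #0, has a full set of eight neighbors. This is consistent with the caption's interim goal lying off the direct one-step route from #0 to #1.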