Table 1.

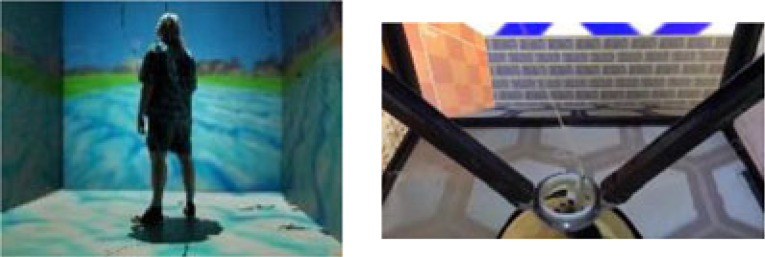

An overview of the visual environments available to investigate the neural encoding and perception of optic flow. The table also summarizes available methods to couple visual displays to a subject's self-motion. VR ‘CAVE’ image from: https://commons.wikimedia.org/w/index.php?curid=868395.

| Real environments | Large display environments | Head-mounted displays |
|---|---|---|
| A photograph of a woodland. | Left: a VR ‘CAVE’. Right: two-dimensional rodent VR [102]. | Left and right eye views of a head-mounted display. |
| - Natural visual inputs.<br>- World and eye cameras can be used to reconstruct observed visual scene [9,103].<br>- Lenses [106] and prisms [107] can be used to manipulate visual inputs. | - Flexible presentation of visual inputs.<br>- Curved or multiple display devices can achieve large visual field coverage.<br>- Virtual [95,108,109] / augmented [108,110,111] reality. | - Flexible stereoscopic presentations of visual inputs.<br>- Virtual [104] / augmented reality [105]. |
| Coupling with movement | | |
| - Natural free movement.<br>- Passive motion platforms and treadmills can be incorporated [112]. | - One-dimensional/two-dimensional treadmills [49,72,102].<br>- Passive motion platforms [113,114].<br>- Free movement interaction is possible with motion tracking cameras (e.g. ‘VR CAVEs’) [115]. | - Natural free movement using built-in tracking of rotational and translational head movements.<br>- One-dimensional/two-dimensional treadmills [104].<br>- Passive motion platforms. |