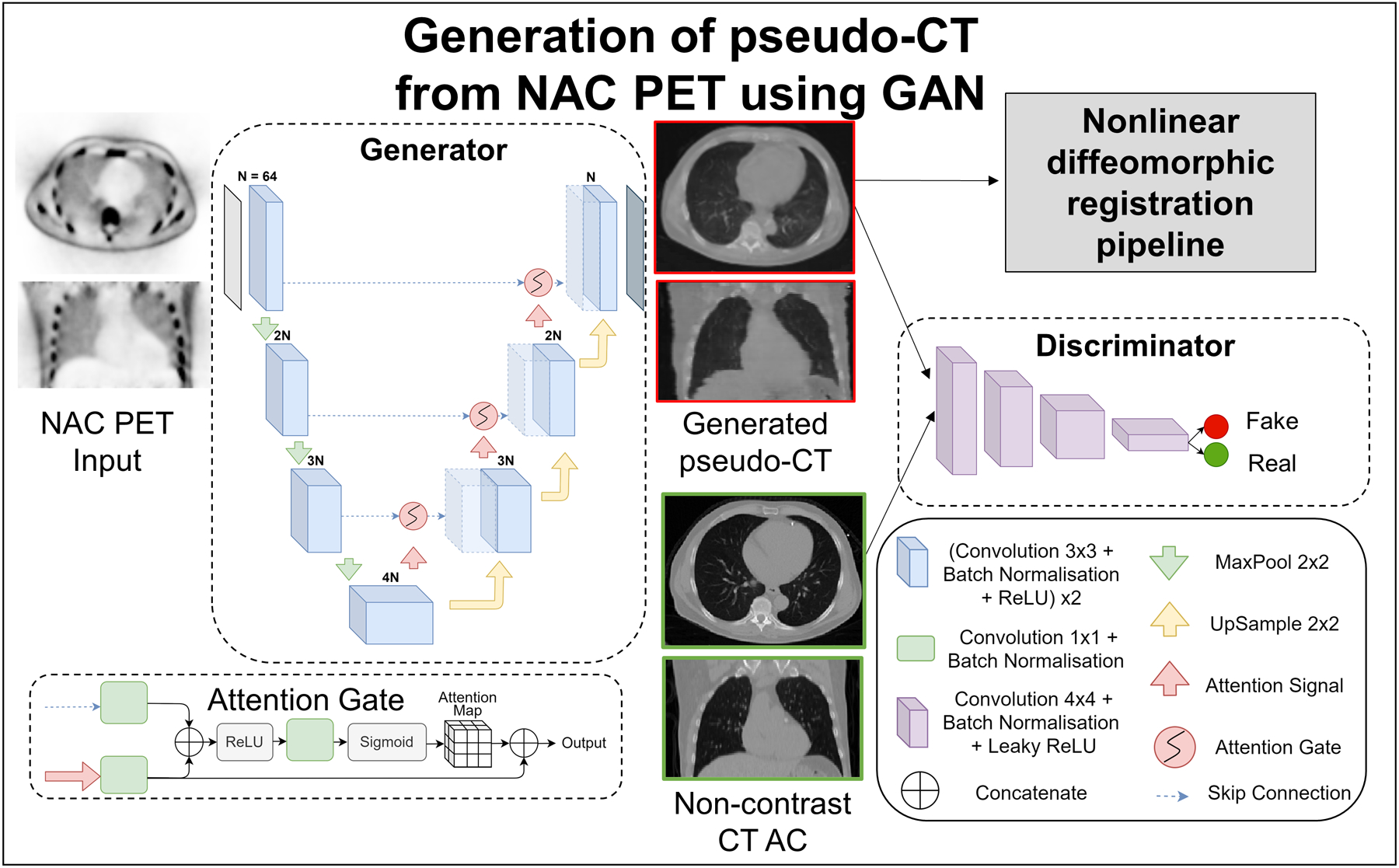

Figure 2: Generation of pseudo-CT from NAC PET.

The GAN consisted of two deep learning networks, a generator and a discriminator, which were trained together to generate realistic pseudo-CT images. The input to the UNet-based generator was a 2D NAC PET slice, and the output was the corresponding pseudo-CT slice. The encoder part of the generator consisted of 4 contracting convolution blocks, each followed by a 2×2 max pool operation for dimension reduction. The decoder consisted of 3 repeated upsampling blocks, each doubling the number of feature channels. Batch normalization normalized the inputs to each layer and stabilized the learning process. ReLU was used to introduce non-linearity into the network by setting all negative inputs to zero and passing all positive inputs unchanged. Skip connections with attention gates connected the encoder to the decoder. The discriminator, built from convolution blocks, took the generated pseudo-CT slice and the corresponding real CT AC slice as inputs. The output of the network was an averaged similarity between the two inputs. The generated pseudo-CT slices were consolidated per patient and passed to the diffeomorphic registration pipeline.
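As a minimal illustration of two operations named above, the sketch below applies ReLU and a 2×2 max pool to a small feature map in plain Python. It is not the network used in this work: the function names `relu` and `max_pool_2x2`, the 4×4 example values, and the stride of 2 are assumptions chosen only to show how negative activations are zeroed and how pooling halves each spatial dimension.

```python
from typing import List

Matrix = List[List[float]]


def relu(x: Matrix) -> Matrix:
    """Set negative entries to zero and pass positive entries unchanged."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool_2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling (assumed stride 2).

    Assumes the height and width of x are both even.
    """
    height, width = len(x), len(x[0])
    return [
        [
            max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
            for j in range(0, width, 2)
        ]
        for i in range(0, height, 2)
    ]


# Hypothetical 4x4 feature map standing in for one channel of a slice.
feature_map = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.5, 0.0],
    [0.0, -1.0, -0.5, -2.0],
    [3.0, 1.0, 2.5, -4.0],
]

activated = relu(feature_map)     # negatives become 0.0
pooled = max_pool_2x2(activated)  # 4x4 -> 2x2
print(pooled)
```

Each pooling step halves the height and width of the feature map, which is the dimension reduction performed along the contracting path of the encoder.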

AC: attenuation correction, CT: computed tomography, NAC: non-attenuation corrected, PET: positron emission tomography, ReLU: rectified linear unit