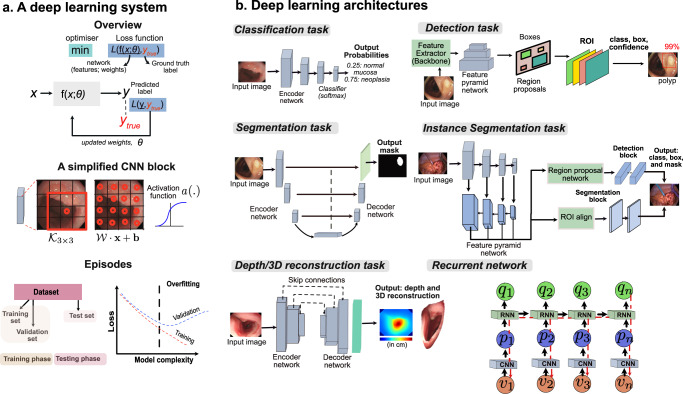

Fig. 3. Deep learning system and its widely used designs.

a A conceptual representation of a deep learning system with an optimiser that minimises a loss function. A simplified convolutional neural network (CNN) block is shown, in which a 3 × 3 kernel computes the value of each output pixel from the kernel weights and a bias. A non-linear activation function is then applied so that the network can capture more complex features. The data are split into training, validation and test sets, and the validation set is used to check that the learnt parameters generalise and do not overfit the training data. A graph illustrates model overfitting, which is controlled by monitoring performance on the validation set. b Some widely used deep learning architectures for various tasks in endoscopic image analysis. For classification, only an encoder network is used, usually followed by a classifier such as a softmax layer<sup>3</sup>. For detection, features are extracted using an encoder network and then pooled over regions proposed by a region proposal network to predict both the class and the bounding box<sup>128</sup>. For semantic segmentation, the encoder features are up-scaled to the image size for per-pixel classification. Similarly, for instance segmentation, both region proposals for bounding boxes and per-pixel predictions for masks are used<sup>131</sup>. A depth estimation network estimates how far the camera is from an anatomical region, providing distances in the real-world coordinate system<sup>22</sup>. Finally, recurrent neural networks (RNNs) can embed temporal video information to refine the current predictions of a CNN<sup>64</sup>. Here the sequential input frames $v_1, \dots, v_n$ are fed to the CNN, producing visual feature vectors $p_1, \dots, p_n$, which are then fed to the RNN.
The RNN output captures the temporal relationship between frames, providing context-aware predictions for each frame: the output $q_n$ for the $n$th frame depends on both the current feature vector $p_n$ and all previous feature vectors $p_u$, $u < n$. The CNN and RNN are jointly optimised using a boosting strategy. Sources of the endoscopy images: the gastroscopy and colonoscopy images in (a) and (b) were acquired from Oxford University Hospitals under Ref. 16/YH/0247 and form part of publicly released endoscopy challenge datasets (EDD2020<sup>127</sup> under CC BY-NC 4.0 and PolypGen<sup>128</sup> under CC BY; Dr S. Ali is the creator of both datasets). Surgical procedure data are taken from ROBUST-MIS<sup>113</sup>.
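The CNN block in panel a, in which each output pixel is a weighted sum of a 3 × 3 neighbourhood plus a bias followed by a non-linear activation, can be sketched in a few lines. This is an illustrative sketch only: it assumes "valid" padding (no border pixels), a single input and output channel, ReLU as the activation, and hypothetical kernel and bias values. Like most deep learning frameworks, it computes cross-correlation (the kernel is not flipped).

```python
from typing import List

Matrix = List[List[float]]


def conv2d_3x3(image: Matrix, kernel: Matrix, bias: float) -> Matrix:
    """'Valid' 3x3 convolution (cross-correlation, as in most DL frameworks).

    Each output pixel is the weighted sum of the 3x3 neighbourhood under the
    kernel plus a single bias term; the output shrinks by 2 in each dimension.
    """
    h, w = len(image), len(image[0])
    out: Matrix = []
    for i in range(h - 2):
        row = []
        for j in range(w - 2):
            acc = bias
            for di in range(3):
                for dj in range(3):
                    acc += kernel[di][dj] * image[i + di][j + dj]
            row.append(acc)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise non-linear activation: max(0, x)."""
    return [[max(0.0, v) for v in row] for row in x]


# Hypothetical 4x4 input and a vertical-edge kernel.
image = [[1, 2, 3, 0], [0, 1, 2, 3], [3, 0, 1, 2], [2, 3, 0, 1]]
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
print(relu(conv2d_3x3(image, kernel, bias=0.5)))  # [[0.0, 0.0], [2.5, 0.0]]
```

In a real CNN the kernel weights and bias are the learnt parameters that the optimiser adjusts to minimise the loss.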
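The use of a validation set to control overfitting (panel a) is commonly implemented as early stopping: training halts once the validation loss stops improving, and the parameters from the best epoch are kept. The sketch below assumes a random 70/15/15 split and a "patience" of three epochs; both values, and the example loss curve, are hypothetical, and the caption does not state which stopping rule was used.

```python
import random
from typing import List, Sequence, Tuple


def split_indices(n: int, val_frac: float = 0.15, test_frac: float = 0.15,
                  seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle sample indices once and split them into disjoint train/val/test sets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = idx[:n_test]
    val = idx[n_test:n_test + n_val]
    train = idx[n_test + n_val:]
    return train, val, test


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3) -> int:
    """Return the epoch whose parameters should be kept.

    Training stops once the validation loss has not improved for `patience`
    consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical curve: validation loss falls, then rises as the model overfits.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
print(early_stopping_epoch(val_losses, patience=3))  # 3
```

The test set stays untouched during this selection so that it still gives an unbiased estimate of generalisation.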
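For the classification network in panel b, the encoder output is typically pooled into a feature vector, mapped to one score (logit) per class by a linear layer, and converted to class probabilities by a softmax. The sketch below assumes a single linear layer and uses hypothetical weights and three unnamed classes; it subtracts the largest logit before exponentiating, a standard trick that avoids overflow without changing the result.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features: Sequence[float], weights: Sequence[Sequence[float]],
             biases: Sequence[float]) -> List[float]:
    """Linear classifier head on a pooled encoder feature vector, followed by softmax.

    weights[k] holds the weights for class k, so logit_k = w_k . x + b_k.
    """
    logits = [sum(w * x for w, x in zip(w_k, features)) + b_k
              for w_k, b_k in zip(weights, biases)]
    return softmax(logits)


# Hypothetical 3-dimensional encoder features and a 3-class head.
probs = classify([0.2, 1.0, -0.5],
                 [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]],
                 [0.0, 0.0, 0.0])
predicted = max(range(len(probs)), key=probs.__getitem__)  # class with highest probability
print(predicted)  # 1
```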
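The semantic segmentation step in panel b (coarse encoder output up-scaled to the image size, then classified per pixel) can be illustrated with nearest-neighbour upsampling followed by a per-pixel argmax. This is a deliberate simplification: practical segmentation networks usually up-scale with learnt decoders, transposed convolutions or bilinear interpolation, and the 2 × 2 score map and two classes below are hypothetical.

```python
from typing import List

Grid = List[List[List[float]]]  # indexed as [row][col][class score]


def upsample_nearest(scores: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling: each coarse cell fills a factor x factor block.

    Output cells share references to the coarse score lists, which is fine
    because nothing below mutates them.
    """
    return [[scores[i // factor][j // factor]
             for j in range(len(scores[0]) * factor)]
            for i in range(len(scores) * factor)]


def per_pixel_labels(scores: Grid) -> List[List[int]]:
    """Assign each pixel the class with the highest score (argmax over classes)."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]


# Hypothetical 2x2 coarse map with scores for class 0 (background) and class 1.
coarse = [[[0.9, 0.1], [0.2, 0.8]],
          [[0.3, 0.7], [0.6, 0.4]]]
for row in per_pixel_labels(upsample_nearest(coarse, 2)):
    print(row)
```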
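The CNN-RNN pipeline described for panel b, in which each frame $v_n$ becomes a feature vector $p_n$ and the output $q_n$ depends only on $p_n$ and earlier feature vectors, can be sketched with a plain Elman RNN. Several assumptions apply: `cnn_features` is a hypothetical stand-in for a real CNN encoder (it just returns the mean and maximum pixel value), the caption does not specify the RNN variant (LSTMs and GRUs are common alternatives), the weights are arbitrary, and the joint optimisation of both networks is omitted.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def cnn_features(frame: Sequence[float]) -> Vector:
    """Hypothetical stand-in for a CNN encoder: maps frame v_n to feature vector p_n."""
    return [sum(frame) / len(frame), max(frame)]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Matrix-vector product for small lists of lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_outputs(features: Sequence[Vector], w_x: Matrix, w_h: Matrix,
                b: Sequence[float]) -> List[Vector]:
    """Elman RNN: h_n = tanh(W_x p_n + W_h h_{n-1} + b), with output q_n = h_n.

    Because h_{n-1} summarises p_1..p_{n-1}, each q_n depends on the current
    and all previous frames but never on later ones.
    """
    h = [0.0] * len(b)
    outputs = []
    for p in features:
        pre = [x + y + z for x, y, z in zip(matvec(w_x, p), matvec(w_h, h), b)]
        h = [math.tanh(v) for v in pre]
        outputs.append(h)
    return outputs


# Hypothetical three-frame clip (each frame flattened to a few pixel values).
frames = [[0.1, 0.2, 0.3], [0.5, 0.4, 0.9], [0.0, 0.1, 0.2]]
W_x = [[0.5, -0.3], [0.2, 0.8]]
W_h = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]
q = rnn_outputs([cnn_features(v) for v in frames], W_x, W_h, b)

# Changing only the last frame leaves q_1 and q_2 unchanged (causal dependence).
q_alt = rnn_outputs([cnn_features(v) for v in frames[:2] + [[0.9, 0.9, 0.9]]], W_x, W_h, b)
print(q_alt[:2] == q[:2], q_alt[2] == q[2])  # True False
```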