Abstract

We have previously suggested a distinction in the brain processes governing biological and artifactual stimuli. One of the best examples of the biological category consists of human faces, the perception of which appears to be determined by inherited mechanisms or ones rapidly acquired after birth. In extending this work, we inquire here whether images of human faces are more memorable and whether memorability declines with increasing departure from human faces; if so, this would add to the growing evidence of differences in the processing of biological versus artifactual stimuli. To do so, we used images and memorability scores from a large data set of 58,741 images to compare the relative memorability of real human faces versus buildings, and then of real human faces versus five image categories that differ in their degree of resemblance to a real human face. Our findings show that, in general, the biological category of faces is more memorable than the artifactual category of buildings. Furthermore, there is a gradient in which the more an image resembles a real human face, the more memorable it is. Thus, the previously identified differences between biological and artifactual images extend to the field of memory.

Keywords: artifactual images, biological images, faces, illusory faces, memorability

INTRODUCTION

Our ability to remember stimuli depends both on our internal states and on external factors, namely intrinsic properties of the stimuli themselves. An example of the former is the general impairment of memory during depressive states (Williams et al., 2000), whereas an example of the latter is the dependence of the memorability of images containing animals on the number of animals in the image (Dubey et al., 2015). Although many other stimulus properties have been examined with regard to their effect on memorability (e.g., Isola et al., 2011), stimuli have not previously been studied for their memorability as a function of the two broad categories to which they belong, namely, the biological and the artifactual.

We use the term “biological” to refer to non‐man‐made stimuli (e.g., human faces and bodies, trees, flowers, and animals), and the term “artifactual” to refer to man‐made stimuli (e.g., motor vehicles, buildings, and technological devices). Our previous work has shown that there is a difference in the extent to which esthetic judgments for different categories of stimuli are resistant to revision in light of external opinion, with biological stimuli being more resistant than artifactual ones (Bignardi et al., 2021; Chen & Zeki, 2011; Glennon & Zeki, 2021; Zeki & Chén, 2020; Zhang & Zeki, 2022). This distinction has been addressed mainly in the field of visual perception and has not yet been extended to the field of memory.

In this study, we examined memorability for stimuli by concentrating on faces as representative of biological stimuli compared to buildings as representative of artifactual stimuli. We chose our stimuli to represent different categories, beginning with real human faces compared to buildings and progressing through a comparison of real human faces to five categories that differed increasingly from human faces. Previous studies have examined whether there are differences in recognition memory and in neural activity patterns across object categories that differ in their animacy and size (Blumenthal et al., 2018) and differences in neural activity patterns for faces, chairs, and buildings (Martin et al., 2016). We wanted to address the question of how memorability varies, if at all, with stimuli that depart increasingly from resemblance to a real biological stimulus; namely, human faces.

We used a very large number of images derived from a large data set. This has both advantages and disadvantages; the former revolves around the fact that the large number of stimuli includes both the biological and the artifactual categories as well as the subcategories within each, thus making it possible to make a broad comparison. The disadvantage is that the stimuli were not strictly controlled for a number of characteristics such as color, size, angle of view, and so on. We nevertheless hypothesized that if the division between the biological category of faces and artifactual categories is real, a difference in the memorability for human faces versus other categories would emerge even in the absence of strict controls for the factors mentioned.

METHODS

In all three of our experiments, we used images and memorability scores from the LaMem data set (http://memorability.csail.mit.edu/index.html; Khosla et al., 2015). This data set consists of 58,741 images, each with a memorability score. The scores for each image are based on responses from 80 Amazon Mechanical Turk workers on average. In Khosla et al.'s (2015) study, each image was presented for 600 ms, and participants were asked whether they had previously seen it during the experiment. Memorability scores can range from 0 (lowest memorability) to 1 (highest memorability). The memorability score of an image was computed as the hit rate of that image minus its false alarm rate. For each image, we used the mean of the five memorability scores provided in the data set. Five scores were available because Khosla et al. provided five splits of their data, each containing training, validation, and test sets; in each split, the training and validation scores were computed from a random half of the participants and the test scores from the other half.
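The scoring rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming per-image counts of hits (repeat presentations recognized as seen) and false alarms (first presentations wrongly reported as seen); the function names and counts are hypothetical, and the sketch is not intended to reproduce the LaMem pipeline exactly.

```python
from statistics import fmean


def memorability_score(hits, repeats, false_alarms, first_views):
    """Hit rate minus false-alarm rate for a single image.

    hits / repeats: share of repeat presentations recognized as seen.
    false_alarms / first_views: share of first presentations wrongly
    reported as already seen.
    """
    hit_rate = hits / repeats
    false_alarm_rate = false_alarms / first_views
    return hit_rate - false_alarm_rate


def mean_over_splits(split_scores):
    """Average the per-split scores (the text uses five splits per image)."""
    if len(split_scores) != 5:
        raise ValueError("expected five split scores")
    return fmean(split_scores)


# Hypothetical counts for one image, not taken from LaMem.
score = memorability_score(hits=68, repeats=80, false_alarms=4, first_views=80)
print(round(score, 3))
print(round(mean_over_splits([0.80, 0.82, 0.79, 0.81, 0.83]), 3))
```

Averaging over the five splits, as in the analysis described above, smooths out differences that arise from which random half of the participants contributed to each split.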

We carefully inspected all 58,741 images and placed them into categories according to the question of interest. We excluded images that contained any words. The experimenters in the present study were blind to the memorability scores provided in the data set. To examine differences in memorability scores between image categories, we used Welch's t test in the first experiment and Welch's analysis of variance with the Games‐Howell post hoc test in the second experiment. We chose these tests because Levene's test indicated that the variances were not homogeneous (p < .001).
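For readers who want to reproduce this step, the sketch below computes the two statistics behind the choice of tests: Levene's W, which tests for equal variances, and Welch's t with its Welch–Satterthwaite degrees of freedom, which does not assume equal variances. It is a minimal standard-library sketch, not the authors' analysis code. The text does not say whether the mean- or median-centred form of Levene's test was used, so both are offered. In practice, `scipy.stats.levene` and `scipy.stats.ttest_ind(..., equal_var=False)` return these statistics together with p-values.

```python
from statistics import fmean, median, variance


def levene_w(*groups, center="mean"):
    """Levene's W statistic; center="median" gives the Brown-Forsythe variant."""
    center_fn = fmean if center == "mean" else median
    # Absolute deviation of each score from its own group's center.
    z = []
    for g in groups:
        c = center_fn(g)
        z.append([abs(y - c) for y in g])
    k = len(z)
    n_total = sum(len(zi) for zi in z)
    z_means = [fmean(zi) for zi in z]
    z_grand = fmean([v for zi in z for v in zi])
    between = sum(len(zi) * (m - z_grand) ** 2 for zi, m in zip(z, z_means))
    within = sum((v - m) ** 2 for zi, m in zip(z, z_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = variance(a) / len(a)  # squared standard error of the mean of a
    se2_b = variance(b) / len(b)
    t = (fmean(a) - fmean(b)) / (se2_a + se2_b) ** 0.5
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df
```

For example, `welch_t(face_scores, building_scores)` would give the statistic for the first experiment, where `face_scores` and `building_scores` are hypothetical lists of per-image memorability scores.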

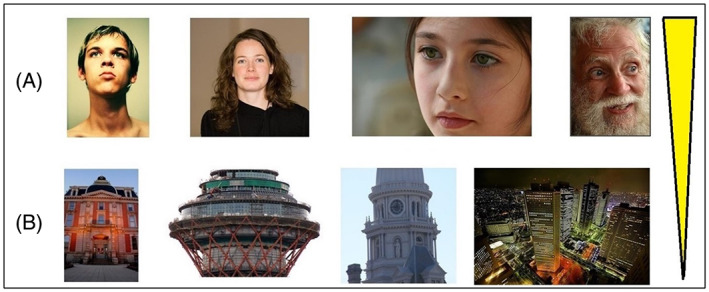

In our first experiment, we examined the memorability of stimuli depicting only buildings or real human faces (Figure 1), because previous studies of biological and artifactual stimuli have most often used these types of stimuli. We found 363 images that contained one or more buildings; we excluded images of famous buildings (e.g., the Twin Towers in New York or the Eiffel Tower in Paris). We found 129 images that contained a real human face; here, we excluded images that showed more than one face or only part of a face (e.g., only one eye or only the mouth). We did not use representations of faces or buildings in paintings.

FIGURE 1.

Categories of images analyzed in Experiment 1. (A) Real human faces and (B) buildings. The yellow bar illustrates the increase in memorability from B to A.

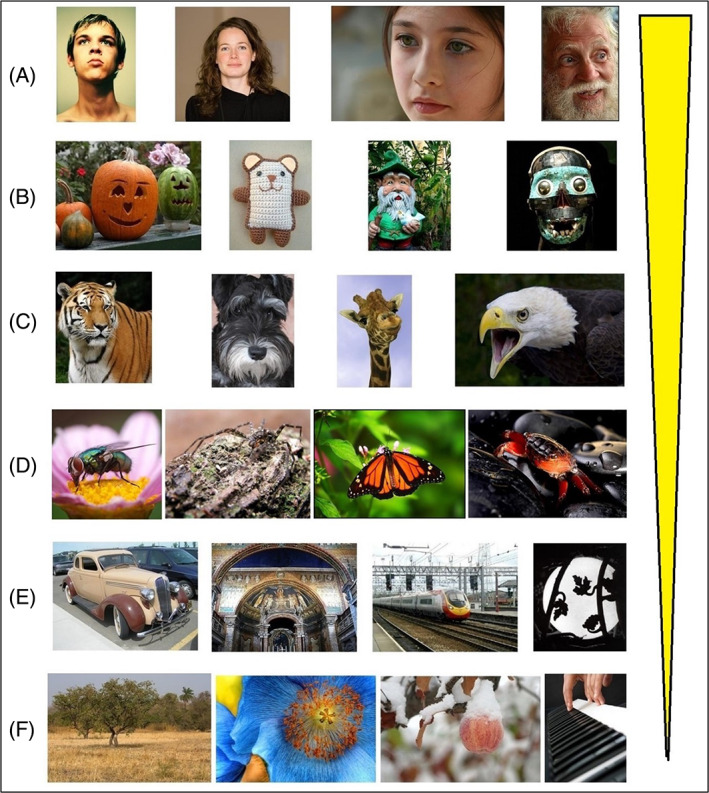

We followed this by comparing the memorability scores for stimuli falling into six image categories, each successive category departing further from real human faces: real human faces (Figure 2A), face‐like stimuli (Figure 2B), animal faces resembling human ones (Figure 2C), animal faces with lesser resemblance to human faces (Figure 2D), imagined‐face‐like stimuli (Figure 2E), and non‐face‐like images (Figure 2F). For the real human faces category, we used the 129 images from our first experiment. The face‐like category (1,317 images) included replicas of real (animal or human) faces, such as cartoons, paintings, sculptures, skulls, dolls, or other toys. It also included examples of face pareidolia (i.e., the perception of illusory faces in random stimuli) in which the face‐like configuration was easy to see. From the “animal faces resembling human ones” category (2,022 images), we excluded (a) images that contained artifactual stimuli in addition to the animal face and (b) animals whose head did not resemble a human face (e.g., butterfly, centipede, jellyfish). We created a separate category, “animal faces with lesser resemblance to human faces” (508 images), for images of animals whose head did not much resemble a human face (e.g., butterfly, centipede, jellyfish, snail, spider, bee) and of animals whose face could not be clearly seen (e.g., birds shown from a long distance). In the imagined‐face‐like category (9,379 images), we included examples of face pareidolia in which the face‐like configuration was difficult to see, that is, images that could look like a face if one searched for a long time and/or had some imagination and/or an excellent ability to detect faces among many distracting stimuli. In the non‐face‐like category (13,750 images), we included images that did not contain a face and did not look face‐like whether viewed briefly or for a long time.

FIGURE 2.

Categories of images analyzed in Experiment 2. (A) Real human faces, (B) face‐like stimuli, (C) animal faces resembling human ones, (D) animal faces with lesser resemblance to human faces, (E) imagined‐face‐like images, (F) non‐face‐like images. The yellow bar illustrates the increase in memorability from F to A.

In our third experiment, we selected the 50 most memorable and the 50 least memorable images in the LaMem data set. Memorability scores ranged from 0.9894 to 1 for the most memorable set and from 0.2658 to 0.3644 for the least memorable set.
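Selecting the two extreme sets is a simple ranking step. The sketch below assumes a hypothetical mapping from image identifiers to memorability scores (LaMem's own file layout is not described here) and uses `heapq.nlargest` and `heapq.nsmallest`. Ties at the cut-off are broken arbitrarily, a detail the text does not address. The face labels passed to `count_labelled` are likewise hypothetical stand-ins for the manual categorization.

```python
import heapq
from typing import Dict, Iterable, List, Set, Tuple


def extreme_sets(scores: Dict[str, float], k: int = 50) -> Tuple[List[str], List[str]]:
    """Return the IDs of the k most and the k least memorable images."""
    most = heapq.nlargest(k, scores, key=scores.get)
    least = heapq.nsmallest(k, scores, key=scores.get)
    return most, least


def score_range(ids: Iterable[str], scores: Dict[str, float]) -> Tuple[float, float]:
    """Lowest and highest score within a selected set of images."""
    values = [scores[i] for i in ids]
    return min(values), max(values)


def count_labelled(ids: Iterable[str], labelled: Set[str]) -> int:
    """How many selected images carry a manually assigned label (e.g., 'contains a face')."""
    return sum(1 for i in ids if i in labelled)


# Hypothetical scores for 200 images, only to show the calls.
demo = {f"img{i:03d}": i / 200 for i in range(200)}
top, bottom = extreme_sets(demo)
print(score_range(top, demo), score_range(bottom, demo))
```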

RESULTS

Real human faces were the single most memorable category, both when compared against all other categories (p < .001, η² = 0.055; Figure 2 and Table 1) and when compared against buildings (M = 0.859, SEM = 0.0050 for real human faces; M = 0.697, SEM = 0.0051 for buildings; p < .001, η² = 0.5084; Figure 1). We also noted that, of the 50 most memorable images in the LaMem data set, 32 contained faces or face‐like stimuli, whereas only 3 of the 50 least memorable images did.
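The omnibus comparison across categories can be sketched as follows: Welch's F (with its numerator and denominator degrees of freedom) and η², computed from per-image scores grouped by category. This is a standard-library sketch, not the authors' code. The text does not specify how η² was obtained; the classical ratio of between-group to total sums of squares used here is an assumption. A p-value would follow from the F distribution, for example `scipy.stats.f.sf(F, df1, df2)`.

```python
from statistics import fmean, variance


def welch_anova(*groups):
    """Welch's F with numerator (df1) and denominator (df2) degrees of freedom."""
    k = len(groups)
    n = [len(g) for g in groups]
    means = [fmean(g) for g in groups]
    # Precision weights n_i / s_i^2: noisier groups count for less.
    w = [ni / variance(g) for ni, g in zip(n, groups)]
    w_sum = sum(w)
    weighted_mean = sum(wi * mi for wi, mi in zip(w, means)) / w_sum
    a = sum(wi * (mi - weighted_mean) ** 2 for wi, mi in zip(w, means)) / (k - 1)
    lam = sum((1 - wi / w_sum) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam
    return a / b, k - 1, (k ** 2 - 1) / (3 * lam)


def eta_squared(*groups):
    """Classical eta squared: between-group over total sum of squares."""
    pooled = [y for g in groups for y in g]
    grand = fmean(pooled)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    ss_total = sum((y - grand) ** 2 for y in pooled)
    return ss_between / ss_total
```

As a check, with only two groups Welch's F equals the square of Welch's t, and df2 equals the Welch–Satterthwaite degrees of freedom.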

TABLE 1.

Memorability scores for different image categories

| Image categories | M | SEM | Games‐Howell test (vs. category above) |
|---|---|---|---|
| Real human faces | 0.859 | 0.0050 | |
| All face‐like stimuli (including face‐like artifactual stimuli) | 0.835 | 0.0021 | p < .001 |
| Animal faces resembling human ones | 0.773 | 0.0021 | p < .001 |
| Animal faces with lesser resemblance to human faces | 0.767 | 0.0044 | p = .894 |
| Imagined‐face‐like | 0.732 | 0.0012 | p < .001 |
| Non‐face‐like | 0.718 | 0.0011 | p < .001 |
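Because Table 1 reports each category's mean and SEM, and the Methods give the category sizes, the Games‐Howell statistic for a pair of rows can be approximated from these summaries alone, since SEM² = s²/n. The sketch below is illustrative only: it computes the studentized-range statistic q and the Welch–Satterthwaite degrees of freedom. A p-value could then be obtained from the studentized range distribution with k = 6 groups, for example with `scipy.stats.studentized_range.sf(q, 6, df)` (SciPy 1.7 or later). Because the table values are rounded, the result need not match the reported p-values exactly.

```python
from math import sqrt


def games_howell_q(m_i, sem_i, n_i, m_j, sem_j, n_j):
    """Games-Howell q statistic and Welch df from group summaries.

    SEM**2 equals s**2 / n, so the squared SEMs stand in for the
    per-group variance terms of the Games-Howell statistic.
    """
    v_i, v_j = sem_i ** 2, sem_j ** 2
    q = abs(m_i - m_j) / sqrt((v_i + v_j) / 2)
    df = (v_i + v_j) ** 2 / (v_i ** 2 / (n_i - 1) + v_j ** 2 / (n_j - 1))
    return q, df


# Two adjacent rows of Table 1, with category sizes from the Methods.
q, df = games_howell_q(0.773, 0.0021, 2022, 0.767, 0.0044, 508)
print(f"q = {q:.2f}, df = {df:.0f}")
```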

DISCUSSION

In this study, we set out to explore whether the previously identified differences in the extent to which esthetic judgments of biological and artifactual images resist updating in light of external opinion (Bignardi et al., 2021; Zeki & Chén, 2020; Zhang & Zeki, 2022) extend to the field of memory. As expected, the largest difference in memorability was found when comparing biological stimuli (real human faces) with artifactual ones (buildings). More generally, our results show a gradient in which the more an image looks like a real human face, the more memorable it is (see Figure 2).

Three previous studies (Brady et al., 2019; Dubey et al., 2015; Maguire et al., 2001) have examined memorability for types of stimuli somewhat similar to ours, using a different approach and methodology. Maguire et al. (2001) examined memory for buildings, human faces, and animal faces, and Brady et al. (2019) compared memorability for stimuli with different degrees of resemblance to faces (from non‐faces to unambiguous faces). In addition to using only black and white stimuli (unlike ours, most of which were in color) and analyzing a smaller number of images, these two studies presented their stimuli for durations that differ from ours: substantially so in the work of Maguire et al. (3000 ms) and less so in the work of Brady et al. (500 ms). Dubey et al. (2015) compared persons and animals, but their stimuli, whether of humans or animals, were not restricted to faces. Despite this difference, our results agree with theirs in finding higher memorability scores for persons than for animals. Consistent with the results of Brady et al., we also found that the more the images looked like a face, the more memorable they were.

The clear gradient in memorability, depending on how much images look like a real human face, may reflect several factors. Subjects may be more familiar with (i.e., have more expertise in) real human faces than non‐face stimuli. Alternatively, stimuli carrying more self‐related information (e.g., stimuli more like ourselves) may be more important than those carrying non‐self‐related information and therefore more demanding of our attention. There seems to be a preference for face‐like stimuli across vertebrate species that is present even at birth and is independent of experience (Di Giorgio et al., 2017; Sugita, 2008). Another possibility is that faces register more easily in memory because they possess characteristics such as symmetry: our eyes tend to look for symmetric structure in the world (Kootstra et al., 2011), and such increased gaze would likely lead to more attention and to a greater ability to remember the stimulus (Aly & Turk‐Browne, 2016). Further, judgments of the beauty of faces seem to be more resistant to revision in light of external influences (Bignardi et al., 2021); it may therefore be that, once encoded, faces are less affected by proactive and retroactive interference. It has also been argued that the brain has an inherited, or very rapidly acquired, template for faces and facial configuration, which makes their recognition easier (Yang et al., 2022; Zeki, 2009).

Functional neuroimaging studies have shown that the fusiform face area and the occipital face area play a role in processing both faces and face‐like stimuli (e.g., Liu et al., 2014; Wardle et al., 2020). Importantly, these areas can distinguish human faces from face‐like stimuli and face‐like stimuli from non‐face‐like stimuli (Wardle et al., 2020). However, participants and illusory face images vary in whether a face‐like pattern is perceived at all and in how face‐like it appears (Liu et al., 2014; Wardle et al., 2022). This variability may explain the considerable spread of memorability scores in the imagined‐face‐like category of our second experiment.

We believe that image complexity did not drive our results. Gilbert and Schleuder (1990) and Saraee et al. (2020) found that memorability was higher for more complex images. Had complexity been the driving factor, we would therefore have expected scenes to be more memorable than single stimuli such as faces, which is the opposite of what we found.

The limitations of using the LaMem data set are that participant demographics were unavailable, the number of images per category was unbalanced, remember/know responses and confidence ratings were not collected, and it is unknown whether participants were already familiar with the images before seeing them in the experiment. A further limitation of our study is that we examined only one modality (vision) and, within it, only two‐dimensional stimuli. Memory has previously been shown to differ for real three‐dimensional stimuli versus photographs of them (Kapsetaki et al., 2022; Snow et al., 2014). Future studies could therefore examine whether our findings extend to real three‐dimensional stimuli rather than images of them, and to stimuli in other modalities (e.g., audition and touch).

CONFLICT OF INTEREST

The authors declare that there is no conflict of interest to report.

ETHICS STATEMENT

This study was approved by the Ethics Committee of University College London.

ACKNOWLEDGMENTS

We would like to thank Sophia Sia for her assistance in categorizing some of the images. This work was supported by a grant from The Leverhulme Trust (RPG‐2017‐341), London, to S. Zeki.

Kapsetaki, M. E., & Zeki, S. (2022). Human faces and face‐like stimuli are more memorable. PsyCh Journal, 11(5), 715–719. 10.1002/pchj.564

Funding information: The Leverhulme Trust, London; Grant/Award Number: RPG‐2017‐341

DATA AVAILABILITY STATEMENT

The data used in this study are freely available online: http://memorability.csail.mit.edu/.

REFERENCES

- Aly, M., & Turk‐Browne, N. B. (2016). Attention promotes episodic encoding by stabilizing hippocampal representations. Proceedings of the National Academy of Sciences, 113(4), E420–E429. 10.1073/pnas.1518931113

- Bignardi, G., Ishizu, T., & Zeki, S. (2021). The differential power of extraneous influences to modify aesthetic judgments of biological and artifactual stimuli. PsyCh Journal, 10, 190–199. 10.1002/pchj.415

- Blumenthal, A., Stojanoski, B., Martin, C. B., Cusack, R., & Köhler, S. (2018). Animacy and real‐world size shape object representations in the human medial temporal lobes. Human Brain Mapping, 39(9), 3779–3792. 10.1002/hbm.24212

- Brady, T. F., Alvarez, G. A., & Störmer, V. S. (2019). The role of meaning in visual memory: Face‐selective brain activity predicts memory for ambiguous face stimuli. The Journal of Neuroscience, 39(6), 1100–1108. 10.1523/JNEUROSCI.1693-18.2018

- Chen, C. H., & Zeki, S. (2011). Frontoparietal activation distinguishes face and space from artifact concepts. Journal of Cognitive Neuroscience, 23(9), 2558–2568. 10.1162/jocn.2011.21617

- Di Giorgio, E., Loveland, J. L., Mayer, U., Rosa‐Salva, O., Versace, E., & Vallortigara, G. (2017). Filial responses as predisposed and learned preferences: Early attachment in chicks and babies. Behavioural Brain Research, 325, 90–104. 10.1016/j.bbr.2016.09.018

- Dubey, R., Peterson, J., Khosla, A., Yang, M.‐H., & Ghanem, B. (2015). What makes an object memorable? In Proceedings of the IEEE international conference on computer vision (pp. 1089–1097). Institute of Electrical and Electronics Engineers (IEEE).

- Gilbert, K., & Schleuder, J. (1990). Effects of color and complexity in still photographs on mental effort and memory. Journalism Quarterly, 67(4), 749–756. 10.1177/107769909006700429

- Isola, P., Parikh, D., Torralba, A., & Oliva, A. (2011). Understanding the intrinsic memorability of images. In J. Shawe‐Taylor, R. Zemel, P. Bartlett, F. Pereira, & K. Weinberger (Eds.), Advances in neural information processing systems (NIPS) (pp. 2429–2437). Neural Information Processing Systems Foundation, Inc. (NIPS).

- Kapsetaki, M. E., Militaru, I. E., Sanguino, I., Boccanera, M., Zaara, N., Zaman, A., Loreto, F., Malhotra, P. A., & Russell, C. (2022). Type of encoded material and age modulate the relationship between episodic recall of visual perspective and autobiographical memory. Journal of Cognitive Psychology, 34(1), 142–159. 10.1080/20445911.2021.1922417

- Khosla, A., Raju, A. S., Torralba, A., & Oliva, A. (2015). Understanding and predicting image memorability at a large scale. In Proceedings of the IEEE international conference on computer vision (pp. 2390–2398). Institute of Electrical and Electronics Engineers (IEEE).

- Kootstra, G., de Boer, B., & Schomaker, L. R. B. (2011). Predicting eye fixations on complex visual stimuli using local symmetry. Cognitive Computation, 3(1), 223–240. 10.1007/s12559-010-9089-5

- Liu, J., Li, J., Feng, L., Li, L., Tian, J., & Lee, K. (2014). Seeing Jesus in toast: Neural and behavioral correlates of face pareidolia. Cortex, 53, 60–77. 10.1016/j.cortex.2014.01.013

- Maguire, E. A., Frith, C. D., & Cipolotti, L. (2001). Distinct neural systems for the encoding and recognition of topography and faces. NeuroImage, 13(4), 743–750. 10.1006/nimg.2000.0712

- Martin, C. B., Cowell, R. A., Gribble, P. L., Wright, J., & Köhler, S. (2016). Distributed category‐specific recognition‐memory signals in human perirhinal cortex. Hippocampus, 26(4), 423–436. 10.1002/hipo.22531

- Saraee, E., Jalal, M., & Betke, M. (2020). Visual complexity analysis using deep intermediate‐layer features. Computer Vision and Image Understanding, 195, 102949. 10.1016/j.cviu.2020.102949

- Snow, J. C., Skiba, R. M., Coleman, T. L., & Berryhill, M. E. (2014). Real‐world objects are more memorable than photographs of objects. Frontiers in Human Neuroscience, 8, 837. 10.3389/fnhum.2014.00837

- Sugita, Y. (2008). Face perception in monkeys reared with no exposure to faces. Proceedings of the National Academy of Sciences, 105(1), 394–398. 10.1073/pnas.0706079105

- Wardle, S. G., Paranjape, S., Taubert, J., & Baker, C. I. (2022). Illusory faces are more likely to be perceived as male than female. Proceedings of the National Academy of Sciences, 119(5), 1–12. 10.1073/pnas.2117413119

- Wardle, S. G., Taubert, J., Teichmann, L., & Baker, C. I. (2020). Rapid and dynamic processing of face pareidolia in the human brain. Nature Communications, 11(1), 1–14. 10.1038/s41467-020-18325-8

- Williams, R. A., Hagerty, B. M., Cimprich, B., Therrien, B., Bay, E., & Oe, H. (2000). Changes in directed attention and short‐term memory in depression. Journal of Psychiatric Research, 34(3), 227–238. 10.1016/S0022-3956(00)00012-1

- Yang, T., Formuli, A., Paolini, M., & Zeki, S. (2022). The neural determinants of beauty. European Journal of Neuroscience, 55(1), 91–106. 10.1111/ejn.15543

- Zeki, S. (2009). Splendors and miseries of the brain: Love, creativity, and the quest for human happiness (1st ed.). John Wiley & Sons.

- Zeki, S., & Chén, O. Y. (2020). The Bayesian‐Laplacian brain. European Journal of Neuroscience, 51(6), 1441–1462. 10.1111/ejn.14540

- Zhang, H., & Zeki, S. (2022). Judgments of mathematical beauty are resistant to revision through external opinion. PsyCh Journal, 1–7. 10.1002/pchj.556
