Abstract

Background

Implementation of evidence‐based practice (EBP) in healthcare remains challenging. The influence of leadership has been recognized. However, few randomized trials have tested effects of an educational and skills building intervention for leaders in clinical settings.

Aims

To test the effects of an EBP leadership immersion intervention on EBP attributes over time among two cohorts of leaders at a national comprehensive cancer center.

Methods

A stratified, randomized, wait‐list group, controlled design was used. The two cohorts received the evidence‐based intervention one year apart (2020, n = 36; 2021, n = 30), with EBP knowledge, beliefs, competencies, implementation self‐efficacy, implementation behaviors, and organizational readiness measured pre‐ and post‐intervention and at one‐ and two‐year follow‐ups. Participants applied what they learned to a specific clinical or organizational priority topic.

Results

Baseline outcome variables and demographics did not differ between cohorts except for age and years of experience. Both cohorts demonstrated significant changes in EBP attributes (except organizational readiness) post‐intervention. After the first intervention, linear mixed modeling revealed group‐by‐time effects at 12 weeks (3 months) for all EBP attributes except implementation behaviors and organizational readiness, favoring cohort 2020, with effects retained for EBP beliefs and competencies at one year. Twelve weeks after the cohort 2021 intervention, implementation behaviors were significantly higher for cohort 2021.

Linking Evidence to Action

An intensive EBP intervention can increase healthcare leaders' EBP knowledge and competencies. Aligning EBP projects with organizational priorities is strategic. Follow‐up with participants to retain motivation, knowledge and competencies is essential. Future research must demonstrate effects on clinical outcomes.

Keywords: administration, cancer, curriculum, education, evidence‐based practice, experimental research, intervention research, leadership, learning, management, oncology, organization, quality improvement

INTRODUCTION

Evidence‐based practice (EBP) is widely recognized as important for achieving high quality care and best patient outcomes. In 2009, the Institute of Medicine (IOM) established the goal that by 2020, 90% of all healthcare decisions should be supported by accurate, timely, and best available evidence (IOM, 2009). While an important and laudable goal, the translation of research evidence into routine practice remains challenging and continues to take years to achieve (Balas & Boren, 2000; Borsky et al., 2018; Khan et al., 2021). Recent studies examining routine preventive practices (Borsky et al., 2018) and well‐known evidence‐based cancer control practices (Khan et al., 2021) reveal continued delays in the adoption of new evidence into practice. For example, Khan et al. (2021) recently found that the average time from research publication to routine implementation, defined as 50% uptake by clinicians, was 15 years (range 13–21 years).

Barriers to EBP across healthcare are well established and include a lack of knowledge, mentors, and leaders to create infrastructures that support routine use of EBP (Gallagher‐Ford & Connor, 2020; Harding et al., 2014). Among hospital clinicians (n = 6160), only 42% reported having implemented EBP for clinical decision making in the past year (Weng et al., 2013). Moreover, this figure may be an overestimate, as self‐reported guideline adherence to EBP is subject to bias (Adams et al., 1999).

Strategies to mitigate barriers have also been identified, many through the field of implementation science. Among the key strategies is engaging leadership to drive evidence‐based cultures and behaviors (Farahnak et al., 2020; Li et al., 2018; Proctor et al., 2019). Additionally, driving change effectively in healthcare settings requires knowledge from implementation science, including change models and theories, EBP processes, and strategies for implementing and sustaining practice changes (Proctor et al., 2019). Thus, leaders need EBP and implementation science knowledge and skills, as well as confidence in themselves, to promote a culture of EBP and a learning environment (Majers & Warshawsky, 2020). However, such preparation is reported to be both lacking and needed (Lunden et al., 2020). Furthermore, rigorous research on whether formal EBP education for leaders promotes EBP implementation is limited (Meza et al., 2021).

Purpose & Aims

The purpose of this stratified, randomized, wait‐list controlled trial was to determine the effects of a research‐based, 40‐hour experiential EBP leadership educational and skills building intervention on EBP attributes, EBP implementation, and reportable indicators of quality and safety (forthcoming paper) among two cohorts of leaders from a comprehensive cancer center in the Midwestern United States.

Specific aims and hypotheses included:

Aim 1: Test the effects of the EBP leadership intervention on EBP attributes (knowledge, beliefs, competency).

H1: Greater improvements in EBP attributes will be observed among nurse leaders who are assigned to the immediate intervention compared to leaders who are assigned to the wait‐list intervention.

Aim 2: Test the effects of the EBP leadership intervention on EBP implementation (EBP Implementation Self‐Report, EBP Implementation Self‐Efficacy, Organizational Culture & EBP Readiness).

H2: Greater improvements in EBP implementation will be observed among nurse leaders who are assigned to the immediate intervention compared to leaders who are assigned to the wait‐list intervention.

Aim 3: Examine the sustained EBP leadership intervention effects over time.

H3: Nurse leaders who are assigned to the immediate intervention group will retain their improvements in EBP attributes and EBP implementation.

METHODS

Study design

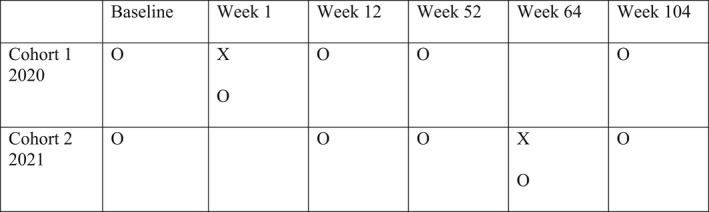

A prospective, stratified, randomized, wait‐list group, controlled design was used to address the study aims and to advance the science on the effects of leadership EBP education on EBP attributes and behaviors. Formal leaders at a national comprehensive cancer center were randomized to participate in an immediate EBP educational intervention in 2020 (cohort 1) or a wait‐list EBP educational intervention in 2021 (cohort 2). Figure 1 displays the study design with measurement time points.

FIGURE 1.

Study design. Note. X = intervention/immersion education; O = observation/data collection

Study setting and sample

The study was conducted at a Midwestern United States comprehensive cancer center. This center, part of an academic medical center and university system, is one of 51 National Cancer Institute (NCI)‐designated Comprehensive Cancer Centers. The center includes a 356‐bed adult inpatient facility and 32 outpatient clinics and is designated a Magnet® facility by the American Nurses Credentialing Center (ANCC). This center is committed to EBP, which it defines as a problem‐solving approach to the delivery of health care that integrates the best evidence from studies and patient care data with clinician expertise and patient preferences and values. The sample comprised front‐line, middle, and senior leaders from nursing and other management areas. At the study launch, approximately 100 nurse leaders met eligibility criteria. The chief nursing officer (CNO) at the center urged all nursing leaders, and leaders who worked closely with nursing, to participate in the educational program. He also encouraged participation in the study.

Intervention

The EBP leadership educational and skills building intervention was provided by the Helene Fuld National Institute for Evidence‐Based Practice in Nursing and Healthcare (i.e., The Fuld Institute), which is part of the university where the cancer center is located. The Fuld Institute is an international hub for the teaching and dissemination of best practices in EBP to improve healthcare quality, safety, and patient outcomes. Internationally renowned EBP experts lead 5‐day evidence‐based practice immersions and work with healthcare systems on implementing and sustaining EBP. These immersions are 40‐hour, experiential, research‐based programs that apply adult learning principles to guide participants in learning the EBP process, strategies for implementation, as well as using resources and guidance for creating and sustaining infrastructures to support EBP within their health systems. Learners come to the educational program with a clinical or leadership issue and apply experiential learning through multiple hands‐on practical exercises to address the issue or opportunity.

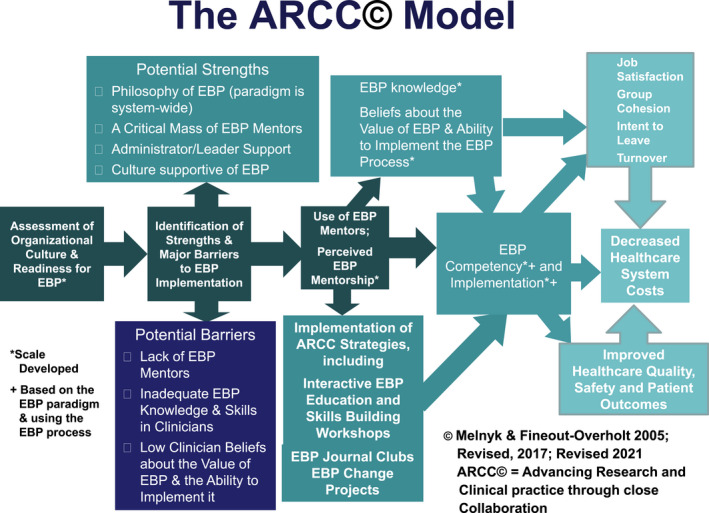

The immersion intervention is based on the Advancing Research & Clinical practice through Close Collaboration (ARCC) model (Melnyk, Fineout‐Overholt et al., 2017; Figure 2). The evidence‐based ARCC model is designed to promote EBP and improve patient outcomes, hospital costs, and clinician retention (Melnyk et al., 2021). The model begins with assessing the organization's culture and readiness for EBP, followed by assessing and managing specific organizational barriers to and facilitators of EBP. Developing EBP mentors is a key aspect of the model, and all immersion participants learn to be mentors for their organizations. Implementation strategies drawn from the implementation science literature are emphasized, including the driving force of leaders. Fidelity of the immersion is maintained through a standardized curriculum with didactic and experiential components. Faculty facilitators gain competence in the program content by first participating in an immersion, then serving as a table co‐facilitator, and finally as a table facilitator. Previous studies have shown significant changes in EBP knowledge, beliefs, attitudes, and implementation competencies following the immersion (Gallagher‐Ford et al., 2020; Gorsuch et al., 2021).

FIGURE 2.

Advancing Research & Clinical practice through close collaboration (ARCC) model

Measurements

Demographic data were gathered on age, gender, race, ethnicity, education, years of experience as a nurse, years of experience on the current unit, current roles, years of leadership experience, years in current position, previous experiences with EBP, EBP education, and quality improvement, and involvement in leadership and governance committees. Outcome variables included EBP attributes of knowledge, beliefs, and competency as well as EBP implementation, implementation strategies self‐efficacy, and organizational culture and readiness for EBP. The measures are all standardized with established validity and reliability (Melnyk et al., 2008, 2014; Tucker et al., 2020).

The EBP Knowledge Scale consists of 25 multiple‐choice and 13 true/false questions and assesses general EBP knowledge. Higher scores reflect more knowledge. The EBP Beliefs Scale is a 16‐item scale that assesses perceptions about EBP (Melnyk et al., 2008). Higher scores reflect more positive beliefs. The EBP Competency Scale includes 24 essential EBP skills rated on a 4‐point Likert scale (Melnyk et al., 2014). Higher scores reflect more competency in EBP steps and processes.

The EBP Implementation Scale is an 18‐item frequency scale that assesses the extent to which participants report having implemented key components of EBP (Melnyk et al., 2008). Higher scores reflect more frequent EBP implementation. The EBP Implementation Strategies Self‐Efficacy Scale (ISE4EBP) is a 29‐item scale assessing self‐efficacy (confidence) in using implementation strategies, rated from 0% to 100% (Tucker et al., 2020). Higher scores reflect greater self‐efficacy in selecting and using implementation strategies. The Organizational Culture and Readiness for System‐wide Integration of Evidence‐based Practice Scale (OCRSIEP) was used to assess organizational culture and readiness for EBP. Higher scores reflect greater organizational readiness for, and movement toward, a culture of EBP (Melnyk et al., 2010).

Study procedures

The study was approved by the university's institutional human subject review board.

The CNO for the cancer center was a strong advocate of the study and provided funding for the EBP educational program. All eligible participants were sent an email invitation that described the purpose of the study and included a consent form. The accompanying survey asked participants to read the consent form and indicate whether they were interested in participating in the study. Those who were interested provided their name and email so they could be included in the study randomization, which was generated manually. Participant group assignment was concealed until baseline data were collected.
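The stratified randomization above was generated manually, and the stratification variable is not reported. Purely as an illustration, the sketch below shows a computerized equivalent that shuffles participants within each stratum and alternates arms down the shuffled list so each stratum is split as evenly as possible. Using leadership role as the stratum, the arm labels, the seed, and the function name `stratified_assign` are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_assign(participants, seed=2020):
    """Assign each participant to 'immediate' or 'wait-list' within strata.

    participants: list of (participant_id, stratum) tuples. The stratum
    (e.g., leadership role) is a hypothetical choice for illustration.
    """
    rng = random.Random(seed)  # seeded so the allocation is reproducible
    by_stratum = defaultdict(list)
    for pid, stratum in participants:
        by_stratum[stratum].append(pid)

    assignment = {}
    for stratum in sorted(by_stratum):
        ids = sorted(by_stratum[stratum])  # fixed order before shuffling
        rng.shuffle(ids)
        # Alternate arms down the shuffled list; with an odd count, a coin
        # flip decides which arm receives the extra participant.
        first = rng.choice(["immediate", "wait-list"])
        second = "wait-list" if first == "immediate" else "immediate"
        for i, pid in enumerate(ids):
            assignment[pid] = first if i % 2 == 0 else second
    return assignment


roster = [(f"P{i:02d}", role) for i, role in enumerate(
    ["Manager"] * 10 + ["Assistant Manager"] * 6 + ["Director"] * 3)]
arms = stratified_assign(roster)
```

Balancing within strata keeps a characteristic such as role from drifting by chance between arms in a sample this small, which is the usual rationale for stratifying.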

Study participants came to the immersion with clinical or leadership topics that aligned with the strategic priorities of the organization, although some had to pivot because of the implications and needs of the COVID‐19 pandemic. Cohort 1 topics included central line‐associated bloodstream infections (CLABSIs), patient reports, communication in a large organization, peer‐to‐peer accountability, telephone triage, fatigue mitigation, and falls. Cohort 2 topics had different foci and reflected many of the priorities that shifted during the ongoing COVID‐19 pandemic. These topics included manager and assistant nurse manager onboarding, nurse practice drift, active participation/engagement in virtual meetings, effect of virtual education and transition to practice, efficient ambulatory patient scheduling, interprofessional education, and sitter use. Participants worked in groups during and after the immersion experience to address their topics. All participants developed a plan for next steps to implement the evidence‐based initiative upon returning to their organization. Fuld team members provided follow‐up at 3‐month intervals via video technology over the following year.

Baseline data were gathered from both cohorts in early 2020. Follow‐up data were gathered from cohort 1 (2020) immediately after they completed the EBP leadership educational program, and from both cohorts at 12 weeks and 12 months after cohort 1 completed the educational program. Data collection was repeated for both cohorts after cohort 2 (2021) received the educational intervention, with one exception: the immediate post‐education observation for cohort 2 (2021) was not collected from cohort 1 (2020), because cohort 1 data had been collected one week earlier and cohort 1 had received no additional education (see Figure 1).
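The measurement schedule is easier to follow as data. The sketch below encodes the weeks at which each cohort was surveyed, read off the populated columns of Table 3 (week 0 = baseline). The dictionary layout, labels, and helper names are our own illustration. The weeks shared by both cohorts are the intervals at which Table 4 reports group contrasts.

```python
# Survey weeks per cohort, read off the populated columns of Table 3.
# Week 0 = baseline. Cohort 2021's immersion fell between its week 52
# and week 53 surveys. Labels are illustrative.
SCHEDULE = {
    "2020": {0: "baseline", 1: "immediately post-immersion",
             12: "12-week follow-up", 52: "52-week follow-up",
             64: "64-week follow-up"},
    "2021": {0: "baseline", 12: "12 weeks (wait-list)",
             52: "52 weeks (wait-list)", 53: "immediately post-immersion",
             64: "12 weeks post-immersion"},
}


def shared_weeks(schedule):
    """Weeks at which every cohort was surveyed (between-group contrasts possible)."""
    week_sets = [set(weeks) for weeks in schedule.values()]
    return sorted(set.intersection(*week_sets))


def post_baseline_count(schedule, cohort):
    """Number of survey points after baseline for one cohort."""
    return sum(1 for week in schedule[cohort] if week != 0)


print(shared_weeks(SCHEDULE))  # [0, 12, 52, 64]
```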

Data analysis & power analysis

Descriptive statistics were used to summarize sample characteristics and EBP attributes at each time point for both cohorts. Linear mixed effects models with a within‐subject random intercept for repeated measures were used to estimate between‐group differences over time. Group‐by‐time interaction effects were examined, and other covariates (age, sex, education, current role, practical experience, and leadership experience) were included as fixed‐effect covariates in the mixed‐effects regression models. Trend plots were used to display the adjusted mean values for both cohorts over time. A p‐value < .05 was considered statistically significant. The analyses were conducted in SPSS and R 4.0.5.
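The model described above can be written as a random‐intercept model. This form uses our own notation, and the authors do not report how time was coded. Let $Y_{ij}$ be participant $i$'s score at observation $j$. Let $G_i$ be a cohort indicator (1 = cohort 1, 0 = cohort 2). Let $\mathbb{1}[t_{ij}=k]$ indicate a post‐baseline timepoint $k$. Let $\mathbf{x}_i$ hold the fixed‐effect covariates:

$$
Y_{ij} = \beta_0 + \beta_1 G_i + \sum_{k \neq 0} \beta_{2k}\,\mathbb{1}[t_{ij}=k] + \sum_{k \neq 0} \beta_{3k}\,G_i\,\mathbb{1}[t_{ij}=k] + \boldsymbol{\gamma}^{\top}\mathbf{x}_i + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
$$

$$
\Delta_k = \beta_1 + \beta_{3k}, \qquad \beta_{3,0} \equiv 0
$$

Under this coding, $\Delta_k$ is the covariate‐adjusted cohort 1 minus cohort 2 difference at timepoint $k$, with baseline giving $\Delta_0 = \beta_1$. This is presumably the kind of contrast reported as "Mean difference (1–2)" in Table 4. The random intercept $u_i$ is what links each participant's repeated measurements.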

A total sample of 67 (37 and 30 for cohorts 1 and 2, respectively) had 80% power to detect a between‐group difference in the change in EBP attribute scores from baseline with a large effect size (d = 0.82), using a two‐sided significance level of 0.05.
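The power statement above can be checked roughly with the textbook normal‐approximation formula for a two‐sided comparison of two equal‐sized groups, $n = 2(z_{1-\alpha/2} + z_{1-\beta})^2 / d^2$. This sketch is our illustration, not the authors' calculation. Their software, any attrition allowance, and whether they used a t‐based method are not reported, and the normal approximation slightly understates what a t‐test requires. The function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Per-group n for a two-sided, two-sample comparison (equal groups).

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    rounded up. A t-based calculation typically adds one or two per group.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


print(n_per_group(0.82))  # 24
```

Under these assumptions, about 24 participants per cohort would be needed to detect d = 0.82 with 80% power, fewer than the 37 and 30 who enrolled. Table 3 shows that responses at later timepoints fell well below that per‐cohort figure, consistent with the caution about attrition raised in the limitations.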

RESULTS

The first educational program took place March 2–6, 2020, immediately before the COVID‐19 pandemic and the subsequent stay‐at‐home orders in the United States. As a result, the first cohort participated in person, while the second cohort's program was delivered via a synchronous, live online video platform. As noted, the CNO invited all leaders to participate in the EBP program whether or not they chose to complete the research questionnaires. Cohort 1 (2020) included 48 participants, and cohort 2 (2021) included 40 participants. The leadership roles represented in each cohort were very similar and are presented in Table 1. Fewer participants completed the study questionnaires (cohort 1 n = 37; cohort 2 n = 30).

TABLE 1.

Cohort participant roles

| Role | Cohort 1 (n = 48) | Cohort 2 (n = 40) |
|---|---|---|
| Associate Chief Nursing Officer | 1 | 1 |
| Director | 5 | 5 |
| Manager | 26 | 18 |
| Assistant Manager | 13 | 13 |
| Nurse Scientist | 1 | 1 |
| Educator | 1 | 0 |
| Process Engineer | 1 | 1 |
| Operation Improvement Manager | 0 | 1 |

Table 2 presents demographic characteristics, including gender, race, ethnicity, education level, age, and years of experience. Participants were also asked about previous experience with EBP, quality improvement, and research efforts. No significant differences were observed between groups for any of these characteristics except age and years of experience: cohort 2 was slightly older (p = .036) and had more years of nursing practice experience (p = .027).

TABLE 2.

Cohort demographics

| Characteristic | Cohort 1 (n = 37) | Cohort 2 (n = 30) |
|---|---|---|
| | f (%) | f (%) |
| Gender | | |
| Female | 32 (86.5) | 25 (83.3) |
| Race & Ethnicity | | |
| White | 35 (94.6) | 27 (90) |
| Black or African American | 1 (2.7) | 1 (3.33) |
| Asian | 0 | 1 (3.33) |
| Hispanic, Latino or Spanish Origin | 0 | 1 (3.33) |
| American Indian or Alaska Native, White | 1 (2.7) | 0 |
| Education | | |
| Bachelor's | 10 (27.0) | 6 (20) |
| Master's | 26 (70.3) | 20 (66.7) |
| Clinical Doctorate | 1 (2.7) | 3 (10) |
| PhD | 0 | 1 (3.3) |
| | M (n) | M (n) |
| Age | 39.89 (37) | 44.37 (30) |
| # of Years of Leadership Experience | 7.24 (37) | 8.4 (30) |
| # of Years in Current Role | 3.27 (37) | 3.47 (30) |
| # of Years in Practice | 15.24 (37) | 20.03 (30) |

Overall study key results

Table 3 presents mean scores for the outcome variables for both cohorts over time. Baseline scores did not differ significantly between cohorts on any outcome variable (at the p < .05 alpha level). After the 5‐day intervention (EBP immersion) for each cohort, the immediate post‐immersion surveys were completed by those who had just participated; each cohort had four post‐baseline timepoints. Attrition led to variable sample sizes across timepoints.

TABLE 3.

Descriptive statistics for EBP attributes by cohort and over time

| EBP attribute | Baseline M (SD) n | Week 1 M (SD) n | Week 12 M (SD) n | Week 52 M (SD) n | Week 53 M (SD) n | Week 64 M (SD) n |
|---|---|---|---|---|---|---|
| 2020 Cohort | | | | | | |
| EBP Knowledge | 22.58 (6.57) 36 | 30.69 (3.64) 32 | 30.12 (3.89) 26 | 30.76 (3.70) 17 | – | 28.33 (4.36) 12 |
| EBP Competencies | 50.11 (8.75) 37 | 64.36 (10.75) 33 | 64.96 (11.98) 24 | 68.76 (5.80) 17 | – | 68.58 (12.54) 12 |
| EBP Beliefs | 56.84 (7.78) 37 | 67.12 (6.30) 33 | 65.71 (5.97) 24 | 65.59 (9.18) 17 | – | 65.83 (6.90) 12 |
| EBP Implementation Self‐Efficacy | 66.53 (18.20) 36 | 80.07 (15.36) 32 | 81.83 (13.17) 24 | 79.59 (14.85) 16 | – | 81.94 (17.06) 11 |
| EBP Implementation | 8.89 (7.77) 37 | 15.91 (7.13) 33 | 11.87 (10.26) 23 | 12.69 (9.38) 16 | – | 12.27 (9.22) 11 |
| Organizational Readiness | 83.92 (13.70) 37 | 84.84 (12.81) 32 | 94.78 (12.75) 23 | 93.44 (14.36) 16 | – | 96.64 (18.82) 11 |
| 2021 Cohort | | | | | | |
| EBP Knowledge | 24.6 (6.44) 30 | – | 23.22 (8.48) 18 | 25.76 (6.62) 25 | 30.40 (3.18) 15 | 28.11 (5.35) 9 |
| EBP Competencies | 54.57 (12.51) 30 | – | 50.83 (13.51) 18 | 55.68 (14.57) 25 | 64.60 (13.12) 15 | 64.33 (13.80) 9 |
| EBP Beliefs | 60.40 (8.58) 30 | – | 56.33 (8.98) 18 | 58.68 (7.87) 25 | 62.53 (6.33) 15 | 61.22 (7.73) 9 |
| EBP Implementation Self‐Efficacy | 67.68 (17.81) 30 | – | 65.85 (21.51) 18 | 70.44 (18.37) 24 | 79.92 (9.47) 14 | 78.49 (7.38) 8 |
| EBP Implementation | 11.03 (9.20) 30 | – | 7.44 (7.18) 18 | 9.28 (7.71) 25 | 18.50 (9.37) 14 | 19.38 (16.68) 8 |
| Organizational Readiness | 87.62 (14.19) 29 | – | 88.94 (17.68) 18 | 90.67 (11.62) 25 | 98.07 (9.98) 14 | 94.13 (19.47) 8 |

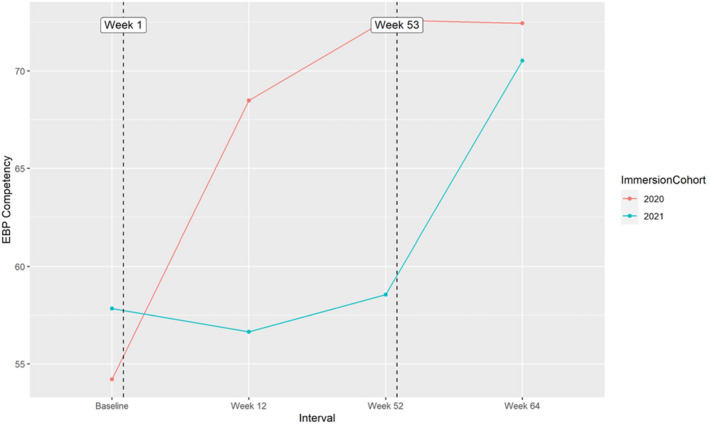

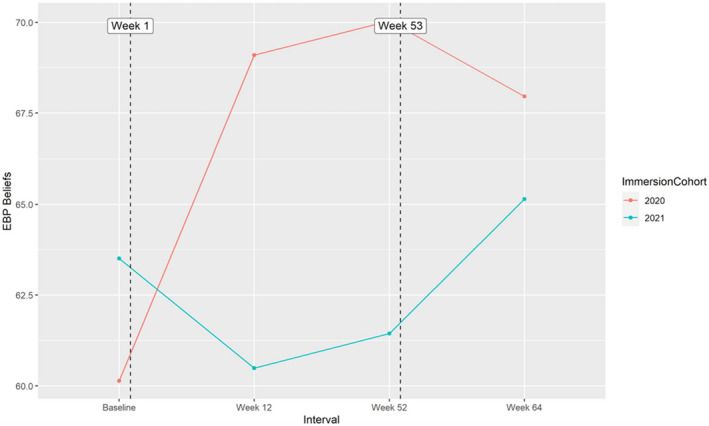

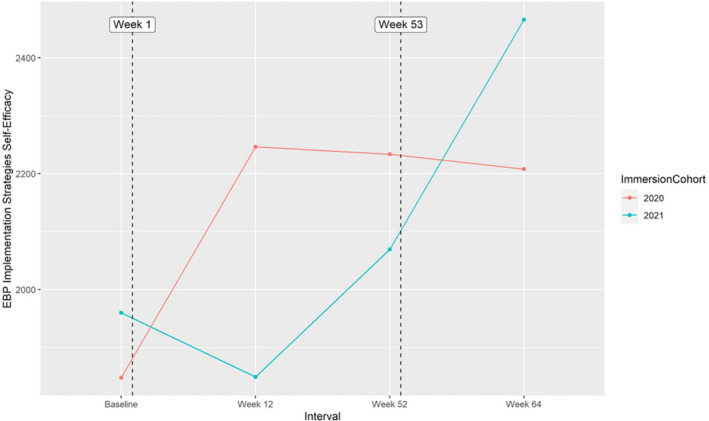

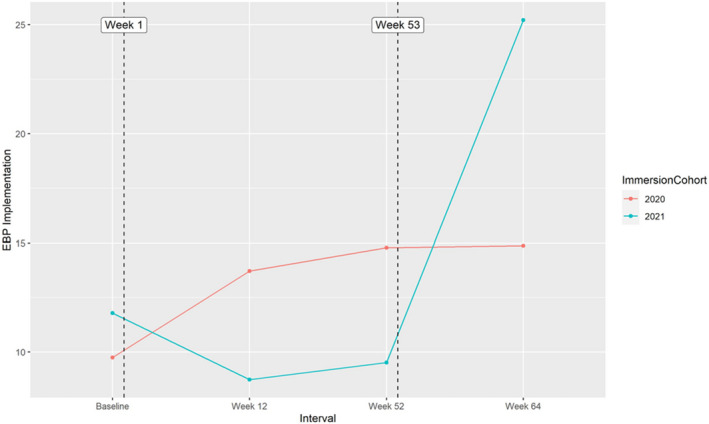

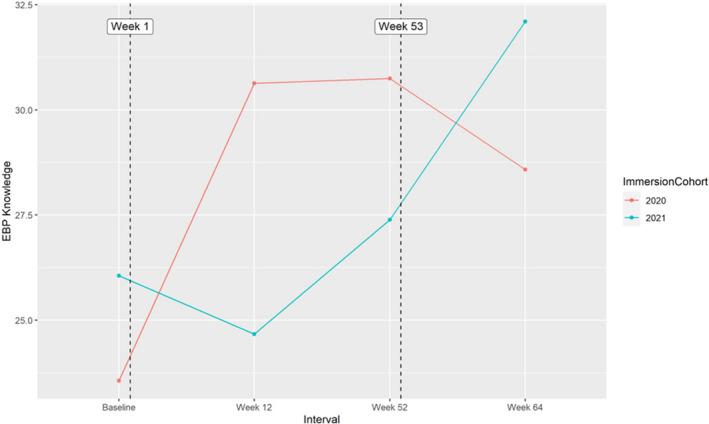

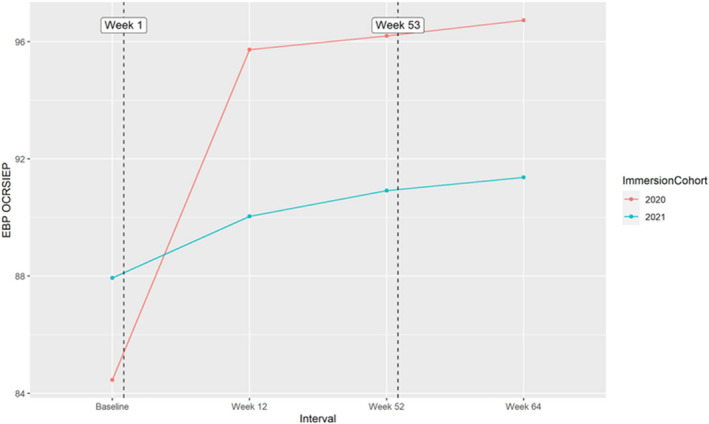

Table 4 presents the linear mixed model findings for EBP attributes and implementation measures over time. Mean group differences (cohort 1 minus cohort 2) are shown for each variable and time point, with p‐values and 95% confidence intervals indicating the significance of each group difference. Following the 2020 cohort's education, leaders in cohort 1 scored significantly higher than the wait‐list cohort 2 (2021) on four of the six outcome measures at week 12: knowledge, competency, beliefs, and implementation strategies self‐efficacy, but not implementation or organizational culture and EBP readiness. At week 52, one year later and just before cohort 2 was trained, cohort 1 retained significantly higher scores on EBP competency and beliefs, but the differences in EBP knowledge and implementation strategies self‐efficacy were no longer significant. At week 64, 12 weeks after cohort 2 was trained, the groups did not differ on any attribute except implementation, which climbed more steeply for cohort 2 participants in the 12 weeks after their education. Graphic displays of each EBP attribute over time for each cohort are shown in Figures 3–8. Group‐by‐time effects are visible for all EBP attributes and implementation measures, and although some gains from the educational intervention were lost over time, the effects from baseline to week 64 remained significant for both groups.

TABLE 4.

Mean group differences by time for all EBP attributes and implementation measures

| EBP attribute | Interval | Mean difference (1–2) | Standard error | p‐value | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|
| EBP Knowledge | Baseline | −2.49 | 1.56 | .114 | −5.60 | 0.61 |
| | Week 12 | 5.96 | 1.75 | .001 | 2.50 | 9.43 |
| | Week 52 | 3.36 | 1.78 | .062 | −0.18 | 6.89 |
| | Week 64 | −3.52 | 2.27 | .123 | −8.00 | 0.97 |
| EBP Competency | Baseline | −3.63 | 2.78 | .194 | −9.14 | 1.87 |
| | Week 12 | 11.83 | 3.36 | .001 | 5.19 | 18.48 |
| | Week 52 | 14.04 | 3.38 | <.001 | 7.35 | 20.73 |
| | Week 64 | 1.92 | 4.70 | .683 | −7.36 | 11.21 |
| EBP Beliefs | Baseline | −3.37 | 1.87 | .076 | −7.09 | 0.35 |
| | Week 12 | 8.61 | 2.21 | <.001 | 4.24 | 12.98 |
| | Week 52 | 8.60 | 2.23 | <.001 | 4.19 | 13.01 |
| | Week 64 | 2.82 | 2.99 | .347 | −3.09 | 8.72 |
| EBP implementation strategies self‐efficacy | Baseline | −112.21 | 126.86 | .379 | −364.26 | 139.84 |
| | Week 12 | 397.60 | 148.06 | .008 | 104.49 | 690.71 |
| | Week 52 | 164.01 | 153.39 | .287 | −139.63 | 467.65 |
| | Week 64 | −257.75 | 208.29 | .218 | −669.51 | 154.02 |
| EBP implementation | Baseline | −2.03 | 2.46 | .412 | −6.93 | 2.86 |
| | Week 12 | 4.98 | 2.86 | .084 | −0.68 | 10.65 |
| | Week 52 | 5.27 | 2.91 | .073 | −0.49 | 11.03 |
| | Week 64 | −10.33 | 3.93 | .010 | −18.10 | −2.56 |
| EBP OCRSIEP | Baseline | −3.48 | 3.85 | .367 | −11.10 | 4.14 |
| | Week 12 | 5.69 | 4.70 | .228 | −3.61 | 15.00 |
| | Week 52 | 5.29 | 4.79 | .271 | −4.18 | 14.75 |
| | Week 64 | 5.36 | 7.08 | .450 | −8.64 | 19.36 |

Note: All the estimates and p‐values above are based on linear mixed effects models.
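To show how the confidence intervals and p‐values in Table 4 follow from the estimates and standard errors, the sketch below recomputes an approximate Wald interval, estimate ± z × SE, together with a two‐sided normal p‐value. This is an approximation: the mixed models presumably used a t reference distribution with model‐specific degrees of freedom, so the reported intervals are slightly wider than these. The helper name `wald_summary` is hypothetical.

```python
from statistics import NormalDist


def wald_summary(estimate, se, level=0.95):
    """Approximate CI and two-sided p-value from an estimate and its SE.

    Uses the normal (Wald) approximation, not the mixed model's own
    t reference distribution, so results differ slightly from Table 4.
    """
    z = NormalDist()
    crit = z.inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% interval
    lower, upper = estimate - crit * se, estimate + crit * se
    p_value = 2 * (1 - z.cdf(abs(estimate / se)))
    return lower, upper, p_value


# EBP Knowledge at week 12 (Table 4): mean difference 5.96, SE 1.75
lo, hi, p = wald_summary(5.96, 1.75)
print(f"{lo:.2f} to {hi:.2f}")  # 2.53 to 9.39 (reported: 2.50 to 9.43)
```

Because the interval and the p‐value use the same critical value, an interval that excludes zero corresponds to p < .05. The significant rows of Table 4 can be read this way.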

FIGURE 3.

Reported EBP competencies over time for cohort 1 and 2

FIGURE 4.

Reported EBP beliefs over time for cohort 1 and 2

FIGURE 5.

Reported EBP implementation strategy self‐efficacy over time for cohort 1 and 2

FIGURE 6.

Reported EBP implementation over time for cohort 1 and 2

FIGURE 7.

Reported EBP knowledge over time for cohort 1 and 2

FIGURE 8.

Reported organizational readiness for EBP over time for cohort 1 and 2

Results by study aims

Study Aim 1: Test the Effects of the EBP Leadership Intervention on EBP Attributes (Knowledge, Beliefs, Competency).

The Aim 1 hypothesis stated that greater improvements in EBP attributes (knowledge, beliefs, competencies) would be observed among nurse leaders assigned to the immediate intervention than among leaders assigned to the wait‐list intervention. This hypothesis was supported for all three attributes at week 12 following the first training.

Study Aim 2: Test the effects of the EBP leadership intervention on EBP implementation (EBP Implementation Self‐Report, EBP Implementation Self‐Efficacy, Organizational Culture & EBP Readiness).

The Aim 2 hypothesis was that greater improvements in EBP implementation would be observed among nurse leaders assigned to the immediate intervention than among leaders assigned to the wait‐list intervention. This hypothesis was partially supported. Greater improvement in EBP implementation strategies self‐efficacy was found for the immediate intervention group at week 12, but not for EBP implementation or organizational culture and EBP readiness.

Study Aim 3: Examine the Sustained EBP Leadership Intervention Effects Over Time.

The Aim 3 hypothesis was that nurse leaders assigned to the immediate intervention group would retain their improvements in EBP attributes and EBP implementation. This hypothesis was partially supported. Immediate intervention group participants retained their advantage in EBP competency and EBP beliefs at week 52, but their EBP knowledge and EBP implementation self‐efficacy no longer differed significantly from those of wait‐list participants. The group difference in EBP implementation approached but did not reach significance at either week 12 (p = .084) or week 52 (p = .073), and organizational culture and EBP readiness did not differ significantly between groups at any time point.

DISCUSSION

We conducted a randomized, wait‐list group, controlled trial to engage leadership at a comprehensive cancer center in an intensive EBP immersion that culminated in participants leading EBP initiatives in their respective clinical settings for up to one year following the immersion (initiative outcomes will be presented in a forthcoming paper). Our central hypothesis was built on implementation science, the ARCC model, and the identification of leadership as a key driver in the successful uptake and sustainability of EBP (Proctor et al., 2019; Sandstrom et al., 2011). This is particularly relevant given a 2021 report that translating evidence‐based cancer control practices into routine practice took an average of 15 years (Khan et al., 2021). Our data largely support our study hypotheses and indicate that we improved EBP knowledge, competencies, beliefs, and implementation strategies self‐efficacy in both cohorts, with significant differences between cohorts after cohort 1 received the immersion intervention and the differences fading after the wait‐list cohort received the intervention. We did not see significant improvements in organizational culture and EBP readiness; however, these scores were relatively high at baseline, and given that senior leadership supported this project and leaders were the intervention recipients, readiness for EBP was likely present at the onset of the study.

COVID‐19 pandemic implications

We observed that implementation ratings rose sharply for cohort 2 at 12 weeks post‐intervention, compared with the modest ratings reported by cohort 1 at 12 weeks post‐intervention. This is likely due to the COVID‐19 pandemic, whose effects were at peak intensity for cohort 1 at 12 weeks post‐intervention (June 2020). Members of cohort 1 were unlikely to have been able to implement any major efforts while simultaneously navigating the unanticipated surge in patients throughout the health system. Indeed, a major limitation to the external validity of this study is that it was launched as the United States experienced the initial impacts of the COVID‐19 pandemic. Despite this unexpected and unparalleled challenge, site leadership teams discussed the leadership EBP education and the work that had been initiated and decided to retain it as an organizational priority. Thus, while some initial EBP projects may have been delayed in cohort 1, all groups ultimately initiated the planned EBP work within their respective clinical settings.

Leadership training implications

Our promising findings suggest that we can engage leaders in intensive EBP education and skills building to influence their EBP attributes and their implementation confidence and behaviors. In turn, we believe that such initiatives will influence a culture of EBP and improve the quality and safety of cancer care and patient outcomes. Both cohorts have since moved into the implementation and sustainability stages of their projects, with additional long‐term indicators of quality and safety being recorded and monitored for ongoing evaluation. Many initiatives are linked to reportable indicators of quality and safety as well as accreditation priorities, such as CLABSIs, patient falls, communication among members of the healthcare team, fatigue mitigation for clinical staff, leadership onboarding practices, efficient access to ambulatory services, and interprofessional education among members of the healthcare team. Additional projects related specifically to COVID‐19 priorities and shifts in care delivery include active participation/engagement in virtual meetings and the effect of virtual education and transition to practice for new clinicians. Advancing these projects despite the hurdles of a global pandemic is a testament to these leaders' commitment to a culture of EBP throughout their health system.

Advancement of EBP science

The current study contributes to implementation science by examining the effects of immersive EBP education and skills building for healthcare leaders (primarily nursing leadership teams) on EBP attributes, implementation variables, and project outcomes over two years. In a similar randomized trial with first‐level mental health leaders, Aarons et al. (2015) found EBP implementation training to be feasible and acceptable, and it was perceived as useful by leaders and their staff. However, their intervention lasted only 6 months and included only leader‐ and supervisee‐rated outcomes. They recommended further research over longer periods with follow‐up on a more diverse set of outcomes. In a more recent study, Proctor et al. (2019) conducted a pre‐post evaluation of a training program for mental health leaders (n = 16) that consisted of three in‐person half‐day sessions with interim coaching and technical support. They found improvements in self‐reported leadership skills and implementation climate, and participants reported the program to be acceptable and appropriate for their needs. Our study advances this work through a rigorous RCT design, extended longitudinal data collection, and project implementation effects on clinical, staff, and system outcomes that will be measured over time.

Nurse leaders are pivotal to daily patient care operations in healthcare and are thus critical influencers of EBP at the point of care. By participating in intensive, hands‐on, experiential, and research‐based EBP education, study participants are well poised to address EBP barriers within their units, facilitate change to integrate EBP into patient care decisions, and establish a robust infrastructure to support EBP that is relevant to their staff and the patients they serve.

Our study was guided by the evidence‐based ARCC model for system‐wide implementation and sustainability of EBP (Melnyk & Fineout‐Overholt, 2019). This model emphasizes the importance of organizational system factors, including culture and EBP mentorship, in ensuring EBP implementation (Melnyk et al., 2021). Key to the ARCC model is a critical mass of EBP mentors who serve as leaders and change agents for EBP and work with bedside clinicians on implementing and sustaining EBP in real‐world healthcare practice settings (Melnyk & Fineout‐Overholt, 2019). Research on the model shows that when there is a culture of EBP and EBP mentorship, point‐of‐care staff implement EBP, are competent in EBP, and report higher job satisfaction and greater intent to stay in the system (Melnyk et al., 2021). Bandura's theory of self‐efficacy also informed this study, as our immersion program aims to build self‐efficacy (confidence) in EBP implementation along with knowledge, competencies, and beliefs. We measured this construct with the ISE4EBP Scale (Tucker et al., 2020). For both cohorts, scores on this measure increased significantly post‐education.

Study limitations

Limitations of our study include attrition that was likely influenced by the COVID‐19 pandemic, shared method variance from reliance on multiple self‐report measures, and an overall small sample. Additionally, because the leaders work together, some contamination between groups could have occurred; given the real‐world setting and pragmatic nature of this study, however, such diffusion may also be a positive clinical implication. Response rates were low for some of the repeated measures, which warrants caution in drawing conclusions. These low rates were likely affected by the pandemic, which brought higher‐than‐normal leader attrition and multiple stressors for clinical leaders.

Implications for practice

Leadership skills building in EBP is a critical strategy for improving the quality and safety of healthcare and for advancing EBP as the standard of care across health settings (Proctor et al., 2019; Sandstrom et al., 2011). Leaders who create a vision for EBP and who know best practices and implementation strategies tend to incorporate evidence into their own leadership practices, thus fueling an organizational culture that supports EBP (Gallagher‐Ford & Connor, 2020; Melnyk et al., 2010). Among chief nurse executives, for example, most report valuing EBP yet also report low levels of EBP implementation (Melnyk et al., 2016). Thus, formal skills building that prepares leaders as EBP leaders, and not solely as users of EBP, is recommended so that they can create the vision and infrastructure needed to support organizational change toward an EBP culture. Our study is among the first to use a rigorous design to evaluate the effects of leadership skills building in EBP and to include long‐term assessments.

Linking evidence to action

An intensive EBP intervention can increase healthcare leaders' EBP knowledge, competencies, and self‐efficacy.

Aligning EBP projects with organizational priorities is strategic.

Follow‐up with participants to retain motivation, knowledge, and competencies is essential.

Future research must demonstrate effects on clinical outcomes.

CONCLUSION

Research indicates that healthcare leaders, and those they partner with daily, need EBP leadership education to create and sustain an EBP culture within healthcare systems through an evidence‐informed approach to meaningful change. In this RCT, despite its launch at the onset of the COVID‐19 pandemic, we found that a 5‐day EBP educational immersion intervention improved EBP knowledge, competency, beliefs, and implementation self‐efficacy immediately post‐intervention, with improvements in EBP competency and beliefs retained at one year post‐intervention. After the wait‐list group was trained, all group differences disappeared except for EBP implementation behaviors, which were higher in the wait‐list group. Future research will examine the effects of training leaders on their EBP behaviors, EBP project initiatives, and clinical and organizational outcomes.

Tucker, S., McNett, M., O’Leary, C., Rosselet, R., Mu, J., Thomas, B., et al. (2022). EBP education and skills building for leaders: An RCT to promote EBP infrastructure, process and implementation in a comprehensive cancer center. Worldviews on Evidence‐Based Nursing, 19, 359–371. Available from: 10.1111/wvn.12600

REFERENCES

- Aarons, G. A., Ehrhart, M. G., Farahnak, L. R., & Hurlburt, M. S. (2015). Leadership and organizational change for implementation (LOCI): A randomized mixed method pilot study of a leadership and organization development intervention for evidence‐based practice implementation. Implementation Science, 10(11), 1–14.

- Adams, A. S., Soumerai, S. B., Lomas, J., & Ross‐Degnan, D. (1999). Evidence of self‐report bias in assessing adherence to guidelines. International Journal for Quality in Health Care, 11(3), 187–192.

- Balas, E. A., & Boren, S. A. (2000). Managing clinical knowledge for healthcare improvement. In Yearbook of medical informatics: Patient‐centered systems. Schattauer Verlagsgesellschaft.

- Borsky, A., Zhan, C., Miller, T., Ngo‐Metzger, Q., Bierman, A. S., & Meyers, D. (2018). Few Americans receive all high‐priority, appropriate clinical preventive services. Health Affairs, 37(6), 925–928.

- Farahnak, L. R., Ehrhart, M. G., Torres, E. M., & Aarons, G. A. (2020). The influence of transformational leadership and leader attitudes on subordinate attitudes and implementation success. Journal of Leadership & Organizational Studies, 27(1), 98–111.

- Gallagher‐Ford, L., & Connor, L. (2020). Transforming healthcare to evidence‐based healthcare: A failure of leadership. Journal of Nursing Administration, 50(5), 248–250.

- Gallagher‐Ford, L., Koshy Thomas, B., Connor, L., Sinnott, L. T., & Melnyk, B. M. (2020). The effects of an intensive evidence‐based practice educational and skills building program on EBP competency and attributes. Worldviews on Evidence‐Based Nursing, 17(1), 71–81.

- Gorsuch, P. F., Gallagher‐Ford, L., Thomas, B., Connor, L., & Melnyk, B. M. (2021). Impact of a formal educational skills building program to enhance evidence‐based practice competency in nurse teams. Worldviews on Evidence‐Based Nursing, 17(4), 258–268.

- Harding, K. E., Porter, J., Donley, E., & Taylor, N. F. (2014). Not enough time or a low priority? Barriers to evidence‐based practice for allied health clinicians. Journal of Continuing Education in the Health Professions, 34(4), 224–231.

- Institute of Medicine. (2009). Leadership commitments to improve value in healthcare: Finding common ground: Workshop summary. National Academies Press.

- Khan, S., Chambers, D., & Neta, G. (2021). Revisiting time to translation: Implementation of evidence‐based practices (EBPs) in cancer control. Cancer Causes & Control, 32(3), 221–230.

- Li, S., Jeffs, L., Barwick, M., & Stevens, B. (2018). Organizational and contextual factors that influence the implementation of evidence‐based practices across healthcare settings: A systematic literature review. Systematic Reviews, 7, 72.

- Lunden, A., Kvist, T., & Teras, M. (2020). Readiness and leadership in evidence‐based practice and knowledge management: A cross‐sectional survey of nurses' perceptions. Nordic Journal of Nursing Research, 41(4), 187–196.

- Majers, J. S., & Warshawsky, N. (2020). Evidence‐based decision‐making for nurse leaders. Nurse Leader, 18(5), 471–475.

- Melnyk, B., Fineout‐Overholt, E., Giggleman, M., & Cruz, R. (2010). Correlates among cognitive beliefs, EBP implementation, organizational culture, cohesion and job satisfaction in evidence‐based practice mentors from a community hospital system. Nursing Outlook, 58(6), 301–308.

- Melnyk, B., Fineout‐Overholt, E., & Mays, M. (2008). The evidence‐based practice beliefs and implementation scales: Psychometric properties of two scales. Worldviews on Evidence‐Based Nursing, 5(4), 208–216.

- Melnyk, B. M., & Fineout‐Overholt, E. (2019). ARCC evidence‐based practice mentors: The key to sustaining evidence‐based practice. In B. M. Melnyk & E. Fineout‐Overholt (Eds.), Evidence‐based practice in nursing and healthcare: A guide to best practice (4th ed., pp. 514–526). Wolters Kluwer.

- Melnyk, B. M., Fineout‐Overholt, E., Giggleman, M., & Choy, K. (2017). A test of the ARCC© model improves implementation of evidence‐based practice, healthcare culture, and patient outcomes. Worldviews on Evidence‐Based Nursing, 14(1), 5–9.

- Melnyk, B. M., Gallagher‐Ford, L., Long, L. L., & Fineout‐Overholt, E. (2014). The establishment of evidence‐based practice competencies for practicing nurses and advanced practice nurses in real‐world clinical settings: Proficiencies to improve healthcare quality, reliability, patient outcomes, and costs. Worldviews on Evidence‐Based Nursing, 11(1), 5–15.

- Melnyk, B. M., Gallagher‐Ford, L., Thomas, B. K., Troseth, M., Wyngarden, K., & Szalacha, L. (2016). A study of chief nurse executives indicates low prioritization of evidence‐based practice and shortcomings in hospital performance metrics across the United States. Worldviews on Evidence‐Based Nursing, 13(1), 6–14.

- Melnyk, B. M., Tan, A., Hsieh, A. P., & Gallagher‐Ford, L. (2021). Evidence‐based practice culture and mentorship predict EBP implementation, nurse job satisfaction, and intent to stay: Support for the ARCC© model. Worldviews on Evidence‐Based Nursing, 18(4), 272–281.

- Meza, R. D., Triplett, N. S., Woodard, G. S., Martin, P., Khairuzzaman, A. N., Jamora, G., & Dorsey, S. (2021). The relationship between first‐level leadership and inner‐context and implementation outcomes in behavioral health: A scoping review. Implementation Science, 16(1), 1–21.

- Proctor, E., Ramsey, A. T., Brown, M. T., Malone, S., Hooley, C., & McKay, V. (2019). Training in implementation practice leadership (TRIPLE): Evaluation of a novel practice change strategy in behavioral health organizations. Implementation Science, 14, 66.

- Sandstrom, B., Borglin, G., & Nilsson, R. (2011). Promoting the implementation of evidence‐based practice: A literature review focusing on the role of nursing leadership. Worldviews on Evidence‐Based Nursing, 8(4), 212–223.

- Tucker, S., Zadvinskis, I. M., & Connor, L. (2020). Development and psychometric testing of the implementation self‐efficacy for EBP (ISE4EBP) scale. Western Journal of Nursing Research, 43(1), 45–52.

- Weng, Y.‐H., Kuo, K. N., Yang, C.‐Y., Lo, H.‐L., Chen, C., & Chiu, Y.‐W. (2013). Implementation of evidence‐based practice across medical, nursing, pharmacological and allied healthcare professionals: A questionnaire survey in nationwide hospital settings. Implementation Science, 8, 112.