Abstract

Emotions ubiquitously impact action, learning, and perception, yet their essence and role remain widely debated. Computational accounts of emotion aspire to answer these questions with greater conceptual precision informed by normative principles and neurobiological data. We examine recent progress in this regard and find that emotions may implement three classes of computations, which serve to evaluate states, actions, and uncertain prospects. For each of these, we use the formalism of reinforcement learning to offer a new formulation that better accounts for existing evidence. We then consider how these distinct computations may map onto distinct emotions and moods. Integrating extensive research on the causes and consequences of different emotions suggests a parsimonious one-to-one mapping, according to which emotions are integral to how we evaluate outcomes (pleasure & pain), learn to predict them (happiness & sadness), use them to inform our (frustration & content) and others’ (anger & gratitude) actions, and plan in order to realize (desire & hope) or avoid (fear & anxiety) uncertain outcomes.

Keywords: emotion, mood, computational modeling, reinforcement learning, reward

1. Intro

Emotional states ubiquitously impact action, perception and learning. Through these influences and others, emotions are thought to have played a crucial role throughout evolution in increasing the survivability of our species (Darwin, 2015; Nesse & Ellsworth, 2009). Conversely, when emotions malfunction, they can have debilitating consequences for the individual. Indeed, emotional disturbances play key roles in a wide range of mental disorders, such as depression, bipolar disorder, borderline personality disorder, phobias, and other anxiety disorders. These disorders, however, differ substantially from one another in the types and timescales of emotions they involve (Greenberg et al., 2003; Hazlett et al., 2021; Matsunaga et al., 2008; Miyamoto et al., 2013; Regier et al., 2013; Sobocki et al., 2006; Seligman, 1971; Tsanas et al., 2016; Carpenter & Trull, 2013; Fernandez & Johnson, 2016; Houben & Kuppens, 2020; Keltner & Kring, 1998). It is thus not an exaggeration to say that without a thorough understanding of emotions, we cannot hope to fully understand how human cognition functions or dysfunctions. Nevertheless, despite decades of emotion research, the literature remains rife with disagreement, even on basic questions such as what emotional states are, what causes them, and what their roles are (Adolphs et al., 2019; Ekman et al., 1987; Frijda, 1993; Lench et al., 2011; Moors et al., 2013; Parkinson, 1997; Russell, 1980, 2003; Russell & Barrett, 1999; Scherer, 2005).

At the same time, advances in computational cognitive science have given rise to a new understanding of how people form evaluations, and how these evaluations influence actions and physiological responses (Baker et al., 2009; Friston, 2009; Montague et al., 1996; Shenhav et al., 2017; Tobler et al., 2007; Westbrook et al., 2020). Critically, evaluations, action tendencies and physiological responses are precisely what most emotion theorists accept as central constituents of emotions (Ellsworth, 2013; Frijda, 1993; Lazarus & Lazarus, 1991; Moors et al., 2013; Parkinson, 1997; Scherer, 2009a). Somewhat surprisingly, however, in studying these functions computational cognitive science has rarely considered emotions. It thus stands to reason that both emotion research and computational cognitive science can benefit a great deal from integrating their respective insights into a coherent theory. Emotion research provides theoretical frameworks for conceptualizing emotions, and empirical knowledge on the evaluations and behavioral responses that emotions entail. Conversely, computational cognitive science provides quantitative tools for linking perceptual input, its evaluation, and ensuing behavior, in a manner that is constrained by normative principles and neurobiological data. The goal of this paper is to facilitate an integration between these two closely related, yet largely independent, fields.

Luckily, we do not need to start from scratch. An increasing number of researchers have begun to lay foundations for a computational cognitive science of emotions. Broadly, these efforts have taken one of three approaches. The first aims to explain how experiences of emotions (i.e., feelings) arise, specifically, by classifying patterns of interoceptive and exteroceptive sensations that correspond with past exemplars of each feeling (Barrett, 2017; Seth & Friston, 2016). Researchers taking this approach often consider specific feelings to be too variable across individuals and cultures for them to be systematically characterized. The second approach aims to explain how emotions simplify action selection and inferences about the environment, specifically by constraining the set of probable states and actions to those that are congruent with the present emotion (Bach & Dayan, 2017; Huys & Renz, 2017). Researchers taking this approach view emotions as heuristics that are evoked due to lack of information or cognitive resources, and which do not necessarily constitute veridical representations. They thus have relatively little to say about what emotions might represent about the environment and oneself.

We therefore focus here on a third approach, which aims to understand what computations about the environment and oneself emotions represent. This approach draws from non-computational theories that view emotions as constituted, in whole or in part, from appraisals – a set of cognitive judgments concerning the satisfaction of one’s needs by the environment (Clore & Ortony, 2000; Ellsworth, 2013; Frijda, 1986, 1993, p. 19; Lazarus, 1991; Lazarus & Lazarus, 1991; Moors, 2013; Moors et al., 2013; Nussbaum, 2004; Reisenzein, 1995; Solomon, 1988). Researchers taking this approach have begun to replace the plethora of traditional appraisal dimensions, such as pleasantness, goal relevance, agency, and controllability, to name just a few, with a more parsimonious set of computations.

In what follows, we examine this literature through the lens of the computational framework of reinforcement learning¹. Our examination reveals three classes of proposed computations: computations concerning expected reward (Eldar et al., 2016; Joffily & Coricelli, 2013), computations concerning the effectiveness of actions in obtaining reward (Bennett, Davidson, et al., 2021), and computations concerning uncertain future reward (Hagen, 1991; Peters et al., 2017). In each case, we propose modifications of previous suggestions that may more parsimoniously account for existing evidence. We then consider how well these different classes of computations cohere with one another, and whether together they could offer a comprehensive computational account of emotion. First, however, we begin by considering the significance to emotions of a concept that all the computations we identify are predicated on – the concept of reward.

2. Reward in machines and humans

2.1. The computational concept of reward

In reinforcement learning, a reward function specifies to what degree different states and actions are of value to the agent and should thus be pursued, and to what degree they are harmful and should thus be avoided. Correspondingly, the goal of a reinforcement learning agent is to maximize its expected cumulative reward:

$$\mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k}\right] \qquad (2.1.1)$$

where t denotes the present time step, and γ is a discount rate between 0 and 1 that serves to discount future rewards. In this expected sum, rewards may also be negative (r < 0), in which case they are often referred to as ‘punishment’. The agent typically does not know in advance which available options are most rewarding. To learn this, the agent needs to try them out and observe the reward associated with each. Thus, through trial and error, it can come to choose options that maximize its expected cumulative reward.
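As a minimal illustration of the quantity inside the expectation of Eq. 2.1.1, the Python sketch below computes the discounted sum for one finite, already observed sequence of rewards (a single sample of the return). The function name `discounted_return` is our own; averaging such sums over many sampled trajectories would approximate the expectation itself.

```python
def discounted_return(rewards, gamma):
    """Discounted sum r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for a finite sequence."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    total = 0.0
    # Work backwards so that each step applies G_k = r_k + gamma * G_{k+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total

# Rewards further in the future count less; negative rewards act as punishment.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))   # 1 + 0.5 + 0.25 = 1.75
print(discounted_return([0.0, 0.0, -4.0], gamma=0.5))  # -4 * 0.25 = -1.0
```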

2.2. Reward as pleasure and pain

In humans, reward does not only reinforce choices but also has hedonic value. This has led psychologists (Frijda, 2001), philosophers (Helm, 2002), and even computational scientists (Sutton & Barto, 2018; Turing, 1969) to converge on the idea that reward is represented in humans by pleasure and pain. As an example, consider how remarkably similar the following phenomenological analysis of pleasure is to the computational definition of reward (Frijda, 2001):

“The core of affective experience, whatever its guise, is its evaluative nature. Affect introduces value in a world of factual perceptions and sensations. It creates preferences, manifest or experienced palatability and aversiveness of stimuli, and behavioral priorities other than as are based on habit strength. Pleasure is good, and pain is bad, and so are their objects.”

Like reward and punishment, pleasure is primarily evoked by experiences that promote our well-functioning, such as consuming food, performing something well or achieving a goal, whereas pain is inflicted by experiences that harm us (Frijda, 2001). The goal of maximizing expected cumulative pleasure is thought to guide our behavior (Bentham, 1996; Mellers et al., 1999). And to know how pleasurable an experience is going to be, we normally first need to try it out (e.g., when tasting new food).

This correspondence between pleasure and reward is further underscored by empirical findings. Like pleasure, the reward function that guides human decisions adapts to homeostatic needs (e.g., eating is less pleasurable when we are not hungry; Keramati & Gutkin, 2014) and changing goals (e.g., winning a game is less pleasurable when we do not aim to win; Juechems & Summerfield, 2019). Moreover, outcomes that for most people constitute reward are less rewarding to people who suffer from an inability to feel pleasure (i.e., anhedonia; Huys et al., 2013), and more rewarding under the influence of pleasure-enhancing opioids (van Steenbergen et al., 2019).

However, though they lie at the core of emotional experiences, pleasure and pain are not themselves considered emotions. Rather, they are primary evaluative processes, to which emotions are in some way secondary (Frijda, 2001; Jacobson, 2021; Price, 2000). To understand precisely in what way, we next ask what computations concerning reward emotions may represent.

3. Computation of expected reward

Recent work has argued that emotional states represent mismatches between actual and expected rewards (Eldar et al., 2016; Joffily & Coricelli, 2013), often referred to as ‘reward prediction errors’. To understand why representing these errors can be useful, it is helpful to consider their role in reinforcement learning.

3.1. Value learning

A reinforcement learning problem (Sutton & Barto, 2018) is often operationalized by assuming that each state s the agent can occupy may be associated with a different amount of reward r. The value of a state (denoted V(s)), however, includes not only this immediate reward but also the expected reward associated with all future states that follow s:

$$V(s) = \mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k} \mid s_t = s\right] \qquad (3.1.1)$$

A popular algorithm for learning the values of different states relies on what are typically referred to as temporal-difference prediction errors (Sutton & Barto, 2018):

$$\delta_t = r_t + \gamma\, \hat{V}_t(s_{t+1}) - \hat{V}_t(s_t) \qquad (3.1.2)$$

where $\hat{V}_t$ denotes value as it is estimated at time step $t$. Prediction errors are computed in this way since the value of each state $s_t$ should equal, on average, the immediate reward obtained in it, $r_t$, plus the discounted value of the succeeding state, $s_{t+1}$. Once we compute these prediction errors, value learning simply consists of using them to update existing value estimates:

$$\hat{V}_{t+1}(s_t) = \hat{V}_t(s_t) + \eta\, \delta_t \qquad (3.1.3)$$

where η is a learning rate between 0 and 1, which need not be fixed. Bayesian inference dictates that the update should be smaller (i.e., η will be lower) the more confident we are in our existing value estimate relative to how reliably we think the observed reward is associated with the present state (Mathys et al., 2011).
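To make Eqs. 3.1.2 and 3.1.3 concrete, here is a minimal sketch of tabular temporal-difference learning with a fixed learning rate η. The deterministic three-state chain, its rewards, and the function name `td0_chain` are hypothetical choices for illustration. With γ = 0.9 and a reward of 1 only in the last state, the true values are 0.81, 0.9 and 1.0, and the estimates approach them.

```python
def td0_chain(rewards, gamma, eta, n_episodes):
    """Tabular TD(0) on a deterministic chain s_0 -> s_1 -> ... -> terminal.

    rewards[i] is the reward obtained in state s_i; the terminal state has value 0.
    """
    values = [0.0] * len(rewards)  # initial value estimates, one per state
    for _ in range(n_episodes):
        for s, r in enumerate(rewards):
            next_value = values[s + 1] if s + 1 < len(rewards) else 0.0
            delta = r + gamma * next_value - values[s]  # prediction error (Eq. 3.1.2)
            values[s] += eta * delta                    # value update (Eq. 3.1.3)
    return values

v = td0_chain([0.0, 0.0, 1.0], gamma=0.9, eta=0.1, n_episodes=2000)
print([round(x, 3) for x in v])  # approximately [0.81, 0.9, 1.0]
```

Because the chain is noiseless, a fixed η suffices here; with probabilistic rewards, a smaller or adaptive η would average over noise, in line with the Bayesian argument above.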

A large body of cognitive science research indicates that humans and animals employ value learning (Daw & O’Doherty, 2014; Kable & Glimcher, 2009; D. J. Levy & Glimcher, 2012; Montague & Berns, 2002; Padoa-Schioppa, 2011). For example, many brain imaging studies have shown that activation in specific brain areas, such as the ventromedial prefrontal cortex and ventral striatum, is correlated with learned values (Bartra et al., 2013; Levy & Glimcher, 2012; O’Reilly, 2020). Moreover, early foundational work in primates (Schultz et al., 1997) showed that brainstem dopaminergic neurons signal the reward prediction errors defined in Eq. 3.1.2. Though in some instances people may not learn values as described above, but rather relative preferences among sets of states (Bennett, Niv, et al., 2021; Shteingart & Loewenstein, 2014), value learning in one form or another remains a key building block of any comprehensive account of human learning and decision making.

3.2. Emotion as change in value

Empirical work has shown that positive reward prediction errors are associated with positive emotions, and negative reward prediction errors (i.e., disappointments) with negative emotions (Mellers et al., 1997; Rutledge et al., 2014). The relationship between emotional state and reward prediction errors has also been demonstrated outside the lab, in the impact of real world outcomes such as sports matches and student exams on mood (Otto & Eichstaedt, 2018; Villano et al., 2020).

But is it the prediction error per se (δ) that evokes emotional state, or is it the magnitude of the change in value (i.e., ηδ in Eq. 3.1.3)? Intuitively, changes in value may be the more likely cause since surprising outcomes that change our future expectations (high η; e.g., a good exam score revealing that our abilities are better than we thought) seem more emotionally meaningful than surprises that we consider to be chance events (low η; e.g., a good exam score due to a grading error). In the studies mentioned above, changes in value were mostly not measured, at least not in a way that was dissociable from reward prediction errors. A recent study, however, decoupled these two quantities by introducing trial-to-trial changes in reward magnitude that impacted only reward prediction errors and not expected values (Blain & Rutledge, 2020). Subjects’ self-reports during the task suggested that emotional state is primarily driven by changes in value (ηδ).

3.3. Emotional state as cumulative change in value

Emotional states may persist for seconds, hours, weeks or even months. Extension in time endows them with two key properties. First, rather than constituting a response to a single specific event, enduring emotional states accumulate the impact of multiple events. This cumulative property has long been theoretically postulated (Mendl et al., 2010; Nettle & Bateson, 2012; Parducci, 1995; Ruckmick, 1936; Webb et al., 2019) and has also been empirically demonstrated over small timescales (Rutledge et al., 2014). This suggests a formulation of emotional state as cumulative change in value:

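$$m_t = \sum_{k=0}^{\infty} \lambda^{k}\, \eta\, \delta_{t-k} \qquad (3.3.1)$$

where $m_t$ denotes emotional state at time $t$, and λ ∈ [0,1] serves to discount value updates made longer ago. This sum, which extends infinitely into the past with diminishing weights, can be continuously estimated using the following recursive update rule:

$$m_t = \lambda\, m_{t-1} + \eta\, \delta_t \qquad (3.3.2)$$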

This construal of an enduring emotional state is intuitively summarized by saying that we are in a positive emotional state when reward is becoming generally more available to us, and in a negative emotional state when the availability of reward is decreasing.

Though the computation of change in the general availability of reward can be, and likely is, made using different algorithms, a useful property of the formula presented above is that the setting of λ modulates the two properties that are thought to distinguish emotions and moods, namely, how long the emotional state lasts and whether it constitutes a response to a specific event (Ekman, 1999; Ketai, 1975; Morris, 2012; Schnurr, 1989). With λ = 0, the emotional state only reflects the very last change in value, and the impact of a change only lasts one time step. The closer λ is to 1, the more equal are the weights of older and newer changes in value, and consequently, each individual value change has a longer lasting impact on emotional state. Thus, different settings of λ offer a continuum of possibilities for modeling emotional state, from an instantaneous emotion to a long-lasting mood.
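The role of λ can be checked with a short sketch. It assumes, as Section 3.3 describes, that emotional state is a λ-discounted sum of past value changes (each change being ηδ); the function names are our own. The recursive update and the explicit sum give the same result, and a single positive value change either vanishes after one step (λ = 0, an emotion) or lingers (λ = 0.9, a mood).

```python
def emotional_state_recursive(value_changes, lam):
    """m_t = lam * m_{t-1} + (value change at t), starting from m = 0."""
    m, trace = 0.0, []
    for dv in value_changes:
        m = lam * m + dv
        trace.append(m)
    return trace

def emotional_state_sum(value_changes, lam, t):
    """Explicit lambda-discounted sum of all value changes up to and including step t."""
    return sum(lam ** k * value_changes[t - k] for k in range(t + 1))

# One positive value change at step 0, followed by no further changes.
changes = [1.0, 0.0, 0.0, 0.0]
print(emotional_state_recursive(changes, lam=0.0))  # [1.0, 0.0, 0.0, 0.0]: a brief emotion
print(emotional_state_recursive(changes, lam=0.9))  # decays slowly: a lingering mood
```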

3.4. The role of enduring emotional states

According to our formulation, an instantaneous emotion corresponds to an individual change in value and has no extension in time. Thus, it is most parsimonious to assume that its role is to execute or signal the value update. But what role does an enduring emotional state serve? This question brings us to the second property enabled by extension in time. Empirical work indicates that enduring emotional states tend to generalize the information that evoked them (Eldar et al., 2016) via the operation of an emotion-congruent bias (Clore, 1992; Eldar & Niv, 2015; Lerner & Keltner, 2000; Michely et al., 2020; Slovic et al., 2007; Vinckier et al., 2018). Having been evoked by a series of positive value updates, an enduring positive emotional state may make subsequent outcomes seem better than they normally seem, and thus lead to further positive value updates. For example, winning a wheel-of-fortune draw can improve people’s emotional state and positively enhance values learned from subsequent outcomes for a set of unrelated slot machines (Eldar & Niv, 2015). In this way, the emotional state generalizes the positive outcome obtained from one source of reward (i.e., the wheel-of-fortune draw) by incorporating it into the values of other, subsequently sampled, sources of reward (i.e., the slot machines).

In this example, generalization is irrational because the outcomes of the wheel of fortune draw and of playing the slot machines are mutually independent. However, in ecological settings, changes in reward availability may often be linked across time and across different sources of reward. As an example of a link between consecutive time steps (i.e., trends), consider how when spring arrives, the availability of food doesn’t change overnight, but rather, it increases gradually, which means that an initial increase predicts further increases. As an example of a link between different sources of reward, consider how a change of season may make multiple types of food, water, and shelter synchronously more abundant. Similarly, in social animals, success in obtaining one type of reward (e.g., gathering food) can increase the individual’s social status, and thus increase their access to other types of reward (e.g., sexual partners).

In each of these cases, individual rewards are probabilistically determined, and therefore value can only be estimated up to some approximation error. But these estimates will be more accurate if we take into account correlations across sources of reward and across time. This can be achieved by biasing each individual value update towards the recently typical update, that is, in accordance with the emotional state:

| (3.4.1) |

This emotion-biased value update can in fact be mathematically derived as the optimal equation for inferring the location of a moving object from a sequence of noisy observations, with value corresponding to the object’s location and emotional state to its velocity (Eldar et al., 2016). The idea is that in inferring the value of a specific source of reward, an unbiased agent only considers rewards already obtained from that source, and therefore neglects global changes in expected reward. Conversely, an emotion-biased agent informs its specific value estimates with the recent global trend.
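The moving-object analogy can be illustrated with a standard fixed-gain alpha-beta filter, a steady-state simplification of the Kalman filter for a target moving at constant velocity. We use it here as an illustrative stand-in, not necessarily the exact form of Eq. 3.4.1: value plays the role of location and emotional state the role of velocity. The gains, the function name `track_value`, and the noiseless, steadily rising reward stream (as when food becomes more available in spring) are our assumptions. Setting `beta = 0` removes the emotional bias and recovers the unbiased delta rule, which lags persistently behind a rising trend; the emotion-biased tracker closes that gap.

```python
def track_value(rewards, alpha, beta):
    """Fixed-gain alpha-beta tracker: value ~ location, emotional state ~ velocity.

    With beta = 0 the emotional state stays at 0 and this reduces to the
    unbiased delta rule: value += alpha * (reward - value).
    """
    value, mood = 0.0, 0.0
    for r in rewards:
        predicted = value + mood        # shift the estimate by the recent trend
        residual = r - predicted        # error relative to the trend-adjusted estimate
        value = predicted + alpha * residual
        mood += beta * residual         # refine the estimated trend
    return value, mood

# Reward from a source rises by 0.1 per time step.
rewards = [0.1 * t for t in range(200)]
unbiased, _ = track_value(rewards, alpha=0.3, beta=0.0)
biased, mood = track_value(rewards, alpha=0.3, beta=0.05)
print(rewards[-1] - unbiased)      # persistent lag of about (1 - alpha) * 0.1 / alpha
print(rewards[-1] - biased, mood)  # lag near 0; mood near the trend of 0.1
```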

4. Computation of action evaluation

Reward depends not only on the states a reinforcement learning agent occupies, but also on the actions the agent chooses to take. Correspondingly, the second class of computations that have been suggested to be represented by emotional states comprises evaluations of how effective actions are in obtaining reward (Bennett, Davidson, et al., 2021; Kurzban et al., 2013). Thus, we next consider how agents evaluate their own actions and accordingly adjust their action-selection policy.

4.1. Learning how to obtain reward

In reinforcement learning, the expected reward associated with taking action a in state s is defined as the action’s value, Q:

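$$Q(a,s) = \mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k} \mid s_t = s,\ a_t = a\right] \qquad (4.1.1)$$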

There is a close correspondence between values of actions and values of states, in that the latter equals a weighted sum of the former:

$$V(s) = \sum_{a} \pi(a \mid s)\, Q(a,s) \qquad (4.1.2)$$

where π(a|s) is the agent’s policy, that is, the probability it will take action a in state s. Consequently, the relative effectiveness of an action in obtaining reward can be represented as the advantage (A) it confers above the value of the state in which it was taken:

$$A(a,s) = Q(a,s) - V(s) \qquad (4.1.3)$$

A rational agent should always strive to eliminate advantage, since negative advantage (i.e., A < 0) means that the evaluated action is disadvantageous and should thus be excluded from the policy, whereas positive advantage (i.e., A > 0) means that alternative disadvantageous actions are unnecessarily included in the policy. Indeed, eliminating advantage is a useful learning algorithm in various machine learning applications (Baird, 1994), and is consistent with how humans often adjust their behavior when learning by trial and error (Bennett, Niv, et al., 2021; Palminteri et al., 2015; Solomyak et al., 2022).
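One way to see advantage elimination at work is a softmax policy whose action preferences are nudged by π(a|s)·A(a,s), which is the exact gradient of V(s) for a softmax policy. This standard policy-gradient rule is our illustrative choice and not necessarily the rule used in the cited studies; the action values and step size are hypothetical. As the policy concentrates on the best action, the advantage of that action shrinks toward zero, while the disadvantageous actions, whose advantage stays negative, drop out of the policy.

```python
import math

def softmax(prefs):
    mx = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - mx) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def advantages(q, policy):
    """A(a, s) = Q(a, s) - V(s), with V(s) = sum_a pi(a|s) Q(a, s) (Eqs. 4.1.2-4.1.3)."""
    v = sum(p * qa for p, qa in zip(policy, q))
    return [qa - v for qa in q]

def learn_policy(q, step_size=1.0, n_steps=2000):
    """Expected softmax policy-gradient ascent: theta_a += step * pi(a) * A(a)."""
    prefs = [0.0] * len(q)
    for _ in range(n_steps):
        pi = softmax(prefs)
        adv = advantages(q, pi)
        prefs = [th + step_size * p * a for th, p, a in zip(prefs, pi, adv)]
    return softmax(prefs)

q = [1.0, 0.5, 0.0]  # hypothetical action values in a single state
pi = learn_policy(q)
print([round(p, 3) for p in pi])  # probability mass concentrates on the best action
print(advantages(q, pi)[0])       # advantage of the best action approaches 0
```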

Advantage is typically estimated based on a reward, $r_t$, that was received following action $a_t$:

$$\hat{A}(a_t, s_t) = r_t + \gamma\, \hat{V}_t(s_{t+1}) - \hat{V}_t(s_t) \qquad (4.1.4)$$

In this, advantage closely resembles a reward prediction error (compare with Eq. 3.1.2). However, meaningful differences between these two quantities can be observed once we re-express advantage as a function of the values of chosen ($a_t$) and alternative ($\tilde{a}$) actions:

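$$A(a_t, s_t) = Q(a_t, s_t) - \sum_{a} \pi(a \mid s_t)\, Q(a, s_t) = \sum_{\tilde{a} \neq a_t} \pi(\tilde{a} \mid s_t) \left[ Q(a_t, s_t) - Q(\tilde{a}, s_t) \right] \qquad (4.1.5)$$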

This formulation exposes that advantage concerns the relative difference between the values of chosen and unchosen actions. Consequently, computations of advantage and reward prediction error differ substantially in cases where the agent finds out that an action it did not choose is either more or less valuable than it previously estimated.
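The re-expression can be checked numerically. The sketch assumes that advantage in terms of chosen and alternative actions takes the policy-weighted pairwise form Σ over ã ≠ aₜ of π(ã|s)·[Q(aₜ,s) − Q(ã,s)], which follows algebraically from Eqs. 4.1.2 and 4.1.3 because the term for the chosen action itself cancels. The values and policy below are hypothetical. Raising the value of an unchosen action lowers the chosen action's advantage even though the chosen action's own value, and hence its prediction error, is unchanged.

```python
def advantage_direct(q, policy, chosen):
    v = sum(p * qa for p, qa in zip(policy, q))  # V(s) as in Eq. 4.1.2
    return q[chosen] - v                         # advantage as in Eq. 4.1.3

def advantage_pairwise(q, policy, chosen):
    # Policy-weighted differences between the chosen action and each alternative.
    return sum(policy[alt] * (q[chosen] - q[alt])
               for alt in range(len(q)) if alt != chosen)

q = [2.0, 5.0, 1.0]           # hypothetical action values
policy = [0.5, 0.25, 0.25]    # hypothetical policy pi(a|s)
print(advantage_direct(q, policy, chosen=0), advantage_pairwise(q, policy, chosen=0))  # -0.5 -0.5

q_alt_better = [2.0, 9.0, 1.0]  # only an unchosen action improved
print(advantage_pairwise(q_alt_better, policy, chosen=0))  # -1.5: a missed opportunity
```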

As an illustration, imagine you are standing in line to buy tickets for the theatre. You choose one of two alternative queues (queue I) and then find out that the other queue (queue II) is moving much faster than you expected. In this case, the advantage you would compute for your choice of queue is negative, appropriately signaling a missed opportunity (Bennett, Davidson, et al., 2021; Coricelli et al., 2005; Loomes & Sugden, 1982), but you would experience no prediction error with respect to this queue since it is moving as expected. Moreover, you will in fact experience a positive prediction error with respect to the state ‘about to choose a queue’, regardless of whether it is your queue or the alternative queue that is faster than expected. Conversely, if both queues suddenly move faster than expected, advantage remains at zero, and the improvement in your situation will be captured as a positive reward prediction error with respect to your current state’s value. Thus, although reward prediction errors and advantage are often correlated, they diverge in that the former generally signals changes in expected reward, whereas the latter signals differences in expected reward between chosen and alternative actions.
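The queue example can be written out numerically. As illustrative assumptions of our own, each queue's value is the negative expected waiting time in minutes, the policy picks either queue with probability 0.5, and each prediction error is summarized as the change in the relevant value estimate once new information arrives. The function names are hypothetical.

```python
def state_value(q, policy):
    """Value of the state 'about to choose a queue' (Eq. 4.1.2)."""
    return sum(p * qa for p, qa in zip(policy, q))

def appraise(q_before, q_after, policy, chosen):
    """Contrast advantage of the chosen queue with value changes after new information."""
    v_before = state_value(q_before, policy)
    v_after = state_value(q_after, policy)
    return {
        "advantage_of_choice": q_after[chosen] - v_after,
        "pe_chosen_queue": q_after[chosen] - q_before[chosen],
        "pe_choice_state": v_after - v_before,
    }

policy = [0.5, 0.5]
q_before = [-10.0, -10.0]  # both queues expected to take 10 minutes
# Only the other queue (II) turns out faster: negative advantage, no error for queue I.
print(appraise(q_before, [-10.0, -4.0], policy, chosen=0))
# Both queues turn out faster: zero advantage, positive prediction errors.
print(appraise(q_before, [-4.0, -4.0], policy, chosen=0))
```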

4.2. Emotion as relative action effectiveness

It is widely recognized that emotions are involved in how we evaluate and adjust our actions. Negative emotions, in particular, are thought to signal that we are not doing as well as we could be doing, and should therefore change our course of action (Kurzban et al., 2013). Such policy adjustment makes sense whenever we find that an alternative course of action may work better, which is to say, whenever the effectiveness of our current course of action is lower than that of alternative courses of action. In such cases, the resulting emotional state facilitates a search for alternative courses of action (Goldberg et al., 1999), thereby helping us adjust our policy to avoid further loss.

The measure of advantage precisely captures the comparison between chosen and alternative actions, and thus, it has recently been suggested to be represented by emotional state (Bennett, Davidson, et al., 2021). This suggestion can explain not only the general involvement of emotion in how we evaluate and adjust our actions, but also more subtle empirical observations. First, it inherently explains why we feel bad when an action we could have taken, but didn’t, is found to have better outcomes than expected (Coricelli et al., 2005; Loomes & Sugden, 1982). In addition, it explains why we typically have stronger emotional responses to outcomes of actions that we don’t normally take (Kahneman & Miller, 1986; Kutscher & Feldman, 2019). This is because outcomes of more typical actions similarly impact both sides of the advantage equation (i.e., both Q(a,s) and V(s), since the latter is an average of the value of typical actions) and thus partially cancel out.

There are at least two factors, however, that moderate the usefulness of an estimated advantage as a measure of action effectiveness. The first is that the observed outcome based on which advantage is estimated may only be probabilistically determined by the action. For this reason, advantage-based updates of policies are usually moderated by a learning rate between 0 and 1, which reflects the degree to which observed outcomes are thought to be controlled through action. This learning rate can thus be thought of as an index of estimated environmental controllability (Ligneul et al., 2022).

The second potential moderating factor is self-control, that is, the degree to which the agent can control its own action. Self-control could be low for actions that we are innately predisposed to take or avoid, that have become habitual, or that require skill, planning, or training (e.g., telling a joke effectively, or shooting a basketball into the basket). To formalize self-control, we assume that rather than being controlled directly, the agent changes its policy by adjusting a set of control parameters $\theta_{a|s}$, one for each action, such that the probability of taking an action is proportional to . Here, $\beta_{a|s}$ represents how difficult it is to acquire or eliminate action a. This formulation ensures that while the control parameters are uniformly controllable, the actions they control are not. The controllability of action a in state s is thus denoted as:

| (4.2.1) |

Combining environmental control and self-control into a measure of overall controllability, , allows expressing policy adjustment as (Williams, 1992)²:

| (4.2.2) |

Compared to estimated advantage alone, the product of controllability and estimated advantage offers a more accurate representation of the gains and losses that are attributable to our choices.

4.3. Emotional state as cumulative action effectiveness

The accumulation of multiple gains and losses that are attributable to our choices is thought to be represented by an enduring emotional state (Bennett, Davidson, et al., 2021). To also account for controllability, here we propose the following formulation of this accumulation:

| (4.3.1) |

where . As in the case of value changes, if modelled with λ = 0, the computed emotional state only reflects the last action, but the higher λ is, the more it will reflect actions taken longer ago.

Like other emotional states, enduring emotional states representing action effectiveness also have the consequence of generalizing the information that evoked them. Thus, negative emotional states evoked by poor outcomes of actions make subsequent outcomes seem worse and more attributable to actions (Ask & Pina, 2011; DeSteno et al., 2000; Lerner & Tiedens, 2006; Quigley & Tedeschi, 1996; Tetlock, 2002). Analogously to Eq. 3.4.1, this impact of emotional state may be formulated as:

| (4.3.2) |

Why is such generalization beneficial? In a noisy environment, each individual observation carries relatively little information. Averaging over multiple recent outcomes accumulates information from multiple observations, and thus offers a more informative measure of how well the current policy is doing. It is precisely this rationale that underlies the widespread use of ‘momentum’ in machine learning algorithms (Rumelhart et al., 1985). Indeed, simulations have shown that informing policy updates by a cumulative measure of advantage accelerates learning in various artificial settings (Bennett, Davidson, et al., 2021).
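The noise-reduction argument can be illustrated with an exponential moving average of noisy advantage samples. This is a sketch under assumptions of our own: the true advantage of the current policy is fixed at a hypothetical −0.2, each observed outcome adds unit-variance Gaussian noise, and the average is normalized by (1 − λ) so that its mean is comparable to a single sample. For independent noise, the averaged signal keeps the same mean while its variance shrinks by roughly a factor of (1 − λ)/(1 + λ), which is about 0.05 for λ = 0.9.

```python
import random
import statistics

def ema(samples, lam):
    """Normalized exponential moving average: c_t = lam * c_{t-1} + (1 - lam) * x_t."""
    c, out = 0.0, []
    for x in samples:
        c = lam * c + (1.0 - lam) * x
        out.append(c)
    return out

rng = random.Random(0)
true_advantage = -0.2  # hypothetical: the policy is doing slightly worse than it could
noisy = [true_advantage + rng.gauss(0.0, 1.0) for _ in range(20000)]
smoothed = ema(noisy, lam=0.9)[100:]  # drop the start-up transient
print(statistics.pvariance(noisy[100:]), statistics.pvariance(smoothed))
print(statistics.fmean(smoothed))     # close to -0.2
```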

To draw the parallel to real world problems, consider that an enduring negative emotional state due to recurrent underperformance may signal that a person’s overall approach is suboptimal, indicating they should more critically examine their subsequent actions. For example, a student may experience a series of failures in achieving their goals such as failing an exam, not being able to focus during class, and making repeated errors in their assignments. Together, these may indicate that the student has adopted inefficient working habits. By negatively biasing the processing of subsequent outcomes, a negative emotional state may help the student identify and modify additional cases of poor performance more quickly.

4.4. Emotions about other people’s actions

Other people’s actions can evoke emotions that are as strong and meaningful as the emotions we have about our own actions (FeldmanHall & Chang, 2018). We propose that such emotions can also be understood as representations of action effectiveness that serve to adjust policy, except that in this case the policy being adjusted is someone else’s. Indeed, it is well known that emotions are often expressed in order to influence other people’s behavior (Jones et al., 2011; Parkinson, 1996). For instance, when someone acts in a way that harms us, we may express a negative emotion with the hope that the person will change their ways. Moreover, there is evidence that such emotions represent not only (dis)advantage (to us, due to someone else’s actions) but also controllability. Thus, emotional responses are stronger to the outcomes of peers’ actions that are perceived as intentional, and therefore as more amenable to control (De Quervain et al., 2004). Similarly, when people aim to change a peer’s behavior, and believe that to be achievable, they tend to experience more negative emotions towards the peer (Lemay Jr et al., 2012).

5. Computation of uncertain prospects

The classes of computations we considered so far identify changes in expected reward that are indicated by evident outcomes. An additional computation we need to consider concerns outcomes that may or may not happen in the future. Indeed, dangers and opportunities have long been thought to be the subject of emotions. Computational work has suggested that our emotions about such uncertain prospects may represent the width and skewness of the distribution of possible outcomes (Hagen, 1991; Wu et al., 2011), and more generally, our uncertainty about these outcomes (A. Peters et al., 2017). In reinforcement learning, computations about uncertain prospects can be captured in two broad ways, either by associating a given state or action with a distribution of rewards (‘distributional RL’; Dabney et al., 2018), or by associating a given action in a given state with a distribution of succeeding states, each of which is associated with a scalar reward (‘model-based RL’; Doll et al., 2012). Here we use the latter formalism because it offers a way to represent the effects of our actions on specific prospective outcomes, which often serve as the objects of our emotions.

5.1. Prospecting for reward

A prospective reinforcement-learning agent computes the value of a present state $s_t$ based on the values of the future states $s_{t+1}$ that are likely to follow it, given the different actions it may choose:

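$$V(s_t) = \mathbb{E}\left[r_t \mid s_t\right] + \sum_{a} \pi_t(a \mid s_t) \sum_{s} p(s_{t+1} = s \mid s_t, a)\, V(s) \tag{5.1.1}$$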

Here, the left term represents the expected immediate reward, and the right term represents the prospective reward, computed as a weighted average over possible future actions (a) and states (s). To perform this computation, the agent requires a model of the world that specifies which future states are likely given different actions (i.e., $p(s_{t+1} \mid s_t, a_t)$). For this reason, prospective reinforcement learning is often referred to as ‘model-based’ (Daw et al., 2011; Daw & Dayan, 2014; Doll et al., 2015; Gläscher et al., 2010). Notably, the values of future states can themselves be expanded as a function of the states that follow them, and so can the values of those states, and so on ad infinitum.
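The recursive expansion can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the states, rewards, policy, and transition probabilities in the hypothetical `REWARD`, `POLICY`, and `MODEL` tables are invented for the example, and the model is assumed to be acyclic so that the expansion of future values terminates without a discount factor.

```python
# Hypothetical toy world model; all numbers are illustrative assumptions.
REWARD = {"plan": 0.0, "exercise": -1.0, "healthy": 5.0, "sedentary": 0.0}

# POLICY[s][a] = pi_t(a | s): the agent's current action-selection policy.
POLICY = {
    "plan": {"go": 0.5, "skip": 0.5},
    "exercise": {"continue": 1.0},
}

# MODEL[s][a][s_next] = p(s_{t+1} = s_next | s_t = s, a_t = a): the world model.
MODEL = {
    "plan": {"go": {"exercise": 1.0}, "skip": {"sedentary": 1.0}},
    "exercise": {"continue": {"healthy": 0.8, "sedentary": 0.2}},
}


def value(state: str) -> float:
    """Immediate reward plus the policy- and model-weighted average of
    successor values, expanded recursively. States with no entry in POLICY
    are treated as terminal, which requires the model to be acyclic."""
    prospective = 0.0
    for action, p_action in POLICY.get(state, {}).items():
        for nxt, p_next in MODEL[state][action].items():
            prospective += p_action * p_next * value(nxt)
    return REWARD[state] + prospective


print(value("exercise"))  # -1 + 0.8 * 5 + 0.2 * 0 -> 3.0
print(value("plan"))      # 0.5 * 3.0 + 0.5 * 0.0 -> 1.5
```

Note that `value("exercise")` is derived entirely from the model: the agent never has to visit that state to assign it a value.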

A major benefit of performing such prospective computation is that it allows us to compute values for states that we have never experienced. For example, we can infer a positive value for exercising regularly before ever having done so, based on a model of the world that specifies that regular exercise improves health. A related benefit is that this form of computation adjusts quickly and efficiently to changes in the environment, because new information regarding the probability of a future state s instantly changes the value estimated for any state that s might follow. Prospection thus rids us of the need to experience these states anew to learn that their values have changed. For example, upon learning that a highly infectious virus is spreading in our city, prospection allows us to decrease our estimated values for attending any type of crowded social event.
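The revaluation property can be illustrated with a similar sketch, assuming hypothetical rewards and transition probabilities. Here the policy is folded into the transition table for brevity, and two states (`concert`, `stadium`) share a successor (`crowd`); revising a single probability in the model changes the value of both without either being experienced anew.

```python
# Hypothetical rewards; the current policy is folded into MODEL, so each entry
# gives p(next state | state) under the actions the agent would take.
REWARD = {"concert": 2.0, "stadium": 1.5, "crowd": 0.0, "infected": -10.0, "fine": 0.0}
MODEL = {
    "concert": {"crowd": 1.0},
    "stadium": {"crowd": 1.0},
    "crowd": {"infected": 0.01, "fine": 0.99},
}


def value(state: str) -> float:
    """Prospective value: immediate reward plus model-weighted successor values."""
    return REWARD[state] + sum(p * value(nxt) for nxt, p in MODEL.get(state, {}).items())


before = {s: value(s) for s in ("concert", "stadium")}

# News that a virus is spreading revises a single entry of the model...
MODEL["crowd"] = {"infected": 0.3, "fine": 0.7}

# ...and every state that can lead to a crowd is revalued at once.
after = {s: value(s) for s in ("concert", "stadium")}
print(before)  # approximately {'concert': 1.9, 'stadium': 1.4}
print(after)   # approximately {'concert': -1.0, 'stadium': -1.5}
```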

5.2. Emotion as danger and opportunity

Using the formalism of prospective reinforcement learning, we devise a representation of danger and opportunity that is sensitive to the computations implicated by previous work, namely, the width and skewness of the distribution of possible outcomes. We first consider skewness.

Positive and negative skewness are associated with positive and negative anticipatory emotions, respectively (Hagen, 1991; Wu et al., 2011). In other words, emotion is positive when there are prospects that are much better than the expected outcome, and negative when there are prospects that are much worse. We can represent how the value of an individual prospect differs from expectations as:

$$G_t(s, \Delta t) = V(s) - \mathbb{E}\left[V(s_{t+\Delta t}) \mid s_t, \pi_t\right] \tag{5.2.1}$$

where V(s) is the value of a prospective state s that may materialize Δt time steps after the present time t, and $\mathbb{E}[V(s_{t+\Delta t}) \mid s_t, \pi_t]$ is the expected value for that future time, averaged over the possible future states the agent may occupy given its current state $s_t$ and its action-selection policy $\pi_t$. G tells us whether it would be a good thing if the prospect materialized: if a prospect with G > 0 materializes, things turned out better than expected, whereas if a prospect with G < 0 materializes, things turned out worse than expected.
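As a small worked example of this deviation, the sketch below computes G for three prospects at a single future time step. The prospect names, values, and probabilities are hypothetical and chosen only for illustration.

```python
def deviations(values: dict, probs: dict) -> dict:
    """G for each prospect: its value minus the expected value at that future
    step, i.e. the probability-weighted mean of V over possible states."""
    expected = sum(probs[s] * values[s] for s in values)
    return {s: values[s] - expected for s in values}


# Hypothetical prospects at some future step t + dt under the current policy.
values = {"promotion": 10.0, "status_quo": 0.0, "layoff": -5.0}
probs = {"promotion": 0.2, "status_quo": 0.5, "layoff": 0.3}

G = deviations(values, probs)
print(G)  # promotion about +9.5, status_quo about -0.5, layoff about -5.5
```

By construction the probability-weighted deviations sum to zero, so G by itself says nothing about how likely each prospect is.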

G, however, carries no information about the probability that the prospect will materialize. It is therefore sensitive to any prospect, no matter how imaginary or irrelevant it might be. This problem can be solved by modulating G by the probability of the prospect, $p(s_{t+\Delta t} = s \mid s_t, \pi_t)$. The question here is whether this probability should be counted as such, or whether what primarily drives emotions are prospects whose probability is potentially controllable. From a rational perspective, there is no use in devoting mental resources to a prospect we cannot control. Anecdotal evidence seems to support this perspective. For example, people who report being less concerned with death attribute this to their understanding that death is inevitable or not under their control (Feifel, 1974). Additionally, accepting that one cannot control a negative prospect is a common coping strategy that serves to mitigate negative emotions (Jackson et al., 2012; McCracken & Keogh, 2009; Zettler et al., 1995). And in the positive domain, availability of desirable prospects increases positive anticipatory emotions (Ghoniem et al., 2020).

Thus, we propose that emotions may only be affected by probabilities of prospects that, in the person’s estimation, are potentially controllable. In the case of a positive prospect, what we should care about is whether there is a course of action, a, by which we may be able to increase the prospect’s probability, whereas with regard to a negative prospect, we care about whether we can do something (that we are not already planning to do, as specified by our present policy $\pi_t$) to avoid it:

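$$C_t(s, \Delta t) = \begin{cases} \max_{a}\, p(s_{t+\Delta t} = s \mid s_t, a) & \text{if } G_t(s, \Delta t) > 0 \\ p(s_{t+\Delta t} = s \mid s_t, \pi_t) - \min_{a}\, p(s_{t+\Delta t} = s \mid s_t, a) & \text{if } G_t(s, \Delta t) < 0 \end{cases} \tag{5.2.2}$$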
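One way to operationalize this potential to act is sketched below. It is a hypothetical reading of the verbal definition, not a definitive one: for a positive prospect it takes the highest probability that any available course of action could achieve, and for a negative prospect the probability mass that some action outside the current policy could remove. The function name, its arguments, and the example numbers are illustrative.

```python
def controllability(g: float, p_by_action: dict, p_policy: float) -> float:
    """Hypothetical potential to do something about a prospect s.

    g           -- the prospect's deviation G from the expected value
    p_by_action -- {a: p(s_{t+dt} = s | s_t, a)} for each available course of action
    p_policy    -- p(s_{t+dt} = s | s_t, pi_t) under the current policy
    """
    if g > 0:
        # Positive prospect: the highest probability any course of action achieves.
        return max(p_by_action.values())
    # Negative prospect: probability mass that could be removed by doing
    # something the current policy does not already do.
    return max(0.0, p_policy - min(p_by_action.values()))


# An inevitable negative prospect leaves nothing to act on.
c_inevitable = controllability(-8.0, {"any": 1.0}, p_policy=1.0)
# An avoidable layoff: retraining would lower its probability from 0.3 to 0.1.
c_layoff = controllability(-5.5, {"stay_course": 0.3, "retrain": 0.1}, p_policy=0.3)
# A promotion that networking could make more likely.
c_promotion = controllability(9.5, {"stay_course": 0.2, "network": 0.35}, p_policy=0.2)
print(c_inevitable, round(c_layoff, 2), c_promotion)  # 0.0 0.2 0.35
```

The inevitable case mirrors the death example in the text: when no action changes the probability, the prospect contributes nothing.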

Using these definitions, prospective emotions anticipating individual prospects can be expressed as an upper confidence bound (UCB; Garivier & Moulines, 2011) on the prospective change in value modulated by the potential to do something about it:

$$E_t(s, \Delta t) = \mathrm{UCB}\left[G_t(s, \Delta t)\, C_t(s, \Delta t)\right] \tag{5.2.3}$$

An upper confidence bound on a given computation is the largest probable quantity it might produce given our uncertainty about the estimates involved.
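The upper confidence bound can be approximated by sampling. The sketch below is one possible operationalization under stated assumptions, none of which come from the text: the agent's estimation uncertainty about G is modeled as a Gaussian, C is treated as known, the bound is taken on the magnitude |G|·C with the prospect's sign kept as its valence, and the 95th percentile of the samples stands in for the bound.

```python
import random


def upper_bound(samples: list, q: float = 0.95) -> float:
    """Empirical upper confidence bound: the q-quantile of the samples."""
    ordered = sorted(samples)
    return ordered[min(int(q * len(ordered)), len(ordered) - 1)]


def prospective_emotion(g_mean: float, g_sd: float, c: float,
                        n: int = 10_000, seed: int = 0) -> float:
    """Signed emotion for one prospect: the upper bound on |G| * C, where the
    uncertainty about G is modeled as a Gaussian (an assumption)."""
    rng = random.Random(seed)
    magnitudes = [abs(rng.gauss(g_mean, g_sd)) * c for _ in range(n)]
    valence = 1.0 if g_mean > 0 else -1.0
    return valence * upper_bound(magnitudes)


# Same expected danger and controllability, different estimation uncertainty.
narrow = prospective_emotion(g_mean=-4.0, g_sd=0.5, c=0.6)
wide = prospective_emotion(g_mean=-4.0, g_sd=2.0, c=0.6)
print(round(narrow, 2), round(wide, 2))  # the wider estimate yields the stronger fear
```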

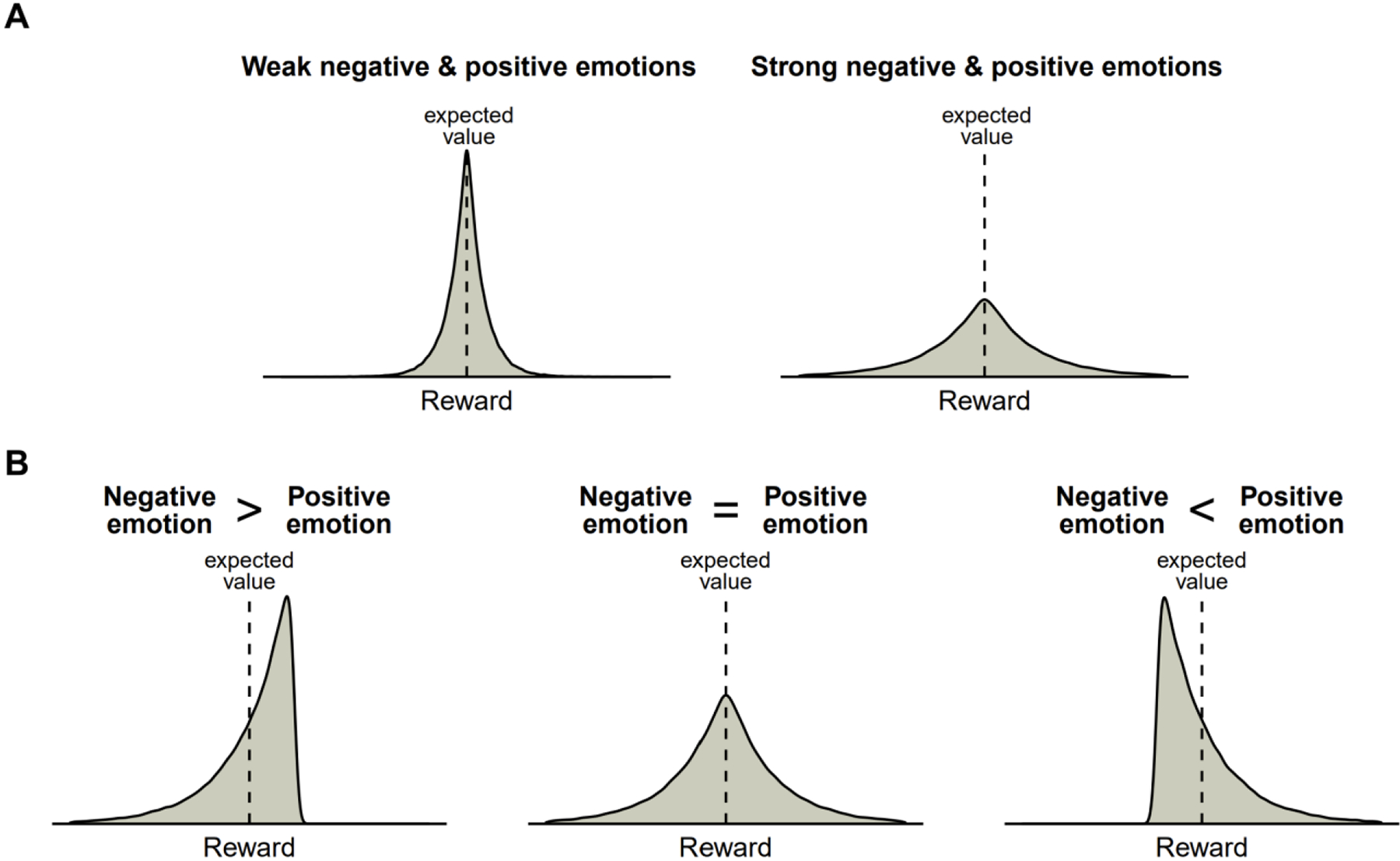

This computation satisfies all properties suggested by prior work (Figure 1). First, the upper confidence bound is inherently higher the more uncertain we are about the values and probabilities of prospective states, and thus the computed emotion will scale with the width of the estimated distribution of outcomes that is attributable to lack of knowledge (i.e., estimation uncertainty). Second, if it is the true distribution of possible outcomes that is wider (i.e., higher irreducible uncertainty), that means that on average probable prospects deviate more from the expected value (i.e., higher absolute G), and thus the computed emotions will also be stronger. Third, if the distribution of possible outcomes is positively skewed, that means there are prospects with greater positive deviations which would lead to stronger positive emotions, whereas if the skewness is negative, that means there are prospects with greater negative deviations leading to stronger negative emotions.

Figure 1. Determinants of positive and negative prospective emotions.

A. If the distribution of possible outcomes is wider, there are more probable prospects with high deviation from the expected value, and thus both positive and negative emotions are stronger. The estimated distribution can be wider either because outcomes are random (irreducible uncertainty) or due to lack of information (estimation uncertainty). B. If the skewness of the distribution is negative, then there are more probable prospects with a high negative deviation from the expected value, and thus negative emotions predominate. Conversely, if skewness is positive, then there are more prospects with a high positive deviation, and thus positive emotions predominate.
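The skewness property in panel B can be checked with two hypothetical outcome distributions that share the same mean and variance but are mirror images of each other. For simplicity, the sketch ignores controllability and estimation uncertainty and compares only the largest positive and negative deviations G.

```python
def summarize(values: list, probs: list) -> tuple:
    """Mean, variance, skewness, and the largest positive/negative deviations G."""
    mean = sum(p * v for v, p in zip(values, probs))
    var = sum(p * (v - mean) ** 2 for v, p in zip(values, probs))
    skew = sum(p * (v - mean) ** 3 for v, p in zip(values, probs)) / var ** 1.5
    g = [v - mean for v in values]
    return mean, var, skew, max(g), min(g)


probs = [0.25, 0.25, 0.25, 0.25]
right_skewed = [-1.0, -1.0, -1.0, 3.0]  # a rare, large gain
left_skewed = [1.0, 1.0, 1.0, -3.0]     # a rare, large loss

for name, values in (("right", right_skewed), ("left", left_skewed)):
    mean, var, skew, best, worst = summarize(values, probs)
    # Both share mean 0 and variance 3; skewness is about +1.155 and -1.155.
    print(name, mean, var, round(skew, 3), best, worst)
```

In the right-skewed case the largest positive deviation (+3) outweighs the largest negative one (-1), favoring positive prospective emotion; the left-skewed case reverses this.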

5.3. Emotional state as cumulative prospection

Prospective emotions do not always concern a single definite prospect (Grupe & Nitschke, 2013; LeDoux & Pine, 2016). To represent prospective emotions in the presence of uncertainty about when and where the prospect will materialize and what precisely it might involve, we can sum over different possible prospects and time delays:

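$$E_t^{+} = \sum_{\Delta t} \sum_{s:\, G_t(s, \Delta t) > 0} E_t(s, \Delta t), \qquad E_t^{-} = \sum_{\Delta t} \sum_{s:\, G_t(s, \Delta t) < 0} E_t(s, \Delta t) \tag{5.3.1}$$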

This sum represents prospective emotional states that extend in time and are not specific to a single prospect.
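A minimal sketch of this cumulative sum, assuming per-prospect emotions have already been computed for several prospects and delays (the entries of `prospect_emotions` are hypothetical). Positive and negative contributions are kept in separate sums so that an opportunity does not cancel out a danger; that separation is an assumption of the sketch.

```python
# Hypothetical per-prospect emotions E(s, dt), keyed by (prospect, delay in steps).
prospect_emotions = {
    ("promotion", 1): 0.8,
    ("promotion", 2): 0.5,
    ("layoff", 1): -1.2,
    ("layoff", 3): -0.4,
    ("illness", 2): -0.3,
}


def cumulative_state(emotions: dict) -> dict:
    """Sum per-prospect emotions over prospects and delays, keeping positive
    (desire/hope-like) and negative (fear/anxiety-like) contributions apart."""
    totals = {"positive": 0.0, "negative": 0.0}
    for emotion in emotions.values():
        if emotion > 0:
            totals["positive"] += emotion
        elif emotion < 0:
            totals["negative"] += emotion
    return totals


print(cumulative_state(prospect_emotions))  # approximately positive 1.3, negative -1.9
```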

As with the other types of emotional states, empirical research suggests that prospective emotional states also serve to generalize, in this case from old to new prospects. Thus, having been evoked by a recognized danger, a negative prospective emotional state increases our tendency to recognize and respond to additional dangers (Abend et al., 2020; Bar-Haim et al., 2007; Mogg & Bradley, 1998; Pitman et al., 1993; Rosen & Schulkin, 1998). Such enhanced threat detection may be mediated, for instance, by increased attention to threat-related words (MacLeod et al., 1986) or images (Öhman et al., 2001). A prototypical example of increased threat detection is offered by the enhanced startle responses that people and animals exhibit under threat (Davis et al., 1993). In a dangerous environment where taking risks is ill-advised (e.g., a jungle with predators and poisonous animals), this effect may be lifesaving. Drawing the parallel in the domain of positive prospects, we can conceptualize a positive prospective emotional state as a motivational state in which a person is primed to recognize and pursue opportunities (Luthans & Jensen, 2002).

6. Mapping computations onto emotions

6.1. Three classes of computations

We identified three classes of computations that emotions may represent: computations of changes in expected reward, of the effectiveness of potentially controllable actions, and of potentially controllable dangers and opportunities. As we have seen, all three are useful, in ways that complement each other, to an agent striving to maximize reward. Tracking expected reward is necessary for performing the two other computations, and can help arbitrate between action and inaction (Niv et al., 2006) as well as between exploration and exploitation (Le Heron et al., 2020; McClure et al., 2005). Evaluating the relative effectiveness of actions based on experience helps improve one’s policies even in the absence of a world model that enables prospection, whereas prospection enables estimating expected reward and the effectiveness of actions in the absence of direct experience. Indeed, the cognitive science literature strongly suggests that humans utilize all three of these computations.

Seen as appraisals, one might be tempted to assume a many-to-many mapping between the computations we described and emotions (Scherer, 2009a). However, these computations lie one step above the primary appraisals often invoked in theories of emotions. For example, the computation of reward lost or gained due to potentially controllable actions incorporates multiple appraisal dimensions such as pleasantness, goal relevance, agency, and controllability. This raises another, more parsimonious possibility, that of a one-to-one mapping between computations and emotions.

6.2. Three classes of emotions

Our proposal here (Figure 2) leans on Nesse and Ellsworth’s (2009) evolutionary analysis of the roles of different emotions, as well as on an extensive psychological literature examining the antecedents and consequences of different emotions (Averill, 1968; Carver, 2004; Carver & Harmon-Jones, 2009; Cunningham, 1988; Frijda, 1986, 1987; Harmon-Jones & Sigelman, 2001; Keltner et al., 1993; Kreibig, 2010; Lench et al., 2011, 2016; Levine, 1996; Levine & Pizarro, 2004; Nesse & Ellsworth, 2009; Oatley et al., 2006; Oatley & Duncan, 1994; Roseman, 1996). Closely resembling our three classes of computations, this literature identifies two classes of emotions that are evoked by evident outcomes, and one that is evoked in anticipation of prospective outcomes.

Figure 2.

Summary of computations we propose are represented by emotional states.

The first two classes involve, on the one hand, emotions such as happiness and sadness, and on the other hand, emotions such as frustration, anger, and content. Both sets of emotions are evoked by evident outcomes, but they differ in whether those outcomes are potentially controllable. Happiness and sadness are evoked by outcomes that are not necessarily attributable to anyone’s actions, so there may not be anything one can, or needs to, do about them. The appropriate response is to adjust one’s expectations, upwards (happiness) or downwards (sadness), and carry on. Happiness and sadness are thus well suited to represent positive and negative changes in value.

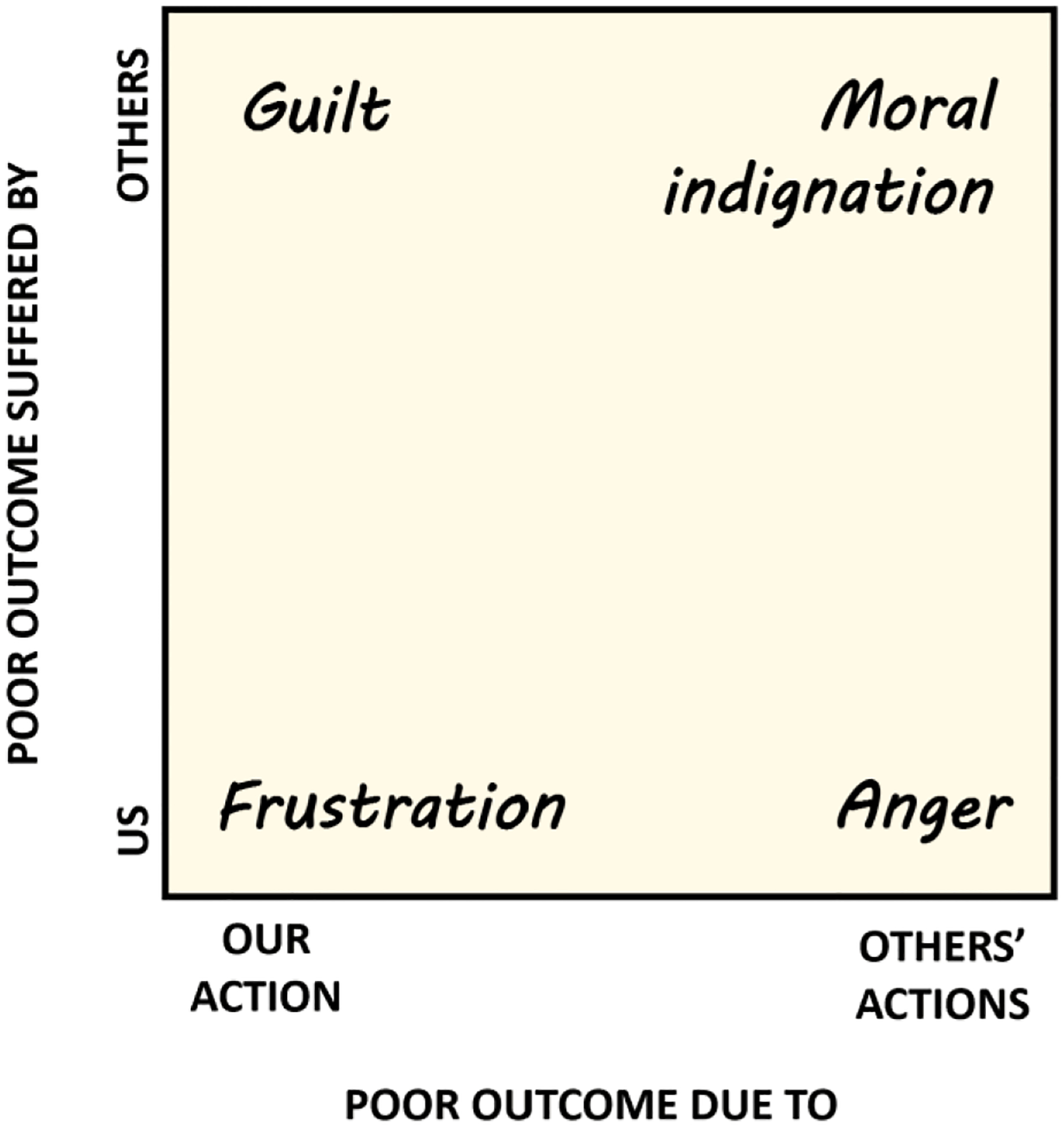

By contrast, emotions such as frustration, anger, and content occur when outcomes warrant positive or negative evaluations of actions. Correspondingly, these emotions are associated with behavioral changes that can be understood as adjustments of one’s policy in favor of advantageous actions and away from disadvantageous ones. This class of emotions is the most social and diverse of the three, for two reasons: (i) we evaluate and influence not only our own actions but also other people’s, and (ii) our actions impact not only ourselves but also others. In many languages, different combinations of responsible and affected agent are labeled as distinct emotions (Figure 3). Thus, when we suffer because of our own mistakes, we get ‘frustrated’ (Dollard et al., 1939), whereas if someone else is at fault, we get ‘angry’ (Averill, 1983; Berkowitz, 1999; Ortony et al., 1990). If we harmed someone else, we feel ‘guilt’ (Chang & Smith, 2015; Leith & Baumeister, 1998; Tangney & Fischer, 1995), whereas if someone else harmed another person, we feel ‘moral indignation’. What is common to all these scenarios is that we estimate that things are not going as well as they could because of our own or others’ actions. The emotions that represent this judgment serve to change the responsible party’s policy, either directly or through a social expression of the emotion.

Figure 3. Action-evaluation emotions.

Different emotion labels are used for different combinations of culpable agent (‘poor outcome due to’) and the agent suffering as a result (‘poor outcome suffered by’). These labels are paralleled by corresponding labels in the domain of positive outcomes (e.g., ‘anger’ corresponds to ‘gratitude’, and ‘frustration’ corresponds to ‘content’).

Finally, the quintessential prospective emotions are fear and desire (T. Brown, 1846; Nesse & Ellsworth, 2009). These emotions are evoked by negative and positive prospects, respectively, and both motivate planning to avoid or realize the emotion-inducing prospect. Each also has a polar opposite: the lack of desirable prospects is often referred to as apathy, and the lack of fearful prospects as calm. Of all emotions, fear has probably been the most extensively studied in relation to learning, with decades of research devoted to what was once called “fear conditioning” and is now more commonly referred to as “threat conditioning”. This change in terminology has to do with the predominant use of animals in such research, and the inability to question animals about their emotions (LeDoux, 2014). Perhaps as a result, research on fear conditioning in humans has also neglected the emotional aspect of this much-studied phenomenon. Our computational formulation of fear as a representation of potentially controllable danger, and the quantitative predictions such a formulation can generate, may facilitate a reconsideration of the role of fear in threat conditioning in humans and animals.

6.3. Three classes of moods

Though general theories on the subject of moods are lacking, many researchers implicitly or explicitly assume that there are different types of moods (Harmatz et al., 2000; Jansson & Nordgaard, 2016; McGowan et al., 2020; Rottenberg, 2005; Truax & Selthon, 2003; Tsanas et al., 2016). Moreover, the classifications of moods in the literature often parallel classifications of emotions. For example, extended diffuse (i.e., non-specific) sadness is often referred to as a sad or depressed mood, extended diffuse anger is referred to as an angry or irritable mood, and extended diffuse fear is referred to as an anxious mood. This correspondence between emotions and moods, as well as the distinction between them in timescale and specificity, is well captured by assuming that moods are constructed through temporal integration of momentary emotions. As we have seen, this perspective can be implemented for each of the computations we identified through a cumulative sum. These sums provide running averages of recent changes in expected reward, of the recent success or failure of our own and others’ actions, and of recently encountered dangers and opportunities.

Defined as such cumulative computations, moods accelerate learning through generalization, that is, by biasing the agent in favor of computations that are similar in type and valence to those the present mood represents. This is helpful for the simple reason that whenever similar changes, evaluations, or prospects are frequently encountered, it makes sense to regard them as a priori more likely. It is important to note, however, that the benefit of emotional states as prior expectations concerning the present environment extends beyond the domain of learning. Specifically, such prior expectations could explain the patterns of automatic physiological responses and action tendencies associated with emotional states (Scherer, 2009b). As an example, consider how increased arousal and quick reactivity to potential threats, both features of an anxious mood, can be helpful in the dangerous environments that an anxious mood signals.

A key open question about moods is where to draw the line between them and emotions, and whether a line should be drawn at all. Convention has it that emotions last moments to minutes, whereas moods last hours to weeks. We are not aware, however, of empirical evidence that justifies such a categorical distinction. Indeed, our formulations in this paper allow a continuum of possibilities for modeling emotional states, from a momentary emotion to a lasting mood. Future work could investigate whether emotional states temporally integrate computations on only a few fixed timescales, or on a whole continuum of timescales.
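As an illustration of temporal integration at different timescales, the sketch below uses an exponentially weighted running average, one common way to implement a leaky cumulative sum; the integration rates `alpha` and the input sequence are hypothetical. A fast rate yields a brief, emotion-like response, whereas a slow rate yields a weaker but more persistent, mood-like state, and any rate in between is equally expressible.

```python
def integrate(momentary: list, alpha: float) -> list:
    """Exponentially weighted running average of momentary emotion signals.
    alpha sets the timescale (roughly 1 / alpha steps); its value is assumed."""
    mood, trace = 0.0, []
    for x in momentary:
        mood += alpha * (x - mood)
        trace.append(mood)
    return trace


# Hypothetical sequence of value updates: ten negative surprises, then quiet.
updates = [-1.0] * 10 + [0.0] * 10
fast = integrate(updates, alpha=0.5)   # emotion-like timescale
slow = integrate(updates, alpha=0.05)  # mood-like timescale

print(round(fast[9], 3), round(slow[9], 3))    # right after the negative run
print(round(fast[-1], 3), round(slow[-1], 3))  # ten quiet steps later
```

Right after the negative run the fast trace is stronger; ten quiet steps later it has nearly vanished while the slow trace lingers.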

7. Summary and general implications

The emotions we examined in this paper combine to form a set of interdependent computations necessary for an agent to behave adaptively given limited information in an uncertain world. Though we have presented specific algorithms for carrying out each computation (Figure 2), the essential part of our proposal concerns what is being computed, namely, obtained reward (pleasure and pain), changes in expected reward (happiness/sadness), the relative effectiveness of actions in obtaining reward (content/anger), potential changes in expected reward the agent could plan for (fear/desire), and global changes in the prior probability of each of these computations (the different types of mood).

This proposed framework generates a plethora of quantitative predictions that are amenable to testing in and outside the lab. There are two prerequisites for testing these hypotheses in human subjects. First, rather than querying subjects about one or two dimensions of their emotional states, as done so far in computationally inspired investigations of emotion (Rutledge et al., 2014; Eldar & Niv, 2015; Villano et al., 2020), finer-grained information needs to be collected about different types of emotions and moods. Second, the experimental design needs to be powerful enough to allow independently manipulating changes in expected reward, action evaluations, and prospective computations.

Recent progress has also charted a way towards studying emotions in animals (Anderson & Adolphs, 2014), a critical endeavor if we are to understand emotional function and dysfunction at the neural level. In the absence of subjective reports, it is proposed that emotional states in animals are operationalized via a set of objective canonical characteristics, which include scalability, valence, persistence, and generalization. The computations we outlined here fit exceptionally well with this approach since they possess all these characteristics. Future studies of emotions in animals could thus make use of the computations we proposed to design manipulations that would evoke specific emotions, as well as to generate quantitative hypotheses about the downstream effects of these manipulations on learning and behavior. This could help map the neural chain of events that is necessary for each computation to be properly performed.

In this regard, it is important to remember that the algorithmic details that we delineated likely underestimate the complexity and sophistication of the brain mechanisms in place. Thus, for example, it is likely that a change in expected reward will evoke stronger or longer-lasting happiness if there is reason to believe that this change is particularly likely to generalize to other sources of reward. Additionally, our formulation of how action effectiveness is computed directly couples these computations with changes in policy, though in practice decisions about policy could dissociate from these computations. We also left open the question of whether and how people infer specific relevant timescales for computing mood, and whether this computation is equipped to detect discrete, as opposed to gradual, global changes. Thus, substantial additional research is required to investigate the finer details of how these computations are performed and used. Nevertheless, our framework offers a first comprehensive account of the computations mediated by different emotional states, together with a set of biologically plausible and empirically supported algorithms for performing these computations. We next examine several general implications that arise from a consideration of this framework as a whole.

7.1. Disentangling emotions

The task of differentiating between different types of emotion is complicated by the observation that different emotions tend to co-occur. This is especially evident regarding the three major negative emotions: sadness, anger, and fear (or anxiety). All three types of emotion are prevalent, for instance, in depression, in bipolar disorder, and in borderline personality disorder (Goldberg & Fawcett, 2012; Riley et al., 1989; Tsanas et al., 2016). And all three strongly covary in the general population (Diener & Emmons, 1984). Our account provides a natural explanation for this co-occurrence because it posits that all three types of emotion can be evoked by new information that portends a poor outcome. Sadness will be evoked to the extent that the new information lowers expectations of the future. Anger will also be evoked if the poor outcome is attributed to our own or others’ actions. And fear will also be evoked if there is uncertainty about whether the poor outcome will eventually materialize, such that we might be able to avoid it. These three possibilities are mutually compatible and often occur together.

Given the complexity and uncertainty involved in performing these computations, it is also no wonder that we often find ourselves not knowing what to feel. So far, an inability to report one’s emotions (i.e., alexithymia) has been taken as evidence that a person has a problem perceiving or verbalizing their emotions (Taylor & Bagby, 2004), possibly because the concepts they hold for different emotions are not well differentiated (Demiralp et al., 2012). Our account suggests, however, that in many cases the difficulty might not lie in interoceptive perception and categorization but rather in making inferences about the external environment and one’s state in it. For instance, whether we respond with anger or sadness depends on our ability to assign (or not assign) credit for a poor outcome to someone’s actions. If we are uncertain about this, then an undifferentiated negative emotional response may be the best we can do.

On longer timescales, our account suggests that meaningful interactions likely exist between different types of mood. For instance, enduring sadness entails that reward availability is decreasing, which should impact the significance and likelihood of prospective computations responsible for emotions such as fear and desire. At the same time, a prospective emotion such as anxiety might focus attention on negative implications of experienced outcomes and thus bias computations concerning changes in value, potentially tempering happiness and promoting sadness. Indeed, phenomenological studies have exposed complex emotion dynamics with meaningful consequences for mental health (Houben et al., 2015). Future work could consider how these empirically observed dynamics cohere with the computations delineated here.

7.2. Situational, developmental, and innate priors

Another consequence of the uncertainty inherent in emotion-mediated computations is that they are invariably informed by prior expectations. Such prior expectations can be situational, developmental, or innate. Here we proposed that the primary role of moods is to act as situational priors. A sad mood biases computations towards sadness, an irritable mood biases computations towards anger, an anxious mood biases computations towards fear, and so on. These impacts of different moods are rational to the extent that decreases in value portend further decreases in value (sadness; Eldar et al., 2016), suboptimal actions portend further suboptimal actions (anger; Bennett, Davidson, et al., 2021), and looming dangers portend further dangers (fear; Bolles & Fanselow, 1980).

By contrast to temporary moods, lasting developmental and innate priors could underlie stable individual predispositions to experience one or another type of emotion (Akiskal et al., 2005; Davidson & Irwin, 1999; Marteau & Bekker, 1992; Spielberger et al., 1983; Wilkowski & Robinson, 2008). Besides genetic variation, such priors may be shaped by a person’s predominant childhood experiences. For instance, growing up with an abusive parent rationally motivates a prior expectation that one is likely to be harmed by others’ actions, thus leading the child to be quick to respond with anger and aggression (Trickett & Kuczynski, 1986).

A particularly important prior expectation, the impact of which cuts across multiple types of emotion, concerns controllability. Beliefs about controllability may be informed by innate, developmental, and situational factors. For instance, it is well established that experiencing lack of controllability over outcomes can generate “learned helplessness”, which can be understood as a belief that outcomes cannot be controlled by our actions (Lieder et al., 2013; Maier & Seligman, 1976). Our account predicts, and indeed evidence supports, that such a belief will have a substantial impact on one’s temperament, diminishing a tendency to respond actively with anger and enhancing a tendency to respond passively with sadness and resignation (Launius & Lindquist, 1988; Peterson & Seligman, 1983; Sedek & Kofta, 1990).

Within a given situation, the expected relationship between controllability and anger is made more complex when we consider not only how intense anger is expected to be but also how long it is likely to persist. If controllability is very low then there is no point getting angry, but if it is very high, any disadvantageous action that is identified will be quickly eliminated from the policy, and then anger will quickly fade. The maximum amount of anger is thus expected at some medium level of controllability. Based on this rationale, we may also expect abnormal amounts of anger in cases where the estimated level of controllability is higher than the actual level. A similar logic applies to the relationship of controllability with the prospective emotional states of fear, anxiety, desire, and hope.
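The inverted-U argument can be made concrete with a deliberately simple toy model whose assumptions are not part of the account: anger intensity scales with controllability c, and at each opportunity the disadvantageous action is dropped from the policy with probability c. The expected anger still present after k opportunities is then c(1 - c)^k, which peaks at c = 1/(k + 1). The function `residual_anger` and the choice k = 4 are hypothetical.

```python
def residual_anger(c: float, k: int) -> float:
    """Toy model: expected anger still present after k opportunities to act.

    Anger intensity scales with controllability c, and at each opportunity the
    disadvantageous action is dropped from the policy with probability c, so
    anger persists with probability (1 - c) ** k.
    """
    return c * (1 - c) ** k


k = 4
grid = [i / 100 for i in range(1, 100)]  # controllability levels 0.01 .. 0.99
best = max(grid, key=lambda c: residual_anger(c, k))
print(best)  # 0.2, i.e. the analytic optimum 1 / (k + 1)
```

Low controllability keeps anger weak, and high controllability makes it short-lived, so the residual amount is largest in between.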

7.3. Function and malfunction

It is increasingly recognized that psychopathologies of mood and anxiety may be rooted in dysfunctions of computational mechanisms used to obtain reward and avoid punishment (Aylward et al., 2019; Bach, 2021; V. M. Brown et al., 2021; Chiu & Deldin, 2007; Gillan et al., 2020; Halahhakoon et al., 2022; Huys & Browning, 2021; I. Levy & Schiller, 2021; McCabe et al., 2010; Montague et al., 2012; Sharp et al., 2021; Sharp & Eldar, 2019; Szanto et al., 2015; Zorowitz et al., 2021). However, this rapidly evolving literature has so far mostly shied away from defining what constitutes functional and dysfunctional emotional responses. The normative definitions we proposed here fill this crucial gap, and can thus advance existing research towards identification and investigation of psychopathological emotions.

Emotional malfunction may arise within our framework if a computational algorithm does not work properly (e.g., changes in value are inferred when there aren’t any), if a person’s prior assumptions are inappropriate (e.g., controllability is believed to be lower or higher than it is), or relatedly, if the person has adapted to one environment but now resides in a different one wherein past computations are no longer suitable (Montague et al., 2012). Importantly, each of these failures may apply to each of the different factors that impact a given emotion. For instance, excessive anxiety could result from overestimation of potential decreases in value (Eq. 5.2.1) or from misestimation of self-control (Zorowitz et al., 2020; Eq. 5.2.2). Similarly, excessive sadness could result from underestimation of obtained reward (i.e., diminished pleasure; r in Eq. 3.1.2) or from an asymmetry in learning that makes negative value updates predominate over positive value updates (e.g., due to differences in η in Eq. 3.1.3). Ultimately, given the extent to which psychopathological processes have so far resisted elucidation, a multifactorial approach is most likely to bear fruit.

That said, at least one specific pathological mechanism might engender multiple types of emotional disturbances. This mechanism concerns the generalizing impact of mood, which entails, for instance, that sadness which is evoked by recent negative value updates promotes further negative value updates. The critical question here is whether sadness-induced negative value updates make a person even sadder. Normatively, this should not be the case (notice that the computation of mood in Eq. 3.3.2 does not factor in the mood-induced component of value updates). However, if the brain does not dissociate between value updating that is induced by mood and that which is induced by external information, and thus factors both into the computation of mood, then sadness and happiness will recursively self-intensify. Such lack of dissociation may either constitute normal function, given the brain’s implementational constraints, or a specific form of malfunction. Either way, preliminary evidence indicates that self-intensifying moods might be key to explaining individual predispositions to mood disorders (Eldar & Niv, 2015). The possibility that irritable and anxious moods also self-intensify has yet to be thoroughly investigated, but it is easy to see how this mechanism could contribute to mental disorders involving extreme rage or anxiety.
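The recursive mechanism can be illustrated with a toy simulation. Mood is modeled as a leaky cumulative sum of value updates with retention `retain`, and mood biases each subsequent value update by `bias` times the current mood; both parameters and the single initial negative update are hypothetical. When only externally induced updates feed into mood, the initial sadness decays; when mood-induced updates are fed back as well, mood in this toy grows whenever `retain + bias` exceeds 1.

```python
def simulate_mood(steps: int, retain: float = 0.8, bias: float = 0.3,
                  dissociate: bool = True, shock: float = -1.0) -> float:
    """Toy model of a self-intensifying mood (all parameters hypothetical).

    Mood is a leaky cumulative sum of value updates. A single external
    update (shock) arrives at t = 0; afterwards the only value updates are
    those induced by mood itself (bias * mood). If dissociate is True, mood
    integrates external updates only; otherwise mood-induced updates are fed
    back into the mood as well.
    """
    mood = 0.0
    for t in range(steps):
        external = shock if t == 0 else 0.0
        induced = bias * mood  # mood-biased value update at this step
        mood = retain * mood + external + (0.0 if dissociate else induced)
    return mood


normative = simulate_mood(20, dissociate=True)
coupled = simulate_mood(20, dissociate=False)
print(round(normative, 3), round(coupled, 2))  # decays toward 0 vs. keeps growing
```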

8. Brain implementation

Whether distinct emotions are implemented by distinct neural circuits is a matter of ongoing debate (Berridge, 2019; Hamann, 2012). Some meta-analyses found that different emotions are associated with different patterns of brain activity (Vytal & Hamann, 2010), whereas others failed to find such dissociation (Lindquist et al., 2012). Our account of emotions as reflective of distinct types of computation entails that different emotions must be implemented by dissociable neural circuits. However, since each emotion-mediated computation involves multiple cognitive functions (e.g., perception, valuation, prediction, and learning, to name just a few of the processes involved in value learning), the neural circuits implementing these computations are likely spread over large, partially overlapping networks of brain regions. It is unclear whether current macroscopic neuroimaging techniques are best suited to dissociate such overlapping circuits. Indeed, one methodological difference that seems to distinguish the works that do find unique neural signatures for different emotions is that these works admitted signatures that consist of granular partially overlapping multi-voxel patterns of brain activity (Kragel et al., 2016).

Ultimately, our account suggests that the debate concerning the brain basis of emotion may be most effectively resolved by focusing on computational mechanisms as a bridge between emotion and brain function (LeDoux, 2000; Sander et al., 2005). The computational mechanisms we associated here with distinct emotions (the reward function, value learning, policy learning, and prospection) have all been, for some time now, major targets for neuroscientific study. Thus, in combination with our account, existing neuroscience research generates clear hypotheses concerning the likely neural substrates of each type of emotion. We next review several of these hypotheses.

8.1. Liking vs wanting

The functional dissociation that we proposed between pleasure on the one hand, and happiness and content on the other, fits with a familiar dissociation in the neuroscience literature between ‘liking’ and ‘wanting’. A substantial body of empirical work dissociates the pleasure gained from consuming a given reward (i.e., liking) from the motivation to obtain that reward (i.e., wanting; Berridge, 1996; Berridge et al., 2009). The dissociation between these two functions is most evident in pathological conditions such as schizophrenia (where liking can be intact while wanting is suppressed) and addiction (where wanting persists in the absence of liking; Berridge & Robinson, 2016). Indeed, these two functions seem to be implemented by distinct neural mechanisms: liking is thought to be at least partially mediated by opioids, whereas wanting is thought to be partially mediated by dopamine (Berridge, 2007).

In normal function, liking and wanting are dissociated in time, in the sense that one can be observed in the absence of the other (Berridge & Dayan, 2021). Our account explains this dissociation by positing that liking (i.e., pleasure) represents the reward value of present experience, whereas wanting (i.e., policy) relies on information gained from past experience (McClure et al., 2003). It thus makes sense that wanting will follow liking and will persist for some time in its absence. In addition, our account enriches the concepts of liking and wanting by offering a computational role for the inherently affective liking (namely, that of a reward function) and an affective aspect to the computational construct of wanting or, more precisely, to the learning that leads to wanting (namely, that of happiness and content). A neural prediction that follows directly from this perspective is that whereas pleasure is mediated by opioids, happiness and content should be mediated by dopamine. Several studies already offer preliminary empirical support for this prediction (Rutledge et al., 2014, 2015; van Steenbergen et al., 2019).
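A minimal sketch of this temporal relationship, under illustrative assumptions: liking is simply the reward experienced on the current trial, and wanting is a single value learned from past rewards by a delta rule with a hypothetical learning rate `alpha`, standing in for the learned policy. In the example, the reward is devalued to zero after ten trials.

```python
def liking_and_wanting(rewards, alpha=0.2):
    """Track liking (present reward) and wanting (value learned from the past).

    Liking is the reward experienced on each trial, i.e., the output of the
    reward function for the present experience. Wanting is a value learned by
    a delta rule from previous rewards, so it lags behind liking and outlasts it.
    """
    wanting = 0.0
    liking_trace, wanting_trace = [], []
    for reward in rewards:
        liking_trace.append(reward)
        wanting_trace.append(wanting)        # based only on past experience
        wanting += alpha * (reward - wanting)  # learn from the present trial
    return liking_trace, wanting_trace


rewards = [1.0] * 10 + [0.0] * 5   # reward devalued after trial 10
liking, wanting = liking_and_wanting(rewards)
```

On the first trial liking is present while wanting is still zero; after devaluation liking drops to zero at once, while wanting decays only gradually.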

An intriguing open question is whether pleasures not only drive the learning that leads to wanting, but could themselves be modified through learning from other types of reward signals (Dayan, 2021). An illustrative example of such modification is how we naturally acquire a taste for foods that made us satiated and an aversion to foods that made us nauseous (Birch, 1999; Myers & Sclafani, 2001). How and when different types of pleasure (e.g., gustatory, sexual, affiliative, achievement-related) are modified is thus a promising target for future work.
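One hypothetical way to formalize such modification (not a model proposed in the cited work) is to treat the hedonic value of a flavor as an entry in the reward function that is itself nudged toward a later consequence signal, such as satiety or nausea. The names `update_liking`, `consequence`, and `beta`, and the ±1 consequence scale, are assumptions.

```python
def update_liking(liking, flavor, consequence, beta=0.2):
    """Nudge the hedonic value of a flavor toward a delayed consequence signal.

    liking: dict mapping flavor -> hedonic value (part of the reward function).
    consequence: hypothetical signal, e.g. +1.0 for satiety, -1.0 for nausea.
    beta: hypothetical rate at which the reward function itself is modified.
    """
    old = liking.get(flavor, 0.0)
    liking[flavor] = old + beta * (consequence - old)
    return liking


liking = {"novel_food": 0.5}
for _ in range(10):
    update_liking(liking, "novel_food", consequence=-1.0)  # repeated nausea
```

The point of the sketch is only that the reward function, which drives value and policy learning elsewhere in the account, need not be fixed.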

8.2. Value vs policy learning

The distinction between value and policy learning, which we proposed differentiates between sadness and anger, is a key characteristic of “actor-critic” reinforcement learning models (Sutton & Barto, 2018). In addition to their usefulness for solving machine learning problems (Grondman et al., 2012; Haarnoja et al., 2018; Konda & Tsitsiklis, 2000; Peters & Schaal, 2008), this class of models has been widely used for understanding how the brain learns to predict and maximize reward. This body of work suggests that value learning is mediated by dopaminergic prediction error signals transmitted from the ventral tegmental area to the ventral striatum and frontal areas (Barto, 1995; Houk & Adams, 1995; Joel & Weiner, 2000; Miller & Wickens, 1991; Waelti et al., 2001; Wickens & Kötter, 1995), whereas policy learning is mediated by dopaminergic signals from the substantia nigra pars compacta to the dorsal striatum (Lerner et al., 2015; O’Doherty et al., 2004; Roeper, 2013; Rossi et al., 2013). Though this simple picture still requires further elaboration and investigation (Averbeck & O’Doherty, 2022; Niv, 2009), it already offers a way to think about how sadness and anger may be differentiated in the brain. A key principle to notice here is that the circuits for value and policy learning closely interact with one another. In fact, policy updates are thought to be guided by learned values, and brain-stem dopaminergic prediction error signals drive both types of learning. Thus, dissociating these two circuits would require carefully designed theory-driven manipulations and an ability to differentiate between neighboring and interacting neural populations.
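The shared-signal architecture can be sketched with a minimal tabular actor-critic on a two-armed bandit. The task, the softmax policy, and the learning rates are illustrative assumptions. A single prediction error `delta` updates both the critic's state value (value learning, which the account links to happiness and sadness) and the actor's action preferences (policy learning, which it links to content and anger).

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into choice probabilities."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(reward_probs, n_trials=2000, alpha_v=0.1, alpha_p=0.1, seed=0):
    """Single-state actor-critic; returns the learned value and final policy."""
    rng = random.Random(seed)
    value = 0.0                         # critic: V(s) for the single state
    prefs = [0.0] * len(reward_probs)   # actor: preference for each action
    for _ in range(n_trials):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        delta = reward - value          # one prediction error...
        value += alpha_v * delta        # ...updates the critic (value learning)
        prefs[action] += alpha_p * delta  # ...and the actor (policy learning)
    return value, softmax(prefs)


value, policy = actor_critic([0.2, 0.8])
```

Because both updates share `delta`, manipulating the prediction error signal would affect both learners at once, which is one way to see why dissociating the two circuits experimentally is difficult.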

8.3. Prospection vs retrospection

Our account distinguishes between emotions that are evoked by prospection concerning what may or may not happen (e.g., fear and desire) and emotions that reflect learning from what has already happened (e.g., happiness and anger). Despite having been proposed by the very person who is thought to have coined the term ‘emotion’ (Brown, 1846), such a distinction between prospective and retrospective emotion has since been oddly absent from the literature (with the notable exception of Nesse and Ellsworth, 2009). Computationally, we propose that this distinction is most precisely defined by saying that retrospective emotions are evoked by actual changes in expected value that are believed to have happened (‘happened’ only in the sense that the expected value has already changed, not in the sense that the anticipated outcome has already materialized and been consumed as reward), whereas prospective emotions are evoked by potential changes in value that may or may not happen. Empirically, this distinction is marked by the fact that prospective emotions intensify as the prospect they are concerned with draws near (Mobbs et al., 2007), whereas retrospective emotions are typically maximal when evoked and then decay with time (Rutledge et al., 2014).
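The opposite time courses can be sketched with two assumed functional forms: prospective intensity as the magnitude of a potential change in value, exponentially discounted by the time remaining until it may occur, and retrospective intensity as the magnitude of an actual change in expected value, decaying exponentially after it happens. The discount factor `gamma` and decay rate `decay` are hypothetical parameters; the cited findings do not commit to exponential forms.

```python
def prospective_intensity(magnitude, time_to_outcome, gamma=0.9):
    """Assumed form: a potential change in value, discounted by its distance in time."""
    return magnitude * gamma ** time_to_outcome


def retrospective_intensity(value_change, time_since_change, decay=0.8):
    """Assumed form: maximal when expected value changes, then decaying."""
    return abs(value_change) * decay ** time_since_change


# A prospect that may occur at t = 10, and a value change that occurred at t = 0.
prospective = [prospective_intensity(1.0, 10 - t) for t in range(11)]
retrospective = [retrospective_intensity(1.0, t) for t in range(11)]
```

The first trace rises as the prospect draws near and the second falls after the change, matching the qualitative pattern described above.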

The computations that, we proposed, are mediated by prospective and retrospective emotions map onto what has been widely characterized in the literature as model-based and model-free reinforcement learning, respectively (Daw & Dayan, 2014; Daw & O’Doherty, 2014; Sutton, 1990). Thus, the large body of literature investigating how model-based and model-free learning are implemented in the brain could shed light on the neural basis of prospective and retrospective emotion. An overlap between the neural bases of these two types of emotion is suggested by the finding that both model-based and model-free learning are correlated with activity in the human striatum (Daw et al., 2011). Yet the two systems are also neurally distinguished: model-free learning was found to be associated with reward prediction error signals in the putamen, whereas model-based learning was associated with activity in the dorsomedial prefrontal cortex (Doll et al., 2015). Thus, here too, a picture of partially overlapping and partially distinct neural circuits emerges.
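To illustrate the contrast, here is a minimal sketch of outcome devaluation in a hypothetical one-step task in which actions `left` and `right` lead deterministically to states `A` and `B`. A model-based value is computed prospectively from a transition model and the current state rewards; a model-free value is a cached quantity that changes only through experienced outcomes. All names and numbers are hypothetical.

```python
def model_based_value(action, transitions, rewards):
    """Prospective: evaluate an action by looking ahead through a world model."""
    return sum(p * rewards[state] for state, p in transitions[action].items())


def model_free_update(q, action, reward, alpha=0.5):
    """Retrospective: nudge a cached action value toward an experienced reward."""
    q[action] += alpha * (reward - q[action])
    return q


transitions = {"left": {"A": 1.0}, "right": {"B": 1.0}}
rewards = {"A": 1.0, "B": 0.0}
q = {"left": 1.0, "right": 0.0}   # cached values after earlier experience

rewards["A"] = 0.0                # outcome A is devalued, without new experience
mb_left = model_based_value("left", transitions, rewards)  # changes immediately
mf_left = q["left"]                                         # still reflects the past
model_free_update(q, "left", reward=0.0)                    # one actual experience
```

The model-based value reflects the devaluation at once, whereas the cached model-free value moves only after an outcome is actually experienced.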

Further undermining the separation between these two circuits is the strong possibility that model-based prospection itself contributes to model-free learning. This contribution may take at least two main forms: model-based inferences may guide model-free credit assignment during actual experience (Moran et al., 2021), and the model-free system could learn from model-based simulation of possible experiences (Gershman et al., 2014; Mattar & Daw, 2018; Russek et al., 2017; Sutton, 1990). Indeed, recent studies indicate that simulating sequences of possible future states enables people to update their values and policy prior to actual experience (Eldar et al., 2020; Liu et al., 2019, 2021). It is easy to imagine how such prospective simulation, by acting as a substitute for actual experience, could evoke retrospective emotions such as happiness and anger.
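The second route, learning from simulated experience, is the idea behind Sutton's (1990) Dyna architecture. A minimal sketch, assuming a deterministic one-step model that maps each action to the reward it is expected to yield: cached model-free values are updated from outcomes simulated by the model before any new actual experience. The function name, model format, and parameters are hypothetical simplifications, not the full Dyna-Q algorithm.

```python
import random


def simulate_and_update(q, model, n_simulations=50, alpha=0.5, seed=0):
    """Update cached action values using outcomes simulated from a model.

    q: dict of cached (model-free) action values, updated in place.
    model: dict mapping each action to the reward the model predicts for it.
    """
    rng = random.Random(seed)
    actions = list(model)
    for _ in range(n_simulations):
        action = rng.choice(actions)        # pick an action to simulate
        simulated_reward = model[action]    # the model predicts its outcome
        q[action] += alpha * (simulated_reward - q[action])
    return q


q = {"left": 1.0, "right": 0.0}       # cached from past experience
model = {"left": 0.0, "right": 0.5}   # the world model has since changed
simulate_and_update(q, model)
```

The drop in `q["left"]` happens without any actual outcome; on the account above, such a simulation-driven change in value is the kind of update that might evoke a retrospective emotion such as sadness.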

8.4. Mood

We proposed that moods serve to generalize inferences to subsequent experiences and prospects. To date, we are aware of only one study that investigated the neural substrates of this generalizing impact in humans (Vinckier et al., 2018). Its findings indicate that the generalizing impact of a positive emotional state is mediated by activity in the ventromedial prefrontal cortex, whereas that of a negative emotional state is mediated by activity in the insula. Further research is required to substantiate these findings in other experimental settings, as well as to investigate different types and timescales of mood. Particularly promising in this respect is the development of animal models of affective biases (Hales et al., 2014): manipulating rodents’ emotional states biases their judgements in a manner that is broadly consistent with the ideas proposed here. Extending such models to affective biases that correspond to distinct emotions is an important goal for future research.

9. Relationship with other theoretical accounts

9.1. Other computational accounts

Our account builds on several previous suggestions concerning the computations that emotions mediate, which to date have not been jointly considered. Here we integrate these proposed computations into a unified framework that clarifies each computation’s role and the relations between them. For instance, the valence of one’s mood has previously been suggested to represent either changes in value (Eldar et al., 2016; see Joffily & Coricelli, 2013, for a similar suggestion formalized using the free energy framework) or action effectiveness (i.e., integrated advantage; Bennett, Davidson, et al., 2021). As these two types of computation warrant different behavioral responses, we proposed that they are represented by different classes of emotions (happiness/sadness vs. content/anger) that motivate different types of behavior (activity/inactivity vs. behavioral perseverance/change).
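The two proposed computations can be contrasted in a minimal sketch. It assumes that each trial provides the change in state value, the value of the chosen action `q_chosen`, and the state value `v_state`, and that both signals are integrated by a leaky accumulator with a hypothetical `decay` parameter. Integrated value change tracks happiness/sadness; integrated advantage (`q_chosen - v_state`) tracks content/anger.

```python
def integrate_moods(trials, decay=0.7):
    """Return traces of integrated value change and integrated advantage.

    trials: sequence of (value_change, q_chosen, v_state) tuples.
    Positive integrated value change ~ happiness, negative ~ sadness.
    Positive integrated advantage ~ content, negative ~ anger.
    """
    value_mood = action_mood = 0.0
    value_trace, action_trace = [], []
    for value_change, q_chosen, v_state in trials:
        advantage = q_chosen - v_state        # chosen action vs. state average
        value_mood = decay * value_mood + value_change
        action_mood = decay * action_mood + advantage
        value_trace.append(value_mood)
        action_trace.append(action_mood)
    return value_trace, action_trace


# The environment improves regardless of what is chosen.
improving = [(0.5, 1.0, 1.0)] * 3
# A stable environment in which the chosen actions are worse than average.
poor_choices = [(0.0, 0.2, 0.6)] * 3
```

In this sketch the first scenario produces happiness with neither content nor anger, and the second produces anger without sadness, illustrating why the two signals could motivate different behavioral responses.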

Similarly, computations of uncertainty have been previously suggested to be represented by people’s anticipatory emotions (Hagen, 1991) and levels of stress (Peters et al., 2017). The term ‘stress’ has different definitions and is not universally recognized as an emotional state, but it is closest to what in the emotion literature is typically referred to as anxiety. Indeed, our definition of anxiety in the present paper includes uncertainty as a precondition, since there is no use trying to prevent inevitable or impossible prospects. However, for the same reason, we propose that anxiety should scale only with uncertainty that is potentially reducible through our control over the environment. Additionally, Peters et al.’s definition of stress does not separately account for uncertainty about a prospect and the change in value it would bring should it materialize. Here, we proposed that both factors are reflected in a person’s level of anxiety.
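One way to express these two proposals together is sketched below. It assumes a binary prospect that occurs with probability `p`, measures its uncertainty by the Bernoulli variance p(1 − p), scales that by a hypothetical `controllability` factor in [0, 1] for the share of uncertainty that one's actions could reduce, and multiplies by the magnitude of the value loss the prospect would bring. The multiplicative form is an assumption, not an established model.

```python
def anxiety(p, value_change, controllability):
    """Illustrative anxiety level for a binary prospect.

    p: probability that the prospect materializes.
    value_change: change in value if it materializes (negative = threat).
    controllability: assumed fraction of the uncertainty reducible by action.
    """
    if value_change >= 0:
        return 0.0                 # non-threatening prospects evoke no anxiety here
    uncertainty = p * (1 - p)      # Bernoulli variance, zero if p is 0 or 1
    return controllability * uncertainty * abs(value_change)
```

Under these assumptions, inevitable (p = 1), impossible (p = 0), and uncontrollable prospects all yield zero anxiety, while larger potential losses yield more.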

Defining the computations each emotion represents (i.e., at Marr’s computational level of analysis; Marr, 2010) opens the way to investigating algorithmic characteristics of these computations. Of particular interest is the common notion that emotions act as heuristics, in the sense that they do not represent optimal computations but rather cost-saving mental shortcuts. To test this idea, however, we first need to agree on what computation a particular emotion may represent; only then can we ask whether emotions mediate these computations optimally. So far, this question has not been properly studied or even explicitly asked, likely due to a lack of clarity about the computations involved. We suspect that once the question is posed in this way, the common view of emotions as heuristics that provide oversimplified answers to complicated problems might be called into question. Indeed, we have recently highlighted how rational inferences concerning higher-order constructs such as global changes in value may often be mistaken for heuristics (Sharp et al., 2022). Briefly, the reason is that such inferences represent general properties of the environment (or of one’s state in it) that predict individual outcomes only on average, and can thus seem extraneous to a naïve observer focusing on an individual case. Instead, we propose, such inferences are best viewed as a rational consequence of operating with limited information in an uncertain world.

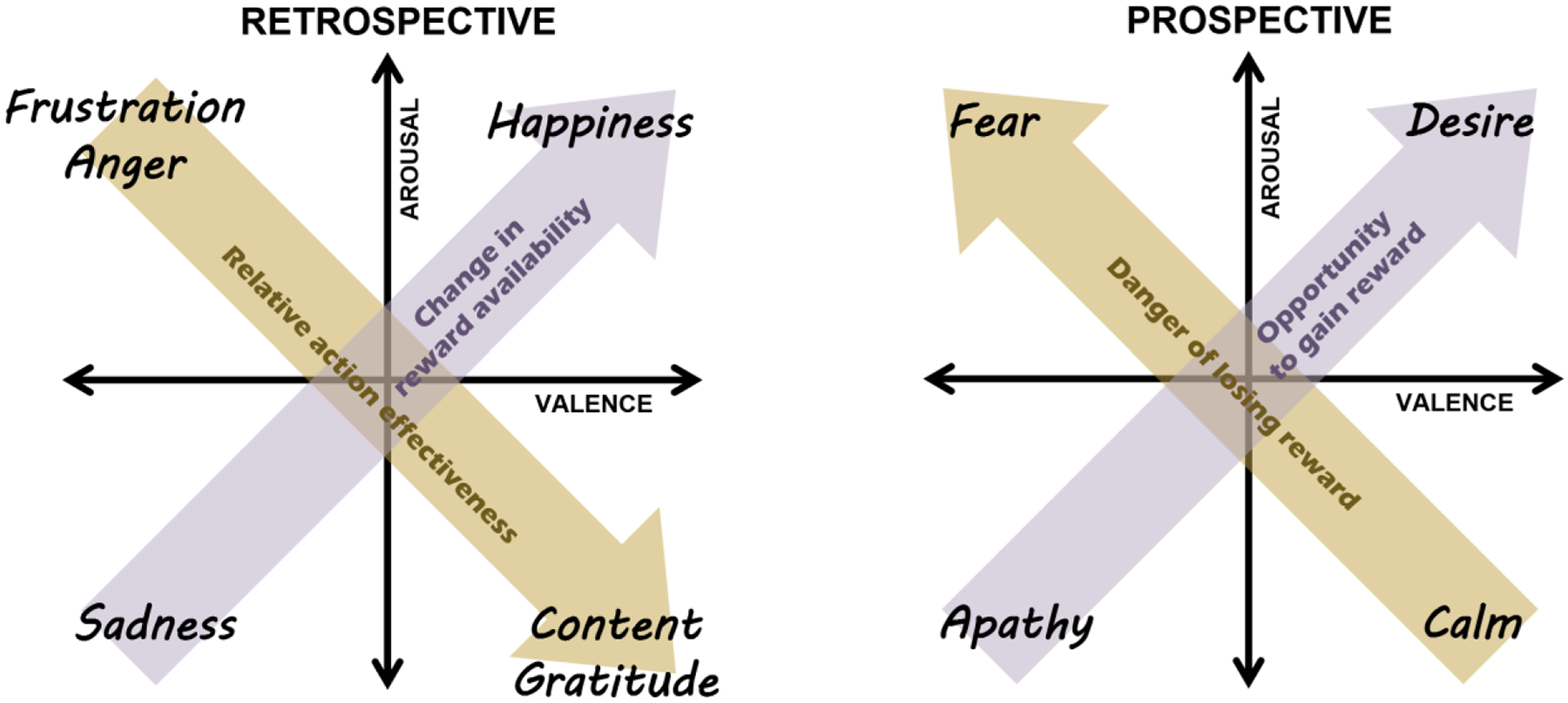

9.2. The circumplex model of emotion

Previous work linking emotion to the processing of reward and punishment has primarily considered emotion as described by the circumplex model (Russell, 1980). This influential model maps different emotions onto a two-dimensional space of valence and arousal. Within this space, it is suggested that reward drives emotional states of high arousal and positive valence, whereas punishment drives states of high arousal and negative valence. Failing to obtain reward is thought to be associated with a more complex pattern that begins with high-arousal negative states and culminates in low-arousal negative states (Mendl et al., 2010). Our account differs from this idea by invoking distinct emotions, and the computations associated with them, to mediate the impact of reward and punishment on valence and arousal. The result can be thought of as a three-dimensional space of emotion, with the additional dimension distinguishing between retrospective and prospective emotions (Figure 4).

Figure 4. Proposed impact of reward within the emotion circumplex.

The impact differs for changes in expected reward that have already happened (retrospective) and those that may happen in the future (prospective). Note that ‘reward’ in our account refers to a reward function that sums across both rewarding (positive) and punishing (negative) attributes of states and actions.

Our account and previous accounts make fairly similar predictions with regard to prospective emotions, but differ substantially with regard to retrospective emotions. First, we propose that retrospective emotions represent value differences, not reward per se (Eldar et al., 2021). Second, our account suggests that an important dimension of emotion is the degree to which outcomes are attributed to actions, which determines where an emotion lies on the continuum between anger and sadness. Third, our account predicts that decreases in expected punishment will evoke a similar emotion to increases in expected reward. The evidence reviewed throughout this manuscript already supports some of these unique predictions, but future research could study the points of disagreement with previous theory more exhaustively.
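The three predictions can be made concrete in a minimal sketch. Its assumptions: the expected value of a situation is expected reward minus expected punishment, retrospective emotion is driven by the change in that expected value rather than by reward itself, and a hypothetical `attribution_to_action` weight splits the change linearly between the happiness/sadness and content/anger dimensions. The linear split is an illustrative choice, not part of the account.

```python
def expected_value(expected_reward, expected_punishment):
    """Reward function summing rewarding (+) and punishing (-) attributes."""
    return expected_reward - expected_punishment


def retrospective_emotion(before, after, attribution_to_action):
    """Split a change in expected value into state- and action-related parts.

    before, after: (expected_reward, expected_punishment) pairs.
    attribution_to_action: assumed weight in [0, 1] for how much the change
        is attributed to one's own action.
    Returns (state_part, action_part): state_part > 0 ~ happiness, < 0 ~ sadness;
    action_part > 0 ~ content, < 0 ~ anger.
    """
    change = expected_value(*after) - expected_value(*before)
    action_part = attribution_to_action * change
    state_part = (1 - attribution_to_action) * change
    return state_part, action_part


more_reward = retrospective_emotion((1.0, 2.0), (3.0, 2.0), 0.0)
less_punishment = retrospective_emotion((1.0, 2.0), (1.0, 0.0), 0.0)
blamed_loss = retrospective_emotion((2.0, 0.0), (0.0, 0.0), 1.0)
```

Here a rise in expected reward and an equal fall in expected punishment produce the same emotion, a reward that was already expected produces none, and a loss fully attributed to one's action lands entirely on the anger side of the continuum.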

9.3. Appraisal and evolutionary accounts