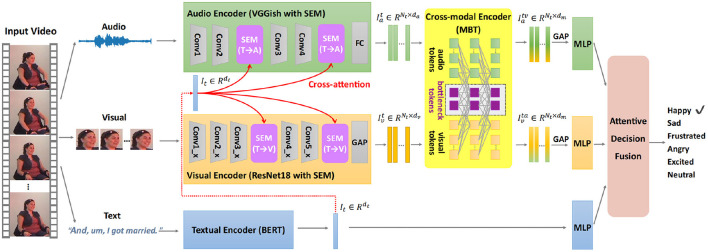

Figure 1.

The proposed end-to-end multimodal emotion recognition (MER) framework, which combines a semantic enhancement module (SEM) with a multimodal bottleneck Transformer (MBT) and is denoted MER-SEM-MBT. Given a facial video clip, a global semantic feature is first extracted by the textual encoder and used to guide audio and visual representation learning through the SEM. A cross-modal encoder then reinforces the audio and visual representations through temporal cross-modal interaction via the MBT. Finally, three separate multi-layer perceptrons (MLPs) produce unimodal decisions for the audio, visual, and text modalities, and attentive fusion aggregates these decisions into the final emotion prediction. The example facial video is from the IEMOCAP dataset (Busso et al., 2008).
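
The final decision-level step in Figure 1 can be illustrated with a minimal sketch. The caption does not specify how the attention weights are computed, so the code below assumes one scalar relevance score per modality, normalised with a softmax and used to take a weighted sum of the three unimodal decisions. The names `attentive_fusion`, `decisions`, and `relevance`, as well as the toy numbers, are hypothetical and are not taken from the framework itself.

```python
import math
from typing import Dict, List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attentive_fusion(decisions: Dict[str, List[float]],
                     relevance: Dict[str, float]) -> List[float]:
    """Fuse per-modality class scores with softmax-normalised modality weights.

    Assumption: `decisions` maps a modality name to its MLP output and
    `relevance` maps it to a scalar attention score. Both are illustrative
    stand-ins, not the paper's actual interface.
    """
    names = sorted(decisions)
    weights = softmax([relevance[n] for n in names])
    num_classes = len(decisions[names[0]])
    fused = [0.0] * num_classes
    for name, weight in zip(names, weights):
        for k, score in enumerate(decisions[name]):
            fused[k] += weight * score
    return fused


# Toy scores over four emotion classes (made-up values for illustration).
decisions = {
    "audio": [0.1, 0.7, 0.1, 0.1],
    "visual": [0.2, 0.5, 0.2, 0.1],
    "text": [0.6, 0.2, 0.1, 0.1],
}
# Equal relevance scores give each modality the same weight.
relevance = {"audio": 0.0, "visual": 0.0, "text": 0.0}

fused = attentive_fusion(decisions, relevance)
predicted = max(range(len(fused)), key=fused.__getitem__)
```

In a trained model the relevance scores would presumably be learned from the modality representations rather than fixed by hand; with equal scores the fusion reduces to a plain average of the three decisions.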