Abstract

Purpose

Today, clinical care is often provided by interprofessional virtual teams—groups of practitioners who work asynchronously and use technology to communicate. Members of such teams must be competent in interprofessional practice and the use of information technology, two targets for health professions education reform. The authors created a Web-based case system to teach and assess these competencies in health professions students.

Method

They created a four-module, six-week geriatric learning experience using a Web-based case system. Health professions students were divided into interprofessional virtual teams. Team members received profession-specific information, entered a summary of this information into the case system’s electronic health record, answered knowledge questions about the case individually, then collaborated asynchronously to answer the same questions as a team. Individual and team knowledge scores and case activity measures—number of logins, message board posts/replies, views of message board posts—were tracked.

Results

During academic year 2012–2013, 80 teams composed of 522 students from medicine, nursing, pharmacy, and social work participated. Knowledge scores varied by profession and within professions. Team scores were higher than individual scores (P < .001). Students and teams with higher knowledge scores had higher case activity measures. Team score was most highly correlated with number of message board posts/replies and was not correlated with number of EHR entries.

Conclusions

This Web-based case system provided a novel approach to teaching and assessing the competencies needed for virtual teams. This approach may be a valuable new tool for measuring competency in interprofessional practice.

Improving health outcomes requires the work of high-functioning interprofessional teams.1 However, team performance is often hampered by health care providers working asynchronously, being dispersed across locations, and relying on boundary-spanning technology, such as electronic health records (EHRs), to communicate.2 Outside of health care, teams that work at different times and locations and rely heavily on technology-mediated communication are called virtual teams.3 Virtual teams are not necessarily less effective than nonvirtual teams. In comparison with effective teams that work in a synchronous, colocated manner, effective virtual teams manage information better, have more reliable channels for communication, and receive greater contributions from team members who hold a lower status in the team hierarchy.3 However, technology-mediated communication can also be isolating4; encourage inappropriate behavior that is normally suppressed by social cues5; and lead to briefer, more error-prone communication.3 Successful virtual teams require specific training6 and, with this training, can have similar outcomes to successful synchronous, colocated teams.7 To develop the interprofessional virtual teams necessary to attain our health care goals of high-quality, efficient, and patient-centered care, providers and students need to be trained to work in virtual teams.

Being an effective member of a virtual health care team requires competency in interprofessional practice and the use of information technology. Although these areas have been priorities for educators in the health professions for over a decade,1 they are poorly integrated into the curricula.8 Interprofessional education (IPE) is hampered by a lack of conceptual clarity about interprofessional work.9 While a handful of overarching principles for interprofessional practice can structure care delivery,10 the specific context of care has a tremendous influence on team interactions and the need for training. For example, interprofessional practice on a hospital resuscitation team is typically characterized by the contemporaneous interaction of multiple colocated providers within a set hierarchy.11 This type of interprofessional practice is called collaborative,12 and simulation-based education provides effective training for this type of work.13 In contrast, care in less urgent settings is often asynchronous, less structured, and characterized by shared authority.11 This type of care, typical of virtual teams, is called coordinative.12 Currently, we do not know the optimal training for practice on a coordinative care team, and educators need new approaches to teach and assess the necessary behaviors.8,9

Similarly, training in the use of information technology has not been widely integrated into health professions education.14 EHRs are the dominant information technology in health care and an essential tool for virtual teams to coordinate care, decrease errors, and improve quality.15 However, training in the use of EHRs does not regularly include the concept of asynchronous teamwork. Although education with EHRs has included classroom-based sessions, computer-based didactic modules, record reviews with feedback,16 simulation-based training of urgent care situations,17 and the care of complex patients by a single profession,18 we found no peer-reviewed studies reporting the use of EHRs to train virtual health care teams.

To train and evaluate virtual teams, we developed a Web-based case system to teach and assess the competencies in interprofessional practice as defined by the Interprofessional Education Collaborative.19 Our case system was designed to simulate select features of an EHR, such as data retrieval, documentation, and messaging. In addition, we designed the system to overcome the logistical barriers that hinder large-scale IPE, such as aligning students’ schedules and locating appropriate classroom space.20,21 We sought to structure the flow of the students’ work to stimulate collaboration, and we adopted principles from team-based learning22,23 to create educational work processes that promoted such interactions. We then embedded methods to track and assess students’ behaviors to provide much richer objective assessments than are currently found in IPE.24,25

Here, we describe the outcomes of implementing our Web-based case system to train a large number of students from four health professions to collaborate as members of virtual teams. We hypothesized that the students would display the characteristics of virtual team members by demonstrating asynchronous, active collaboration within the case system. Also, we anticipated that the benefits of teamwork would be reflected in the case outputs, such that the average team knowledge scores would exceed the average knowledge scores for each profession and that each team’s knowledge score would correlate with measures of each team’s case activity.

Method

Case system learning cycle

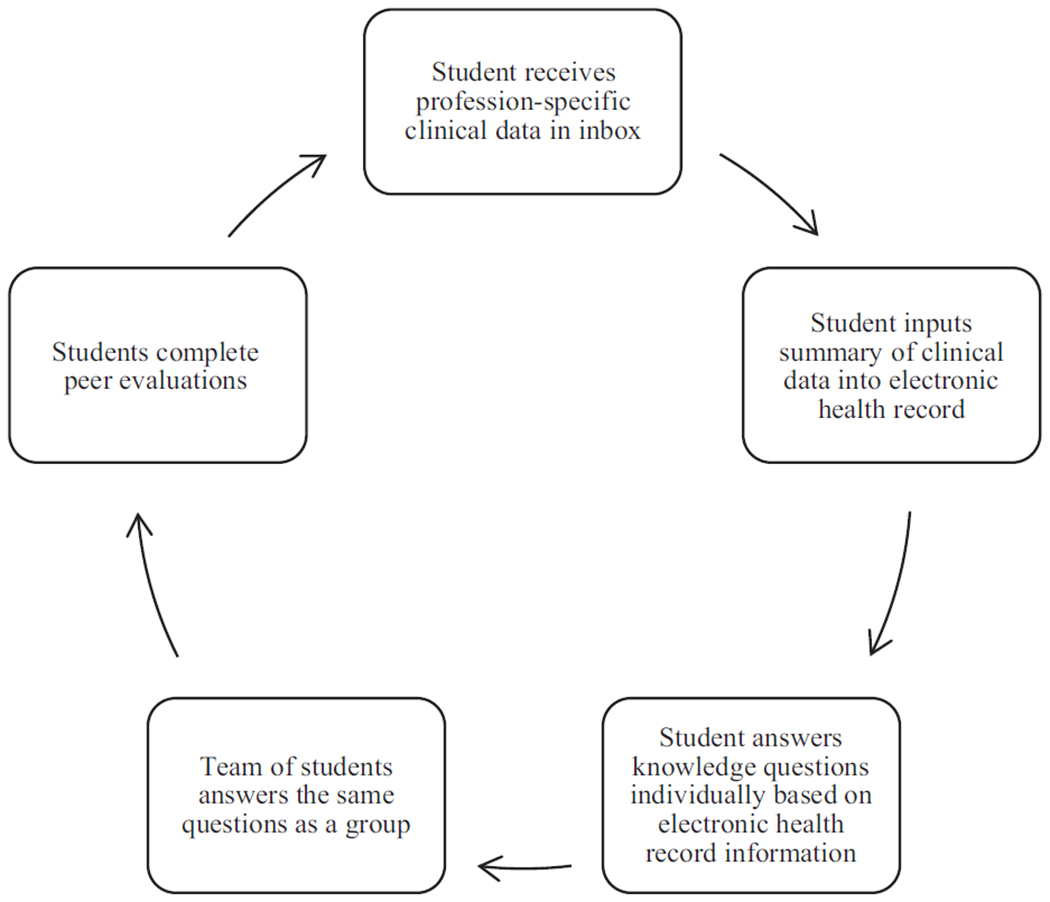

Our Web-based case system promoted interaction between students by using established principles from team-based learning, which is an instructional method that has been shown to improve learning outcomes in comparison with traditional classroom methods.22,23 To incorporate these principles, we divided the case system learning cycle into five steps (see Figure 1). First, each student received patient information, specific to his or her professional role, in the form of a patient narrative. This information represented the profession-specific clinical data about a patient that each type of provider would obtain in a given setting either through direct interaction or other sources of information, such as prescription fill records for a pharmacist. Second, students entered a synopsis of the patient information they received into the virtual EHR using a process similar to that used to document information in an EHR in practice. Once this information was entered, the team members from the other health professions could view it. Third, each student individually answered multiple-response questions related to the patient’s case, accruing points that we used to calculate an individual knowledge score at the end of the unit. Students had access to the virtual EHR where their team members had entered the information they received, which the students needed to answer the questions correctly. Fourth, the team collaborated to select answers to the same questions that they answered as individuals, generating a team knowledge score.

Figure 1.

Learning cycle of the Web-based case system used to train and assess interprofessional virtual health care teams.

To facilitate the work of the team, the case system contained an electronic message board that the team members could use to communicate about their team answers. When discussing their team answers, team members could see how many of their colleagues had selected each answer choice during the individual question step. Finally, once all team answers had been submitted, each student completed anonymous peer evaluations of the other students on the team. The students then began the next module, returning to step one of the cycle. To mirror the clinical environment, certain elements of the EHR, such as the medication list and problem list, were retained in subsequent modules while the patient moved through time, and new chapters of the clinical record were created.

Case system underlying technology

Our case system was built as a freestanding Web application by our educational technologists with design input from faculty and supporting resources from the university and an external funder (the Donald W. Reynolds Foundation provided approximately $200,000 in software development costs). It was designed to be accessible to students, preceptors, and administrators from anywhere in the world with Internet access. The administrative and preceptor interfaces allowed easy modification of case system functions, including profession-specific patient narratives, questions, debriefing information, module length, student registration, and team creation. Login and access functions for both students and preceptors were controlled through a secure password-protected interface that managed user functions. Data accrued automatically, supporting systematic reports of performance.

Structure of the case experience

During the 2012–2013 academic year, we enrolled all fourth-year students in the doctor of medicine and bachelor of science in nursing programs, 60 volunteer fourth-year students in the doctor of pharmacy program (50% of all fourth-year pharmacy students), and all second-year students in the master of social work program who had a clinical practice concentration. The students were placed on teams of 4 to 9 members for a six-week interval during one of four sequential blocks during the year. For the first three blocks, all professions were represented on each team. Because we had insufficient numbers of pharmacy students for the fourth block, only medicine, nursing, and social work students participated; for this block, the pharmacy-specific information was distributed to participating students in all disciplines. For nursing students, satisfactory completion of the case experience represented a small percentage of a course grade. For medical and social work students, satisfactory completion as judged by the faculty was required for graduation, but completion was not linked to a course. For pharmacy students, the case experience was an optional activity.

During each six-week block, teams completed four distinct modules of a single unfolding case; each module lasted about 10 days. Immediately before the block, students attended a two-hour, face-to-face orientation during which they were introduced to the case system and to their teammates. During the second half of the academic year (blocks three and four), a required team charter exercise was added to the orientation to facilitate establishing team roles, responsibilities, and communication protocols. Course leaders set the final deadlines for the submission of team answers and peer evaluations. All other team activities were structured and led entirely by the students; they were encouraged to set intermediate deadlines for the other tasks, such as entering clinical data and individual answers. Students were allowed to collaborate in any fashion they chose, including meeting face-to-face. Each team also had a faculty preceptor from the group that wrote the case. The faculty members logged on to the system in a fashion similar to the students, observed the students’ activity and interprofessional interactions, and provided occasional feedback regarding team function; they did not help teams answer case questions.

Case content and questions

The cases focused on geriatric care and followed a woman over seven years of her life and across multiple settings, including primary care, hospital, assisted living facility, subacute nursing facility, home care, and hospice. An interprofessional team of faculty from medicine, nursing, pharmacy, social work, occupational therapy, and gerontology developed the content, questions, and answers. Questions and case content were anchored to the Association of American Medical Colleges’ geriatric competencies for medical students.26 Mirroring real life, the clinical information provided to the students in each profession intentionally lacked some important details; thus, students had to share information across professions to perform well.

For example, in the first module, pharmacy students had a complete list of both the medications the patient recently filled at the pharmacy from multiple prescribers as well as those over-the-counter medications documented at the pharmacy; nursing students received the list of medications the patient brought to the clinic visit; medical students saw a record of the medications she recently had been prescribed from one health system; and social work students had information about prescription drug coverage. Questions in this module ranged from biomedical queries about diagnosis and treatment to psychosocial prompts about ethical considerations or insurance coverage. The questions targeted profession-specific expertise and competencies. For example, a medical student would have difficulty answering a question centered on social work expertise without assistance from the team’s social work student.

Questions were constructed in multiple-response format; that is, students had to select multiple correct answers from a list of choices that ranged from 7 to 14 in number. The multiple-response format reduced the impact of chance on performance and provided a format more representative of actual patient management decisions than standard multiple-choice questions.27 Because we wanted the answer scoring to represent the approach of an expert interprofessional team, the faculty, who were all experienced clinicians in their professions, met as a team and assigned each answer choice a value ranging from +5 for the most appropriate answers to −5 for the most inappropriate answers. Each question also included an answer choice of “Outside my profession’s usual practice,” which yielded a score of 0 for that question. Students were instructed to choose this option only as a last resort. To compute a knowledge score for each individual or team, we summed all point values and multiplied that number by 10. The possible final scores ranged from −9,500 (all incorrect choices and no correct choices) to 9,200 (all correct choices), representing more than 500 individual choices per student and per team.
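The scoring rule described above can be illustrated with a minimal Python sketch. It is not the case system's actual code: the function names, choice labels, and point values are invented for illustration, and it assumes that selecting the opt-out choice zeroes the whole question regardless of any other selections, which the text implies but does not spell out.

```python
from typing import Dict, List, Set

# Hypothetical label for the opt-out choice; the wording follows the text above.
OPT_OUT = "Outside my profession's usual practice"


def question_points(selected: Set[str], values: Dict[str, int]) -> int:
    """Sum the faculty-assigned values (+5 to -5) of the selected choices.

    Assumption: choosing the opt-out option yields 0 for the whole question.
    """
    if OPT_OUT in selected:
        return 0
    return sum(values[choice] for choice in selected)


def knowledge_score(responses: List[Set[str]], keys: List[Dict[str, int]]) -> int:
    """Sum points over all questions, then multiply by 10."""
    total = sum(question_points(sel, key) for sel, key in zip(responses, keys))
    return total * 10


# Invented two-question example; labels and values are illustrative only.
keys = [
    {"A": 5, "B": 3, "C": -2, "D": -5},
    {"A": 4, "B": -3, "C": 1},
]
responses = [{"A", "C"}, {OPT_OUT}]
print(knowledge_score(responses, keys))  # (5 - 2 + 0) * 10 = 30
```

The same function would apply to a team's submitted answers, since teams answered the same questions as individuals.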

Assessment of student, team, and case system performance

We applied Moore’s Framework,28 a model of educational outcomes, to structure our evaluation process. Our goal was to target higher levels of learning outcomes than have been reported for most IPE activities.24 To assess students’ knowledge of geriatrics (level 3A—declarative knowledge), we calculated knowledge scores on the questions at the individual and team level. To assess student and team competence in interprofessional practice and EHR use (level 4—competence), we used case activity measures, such as the number of logins, EHR entries, message board posts and replies, and views of message board posts.

For knowledge scores and case activity, we calculated descriptive and inferential statistics using SAS 9.4 (SAS Institute, Cary, North Carolina). We compared individual scores between professions and with overall team scores using ANOVA. Because the distribution for case activity measures was skewed, we compared medians by profession using the Kruskal–Wallis test. To examine the association of individual and team scores with measures of teamwork behavior, we calculated bivariate correlations among individual scores, team scores, case activity measures, and team size. The institutional review board at Virginia Commonwealth University approved this study.
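For readers unfamiliar with the rank-based comparison named above, the following is a minimal pure-Python sketch of the Kruskal–Wallis H statistic with the standard tie correction. It is not the SAS procedure the analysis used, the function names are hypothetical, and the login counts in the example are invented.

```python
from typing import Dict, List


def average_ranks(values: List[float]) -> List[float]:
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Dict[str, List[float]]) -> float:
    """Return the tie-corrected Kruskal-Wallis H statistic."""
    pooled = [v for g in groups.values() for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups.values():
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * weighted - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (N^3 - N) over blocks of tied values.
    counts: Dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction


# Invented login counts for three hypothetical groups.
example = {"group_1": [21, 30, 18], "group_2": [28, 40, 35], "group_3": [22, 25, 19]}
print(kruskal_wallis_h(example))
```

H is compared against a chi-square distribution with (number of groups − 1) degrees of freedom to obtain a P value; that step is omitted here to keep the sketch free of external libraries.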

Results

Participation and case activity measures

Throughout the 2012–2013 academic year, 80 teams composed of 522 students completed the case experience. Teams ranged in size from 4 to 9 members, with 7 being the median number of students per team. By school, 194 students from medicine participated, 146 from nursing, 60 from pharmacy, and 122 from social work. A summary of the case activity measures is provided in Table 1. Number of logins, EHR entries, and message board posts and replies differed significantly by profession (P = .001, P < .001, and P = .046, respectively), while number of views of message board posts showed no significant difference between professions (see Table 2). We saw no difference in the case activity measures between the fall (pre–team charter exercise) and spring (post–team charter exercise) semester teams. Students from all blocks reported an average of 0.64 face-to-face meetings (range: 0–2).

Table 1.

Case Activity Measures in a Study of a Web-Based System to Train and Assess Interprofessional Virtual Health Care Teams, 2012 to 2013

| Measure | Overall no. | Average no. per student | Median no. by team | Range by team |
|---|---|---|---|---|
| Logins | 14,443 | 27.7 | 172.5 | 68–470 |
| Electronic health record entries | 6,217 | 11.9 | 74.5 | 29–164 |
| Message board posts and replies | 8,543 | 16.4 | 65.0 | 3–672 |
| Views of message board posts | 30,080 | 57.6 | 267.0 | 11–2,265 |
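As a quick arithmetic check, the per-student averages in Table 1 follow from dividing each overall count by the 522 participating students. The short sketch below reproduces that division; the variable names are illustrative only.

```python
STUDENTS = 522  # total participants reported in the Results

# Overall counts copied from Table 1.
overall_counts = {
    "Logins": 14_443,
    "Electronic health record entries": 6_217,
    "Message board posts and replies": 8_543,
    "Views of message board posts": 30_080,
}

# Average per student, rounded to one decimal place as in the table.
per_student = {m: round(c / STUDENTS, 1) for m, c in overall_counts.items()}
for measure, avg in per_student.items():
    print(f"{measure}: {avg}")
```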

Table 2.

Case Activity Measures by Profession in a Study of a Web-Based System to Train and Assess Interprofessional Virtual Health Care Teams, 2012 to 2013

Values are median (range).

| Measure | Medical students (n = 194) | Nursing students (n = 146) | Pharmacy students (n = 60) | Social work students (n = 122) |
|---|---|---|---|---|
| Logins^a | 21 (2–98) | 28 (5–197) | 25 (2–86) | 22 (1–114) |
| Electronic health record entries^a | 11 (0–44) | 14 (2–53) | 5 (0–19) | 7 (0–43) |
| Message board posts and replies^b | 8 (0–157) | 9 (0–131) | 10 (0–81) | 5 (0–108) |
| Views of message board posts | 24 (0–659) | 27 (0–675) | 37 (0–335) | 18 (0–460) |

^a Between-group differences are significant at P < .01.

^b Between-group differences are significant at P < .05.

Individual knowledge scores

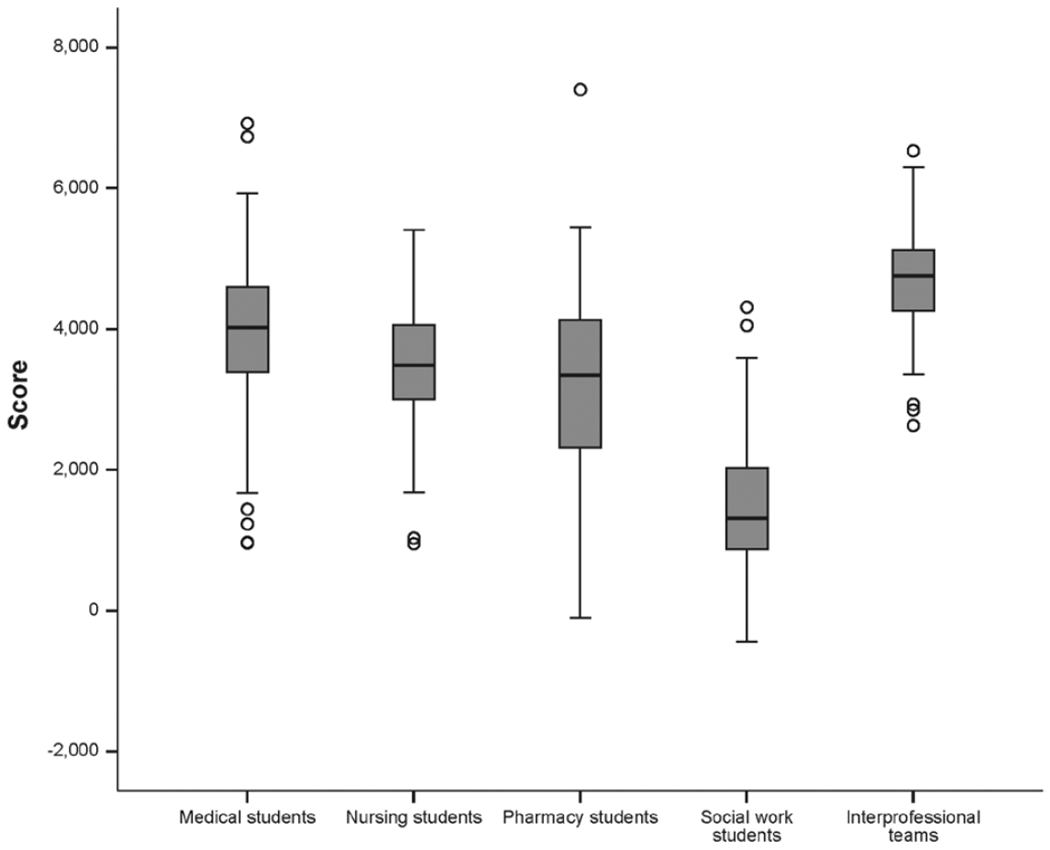

The distribution of individual scores on the content questions ranged from −440 to 7,400 and varied by profession. Medical students scored the highest (mean [M] = 3,918; standard deviation [SD] = 996), followed by nursing students (M = 3,462; SD = 825) and pharmacy students (M = 3,119; SD = 1,431). Scores for social work students (M = 1,454; SD = 901) were lowest. A one-way ANOVA showed that the effect of the student’s profession was significant (F(3,518) = 162.58; P < .001), but only some intergroup differences were significant at the level of P < .05 on post hoc tests. Scores for social work students were significantly lower than those for all of the other groups, while scores for medical students were significantly higher than those for all of the other groups. Scores for nursing students and pharmacy students were not significantly different. The distribution of knowledge scores by profession is shown in Figure 2.

Figure 2.

Distribution of knowledge scores by profession and by interprofessional team in a study of a Web-based case system to train and assess interprofessional virtual health care teams, 2012–2013. The scores for medical and social work students were significantly different from the scores for nursing and pharmacy students (P < .05). Team scores were significantly different from the knowledge scores for each profession (P < .01).

Team knowledge scores

Team scores ranged from 2,630 to 6,530. Median and average team scores were significantly higher than individual scores for all professions (P < .001) and showed a narrower range (see Figure 2). The difference between the median individual score and the median team score was 1,665 points. We found no difference in the team scores between the fall (pre–team charter exercise) and spring (post–team charter exercise) semester teams.

Correlations between case activity measures and knowledge scores

Individual scores were correlated significantly with all case activity measures (number of logins, EHR entries, message board posts and replies, and views of message board posts), with r values ranging from 0.32 to 0.39 (P < .001; see Table 3). Team score was significantly correlated with individual score (r = 0.18), number of logins (r = 0.23), number of message board posts and replies (r = 0.34), and number of views of message board posts (r = 0.27), all at a significance level of P < .001. Team score was not significantly correlated with number of EHR entries (P = .097) or team size (P = .977). Effect sizes for the significant correlations with team score were slightly larger than the comparable effect sizes for correlations between each case activity measure and individual score (team versus individual: logins = 0.41 versus 0.32; message board posts and replies = 0.45 versus 0.39; views of message board posts = 0.45 versus 0.35).
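To make the link between a correlation coefficient and its significance concrete, the sketch below computes Pearson's r and the associated t statistic, t = r·sqrt(n − 2)/sqrt(1 − r²). It is an illustration only: the function names are hypothetical, the sample data are invented, and treating each correlation as based on n = 522 student-level pairs is an assumption, since the number of pairs behind each coefficient is not stated.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt(n - 2) / sqrt(1 - r^2), with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Invented data: message board posts and knowledge scores for five students.
posts = [0, 5, 8, 12, 20]
scores = [2900, 3100, 3600, 3500, 4200]
print(pearson_r(posts, scores))

# Assumed n = 522 pairs (not stated in the text) for two coefficients in Table 3.
print(t_statistic(0.18, 522))  # team score vs. individual score
print(t_statistic(0.07, 522))  # team score vs. EHR entries
```

Under that assumption, r = 0.18 gives a t statistic of roughly 4.2 and r = 0.07 gives roughly 1.6, which is in line with the first correlation being reported as significant and the second not.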

Table 3.

Correlations Between Individual Knowledge Score, Team Knowledge Score, Case Activity Measures, and Team Size in a Study of a Web-Based Case System to Train and Assess Interprofessional Virtual Health Care Teams, 2012 to 2013

| Measure | Individual score | Individual no. of logins | Individual no. of EHR entries | Individual no. of message board posts/replies | Individual no. of views of message board posts | Team score | Team size |
|---|---|---|---|---|---|---|---|
| Individual score | – | | | | | | |
| Individual no. of logins | 0.32^a | – | | | | | |
| Individual no. of EHR entries | 0.33^a | 0.32^a | – | | | | |
| Individual no. of message board posts/replies | 0.39^a | 0.50^a | 0.28^a | – | | | |
| Individual no. of views of message board posts | 0.35^a | 0.46^a | 0.25^a | 0.80^a | – | | |
| Team score | 0.18^a | 0.23^a | 0.07 | 0.34^a | 0.27^a | – | |
| Team size | −0.03 | −0.01 | −0.10 | −0.12 | −0.03 | 0.02 | – |

Abbreviation: EHR indicates electronic health record.

^a Denotes significance at P < .001.

Discussion

Our Web-based case system constructed to train and assess virtual health care teams successfully engaged a large number of students across four professions in a longitudinal team exercise. Using an interface resembling an EHR with an appended message board, students answered case-related knowledge questions individually and worked asynchronously to answer the same questions as a team. This approach overcame the logistical difficulties (e.g., scheduling and space) inherent in providing a longitudinal IPE team experience20,21 and provided extensive, detailed objective assessment data of both individual and team performance. As such, this approach is a novel and effective method to teach and assess virtual teams engaged in interprofessional practice.

The data from our case system demonstrate that the students functioned and could be assessed as virtual teams.6 The case activity measures showed that all students were active participants in team activities, yet they infrequently met face-to-face. Although faculty felt that a face-to-face orientation was important and expanded that orientation by adding a team charter exercise between semesters, no difference was observed in team scores and case activity measures before and after this change. Whether the face-to-face orientation could be performed virtually deserves further study.

More important, higher levels of team collaboration were correlated with better performance. Case activity measures of collaboration, such as the number of message board posts/replies and the number of views of message board posts, were correlated with both team and individual scores, while noncollaborative measures of case activity, such as number of EHR entries and team size, were not correlated with team score. These findings suggest that team score could be a proxy measure for case activity and, potentially, virtual team performance. High-performing virtual teams outside of health care have clear channels of communication and share information equally,3 but some virtual team members can be detached and participate less frequently than others.5 In our study, we observed both phenomena: Teams with a more active exchange of information had higher scores, and those students and teams that were less engaged had lower scores. In addition, mean team scores exceeded mean individual scores by a considerable margin, and the variance of team scores was less than the variance of individual scores. These findings demonstrate the benefit of this collaborative approach to both student learning and assessment by faculty.

With the emergence of competency-based education,29 new assessment tools are needed to evaluate interprofessional practice and the use of information technology. The outputs from our case system potentially provide a new approach to student assessment in both interprofessional practice and the use of EHRs. Further efforts to correlate behaviors with scalable outcomes (e.g., individual or team score, number of views of message board posts) could add a powerful new tool for educators. Individuals with lower scores may need more coaching to learn the collaborative attitudes needed in modern health care. Although linking performance more closely with a student’s grade may improve her or his engagement, some students may lack the internal motivation to seek out collaboration independently. How poor performance as quantified by the outputs from our case system is linked to other deficiencies in a student’s academic record, application data, and practice outcomes should be evaluated in the future. Importantly, the finding that social work students scored lower as individuals likely relates to the biomedical focus of many of the questions in the case system; content then must be adjusted to the type and level of the students participating. For each profession, setting minimum passing thresholds for knowledge and case activity might represent a reasonable pathway to defining individual competency.

Finally, outputs from our case system created a rich database for the future study of virtual teams. For example, different approaches to training virtual teams (i.e., varying the intensity and duration of training and altering the approach to feedback) should be studied to examine the content and instructional methods needed to appropriately train team members. In addition, studying the leadership and teamwork patterns within the teams could identify how virtual health care teams can best approach work and suggest avenues to support implementation research in the practice environment.

Our study has several limitations. First, we conducted it at one institution, which restricts the generalizability of our findings, and reproducibility of our case system may be constrained by the cost of software development. Second, some of the teams’ work may have taken place outside of the Web-based case system and therefore was not measured in the data generated by the system. Next, only four professions were represented on the interprofessional teams. Students from other disciplines can be added to the case system as long as these additions are balanced with appropriate representation of the professions in case content and questions. Finally, the study represents a six-week exposure in an uncontrolled environment. Future studies, such as those in which trained observers evaluate the impact of training on actual interprofessional practice in the clinical environment, are needed to assess how performance in a training environment links to the behaviors of individuals and teams in the clinical setting.

Conclusions

A Web-based case system provided an effective platform to teach and assess the competencies needed for virtual teams. Students worked together asynchronously to make care decisions for an older adult. The experience engaged 522 students and overcame many of the barriers inherent in IPE.20,21 In addition, the case system provided data about student and team performance, which demonstrated correlations between case system activity measures and knowledge scores. These outputs could be used to assess individual competency in interprofessional practice and the use of information technology. Expanding and refining this approach to education may help to train students and practitioners to function effectively on virtual teams in many venues and may help them to overcome the practice challenges facing health care teams.

Acknowledgments:

The authors wish to thank Jessica Evans of Virginia Commonwealth University School of Medicine for her assistance in data collection and management and Chris Stephens and John Priestley of Virginia Commonwealth University School of Medicine for their role in the development of the Web-based case system.

Funding/Support:

This project was funded by a grant from the Donald W. Reynolds Foundation. The authors also receive support from the Josiah H. Macy, Jr. Foundation (A.W.D.), a grant award from the National Institutes of Health (UL1TR000058) (P.E.M., D.D.), and a grant award from the Health Resources Services Administration/Department of Health and Human Services (UD7HP26044A0) (A.W.D., P.A.B., P.E.M., M.F., A.C., P.P.). The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of the Donald W. Reynolds Foundation, the Josiah H. Macy, Jr. Foundation, the National Center for Advancing Translational Sciences, the National Institutes of Health, or the Health Resources Services Administration/Department of Health and Human Services. The funders had no role in the design, conduct, data analysis, or manuscript preparation of this study.

Footnotes

Other disclosures: Three authors (A.W.D., P.A.B., J.B.) own a pending patent related to the Web-based case system and part of a company to which their university has licensed the underlying technology. The authors deny any other existing conflicts of interest.

Ethical approval: The institutional review board of Virginia Commonwealth University granted ethical approval on October 8, 2012 (HM20001719).

Previous presentations: Some of the findings reported here were presented at the Collaborating Across Borders–IV conference (June 2013, Vancouver, British Columbia, Canada), the annual meeting of the Donald W. Reynolds Foundation (October 2013, San Diego, California), the American Geriatrics Society Annual Meeting (May 2013, Grapevine, Texas), and the All Together Better Health 7 conference (June 2014, Pittsburgh, Pennsylvania).

Contributor Information

Alan W. Dow, assistant vice president of health sciences for interprofessional education and collaborative care and professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Peter A. Boling, chair, Division of Geriatrics, and professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Kelly S. Lockeman, assistant professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Paul E. Mazmanian, associate dean of assessment and evaluation studies and professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Moshe Feldman, assistant professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Deborah DiazGranados, assistant professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Joel Browning, director of academic information systems, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Antoinette Coe, graduate student, Virginia Commonwealth University School of Pharmacy, Richmond, Virginia.

Rachel Selby-Penczak, assistant professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Sarah Hobgood, assistant professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Linda Abbey, associate professor of medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Pamela Parsons, associate professor of nursing and medicine, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Jeffrey Delafuente, associate dean of academic affairs and professor of pharmacy, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Suzanne F. Taylor, instructor of occupational therapy, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

References

- 1. Greiner AC, Knebel E, eds. Health Professions Education: A Bridge to Quality. Washington, DC: National Academies Press; 2003.

- 2. Blumenthal D. The future of quality measurement and management in a transforming health care system. JAMA. 1997;278:1622–1625.

- 3. Driskell JE, Radtke PH, Salas E. Virtual teams: Effects of technological mediation on team performance. Group Dyn. 2003;7:297–323.

- 4. Taha LH, Caldwell BS. Social isolation and integration in electronic environments. Behav Inf Technol. 1993;12:276–283.

- 5. Parks CD, Sanna LJ. Group Performance and Interaction. Boulder, Colo: Westview Press; 1999.

- 6. Levi D. Group Dynamics for Teams. 2nd ed. Thousand Oaks, Calif: Sage; 2007.

- 7. Roch SG, Ayman R. Group decision making and perceived decision success: The role of communication medium. Group Dyn. 2005;9:15–31.

- 8. Institute of Medicine. Interprofessional Education for Collaboration: Learning How to Improve Health From Interprofessional Models Across the Continuum of Education to Practice. Washington, DC: National Academies Press; 2013.

- 9. Reeves S, Goldman J, Gilbert J, et al. A scoping review to improve conceptual clarity of interprofessional interventions. J Interprof Care. 2011;25:167–174.

- 10. Dow AW, DiazGranados D, Mazmanian PE, Retchin SM. Applying organizational science to health care: A framework for collaborative practice. Acad Med. 2013;88:952–957.

- 11. Retchin SM. A conceptual framework for interprofessional and co-managed care. Acad Med. 2008;83:929–933.

- 12. Reeves S, Lewin S, Espin S, Zwarenstein M. Interprofessional Teamwork for Health and Social Care. Ames, Iowa: Wiley-Blackwell; 2010.

- 13. Cook DA, Hatala R, Brydges R, et al. Technology-enhanced simulation for health professions education: A systematic review and meta-analysis. JAMA. 2011;306:978–988.

- 14. Ellaway RH, Graves L, Greene PS. Medical education in an electronic health record-mediated world. Med Teach. 2013;35:282–286.

- 15. Chaudhry B, Wang J, Wu S, et al. Systematic review: Impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:742–752.

- 16. Goveia J, Van Stiphout F, Cheung Z, et al. Educational interventions to improve the meaningful use of electronic health records: A review of the literature: BEME guide no. 29. Med Teach. 2013;35:e1551–e1560.

- 17. March CA, Steiger D, Scholl G, Mohan V, Hersh WR, Gold JA. Use of simulation to assess electronic health record safety in the intensive care unit: A pilot study. BMJ Open. 2013;3:e002549.

- 18. Milano CE, Hardman JA, Plesiu A, Rdesinski RE, Biagioli FE. Simulated electronic health record (Sim-EHR) curriculum: Teaching EHR skills and use of the EHR for disease management and prevention. Acad Med. 2014;89:399–403.

- 19. Interprofessional Education Collaborative Expert Panel. Core Competencies for Interprofessional Collaborative Practice: Report of an Expert Panel. Washington, DC: Interprofessional Education Collaborative; 2011.

- 20. Jones KM, Blumenthal DK, Burke JM, et al. Interprofessional education in introductory pharmacy practice experiences at US colleges and schools of pharmacy. Am J Pharm Educ. 2012;76:80.

- 21. Lawlis TR, Anson J, Greenfield D. Barriers and enablers that influence sustainable interprofessional education: A literature review. J Interprof Care. 2014;28:305–310.

- 22. Parmelee D, Michaelsen LK, Cook S, Hudes PD. Team-based learning: A practical guide: AMEE guide no. 65. Med Teach. 2012;34:e275–e287.

- 23. Fatmi M, Hartling L, Hillier T, Campbell S, Oswald AE. The effectiveness of team-based learning on learning outcomes in health professions education: BEME guide no. 30. Med Teach. 2013;35:e1608–e1624.

- 24. Abu-Rish E, Kim S, Choe L, et al. Current trends in interprofessional education of health sciences students: A literature review. J Interprof Care. 2012;26:444–451.

- 25. Reeves S, Perrier L, Goldman J, Freeth D, Zwarenstein M. Interprofessional education: Effects on professional practice and healthcare outcomes (update). Cochrane Database Syst Rev. 2013;3:CD002213.

- 26. Leipzig RM, Granville L, Simpson D, Anderson MB, Sauvigne K, Soriano RP. Keeping granny safe on July 1: A consensus on minimum geriatrics competencies for graduating medical students. Acad Med. 2009;84:604–610.

- 27. McAlpine M, Hesketh I. Multiple response questions: Allowing for chance in authentic assessments. Paper presented at: 7th International CAA Conference; 2003; Loughborough, UK.

- 28. Moore DE Jr, Green JS, Gallis HA. Achieving desired results and improved outcomes: Integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29:1–15.

- 29. Holmboe ES, Sherbino J, Long DM, Swing SR, Frank JR. The role of assessment in competency-based medical education. Med Teach. 2010;32:676–682.