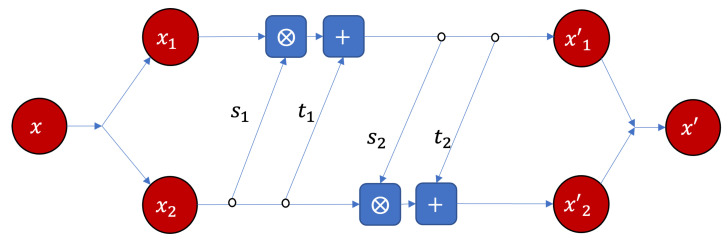

Figure 2.

The RealNVP neural network implementation of the basic module of the bijector , where and are all feed-forward neural networks with three layers, 64 hidden neurons, and the ReLU activation function. s and s share parameters, respectively. ⨂ and + represent element-wise product and addition, respectively. and .
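As a rough illustration of the kind of module the caption describes, the following is a minimal NumPy sketch of a standard RealNVP affine coupling layer. It is not the authors' implementation: the function names (`init_mlp`, `mlp`, `coupling_forward`, `coupling_inverse`), the half-and-half input split, the weight initialization, and the reading of "three layers" as three linear layers with two hidden layers of width 64 are all assumptions made for the example. The exact wiring and parameter sharing shown in the figure may differ.

```python
import numpy as np


def init_mlp(rng, d_in, d_out, hidden=64):
    """Hypothetical helper: three linear layers with two hidden layers of width 64."""
    sizes = [d_in, hidden, hidden, d_out]
    return [(rng.normal(0.0, 0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp(params, x):
    """Feed-forward pass with ReLU after every layer except the last."""
    for W, b in params[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = params[-1]
    return x @ W + b


def coupling_forward(s_params, t_params, x):
    """Affine coupling: y1 = x1, y2 = x2 * exp(s(x1)) + t(x1)."""
    d = x.shape[-1] // 2              # assumed split: first half conditions the second
    x1, x2 = x[..., :d], x[..., d:]
    s = mlp(s_params, x1)             # scale network
    t = mlp(t_params, x1)             # translation network
    y2 = x2 * np.exp(s) + t           # element-wise product and addition
    log_det = s.sum(axis=-1)          # log |det Jacobian| of the transform
    return np.concatenate([x1, y2], axis=-1), log_det


def coupling_inverse(s_params, t_params, y):
    """Exact inverse: x1 = y1, x2 = (y2 - t(y1)) * exp(-s(y1))."""
    d = y.shape[-1] // 2
    y1, y2 = y[..., :d], y[..., d:]
    s = mlp(s_params, y1)
    t = mlp(t_params, y1)
    x2 = (y2 - t) * np.exp(-s)
    return np.concatenate([y1, x2], axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    s_params = init_mlp(rng, 2, 2)
    t_params = init_mlp(rng, 2, 2)
    x = rng.normal(size=(5, 4))
    y, log_det = coupling_forward(s_params, t_params, x)
    x_rec = coupling_inverse(s_params, t_params, y)
    print(np.allclose(x, x_rec))      # round trip recovers the input
```

Because the first half of the input passes through unchanged, the inverse can recompute the same scale and translation values from it, which is why the layer is invertible without inverting either network.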