Abstract

Objectives

To describe perceptions of providing and using rapid evidence to support decision making by two national bodies (one focused on public health policy and one on front-line clinical practice) during the COVID-19 pandemic.

Design

Descriptive qualitative study (March–August 2020): 25 semistructured interviews were conducted, transcribed verbatim and thematically analysed.

Setting

Data were obtained as part of an evaluation of two Irish national projects: the Irish COVID-19 Evidence for General Practitioners project (General Practice (GP) project), which provided relevant evidence to address clinical questions posed by GPs; and the COVID-19 Evidence Synthesis Team (Health Policy project), which produced rapid evidence products at the request of the National Public Health Emergency Team.

Participants

Purposive sample of 14 evidence providers (EPs: generated and disseminated rapid evidence) and 11 service users (SUs: GPs and policy makers, who used the evidence).

Main outcome measures

Participant perceptions.

Results

The Health Policy project comprised 27 EPs, who produced 30 reports across 1432 person-work-days. The GP project comprised 10 core members from 3 organisations, who met 49 times and posted evidence-based answers to 126 questions. Four themes were generated. ‘The Work’ highlighted that a structured but flexible organisational approach to producing evidence was essential. Ensuring the quality of evidence products was challenging, particularly when evidence was absent or of poor quality. ‘The Use’ highlighted that rapid evidence products were considered invaluable to decision making. The trust and credibility of EPs were key; however, SUs highlighted communication difficulties (eg, website functionality). ‘The Team’ emphasised that a highly skilled team, working collaboratively, is essential to meet the substantial workload demands and tight turnaround times. ‘The Future’ highlighted that investing in resources, planning and embedding evidence synthesis support is crucial to national emergency preparedness.

Conclusions

Rapid evidence products were considered invaluable to decision making. The credibility of EPs, a close relationship with SUs and having a highly skilled and adaptable team to meet the workload demands were identified as key strengths that optimised the utilisation of rapid evidence.

Ethics approval

Ethical approval was obtained from the National Research Ethics Committee for COVID-19-related Research, Ireland.

Keywords: QUALITATIVE RESEARCH, PRIMARY CARE, Public health, COVID-19, Evidence-Based Practice

WHAT IS ALREADY KNOWN ON THIS TOPIC

The response to COVID-19 has highlighted a lack of emergency health crisis preparedness, and governments, health institutions, and clinicians have, at times, advocated unproven management strategies inconsistent with evidence-based reasoning.

WHAT THIS STUDY ADDS

The perceived credibility of the evidence providers (those generating and disseminating rapid evidence) is critical to fostering trust among service users (policy makers and front-line clinicians).

Close working relationships between evidence providers and service users are crucial.

Having highly skilled and flexible staff who are ready to pivot to meet demand is critical to the production of rapid evidence.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE AND/OR POLICY

Rapid evidence products can provide invaluable support at the national policy-making level and to direct patient care, where urgent responses are required.

Investing resources now to plan for and embed rapid evidence synthesis support is crucial to national preparedness for future outbreak responses and other emergency situations.

Background

SARS-CoV-2, the virus that causes COVID-19, spreads rapidly, causing millions of cases and deaths globally. Countries implemented numerous public health measures, including social distancing and lockdowns, to control its spread and impact, and health services made rapid adjustments to delivery.1

Evidence-based decision making (taking policy decisions based on the best available evidence through systematic and transparent processes)2 and evidence-based medicine (combining clinical expertise, patient preferences and the best available evidence when making decisions for an individual)3 are central to optimising public health and clinical care. Evidence synthesis (the review of existing research using systematic and explicit methods in order to clarify the evidence base)4 is the cornerstone of evidence-based healthcare decisions at both the policy and individual patient levels. However, the relationship between the provision of research evidence and subsequent decision making is complex. For example, despite being aware of their potential value, decision makers infrequently use evidence syntheses, such as systematic reviews, in the decision-making process.5 6 The response to COVID-19 has highlighted a lack of emergency health crisis preparedness,7 and governments, health institutions and clinicians have, at times, advocated unproven management strategies inconsistent with evidence-based reasoning.8 In a pandemic context, factors such as scientific uncertainty and the lack of clear and rapidly available evidence may affect the use of evidence.9 To provide timely evidence to support decision making, many organisations have moved from traditional systematic reviews (which can take an average of 15 months to complete)10 to rapid evidence products, such as rapid reviews (an approach in which systematic review processes are streamlined or accelerated to meet decision makers’ needs in a shorter time frame)11 or rapid response briefs, which provide key evidence in emergency situations.12 Research in non-emergency conditions indicates that decision makers expect the validity of rapid reviews to approximate that of systematic reviews.13

Investing in public and primary care health emergency preparedness strategies, so that the capacities and capabilities for evidence-informed decision making are developed ahead of the next emergency, is critical.2 9 Furthermore, increasing emphasis is being placed on the need to change the evidence ecosystem so that it is more useful for decision making.14 15

This study sought to contribute to the current literature by describing and evaluating the provision of rapid evidence synthesis through two national Irish projects that were developed to provide evidence syntheses for policy makers and evidence-based clinical recommendations for front-line general practitioners (GPs) during the COVID-19 global pandemic. The case studies reflect the spectrum between evidence synthesis and evidence-based clinical recommendations, both of which were needed to support public health policy and front-line clinical practice.

Methods

Setting and context

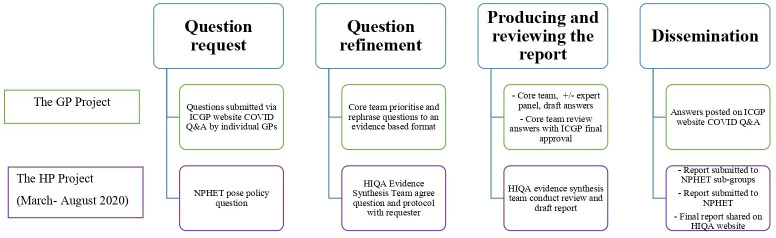

Data were obtained as part of an evaluation of two national projects that provided rapid evidence support from March to August 2020, during the ‘first wave’ of the pandemic. The projects operated as separate, independent entities (see table 1 and figure 1).

Table 1.

Overview of included national projects

| Characteristics | GP project | HP project |
|---|---|---|
| Full title | Irish COVID-19 Evidence for General Practitioners | COVID-19 Evidence Synthesis Team |
| New or existing team | New team, established April 2020, composed largely of staff from universities or the ICGP who volunteered their time | Existing team; employees of the Health Technology Assessment (HTA) team and a related Health Research Board-funded project were redeployed to the COVID-19 Evidence Synthesis Team full time |
| Organisations involved | | |
| Predominant rapid evidence products | Rapid response (evaluate the literature to present the end user with an answer based on the best available evidence)16 | Rapid review (perform a synthesis to provide the end user with a conclusive answer about the direction, and possibly the strength, of the evidence).16 These followed a standardised protocol developed a priori,39 in line with Cochrane rapid review methodology guidance.40 Abbreviated steps included limiting the scope, limiting database searching, single screening of titles and abstracts and generally not conducting meta-analysis |

GP, General Practice; HP, Health Policy; ICGP, Irish College of General Practitioners.

Figure 1.

Overview of the project production processes. GP, General Practice; HIQA, Health Information and Quality Authority; HP, Health Policy; ICGP, Irish College of General Practitioners; NPHET, National Public Health Emergency Team; Q&A, questions and answers.

The Irish COVID-19 Evidence for GP project provided relevant evidence (predominantly rapid responses)16 to address clinical questions posed by GPs through an online Questions and Answers (Q&A) service (figure 1). The COVID-19 Evidence Synthesis Team (Health Policy (HP) project) produced rapid evidence products (predominantly rapid reviews)16 on various public health topics at the request of the National Public Health Emergency Team (NPHET) (figure 1).

Study design

In this descriptive qualitative study,17 data were collected using semistructured interviews with purposively selected key informants. This study was reported in accordance with the standards for reporting qualitative research.18

Recruitment and sampling

Key informants from both projects were categorised as either evidence providers (EPs; those who gathered, synthesised and disseminated the required evidence) or service users (SUs; those who requested, received and used the evidence). Participants were purposively selected using a sampling framework designed to include relevant stakeholders, for example, policy makers, evidence synthesis experts and clinicians. EPs and SUs were invited via an email distributed by a project gatekeeper. Participants who consented were interviewed by one of two interviewers (CK and MM, both female research assistants with previous qualitative analysis experience) who had no previous connection with either project.

Data collection

Semistructured qualitative interviews were conducted remotely (18 June 2020–8 July 2020) by telephone or video conferencing. Interviews lasted 40.5 min on average (range 15.25–90 min) and were audiorecorded, transcribed verbatim and imported into NVivo V.10.

Interview guides (online supplemental appendix 1) were developed with reference to the knowledge mobilisation framework19 and process evaluation guidance20 to inform the breadth of topics explored; however, these were not used as analytical devices.20 Minor changes were made to the topic guide to reflect the details of the two projects and the roles of EPs versus SUs. Participants were pseudonymised according to their associated project, their role (EP or SU) and the chronological order of interviews.


For context, quantitative descriptive data were collated for the HP project over a 25-week period (9 March 2020–28 August 2020) and for the GP project over a 21-week period (6 April 2020–3 August 2020).

Data analysis

Thematic analysis21 of the data was conducted by six authors: two independent researchers (CK and MM), two researchers embedded in the HP team (BC and PB) and two researchers embedded in the GP team (MEK and LH), all with experience in evidence synthesis and in qualitative data collection and analysis. This combination of researchers captured both emic (insider) and etic (outsider) perspectives and allowed for a rich analysis and thick description of the data.22 For example, having researchers embedded in each project ensured that the research team was informed about contextual elements, such as structures and relevant national policy developments, which enabled a deeper understanding of the nuances of the transcripts.

CK and MM independently undertook initial inductive coding after reading and rereading the transcripts, generating 114 codes. This initial set of codes was revised collaboratively through analytical discussions with the other four authors, based on their in-depth reading of the transcripts, and the coding of transcripts was adjusted as appropriate. Categories, subthemes and themes were developed inductively and collaboratively via nine 1-hour online meetings and two half-day online workshops. The themes were further refined by consensus among all six authors.

Patient and public involvement

The population affected by this research comprises those involved in producing and using evidence synthesis in decision making. Stakeholders from these groups were involved in the design, conduct, reporting and dissemination of this research.

Results

Characteristics of projects and participants

The HP team comprised 27 EPs. The GP EPs comprised 10 core members supported by a wider expert panel of 50 (table 2). There was substantial evidence production activity across the study period. The HP team produced 30 reports and 21 updates in response to questions posed by policy makers (table 2), while 360 questions were submitted to the GP service. Of these, 126 answers were posted on the website, as multiple questions on the same topic were integrated into one response and questions dealing with specific patient cases or local operational issues were not answered (table 2). The GP team met 49 times, with attendance ranging from 4 to 8 people (mean 6). The Q&A homepage was served 21 600 times, and the median GP rating of answer helpfulness was 4.5 (on a scale from 1 (poor) to 5 (excellent)). The HP team recorded a total of 1432.4 person work days in the study period, an average of 2.3 days per staff member per week (table 2).

Table 2.

Characteristics of projects

| Characteristics | GP project | HP project |
|---|---|---|
| No of team members | Core team: 10 (4 ICGP, 4 AUDGPI, 2 PCCTNI); expert panel: 50 | 27 (20 HTA staff, 4 other HIQA directorate staff, 3 RCSI collaborators) |
| Work time | Not recorded* | 1432.4 days; an average of 2.3 days per staff member per week |
| Team meetings | Number of meetings: 49. Meetings were daily (6 April 2020 to 3 May 2020), then two to three per week (until 28 June 2020), then weekly. Attendance: range 4–8 people; mean 6 | Number of meetings: not recorded. Meetings occurred daily (at a minimum) between small project teams. Attendance varied depending on the size of individual projects and tasks |
| No of products | Questions submitted by GPs: 360; answers posted to website†: 126; answers with updates posted: 42; podcasts: 3 | 30 publications; 21 updates of these publications |
| Website views | The ICGP Q&A homepage was served a total of 21 600 times during the period April–August inclusive.‡ Times podcasts were listened to: podcast 1, 70; podcast 2, 86; podcast 3, 104 | HIQA unique webpage views: 39 788 |
| GP rating of helpfulness of answers | Answers rated: 65; median rating: 4.5 (range 1–5, 5 being the best) | Not applicable |
| Social media engagement on average§ (Instagram posts; Instagram stories) | Not available | Reach 729/engagement 44; engagement rate 4%/impressions 4357; engagement 82/impressions 2075; reach 384/impressions 395; impressions 199 |

*As the GP project consisted mostly of staff volunteering their time, formal records of time spent were not collated.

†In some cases, multiple questions on the same topic were integrated into one question and answer. Questions dealing with specific patient cases or local operational issues were not answered.

‡A summary produced from the server log associated with the Q&A section of the ICGP website gave an indication of website activity. Note that a server log is a log file created and maintained by a server that lists its activities; it does not collect user-specific information, so the counts reported are not unique. This summary covers the period April–August inclusive. The term ‘served’ refers to activity of all kinds; it does not mean ‘viewed’ or ‘downloaded’.

§The engagement rate quantifies total engagement relative to the total number of account holders, but each platform calculates this rate differently, so rates are not comparable across platforms. Impressions are the number of times content is displayed, regardless of whether it was clicked.

AUDGPI, the Academic Departments of General Practice in Ireland; GP, General Practice project; HIQA, Health Information and Quality Authority; HP, Health Policy project; HTA, Health Technology Assessment; ICGP, Irish College of General Practitioners; PCCTNI, Primary Care Clinical Trials Network in Ireland; Q&A, Questions and Answers; RCSI, Royal College of Surgeons in Ireland.

A total of 25 interviews were conducted with 14 EPs and 11 SUs (table 3).

Table 3.

Qualitative sample characteristics (n=25)

| Characteristics | GP project | HP project | Total |
|---|---|---|---|
| Role | | | |
| Evidence providers | 7 | 7 | 14 |
| Service users | 5 | 6 | 11 |
| Gender | | | |
| Male | 6 | 5 | 11 |
| Female | 6 | 8 | 14 |

GP, General Practice; HP, Health Policy.

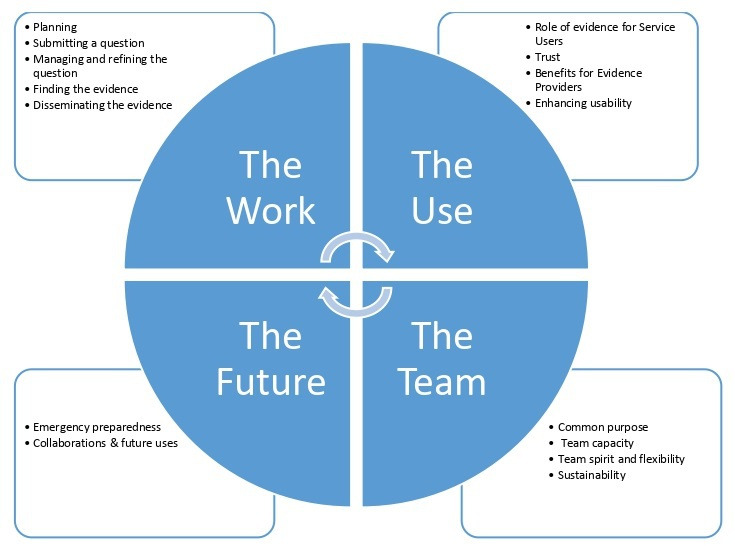

In total, 114 codes were generated across the full dataset, which were categorised into four themes and 16 subthemes (figure 2). ‘The Work’ was the largest theme for both EPs and SUs on both projects, followed by ‘The Team’, ‘The Use’ and ‘The Future’. Within each theme, certain subthemes were more pertinent to one group than another. For example, within ‘The Work’, subthemes such as ‘finding the evidence’ represented the work of the EPs more than that of the SUs.

Figure 2.

Overview of themes.

The Work: ‘how we can make this work?’ (GP EP 01)

This theme describes participants’ perceptions of the work conducted by both services and the factors that affected it. Participants identified five steps, presented as subthemes. While the broader aspects of the work were similar for the GP and HP services, some subtle but distinct differences were highlighted.

Planning

EPs strongly agreed that having a structured approach to evidence production was an essential part of the process: ‘it was all about the planning at the beginning…’ (GP EP 04). The HP team adapted their existing HTA processes to meet the needs presented by the COVID-19 pandemic, focusing on conducting rapid reviews rather than traditional systematic reviews and full health technology assessments. By contrast, the GP service was a completely new collaboration that required terms of reference and processes to be established. It was essential, however, that these structured approaches were flexible enough to adapt to the changing demands of this dynamic situation and ‘…evolve as time went on’ (HP EP 04).

Submitting a question

GP questions were submitted directly via the ICGP website, which was seen as ‘very simple… (and) user friendly’ (GP SU 01). By contrast, HP SUs emailed a specified contact person, which they considered very important in terms of accessibility: ‘So I think that accessibility has been probably the highlight in many respects. So it’s not like you’re submitting a question online that it disappears into the ether for four or six weeks and then you get an answer.’ (HP SU 02).

Managing and refining the question

The process for managing a question in both projects is summarised in table 4. In the GP project, not all questions posed were answered and the core team themselves prioritised questions ‘…based on clinical importance and also the frequency that the question was being asked…and relevancy’ (GP EP 01).

Table 4.

Managing and refining the question across projects

| Characteristics | GP project | HP project |
|---|---|---|
| Setting the question | ‘…gets uploaded to a database, there is an administrator and one backup person within the college then who has access to that and they download it…, [it] is sent to the core group administrator, who disseminates it to the core group and then at our meeting we review all the questions that have come in…the question is assigned to one of the expert panel…’ (GP EP 04) | ‘…they would engage in advance and clarify exactly what we wanted to figure out. And often that process was particularly helpful because I suppose it forces you to think a little bit more about the question and make sure that you have clarified in your own mind what exactly you’re trying to get out of it.’ (HP SU 02) |
| Refining a question | Questions were sometimes rephrased by the core team, for example, where similar questions were merged: ‘…sometimes taking part of the question and rephrasing it so that it could be understood or could apply to more people’ (GP EP 04) | ‘…what is the context of this, what are they using it for, because that then informs how you would write up the review’ (HP EP 07) |

In contrast, the HP process involved significant engagement between EPs and SUs to refine the question. Both EPs and SUs saw this engagement as an important step in ‘…making sure we have the right question’ (HP EP 02).

Finding the evidence

EPs felt very accountable for ensuring quality and rigour in the search for reliable evidence. The GP core team addressed this by creating a standardised template for EPs to complete when drafting an answer. However, challenges remained in dealing with conflicting, absent or poor-quality evidence; these were addressed through discussion within the core team and by seeking advice from the expert panel.

EPs expressed concerns regarding the quantity and quality of the evidence: ‘…I think just the nature of the pandemic was that researchers were in a rush to try and get things out…So all of the evidence that we were looking at wasn’t of particularly good quality’ (HP EP 03). Furthermore, there was immense pressure to produce evidence responses quickly, which resulted in modifications to the usual processes, for example, not doing certain steps in duplicate. For some of the team, these modifications caused anxiety: ‘…I suppose there'd be times when I'd be nearly having panic attacks but it was kind of anxiety inducing to be like I'm not 100%, I'm not now 95% confident that we have everything.’ (HP EP 05). However, the structured approach allowed EPs to stand over their evidence products.

Disseminating the evidence

SUs were generally positive about the format of the evidence products: ‘The answers are really easy… very well written… written by people who are clinicians themselves so therefore the answers are very pertinent and relevant…’ (GP SU 02). Both services provided a summary answer followed by a detailed answer, which SUs appreciated.

The Use: ‘it’s allowed us to make decisions based on evidence’ (HP SU 02)

This theme describes participants’ perspectives of the use and value of the evidence services and suggestions for enhancing their usability.

The role of evidence for SUs

EPs identified the need for evidence in terms of supporting SUs’ care delivery and policy making: ‘Our key role or our key priority is to create the evidence for the people who are making the decisions’ (HP EP 02). There was a consistent view from SUs that the services provided were an ‘…indispensable part of the pandemic response…[that] definitely led to improved evidence-based decision-making…’ (HP SU 01). The evidence supported the delivery of care by providing SUs with information that they either could not find, or did not have time to search for themselves. This armed GPs ‘…to go back to my patients and say well this is what I know right now.’ (GP SU 1). The HP reports were considered extremely helpful to decision making in clearly highlighting the evidence available and what the evidence gaps were, enabling SUs to recognise when they needed to ‘come up with something based on the experts in the room.’ (HP SU 01).

Trust

SUs consistently saw the EPs as a trusted and reliable source. SUs were confident in the high standard of the work: ‘I mean they’re one of the best people in the country doing this…So I suppose it was providing a trust mechanism to get us up-to-date information.’ (HP SU 03). In particular, GPs took confidence from knowing that the evidence was ‘collated by one of your own who knows about Irish General Practice’ (GP EP 7).

SUs recognised that the evidence could be of poor quality, and they trusted the EPs to identify this: ‘The evidence synthesis were very good in teasing out where the evidence was and what it was saying, but also then teasing out what the evidence wasn’t saying and identifying those areas where there wasn’t a huge amount of evidence in the first place…’ (HP SU 02). SUs of both services noted that the evidence products were updated regularly, which further built trust.

For the HP service, most SUs felt that making reports publicly available online contributed to transparency. However, this raised some concern among EPs, who noted the difficulty of ‘managing’ (HP EP 07) controversial subjects while ‘not hiding the work’ (HP EP 07). Much of this management related to the accuracy of media reporting, and of how the reports were discussed by political figures and public servants: ‘we did one on disease transmission in children and I think what actually ended up happening was that…it sort of blew up everywhere…quite different to what the actual original finding was’ (HP EP 02).

Benefits for EPs

The EPs reported a sense of satisfaction from the work, particularly when the work influenced policy: ‘I’ve been in academia and science a long time and I write impact reports and …you’re lucky if you get something through reports down the line that you can maybe link back like a thin thread back to your work. This is just so transparent, it’s just, it is incredibly motivating and satisfying and it makes you feel very worthwhile.’ (HP EP 07)

Being involved in the evidence synthesis process also had an educational benefit and provided clinical support: ‘It was very helpful to me as a GP from, in my practice and also so I, I volunteered to work in the COVID-19 hubs and I felt that being really aware of the evidence and the risk and the stuff around PPE was very helpful for me and I think also I was able to reassure my practice colleagues a lot…’ (GP EP 1)

Enhancing usability

With respect to the GP service, there was good concordance between SUs and EPs regarding ways to enhance the service. The predominant criticism was that the website functionality fell short of SUs’ needs, and EPs agreed: ‘I do think then the biggest one to me would be the search ability of the site. That it took us a while to get the tag lines right and they’re still pretty clunky’ (GP EP 2). A further criticism related to poor communication with SUs about the status of their submitted questions: ‘I was searching for maybe a week or two afterwards. I did see one or two answers that kind of pertained to it but I didn’t get a direct answer to my question but then again my other problem is they may have answered but I mightn’t have seen it’ (GP SU 2). EPs again concurred with this.

With respect to the HP service, there was somewhat less agreement between SUs and EPs. SUs suggested that evidence reports be disseminated more promptly, and a small number suggested improvements to the format and consistency of the reports. In contrast, many EPs felt the timelines were too tight. They suggested spending more time narrowing the scope of research questions and making the reports shorter, and felt that more feedback from decision makers on how the reports were used would help to inform changes to the reports. Individual suggestions included allowing more time in the process for narrative analysis and write-up.

The Team: ‘The team that makes it. It really is’ (HP EP 07)

This theme describes EPs’ perceptions of their lived experience of working on the projects, and SUs’ perceptions of their interactions with the EP teams. EPs from both services unanimously agreed that a strong sense of teamwork, positive collaborations, collegiality, support and flexibility were critical to the success of the services.

Common purpose

Team members’ familiarity with each other before they started working on COVID-19 projects varied. For some, existing relationships were capitalised on; for others, it was a new venture that represented ‘a very steep learning curve’ (HP EP 07). Difficulties were ameliorated by all team members, whether new or previously acquainted, having ‘a real sense of contribution and purpose’ (GP EP 1), a ‘great sense of mission’ (HP EP 08) and a commitment to ‘a common cause’ (GP EP 2). Team members were proud to be part of the national response.

Team capacity

EPs consistently and strongly voiced the difficulties of managing the workload. As the GP service was largely voluntary, ‘there was a huge amount of goodwill’ involved in delivering the service (GP EP 2). The workload demands greatly affected team members’ lives: ‘Oh it was immensely difficult…it’s been really, really tough for us like, and I know I'm not the only one like, working late nights, weekends, not getting much sleep no it’s definitely gotten better but initially kind of, the kind of March/April early May was just terrible I mean it was just, it was really stressful’ (HP EP 05). In spite of these demands, team members were highly committed to delivering and meeting deadlines. SUs were very cognisant of the workload and the pressure EPs were under to produce rapid evidence reports: ‘I mean there was one weekend we were looking for a particular set of information and I do remember it was a Friday afternoon and actually…And like her team went off and worked the weekend and had that back to us by the Sunday evening…you know, like everybody was and still is working seven days a week, you know, late into the evenings and the night’ (HP SU 03).

Team spirit and flexibility

Team members were highly supportive of each other, and the EP team’s greatest assets were flexibility and adaptiveness. The significant time pressure on team members brought challenges and necessitated a new way of working: ‘But the sense was just, you know, we need to get these out as quick as we can, so we have to adapt’ (HP EP 08). SUs appreciated the team’s flexibility: ‘It was almost like trying to see what was feasible, what could be done, what resources they had that could actually deliver what we required, that kind of bit, and there was huge flexibility there’ (HP SU 03).

Sustainability

EPs had concerns about the long-term sustainability of the two services: ‘…so you know there’s a lot of drop everything to work on this instead. And that’s obviously not sustainable in the long term because like I have to go back to my own job’ (HP EP 04).

One possible solution was to involve more people in the teams, but this was not without its drawbacks: ‘Probably, the balance between having a small number of people who can move quickly to having a larger number so that the burden is shared a little more as regards answering questions…to keep it sustainable maybe it needs to be put out a little bit more’ (GP EP 2).

The Future: ‘If we were to do it again’ (GP EP 2)

Participants considered what lessons were learnt that could guide pandemic preparedness and future applications of the services.

Emergency preparedness

One EP reflected the feeling of the majority when commenting that, at the outset of the pandemic, ‘…there was definitely a feeling of, of slight chaos and not knowing…’ (GP EP 7). Participants felt unprepared and had not anticipated how quickly things would change and evolve. They emphasised the importance of reflecting on both of these national projects to inform forward planning for future pandemics and emergencies. The value of advance planning and preparation came through strongly across both projects, from SUs and EPs alike, and there was unanimous agreement among SUs and EPs that rapid evidence synthesis and dissemination is essential to future pandemic preparedness: ‘I think that needs to be like having that access, like rapid access to a team that’s capable of doing these kind of rapid reviews, that should be, like absolutely has to be a part of our pandemic plan and our way forward in dealing with future pandemics’ (HP SU 01).

Collaborations

EPs from both services unanimously agreed that a strong sense of teamwork, positive collaborations, collegiality, support and flexibility were critical to the success of the services: ‘I think the collaboration between the ICGP, the University departments and the primary care research network was really, worked really well and I would hope maybe as an exemplar of how we should continue to collaborate in a meaningful manner as well’ (GP EP 2). Several participants felt that nurturing and operationalising interagency collaborations during normal times would provide a solid foundation for working together expediently during a crisis: ‘…we could build a synergy there in peace time, because then if we have that done in peace time then pandemics would not be this, would not [be] as challenging.’ (HP SU 06). Participants also emphasised the importance of flexible and adaptable protocols and a clear reporting structure.

Discussion

Our study provides insight into how EPs and SUs perceived the provision of rapid evidence products, support and dissemination in the context of the global COVID-19 pandemic. We identified technical aspects of the process that worked and did not work, alongside a number of other factors considered important to the success of two distinct rapid evidence projects.

To meet the urgent need for evidence to support decision making, evidence synthesis groups internationally have used a range of approaches, for example, living reviews23 24 and rapid reviews.12 25–28 As in this study, rapid review teams elsewhere were mobilised from existing resources and expertise (eg, Norway26 and Canada29), while others represented new collaborations (eg, Oxford).28 Similar workloads were reported elsewhere, with turnaround times of up to 4 days in Norway and the USA.26 27 However, evaluation of these approaches from the perspectives of EPs and SUs has been limited. Before COVID-19, decision-makers indicated that they would accept some trade-off in validity in exchange for speed in evidence synthesis; however, they expected the validity of rapid reviews to come close to that of systematic reviews.13 30 While we did not specifically ask decision-makers about their previous experience with rapid reviews, many of the participants had experience of using systematic reviews, particularly those who had previously worked with the HTA team. Having highly skilled staff is critical to organisational readiness to produce rapid evidence products.16 The team involved in the HP project was a pre-existing HTA team with wide experience in conducting systematic reviews and HTAs, which was mobilised quickly to provide support to policy-makers. As in other similar projects, this facilitated the adaptation of existing processes to a rapid format.26 29

Data from SUs interviewed in this study confirm that rapid evidence products were invaluable at the national policy-making level and in patient care, in a real-time situation where urgent responses were required. SUs accepted the validity of the rapid review products largely on the basis of trust and credibility. The credibility of a review producer has been reported to be critical to the acceptability and use of rapid evidence products,30 and our results reaffirm these findings. The HP EPs had established reputations and existing relationships with SUs, which fostered trust and credibility, both crucial to increasing the usability of the rapid reviews. For the GP project, the established reputation of the collaborators, coupled with the knowledge that front-line GPs were involved, increased credibility and acceptability.

Improving networking between EPs and SUs, and the use of online platforms, can facilitate access to knowledge and knowledge translation.2 While the GP project demonstrated both these aspects, front-line GPs as SUs were more removed from the EPs than their counterparts in the HP project, and they noted difficulties with communication and with the usability of the ICGP website. Front-line GPs represent a very different evidence user from a national policy and guideline developer. Existing guidelines for health service decision-making in public health emergencies often focus on logistical considerations,31 or on secondary care settings and specific populations, such as people with cancer or diabetes.32 33 Our findings demonstrate that a completely new collaboration was able to mobilise and provide support to primary care in a relatively short period of time; however, this involved ‘a huge amount of goodwill’ (GP EP 2). EPs largely performed this work in addition to their other clinical and academic roles. Similar projects were established in the UK.28 Projects that rely on goodwill and voluntary contributions will understandably have limited sustainability beyond the initial stages of an emergency. There is, therefore, a strong case for investment in primary health emergency preparedness strategies to develop capacities and capabilities for evidence-informed decision making ahead of the next emergency.2 9

The provision of rapid evidence products during COVID-19 has been challenging.12 First, the workload was enormous, as evidenced by the volume of reports produced during the period of this evaluation and by other similar teams.26 28 29 There has been an explosion of COVID-19 literature; an analysis of Scopus on 1 August 2021 identified 210 183 COVID-19-related publications involving 720 801 authors.34 This unprecedented volume of publication creates a huge workload for evidence synthesis steps such as searching and screening, resulting in long working hours that are unsustainable, particularly in voluntary roles. Second, COVID-19 research from the first wave of the pandemic is arguably of lower quality than contemporaneous non-COVID-19 research.35–37 Evidence synthesis depends on the quality of primary research, and primary research that is unavailable, biased or selectively reported raises important concerns.14 This aspect of the rapid evidence process represented a significant concern for the EPs in this study. However, these concerns were offset somewhat by clear protocols and transparent reporting of the quality and quantity of the evidence. Indeed, SUs highlighted this transparency as an important factor in fostering credibility.

As with other teams,29 the experiences outlined in this report demonstrate that highly skilled staff are available and can be mobilised quickly to make a substantial contribution to decision making. However, the course of this pandemic and the extent of resourcing required also demonstrate that it is neither feasible nor appropriate to rely on the goodwill of individuals and organisations alone when planning for future health emergencies. Investment in infrastructure to enable and support rapid, widespread and long-term redeployment of highly skilled staff is essential. These data suggest that one mechanism for future pandemic preparedness is to repurpose, and thereby grow and sustain, the processes and structures developed during COVID-19 (eg, repurposing the GP project to provide a Q&A service for chronic disease management). This approach provides a framework for financial investment that enables the ongoing allocation of personnel, reducing the need for full redeployment of staff in response to a future health emergency.

Our study had several limitations. First, the pool of EPs was limited by the nature of both projects, and several authors were study participants (see author contribution statement). Strict measures were followed to restrict their input, and they were not involved in any way in data acquisition or analysis. Importantly, the remaining EPs and all SUs were independent of either project. Second, there was a limited pool of SUs for whom the HP evidence syntheses were primarily intended, and we did not interview all those involved. Third, although patients are increasingly involved in evidence syntheses,38 no patient representatives were part of these projects. Lastly, the perspectives of actors in other sectors are crucial to consider in pandemic or national/global emergency preparedness, and future studies should include a broader range of respondents.

Conclusions

Evidence-based decision making and practice are central to population health and primary care, particularly during a pandemic. Our study provides insight into how EPs and SUs perceived the provision of rapid evidence products, support and dissemination in the context of the global COVID-19 pandemic. Rapid evidence products were considered invaluable to decision making. Key strengths enhancing evidence utility included the credibility of the EPs, a close working relationship between EPs and end users and having a highly skilled and adaptable team. Key challenges included workload demands, tight turnaround times, poor-quality evidence and communication issues. Investment is essential to ensure preparedness for future public health emergencies, building on learning gained from the COVID-19 pandemic experience.

Acknowledgments

The authors gratefully acknowledge the time provided by those who participated in the interviews.

Footnotes

BC and LH contributed equally.

Contributors: BC: conception and design, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content. LH: conception and design, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, administrative, technical, or material support. CK: acquisition of data, analysis and interpretation of data, critical revision of the manuscript for important intellectual content, administrative, technical or material support. MM: acquisition of data, analysis and interpretation of data, critical revision of the manuscript for important intellectual content, administrative, technical or material support. PB: acquisition of data, analysis and interpretation of data, critical revision of the manuscript for important intellectual content, administrative, technical or material support. MK: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical or material support. SS: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical, or material support. MR: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical or material support. CC: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical or material support. MO’N: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical, or material support. EW: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical or material support. AWM: conception and design, critical revision of the manuscript for important intellectual content, administrative, technical or material support. 
MEK: conception and design, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, administrative, technical, or material support, supervision.

*Due to their key roles as Evidence Providers, five authors were participants in the qualitative strand of this study, as the pool of potential participants was limited. Apart from being interviewed, these authors were not involved in acquisition, analysis or interpretation of data. They had no access to the audio, interview transcripts or the quantitative data files. They did not participate in writing the original draft of the paper. Their roles were strictly reserved to study conception, study design, critical revision of the manuscript for important intellectual content, and administrative, technical or material support. MEK acts as guarantor.

Funding: BC is funded by a Health Research Board (HRB) Emerging Investigator Award (EIA-2019-09). Funding for transcription costs was provided by the Irish College of General Practitioners (ICGP), the Academic Departments of General Practice in Ireland (AUDGPI) and the HRB Primary Care Clinical Trials Network in Ireland (PCCTNI).

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data are available on reasonable request. The data sets generated and/or analysed during the current study are not publicly available due to privacy/confidentiality concerns. Reasonable requests for access can be made to the corresponding author who will consider any such requests in collaboration with the NREC COVID-19.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study involves human participants and was approved by the National Research Ethics Committee for COVID-19-related Research (NREC COVID-19), Ireland. Participants gave informed consent to participate in the study before taking part.

References

- 1. Harris M, Bhatti Y, Buckley J, et al. Fast and frugal innovations in response to the COVID-19 pandemic. Nat Med 2020;26:814–7. 10.1038/s41591-020-0889-1

- 2. European Centre for Disease Prevention and Control. The use of evidence in decision-making during public health emergencies. Stockholm: ECDC, 2019.

- 3. Sackett DL, Rosenberg WM, Gray JA, et al. Evidence based medicine: what it is and what it isn't. BMJ 1996;312:71–2. 10.1136/bmj.312.7023.71

- 4. Gough D, Davies P, Jamtvedt G, et al. Evidence synthesis International (ESI): position statement. Syst Rev 2020;9:155. 10.1186/s13643-020-01415-5

- 5. Tricco AC, Cardoso R, Thomas SM, et al. Barriers and facilitators to uptake of systematic reviews by policy makers and health care managers: a scoping review. Implement Sci 2015;11:4. 10.1186/s13012-016-0370-1

- 6. Bowen S, Botting I, Graham ID, et al. Experience of Health Leadership in Partnering With University-Based Researchers in Canada - A Call to "Re-imagine" Research. Int J Health Policy Manag 2019;8:684–99. 10.15171/ijhpm.2019.66

- 7. Sturmberg JP, Tsasis P, Hoemeke L. COVID-19 – an opportunity to redesign health policy thinking. Int J Health Policy Manag 2020. 10.34172/ijhpm.2020.132

- 8. Djulbegovic B, Guyatt G. Evidence-based medicine in times of crisis. J Clin Epidemiol 2020;126:164–6. 10.1016/j.jclinepi.2020.07.002

- 9. Salajan A, Tsolova S, Ciotti M, et al. To what extent does evidence support decision making during infectious disease outbreaks? A scoping literature review. Evid Policy 2020;16:453–75. 10.1332/174426420X15808913064302

- 10. Borah R, Brown AW, Capers PL, et al. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 2017;7:e012545. 10.1136/bmjopen-2016-012545

- 11. Langlois EV, Straus SE, Antony J, et al. Using rapid reviews to strengthen health policy and systems and progress towards universal health coverage. BMJ Glob Health 2019;4:e001178. 10.1136/bmjgh-2018-001178

- 12. Tricco AC, Garritty CM, Boulos L, et al. Rapid review methods more challenging during COVID-19: commentary with a focus on 8 knowledge synthesis steps. J Clin Epidemiol 2020;126:177–83. 10.1016/j.jclinepi.2020.06.029

- 13. Wagner G, Nussbaumer-Streit B, Greimel J, et al. Trading certainty for speed - how much uncertainty are decisionmakers and guideline developers willing to accept when using rapid reviews: an international survey. BMC Med Res Methodol 2017;17:121. 10.1186/s12874-017-0406-5

- 14. Boutron I, Créquit P, Williams H, et al. Future of evidence ecosystem series: 1. Introduction evidence synthesis ecosystem needs dramatic change. J Clin Epidemiol 2020;123:135–42. 10.1016/j.jclinepi.2020.01.024

- 15. Akl EA, Haddaway NR, Rada G, et al. Future of evidence ecosystem series: evidence synthesis 2.0: when systematic, scoping, rapid, living, and overviews of reviews come together. J Clin Epidemiol 2020;123:162–5. 10.1016/j.jclinepi.2020.01.025

- 16. Hartling L, Guise J, Kato E. EPC Methods: An Exploration of Methods and Context for the Production of Rapid Reviews. Research White Paper. (Prepared by the Scientific Resource Center under Contract No 290-2012-00004-C.) AHRQ Publication No 15-EHC008-EF. Rockville, MD: Agency for Healthcare Research and Quality, 2015. Available: www.effectivehealthcare.ahrq.gov/reports/final.cfm

- 17. Bradbury-Jones C, Breckenridge J, Clark MT, et al. The state of qualitative research in health and social science literature: a focused mapping review and synthesis. Int J Soc Res Methodol 2017;20:627–45. 10.1080/13645579.2016.1270583

- 18. O'Brien BC, Harris IB, Beckman TJ, et al. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med 2014;89:1245–51. 10.1097/ACM.0000000000000388

- 19. Ward V, House A, Hamer S. Developing a framework for transferring knowledge into action: a thematic analysis of the literature. J Health Serv Res Policy 2009;14:156–64. 10.1258/jhsrp.2009.008120

- 20. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015;350:h1258. 10.1136/bmj.h1258

- 21. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol 2006;3:77–101. 10.1191/1478088706qp063oa

- 22. De Poy E, Gitlin L. Introduction to research: understanding and applying multiple strategies. 5th ed. St. Louis: Elsevier, 2015.

- 23. Agarwal A, Rochwerg B, Lamontagne F, et al. A living WHO guideline on drugs for covid-19. BMJ 2020;370:m3379. 10.1136/bmj.m3379

- 24. Boutron I, Chaimani A, Meerpohl JJ, et al. The COVID-NMA project: building an evidence ecosystem for the COVID-19 pandemic. Ann Intern Med 2020;173:1015–7. 10.7326/M20-5261

- 25. EUnetHTA. EUnetHTA response to COVID-19, 2021. Available: https://www.eunethta.eu/services/covid-19/ [Accessed 13 Oct 2021].

- 26. Fretheim A, Brurberg KG, Forland F. Rapid reviews for rapid decision-making during the coronavirus disease (COVID-19) pandemic, Norway, 2020. Euro Surveill 2020;25. 10.2807/1560-7917.ES.2020.25.19.2000687

- 27. Murad MH, Nayfeh T, Urtecho Suarez M, et al. A framework for evidence synthesis programs to respond to a pandemic. Mayo Clin Proc 2020;95:1426–9. 10.1016/j.mayocp.2020.05.009

- 28. The Centre for Evidence-Based Medicine. Oxford COVID-19 evidence service, 2021. Available: https://www.cebm.net/oxford-covid-19-evidence-service/

- 29. Neil-Sztramko SE, Belita E, Traynor RL, et al. Methods to support evidence-informed decision-making in the midst of COVID-19: creation and evolution of a rapid review service from the National Collaborating Centre for Methods and Tools. BMC Med Res Methodol 2021;21:231. 10.1186/s12874-021-01436-1

- 30. Hartling L, Guise J-M, Hempel S, et al. Fit for purpose: perspectives on rapid reviews from end-user interviews. Syst Rev 2017;6:32. 10.1186/s13643-017-0425-7

- 31. Lee A, Chuh AAT. Facing the threat of influenza pandemic - roles of and implications to general practitioners. BMC Public Health 2010;10:661. 10.1186/1471-2458-10-661

- 32. Al-Quteimat OM, Amer AM. The impact of the COVID-19 pandemic on cancer patients. Am J Clin Oncol 2020;43:452–5. 10.1097/COC.0000000000000712

- 33. Allweiss P. Diabetes and disasters: recent studies and resources for preparedness. Curr Diab Rep 2019;19:131. 10.1007/s11892-019-1258-7

- 34. Ioannidis JPA, Salholz-Hillel M, Boyack KW, et al. The rapid, massive growth of COVID-19 authors in the scientific literature. R Soc Open Sci 2021;8:210389. 10.1098/rsos.210389

- 35. Quinn TJ, Burton JK, Carter B, et al. Following the science? Comparison of methodological and reporting quality of covid-19 and other research from the first wave of the pandemic. BMC Med 2021;19:46. 10.1186/s12916-021-01920-x

- 36. Dobler CC. Poor quality research and clinical practice during COVID-19. Breathe 2020;16:200112. 10.1183/20734735.0112-2020

- 37. Rosenberger KJ, Xu C, Lin L. Methodological assessment of systematic reviews and meta-analyses on COVID-19: a meta-epidemiological study. J Eval Clin Pract 2021;27:1123–33. 10.1111/jep.13578

- 38. Ellis U, Kitchin V, Vis-Dunbar M. Identification and reporting of patient and public partner authorship on knowledge syntheses: rapid review. J Particip Med 2021;13:e27141. 10.2196/27141

- 39. Health Information and Quality Authority (HIQA). Protocol for evidence synthesis support - COVID-19. Dublin: HIQA, 2020. Available: https://www.hiqa.ie/sites/default/files/2020-04/Protocol-for-evidence-synthesis-support_1-4-COVID-19.pdf

- 40. Garritty C, Gartlehner G, Nussbaumer-Streit B, et al. Cochrane rapid reviews methods group offers evidence-informed guidance to conduct rapid reviews. J Clin Epidemiol 2021;130:13–22. 10.1016/j.jclinepi.2020.10.007


Supplementary Materials

bmjebm-2021-111905supp001.pdf (170.6KB, pdf)
