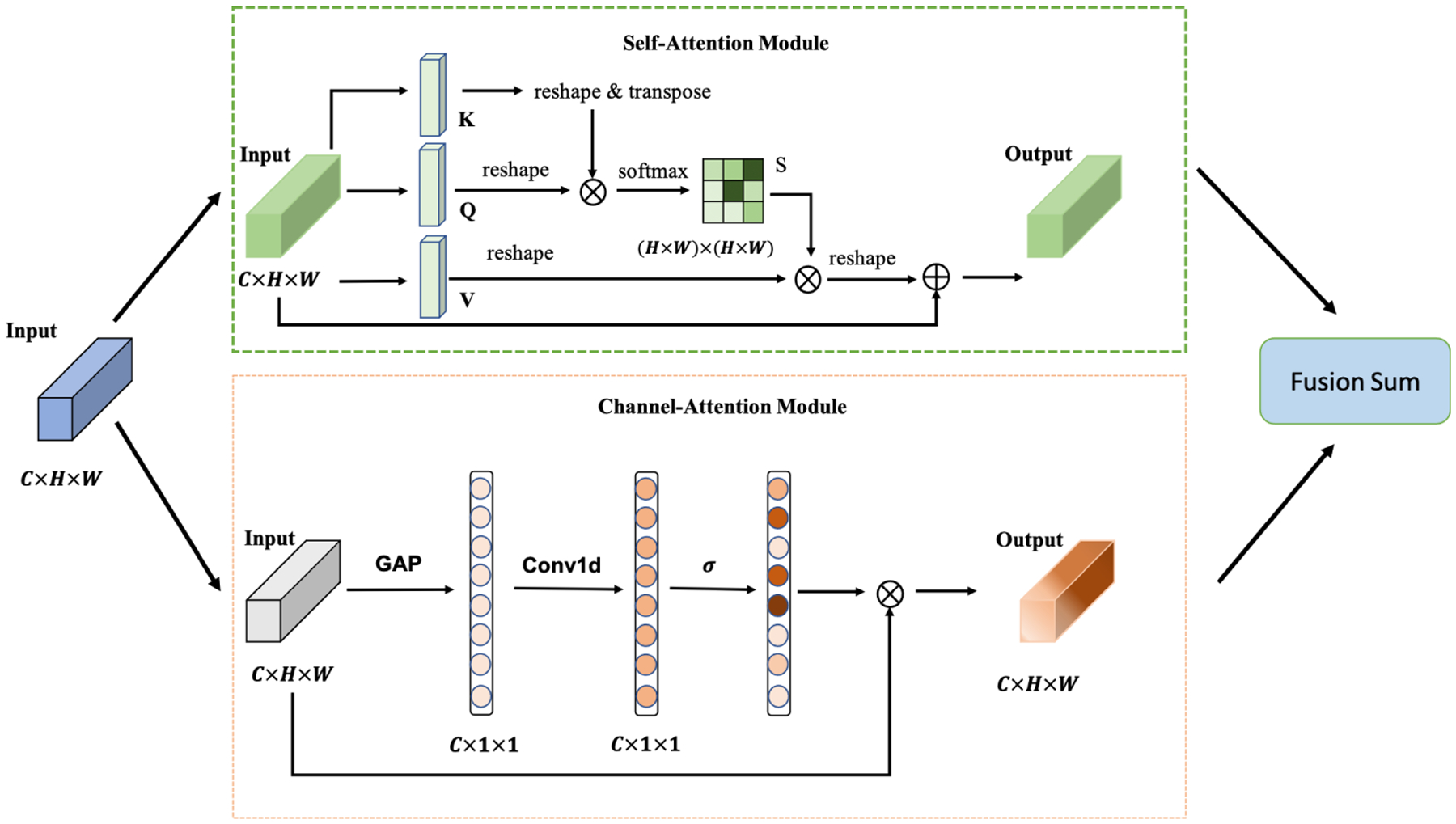

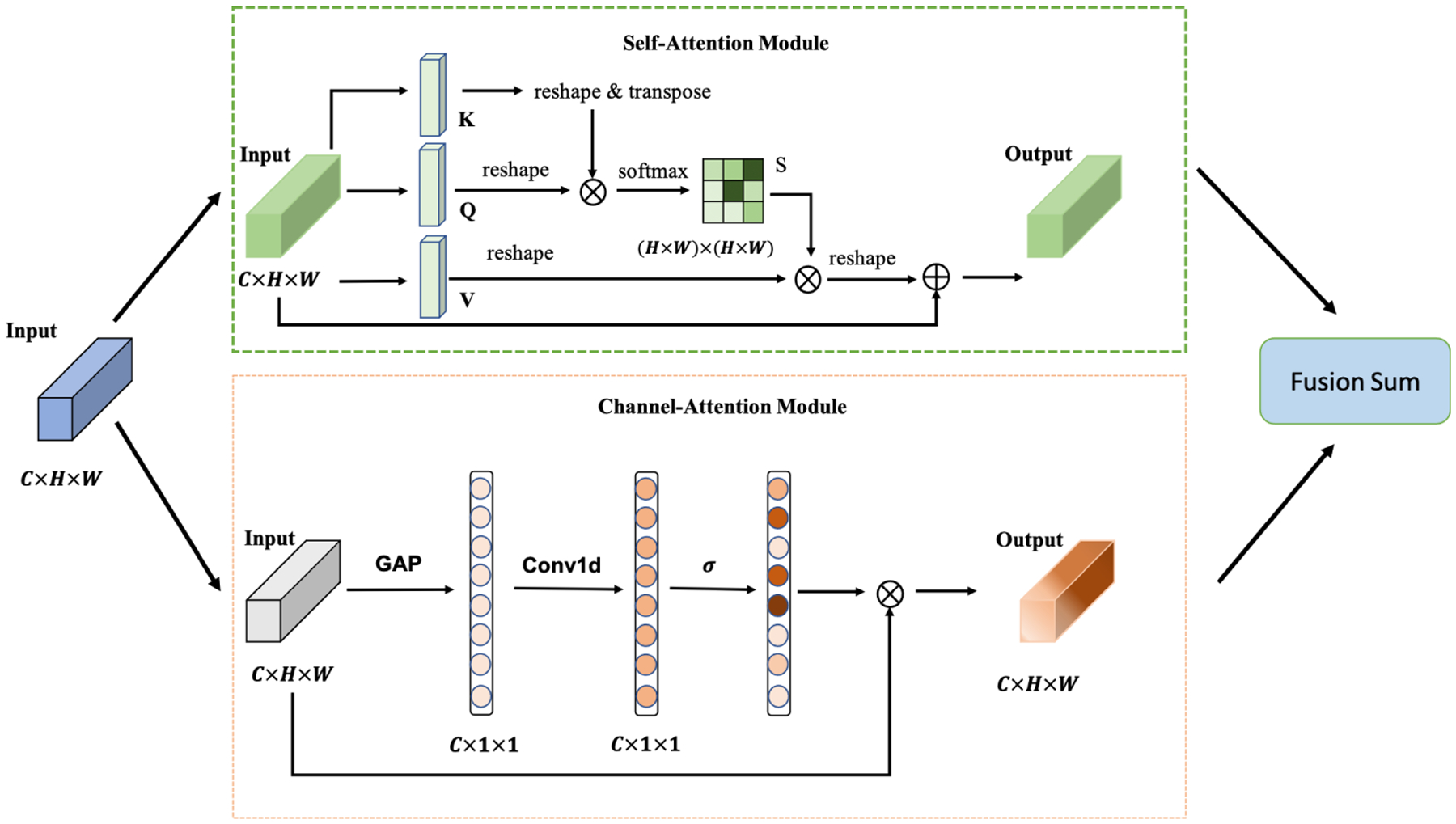

Figure 3. The structure of the attention module. The module takes the features extracted by the CNN backbone as input, and its output is forwarded to the classifier.
