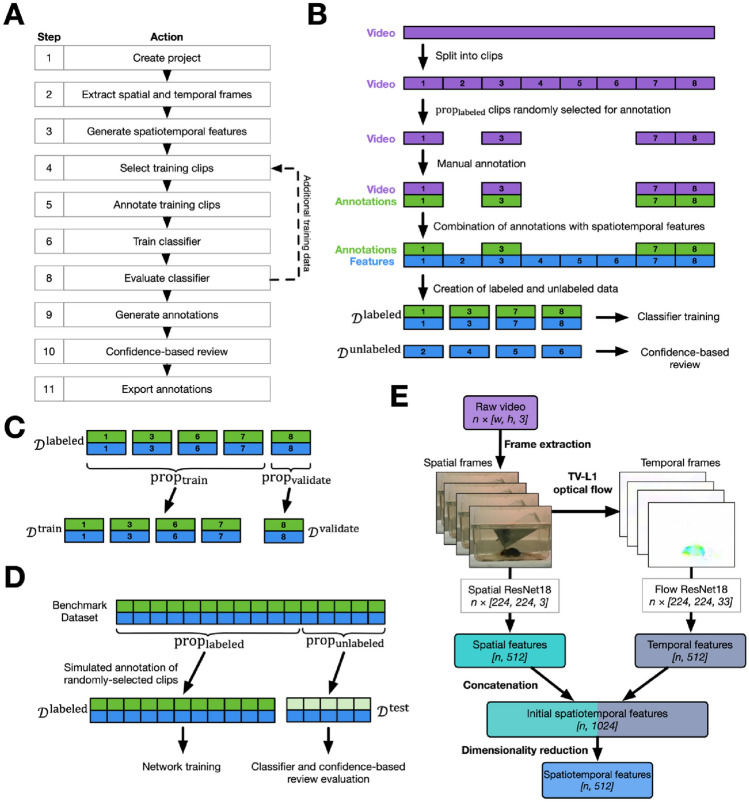

Figure 1.

Toolbox workflow and data selection process. (A) Workflow for the DeepAction toolbox. Arrows indicate the flow of project actions. The dashed arrow denotes that, after the classifier is trained, additional training data can be annotated and used to retrain the classifier. (B) Overview of the clip selection process. Long videos are divided into clips of a user-specified length, and a user-specified proportion of these clips is randomly selected for annotation. The selected clips are annotated, and these annotations are combined with the corresponding features to train the classifier. The trained classifier then generates predictions and confidence scores for the non-selected clips, which the user can review and correct as necessary. (C) Labeled data are further divided into training and validation data. (D) Process for simulating clip selection using our benchmark datasets: we simulate selecting a proportion of the data for labeling and evaluate the resulting classifier on the unselected data. (E) Process for generating spatiotemporal features from video frames. Raw video frames are extracted from the video file (“frame extraction”). The motion between frames is computed using TV-L1 optical flow and represented visually as temporal frames. The spatial and temporal frames are input into their corresponding pretrained CNNs (“spatial ResNet18” and “flow ResNet18,” respectively), from which spatial and temporal features are extracted. The spatial and temporal features are then concatenated, and their dimensionality is reduced to generate the final spatiotemporal features used to train the classifier. Dimensionality is shown in italicized brackets.
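The clip selection in panels (B) and (C) can be sketched as follows. This is a minimal, hypothetical illustration, not the toolbox's own code. The function names (`divide_into_clips`, `select_clips`, `train_val_split`), the frame counts, the proportions, and the choice to keep a shorter final clip are all assumptions made for the example.

```python
import random
from typing import List, Optional, Tuple

# Hypothetical representation: a clip is (start_frame, end_frame), end exclusive.
Clip = Tuple[int, int]


def divide_into_clips(n_frames: int, clip_length: int) -> List[Clip]:
    """Split a video into consecutive clips of clip_length frames.

    Assumption: the final clip is kept even if it is shorter than clip_length.
    """
    if clip_length <= 0:
        raise ValueError("clip_length must be positive")
    return [(start, min(start + clip_length, n_frames))
            for start in range(0, n_frames, clip_length)]


def select_clips(clips: List[Clip], proportion: float,
                 seed: Optional[int] = None) -> Tuple[List[Clip], List[Clip]]:
    """Randomly select a proportion of clips for annotation.

    Returns (selected, unselected). The unselected clips are the ones the
    trained classifier would later predict on.
    """
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must be in [0, 1]")
    rng = random.Random(seed)
    n_selected = round(proportion * len(clips))
    selected_idx = set(rng.sample(range(len(clips)), n_selected))
    selected = [c for i, c in enumerate(clips) if i in selected_idx]
    unselected = [c for i, c in enumerate(clips) if i not in selected_idx]
    return selected, unselected


def train_val_split(labeled: List[Clip], train_fraction: float,
                    seed: Optional[int] = None) -> Tuple[List[Clip], List[Clip]]:
    """Shuffle the labeled clips and split them into training and validation sets."""
    rng = random.Random(seed)
    shuffled = list(labeled)
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    # Illustrative numbers only: a 9000-frame video cut into 300-frame clips.
    clips = divide_into_clips(9000, 300)
    selected, unselected = select_clips(clips, proportion=0.2, seed=0)
    train, val = train_val_split(selected, train_fraction=0.8, seed=0)
    print(len(clips), len(selected), len(unselected), len(train), len(val))
```

With these illustrative settings, the 30 clips split into 6 selected and 24 unselected clips, and the 6 selected clips split into 5 for training and 1 for validation. Passing a fixed `seed` makes the random selection reproducible, which is convenient when repeating the benchmark simulation in panel (D).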