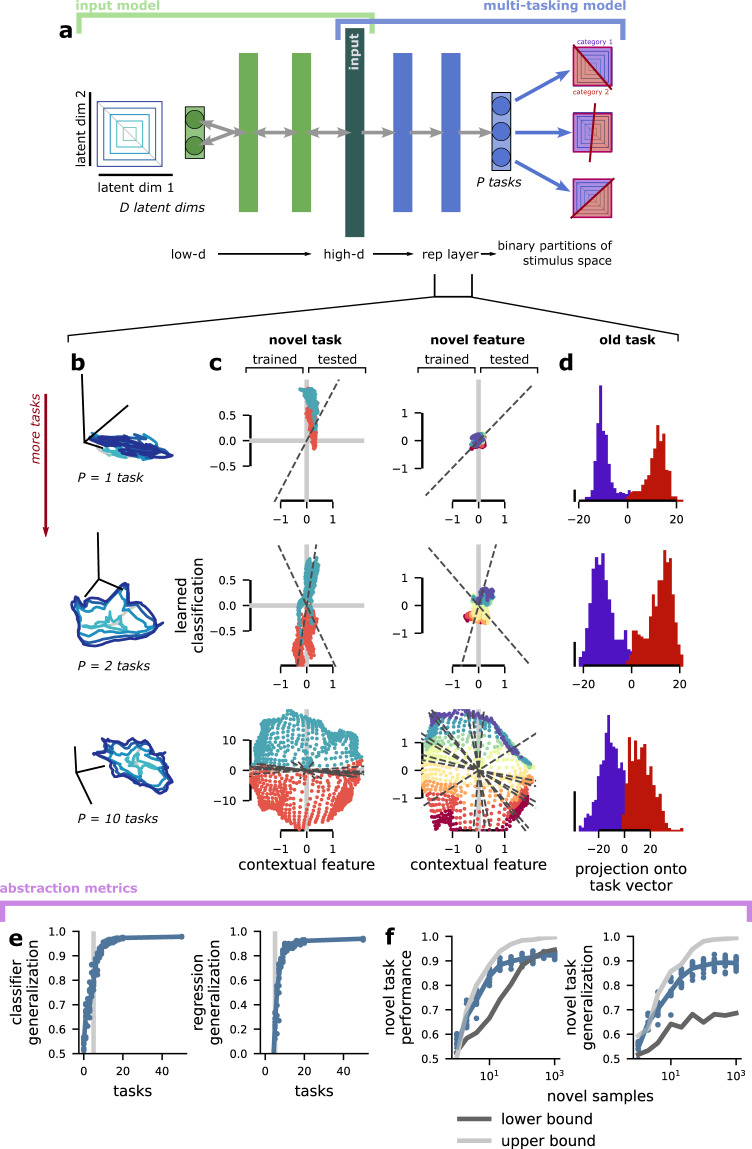

Fig. 3. The emergence of abstraction from classification task learning.

a Schematic of the multi-tasking model. It receives a high-dimensional input representation of D latent variables (here, from the standard input, as shown in Fig. 1e, left) and learns to perform P binary classifications on the latent variables. We study the representations that this induces in the layer prior to the output: the representation layer. b Visualization of the concentric square structure as transformed in the representation layer of a multi-tasking model trained to perform one (top), two (middle), and ten (bottom) tasks. The visualization procedure is the same as in Fig. 2c. c The same as b, but for visualizations based on classifier (left) and regression (right) generalization. The classifier (regression) model is learned on the left side of the plot and generalized to the right side of the plot. The output of the model is given on the y axis and each point is colored according to the true latent variable category (i.e., sign) or value. The visualization procedure is the same as in Fig. 2e. The visualization shows that generalization performance increases with the number of tasks P (increasing from top to bottom). d The activation along the output dimension for a single task learned by the multi-tasking model for the two different output categories (purple and red). The distribution of activity is bimodal for multi-tasking models trained with one or two tasks, but becomes less so as the number of tasks increases. e The classifier (left) and regression (right) metrics applied to model representations with different numbers of tasks. f The standard (left) and generalization (right) performance of a classifier trained to perform a novel task with limited samples using the representations from a multi-tasking model trained with P = 10 tasks as input. The lower (dark gray) and upper (light gray) bounds show the standard or generalization performance of a classifier trained on the input representations (lower) or directly on the latent variables (upper). Note that the multi-tasking model performance is close to that of training directly on the latent variables in all cases.
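
The setup in panel a and the classifier generalization test in panel c can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used for the figure: the random `tanh` mixing stands in for the standard input of Fig. 1e, each task is assumed to be the sign of a random linear function of the latent variables, and all function names, layer sizes, and training settings are hypothetical.

```python
import numpy as np

def make_data(n_samples, n_latents, n_inputs, rng):
    """Sample latents z and an entangled input x (random tanh mixing; an assumption)."""
    z = rng.standard_normal((n_samples, n_latents))
    mix = rng.standard_normal((n_latents, n_inputs))  # hypothetical stand-in for Fig. 1e
    return z, np.tanh(z @ mix)

def make_task_labels(z, n_tasks, rng):
    """P binary tasks, each the sign of a random linear function of z (an assumption)."""
    w = rng.standard_normal((z.shape[1], n_tasks))
    return (z @ w > 0).astype(float)

def init_params(n_inputs, n_rep, n_tasks, rng):
    return {
        "W1": rng.standard_normal((n_inputs, n_rep)) / np.sqrt(n_inputs),
        "b1": np.zeros(n_rep),
        "W2": rng.standard_normal((n_rep, n_tasks)) / np.sqrt(n_rep),
        "b2": np.zeros(n_tasks),
    }

def forward(params, x):
    rep = np.tanh(x @ params["W1"] + params["b1"])  # representation layer
    logits = rep @ params["W2"] + params["b2"]      # one output unit per task
    return rep, 1.0 / (1.0 + np.exp(-logits))

def bce(out, y, eps=1e-9):
    """Binary cross-entropy, summed over tasks and averaged over samples."""
    return -np.mean(np.sum(y * np.log(out + eps) + (1 - y) * np.log(1 - out + eps), axis=1))

def train(params, x, y, lr=0.05, n_steps=1000):
    """Full-batch gradient descent on the summed cross-entropy."""
    n = x.shape[0]
    for _ in range(n_steps):
        rep, out = forward(params, x)
        d_logits = (out - y) / n                               # dL/dlogits
        d_pre = (d_logits @ params["W2"].T) * (1 - rep ** 2)   # backprop through tanh
        params["W2"] -= lr * rep.T @ d_logits
        params["b2"] -= lr * d_logits.sum(axis=0)
        params["W1"] -= lr * x.T @ d_pre
        params["b1"] -= lr * d_pre.sum(axis=0)
    return params

def half_space_generalization(rep, z):
    """Fit a linear decoder for sign(z[:, 0]) where z[:, 1] < 0; test where z[:, 1] >= 0."""
    feats = np.hstack([rep, np.ones((rep.shape[0], 1))])
    target = np.sign(z[:, 0])
    train_mask = z[:, 1] < 0
    coef, *_ = np.linalg.lstsq(feats[train_mask], target[train_mask], rcond=None)
    pred = np.sign(feats[~train_mask] @ coef)
    return float(np.mean(pred == target[~train_mask]))

def demo(n_tasks=10, seed=0):
    rng = np.random.default_rng(seed)
    z, x = make_data(2000, 2, 50, rng)            # illustrative sizes only
    y = make_task_labels(z, n_tasks, rng)
    params = init_params(50, 20, n_tasks, rng)
    loss_before = bce(forward(params, x)[1], y)
    params = train(params, x, y)
    rep, out = forward(params, x)
    return {
        "rep": rep,
        "loss_before": loss_before,
        "loss_after": bce(out, y),
        "train_accuracy": float(np.mean((out > 0.5) == (y > 0.5))),
        "generalization": half_space_generalization(rep, z),
    }

if __name__ == "__main__":
    for p in (1, 2, 10):
        print(p, round(demo(n_tasks=p)["generalization"], 3))
```

Running the script prints the half-space generalization accuracy for P = 1, 2, and 10 tasks. Whether this toy version reproduces the trend in panels c and e depends on the assumed input model and hyperparameters, so it should be read as a scaffold for experimentation rather than a replication.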