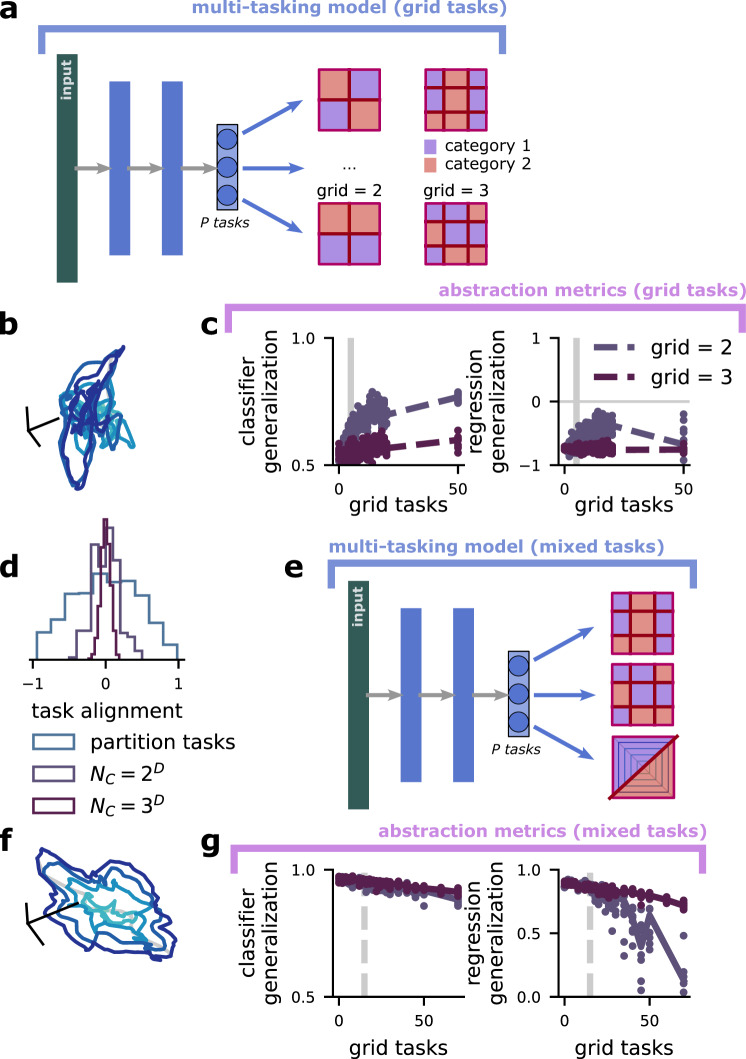

Fig. 4. Abstract representations emerge for heterogeneous tasks, and in spite of high-dimensional grid tasks.

a Schematic of the multi-tasking model with grid tasks. Each grid task is defined by the grid size, grid (the number of regions along each dimension; top: grid = 2; bottom: grid = 3), and the number of latent variables, D. There are grid^D total grid chambers, each of which is randomly assigned to category 1 (red) or category 2 (blue). Some grid tasks are aligned with the latent variables by chance (as in top left), but this fraction is small for even moderate D. b Visualization of the representation layer of a multi-tasking model trained only on grid tasks, with P = 15. c Quantification of the abstraction developed by a grid task multi-tasking model. (left) Classifier generalization performance. (right) Regression generalization performance. d The alignment (cosine similarity) between randomly chosen tasks for latent variable-aligned classification tasks, n = 2 and D = 5 grid tasks, and n = 3 and D = 5 grid tasks. e Schematic of the multi-tasking model with a mixture of grid and linear tasks. f Same as b, but for a multi-tasking model trained with a mixture of P = 15 latent variable-aligned classification tasks and a variable number of grid tasks (x axis). g Same as c, but for a multi-tasking model trained with P = 15 latent variable-aligned classification tasks and a variable number of grid tasks. While the multi-tasking model trained only with grid tasks does not develop abstract representations, the multi-tasking model trained with a combination of grid and linear tasks does, even when the grid tasks outnumber the linear tasks.
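
The grid-task construction described in panel a can be illustrated with a minimal sketch. It is not the authors' implementation: the function names are hypothetical, and it assumes latent variables drawn uniformly from [0, 1) and equal-width regions along each dimension, neither of which is specified in the caption.

```python
import numpy as np

def make_grid_task(grid, D, rng):
    """Randomly assign each of the grid**D chambers to category 0 or 1."""
    return rng.integers(0, 2, size=(grid,) * D)

def grid_task_labels(latents, chambers, grid):
    """Return the category of the chamber that each latent vector falls in.

    Assumes (for illustration only) that latents lie in [0, 1) and that
    each dimension is split into `grid` equal-width regions.
    """
    idx = np.clip(np.floor(latents * grid).astype(int), 0, grid - 1)
    # One index array per latent dimension selects a chamber per sample.
    return chambers[tuple(idx.T)]

rng = np.random.default_rng(0)
grid, D = 3, 5
chambers = make_grid_task(grid, D, rng)   # grid**D chambers in total
latents = rng.uniform(size=(1000, D))     # hypothetical latent samples
labels = grid_task_labels(latents, chambers, grid)
```

Here `chambers.size` equals grid^D, so the number of chambers (and hence the number of possible random labelings) grows rapidly with D.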