Abstract

Objective.

Fast and accurate auto-segmentation is essential for magnetic resonance-guided adaptive radiation therapy (MRgART). Deep learning auto-segmentation (DLAS) is not always clinically acceptable, particularly for complex abdominal organs. We previously reported an automatic contour refinement (ACR) solution that uses an active contour model (ACM) to partially correct the DLAS contours. This study aims to develop a DL-based ACR model that works in conjunction with ACM-ACR to further improve contour accuracy.

Approach.

The DL-ACR model was trained and tested using bowel contours created by an in-house DLAS system from 160 MR sets (76 from MR-simulation and 84 from MR-Linac). The contours were classified into acceptable, minor-error and major-error groups using two approaches of contour quality classification (CQC), based on the AAPM TG-132 recommendation and an in-house classification model, respectively. For the major-error group, DL-ACR was applied subsequently after ACM-ACR to further refine the contours. For the minor-error group, contours were directly corrected by DL-ACR without applying an initial ACM-ACR. The ACR workflow was performed separately for the two CQC methods and was evaluated using contours from 25 image sets as independent testing data.

Main results.

The best ACR performance was observed in the MR-simulation testing set using CQC by TG-132: (1) for the major-error group, 44% (177/401) were improved to minor-error group and 5% (22/401) became acceptable by applying ACM-ACR; among these 177 contours that shifted from major-error to minor-error with ACM-ACR, DL-ACR further refined 49% (87/177) to acceptable; and overall, 36% (145/401) were improved to minor-error contours, and 30% (119/401) became acceptable after sequentially applying ACM-ACR and DL-ACR; (2) for the minor-error group, 43% (320/750) were improved to acceptable contours using DL-ACR.

Significance.

The obtained ACR workflow substantially improves the accuracy of DLAS bowel contours, minimizing the manual editing time and accelerating the segmentation process of MRgART.

Keywords: automatic contour refinement, deep learning, MR-guided radiotherapy

1. Introduction

The MR-Linac (MRL), which integrates a magnetic resonance imaging (MRI) scanner and a linear accelerator, has recently been introduced in radiation therapy (RT) clinics.(Lagendijk, Raaymakers and Van Vulpen 2014, Mutic and Dempsey 2014) MR imaging on the MRL provides superior soft-tissue contrast compared to CT, in addition to functional information and real-time imaging, enabling MR-guided adaptive radiotherapy (MRgART).(Winkel et al. 2019) In particular, online adaptive replanning (OLAR) can substantially improve the accuracy and effectiveness of RT by accounting for the patient's inter-fraction changes at each treatment fraction. However, one of the biggest challenges in current MRI-guided OLAR is the impractically long time needed to re-contour the patient’s anatomy of the day, which may exceed 30 minutes with manual segmentation in a tumor site with complex anatomy such as the abdomen.(Paulson et al. 2020, Lamb et al. 2017, Güngör et al. 2021)

In recent years, deep learning (DL)-based approaches, in particular convolutional neural networks (CNNs), have been extensively investigated to quickly and automatically generate organ contours on different imaging modalities. Although previous studies reported promising DL auto-segmentation (DLAS) results on MRI, the auto-segmented contours for very complex structures can still fail to satisfy clinical acceptance. Bowels, among the most difficult organs to segment in the abdomen, have not been well examined in such studies due to their large variations in shape and size. Two published articles(Fu et al. 2018, Chen et al. 2020) that developed DLAS on abdominal MRI included bowels in their studies. Fu et al.(Fu et al. 2018) segmented bowels on images acquired on an MRL and achieved an average Dice similarity coefficient (DSC) of 0.866 and Hausdorff distance (HD) of 5.90 mm. Chen et al.(Chen et al. 2020) demonstrated DLAS on chemical shift MR images and obtained a DSC of 0.873 and a mean distance to agreement (MDA) of 1.84 mm. These results indicate that a sizable portion of the DLAS contours is still not accurate enough for clinical use. Consequently, further editing is required for the inaccurate DLAS contours before they can be used clinically. Very recently, Zhang et al. (Zhang et al. 2022b) reported a practical DL-based method to classify a contour (including DLAS) into the acceptable, minor-error or major-error group according to the subsequent editing effort required to make the contour clinically acceptable for RT.

To minimize the subsequent manual editing effort after DLAS, solutions for automatic contour refinement (ACR) are highly desirable. Very recently, we reported an ACR solution that uses an active contour model (ACM) method based on the DLAS probability map and demonstrated that the ACM method can correct some inaccurate DLAS contours of bowels, especially those with major errors.(Ding et al. 2022) However, the ACM model had limited success in correcting suboptimal contours (contours with minor errors) due to the intrinsic limitation of the DLAS probability map. Therefore, more robust ACR methods are needed to accelerate the segmentation process of MRgART.

In this study, we aim to develop a DL-based ACR method to work in conjunction with the previously developed ACM method to improve the overall performance of ACR for MRgART. MRI datasets acquired in our routine clinic were used to develop and test the DL and the combined ACR solutions and their performance was evaluated on DLAS contours of bowels based on practical criteria. The studied ACR approaches will be a key component of our ongoing efforts towards developing a machine learning accelerated online adaptive replanning (MOLAR) workflow to substantially speed up the online MRgART process. This MOLAR workflow includes (i) automatic determination for the necessity of adaptive replanning (Parchur et al. 2022, Nasief et al. 2022, Lim et al. 2020), (ii) automatic creation of synthetic CT from daily MRI (Ahunbay et al. 2022, Ahunbay et al. 2019), (iii) a fast four-step segmentation process on daily MRI (e.g., DLAS (Zhang et al. 2020, Amjad et al. 2022, Liang et al. 2020), automatic contour quality assurance ACQA (Zhang et al. 2019), ACR(Ding et al. 2022), and semi-automatic and manual contour editing (Zhang et al. 2022c)).

2. Methods

This study was approved by the Institutional Review Board of Medical College of Wisconsin.

2.1. Datasets

Two sets of abdominal MRI data acquired during routine RT from patients with abdominal cancers were used in this study: 1) 76 scans from MR-simulation (MR-SIM) and 2) 84 scans from MRgART with an MRL. The MR-SIM images were acquired on a 3T MRI scanner (Verio, Siemens, Germany) using an axial T2-weighted HASTE (half-Fourier single-shot turbo spin-echo) sequence(Semelka et al. 1996), and the MRL images were motion-averaged MRI derived from 4D-MRI acquired on a 1.5T MRL (Unity, Elekta, Sweden) using either turbo field-echo (TFE) or balanced turbo field-echo (BTFE) sequences(Paulson et al. 2020). Details of the two datasets, including the acquisition parameters, are summarized in Table 1(a). All MRIs were standardized in MIM software (MIM Software Inc, USA) using an in-house workflow(Zhang et al. 2020) for magnetic field inhomogeneity correction and image denoising. The ground truth contours of small and large bowels were delineated manually by an experienced researcher and independently verified by two radiation oncologists following well-established contouring consensus guidelines (Amjad et al. 2022, Lukovic et al. 2020, Heerkens et al. 2017) to avoid contouring uncertainties.

Table 1.

MRI datasets used for deep learning model training, validation and testing.

| (a) MRI acquisition parameters | | | |
|---|---|---|---|
| Dataset information | MR-SIM | MRL | |
| Number of scans | 76 | 84 | |
| Number of patients | 76 | 42 | |
| MRI sequence | T2-weighted HASTE | TFE (37 scans) | BTFE (47 scans) |
| repetition time (msec) | 2000 | 4.40~5.32 | 4.30~5.09 |
| echo time (msec) | 95~98 | 1.85~1.98 | 2.07~2.21 |
| flip angle (°) | 150~160 | 25 | 50 |
| matrix size | 320×212 ~ 320×288 | 256×256 ~ 352×352 | 256×256 ~ 384×384 |
| pixel size (mm²) | 1.06×1.06 ~ 1.31×1.31 | 1.59×1.59 ~ 1.64×1.64 | 1.46×1.46 ~ 1.64×1.64 |
| slice thickness (mm) | 3~6 | 2.38~2.50 | 2.38~2.50 |
| (b) Datasets for DL-ACR U-Net models | | | |
| Number of scans (number of minor-error contours) | | | |
| Model – CQC1 | | | |
| Training set | 46 (1822) | 69 (1887) | |
| Validation set | 15 (722) | 5 (333) | |
| Testing set | 15 (750) | 10 (732) | |
| Model – CQC2 | | | |
| Training set | 46 (3230) | 69 (3999) | |
| Validation set | 15 (1220) | 5 (418) | |
| Testing set | 15 (1344) | 10 (1033) | |
| (c) Datasets for ACR performance evaluation | | | |
| Number of scans (number of bowel contours) | | | |
| Model – CQC1 | | | |
| Major-error group | 15 (401) | 10 (485) | |
| Minor-error group | 15 (750) | 10 (732) | |
| Model – CQC2 | | | |
| Major-error group | 15 (433) | 10 (574) | |
| Minor-error group | 15 (1344) | 10 (1033) | |

2.2. Deep learning Auto-segmentation

The MRI sets were input into previously-developed DLAS models implemented in a research tool (Admire, Elekta, Sweden)(Amjad et al. 2020, Amjad et al. 2021) to generate DLAS contours of small and large bowels. These DLAS models were developed based on a 3D deep CNN architecture (a modified 3D-ResUNet)(Yu et al. 2017, Amjad et al. 2022) to automatically segment multiple abdominal organs on different MRI contrasts. The obtained DLAS contours of bowels were used as the initial contours in this study to develop and test the ACR performance.

2.3. Image preprocessing

For the ACR application, the image intensity was normalized to the range [0, 255], and the image contrast was further enhanced using a contrast-limited adaptive histogram equalization method(Zuiderveld 1994). Each MRI slice was then cropped into multiple 2D subregions based on the initial DLAS bowel contours (after dilation using a square structuring element of size 25×25), so that each cropped subregion included at least one complete bowel loop of the initial contours. The subregion images and contours were resized to a fixed size of 96×96 pixels before being fed into our proposed ACR workflow. After the contour correction, the subregions were resized back to their original sizes for contour quality evaluation.
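As a rough illustration of the normalization, dilation and cropping steps described above, the following pure-Python sketch operates on small nested-list images. It is not the authors' implementation: the function names are hypothetical, contrast enhancement and resizing to 96×96 are omitted, and a single bounding box around the whole dilated mask is used instead of one subregion per bowel loop.

```python
# Illustrative sketch only; all function names are hypothetical.

def normalize_to_255(image):
    """Min-max scale a 2D image (list of rows) to the range [0, 255]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    scale = 255.0 / (hi - lo)
    return [[(v - lo) * scale for v in row] for row in image]


def dilate_square(mask, size=25):
    """Binary dilation of a 2D 0/1 mask with a size x size square element."""
    half = size // 2
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                # Switch on every pixel covered by the square centred here.
                for rr in range(max(0, r - half), min(rows, r + half + 1)):
                    for cc in range(max(0, c - half), min(cols, c + half + 1)):
                        out[rr][cc] = 1
    return out


def bounding_box(mask):
    """Return inclusive (row_min, row_max, col_min, col_max) of nonzero pixels."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        raise ValueError("mask is empty")
    rs = [r for r, _ in coords]
    cs = [c for _, c in coords]
    return min(rs), max(rs), min(cs), max(cs)


def crop(image, box):
    """Crop a 2D image to an inclusive bounding box."""
    r0, r1, c0, c1 = box
    return [row[c0:c1 + 1] for row in image[r0:r1 + 1]]
```

In practice, array libraries would be used for these operations; the nested-list version is only meant to make each step explicit.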

2.4. Contour quality classification

The quality of all contours (both initial DLAS contours and post-ACR contours) was classified into three groups (acceptable, minor-error, and major-error) using the following two contour quality classification (CQC) methods, each based on its own set of criteria.

CQC1:

Classification based on the AAPM Task Group report 132 (TG-132)(Brock et al. 2017). TG-132 provides recommendations on evaluating 3D contours using quantitative metrics. Based on our practice and considering the recommended criteria from TG-132, the most commonly used metrics, DSC and MDA, were used to classify our DLAS contours in 2D: acceptable group with DSC ≥ 0.8 and MDA ≤ 3 mm, major-error group with DSC < 0.5 or MDA > 8 mm, and minor-error group with the remaining contours.

CQC2:

Classification based on the subsequent editing effort as recently proposed by Zhang et al. (Zhang et al. 2022b). The acceptable, minor-error and major-error groups were defined by machine learning classification models using a total of 7 quantitative accuracy metrics: DSC, MDA, HD, surface DSC (sDSC)(Nikolov et al. 2018), added path length (APL)(Vaassen et al. 2020), slice area and relative APL (rAPL). The sDSC calculates the surface overlap of two contours, and the APL represents the surface length of the ground truth contour that is not correctly traced by the initial DLAS contour. These two relatively new metrics have been reported to be more clinically relevant, as they are better correlated with the contour editing time(Vaassen et al. 2020).
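The CQC1 rule is a simple threshold test on two metrics and can be sketched as follows. The function name and the string labels are illustrative choices; the thresholds are those quoted for CQC1 above. CQC2 relies on trained classification models and is not reproduced here.

```python
def classify_cqc1(dsc, mda_mm):
    """Classify a 2D contour with the CQC1 thresholds quoted in the text.

    acceptable:  DSC >= 0.8 and MDA <= 3 mm
    major-error: DSC < 0.5 or MDA > 8 mm
    minor-error: everything else
    """
    if dsc >= 0.8 and mda_mm <= 3.0:
        return "acceptable"
    if dsc < 0.5 or mda_mm > 8.0:
        return "major-error"
    return "minor-error"
```

For example, a contour with DSC = 0.71 and MDA = 3.65 mm falls into the minor-error group, while one with DSC = 0.90 but MDA = 9 mm is a major error because either criterion alone is sufficient.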

2.5. Automatic contour refinement workflow

Our proposed ACR workflow incorporates two methods as follows.

ACM-ACR:

the previously developed ACM method (Ding et al. 2022). It has been previously shown that the ACM method can be particularly useful for moving inaccurate contours from the major-error group into the minor-error or acceptable group (Ding et al. 2022).

DL-ACR:

the DL-based ACR models that can work in conjunction with ACM-ACR. Based on the U-Net architecture(Ronneberger, Fischer and Brox 2015), the DL models were developed in this work primarily to correct the minor-error contours and also to further refine the major-error contours improved by the ACM-ACR method. Based on the two different CQC methods mentioned above, two U-Net models were trained using the correspondingly defined minor-error contours from both the MR-SIM and MRL datasets. Table 1(b) lists the numbers of scans and contours used for training, validation and testing of the two U-Net models. For both MR-SIM and MRL datasets, the scans in each set (training, validation, and testing) were randomly selected but kept the same for both models. For any given patient, including the patients with multiple scans acquired with different sequences, images from the same patient were always used in one set (training, validation, or testing).

Both models (U-Net Model-CQC1 and U-Net Model-CQC2) were trained with two input channels: 1) the pre-processed MRI images, which were further normalized to [0,1], and 2) the initial minor-error contours, used as guidance since they were assumed to be close to the ground truth. Data augmentation was performed both offline(Dang et al. 2022) (3D affine transformations including shifting, rotation, and scaling, elastic B-spline deformation, and image quality augmentation; see Supplementary materials for more details) and online (rotation, flipping and scaling) to expand the diversity of the training set. Both networks were trained for 300 epochs with a batch size of 16 using the Adam optimizer(Kingma and Ba 2014) with a learning rate of 10⁻⁵, and validation was performed after each epoch. The U-Net outputs were compressed into the range (0,1) using a sigmoid function, and the training objective was to minimize the binary cross entropy loss between the U-Net outputs and ground truth contours. A threshold of 0.5 was applied to binarize the U-Net outputs and obtain the final corrected contours. The model training, validation and testing were performed in Keras with TensorFlow backend(Abadi et al. 2016) on a Windows workstation with an NVIDIA GTX 1060 GPU.
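The output stage and training objective described above (sigmoid, binary cross entropy, 0.5 threshold) can be written out in a few lines. This is a plain-Python sketch on flat lists of pixel values, not the Keras code used in the study; the function names, the clipping constant `eps`, and the choice of a strict `>` at the threshold are assumptions.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function mapping a logit to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binary_cross_entropy(probs, targets, eps=1e-7):
    """Mean binary cross entropy between probabilities and 0/1 labels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


def binarize(probs, threshold=0.5):
    """Turn per-pixel probabilities into a 0/1 mask (strict > is an assumption)."""
    return [1 if p > threshold else 0 for p in probs]


# Toy example: four pixel logits and their ground-truth labels.
logits = [2.0, -1.0, 0.5, -3.0]
truth = [1, 0, 1, 0]
probs = [sigmoid(x) for x in logits]
loss = binary_cross_entropy(probs, truth)
mask = binarize(probs)
```

The loss is low when confident predictions agree with the ground truth and grows quickly when they disagree, which is what drives the network output toward the manually delineated contour.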

A schematic illustration of the main steps in our ACR workflow is shown in Figure 1.

Figure 1.

A schematic illustration of the combined ACR workflow including both ACM-ACR and DL-ACR methods.
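The routing logic of the combined workflow can be sketched as below. The three callables (`classify`, `acm_acr`, `dl_acr`) are hypothetical stand-ins for a CQC method and the two refinement methods. The sketch assumes that DL-ACR is applied to every ACM-ACR output in the major-error branch, which is one reading of the description in the text.

```python
def run_acr(contour, classify, acm_acr, dl_acr):
    """Route one contour through the combined ACR workflow.

    Returns the (possibly refined) contour and its quality label afterwards.
    """
    label = classify(contour)
    if label == "major-error":
        # Major errors: ACM-ACR first, then DL-ACR on the ACM output.
        contour = dl_acr(acm_acr(contour))
    elif label == "minor-error":
        # Minor errors: DL-ACR directly, without an initial ACM-ACR.
        contour = dl_acr(contour)
    # Acceptable contours pass through unchanged.
    return contour, classify(contour)
```

Passing the methods in as arguments keeps the routing independent of which CQC method (CQC1 or CQC2) and which trained model are used.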

2.6. Performance Evaluation

Since both major- and minor-error groups would be corrected by the DL-ACR model, only contours from the testing set were used to evaluate the performance of the combined ACR workflow. For the major-error group, the DL-ACR models were applied after ACM-ACR to further refine the contours. For the minor-error group, contours were directly corrected by DL-ACR without first using ACM-ACR. The evaluation was performed separately for the MR-SIM and MRL datasets. Table 1(c) lists the numbers of bowel contours with major and minor errors in the two datasets used for the model performance evaluation. The contour quality was measured by DSC, MDA, HD 95%, sDSC, and APL compared with the manually delineated ground truth contours. A 2-mm tolerance was used in the calculation of the sDSC and APL, as a distance difference within 2 mm between the initial or ACR contour and the ground truth contour can be practically acceptable. Changes in these five accuracy metrics for the contours obtained before and after each ACR step were analyzed using the paired t-test to assess whether the improvements from the ACR workflow were statistically significant.
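Two of the quantities used in this evaluation are easy to state explicitly: the DSC between two contours and the paired t statistic on a metric measured before and after a refinement step. The sketch below uses only the Python standard library; the function names are illustrative, masks are represented as sets of pixel coordinates, and the example values are made up. Converting the t statistic to a p value requires the t distribution and is left to a statistics package.

```python
import math
import statistics


def dice(mask_a, mask_b):
    """Dice similarity coefficient between two sets of pixel coordinates."""
    a, b = set(mask_a), set(mask_b)
    if not a and not b:
        return 1.0  # two empty masks are treated as identical
    return 2.0 * len(a & b) / (len(a) + len(b))


def paired_t_statistic(before, after):
    """Return (t, degrees of freedom) for paired samples, using after - before."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_d == 0:
        raise ValueError("all paired differences are identical")
    return mean_d / (sd_d / math.sqrt(len(diffs))), len(diffs) - 1


# Made-up DSC values for four contours before and after a refinement step.
dsc_before = [0.30, 0.40, 0.50, 0.60]
dsc_after = [0.40, 0.55, 0.60, 0.80]
t_value, dof = paired_t_statistic(dsc_before, dsc_after)
```

A positive t value here means the metric increased on average after refinement; for distance metrics such as MDA or HD 95%, an improvement corresponds to a negative t value.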

3. Results

Table 2 summarizes the performances of the previous ACM-ACR, the newly developed DL-ACR and the combined ACR workflow based on CQC1 with the MR-SIM and MRL testing data, respectively. Table 3 reports the corresponding ACR performance based on CQC2. The best ACR performance was seen in the MR-SIM dataset when using the ACR workflow developed based on CQC1 (Table 2): (1) for the major-error group, 44% (177/401) of the contours were improved to minor-error group and 5% (22/401) became acceptable by applying ACM-ACR; among the 177 contours that were turned into minor-error group, DL-ACR further refined 49% (87/177) to acceptable; and overall, 36% (145/401) were improved to minor-error contours, and 30% (119/401) became acceptable after sequentially applying the ACM-ACR and DL-ACR methods; (2) for the minor-error group, 43% (320/750) were directly improved to acceptable contours by DL-ACR.
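The percentages quoted above for the MR-SIM testing set under CQC1 follow directly from the contour counts, as the short check below shows. The counts are taken from the text; the number of contours remaining in the major-error group is derived here by subtraction, on the assumption that every contour falls into exactly one of the three groups.

```python
def percent(count, total):
    """Percentage rounded to the nearest integer, as reported in the text."""
    return round(100.0 * count / total)


MAJOR_TOTAL = 401  # major-error contours, MR-SIM testing set, CQC1
MINOR_TOTAL = 750  # minor-error contours, MR-SIM testing set, CQC1

after_acm = {"minor-error": 177, "acceptable": 22}
after_acm_and_dl = {"minor-error": 145, "acceptable": 119}

# Derived, not reported: contours still classified as major-error at the end.
still_major = MAJOR_TOTAL - sum(after_acm_and_dl.values())

for label, count in after_acm_and_dl.items():
    print(f"{label}: {percent(count, MAJOR_TOTAL)}% ({count}/{MAJOR_TOTAL})")
print(f"still major-error: {percent(still_major, MAJOR_TOTAL)}% ({still_major}/{MAJOR_TOTAL})")
print(f"minor-error to acceptable: {percent(320, MINOR_TOTAL)}% (320/{MINOR_TOTAL})")
```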

Table 2.

Performance of the combined ACR workflow on the MR-SIM and MRL testing sets based on CQC1 (the final results are in bold).

| CQC1 | Major-error group | | | Minor-error group | |
|---|---|---|---|---|---|
| | initial DLAS contours | after ACM-ACR | after DL-ACR | initial DLAS contours | after DL-ACR |
| MR-SIM | | | | | |
| numbers of contours improved to minor-error group | N/A | 44% (177/401)* | 36% (145/401) | N/A | N/A |
| numbers of contours improved to acceptable group | N/A | 5% (22/401) | 30% (119/401) | N/A | 43% (320/750) |
| DSC | 0.33 (±0.15) | 0.53 (±0.17) | 0.63 (±0.23) | 0.71 (±0.10) | 0.79 (±0.12) |
| MDA (mm) | 7.78 (±3.02) | 6.15 (±3.62) | 5.33 (±4.27) | 3.65 (±1.55) | 3.22 (±2.24) |
| HD 95% (mm) | 20.27 (±8.25) | 17.36 (±10.24) | 15.59 (±12.19) | 13.66 (±6.58) | 12.29 (±8.85) |
| sDSC | 0.28 (±0.15) | 0.41 (±0.19) | 0.51 (±0.25) | 0.55 (±0.15) | 0.65 (±0.20) |
| APL (mm) | 83.26 (±38.42) | 68.70 (±42.88) | 57.44 (±45.20) | 83.44 (±60.80) | 71.79 (±69.53) |
| MRL | | | | | |
| numbers of contours improved to minor-error group | N/A | 42% (202/485)† | 35% (172/485) | N/A | N/A |
| numbers of contours improved to acceptable group | N/A | 8% (38/485) | 28% (136/485) | N/A | 36% (267/732) |
| DSC | 0.34 (±0.16) | 0.54 (±0.19) | 0.62 (±0.25) | 0.70 (±0.10) | 0.77 (±0.14) |
| MDA (mm) | 9.64 (±5.36) | 8.11 (±6.91) | 7.37 (±8.23) | 4.39 (±1.69) | 4.13 (±2.95), p = 0.009 |
| HD 95% (mm) | 26.18 (±14.59) | 24.05 (±18.49) | 22.18 (±21.68) | 17.34 (±7.21) | 16.43 (±11.56), p = 0.017 |
| sDSC | 0.29 (±0.14) | 0.43 (±0.19) | 0.51 (±0.25) | 0.57 (±0.14) | 0.65 (±0.20) |
| APL (mm) | 101.59 (±47.66) | 81.61 (±46.35) | 69.30 (±51.01) | 83.43 (±67.05) | 69.75 (±72.28) |

Note: paired t test p values <<0.001 after each ACR step if not listed in the table (see Supplementary materials for the exact p values).

\* Among these 177 contours that were improved to the minor-error group by ACM-ACR, 87 (49%) were further improved to acceptable contours by DL-ACR.

† Among these 202 contours that were improved to the minor-error group by ACM-ACR, 88 (44%) were further improved to acceptable contours by DL-ACR.

Table 3.

Performance of the combined ACR workflow on the MR-SIM and MRL testing sets based on CQC2 (the final results are in bold).

| CQC2 | Major-error group | | | Minor-error group | |
|---|---|---|---|---|---|
| | initial DLAS contours | after ACM-ACR | after DL-ACR | initial DLAS contours | after DL-ACR |
| MR-SIM | | | | | |
| numbers of contours improved to minor-error group | N/A | 31% (134/433)* | 35% (151/433) | N/A | N/A |
| numbers of contours improved to acceptable group | N/A | 1% (5/433) | 5% (24/433) | N/A | 29% (391/1344) |
| DSC | 0.56 (±0.25) | 0.65 (±0.20) | 0.70 (±0.20) | 0.73 (±0.23) | 0.82 (±0.16) |
| MDA (mm) | 6.59 (±3.29) | 5.72 (±3.26) | 5.21 (±3.38) | 3.15 (±2.34) | 2.46 (±2.10) |
| HD 95% (mm) | 19.36 (±7.93) | 17.82 (±9.59) | 16.84 (±10.24) | 10.87 (±6.99) | 9.42 (±7.77) |
| sDSC | 0.37 (±0.19) | 0.45 (±0.18) | 0.50 (±0.20) | 0.62 (±0.22) | 0.73 (±0.19) |
| APL (mm) | 128.28 (±60.52) | 112.02 (±63.90) | 106.93 (±71.13) | 62.45 (±48.36) | 50.90 (±52.55) |
| MRL | | | | | |
| numbers of contours improved to minor-error group | N/A | 22% (129/574)† | 28% (159/574) | N/A | N/A |
| numbers of contours improved to acceptable group | N/A | 2% (10/574) | 5% (27/574) | N/A | 21% (222/1033) |
| DSC | 0.53 (±0.21) | 0.64 (±0.18) | 0.67 (±0.19) | 0.69 (±0.25) | 0.79 (±0.16) |
| MDA (mm) | 7.88 (±5.34) | 7.28 (±6.44) | 7.03 (±6.61) | 4.07 (±3.26) | 3.14 (±2.53) |
| HD 95% (mm) | 24.54 (±13.86) | 23.27 (±16.99) | 23.02 (±17.69), p = 0.108 | 13.64 (±8.70) | 11.84 (±8.77) |
| sDSC | 0.39 (±0.17) | 0.45 (±0.18) | 0.49 (±0.20) | 0.62 (±0.24) | 0.70 (±0.19) |
| APL (mm) | 123.79 (±63.69) | 109.64 (±68.21) | 105.44 (±74.09) | 54.40 (±38.61) | 45.01 (±37.26) |

Note: paired t test p values <<0.001 after each ACR step if not listed in the table (see Supplementary materials for the exact p values).

\* Among these 134 contours that were improved to the minor-error group by ACM-ACR, 21 (16%) were further improved to acceptable contours by DL-ACR.

† Among these 129 contours that were improved to the minor-error group by ACM-ACR, 9 (7%) were further improved to acceptable contours by DL-ACR.

Larger improvements were observed for the combined ACR workflow developed based on CQC1 than for that based on CQC2. The combined ACR workflow also performed better on MR-SIM data than on MRL data. Nonetheless, regardless of the CQC method chosen and the dataset tested, and with the exception of HD 95%, the other four contour accuracy metrics (DSC, MDA, sDSC, and APL) were all significantly improved (p values <0.05; most p values <<0.001) after each ACR step (see Supplementary materials for the exact p values). The execution time to refine a testing contour was less than 2 seconds for ACM-ACR and less than 1 second for DL-ACR on a GTX 1060 GPU.

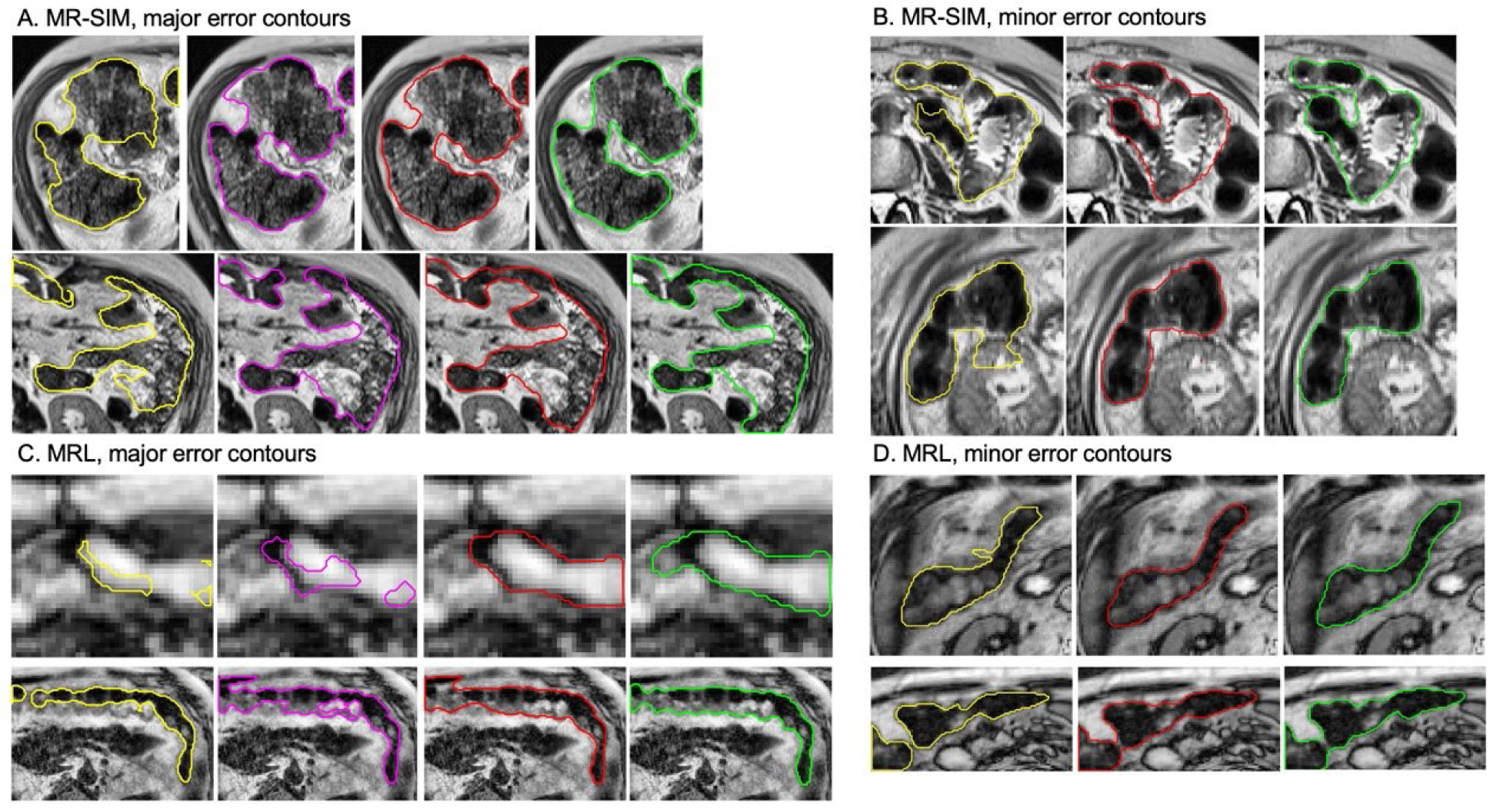

Figures 2 and 3 show examples of the performance of the combined ACR workflow developed based on CQC1 and CQC2, respectively. Upon comparison with the initial DLAS contours, the combined ACR workflow was found to deliver substantially improved contour accuracy. Even for the very irregularly shaped bowel contours, the ACR workflow was able to improve the contour quality.

Figure 2.

Examples of the correction performance using the ACR workflow evaluated based on CQC1: initial DLAS contour (yellow), ACR workflow-ACM corrected contour (magenta), ACR workflow-U-Net corrected contour (red), and ground truth contours (green) from MR-SIM and MRL testing data, respectively. Images are rescaled for better visualization and do not reflect their original sizes. Abbreviations: ACR = automatic contour refinement; DLAS = deep learning auto-segmentation; ACM = active contour model.

Figure 3.

Examples of the correction performance using the ACR workflow evaluated based on CQC2: initial DLAS contour (yellow), ACR workflow-ACM corrected contour (magenta), ACR workflow-U-Net corrected contour (red), and ground truth contours (green) from MR-SIM and MRL testing data, respectively. Images are rescaled for better visualization and do not reflect their original sizes. Abbreviations: ACR = automatic contour refinement; DLAS = deep learning auto-segmentation; ACM = active contour model.

4. Discussion

In this study, a deep learning-based automatic contour refinement method was developed to quickly correct inaccurate bowel contours generated by DLAS. The DL-ACR method was developed particularly to address DLAS contours with minor errors and was combined with the previously developed ACM-ACR to address the contours with major errors. The combined ACR workflow was observed to substantially correct the inaccurate DLAS contours of complex bowels, thus reducing manual editing and accelerating the segmentation process for MRgART.

Although DLAS has been widely investigated and applied for organ auto-contouring in many clinical applications, current DLAS methods still have limited success, particularly for complex structures in the abdomen such as bowels. The manual editing of bowel loops is generally time-consuming and labor-intensive due to their irregular shapes. In particular, the small and large bowels are usually organs at risk for RT of abdominal cancers. During MRI-guided OLAR, fast recontouring on the MRI of the day is highly desired. Previously, we attempted to automatically correct the inaccurate bowel contours by implementing an improved ACM(Ding et al. 2022). Although the quality of major-error contours could be largely improved, only a small fraction of them (<10%) could be corrected to a practically acceptable level per the TG-132 recommendation (DSC ≥ 0.8 and MDA ≤ 3 mm). In this study, we developed DL-based U-Net models to work in conjunction with the ACM to further improve the contour refinement for the major-error contours. Compared to the DL-only refinement, the combined methods substantially improved the major-error contour refinement. As shown in Table 2, after the two-step ACR workflow (ACM-ACR + DL-ACR), 30% and 28% of the major-error contours were eventually improved to practically acceptable per the TG-132 recommendation (CQC1) for the MR-SIM and MRL datasets, respectively. For contours with minor errors, the previous ACM method could also improve the contour accuracy, but the average accuracy metrics, particularly MDA, showed minimal or no improvement. In this study, the trained DL-ACR models were used directly to correct the minor-error contours, and indeed they outperformed the ACM (see Supplementary materials for direct comparison). In addition, the same U-Net models were also effective in further refining the ACM-corrected major-error contours, as mentioned above.

In this study, we adopted two approaches to classify the inaccurate contour quality: CQC1 which was the conventional way based on DSC and MDA (TG-132 recommendation), and CQC2 which was the clinical applicability-oriented automatic contour evaluation models developed by our group (Zhang et al. 2022b). Instead of only relying on DSC and MDA to evaluate the contour quality, our automatic CQC2 method incorporated a total of 7 quantitative accuracy metrics, including DSC, MDA, HD, sDSC, APL, slice area and rAPL, to classify the contour quality. We implemented our pre-trained automatic CQC2 models for small and large bowels in this study, and then developed and evaluated the following ACR workflow using the same criterion (CQC2). As expected, the ACR performance was different between using the two classification methods. In general, the CQC2 method is more stringent than CQC1 method as more accuracy metrics were used to train the CQC2 models based on the human visual reviewing and labeling. This can be seen from Table 1(c), where more inaccurate contours are identified by CQC2 from the same testing scans. Even with the tighter criteria of CQC2, the proposed combined ACR workflow can improve 40% of the major-error group (35% to minor-error and 5% to acceptable groups), and 29% of the minor-error group for MR-SIM data (33% and 21% respectively for MRL), which can significantly reduce the workload for manual correction and improve the contouring efficiency.

The combined ACR workflow was seen to perform better on MR-SIM data than on MRL data. This is primarily due to the relatively lower image quality of the MRL scans, which contain motion artifacts because they were motion-averaged images derived from 4D MRIs(Paulson et al. 2020). In contrast, the MR-SIM scans were acquired with a respiration trigger. It is expected that the model performance will improve when larger training datasets become available.

The current study has several limitations. First, the ACR algorithms have intrinsic limitations. For example, as mentioned in our previous publication, the correction performance of the ACM process is inherently limited by the accuracy of the probability maps from the DLAS, which can affect the subsequent DL-ACR performance (the ACM output would be used as the input). The current U-Net models can also be improved by using more advanced DL networks and larger training datasets. In addition, both the DLAS used to generate the initial contours and our ACR workflow have difficulties in distinguishing small and large bowels, which were treated as separate contours through the entire process. It is anticipated that better correction performance can be achieved if small and large bowels are combined. Second, although the contour quality has been shown to be substantially improved by the proposed ACR workflow, a fair portion of the contours undergoing the ACR workflow remained inaccurate or suboptimal and would subsequently require manual editing. More robust ACR methods are still needed.

It is also noted that, even though in this study the CQC models rely on ground truth contours to identify the contour quality categories (acceptable, minor-error and major-error), our group is currently developing a deep learning based automatic contour quality assurance (DL-ACQA) tool for clinical application (Zhang et al. 2022a). This DL-ACQA tool was designed to classify any contours (including DLAS) on the images without ground truth. Our preliminary results show that DL-ACQA models achieved areas under receiver operating characteristic curves (AUCs) in the range of 0.978 to 0.997 and the accuracy in the range of 90% to 94% for five abdominal organs (large bowel, small bowel, stomach, duodenum, and pancreas)(Zhang et al. 2022a). The identified minor-error and major-error contours will then be corrected using the proposed ACR workflow in this study. In addition, the DL-ACQA and ACR may be performed iteratively to further improve the contour quality during the clinical implementation, which is a part of our future work.

The present ACR workflow is a part of our effort to develop the 4-step segmentation process in the MOLAR workflow for MRgART. Within the desired MOLAR workflow, a quick auto-segmentation of the organs at risk on daily MRI will be performed using a multi-layer pipeline that includes (1) auto-segmenting using DL models trained with multi-sequence MRIs (Zhang et al. 2020, Amjad et al. 2022, Liang et al. 2020), (2) automatically validating and classifying the auto-segmented contours using a DL-based classification pipeline (Zhang et al. 2019), (3) automatically refining the identified inaccurate contours (Ding et al. 2022), including the approaches presented in this study, and (4) editing the remaining inaccurate contours using interactive semi-automatic and manual editing tools (Zhang et al. 2022c). The entire 4-step segmentation pipeline is designed and developed to be fully automated, except for the last editing step. It is expected that such a segmentation solution will substantially accelerate the recontouring process and make MRI-guided OLAR more practical.

5. Conclusion

A deep learning-based automatic contour refinement method was developed to correct inaccurate contours such as those from auto-segmentation. The DL-ACR method, when combined with the previously developed ACM-ACR, can substantially improve the quality of inaccurate MRI contours of small and large bowels in a fully automated and fast manner, reducing the subsequent manual editing required for DLAS and thus accelerating MRgART.

Supplementary Material

Acknowledgements

Inputs from Ergun Ahunbay, PhD, Haidy Nasief, PhD, Eric Paulson, PhD, William Hall, MD, Beth Erickson, MD, Jiaofeng Xu, PhD, and Virgil Willcut, MS are appreciated. The research was partially supported by the Medical College of Wisconsin Fotsch Foundation and the National Cancer Institute of the National Institutes of Health under award number R01CA247960. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References:

- Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G & Isard M. 2016. TensorFlow: a system for large-scale machine learning. In 12th USENIX symposium on operating systems design and implementation (OSDI 16), 265–283.

- Ahunbay E, Parchur AK, Paulson E, Chen X, Omari E & Li XA (2022) Development and implementation of an automatic air delineation technique for MRI-guided adaptive radiation therapy. Physics in Medicine & Biology, 67, 145011.

- Ahunbay EE, Thapa R, Chen X, Paulson E & Li XA (2019) A technique to rapidly generate synthetic computed tomography for magnetic resonance imaging–guided online adaptive replanning: an exploratory study. International Journal of Radiation Oncology* Biology* Physics, 103, 1261–1270.

- Amjad A, Xu J, Thill D, Lawton C, Hall W, Awan MJ, Shukla M, Erickson BA & Li XA (2022) General and custom deep learning autosegmentation models for organs in head and neck, abdomen, and male pelvis. Medical Physics, 49, 1686–1700.

- Amjad A, Xu J, Thill D, O’Connell N, Buchanan L, Jones I, Hall W, Erickson B & Li A (2020) Deep Learning-based Auto-segmentation on CT and MRI for Abdominal Structures. International Journal of Radiation Oncology, Biology, Physics, 108, S100–S101.

- Amjad A, Xu J, Zhu X, Buchanan L, Kun T, O’Connell N, Thill D, Erickson B, Hall W & Li X. 2021. Deep Learning Auto-Segmentation on Multi-Sequence MRI for MR-Guided Adaptive Radiation Therapy. In American Society for Radiation Oncology (ASTRO) Annual Meeting.

- Brock KK, Mutic S, McNutt TR, Li H & Kessler ML (2017) Use of image registration and fusion algorithms and techniques in radiotherapy: Report of the AAPM Radiation Therapy Committee Task Group No. 132. Medical physics, 44, e43–e76.

- Chen Y, Ruan D, Xiao J, Wang L, Sun B, Saouaf R, Yang W, Li D & Fan Z (2020) Fully automated multi-organ segmentation in abdominal magnetic resonance imaging with deep neural networks. Medical physics, 47, 4971.

- Dang N, Zhang Y, Amjad A, Ding J, Sarosiek C & Li X. 2022. Automatic Image and Contour Augmentation for Deep Learning Auto-Segmentation of Complex Anatomy. In MEDICAL PHYSICS, E158–E158. WILEY 111 RIVER ST, HOBOKEN 07030–5774, NJ USA.

- Ding J, Zhang Y, Amjad A, Xu J, Thill D & Li XA (2022) Automatic contour refinement for deep learning auto-segmentation of complex organs in MRI-guided adaptive radiotherapy. Advances in Radiation Oncology, 100968.

- Fu Y, Mazur TR, Wu X, Liu S, Chang X, Lu Y, Li HH, Kim H, Roach MC & Henke L (2018) A novel MRI segmentation method using CNN-based correction network for MRI-guided adaptive radiotherapy. Medical physics, 45, 5129–5137.

- Güngör G, Serbez İ, Temur B, Gür G, Kayalılar N, Mustafayev TZ, Korkmaz L, Aydın G, Yapıcı B & Atalar B (2021) Time Analysis of Online Adaptive Magnetic Resonance–Guided Radiation Therapy Workflow According to Anatomical Sites. Practical Radiation Oncology, 11, e11–e21.

- Heerkens HD, Hall W, Li X, Knechtges P, Dalah E, Paulson E, van den Berg C, Meijer G, Koay E & Crane C (2017) Recommendations for MRI-based contouring of gross tumor volume and organs at risk for radiation therapy of pancreatic cancer. Practical radiation oncology, 7, 126–136.

- Kingma DP & Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Lagendijk JJ, Raaymakers BW & Van Vulpen M. 2014. The magnetic resonance imaging–linac system. In Seminars in radiation oncology, 207–209. Elsevier.

- Lamb J, Cao M, Kishan A, Agazaryan N, Thomas DH, Shaverdian N, Yang Y, Ray S, Low DA & Raldow A (2017) Online adaptive radiation therapy: implementation of a new process of care. Cureus, 9.

- Liang Y, Schott D, Zhang Y, Wang Z, Nasief H, Paulson E, Hall W, Knechtges P, Erickson B & Li XA (2020) Auto-segmentation of pancreatic tumor in multi-parametric MRI using deep convolutional neural networks. Radiotherapy and Oncology, 145, 193–200.

- Lim SN, Ahunbay EE, Nasief H, Zheng C, Lawton C & Li XA (2020) Indications of online adaptive replanning based on organ deformation. Practical radiation oncology, 10, e95–e102.

- Lukovic J, Henke L, Gani C, Kim TK, Stanescu T, Hosni A, Lindsay P, Erickson B, Khor R & Eccles C (2020) MRI-based upper abdominal organs-at-risk atlas for radiation oncology. International Journal of Radiation Oncology* Biology* Physics, 106, 743–753.

- Mutic S & Dempsey JF. 2014. The ViewRay system: magnetic resonance–guided and controlled radiotherapy. In Seminars in radiation oncology, 196–199. Elsevier.

- Nasief HG, Parchur AK, Omari E, Zhang Y, Chen X, Paulson E, Hall WA, Erickson B & Li XA (2022) Predicting necessity of daily online adaptive replanning based on wavelet image features for MRI guided adaptive radiation therapy. Radiotherapy and Oncology, 176, 165–171.

- Nikolov S, Blackwell S, Zverovitch A, Mendes R, Livne M, De Fauw J, Patel Y, Meyer C, Askham H & Romera-Paredes B (2018) Deep learning to achieve clinically applicable segmentation of head and neck anatomy for radiotherapy. arXiv preprint arXiv:1809.04430.

- Parchur AK, Lim S, Nasief HG, Omari EA, Zhang Y, Paulson ES, Hall WA, Erickson B & Li XA (2022) Auto-detection of necessity for MRI-guided online adaptive replanning using a machine learning classifier. Medical Physics.

- Paulson ES, Ahunbay E, Chen X, Mickevicius NJ, Chen G-P, Schultz C, Erickson B, Straza M, Hall WA & Li XA (2020) 4D-MRI driven MR-guided online adaptive radiotherapy for abdominal stereotactic body radiation therapy on a high field MR-Linac: Implementation and initial clinical experience. Clinical and translational radiation oncology, 23, 72–79.

- Ronneberger O, Fischer P & Brox T. 2015. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, 234–241. Springer.

- Semelka RC, Kelekis NL, Thomasson D, Brown MA & Laub GA (1996) HASTE MR imaging: description of technique and preliminary results in the abdomen. Journal of Magnetic Resonance Imaging, 6, 698–699.

- Vaassen F, Hazelaar C, Vaniqui A, Gooding M, van der Heyden B, Canters R & van Elmpt W (2020) Evaluation of measures for assessing time-saving of automatic organ-at-risk segmentation in radiotherapy. Physics and Imaging in Radiation Oncology, 13, 1–6.

- Winkel D, Bol GH, Kroon PS, van Asselen B, Hackett SS, Werensteijn-Honingh AM, Intven MP, Eppinga WS, Tijssen RH & Kerkmeijer LG (2019) Adaptive radiotherapy: the Elekta Unity MR-linac concept. Clinical and translational radiation oncology, 18, 54–59.

- Yu L, Yang X, Chen H, Qin J & Heng PA. 2017. Volumetric ConvNets with mixed residual connections for automated prostate segmentation from 3D MR images. In Thirty-first AAAI conference on artificial intelligence.

- Zhang Y, Amjad A, Ding J, Dang N, Sarosiek C & Li A (2022a) A Multi-Layer Auto-Segmentation Quality Assurance and Correction Pipeline for MR-Guided Adaptive Radiotherapy. International Journal of Radiation Oncology, Biology, Physics, 114, S162.

- Zhang Y, Ding J, Amjad A, Sarosiek C, Dang N, Hall W, Erickson B & Li X. 2022b. Clinical Applicability-Oriented Contour Quality Classification for Auto-Segmentation. In MEDICAL PHYSICS, E261–E261. WILEY 111 RIVER ST, HOBOKEN 07030–5774, NJ USA.

- Zhang Y, Liang Y, Ding J, Amjad A, Paulson E, Ahunbay E, Hall WA, Erickson B & Li XA (2022c) A prior knowledge guided deep learning based semi-automatic segmentation for complex anatomy on MRI. International Journal of Radiation Oncology* Biology* Physics.

- Zhang Y, Paulson E, Lim S, Hall WA, Ahunbay E, Mickevicius NJ, Straza MW, Erickson B & Li XA (2020) A Patient-Specific Autosegmentation Strategy Using Multi-Input Deformable Image Registration for Magnetic Resonance Imaging–Guided Online Adaptive Radiation Therapy: A Feasibility Study. Advances in radiation oncology, 5, 1350–1358.

- Zhang Y, Plautz TE, Hao Y, Kinchen C & Li XA (2019) Texture-based, automatic contour validation for online adaptive replanning: a feasibility study on abdominal organs. Medical physics, 46, 4010–4020.

- Zuiderveld K (1994) Contrast limited adaptive histogram equalization. Graphics gems, 474–485.
