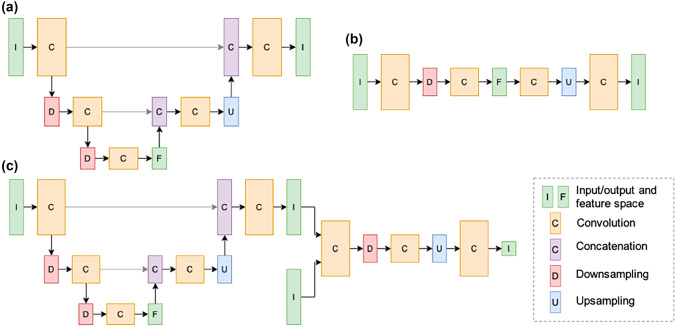

Fig. 2.

(a) U-Net consists of a fully convolutional encoder and decoder connected by skip connections that concatenate encoder feature maps with the corresponding decoder feature maps, which helps propagate spatial information to deeper network layers. (b) An autoencoder consists of an encoder, which maps images to a latent space of reduced dimensionality, and a decoder, which maps the latent vector back to image space. The dimensionality reduction suppresses random variations in the input while preserving the image features needed for reconstruction. (c) A generative adversarial network (GAN) consists of a generator, which produces an estimate of a ground-truth image, and a discriminator, which attempts to distinguish synthesized images from ground-truth images. The parameters of the two networks are updated in alternation, driving the generator toward outputs that the discriminator cannot distinguish from ground-truth images.
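To make the skip connection in panel (a) concrete, the following pure-Python sketch runs a two-level U-Net-style forward pass on a single-channel 4x4 image. It is a hypothetical toy, not the network in the figure. The helper names (`conv1x1`, `maxpool2`, `upsample2`, `concat`, `tiny_unet`) and the fixed weights are illustrative assumptions. It substitutes 1x1 convolutions for the usual 3x3 ones and nearest-neighbour upsampling for learned up-convolution, and it keeps encoder and decoder maps the same size so no cropping is needed before concatenation.

```python
from typing import List

# A feature map is a list of channels; each channel is a list of rows of floats.
Channel = List[List[float]]
FeatureMap = List[Channel]


def shape(fm: FeatureMap) -> tuple:
    """Return (channels, height, width)."""
    return (len(fm), len(fm[0]), len(fm[0][0]))


def conv1x1(fm: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """1x1 convolution (weighted sum over input channels) followed by ReLU."""
    _, h, w = shape(fm)
    out = []
    for row_w in weights:  # one weight row per output channel
        assert len(row_w) == len(fm), "weight row must match input channel count"
        out.append([[max(0.0, sum(wi * fm[i][y][x] for i, wi in enumerate(row_w)))
                     for x in range(w)] for y in range(h)])
    return out


def maxpool2(fm: FeatureMap) -> FeatureMap:
    """2x2 max pooling with stride 2: the encoder's downsampling step."""
    return [[[max(ch[2 * y][2 * x], ch[2 * y][2 * x + 1],
                  ch[2 * y + 1][2 * x], ch[2 * y + 1][2 * x + 1])
              for x in range(len(ch[0]) // 2)] for y in range(len(ch) // 2)]
            for ch in fm]


def upsample2(fm: FeatureMap) -> FeatureMap:
    """Nearest-neighbour 2x upsampling: the decoder's upsampling step (assumed)."""
    return [[[ch[y // 2][x // 2] for x in range(2 * len(ch[0]))]
             for y in range(2 * len(ch))] for ch in fm]


def concat(skip: FeatureMap, up: FeatureMap) -> FeatureMap:
    """Skip connection: stack encoder and decoder maps along the channel axis."""
    assert shape(skip)[1:] == shape(up)[1:], "spatial sizes must match"
    return skip + up


def tiny_unet(x: FeatureMap) -> FeatureMap:
    # Hypothetical fixed weights; a trained network would learn these.
    enc1 = conv1x1(x, [[1.0], [0.5]])                  # 1 -> 2 channels, full resolution
    enc2 = conv1x1(maxpool2(enc1),                     # 2 -> 4 channels, half resolution
                   [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
    dec1 = concat(enc1, upsample2(enc2))               # 2 + 4 = 6 channels, full resolution
    return conv1x1(dec1, [[1.0] * 6])                  # 6 -> 1 output channel


if __name__ == "__main__":
    img = [[[float(4 * r + c) for c in range(4)] for r in range(4)]]
    print(shape(tiny_unet(img)))  # (1, 4, 4)
```

The decoder stage receives 6 channels: 2 full-resolution channels from the encoder skip and 4 from the upsampled bottleneck. This concatenation is how fine spatial detail bypasses the downsampling path.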