Abstract

Generative adversarial networks (GANs) are a powerful class of deep learning models that have been successfully utilized in numerous fields. They belong to the broader family of generative methods, which learn to generate realistic data with a probabilistic model by learning distributions from real samples. In the clinical context, GANs have shown enhanced capabilities in capturing spatially complex, nonlinear, and potentially subtle disease effects compared to traditional generative methods. This review critically appraises the existing literature on the applications of GANs in imaging studies of various neurological conditions, including Alzheimer’s disease, brain tumors, brain aging, and multiple sclerosis. We provide an intuitive explanation of various GAN methods for each application and further discuss the main challenges, open questions, and promising future directions of leveraging GANs in neuroimaging. We aim to bridge the gap between advanced deep learning methods and neurology research by highlighting how GANs can be leveraged to support clinical decision making and contribute to a better understanding of the structural and functional patterns of brain diseases.

Keywords: Generative adversarial network, GAN, Neuroimaging, Pathology, Review

1. Introduction

Advances in medical imaging techniques, including magnetic resonance imaging (MRI) and positron emission tomography (PET), have provided in vivo imaging-derived phenotypes capturing patterns of brain development and aging, as well as of various diseases and disorders (Yu et al., 2018; Myszczynska et al., 2020; Rajpurkar et al., 2022). Embracing the “big data” era, the medical imaging community has widely adopted artificial intelligence (AI) for data analysis, from traditional statistical methods to machine learning (ML) models, which holds promise for clinical translation (Habes et al., 2020; Thompson et al., 2020; Marek et al., 2022). Statistical tools such as univariate and multivariate prediction models can learn the associations between structural/functional variability and cognitive/psychiatric symptomatology in the human brain. Notably, advanced AI techniques have been successfully utilized in numerous clinical applications, such as computer-aided diagnosis, disease biomarker identification, and personalized disease risk quantification, which are bound to further revolutionize medical research and clinical practice. Among these techniques, deep learning (DL) has drawn increasing attention in medical imaging. DL algorithms are powerful in capturing the complex non-linear relationships between input features, thereby extracting low-to-high level latent features that are predictive of the response of interest (Zhou et al., 2021; Singh et al., 2022; Bethlehem et al., 2022; Abrol et al., 2021; Davatzikos, 2019). So far, DL has been widely adopted in medical image processing tasks such as registration, reconstruction, segmentation, and synthesis, and in analysis tasks such as disease diagnosis, anomaly detection, and pathology and prognosis evolution prediction.

Generative adversarial networks (GANs), first introduced by Goodfellow et al. (2014), have had a profound influence on DL, leading to numerous applications. A GAN is a generative method that synthesizes realistic-looking features/images by learning the sample distribution from real data. GANs and their variants have shown great promise in image generation tasks such as image enhancement, cross-modality synthesis, text-to-image synthesis, and image-to-image translation (Gui et al., 2022; Wang et al., 2021a). This technique is particularly promising for neuroimaging and clinical neuroscience applications because it is capable of discovering and reproducing the complex and non-linear pathology patterns from medical images and data.

Many previous reviews of GANs focused on technical details of image synthesis in medical imaging (Yi et al., 2019; Laino et al., 2022; Qu et al., 2021; Jeong et al., 2022; Sorin et al., 2020). However, less attention has been paid to the adoption of GANs in clinical neuroimaging studies. In this review, we present the current state of GANs in neuroimaging research, in various applications including neurodegenerative disease diagnosis, cancer and anomaly detection, brain development modeling, dementia trajectory tracing, lesion evolution prediction, and tumor growth estimation. By showcasing these different applications discussed in the literature, we demonstrate the advantages of GANs for neuroimaging studies, compared to traditional ML methods. We also discuss the current limitations of GANs and potential opportunities for adopting GANs in future neuroimaging research.

Review perspective.

Our review emphasizes discovery and analysis of imaging phenotypes associated with neurological diseases via deep learning techniques, focusing on GANs in particular. We exclude papers solely focusing on methodology development for tasks such as image synthesis, registration, segmentation, reconstruction, modality translation, and dataset enlargement. We search Google Scholar, PubMed, and several pre-print platforms for research articles containing terms such as ‘GAN’, ‘Generative Adversarial Network’, ‘Medical Imaging’, and ‘Brain’. We then screen the titles and abstracts for a thematic match. To provide a thorough review, we build connection graphs for each included paper using Semantic Scholar in order to find additional relevant publications. Finally, we examine the methods and results of each paper in detail to decide whether it falls within the scope of our review. Based on the application areas of the selected papers, we divide them into two main categories: clinical diagnosis and disease progression. Each category is further split into finer tasks, which are described in the manuscript organization section.

Manuscript organization.

The rest of the paper is organized as follows. In Section 2, we provide background knowledge on GANs and their algorithmic extensions for applications in neuroimaging. In Sections 3 and 4, we comprehensively illustrate the applications of GANs in neurological research using imaging phenotypes. In Section 3, we discuss clinical diagnosis, including disease classification, with a primary focus on Alzheimer’s disease and brain tumor detection. In Section 4, we present modeling of imaging patterns of brain change in cognitively unimpaired brain aging and in several diseases, including Alzheimer’s disease, brain lesion evolution, and tumor growth. For each application, we introduce the background and challenges, describe the essential methodology for tackling the problem, showcase its advantages and promise based on evaluation results, and critique its limitations and pitfalls. Finally, in Section 5, we suggest promising future directions and discuss open questions for each neurological application utilizing GANs, based on current issues and challenges.

2. Preliminaries on GANs

GANs have the ability to approximate complex probability distributions and thereby generate realistic patterns or images, as well as capture effects of pathologic processes on imaging phenotypes. We will describe the mechanism of the standard GAN and its usage in neuroimaging studies (see Table 1). Then, we showcase a few GAN variants whose architectures have been modified to suit specific clinical tasks, such as disease diagnosis and prognosis.

Table 1.

Frequently applied GAN architectures in neuroimaging. Publications are ordered by year in ascending order.

| Publication | Highlights |
|---|---|
| GAN (Goodfellow et al., 2014) | Original GAN |
| CGAN (Mirza and Osindero, 2014) | Conditional GAN |
| InfoGAN (Chen et al., 2016) | Interpretable representation learning |
| CycleGAN (Zhu et al., 2017) | Unpaired image-to-image translation |
| WGAN (Gulrajani et al., 2017) | Wasserstein GAN |
| PGGAN (Karras et al., 2018) | Progressive growing GAN |
| MUNIT (Huang et al., 2018) | Multi-modal unsupervised image-to-image translation |
| SAGAN (Zhang et al., 2019a) | Self-attention GAN |
| ClusterGAN (Mukherjee et al., 2019) | Clustering GAN |
| Rev-GAN (van der Ouderaa and Worrall, 2019) | Reversible GAN |
| StyleGAN (Karras et al., 2019) | Style-transfer GAN |

2.1. Original GAN

GAN was first proposed by Goodfellow et al. (2014) to overcome the intractable probabilistic computation difficulty that deep generative models, such as the deep Boltzmann machine (Salakhutdinov and Larochelle, 2010), usually suffer from. There are two components in a GAN: the generator and the discriminator, as shown in Fig. 1 A. Intuitively, we can think of the framework mechanism as a two-player game – player A and player B competing with each other to produce fake images and detect them. The game drives both parties to improve their techniques until the fake images are indistinguishable from the real images. Given a finite collection of data points x sampled from the natural distribution, which is unknown, we would like to learn or approximate the natural distribution from the observations. A generator is defined to be a mapping function that projects noise variables sampled from a prior distribution to the data space. The prior distribution can be uniform or Gaussian, and the generator is parametrized by differentiable neural networks. Given the output of the generator and the real observations, the discriminator, which is also parameterized by neural networks, outputs the probability that the input comes from a real sample distribution. In this two-player game, we simultaneously train the generator to minimize the probability that the discriminator treats the generated image as fake and train the discriminator to maximize the probability of identifying the generated image as fake. This technique is called adversarial training.
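The two competing objectives described above can be illustrated with a minimal NumPy sketch. It is not taken from any reviewed paper: the function names and the discriminator outputs are hypothetical, and the generator loss is the minimax form log(1 − D(G(z))) rather than the non-saturating variant often used in practice.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Loss minimized by the discriminator: -[log D(x) + log(1 - D(G(z)))]."""
    return -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))

def generator_loss(d_fake, eps=1e-12):
    """Minimax generator loss: log(1 - D(G(z))); lower when D is fooled."""
    return np.mean(np.log(1.0 - d_fake + eps))

# Hypothetical discriminator outputs (probability that the input is real).
d_real = np.array([0.90, 0.80, 0.95])
d_fake_poor = np.array([0.10, 0.20, 0.05])  # fakes are easily detected
d_fake_good = np.array([0.50, 0.50, 0.50])  # fakes are rated as real half the time

print("generator loss (poor fakes):", generator_loss(d_fake_poor))
print("generator loss (good fakes):", generator_loss(d_fake_good))
print("discriminator loss (poor fakes):", discriminator_loss(d_real, d_fake_poor))
print("discriminator loss (good fakes):", discriminator_loss(d_real, d_fake_good))
```

In this toy setting the generator loss decreases as its samples become harder to detect, while the discriminator loss increases, which is the tension that adversarial training exploits.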

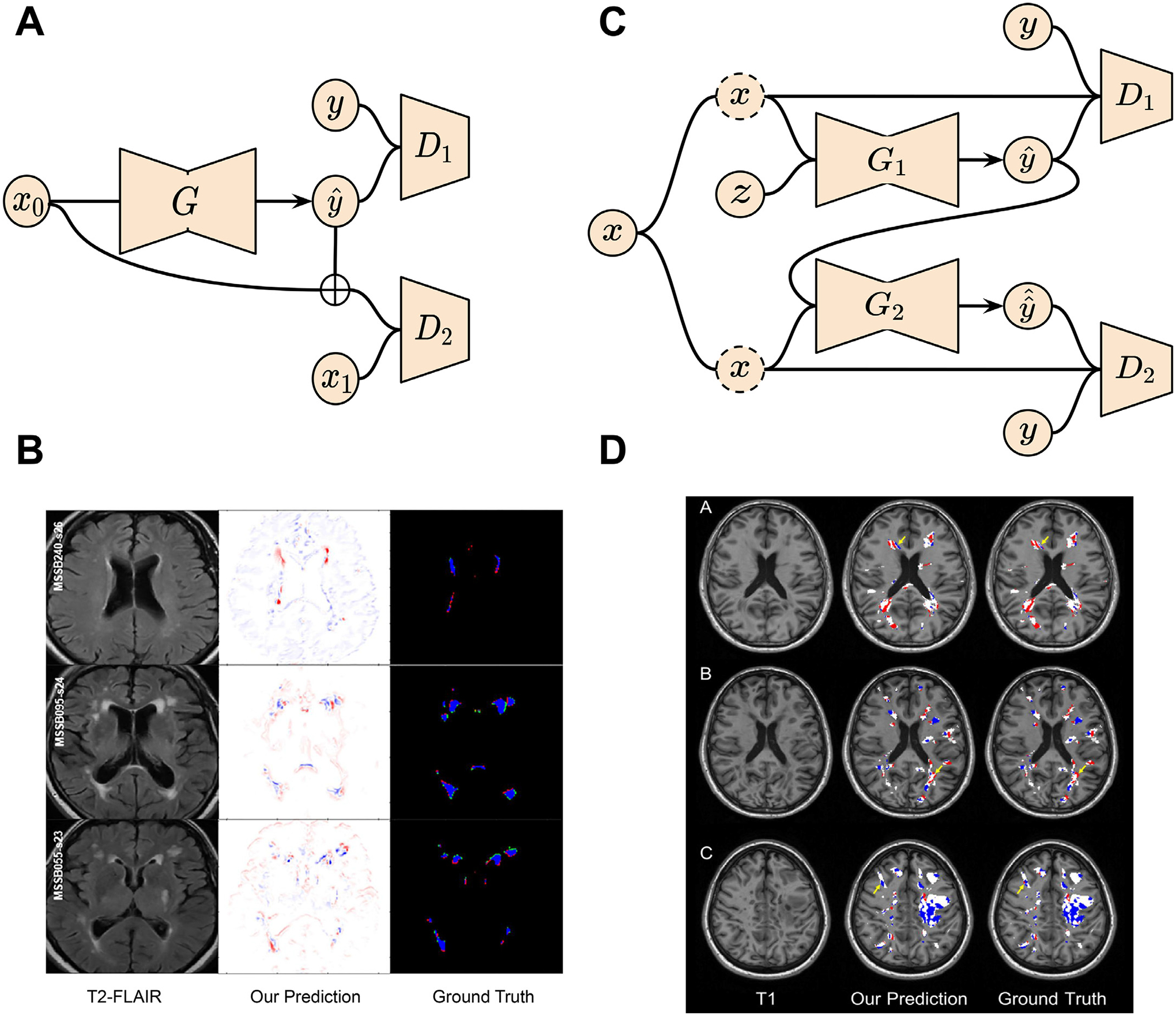

Fig. 1.

Schematics of the original GAN and its extensions. (A) Original GAN architecture, where z, x, and y denote random noise, generated image, and real image, and G and D represent the generator and discriminator, respectively. Wasserstein GAN, deep convolutional GAN, and self-attention GAN share the structure of the original GAN but use a different loss function, convolutional blocks, and a self-attention module, respectively. (B) Progressive growing GAN architecture. The resolution of the generated images x1, x2, ..., xn and real images y1, y2, ..., yn increases from left to right, and the number of layers within each generator G1, G2, ..., Gn and discriminator D1, D2, ..., Dn grows accordingly. (C) Conditional GAN architecture, where z, y, x, and x′ denote random noise, extra label/information, real data, and generated data, respectively. The concatenation of random noise z and label y is input to the generator, and the concatenation of label y and generated/real data is input to the discriminator. (D) Cycle-GAN structure, where x1 and y2 denote unpaired data from two different modalities, shown together with the data generated for the corresponding modality. G1 transforms data from modality X to Y, and G2 performs the inverse transformation. Data generated from x1 is reconstructed back to x1 through G2, and the same applies in the reverse direction. (E) Info-GAN structure, where z, c, x, and x′ denote random noise, the informative part of the latent variable, real data, and generated data. The concatenation of z and c is input to the generator. The informative latent code c is reconstructed through an encoder E from generated data x′ = G(z, c). (F) MUNIT-GAN structure, where x1 and y2 denote unpaired data from two different modalities, X and Y, shown together with the corresponding generated data and the content and style variables derived from each modality. Data x1 is first encoded into a content variable and a style variable. The content variable is then concatenated with a style variable from the Y modality to generate data through the generator G1. A concatenation of content and style variables is then used as input to reconstruct x1 through G2. The same process applies in the reverse direction. Images are taken and adapted from Goodfellow et al. (2014), Karras et al. (2018), Chen et al. (2016), Mirza and Osindero (2014), Zhu et al. (2017), Huang et al. (2018).

At convergence, the discriminator should theoretically output a probability of 50% for any input, and the generator produces samples that are indistinguishable from the real data. One advantage of GANs is that they can generate sharp, high-quality images, whereas another popular deep generative model, the variational autoencoder (VAE) (Kingma and Welling, 2013), tends to produce blurrier images. Thus, GANs are well-suited for many applications in neuroimaging research, such as generating heterogeneous pathological patterns by mapping a healthy control image to potential reproducible disease signatures for subtype discovery. GANs can also predict the evolution of brain lesions or tumors for personalized disease diagnosis and prognosis. We discuss several popular variants of GANs that have been adapted for different areas of neuroimaging research in the next subsection.
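The 50% figure follows from the optimal discriminator derived by Goodfellow et al. (2014). As a brief worked step, writing p_data for the real data density and p_g for the density induced by the generator (symbols introduced here for illustration), the discriminator that is optimal for a fixed generator G is

$$
D^{*}_{G}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)},
\qquad\text{so that}\qquad
p_{g} = p_{\text{data}} \;\Rightarrow\; D^{*}_{G}(x) = \tfrac{1}{2}\ \text{for all } x.
$$

In other words, once the generated distribution matches the real one, no discriminator can do better than chance.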

2.2. Variants of GAN

Based on the original GAN model, different variants were proposed in recent years for two main purposes: solving limitations of the original GAN and adapting it to different applications. Here, we introduce the main variants that have been applied in the neuroimaging studies that will be discussed in the following sections.

2.2.1. Challenge oriented variants

Though the original GAN model has shown promising performance in generating realistic high-dimensional data, it still suffers from problems such as unstable optimization during training, mode collapse (learning to generate images following distributions of only a subset of training images), and poor quality (visually chaotic or blurry images) of generated data. Many GAN variants were proposed for solving these issues and also proved to be helpful in generating high-quality neuroimaging data (Bowles et al., 2018; Han et al., 2019; Gao et al., 2022).

Wasserstein GAN (W-GAN).

W-GAN (Arjovsky et al., 2017) is one of the important variants proposed to address unstable optimization and mode collapse. Compared to the original GAN model, W-GAN shares a similar min-max training procedure but has a different loss function. With the new loss function, the training procedure aims to minimize the Wasserstein distance between distributions of generated data and real data, which is shown to be a better distance measure for image synthesis problems. The WGAN-GP (Gulrajani et al., 2017) (W-GAN with gradient penalty) model was introduced as one improvement on W-GAN for more stable training with the gradient penalty method.
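As a rough illustration of the W-GAN and WGAN-GP objectives, the sketch below uses a linear critic f(x) = w · x, for which the gradient with respect to the input is w everywhere, so the gradient penalty can be written in closed form. The toy data, the critic, and the function names are assumptions made for this example; practical models use neural critics and evaluate the penalty on interpolates between real and generated samples via automatic differentiation. The penalty weight of 10 follows the value used by Gulrajani et al. (2017).

```python
import numpy as np

def critic_loss(f_real, f_fake):
    """Loss minimized by the critic: E[f(fake)] - E[f(real)]."""
    return np.mean(f_fake) - np.mean(f_real)

def gradient_penalty_linear(w, lam=10.0):
    """Penalty lam * (||grad_x f|| - 1)^2 for a linear critic f(x) = w . x.

    The input gradient of a linear critic is w at every point, so no
    interpolation between real and fake samples is needed in this toy case.
    """
    return lam * (np.linalg.norm(w) - 1.0) ** 2

rng = np.random.default_rng(0)
real = rng.normal(loc=2.0, scale=1.0, size=(256, 4))  # toy "real" samples
fake = rng.normal(loc=0.0, scale=1.0, size=(256, 4))  # toy "generated" samples

w = np.ones(4) / 2.0  # critic weights with unit norm
total = critic_loss(real @ w, fake @ w) + gradient_penalty_linear(w)
print("critic loss with gradient penalty:", total)
```

The negated critic loss estimates how far apart the two distributions are under this critic; the generator is then trained to shrink that gap.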

Besides variants in loss function, some other works propose to modify model structures for improving the quality of generated images.

Deep convolutional GAN (DC-GAN) and progressive growing GAN (PG-GAN).

DC-GAN (Radford et al., 2016) is one of the earliest models that uses convolutional layers in both the generator and the discriminator for stable generation of higher-quality RGB images. PG-GAN (Karras et al., 2018) (Fig. 1B) further achieves large, high-resolution image generation by progressively increasing the number of layers during the training process. Systematic addition of layers in both the generator and the discriminator enables the model to learn effectively from coarse-level details to finer details.

Self-attention GAN (SA-GAN).

SA-GAN (Zhang et al., 2019) leverages a self-attention mechanism in convolutional GANs. The self-attention module, complementary to convolutions, helps with modeling long range, multi-level dependencies across image regions, and thus avoids using only spatially local properties for generating high-resolution images.
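The long-range dependency modeling described above can be sketched with a simplified self-attention operation over the spatial locations of a feature map. This is an illustrative NumPy version under stated assumptions: the projection matrices are random stand-ins for learned weights, and details of SA-GAN such as 1×1 convolutions, channel reduction, and the learned residual scale are omitted.

```python
import numpy as np

def softmax(a, axis=-1):
    """Numerically stable softmax along the given axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(features, w_q, w_k, w_v):
    """features: (N, C) array with one row per spatial location."""
    q, k, v = features @ w_q, features @ w_k, features @ w_v
    attn = softmax(q @ k.T, axis=-1)  # (N, N): every location attends to all others
    return attn @ v, attn

rng = np.random.default_rng(0)
height, width, channels = 4, 4, 8
feature_map = rng.normal(size=(height, width, channels))
flat = feature_map.reshape(height * width, channels)  # flatten spatial grid

# Random projections standing in for learned query/key/value weights.
w_q, w_k, w_v = (rng.normal(size=(channels, channels)) for _ in range(3))
out, attn = self_attention(flat, w_q, w_k, w_v)
print(out.shape, attn.shape)
```

Each output location is a weighted sum over all locations, so distant regions can influence one another in a single layer, unlike a convolution with a small kernel.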

2.2.2. Application oriented variants

Besides addressing broader methodological challenges above, many other variants of GANs were developed for specific applications. Several applications in computer vision are also of great interest to the neuroimaging community, including informative latent space and conditional image generation.

2.2.2.1. Informative latent space.

The latent vector in the GAN model is conventionally used as a random input for generating images, but does not have clear correspondence with the generated output data in an interpretable way. An informative latent space will help people interpret both the generative model and generated data, and make better use of them. In the field of neuroimaging, an informative latent space can be a low-dimensional representation for uncovering disease related imaging patterns (Bowles et al., 2018; Yang et al., 2021). Therefore, there are several variants proposed along this direction to make the latent vector correspond to features of generated images in an easily interpretable way:

Info-GAN and Cluster-GAN.

Info-GAN (Chen et al., 2016) (Fig. 1E) divides the latent variable into two parts, z and c, and enables c to explicitly explain features in the generated data G(z, c). The model introduces a parameterized approximation of the posterior distribution, Q(c|G(z, c)), which helps maximize the mutual information between the latent variables and the generated data, I(c, G(z, c)). Maximization of mutual information through Q(c|G(z, c)) can also be understood as a regularization on the inverse reconstruction of latent variables from generated data. Therefore, compared with the basic GAN, Info-GAN instead solves an information-regularized minimax game. Similar to Info-GAN, Cluster-GAN (Mukherjee et al., 2019) shares the idea of reconstructing latent variables from generated data but employs discrete latent variables, further enabling clustering through the latent space.
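For readers who prefer a formula, the information-regularized game of Chen et al. (2016) can be written as follows, where V(D, G) denotes the standard GAN value function, λ is a weighting hyperparameter, and H(c) is the entropy of the latent code (notation introduced here for illustration):

$$
\min_{G,\,Q}\;\max_{D}\; V(D, G) - \lambda\, L_I(G, Q),
\qquad
L_I(G, Q) = \mathbb{E}_{c \sim P(c),\; x \sim G(z, c)}\big[\log Q(c \mid x)\big] + H(c) \;\le\; I\big(c;\, G(z, c)\big).
$$

Because L_I is a lower bound on the mutual information, raising it through Q encourages the code c to remain recoverable from the generated data.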

2.2.2.2. Conditional image generation.

Conditional image generation, including image-to-image translation, generates images using some prior information instead of random input. For example, in the field of neuroimaging, several works focus on transformation of neuroimages among different modalities (Lin et al., 2021; Yan et al., 2018; Wei et al., 2020) and generation of neuroimages based on clinical information (Ravi et al., 2022). Most of these works are related to the following variants:

Conditional-GAN (C-GAN).

C-GAN (Mirza and Osindero, 2014) (Fig. 1 C) allows extra information, y, to be fed to both generator and discriminator, and thus is able to generate data x based on y information. y can be specific clinical information or the corresponding image in the source modality for image-to-image translation tasks.
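The conditioning mechanism can be sketched in a few lines of NumPy. Everything below is a hypothetical toy: the dimensions, the three-class label, and the single-layer stand-in for the generator are assumptions chosen only to show how y is concatenated to the inputs of both networks.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Encode integer class labels as one-hot row vectors."""
    return np.eye(num_classes)[labels]

rng = np.random.default_rng(0)
batch, noise_dim, num_classes, data_dim = 5, 16, 3, 32

z = rng.normal(size=(batch, noise_dim))              # random noise
y = one_hot(np.array([0, 1, 2, 1, 0]), num_classes)  # hypothetical labels

# Generator input: noise concatenated with the conditioning label.
generator_input = np.concatenate([z, y], axis=1)

# Single random layer standing in for a trained generator network.
g_weights = rng.normal(size=(noise_dim + num_classes, data_dim))
x_generated = np.tanh(generator_input @ g_weights)

# Discriminator input: data (generated or real) concatenated with the same label.
discriminator_input = np.concatenate([x_generated, y], axis=1)
print(generator_input.shape, discriminator_input.shape)
```

For image-to-image translation, y would be the source-modality image rather than a class label, and the concatenation is typically done along the channel dimension.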

Cycle-GAN.

Paired data y are not available in many cases, especially in the neuroimaging field. To address this problem, Cycle-GAN (Zhu et al., 2017) (Fig. 1D) enables unpaired image-to-image translation by imposing a cycle consistency loss for regularization in addition to the standard GAN loss. The model has two mapping functions, G and F, which transform data from the source to the target domain and from the target to the source domain, respectively, while encouraging the generated output to be reconstructed back to the input data, i.e., F(G(x)) ≈ x and G(F(y)) ≈ y.
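The cycle consistency term can be illustrated with a small NumPy sketch. The mappings here are toy linear transforms rather than neural networks, and the L1 form of the loss and all names are assumptions for illustration; the adversarial terms are omitted.

```python
import numpy as np

def cycle_consistency_loss(x, y, G, F):
    """L1 penalty on F(G(x)) - x and G(F(y)) - y."""
    forward = np.mean(np.abs(F(G(x)) - x))
    backward = np.mean(np.abs(G(F(y)) - y))
    return forward + backward

rng = np.random.default_rng(0)
# An orthogonal matrix, so its transpose is its exact inverse.
Q, _ = np.linalg.qr(rng.normal(size=(6, 6)))

G = lambda x: x @ Q            # toy mapping from domain X to domain Y
F_exact = lambda y: y @ Q.T    # exact inverse of G
F_poor = lambda y: y           # a mapping that ignores G entirely

x = rng.normal(size=(10, 6))   # toy samples from domain X
y = rng.normal(size=(10, 6))   # toy, unpaired samples from domain Y

print("loss with exact inverse:", cycle_consistency_loss(x, y, G, F_exact))
print("loss with poor inverse: ", cycle_consistency_loss(x, y, G, F_poor))
```

The loss vanishes only when the two mappings invert each other, which is what allows training without paired samples.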

Reversible-GAN (Rev-GAN).

Reversible-GAN (van der Ouderaa and Worrall, 2019) is an extension of Cycle-GAN. By utilizing invertible neural networks, the model possesses cycle-consistencies by design without explicitly constructing an inverse mapping function, thus achieving both output fidelity and memory efficiency.

Multimodal unsupervised image-to-image translation GAN (MUNIT-GAN).

Both Cycle-GAN and Rev-GAN assume one-to-one mapping in image translation, ignoring diversities in transformation directions. MUNIT-GAN (Huang et al., 2018) (Fig. 1 F) tackles this problem by first encoding the source data into one shared content space C, and one domain-specific style space S. The content code of the input is combined with different style codes in the target style space to generate target data with distinct styles.

2.3. Evaluation metrics

A set of metrics has been used for evaluating the quality of data generated by GAN-based models. Mean square error (MSE), peak signal-to-noise ratio (PSNR) (Wang et al., 2004), and structural similarity (SSIM) (Wang et al., 2004) were proposed for quantifying similarities or distances between paired data. Thus, they are typically used for comparing the generated data with the ground truth images. Specifically, MSE and PSNR measure the absolute pixel-wise distances between two images, while SSIM measures the structural similarity by considering dependencies among pixels. Two other metrics, Fréchet inception distance (FID) (Heusel et al., 2017) and maximum mean discrepancy (MMD) (Tolstikhin et al., 2016), are utilized for computing distances between two data distributions when there is no paired ground truth. In the application of GAN-based models, they are often applied to measure similarities between distributions of generated and real data.
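A minimal NumPy sketch of three of these metrics is given below. It assumes images scaled to [0, 1] and uses a biased estimate of the squared MMD with a Gaussian (RBF) kernel of hypothetical bandwidth sigma. SSIM and FID are omitted because they require windowed local statistics and a pretrained Inception network, respectively.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two arrays of the same shape."""
    return np.mean((a - b) ** 2)

def psnr(a, b, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible intensity span."""
    err = mse(a, b)
    return np.inf if err == 0 else 10.0 * np.log10(data_range ** 2 / err)

def mmd_rbf(x, y, sigma=1.0):
    """Biased estimate of squared MMD between sample sets x (n, d) and y (m, d)."""
    def kernel(a, b):
        sq = np.sum(a ** 2, axis=1)[:, None] + np.sum(b ** 2, axis=1)[None, :] - 2.0 * a @ b.T
        return np.exp(-sq / (2.0 * sigma ** 2))
    return kernel(x, x).mean() + kernel(y, y).mean() - 2.0 * kernel(x, y).mean()

rng = np.random.default_rng(0)

# Paired comparison: a toy "ground truth" image and a uniformly offset copy.
truth = np.zeros((8, 8))
generated = truth + 0.1
print("MSE:", mse(truth, generated), "PSNR (dB):", psnr(truth, generated))

# Unpaired comparison: samples from matching and from shifted distributions.
real = rng.normal(size=(200, 2))
fake_close = rng.normal(size=(200, 2))
fake_far = rng.normal(loc=3.0, size=(200, 2))
print("MMD close:", mmd_rbf(real, fake_close), "MMD far:", mmd_rbf(real, fake_far))
```

The paired metrics need a ground-truth image for each generated one, whereas MMD only needs two sets of samples, which is why it suits the unpaired setting.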

3. GANs in clinical diagnosis

Accurate disease diagnosis is necessary for early intervention that may potentially delay disease progression. This is especially true in the case of neurodegenerative diseases, which are often highly heterogeneous, comorbid, and progress rapidly with severe impacts on the physical and cognitive function of patients. In the last decade, there has been pivotal progress in imaging techniques such as structural MRI, Fluorodeoxyglucose-PET (FDG-PET) and resting state functional MRI (rs-fMRI), enabling more precise and accurate measurement of disease-related structural and functional brain change in vivo. Simultaneously, advanced DL methods have been developed to analyze large, high-dimensional datasets and perform tasks such as disease classification and anomaly detection. GANs in particular have been leveraged to improve performance in both of these tasks.

This section is organized as follows. First, we discuss the use of GANs in disease classification frameworks with a primary focus on Alzheimer’s disease. This is first discussed in the context of single modality imaging and then multimodal imaging. Next, we discuss the use of GANs in anomaly detection (see Table 2).

Table 2.

Overview of publications utilizing GANs to assist disease diagnosis. Publications are clustered by categories and ordered by year in ascending order.

| Publication | Method | Dataset | Modality | Highlights |
|---|---|---|---|---|
| Disease Classification | | | | |
| (Yan et al., 2018) | Conditional GAN | ADNI | MRI, PET | Amyloid PET generation and MCI prediction |
| (Liu et al., 2020) | GAN | ADNI | MRI, PET | MCI conversion prediction |
| (Mirakhorli et al., 2020) | GNN & GAN | ADNI | rs-fMRI | AD-related patterns extraction |
| (Pan et al., 2021b) | Feature-consistent GAN | ADNI | MRI, PET | Joint synthesis and diagnosis |
| (Lin et al., 2021) | Reversible GAN | ADNI | MRI, PET | Bidirectional mapping between modalities |
| (Gao et al., 2021) | Pyramid and Attention GAN | ADNI | MRI, PET | Missing modality imputation |
| (Yu et al., 2021) | Higher-order pooling GAN | ADNI | MRI | Semi-supervised learning |
| (Pan et al., 2021a) | Decoupling GAN | ADNI | DTI, rs-fMRI | Abnormal neural circuits detection |
| (Zuo et al., 2021) | Hypergraph perceptual network | ADNI | MRI, DTI, rs-fMRI | Abnormal brain connections analysis |
| Tumor Detection | | | | |
| (Han et al., 2019) | PGGAN & MUNIT | BRATS | MRI | Tumor image augmentation |
| (Huang et al., 2019) | Context-aware GAN | BRATS | T1, T2, FLAIR | Glioma severity grading |
| (Park et al., 2021) | StyleGAN | Private | T1, T2, FLAIR | IDH-mutant glioblastomas generation |
| Anomaly Detection | | | | |
| (Wei et al., 2019) | Sketcher-Refiner GAN | Private | PET, DTI | Myelin content in Multiple Sclerosis |

3.1. Disease classification

Classification assigns observations to distinct classes based on their associated input features. In the context of disease diagnosis, the classes could refer to disease stages or disease subtypes, for example. Previous work in disease classification relied on traditional ML techniques, such as SVM and logistic regression (Varol et al., 2017; Dong et al., 2016). GANs can glean and analyze patterns in high-dimensional, multi-modal imaging datasets, detecting signs of neurodegenerative processes and underlying pathology at preclinical stages. They can also synthesize whole images across modalities, which can be used to assist classification downstream.

While GANs can be applied to many different disease datasets, most of the papers we discuss below focus on Alzheimer’s disease, which is an irreversible neurodegenerative disease that debilitates cognitive abilities. It is the leading cause of dementia and currently impacts five million people in the United States (Arvanitakis et al., 2019). GANs can derive powerful imaging markers for individualized diagnosis, classification into conversion groups, or even prediction of onset at preclinical and cognitively unimpaired stages.

3.1.1. Disease classification with single-modality imaging

3.1.1.1. Structural MRI.

Structural imaging, including T1- and T2-weighted MRI, helps visualize brain anatomy such as the shape, position, and size of tissues within the brain. Features that are commonly derived from structural MRI include regional brain volumes from T1-weighted scans and tissue hyperintensities from T2-weighted scans. Other brain characteristics such as tissue composition fractions and intracranial volume can also be extracted from these imaging modalities. Regional and whole-brain volumetric atrophy measures derived from structural MRI are now recognized as valid biomarkers of neurodegeneration and have been used for clinical assessment and diagnosis (Frisoni et al., 2010b). Besides their diagnostic utility, features extracted from structural imaging have been used as imaging endpoints to quantify outcomes in clinical trials of disease-modifying therapies (Frisoni et al., 2010b).

Machine learning methods such as random forests have been proposed for automated Alzheimer’s disease classification but these methods require careful feature extraction and selection (Ramírez et al., 2010). Extracting features from structural MRI involves complex preprocessing steps, and the following feature selection phase requires advanced clinical knowledge. Supervised deep learning methods, such as convolutional neural networks (CNN), have also been proposed for Alzheimer’s disease classification (Oh et al., 2019; Sarraf and Tofighi, 2016). While CNNs can implicitly extract hierarchical features from images, they require large amounts of labeled training data, which are not always available (Oh et al., 2019; Sarraf and Tofighi, 2016).

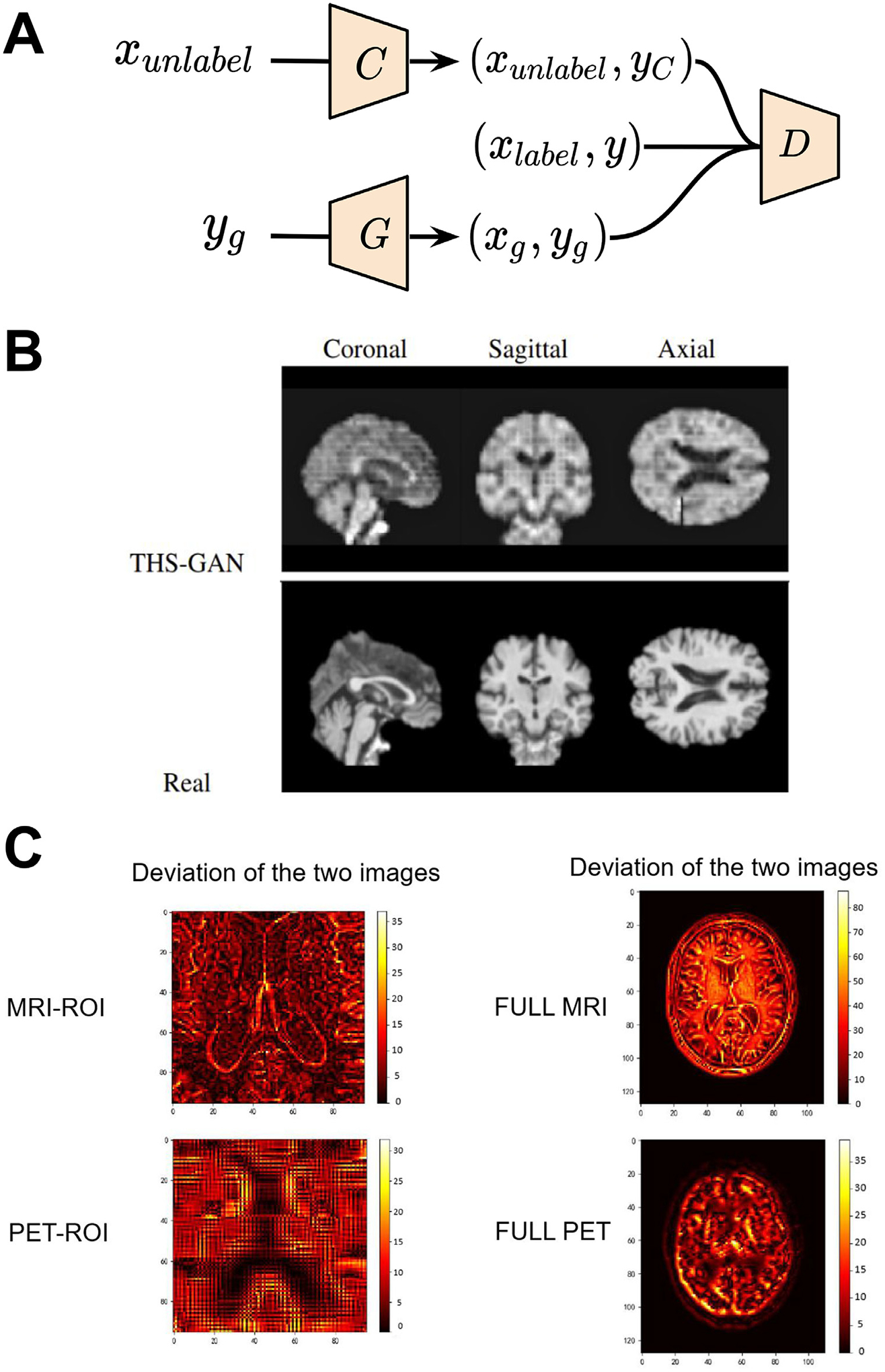

Therefore, Yu et al. (2021) propose a 3D semi-supervised learning based GAN (THS-GAN) that utilizes both labeled and unlabeled T1-weighted MRI for classifying mild cognitive impairment and Alzheimer’s disease. THS-GAN is a modification of C-GAN with a generator, a discriminator, and a classifier. The schematic of the network architecture is shown in Fig. 2A. The generator uses 3D transposed convolutions to generate 3D T1-weighted MRI, while the discriminator and classifier use 3D DenseNet (Gu, 2017) to extract features from high-dimensional MR volumes. Specifically, the generator, which is conditioned on disease class, produces a fake image-label pair, whereas the classifier takes an unlabeled image and predicts its disease category, thereby producing image-label pairs for unlabeled images. The discriminator’s job is to identify whether an image-label pair comes from the real data distribution. When the three networks are trained together, the generator tends to generate more realistic images for a given disease class, the classifier tries to improve its predictive accuracy, and the discriminator maximizes the probability of assigning fake labels to the image-label pairs produced by the classifier and generator. During evaluation, the model is able to generate plausible images (as shown in Fig. 2B). The method achieves 95.5% accuracy in Alzheimer’s disease vs. healthy control classification and 89.29% accuracy in mild cognitive impairment vs. healthy control classification. We will discuss other GAN-based techniques that use structural imaging for disease classification in the multi-modal imaging section.

Fig. 2.

GAN applications in disease classification with single- and multi-modal imaging. (A) Schematic of THS-GAN for Alzheimer’s disease and mild cognitive impairment classifications. (B) Comparison of synthesized brain MR images from THS-GAN and real T1-weighted scans with coronal, sagittal, and axial views for different training epochs. (C) Deviation between real image and synthetic images generated by Rev-GAN. In the deviation image, the yellow color represents large differences, and the dark colors denote small deviations. Images are taken and adapted from Lin et al. (2021), Yu et al. (2021).

3.1.1.2. Resting-state fMRI.

Rs-fMRI measures the time series of the blood-oxygenation-level-dependent (BOLD) fluctuations across brain anatomical regions. It relies on the underlying assumption that brain regions that co-activate, i.e. reliably demonstrate synchronous, low-frequency fluctuations in BOLD, are more likely to be involved in similar neural processes than regions that do not co-activate. Computing the Pearson correlations between the time series recorded in different brain regions provides estimates of functional connectivity, which is used to extract resting-state functional networks (rsFNs). These networks show patterns of synchronous activity across a set of distributed brain areas and provide potential biomarkers for a variety of illnesses (Damoiseaux et al., 2006; Horovitz et al., 2008; Smith et al., 2009). Specifically, changes in the representation of rsFNs have been observed in groups suffering from brain disorders such as epilepsy, schizophrenia, attention deficit hyperactivity disorder and major depressive disorder, and diseases such as Alzheimer’s and Parkinson’s (Rajpoot et al., 2015; Koch et al., 2015; Wang et al., 2013; Wee et al., 2012; Díez-Cirarda et al., 2018; Dansereau et al., 2017). Prior works in fMRI-based disease classification rely on common machine learning techniques such as support vector machine (SVM) and nearest neighbors (Saccà et al., 2018). These techniques require careful feature engineering and feature selection to achieve optimal performance.
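The functional connectivity computation described above can be sketched with NumPy. The data are synthetic: the number of time points and regions, and the shared signal injected into two regions to mimic co-activation, are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_timepoints, n_regions = 200, 5

# Synthetic BOLD time series: one column per brain region.
bold = rng.normal(size=(n_timepoints, n_regions))
shared = rng.normal(size=n_timepoints)
bold[:, 0] += 3.0 * shared  # regions 0 and 1 share a common fluctuation,
bold[:, 1] += 3.0 * shared  # mimicking two co-activating regions

# Pearson correlation between every pair of regional time series.
fc = np.corrcoef(bold, rowvar=False)  # shape (n_regions, n_regions)

# The matrix is symmetric, so the upper triangle holds all unique connections.
upper = np.triu_indices(n_regions, k=1)
fc_features = fc[upper]
print(np.round(fc, 2))
print("number of unique connections:", fc_features.size)
```

The vector of unique connections is the kind of feature representation that classical classifiers and the GAN-based models discussed in this section take as input.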

Recently, deep learning techniques such as convolutional neural networks have been used for disease classification based on automatic feature extraction (Wen et al., 2018). However, due to insufficient data, especially from rs-fMRI, these methods show poor generalizability. To overcome the limitations of both traditional ML and DL, researchers propose to use GANs to improve classification performance. Zhao et al. (2020) propose adapting a 2D GAN model for disease classification using rs-fMRI. They use a GAN architecture to distinguish individuals with mental disorders from healthy controls (HC) based on functional connectivity (FC). FC assesses temporal relationships of a subject’s brain functional networks by computing the pairwise correlation between the spatially segregated networks (Du et al., 2015). Hence, FC reflects connectivity/synchronized activity of the brain networks and can potentially be used to identify fMRI-based biomarkers for disease classification. The generator takes a noise vector as input and learns to generate fake FC networks. The discriminator is used both to distinguish mental disorders from HC and to discriminate between real and fake FC networks. Finally, the model is trained by optimizing an objective function that combines an adversarial loss and a classification loss. The model performance is evaluated on two tasks, namely major depressive disorder and schizophrenia classification, and is compared against six classification techniques: k-nearest neighbors, AdaBoost, naive Bayes, Gaussian processes, SVM, and a deep neural network. The GAN model outperforms all other methods in both tasks, suggesting its utility as a potentially powerful tool to aid discriminative diagnosis.

Since brain connectomes are naturally represented as graphs, a natural extension is to boost the classification model by combining graph structures with GANs. Mirakhorli et al. (2020) use FC to identify abnormal changes in the brain due to Alzheimer’s disease. The technique leverages FC to represent the human brain as a graph and then uses a graph neural network to learn structures that differentiate Alzheimer’s disease subjects from healthy individuals. Here, a VAE implemented with graph convolutional operators serves as the generator, and a discriminator is used to improve the recovery of the graphs. At inference, the encoder part of the VAE converts graph data into a low-dimensional space, and abnormal signals (salience alteration of the brain connection) can then be detected by comparing the differences of the graph properties (first- and second-order proximities) within these latent space features. The model achieves an average five-fold cross-validation accuracy of 85.2% for the three-way classification. The model also finds that abnormal connections of the frontal gyrus and precentral gyrus with other regions are associated with a high risk of Alzheimer’s disease in the early stages and can be regarded as effective biomarkers. Additionally, the olfactory cortex, supplementary motor area, and rolandic operculum contribute strongly to the classification of mild cognitive impairment patients. By recovering the missing connections with a generative approach and distinguishing the abnormal partial correlations from the healthy ones, the model provides biologically meaningful findings together with accurate disease classification.

The studies mentioned above focus solely on functional connectivity. Even though performing classification using single-modality data from structural or functional MRI provides reasonable diagnostic accuracy, it can be boosted by using multi-modality data since additional modalities provide complementary information.

3.1.1.3. Disease classification with multi-modal imaging.

Multiple imaging modalities, such as MRI, PET, diffusion tensor imaging (DTI), and rs-fMRI, help capture diverse pathology patterns that may highlight different disease-relevant regions in the brain. This enhances the ability of disease classification models to distinguish diseases that are often comorbid, such as Alzheimer’s and Parkinson’s. However, the use of multi-modal imaging features is particularly challenging because of data sharing limitations, patient dropout, and the relatively small number of datasets with all modalities. Previous studies address this issue by simply discarding modality-incomplete samples (Zhang and Shen, 2012; Calhoun and Sui, 2016; Frisoni et al., 2010a; Jie et al., 2016). This approach tends to reduce classification accuracy and degrade the model’s generalizability because of the reduced sample size. Instead, GANs can better handle missing data in multi-modal datasets by generating the missing images and preserving sample size, thereby boosting downstream classification performance.

Previous work shows direct and indirect relationships between functional and structural pathways within the human brain (Honey et al., 2009; Fukushima et al., 2018). These studies have pivoted clinical research towards multi-modal integration to reliably infer brain connectivity. They have also provided key insights into brain dysfunction in neurological disorders such as autism (Cociu et al., 2018), schizophrenia (Li et al., 2020a), and attention deficit hyperactivity disorder (Qureshi et al., 2017).

Pan et al. (2021a) propose to use multi-modal imaging to detect crucial discriminative neural circuits between Alzheimer’s disease patients and healthy subjects. The model extracts complementary topology information from rs-fMRI and DTI using a decoupling deep learning model (DecGAN). DecGAN consists of a generator, a discriminator, a decoupling module, and a classification module. The generator and discriminator modules capture the complex distribution of functional brain networks without explicitly modeling the probability density function. The decoupling module is trained to detect the sparse graphs that store relationships between region-of-interest connectivity, such that the classification module can accurately separate Alzheimer’s disease patients from healthy subjects when taking these sparse graphs as inputs. The method shows accurate classification performance when discriminating HC vs. early mild cognitive impairment (86.2% accuracy), HC vs. late mild cognitive impairment (85.7% accuracy), and HC vs. Alzheimer’s disease (85.2% accuracy). The model also finds that the limbic lobe and occipital lobe are highly correlated with Alzheimer’s disease pathology (Migliaccio et al., 2015; Takahashi et al., 2017). One major limitation of this work is that the authors assume the coupling between two regions is static, which conflicts with several recent studies showing that functional connectivity is dynamic (Hutchison et al., 2013; Honey et al., 2009; Barttfeld et al., 2015). Moreover, the sample size of the study is small (236 subjects), which hinders the generalizability and reproducibility of the regions of interest detected by the model. Future studies could incorporate dynamic connectivity and detect dynamic changes predictive of Alzheimer’s disease or other neurodegenerative diseases and disorders.

Lin et al. (2021) use a 3D Rev-GAN (van der Ouderaa and Worrall, 2019) for missing data imputation and then evaluate the effect of including GAN-generated images in Alzheimer’s disease vs. cognitively unimpaired (CU) classification as well as in stable mild cognitive impairment vs. progressive mild cognitive impairment classification. The method is evaluated on CU subjects, subjects with stable or progressive mild cognitive impairment, and subjects with Alzheimer’s disease. Hippocampus images are used in addition to the full brain images in the experiments. Rev-GAN constructs synthetic PET images of high quality that deviate only slightly from real PET scans. Compared to other image synthesis methods that perform more processing steps to achieve higher alignment between the different modalities (Pan et al., 2018, 2019, 2020; Hu et al., 2019), this approach yields comparable PSNR and higher SSIM in PET image synthesis. For MR hippocampus image synthesis, the Rev-GAN achieves the highest SSIM and PSNR. The model performance drops for full image reconstruction because of the difficulty of mapping structural information, such as the skull in the MR image, from the functional image. Overall, the use of only one generator to perform bidirectional image synthesis, in combination with the stability of the reversible architecture, enables the training of deeper networks with low memory cost. Therefore, the non-linear fitting ability of the model is enhanced, resulting in the construction of high-quality images (see Fig. 2C).
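For readers unfamiliar with the two image-quality metrics mentioned above, the following is a simplified sketch. PSNR follows its standard definition. The SSIM variant is computed once over the whole volume rather than over local sliding windows as in the standard metric, which is a deliberate simplification, and the random volumes are synthetic placeholders rather than imaging data.

```python
import numpy as np

def psnr(ref, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    mse = np.mean((ref - test) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

def global_ssim(ref, test, data_range=1.0):
    """Single-window SSIM over the whole volume (simplified assumption;
    the standard metric averages SSIM over local windows)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = ref.mean(), test.mean()
    var_x, var_y = ref.var(), test.var()
    cov = np.mean((ref - mu_x) * (test - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)

rng = np.random.default_rng(0)
real = rng.random((8, 8, 8))                       # placeholder "real" volume
synthetic = real + 0.2 * rng.standard_normal(real.shape)

psnr_value = psnr(real, synthetic)
ssim_value = global_ssim(real, synthetic)
```

Both metrics compare a synthesized volume against its paired real acquisition, which is why they require subjects for whom both modalities are available.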

After imputing the missing data with GANs, Alzheimer’s disease diagnosis and mild cognitive impairment to Alzheimer’s disease conversion prediction are implemented using a multi-modal 3D CNN. The model trained using real hippocampus images for one modality and fully synthetic data from the other modality yields similar, sometimes superior, performance compared to the model using real data for both modalities, and always higher performance than the model using missing data. In terms of full images, although the quality of the generated MR full images is not as good as that of the generated hippocampus images, the classification accuracy using synthetic MR full images exceeds 90% for the Alzheimer’s disease diagnosis and 73% for the mild cognitive impairment to Alzheimer’s disease conversion prediction. Overall, the prominent improvement of the classification results with the use of GAN-synthetic data reveals the ability of the image synthesis model to construct images of high quality which also contain useful information about the disease, thus significantly contributing to Alzheimer’s diagnosis and mild cognitive impairment conversion prediction.

In this study, the authors use missing data synthesis to improve the Alzheimer’s disease diagnosis and the prediction of mild cognitive impairment to Alzheimer’s disease conversion. However, less attention has been devoted to the FDG metabolic changes and the biological significance of the imputed data compared to real data. Additionally, a pre-requisite for successful MRI-to-PET mapping is that the disease affects the tissue structure and metabolic function at the same time. Further exploration of the MRI-PET relationship in diseases such as cancer where structural and functional changes do not occur simultaneously is needed.

To date, relatively little attention has been devoted to the generation of amyloid PET images. Amyloid PET measures the amount of amyloid beta protein aggregation in the brain (Nordberg, 2004), which is one of the key hallmarks of Alzheimer’s disease. However, the availability of PET scans is extremely limited (compared to MR scans) because of radiation exposure and high cost. Yan et al. (2018) use a 3D conditional GAN (C-GAN) to construct 18F-florbetapir PET images from MR images and then compare the performance of their method with traditional data augmentation techniques, such as image rotation and flipping, in a mild cognitive impairment classification task. The C-GAN generator is a U-Net (Ronneberger et al., 2015) based CNN with skip connections, and the discriminator is a PatchGAN discriminator (Zhu et al., 2017). The generator is trained not only to fool the discriminator but also to construct images as close to the real ones as possible. Paired PET-MRI images are used to train the C-GAN. Then, the trained model is applied to generate PET images from MR images, and the real PET images are used to evaluate the C-GAN with the SSIM metric. The SSIM reaches 0.95, indicating that the model generates images highly similar to the real images. To classify stable mild cognitive impairment vs. progressive mild cognitive impairment, a residual network (ResNet) (He et al., 2016) is built. The ResNet is trained using PET images from three scenarios: real PET images only, real PET images combined with PET images generated using traditional augmentation techniques, and real PET images combined with C-GAN-generated PET images. The classification performance increases with the aid of synthetic data.
Between the two augmentation approaches, including C-GAN-generated PET images in training yields higher performance than using images generated with traditional augmentation techniques, which suggests the superiority of GANs over traditional image augmentation for image synthesis. Medical images differ from natural images in that they have a specific centering, alignment, and asymmetric geometry, as well as characteristic contrast and brightness. Thus, computer vision augmentation techniques, such as adjusting brightness or contrast or adding noise, might alter the semantic content of the image; for example, the distinction between gray and white matter tissues can be impeded when changing the contrast of the image.

Hu et al. (2022) extend the MR-to-PET synthesis framework by developing a 3D end-to-end network, called bidirectional mapping GAN (BMGAN). This model adopts 3D Dense U-Net, a variant of U-Net (Çiçek et al., 2016) that leverages the dense connections of DenseNet (Gu, 2017), as the generator to synthesize brain PET images from MR images. The densely connected paths between layers in DenseNet tackle the vanishing gradient problem, foster feature propagation and information flow, and reduce the number of network parameters. One advantage of BMGAN is that it sets up an invertible connection between the brain PET images and the latent vectors. The model not only learns a forward mapping from the latent vector to the PET images, as traditional GANs do, but also learns a backward mapping that returns the PET images to the latent space by training an encoder simultaneously. This mechanism enables the synthesis of perceptually realistic PET images while retaining the distinct features of brain structures across individuals. Besides the high quality of the generated images, their effectiveness in disease diagnosis has been demonstrated by performing Alzheimer’s disease vs. normal classification. The classification performance (AUC) using the BMGAN-synthesized PET images is better than that obtained with images generated by state-of-the-art medical imaging cross-modality synthesis models such as CGAN and PGAN (Dar et al., 2019).

3.1.1.4. Joint image synthesis and classification paradigm.

Many imputation methods for multi-modality neuroimaging datasets treat image synthesis and disease diagnosis as two separate tasks (Lin et al., 2021; Yan et al., 2018; Pan et al., 2021a). This ignores the fact that different modalities may identify different brain regions relevant to the disease being studied. Performing image synthesis and classification in a joint framework enables deep learning networks to leverage correlations across input modalities.

Gao et al. (2022) propose a 3D task-induced pyramid and attention GAN (TPA-GAN) to generate missing PET data given the paired MRI. The pyramid convolution layers capture multilevel features of MRI, while the attention module eliminates redundant information and accelerates convergence of the network. The task-induced discriminator helps generate images that retain information specific to disease classification. Then, a pathwise-dense CNN (PT-DCN) gradually learns and combines the multimodal features from both real and imputed images towards the final disease classification. The pathwise transfer blocks consist of a concatenation layer, a convolution layer, batch normalization and ReLU activation layers, and a larger convolution layer. These blocks are used to communicate information across the two paths of PET and MRI, making full use of complementary information in these two modalities. Under SSIM, PSNR and MDD metrics, the TPA-GAN outperforms several baseline methods, including a CycleGAN variant developed by Pan et al. (2018) for generating PET images from MRI. In the experiments, the authors use ADNI-1 for training and ADNI-2 for testing, which could be an issue if the imaging data are not harmonized appropriately across scanner changes, acquisition protocols, and subject demographics. In the future, cross-study transfer learning or domain adaptation techniques can be investigated to alleviate the problem. The following work leverages this idea to improve the power of a sample-size limited clinical study.

Pan et al. (2021b) extend the joint synthesis-classification method developed by Gao et al. (2022) by maximizing image similarity within modalities. They propose a disease-image-specific deep learning (DSDL) framework for joint neuroimage synthesis and disease diagnosis using incomplete multi-modality neuroimages. First, disease characteristics specific to a given image modality are implicitly modeled and output by a disease-image-specific network (DSNet), which takes whole-brain images as input. A feature-consistency GAN (FGAN) then imputes the missing images. The FGAN encourages feature maps between pairs of synthetic and real images to be consistent while preserving the disease-image-specific information, using the outputs generated by DSNet. Therefore, the FGAN is coupled with DSNet and synthesizes the missing modalities in a diagnosis-oriented manner, resulting in better performance. Specifically, the DSNet achieves a diagnostic performance of 94.39% with MRI only and 94.92% with MRI and PET when using AUROC as the metric.

The joint neuroimage synthesis and representation learning (JSRL) framework proposed by Liu et al. (2020) offers a few advantages compared to the previous works. The model integrates image synthesis and representation learning into a unified framework where the synthesized multimodal representations are used as inputs for representation learning. The framework leverages transfer learning for prediction of conversion in subjects with subjective cognitive decline, which is the self-reported experience of worsening confusion or memory loss. JSRL consists of two major components: a GAN for synthesizing missing neuroimaging data, and a classification network for learning neuroimage representations and predicting the progression of subjective cognitive decline. These two subnetworks share the same feature encoding module, encouraging the generated data to be prediction-oriented. The underlying association among multimodal images can be effectively modeled for accurate prediction with an AUROC of 71.3%. In summary, this method focuses on improving the classification of subjective cognitive decline subjects using incomplete multimodal neuroimaging data. Since subjective cognitive decline is one of the earliest noticeable symptoms of Alzheimer’s disease and related dementias, the classification is clinically useful to begin targeted interventions earlier in these subjects. This work is among the first multimodal neuroimaging-based studies for subjective cognitive decline conversion prediction, which avoids the need to individually extract MRI and real or synthetic PET features as in previous works. JSRL leverages transfer learning by harnessing a large scale ADNI database to model a smaller scale database on subjective cognitive decline, which significantly increases the power of this study.

While many studies apply GANs for image synthesis and classification in neurodegenerative diseases, GANs have broader application in neurology, including detection of brain tumors and imaging anomalies. These applications are discussed in the next section.

3.2. Tumor and anomaly detection

3.2.1. Supervised tumor detection

Brain tumors, abnormal proliferations of cells in the brain, comprise a large portion of deaths related to cancer worldwide (Lapointe et al., 2018). Tumor detection and classification is an active research area in the medical imaging community; however, available imaging data for this research purpose remains relatively limited (Bakas et al., 2018). To tackle this problem, many of the following recent works leverage the generative abilities of GANs for dataset enrichment and augmentation.

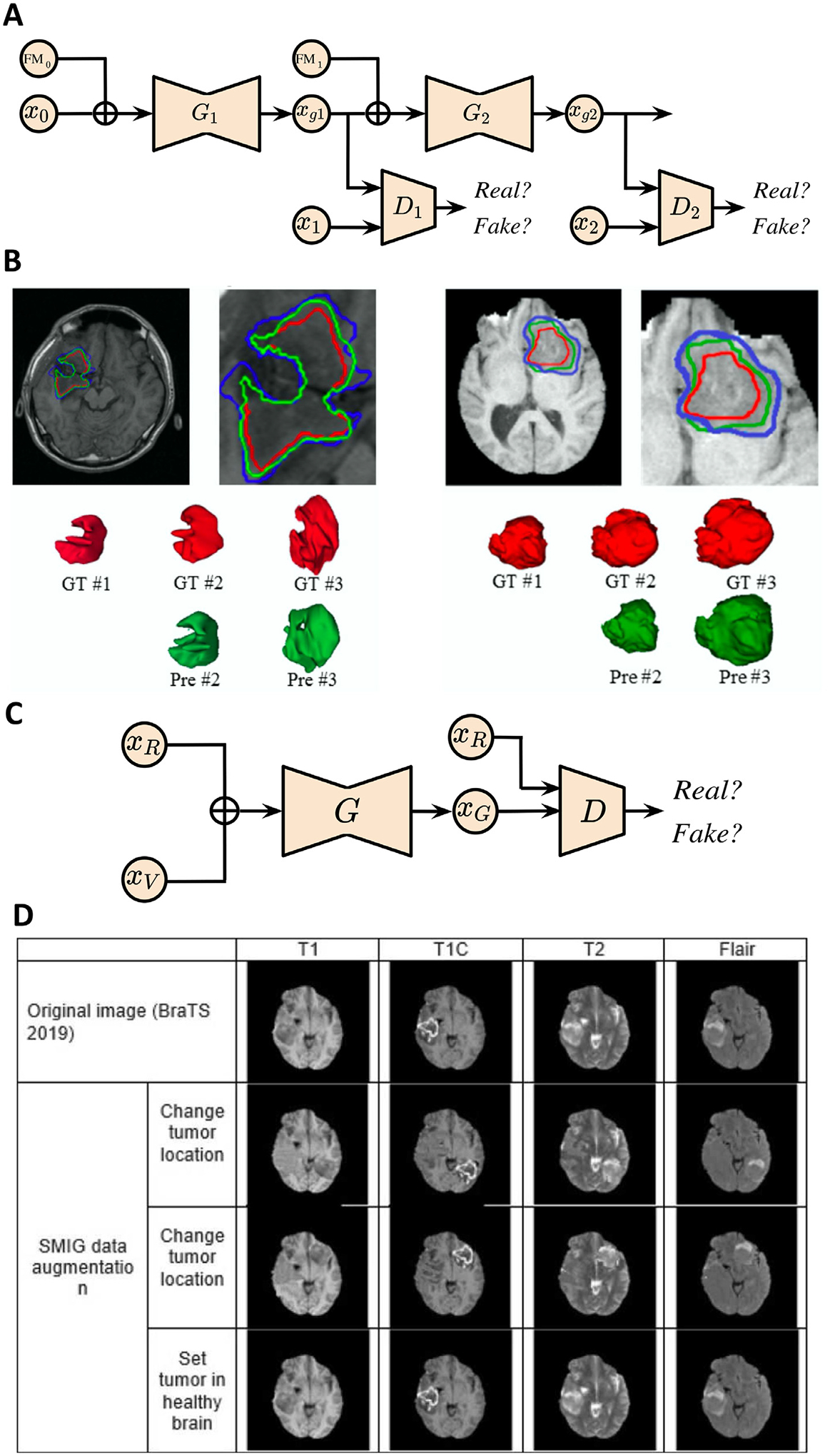

Han et al. (2019) demonstrate the use of GANs in improving the performance of a brain tumor detection network. They propose a two-step data augmentation method that enriches the training dataset by generating additional samples of normal and pathologic images. They first use a 2D noise-to-image GAN to produce the anatomical content and rough attributes of a scan, and subsequently an unpaired image-to-image translation network to refine these images.

Park et al. (2021) utilize StyleGAN to create synthetic images while preserving the morphologic variations to improve the diagnostic accuracy of isocitrate dehydrogenase (IDH) mutant gliomas. The 2D GAN model is trained on normal brains and IDH-mutant high-grade astrocytomas to generate the corresponding contrast-enhanced (CE) T1-weighted and fluid-attenuated inversion recovery (FLAIR) images. The authors further develop a diagnostic model from the morphologic characteristics of both real and synthetic data to validate that the synthetic data generated by the GAN can improve molecular prediction for IDH status of glioblastomas.

While these approaches show initial promise, GAN-based dataset enrichment has not been thoroughly studied for brain tumors. For example, GANs might not be able to capture the sample distribution of highly heterogeneous tumor data when only limited data is available. Given this challenge, GAN-based unsupervised anomaly detection offers distinct advantages for tumor detection.

3.2.2. Anomaly detection

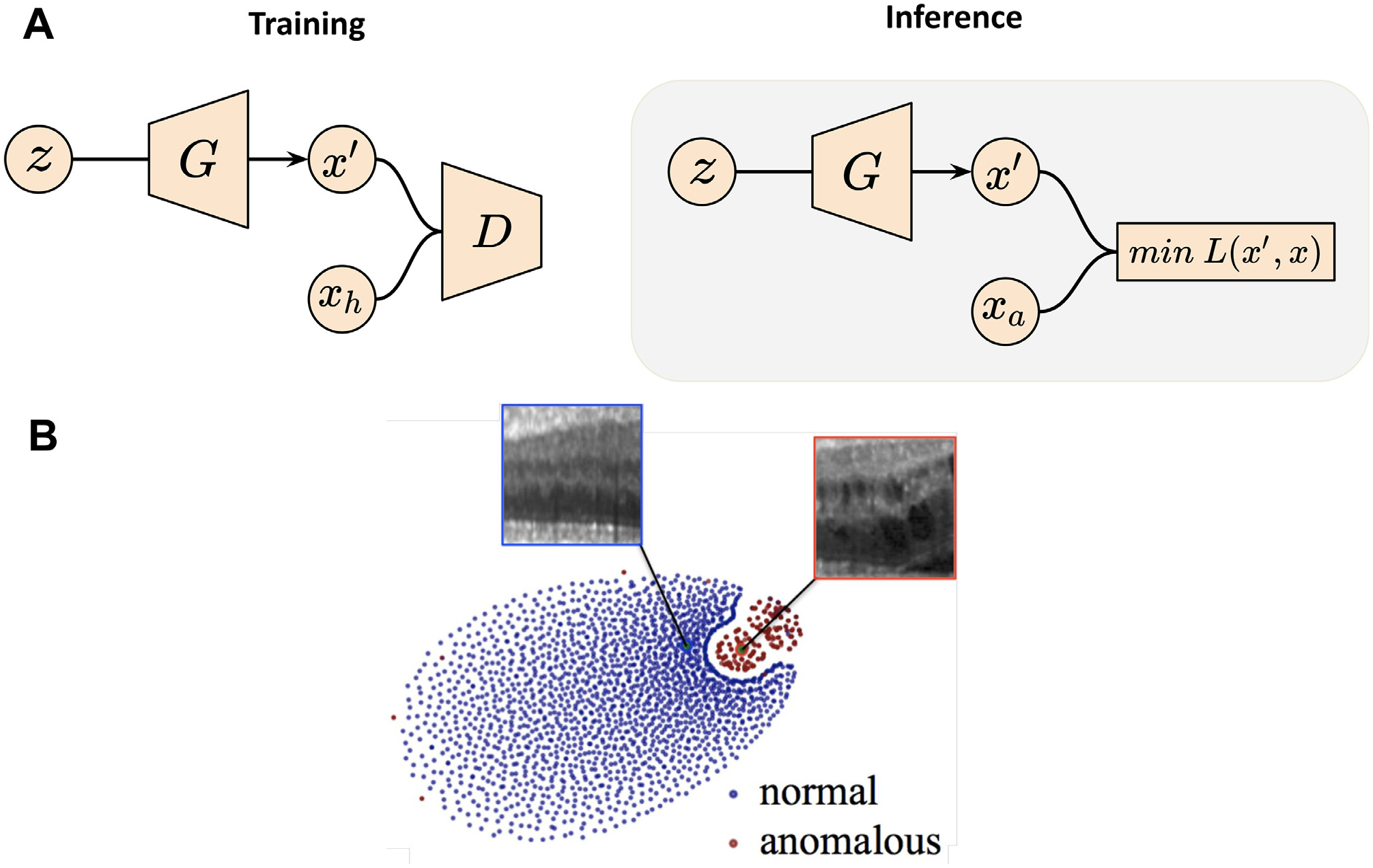

Anomaly detection, the identification of scans that deviate from the normative distribution, offers a path toward identifying pathology even when the anomalous group is not explicitly defined. Anomaly detection has most often been used for the detection and segmentation of tumors and lesions (Nguyen et al., 2023; Bengs et al., 2021). Prior approaches for anomaly detection are not suited for use at the image level and often use regional summary measures from segmented brain regions to identify abnormalities from scans. For example, one-class SVM is used to define the normative group allowing outliers to be identified (El Azami et al., 2013; Retico et al., 2016). These methods have shown promise in specific cases but are sensitive to the selection of summary measures used to represent the scan. In general, anomaly detection models are trained using both healthy and anomalous brain scans or healthy scans only. Focusing more on the latter case, GAN-based anomaly detection in neuroimaging stems from the ability of GANs to model the normative distribution of brains accurately. When substantial deviations from the expected distribution occur, the model can infer the presence of abnormalities, which has been leveraged in neuroimaging for lesion and tumor detection. In 2017, AnoGAN (Schlegl et al., 2017) gained popularity as an anomaly detection method using only normative samples to define its detection criteria. AnoGAN uses a generator to learn the mapping from a low-dimensional latent space to normal 2D images, defining normal/healthy regions in the latent space. When a new image is encountered, the latent representation whose reconstruction matches the new image most closely would be selected via backpropagation-guided sampling. 
Since the generator is trained only on normal samples, the learned latent space cannot adequately represent the variation of anomalous scans, and the images reconstructed from anomalous inputs therefore often differ from the originals in the anomalous regions (see Fig. 3). If a deviation between the reconstructed and original image is observed, the image is marked as anomalous.
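The latent-space search at inference time can be sketched as follows. This is a toy illustration under strong assumptions: the "generator" is a fixed random linear map standing in for a trained network, only the residual (reconstruction) term is minimized, and the additional discriminator-based term used in the original AnoGAN formulation is omitted. All names and hyperparameters are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((16, 2))  # toy frozen generator: 2-D latent -> 16 "pixels"

def generate(z):
    """Stand-in for a generator trained on healthy images only."""
    return W @ z

def residual_gradient(z, x):
    """Gradient of the mean squared residual ||G(z) - x||^2 / n with respect to z."""
    return 2.0 * W.T @ (generate(z) - x) / x.size

def latent_search(x, z_dim=2, steps=2000, lr=0.05):
    """Gradient descent on z so that G(z) matches the query image x."""
    z = np.zeros(z_dim)
    for _ in range(steps):
        z -= lr * residual_gradient(z, x)
    return z

def anomaly_score(x):
    """Sum of absolute residuals between x and its best reconstruction."""
    z = latent_search(x)
    residual = np.abs(x - generate(z))
    return float(residual.sum()), residual

x_normal = generate(np.array([0.5, -1.0]))  # lies on the learned "healthy" manifold
x_anomalous = x_normal.copy()
x_anomalous[3] += 5.0                       # inject a local "lesion"

score_normal, _ = anomaly_score(x_normal)
score_anomalous, residual_map = anomaly_score(x_anomalous)
```

The healthy sample can be reconstructed almost exactly, whereas the perturbed sample cannot, so its score is larger; the per-pixel `residual_map` plays the role of the difference image used for localization.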

Fig. 3.

GAN applications in neuroimaging-based anomaly detection. (A) Schema of AnoGAN. Training is performed on healthy subjects to learn z, a latent space representing the data distribution. Inference is performed by sampling from z to generate image x′, such that the difference between x′ and the anomalous image xa is minimized. (B) Illustration of the latent space distribution produced by AnoGAN. Images are taken and adapted from Schlegl et al. (2017).

Similarly, Nguyen et al. (2023) develop an unsupervised brain tumor segmentation/detection method that leverages a GAN-based image in-painting technique, i.e., the reconstruction of partially obscured areas of an image from the surrounding context. If the network is trained only on healthy images, anomalous regions of an image will be in-painted with healthy-looking tissue. The method performs well on images with smaller, local anomalies, as the surrounding context contains enough information for in-painting a healthy region. Training in this scenario is performed by randomly masking a part of an image and asking the generator to recreate the missing portion based on the unmasked regions. During inference, the target image is masked in many different positions and then reconstructed by the network. If the reconstructed and original images deviate in several of the masked versions, the subject is likely to be marked as an anomaly. By repeatedly masking various parts of the scan, the authors are able to generate a tumor segmentation mask. The authors report an improvement in tumor segmentation performance over AnoGAN, with the Dice score increasing from 38% to 77%. Bengs et al. (2021) implement a similar idea of unsupervised anomaly detection to detect brain tumors using VAE-based image in-painting. The authors demonstrate an improvement over prior 2D methods in brain tumor segmentation, with the Dice score increasing from 25% to 31%. Although image in-painting methods have shown promise for abnormality detection, they have two major limitations. First, they do not perform well when the abnormality is large or has a global effect on the scan. Second, the algorithms may produce false positives in regions where normal anatomical variation is high, because there might be multiple acceptable ways of in-painting a region based only on its surrounding appearance.
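The mask-and-reconstruct inference loop and the Dice score can be sketched on a toy 2D image. The in-painting function below is a deliberately trivial stand-in (it fills the masked patch with the median of the unmasked pixels) and is not the trained generator of either cited study; the patch size, threshold, and synthetic image are likewise assumptions.

```python
import numpy as np

def dice(pred, truth):
    """Dice overlap between two binary masks (1.0 = perfect agreement)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

def toy_inpaint(image, mask):
    """Hypothetical stand-in for an in-painting network trained on healthy
    images: fill the masked pixels with the median of the unmasked ones."""
    filled = image.copy()
    filled[mask] = np.median(image[~mask])
    return filled

def anomaly_map(image, patch=4):
    """Mask each patch in turn, in-paint it, and record the residual there."""
    residual = np.zeros_like(image)
    height, width = image.shape
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            mask = np.zeros(image.shape, dtype=bool)
            mask[r:r + patch, c:c + patch] = True
            recon = toy_inpaint(image, mask)
            residual[mask] = np.abs(image - recon)[mask]
    return residual

# Synthetic "scan": uniform healthy tissue with one bright lesion.
image = np.full((16, 16), 0.5)
truth = np.zeros((16, 16), dtype=bool)
truth[4:8, 8:12] = True
image[truth] = 1.0

segmentation = anomaly_map(image) > 0.25   # threshold is an assumption
score = dice(segmentation, truth)
```

In this idealized case the lesion is small relative to its context, which matches the regime in which in-painting methods are reported to work well; a lesion covering most of the image would shift the fill value and degrade the segmentation.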

GAN-based methods offer a unique way to identify deviations from the healthy distribution. In particular, GAN-based unsupervised anomaly detection shows promising performance when pathology data are limited and difficult to acquire. There are a variety of potential clinical and research applications for such methods. For example, these methods could foreseeably be used to triage scans with critical or time-sensitive pathology for a radiologist to read. While most published works have focused on identifying gross abnormalities, such as stroke and tumor lesions, it remains to be seen how well similar approaches perform in identifying subtler pathology. Within the domain of anomaly and tumor detection, GANs have also shown promise in enriching training data in cases with limited or missing data.

4. GANs in brain aging and modeling disease progression

To understand how a patient’s brain changes due to pathology, it is important to first understand how the brain evolves in the absence of pathology. This motivates the need for methods that specifically tackle the problem of modeling healthy brain aging, and raises the question of how GANs can be utilized to simulate subject-specific brain aging (Xia et al., 2021). Additionally, modeling abnormal, disease-specific brain changes is also of clinical significance. Disease progression modeling can help screen for people at risk of developing neurological conditions and also help plan preventative measures or treatment options. This section discusses the challenges in modeling healthy and abnormal brain changes and motivates the need for and utility of GANs in disease prognosis. We also review various existing GAN models that are designed to model healthy and abnormal brain changes (see Table 3).

Table 3.

Overview of publications utilizing GANs to assist brain development and disease progression analysis. Publications are clustered by categories and ordered by year in ascending order.

| Publication | Method | Dataset | Modality | Highlights |
|---|---|---|---|---|
| Brain Aging | | | | |
| Xia et al. (2021) | Transformer GAN | Cam-CAN, ADNI | MRI | Synthesize aging brain without longitudinal data |
| Peng et al. (2021) | Perceptual GAN | IBIS | T1, T2 | Infant brain longitudinal imputation |
| Alzheimer’s Progression | | | | |
| Bowles et al. (2018) | WGAN | ADNI | MRI | Disentangle visual appearance of AD using latent encoding |
| Wegmayr et al. (2019) | Recursive GAN | ADNI, AIBL | MRI | Conversion prognosis from MCI to AD |
| Zhao et al. (2020b) | Multi-information GAN | ADNI, OASIS | MRI | Progression Stage classification |
| Yang et al. (2021) | Cluster & Info-GAN | ADNI, BLSA | MRI | AD subtypes imaging patterns discovery |
| Ravi et al. (2022) | Conditional GAN | ADNI | MRI | Spatiotemporal, biologically-informed constrained |
| Lesion Evolution | | | | |
| Rachmadi et al. (2020) | Multi-discriminator GAN | Private | T1, T2, FLAIR | White matter hyperintensities evolution prediction |
| Wei et al. (2020) | Conditional Attention GAN | Private | MRI, PET | Myelin content prediction in multiple sclerosis |
| Tumor Growth | | | | |
| Elazab et al. (2020) | Stacked Conditional GAN | Private, BRATS | T1, T2, FLAIR | Glioma growth prediction |
| Kamli et al. (2020) | GAN | TCIA, ADNI | T1, T2, FLAIR | Glioblastoma tumors growth prediction |

4.1. Brain aging

The human brain undergoes morphological and functional changes with age. Deviations from these normative brain changes might be indicative of an underlying pathology. Neuroimaging techniques such as structural and functional MRI have been successfully applied to measure and assess these brain changes (Kim et al., 2021). Modeling brain aging trajectories and simulating future brain states can be valuable in a number of applications, including early detection of neurological conditions and imputation of missing data in longitudinal studies. In prior work, researchers developed common atlas models (Habas et al., 2010; Huizinga et al., 2018; Dittrich et al., 2014) as spatio-temporal references of brain development and aging. One of the main challenges with this approach is that individuals might exhibit unique brain aging trajectories depending on their lifestyle and health status, and common atlas models might not preserve this inter-subject variability, resulting in inaccurate modeling. In recent years, GANs have been proposed to address this issue by synthesizing subject-specific brain aging images.

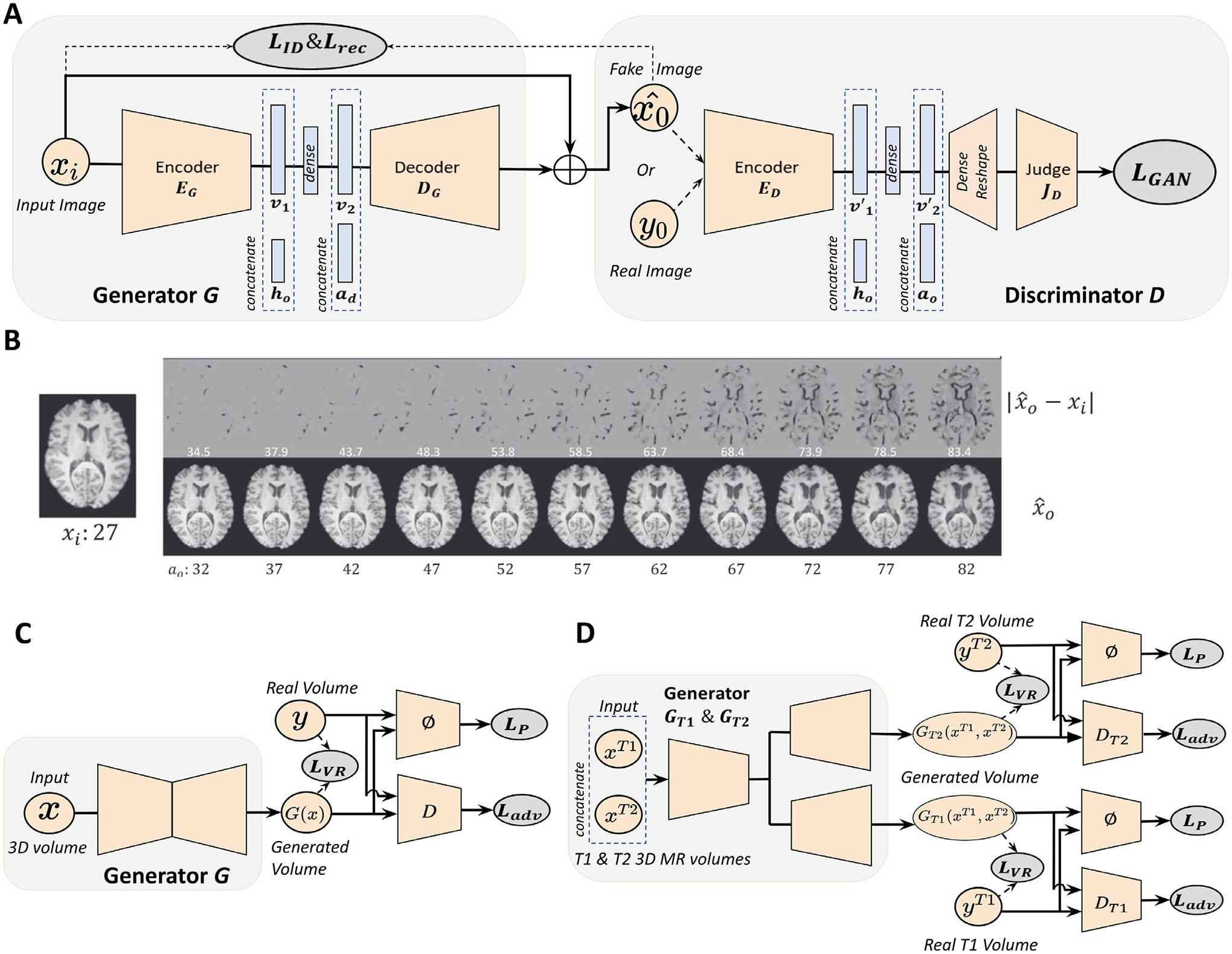

Xia et al. (2021) design a conditional GAN that synthesizes subject-specific brain images given a target age and health condition. Their model learns to synthesize older brain MR scans from a subject’s current brain scan without relying on any longitudinal scans to guide the synthesis. As depicted in Fig. 4A, the model consists of a generator that is conditioned on the target health state and the difference between the current age and the target age of the subject. The generator takes a 2D T1-weighted MRI and synthesizes brain images that correspond to the target age and health state (control/mild cognitive impairment/Alzheimer’s disease) while preserving subject identity. The discriminator, in turn, ensures that the generated images correspond to the target age and health state by learning the joint distribution of the brain image, target age, and target health state. To preserve individual brain characteristics of the subjects during modeling, the authors train the GAN model with a combined loss function that has three elements: an adversarial loss (LGAN, a Wasserstein loss with gradient penalty) that encourages the model to generate realistic brain images, an identity-preservation loss (LID) that encourages the network to preserve the subject’s unique characteristics during image generation, and a reconstruction loss (Lrec) that encourages the network to reconstruct the input when the generator is conditioned on the same age and health state as the input. Fig. 4B shows the results of the conditional GAN in synthesizing images of the brain at multiple target ages. Although the predicted apparent age of synthesized images in Fig. 4B is very close to the target age, one limitation of the method is that the subject identity might not be preserved during image synthesis.
The authors only incorporate age and health state in the modeling process, but other factors such as gender and genotype could help model finer subject details and thereby help preserve subject identity. Additionally, the model was trained to synthesize older brain scans from younger brain scans but not vice versa. Modeling the opposite direction would not only strengthen the usability of the model for imputing missing timepoints but also provide a more robust model that preserves subject identity. Another major limitation is that the model uses a 2D design; to improve the visual quality of the generated brain images, 3D architectures could be adopted to model the brain as a whole volume. The subsequent paper tackles some of these limitations.
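The three-term training objective described for the conditional GAN of Xia et al. (2021) can be summarized schematically as a weighted sum. The weights $\lambda_{ID}$ and $\lambda_{rec}$, the generator notation $G(\cdot)$, the symbol $h_i$ for the input health state, and the choice of an $\ell_1$ norm for the reconstruction term are assumptions made here for illustration; the source text names the three losses but does not give their exact forms.

```latex
% Schematic generator objective (weights and norm are assumptions)
\mathcal{L}_{\text{total}}
  = \mathcal{L}_{GAN}
  + \lambda_{ID}\,\mathcal{L}_{ID}
  + \lambda_{rec}\,\mathcal{L}_{rec},
\qquad
\mathcal{L}_{rec} = \bigl\lVert G(x_i,\; a_d = 0,\; h_i) - x_i \bigr\rVert_1
```

Here $a_d = 0$ expresses that the target age equals the current age, so that, together with an unchanged health state $h_i$, the generator is asked to return its input $x_i$.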

Fig. 4.

GAN applications in modeling healthy brain aging. (A) Schematic of the conditional GAN model for modeling the brain aging process across the whole lifespan. xi : generator input; ℎ0 : target age vector; ad : age difference between current age ai and target age a0; : generator output; v1, v2, v′1, v′2: latent embedding. Generator synthesizes brain image of target age and health state, and judge network gives a discrimination score of whether the image given to the discriminator is real or fake. LID, Lrec, LGAN refer to identity loss, reconstruction loss and adversarial loss, respectively. (B) Examples of healthy brain aging modeling using the GAN described in (A). Bottom panel shows the images synthesized at different target ages a0, and the top panel shows the absolute difference between input image xi and synthesized image . (C) Schematic of the perceptual adversarial network (PGAN). (D) Multi-modal perceptual adversarial network (MPGAN) architecture. x, xT1, xT2: input 3D MR volume; G(x), GT1 (xT1, xT2), GT2 (xT1, xT2): generated output; y, yT1, yT2: real 3D MR volume; D, DT1, DT2: discriminator networks; ϕ: feature extraction network; LVR, LP, Ladv refer to voxel-wise reconstruction loss, perceptual loss, and adversarial loss. Images are taken and adapted from Xia et al. (2021), Peng et al. (2021).

Unlike the 2D model presented by Xia et al. (2021), Peng et al. (2021) introduce 3D models that longitudinally predict brain volumes in infants during their first year of life. The first model they introduce is a single-input-single-output model called the perceptual adversarial network (PGAN). As depicted in Fig. 4C, PGAN uses a 3D U-Net (Çiçek et al., 2016) as the generator, which enables volumetric processing. The generator takes T1- or T2-weighted brain images from an initial timepoint and learns to generate the corresponding longitudinal brain images. The discriminator learns to distinguish the fake images from the real images using the adversarial loss LGAN. Additionally, a voxel-wise reconstruction loss LVR encourages the voxel intensities of the generated images to be close to the corresponding voxel intensities of the ground truth images. Since the voxel-wise reconstruction loss might over-smooth the generated images, an additional loss function called the perceptual loss LP is introduced. The perceptual loss helps preserve the sharpness of the generated images. Since MRI sequences capture complementary features of the brain, the authors propose a second model called the multi-contrast perceptual adversarial network (MPGAN). This model extends the PGAN architecture to incorporate multiple modality inputs and outputs, thereby learning complementary features from both T1- and T2-weighted brain images. As depicted in Fig. 4D, MPGAN has two generators based on a 3D U-Net architecture, but unlike PGAN, the 3D U-Net has a shared encoder that takes T1- and T2-weighted images at a given time point as input, and two independent decoders that synthesize the longitudinal T1- and T2-weighted brain images, respectively. Two discriminator networks learn to discriminate between real and fake T1- and T2-weighted images. The models are evaluated on an infant brain imaging dataset with T1- and T2-weighted volumes available at 6 months and 12 months of life.
The model performance is measured on two tasks: predicting 6-month images from 12-month images and predicting 12-month images from 6-month images. A major limitation of this work is that it is constrained to modeling two timepoints, since paired images at 6 and 12 months of life are used for training. Hence, the model cannot synthesize brain images over the whole lifespan; doing so would require scanning subjects across their whole lifespan. This leads us back to a recurring problem in modeling the brain aging process: models might require longitudinal data for training, which may be infeasible to acquire.
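To make the interplay of the three PGAN loss terms concrete, the following is a minimal NumPy sketch, not the implementation of Peng et al. (2021). It assumes a mean absolute error for the voxel-wise term, a log-based generator adversarial term, and a toy finite-difference feature extractor standing in for the feature network ϕ; the function names and the weights `w_vr`, `w_p`, and `w_adv` are hypothetical.

```python
import numpy as np

def voxelwise_loss(fake, real):
    """L_VR stand-in: mean absolute difference between voxel intensities."""
    return float(np.mean(np.abs(fake - real)))

def toy_features(volume):
    """Hypothetical stand-in for the feature network phi:
    finite differences along each axis (simple edge-like features)."""
    return [np.diff(volume, axis=a) for a in range(volume.ndim)]

def perceptual_loss(fake, real):
    """L_P stand-in: mean absolute difference between feature maps."""
    pairs = zip(toy_features(fake), toy_features(real))
    return float(np.mean([np.mean(np.abs(f - r)) for f, r in pairs]))

def adversarial_loss(d_fake):
    """Generator-side adversarial term, given discriminator
    probabilities (in (0, 1]) assigned to generated volumes."""
    eps = 1e-8  # avoids log(0)
    return float(-np.mean(np.log(d_fake + eps)))

def generator_loss(fake, real, d_fake, w_vr=1.0, w_p=1.0, w_adv=0.1):
    """Weighted sum of the three terms; the weights are illustrative only."""
    return (w_vr * voxelwise_loss(fake, real)
            + w_p * perceptual_loss(fake, real)
            + w_adv * adversarial_loss(d_fake))
```

Under these assumptions, a generated volume that differs from the target by a constant intensity offset is penalized by the voxel-wise term but not by the feature term, whereas a blurred volume with the correct mean intensity is penalized mainly by the feature term, which is the intuition behind adding a perceptual loss to counter over-smoothing.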

Brain aging is a complex process, and each individual presents a unique brain aging trajectory that is influenced by their age, genetic code, demographics, and any underlying neuropathology. GANs allow for subject-specific synthesis of the aging brain, but there is no guarantee that subject identity is preserved during synthesis. Hence, future research needs to focus on integrating multi-omic, imaging, and clinical data for brain aging synthesis while ensuring the preservation of subject identity.

4.2. Alzheimer’s disease progression

The human brain deviates from normative brain aging when underlying disease processes affect its structure and function. Disease progression models trained on longitudinal imaging data can characterize the future course of a disease, making them valuable for clinical trial management, treatment planning, and prognosis. Traditional ML algorithms have been widely applied to modeling Alzheimer’s disease progression, with a focus on extrapolating biomarker metrics and cognitive scores. For example, Zhou et al. (2012) propose a least absolute shrinkage and selection operator (LASSO) formulation to predict the cognitive scores of Alzheimer’s disease patients at different time points. More recently, disease progression modeling has been approached not only as a regression task but also as a generative task in which models generate realistic high-dimensional image data. Researchers leverage the ability of GANs to synthesize realistic images, and other data in general, in order to simulate future states of Alzheimer’s disease (Bowles et al., 2018; Yang et al., 2021; Ravi et al., 2022). Generating realistic high-dimensional data in the medical field is far from trivial, owing to the complexity and irregular availability of longitudinal and annotated data. GANs have been predominantly used in disease progression modeling because, compared to other generative techniques, they are better at learning sharp distributions from training data and producing high-resolution images (Bowles et al., 2018; Yang et al., 2021; Ravi et al., 2022; Xia et al., 2021; Peng et al., 2021).

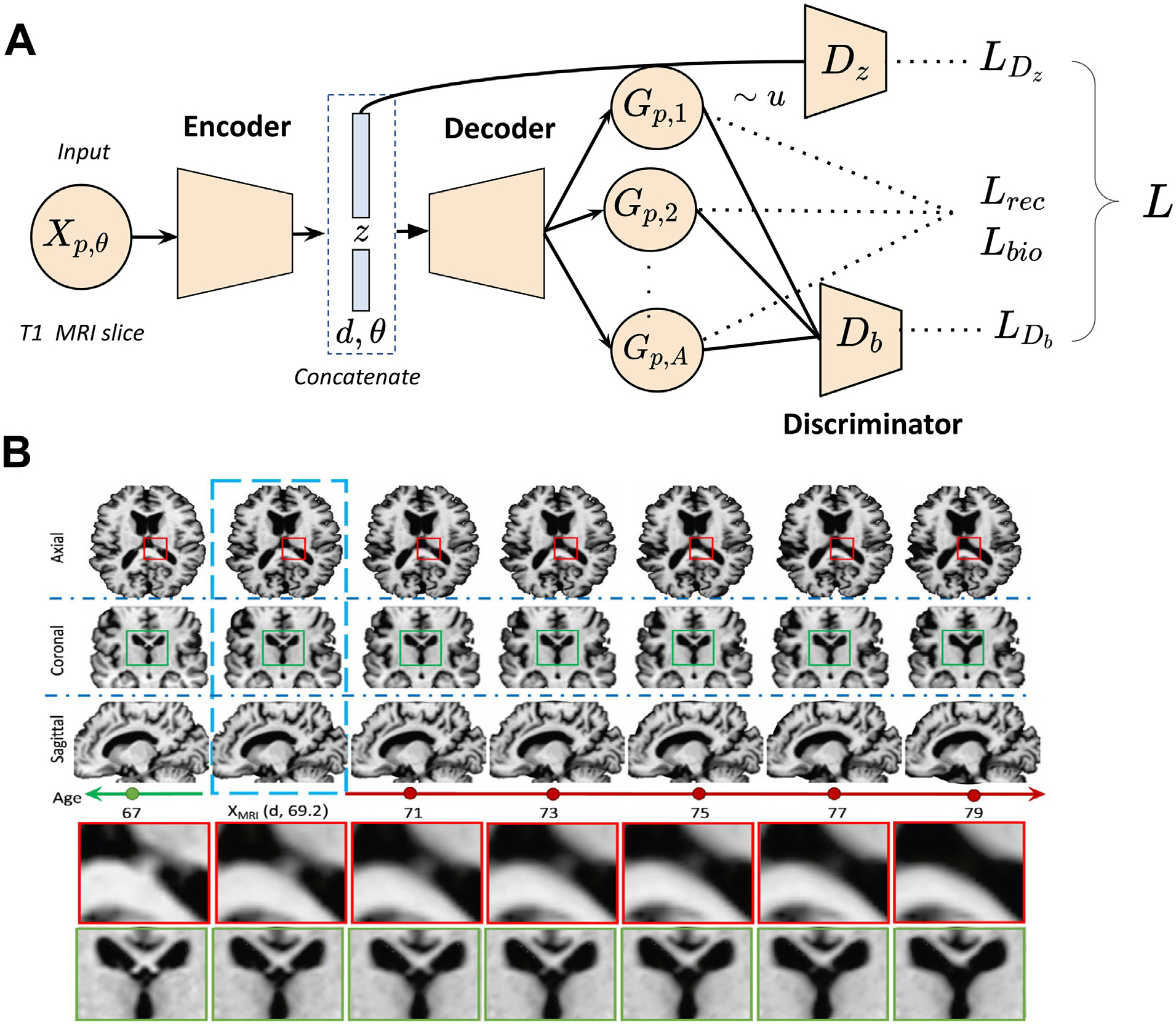

For example, Ravi et al. (2022) present a 4D-degenerative adversarial neuroimage net (4D-DANI-Net), with the goal of generating high-resolution, longitudinal 3D MRIs that mimic personalized neurodegeneration using spatiotemporal and biologically-informed constraints. The 4D-DANI-Net is composed of three main blocks: a preprocessing block, a progression modeling block, and a 3D super-resolution block. The preprocessing block removes irrelevant variations in the data. The progression modeling block is implemented using the degenerative adversarial neuroimage (DANI) net (Ravi et al., 2019) (see Fig. 5A). The main elements of DANI-Net are a conditional autoencoder (CAD), a set of adversarial networks, and a set of biological constraints. The CAD produces the longitudinal 2D MRI, with the biological constraints included in the optimization, while a discriminator compares the fake longitudinal data produced by the CAD with the real data. Multiple DANI-Nets are trained, one for every 2D MR slice, and together they compose the progression model of the 4D-DANI-Net. Finally, to unify the low-resolution images produced by the DANI-Nets, the authors employ the 3D super-resolution block, which transforms them into a 3D high-resolution MR image. The qualitative results in Fig. 5B showcase the ability of 4D-DANI-Net to produce MR scans over time that correspond to different ages of the same subject.
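The data flow through the three blocks can be sketched as follows. This is only a structural illustration under strong assumptions, not the 4D-DANI-Net code: `preprocess`, `make_slice_model`, `super_resolve`, and `synthesize_4d` are hypothetical names, the per-slice models are placeholders that simply repeat their input, and nearest-neighbour upsampling stands in for the learned 3D super-resolution block.

```python
import numpy as np

def preprocess(volume):
    """Stand-in preprocessing: rescale intensities to [0, 1]."""
    v = volume.astype(float)
    span = v.max() - v.min()
    return (v - v.min()) / span if span > 0 else np.zeros_like(v)

def make_slice_model(index):
    """Hypothetical per-slice progression model (placeholder for one
    trained DANI-Net responsible for slice `index`)."""
    def model(slice2d, n_timepoints):
        # Placeholder: a trained model would change the slice over time.
        return np.stack([slice2d] * n_timepoints)  # shape (T, H, W)
    return model

def super_resolve(volume, factor=2):
    """Stand-in for 3D super-resolution: nearest-neighbour upsampling."""
    for axis in range(3):
        volume = np.repeat(volume, factor, axis=axis)
    return volume

def synthesize_4d(volume, n_timepoints, factor=2):
    """Preprocess, run one model per slice, restack, then upsample."""
    vol = preprocess(volume)
    models = [make_slice_model(i) for i in range(vol.shape[0])]
    # Stacking the per-slice outputs gives shape (D, T, H, W).
    per_slice = np.stack([m(vol[i], n_timepoints) for i, m in enumerate(models)])
    low_res = np.swapaxes(per_slice, 0, 1)  # shape (T, D, H, W)
    return np.stack([super_resolve(v, factor) for v in low_res])
```

The sketch makes explicit why a final 3D step is needed in such a design: each slice is processed independently, so consistency across slices has to be restored once the slices are restacked into a volume.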

Fig. 5.

GAN applications in generating disease progression scans from a single time point. (A) Schematic of DANI-Net. The input to DANI-Net is a T1 MR image from subject p at age θ with diagnosis d. The output of the decoder is a set of longitudinal scans. Several loss functions (reconstruction loss Lrec, biological constraints Lbio, discriminator losses LDz and LDb) are combined to train DANI-Net using a single time point of subject p. (B) Longitudinal MRIs synthesized using 4D-DANI-Net for a 69-year-old cognitively normal subject, shown in three orientations. The blue box indicates the input MRI; the other images are MR scans synthesized by the model. Two magnified regions are shown in the bottom panel. Images are taken and adapted from Ravi et al. (2019).

Also simulating Alzheimer’s disease progression, but in 2D space, Bowles et al. (2018) build a progression model that leverages image arithmetic and isolates the features in the latent space that correspond to Alzheimer’s pathology. The core model is a W-GAN combined with a re-weighting scheme. The re-weighting scheme increases the weighting of those real images that are misclassified. This forces the discriminator to better represent the most extreme parts of the images, which in turn forces the generator to produce images from this region. Using image arithmetic and the latent encodings that correspond to Alzheimer’s disease features, one can simulate scans with Alzheimer’s disease at different grades of severity. For example, adding the latent encoding of Alzheimer’s disease, with a specific scaling, to the MR scan of a cognitively normal subject produces enlarged ventricles and cortical atrophy in the output MRI. However, this paper makes several potentially problematic assumptions. The authors assume that progression is a linear process over time. Furthermore, they hypothesize that morphological changes across all subjects with Alzheimer’s disease are symmetric. Additionally, the methodology is developed using a small window size of 64 by 64, which makes it unrealistic for practical use.
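The latent-space arithmetic described above can be illustrated with a short NumPy sketch. It assumes that latent encodings are already available for a group of Alzheimer’s disease (AD) scans and a group of cognitively normal (CN) scans, and that a trained generator (not shown) decodes each latent code into an image; the function names are hypothetical and the group-mean difference is one simple way to define a disease direction, not necessarily the one used by Bowles et al. (2018).

```python
import numpy as np

def disease_direction(z_ad, z_cn):
    """Latent direction associated with the disease: difference between
    the mean AD encoding and the mean CN encoding (rows are subjects)."""
    return z_ad.mean(axis=0) - z_cn.mean(axis=0)

def simulate_progression(z_subject, direction, scales):
    """One latent code per severity scale, obtained by moving the
    subject's code linearly along the disease direction."""
    return np.stack([z_subject + s * direction for s in scales])

# Toy example with 2-dimensional latent codes.
z_cn = np.zeros((3, 2))
z_ad = np.ones((5, 2))
direction = disease_direction(z_ad, z_cn)
codes = simulate_progression(np.array([0.2, -0.1]), direction, [0.0, 0.5, 1.0])
```

Each row of `codes` would then be passed through the generator to obtain a simulated scan. Stepping along a single straight line with a scalar scale is exactly where the linearity assumption criticized above enters the method.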

Yang et al. (2021) present an alternative GAN-based approach for Alzheimer’s disease progression analysis built on tabular volumetric data. They first disentangle the structural heterogeneity of the diseased brain and then, through meta-analysis, connect the cross-sectional patterns to longitudinal data. They propose the semi-supervised clustering GAN (SMILE-GAN), a method that disentangles pathologic neuroanatomical heterogeneity and defines subtypes of neurodegeneration. In general, SMILE-GAN learns mappings from cognitively unimpaired individuals to dementia patients in a generative manner. Through this approach, SMILE-GAN captures disease effects that contribute to changes in brain anatomy and avoids learning non-disease-related variations such as covariate effects. Technically, the model learns one-to-many mappings from the cognitively normal (CN) group X to the patient (PT) group Y. The goal is to learn a mapping function f: X × Z → Y that generates fake PT data from real CN data. The subtype variable z is used as an additional input to the mapping function f, along with the CN scan x. Along with the mapping function, the model also trains a discriminator D to distinguish real PT data y from synthesized PT data y′. The optimal number of clusters is determined through cross-validation by evaluating the reproducibility of the results. These clusters define a four-dimensional coordinate system that captures the major neuroanatomical patterns, which are visualized in Fig. 6B.
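The one-to-many character of the mapping f: X × Z → Y can be made concrete with a toy example. The sketch below is not SMILE-GAN: it assumes a one-hot subtype variable z and replaces the trained mapping network with a hypothetical linear map in which each subtype adds its own pattern of change to the CN features. The names `one_hot`, `mapping_f`, and `W` are illustrative only, and the adversarial training against the discriminator D is omitted.

```python
import numpy as np

def one_hot(k, n_subtypes):
    """Subtype variable z for subtype index k."""
    z = np.zeros(n_subtypes)
    z[k] = 1.0
    return z

def mapping_f(x, z, W):
    """Toy f(x, z): adds a subtype-specific change to the CN features x.
    W has shape (n_subtypes, n_features); row k is the pattern of subtype k."""
    return x + z @ W

# One CN subject with two volumetric features, mapped to four subtypes.
W = np.arange(8, dtype=float).reshape(4, 2)  # hypothetical subtype patterns
x = np.array([10.0, 20.0])
fake_pt = [mapping_f(x, one_hot(k, 4), W) for k in range(4)]
```

The same CN input yields a different synthesized PT vector for each value of z, which is what allows the subtype variable to index distinct patterns of disease-related change.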

Fig. 6.

GAN applications in disease subtype discovery (four-dimensional coordinate system developed by SMILE-GAN). (A) Voxel-wise statistical comparison (one-sided t-test) between cognitively normal subjects and subjects that predominantly belong to each of the four Alzheimer’s disease neuroanatomical patterns. (B) Visualization of subjects that belong to the four subtype clusters in a diamond plot. Images are taken and adapted from Yang et al. (2021).

This work goes on to connect the identified clusters with longitudinal progression pathways. Yang et al. (2021) thus provide an alternative and robust way to model progression by identifying patterns of atrophy. The method makes no assumptions about the distribution of the imaging data and is robust to confounding factors and non-disease-related variability, which makes it a powerful model that could potentially be used for subtyping other heterogeneous diseases. Furthermore, with more representative data, such a method could potentially identify more intricate subtypes that currently remain hidden because they are represented by too few instances in current datasets.

Modeling the progression of Alzheimer’s disease is a challenging task because of the complexity and limited availability of the data and the multiple modalities involved, ranging from imaging modalities such as MRI and PET to genomic and clinical information. Understanding the underlying biological processes and the factors that connect these modalities is the way to interpret and build knowledge about heterogeneous diseases such as Alzheimer’s disease. A future challenge and research opportunity is to develop GAN models that incorporate high-resolution genomic information such as single nucleotide polymorphisms (SNPs). Exploring a pathway between genomic data and imaging signatures will shed light on Alzheimer’s endophenotypes, and such knowledge is valuable for potential future treatments.

4.3. Progression of brain lesions

MRI-visible brain lesions, such as white matter hyperintensities and multiple sclerosis lesions, reflect white matter or gray matter damage caused by chronic ischaemia associated with cerebral small vessel disease or by inflammation that results from malfunction of the immune system (Wardlaw et al., 2017; Bodini et al., 2016). Since white matter hyperintensities play a key role in aging, stroke, and dementia, it is important to quantify them using measures such as volume, shape, and location. These measures are associated with the presence and severity of clinical symptoms and support diagnosis, prognosis, and treatment monitoring (Kuijf et al., 2019). The hyperintense regions are clearly visible on T2-weighted and FLAIR brain MRI. The evolution of white matter hyperintensities over a period of time can be characterized as volume decrease (regress), volume stability, or volume increase (progress). Predicting this evolution is challenging because the factors that influence it, such as hypertension and aging, are poorly understood (Wardlaw et al., 2013). Prediction of white matter hyperintensity evolution is under-explored in the literature, although the progression of other lesions, e.g., ischemic stroke lesions, has been modeled using a non-linear registration method called longitudinal metamorphosis (Rekik et al., 2014).
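As a simple illustration of the three evolution categories, the sketch below labels the change in lesion volume between two scans. The function name and the tolerance `tolerance_ml`, which defines how small a change still counts as stable, are hypothetical; the text above does not specify a threshold, and an appropriate value would depend on measurement noise in the segmentation.

```python
def classify_wmh_evolution(baseline_ml, followup_ml, tolerance_ml=0.5):
    """Label lesion evolution from two volume measurements (in ml).

    tolerance_ml is an assumed margin: changes within it count as stable.
    """
    change = followup_ml - baseline_ml
    if change > tolerance_ml:
        return "progress"   # volume increase
    if change < -tolerance_ml:
        return "regress"    # volume decrease
    return "stable"         # volume stability
```

For example, with the default tolerance, a lesion load that grows from 10.0 ml to 12.0 ml is labeled as progressing, while a change from 10.0 ml to 10.2 ml is labeled as stable.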