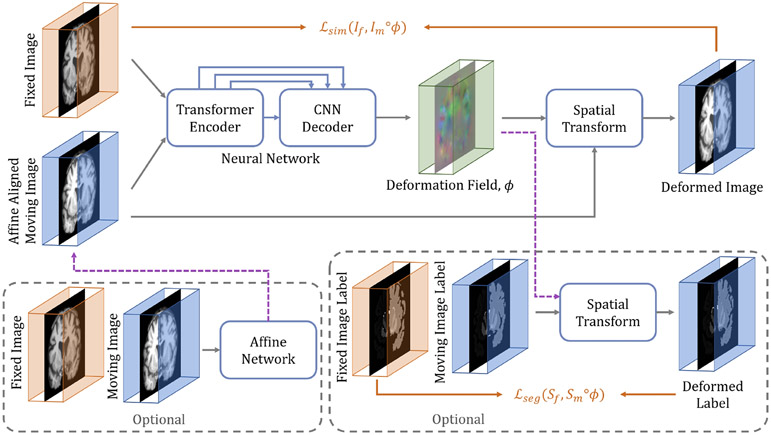

Fig. 3:

The overall framework of the proposed Transformer-based image registration model, TransMorph. The proposed hybrid Transformer-ConvNet network takes two inputs: a fixed image and a moving image that has been affinely aligned with the fixed image. The network generates a nonlinear warping function, which is then applied to the moving image through a spatial transformation function. If an image pair has not been affinely aligned, an affine Transformer may be used prior to the deformable registration (left dashed box). Additionally, auxiliary anatomical segmentations may be leveraged when training the proposed network (right dashed box).
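
To make the final step of the framework concrete, the following is a minimal sketch of a spatial transformation function that applies a warping function to a moving image. It is not the TransMorph implementation. It assumes a 2D image, a dense displacement field in pixel units with shape `(2, H, W)`, bilinear interpolation, and border clamping for samples that fall outside the image. The name `warp_bilinear` and the hand-set displacement fields are hypothetical; in the framework above, the field would be predicted by the network.

```python
import numpy as np


def warp_bilinear(moving, displacement):
    """Resample a 2D moving image at locations x + u(x).

    moving:       array of shape (H, W)
    displacement: array of shape (2, H, W); channel 0 is the row offset,
                  channel 1 is the column offset (assumed layout)
    """
    h, w = moving.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Sampling locations, clamped to the image bounds (assumed padding mode).
    r = np.clip(rows + displacement[0], 0, h - 1)
    c = np.clip(cols + displacement[1], 0, w - 1)

    # Integer corners surrounding each sampling location.
    r0 = np.floor(r).astype(int)
    c0 = np.floor(c).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)

    # Fractional offsets used as bilinear interpolation weights.
    fr = r - r0
    fc = c - c0

    top = (1 - fc) * moving[r0, c0] + fc * moving[r0, c1]
    bottom = (1 - fc) * moving[r1, c0] + fc * moving[r1, c1]
    return (1 - fr) * top + fr * bottom


# Toy data standing in for a moving image and two candidate fields.
moving = np.arange(16, dtype=float).reshape(4, 4)

zero_field = np.zeros((2, 4, 4))   # identity transformation
shift_field = np.zeros((2, 4, 4))
shift_field[1] = 1.0               # sample one pixel to the right

identity_warp = warp_bilinear(moving, zero_field)
shifted_warp = warp_bilinear(moving, shift_field)
```

A zero displacement field returns the moving image unchanged, while the constant field samples each pixel from its right-hand neighbour. Because the interpolation is built from differentiable operations, the same idea carries over to an autodiff framework, which is what allows a registration network to be trained through the warping step.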