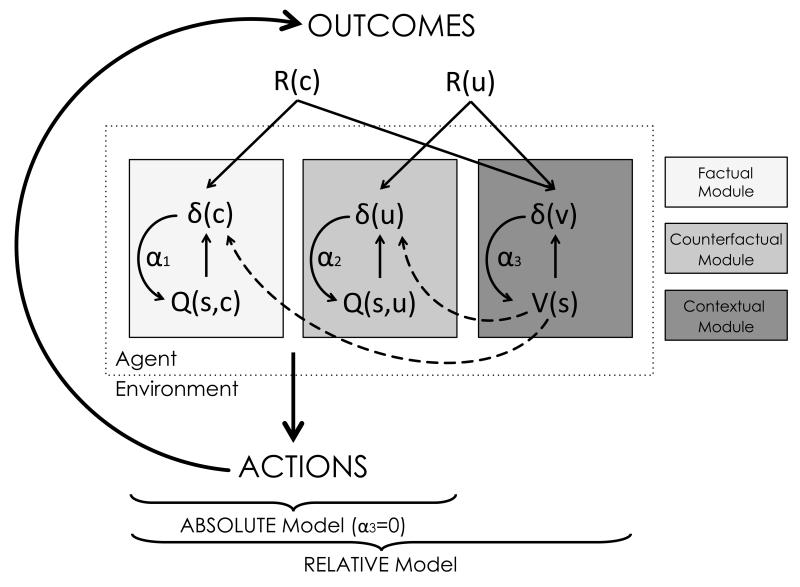

Figure 3. Computational architecture.

The schematic illustrates the computational architecture used for data analysis. For each context (or state) ‘s’, the agent tracks option values (Q(s,:)), which are used to choose among alternative courses of action. In all contexts, the agent is informed of the outcome of the chosen option (R(c)), which is used to update the chosen option's value (Q(s,c)) via a prediction error (δ(c)). This computational module (“factual learning”) requires its own learning rate (α1). In the complete feedback contexts, the agent is also informed of the outcome of the unselected option (R(u)), which is used to update the unselected option's value (Q(s,u)) via a prediction error (δ(u)). This computational module (“counterfactual learning”) requires a specific learning rate (α2). In addition to option values, the agent tracks the value of the context (V(s)), which is likewise updated via a prediction error (δ(v)) that integrates all available feedback information: R(c) together with R(u) in the complete feedback contexts, and R(c) together with Q(s,u) in the partial feedback contexts. This computational module (“contextual learning”) requires a specific learning rate (α3). The RELATIVE model reduces to the ABSOLUTE model when the contextual learning module is suppressed (i.e., α3 = 0).
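The three learning modules in the caption can be sketched as a single update step in Python. This is a minimal, hypothetical illustration, not the authors' fitting code: the class name `RelativeModel`, the two-option layout, zero initial values, computing δ(c) and δ(u) on the context-centred outcome (R minus V(s)), and forming the context outcome as the mean of R(c) with R(u) (complete feedback) or with Q(s,u) (partial feedback) are all assumptions not stated in the figure.

```python
class RelativeModel:
    """Hypothetical sketch of the factual, counterfactual and contextual modules.

    Assumptions (not from the figure): two options per context, all values
    initialised at 0, option prediction errors computed on R - V(s), and the
    context outcome taken as the mean of R(c) and R(u) (complete feedback)
    or of R(c) and Q(s,u) (partial feedback).
    """

    def __init__(self, n_contexts, alpha1, alpha2, alpha3):
        self.alpha1 = alpha1  # factual learning rate
        self.alpha2 = alpha2  # counterfactual learning rate
        self.alpha3 = alpha3  # contextual learning rate
        self.Q = [[0.0, 0.0] for _ in range(n_contexts)]  # option values Q(s,:)
        self.V = [0.0] * n_contexts                        # context values V(s)

    def update(self, s, c, r_c, r_u=None):
        """Update after choosing option c (0 or 1) in context s.

        Pass r_u=None in partial feedback contexts (unselected outcome not shown).
        All prediction errors use the values held before this update.
        """
        u = 1 - c      # index of the unselected option
        v = self.V[s]  # current context value V(s)
        q = self.Q[s]  # option values for this context (mutated in place)

        # Factual learning: delta(c) for the chosen option.
        delta_c = r_c - v - q[c]

        # Contextual learning: integrate all available feedback into V(s).
        if r_u is None:
            context_outcome = (r_c + q[u]) / 2  # partial: Q(s,u) stands in for R(u)
        else:
            context_outcome = (r_c + r_u) / 2   # complete feedback
        delta_v = context_outcome - v

        # Counterfactual learning (complete feedback only): delta(u) updates Q(s,u).
        if r_u is not None:
            delta_u = r_u - v - q[u]
            q[u] += self.alpha2 * delta_u

        q[c] += self.alpha1 * delta_c
        self.V[s] += self.alpha3 * delta_v


if __name__ == "__main__":
    # Complete feedback: chosen option paid 1.0, unselected option paid 0.0.
    model = RelativeModel(n_contexts=1, alpha1=0.5, alpha2=0.5, alpha3=0.5)
    model.update(s=0, c=0, r_c=1.0, r_u=0.0)
    print(model.Q[0], model.V[0])  # [0.5, 0.0] 0.25
```

Constructing the model with `alpha3=0.0` keeps every V(s) at its initial value of 0, so δ(c) and δ(u) reduce to plain outcome-minus-value errors, mirroring the reduction of the RELATIVE model to the ABSOLUTE model described in the caption.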