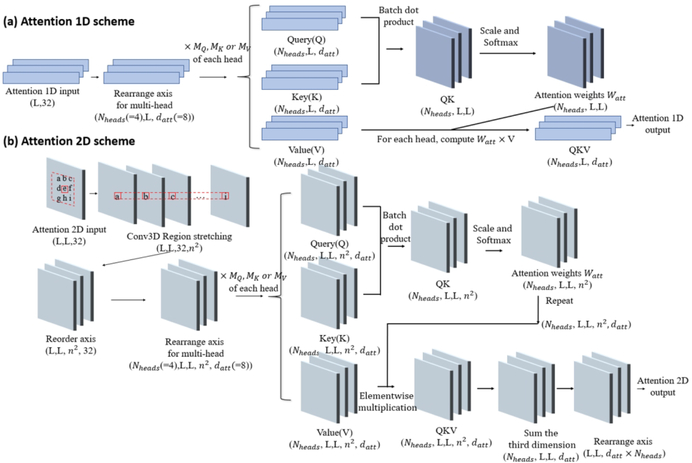

Figure 2. Schematic illustration of the 1D and 2D attention mechanisms. (a) Scheme of the 1D attention mechanism. For an efficient multi-head attention implementation, the input is first transformed into a tensor of size ($N_{\text{heads}}$, $L$, $d_{\text{att}}$). For each head, the ($L$, $d_{\text{att}}$) slice is multiplied by three separate trainable matrices of size ($d_{\text{att}}$, $d_{\text{att}}$) to produce the Query (Q), Key (K), and Value (V). Each head has its own transformation matrices for Q, K, and V. Q and K are first combined by a batched dot product, giving a tensor QK of size ($N_{\text{heads}}$, $L$, $L$). QK is then scaled and normalized with a softmax along the last axis to yield the attention scores $W_{\text{att}}$. The product $W_{\text{att}} \times V$ for each head forms the 1D attention output. (b) Scheme of the 2D attention mechanism. The 2D input is first transformed by a 3D convolution into a stretched tensor of size ($L$, $L$, 32, n2). Attention is then computed along the last axis using the same operation as in the 1D scheme.
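The per-head computation in panel (a) can be sketched in plain Python as below. This is a minimal illustration, not the authors' implementation. It assumes the input has already been split into heads with shape ($N_{\text{heads}}$, $L$, $d_{\text{att}}$), and it assumes the "scaled" step uses the conventional factor $1/\sqrt{d_{\text{att}}}$, since the caption does not state the factor. The function and helper names (`attention_1d`, `matmul`, `softmax_rows`) are hypothetical. Panel (b) is not covered here.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Softmax over the last axis (each row), shifted by the row max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_1d(x: List[Matrix], w_q: List[Matrix], w_k: List[Matrix],
                 w_v: List[Matrix]) -> Tuple[List[Matrix], List[Matrix]]:
    """x: (N_heads, L, d_att); w_q/w_k/w_v: one (d_att, d_att) matrix per head.

    Returns (output, W_att) with shapes (N_heads, L, d_att) and (N_heads, L, L).
    """
    outputs, weights = [], []
    for h, x_h in enumerate(x):
        d_att = len(x_h[0])
        # Each head has its own Q, K, V transformation matrices.
        q = matmul(x_h, w_q[h])
        k = matmul(x_h, w_k[h])
        v = matmul(x_h, w_v[h])
        qk = matmul(q, transpose(k))   # (L, L) dot products for this head
        scale = 1.0 / math.sqrt(d_att)  # assumed scaling factor
        w_att = softmax_rows([[s * scale for s in row] for row in qk])
        outputs.append(matmul(w_att, v))  # W_att x V
        weights.append(w_att)
    return outputs, weights


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    random.seed(0)
    n_heads, seq_len, d_att = 2, 4, 3
    x = [rand_matrix(seq_len, d_att) for _ in range(n_heads)]
    w_q = [rand_matrix(d_att, d_att) for _ in range(n_heads)]
    w_k = [rand_matrix(d_att, d_att) for _ in range(n_heads)]
    w_v = [rand_matrix(d_att, d_att) for _ in range(n_heads)]
    out, w = attention_1d(x, w_q, w_k, w_v)
    print(len(out), len(out[0]), len(out[0][0]))  # 2 4 3
```

A real implementation would batch all heads into one tensor operation (for example a batched matmul over the leading $N_{\text{heads}}$ axis), which is the efficiency motivation the caption gives for the ($N_{\text{heads}}$, $L$, $d_{\text{att}}$) layout. The loop over heads here only keeps the arithmetic explicit.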