Abstract

This study investigated the interaction between top-down attentional control and multisensory processing in humans. Using semantically congruent and incongruent audiovisual stimulus streams, we found target detection to be consistently improved in the setting of distributed audiovisual attention versus focused visual attention. This performance benefit was manifested as faster reaction times for congruent audiovisual stimuli, and as accuracy improvements for incongruent stimuli, resulting in a resolution of stimulus interference. Electrophysiological recordings revealed that these behavioral enhancements were associated with reduced neural processing of both auditory and visual components of the audiovisual stimuli under distributed vs. focused visual attention. These neural changes were observed at early processing latencies, within 100–300 ms post-stimulus onset, and localized to auditory, visual, and polysensory temporal cortices. These results highlight a novel neural mechanism for top-down driven performance benefits via enhanced efficacy of sensory neural processing during distributed audiovisual attention relative to focused visual attention.

INTRODUCTION

Our natural environment is multisensory, and accordingly we frequently process auditory and visual sensory inputs simultaneously. The neural integration of multisensory information is in turn intimately linked to the allocated focus or distribution of attention, which allows for dynamic selection and processing of sensory signals that are relevant for behavior.

Within-sensory-modality attention has been extensively characterized in the visual and auditory domains. As per the biased competition model, selective attention to a spatial location, object or perceptual feature within a modality amplifies the sensory neural responses for the selected signal and suppresses irrelevant responses (Desimone & Duncan, 1995, Desimone, 1998, Kastner & Ungerleider, 2001, Gazzaley et al., 2005, Beck & Kastner, 2009). This mechanism has been shown to underlie improved behavioral performance for detection of the attended item. In contrast to selective attention, divided attention within a modality to concurrent sensory signals generates reduced neural responses and relatively compromised performance for each item competing for attention. These observations are mainly attributed to limited attentional resources (Lavie, 2005). A crucial question that arises is how these principles of attention extend to interactions across sensory modalities (Talsma et al., 2010).

In this study, we compare the influence of attention focused on one sensory modality (visual) to attention distributed across modalities (auditory and visual) on target detection of concurrently presented auditory and visual stimuli. Additionally, we assess neural mechanisms underlying how attention impacts performance, as well as how these behavioral and neural influences are modulated as a function of congruent vs. incongruent information in the audiovisual domains. To the best of our knowledge, only two prior studies have neurobehaviorally assessed the interaction of multisensory processing and unisensory vs. multisensory attention goals (Talsma et al., 2007, Degerman et al., 2007), and no study has explored these influences in the context of audiovisual stimulus congruity. Using arbitrary audiovisual stimulus combinations (simple shapes and tones), both prior studies found neural evidence for enhanced processing during multisensory attention, but did not find these neural effects to benefit perceptual performance. We aimed to resolve these inconsistencies between behavior and underlying neurophysiology in the current study via the use of a novel paradigm utilizing inherently congruent and incongruent stimuli that are often seen and heard in the real-world.

We hypothesized that distributing attention across sensory modalities, relative to focused attention to a single modality, would have differential effects for congruent vs. incongruent stimulus streams. For congruent stimuli, perceptual performance could be facilitated by distributed audiovisual attention compared to focused unisensory attention. In the case of incongruent stimuli, however, distributed attention could generate greater interference and hence degrade performance, as also hypothesized in a prior behavioral study (Mozolic et al., 2008). Our behavioral and neurophysiological results are evaluated from the perspective of these hypotheses.

MATERIALS & METHODS

Participants

Twenty healthy young adults (mean age 23.4 years; range 19–29 years; 10 females) gave informed consent to participate in the study approved by the Committee on Human Research at the University of California in San Francisco. All participants had normal or corrected-to-normal vision as examined using a Snellen chart and normal hearing as estimated by an audiometry software application UHear©. Additionally, all participants were required to have a minimum of 12 years of education.

Stimuli & Experimental procedure

Stimuli were presented using Presentation software (Neurobehavioral Systems, Inc.) run on a Dell Optiplex GX620 with a 22” Mitsubishi Diamond Pro 2040U CRT monitor. Participants were seated with a chin rest in a dark room 80 cm from the monitor. Visual stimuli (v) were words presented as black text in Arial font in a grey square sized 4.8° at the fovea. Auditory words (a) were spoken in a male voice, normalized and equated in average power spectral density, and presented to participants at a comfortable sound level of 65 dB SPL using insert earphones (Cortech Solutions, LLC). Prior to the experiment, participants were presented with all auditory stimuli once, which they repeated to ensure 100% word recognition. All spoken and printed word nouns were simple, mostly monosyllabic everyday usage words, e.g., tree, rock, vase, bike, tile, book, plate, soda, ice, boat etc. The experiment used 116 unique written and corresponding spoken words; of these, 46 words were animal names (cat, chimp, cow, deer, bear, hippo, dog, rat, toad, fish etc.) and served as targets. Visual stimuli were presented for a duration of 100 ms, all auditory presentations had a 250 ms duration, and audiovisual stimuli (av) had simultaneous onset of the auditory and visual stimulus constituents. Each experimental run consisted of 360 randomized stimuli (shuffled from the set of 116 unique stimuli), with an equivalent 120 (v) alone, (a) alone and (av) stimulus presentations. The inter-stimulus interval for all stimulus types was jittered at 800–1100 ms. Each experimental block run thus lasted 6 min, with a few seconds of a self-paced break available to participants every quarter block. Stimuli were randomized at each block quarter to ensure equivalent distribution of (a), (v) and (av) stimuli in each quarter.

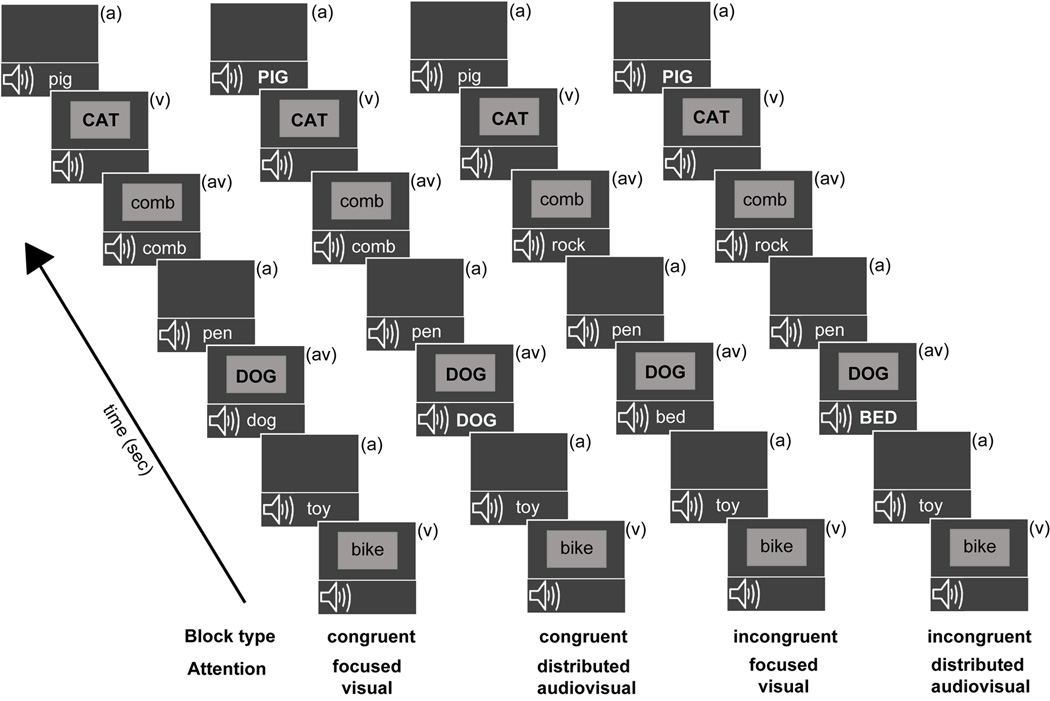

There were four unique block types presented randomly (Figure 1), with each block type repeated twice and the repeat presentation occurring after each block type had been presented at least once. Participants were briefed as per the upcoming block type before each block presentation: Block type 1: Congruent - Focused Visual; Block type 2: Congruent - Distributed Audiovisual; Block type 3: Incongruent - Focused Visual; Block type 4: Incongruent - Distributed Audiovisual. Block type (1) had congruent (av) stimuli and participants were instructed to focus attention only on the visual stream and respond with a button press to visual animal targets, whether appearing as (v) alone or (av) stimuli (congruent focused visual attention block). In block type (2) (av) stimuli were again congruent and participants were instructed to distribute attention across both auditory and visual modalities and detect all animal names, appearing either in the (v), (a) or (av) stream (congruent distributed audiovisual attention block). In block type (3) (av) stimuli were incongruent and participants were instructed to focus attention on the visual stream only and respond to visual animal targets, either appearing alone or co-occurring with a conflicting non-animal auditory stimulus (incongruent focused visual attention block). Lastly, in block type (4) (av) stimuli were incongruent and participants distributed attention to both (a) and (v) stimuli detecting animal names in either (v), (a) or incongruent (av) stream (incongruent distributed audiovisual attention block). Note that Focused Auditory block types were not included in the experiment in order to constrain the number of experimental manipulations and provide high quality neurobehavioral data minimally contaminated by fatigue effects.

Figure 1.

Overview of experimental block design. All blocks consisted of randomly interspersed auditory only (a), visual only (v), and simultaneous audiovisual (av) stimuli, labeled in each frame. The auditory and visual constituent stimuli of audiovisual trials matched during the two congruent blocks, and did not match on incongruent blocks. Target stimuli (animal words) in each block stream are depicted in uppercase (though they did not differ in actual salience during the experiment). During the focused visual attention blocks, participants detected visual animal word targets occurring in either the (v) or (av) stream. During the distributed audiovisual attention blocks, participants detected animal targets occurring in either of three stimulus streams.

Targets in the (a), (v), or (av) streams appeared at 20% probability. To further clarify, for the (av) stream in congruent blocks ((1) and (2)), visual animal targets were paired with related auditory animal targets, while in incongruent blocks ((3) and (4)) visual animal targets were paired with auditory non-animal stimuli. This (av) stimuli pairing scheme was unknown to participants and maintained the same number of visual constituent targets within the (av) streams across all blocks. Note that performance metrics were obtained for targets in the (v) and (av) streams in all blocks, while performance on targets in the (a) stream was only obtained in the distributed audiovisual attention blocks (2) and (4); targets in the (a) stream in the focused visual attention blocks (1) and (3) were not attended to and did not have associated responses.

Participants were instructed to fixate at the center of the screen at all times, and were provided feedback as per their average percent correct accuracy and RTs at the end of each block. Speed and accuracy were both emphasized in the behavior, and correct responses were scored within a 200–1200 ms period after stimulus onset. Correct responses to targets were categorized as ‘hits’ while responses to non-target stimuli in either modality were classified as ‘false alarms’. The hit and false alarm rates were used to derive the sensitivity estimate d′ in each modality (MacMillan & Creelman, 1991).
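As an illustration of the sensitivity estimate, d′ is conventionally computed as the difference between the z-transformed hit rate and the z-transformed false alarm rate. The sketch below assumes a log-linear correction for perfect scores (adding 0.5 to each cell), which the text does not specify; the function name and the trial counts are hypothetical.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate)."""
    # Assumption (not stated in the text): a log-linear correction adds 0.5
    # to each cell so that rates of exactly 0 or 1 do not give infinite z.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one participant and one stream:
# 48 targets (47 detected) and 192 non-targets (1 false alarm).
example = d_prime(hits=47, misses=1, false_alarms=1, correct_rejections=191)
print(round(example, 2))
```

Equal hit and false alarm rates give d′ = 0 (no sensitivity), while near-ceiling hit rates combined with rare false alarms give values above 4, the range reported in this study.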

EEG Data acquisition

Data were recorded during 8 blocks (2 per block type) yielding 192 epochs of data for each standard (v)/ (a)/ (av) stimulus (and 48 epochs per target) per block type. Electrophysiological signals were recorded with a BioSemi ActiveTwo 64-channel EEG acquisition system in conjunction with BioSemi ActiView software (Cortech Solutions, LLC). Signals were amplified and digitized at 1024 Hz with a 24-bit resolution. All electrode offsets were maintained between +/−20 mV.
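The epoch counts quoted above follow directly from the block design (360 stimuli per block split equally over three streams, two blocks per block type, 20% targets). A minimal sketch of that arithmetic, with a hypothetical function name:

```python
def epoch_counts(stimuli_per_block=360, n_streams=3,
                 blocks_per_type=2, target_percent=20):
    """Epochs available per stimulus stream and block type."""
    # 360 stimuli / 3 streams = 120 per stream per block; 2 blocks per type.
    per_stream = stimuli_per_block // n_streams * blocks_per_type
    # Targets appear at 20% probability in each stream.
    targets = per_stream * target_percent // 100
    standards = per_stream - targets
    return {"total": per_stream, "targets": targets, "standards": standards}


print(epoch_counts())
# {'total': 240, 'targets': 48, 'standards': 192}
```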

The three-dimensional coordinates of each electrode and of three fiducial landmarks (the left and right pre-auricular points and the nasion) were determined by means of a BrainSight (Rogue Research, Inc.) spatial digitizer. The mean Cartesian coordinates for each site were averaged across all subjects and used for topographic mapping and source localization procedures.

Data analysis

Raw EEG data were digitally re-referenced off-line to the average of the left and right mastoids. Eye artifacts were removed through independent component analyses by excluding components consistent with topographies for blinks and eye movements and the electrooculogram time-series. Data were high-pass filtered at 0.1 Hz to exclude ultraslow DC drifts. This preprocessing was conducted in the Matlab (The Mathworks, Inc.) EEGLab toolbox (Swartz Center for Computational Neuroscience, UC San Diego). Further data analyses were performed using custom ERPSS software (Event-Related Potential Software System, UC San Diego). All ERP analyses were confined to the standard (non-target) (v), (a) and (av) stimuli. Signals were averaged in 500 ms epochs with a 100 ms pre-stimulus interval. The averages were digitally low-pass filtered with a Gaussian finite impulse function (3 dB attenuation at 46 Hz) to remove high-frequency noise produced by muscle movements and external electrical sources. Epochs that exceeded a voltage threshold of +/−75 µV were rejected.
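The epoching and rejection steps can be sketched for a single channel as follows. This is an illustration only and not the ERPSS implementation. It assumes that the 500 ms epoch spans −100 to +400 ms around stimulus onset, that each epoch is baseline-corrected to its pre-stimulus mean, and that the ±75 µV criterion is applied after baseline correction; none of these details is stated in the text, and all function names are hypothetical.

```python
def extract_epochs(signal, onsets, sfreq=1024, pre_ms=100, post_ms=400,
                   reject_uv=75.0):
    """Cut baseline-corrected epochs from one channel (values in microvolts)."""
    pre = round(pre_ms * sfreq / 1000)    # samples before stimulus onset
    post = round(post_ms * sfreq / 1000)  # samples from onset onward
    kept = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > len(signal):
            continue  # epoch would run past the edges of the recording
        segment = signal[onset - pre:onset + post]
        baseline = sum(segment[:pre]) / pre  # assumed baseline correction
        corrected = [x - baseline for x in segment]
        if max(abs(x) for x in corrected) > reject_uv:
            continue  # reject epochs exceeding the voltage threshold
        kept.append(corrected)
    return kept


def average_epochs(epochs):
    """Point-by-point average across the retained epochs (the ERP)."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]
```

At the 1024 Hz sampling rate, this window corresponds to 102 pre-stimulus and 410 post-stimulus samples per epoch.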

Components of interest were quantified in the 0–300 ms ERPs over distinct electrode sets that corresponded to sites at which component peak amplitudes were maximal. Components in the auditory N1 (110–120 ms) and P2 (175–225 ms) latency ranges were measured at 9 fronto-central electrodes (FC1/2, C1/2, CP1/2, FCz, Cz, CPz). Relevant early visual processing was quantified over occipital sites corresponding to the peak topography of the visual P1 component (PO3/4, PO7/8, O1/2, POz and Oz) during the peak latency intervals of 130–140 ms and 110–130 ms for congruent and incongruent stimulus processing respectively, and 6 lateral occipital electrodes (PO7/8, P7/P8, P9/P10) were used to quantify processing during the visual N1 latency (160–190 ms). Statistical analyses for ERP components as well as behavioral data utilized repeated-measures analyses of variance (ANOVAs) with a Greenhouse-Geisser correction when appropriate. Post-hoc analyses consisted of two-tailed t-tests. This ERP component analysis was additionally confirmed by conducting running point-wise two-tailed paired t-tests at all scalp electrode sites. In this analysis, a difference is considered significant only if at least 10 consecutive data points meet the 0.05 alpha criterion; this criterion is a suitable alternative to Bonferroni correction for multiple comparisons (Guthrie and Buchwald, 1991, Murray et al., 2001, Molholm et al., 2002). This analysis did not yield any new effects other than the components of interest described above.
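The running point-wise test with a consecutive-sample criterion can be sketched for one electrode as follows. This is an illustrative outline and not the authors' code. It computes a paired t statistic at every time point and keeps only runs of at least 10 consecutive significant samples. The critical value 2.093 is the two-tailed 0.05 threshold for 19 degrees of freedom (n = 20); function and variable names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic for two equal-length lists of subject values."""
    diffs = [x - y for x, y in zip(a, b)]
    m, sd = mean(diffs), stdev(diffs)
    if sd == 0.0:  # degenerate case: identical differences in all subjects
        return 0.0 if m == 0.0 else float("inf") * (1 if m > 0 else -1)
    return m / (sd / sqrt(len(diffs)))


def significant_runs(cond_a, cond_b, t_crit=2.093, min_run=10):
    """Return (first, last) sample indices of runs meeting the criterion.

    cond_a[s][t] and cond_b[s][t] hold the amplitude of subject s at sample t.
    """
    n_times = len(cond_a[0])
    flags = []
    for t in range(n_times):
        a = [subject[t] for subject in cond_a]
        b = [subject[t] for subject in cond_b]
        flags.append(abs(paired_t(a, b)) > t_crit)
    runs, start = [], None
    for t, flag in enumerate(flags + [False]):  # sentinel closes a final run
        if flag and start is None:
            start = t
        elif not flag and start is not None:
            if t - start >= min_run:
                runs.append((start, t - 1))
            start = None
    return runs
```

At a sampling rate of 1024 Hz, 10 consecutive samples span roughly 10 ms, so brief isolated excursions below the alpha level are not counted as effects.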

Of note, here we refrained from analyses of later processes (> 300 ms post-stimulus onset) as it is not easy to distinguish whether such processes reflect a sensory/multisensory contribution or decision making/ response selection processes that are active at these latencies.

Scalp distributions of select difference wave components were compared after normalizing their amplitudes prior to ANOVA according to the method described by McCarthy and Wood (1985). Comparisons were made over 40 electrodes spanning frontal, central, parietal, and occipital sites (16 in each hemisphere and 8 over midline). Differences in scalp distribution were reflected in significant attention condition (focused vs. distributed) by electrode interactions.
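Amplitude normalization of this kind removes overall differences in signal strength between conditions, so that a condition by electrode interaction reflects a difference in the shape of the scalp distribution rather than in its size. The sketch below shows vector scaling, one rescaling approach used for this purpose; which variant was applied here is not stated in the text, so this choice is an assumption, and the function name is hypothetical.

```python
from math import sqrt


def vector_scale(amplitudes):
    """Divide each electrode's amplitude by the vector length over electrodes."""
    norm = sqrt(sum(x * x for x in amplitudes))
    if norm == 0.0:
        raise ValueError("cannot normalize an all-zero topography")
    return [x / norm for x in amplitudes]


# Two hypothetical topographies with the same shape but different strength
# become identical after scaling.
weak = vector_scale([1.0, 2.0, 2.0])
strong = vector_scale([2.0, 4.0, 4.0])
```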

Modeling of ERP sources

Inverse source modeling was performed to estimate the intracranial generators of the components within the grand-averaged difference waves that represented significant modulations in congruent and incongruent multisensory processing. Source locations were estimated by distributed linear inverse solutions based on a local auto-regressive average (LAURA) (Grave de Peralta et al., 2001). LAURA estimates three-dimensional current density distributions using a realistic head model with a solution space of 4024 nodes equally distributed within the gray matter of the average template brain of the Montreal Neurological Institute. It makes no a priori assumptions regarding the number of sources or their locations and can deal with multiple simultaneously active sources (Michel et al., 2001). LAURA analyses were implemented using CARTOOL software by Denis Brunet (http://sites.google.com/site/fbmlab/cartool). To ascertain the anatomical brain regions giving rise to the difference wave components, the current source distributions estimated by LAURA were transformed into the standardized Montreal Neurological Institute (MNI) coordinate system using SPM5 software (Wellcome Department of Imaging Neuroscience, London, England).

RESULTS

Our paradigm consisted of rapidly presented spoken (auditory (a)) and written (visual (v)) nouns, either presented independently or concurrently (av) (Figure 1). Concurrent stimuli were presented in blocks in which they were either semantically congruent (e.g., a = ‘comb’; v = ‘comb’) or incongruent (e.g., a = ‘rock’; v = ‘comb’). For both congruent and incongruent blocks, two attention manipulations were assessed: focused visual attention and distributed audiovisual attention. The participant’s goal was to respond with a button-press to the presentation of a stimulus from a specific category target (i.e., animal names) when detected exclusively in the visual modality (focused attention condition) or in either auditory or visual modality (distributed attention condition). Importantly, the goals were never divided across different tasks (e.g. monitoring stimuli from multiple categories, such as animals and vehicles); thus, we investigated selective attention towards a single task goal focused within or distributed across sensory modalities. To summarize, for both congruent and incongruent blocks, two attentional variations (focused vs. distributed) were investigated under identical stimulus presentations, providing the opportunity to observe the impact of top-down goals on processing identical bottom-up inputs.

Behavioral Performance

Detection performance is represented by sensitivity estimates (d’) and by response times (RT (ms)) for (v), (a), and (av) target stimuli (Table 1). d’ estimates were calculated in each modality from the hits and false alarm rates for target and non-target stimuli in that modality, respectively (MacMillan & Creelman, 1991). To compare the impact of focused vs. distributed attention on multisensory processing, performance indices were generated for the difference in performance between multisensory (av) and unisensory (v) stimuli and compared across the attentional manipulations, separately for the congruent and incongruent blocks. Figure 2 shows differential (av-v) accuracy (d’) and RT metrics for distributed attention trials relative to focused attention trials in all study participants; the unity line references equivalent performance across the two attention manipulations and the square data point represents the sample mean. Of note, there is no parallel (av-a) performance comparison across the two attentional manipulations as auditory targets were detected only in blocks with attention distributed to both auditory and visual inputs.
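To make the (av-v) index concrete, the sketch below computes it from the group means reported in Table 1. It is illustrative only: the statistical analyses in this study were run on per-participant values, and the dictionary layout and function name are hypothetical.

```python
# Group means from Table 1 as (d', RT in ms); auditory-alone rows are omitted.
TABLE1_MEANS = {
    ("congruent", "focused"): {"v": (5.2, 554), "av": (5.7, 545)},
    ("congruent", "distributed"): {"v": (5.0, 548), "av": (5.9, 523)},
    ("incongruent", "focused"): {"v": (5.4, 548), "av": (4.9, 550)},
    ("incongruent", "distributed"): {"v": (5.1, 538), "av": (5.4, 544)},
}


def av_minus_v(block, attention):
    """Return the (av - v) difference in d' and in RT (ms) for one condition."""
    v_d, v_rt = TABLE1_MEANS[(block, attention)]["v"]
    av_d, av_rt = TABLE1_MEANS[(block, attention)]["av"]
    return round(av_d - v_d, 1), av_rt - v_rt


for condition in TABLE1_MEANS:
    print(condition, av_minus_v(*condition))
```

On these means, the congruent RT index is −9 ms under focused attention and −25 ms under distributed attention, and the incongruent d′ index is −0.5 under focused attention and +0.3 under distributed attention.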

Table 1.

Details of behavioral measures observed for target stimuli during the four blocked tasks, values represented as means +/− standard errors of mean (sem). (v) = visual, (av) = audiovisual, and (a) = auditory.

| Block type / Attention | Target stimulus | d’ (sem) | Target hits % (sem) | Non-target false alarms % (sem) | Reaction time ms (sem) |
|---|---|---|---|---|---|
| Congruent Focused | (v) | 5.2 (0.2) | 97.5 (0.7) | 0.5 (0.1) | 554 (9) |
| | (av) | 5.7 (0.2) | 98.3 (0.8) | 0.5 (0.1) | 545 (9) |
| Congruent Distributed | (v) | 5.0 (0.2) | 97.5 (0.6) | 0.8 (0.1) | 548 (7) |
| | (av) | 5.9 (0.2) | 99.5 (0.3) | 0.9 (0.2) | 523 (8) |
| | (a) | 4.1 (0.2) | 90.1 (1.8) | 0.5 (0.1) | 680 (12) |
| Incongruent Focused | (v) | 5.4 (0.2) | 97.3 (1.2) | 0.6 (0.1) | 548 (9) |
| | (av) | 4.9 (0.2) | 96.9 (0.7) | 0.7 (0.1) | 550 (8) |
| Incongruent Distributed | (v) | 5.1 (0.2) | 97.6 (0.7) | 1.0 (0.3) | 538 (8) |
| | (av) | 5.4 (0.2) | 98.5 (0.5) | 0.9 (0.2) | 544 (9) |
| | (a) | 4.4 (0.2) | 91.7 (2.0) | 0.5 (0.1) | 681 (11) |

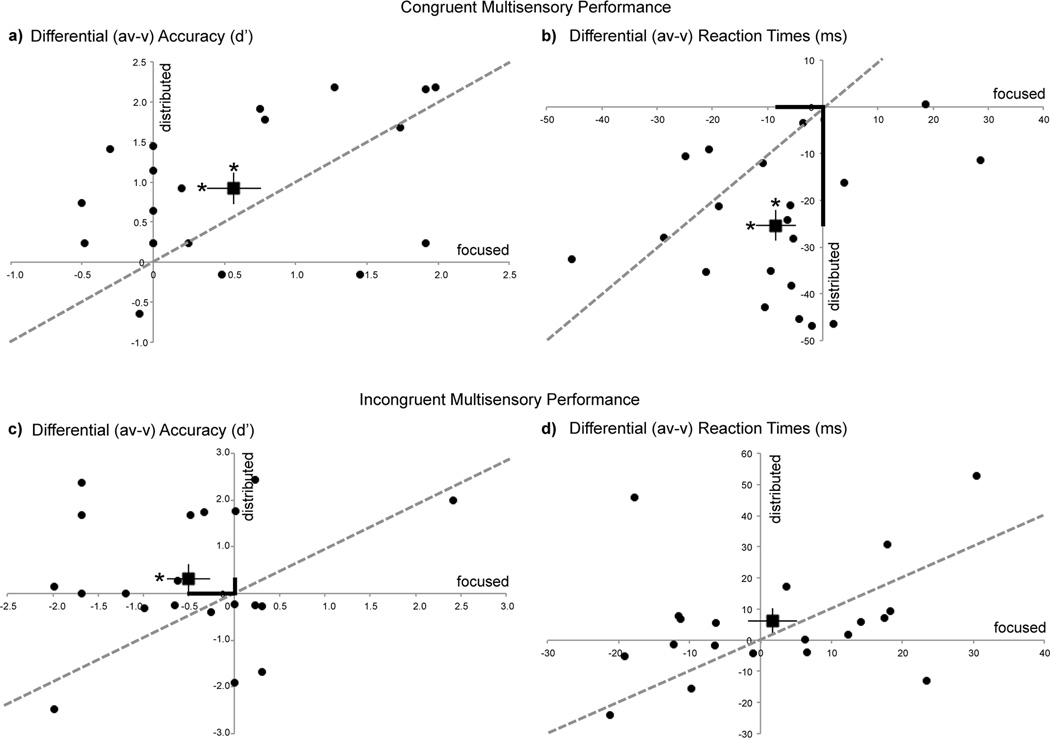

Figure 2.

Behavioral performance during distributed audiovisual attention relative to focused visual attention depicted as (av – v) normalized measures for all participants (circular data points). The square data point with error bars represents the sample mean and s.e.m. The unity line references equivalent performance across the two attention manipulations. Measures are shown as differential d’ (a,c) and differential RTs (b,d). Asterisks on the mean data points represent significant (av) vs. (v) performance differences (on horizontal error bars for focused attention & on vertical error bars for distributed attention). Bolded axial distances in (b) and (c) emphasize significant performance differences between the focused and distributed attention conditions. Note that distributed attention results in superior performance on congruent trials (RT) and incongruent trials (d').

Effects of attention on congruent multisensory performance

For congruent blocks, accuracy for (av) targets was significantly greater than for (v) targets independent of attentional goals (Fig. 2a: positive (av-v) indices), observed as a main effect of stimulus type in repeated measures ANOVAs with stimulus type ((av) vs. (v)) and attention (focused vs. distributed) as factors (F(1,19)=20.69, p=0.0002). Post-hoc paired t-tests showed that (av) accuracies were consistently superior to (v) accuracies in the focused (t(19)=2.99, p=0.007) as well as distributed attention condition (t(19)=4.66, p=0.0002) (as indicated by x and y axes asterisks above the mean data point in Fig. 2a). This result revealed a stimulus congruency facilitation effect. There was no significant main effect of attention (F(1,19)=0.04, p=0.8), and the interaction between the attention manipulation and stimulus type trended toward significance (F(1,19)=2.89, p=0.1). A similar ANOVA conducted for target RTs showed a comparable facilitation effect, with responses for (av) targets significantly faster than for (v) targets (main effect of stimulus type: F(1,19)=39.50, p<0.0001) (Fig. 2b: negative (av-v) indices). Again, post-hoc paired t-tests showed this effect to be significant during focused (t(19)=2.35, p=0.03) and distributed attention (t(19)=7.65, p<0.0001). The ANOVA for RTs additionally showed a main effect of attention (F(1,19)=16.39, p=0.0007). Critically, a stimulus type × attention interaction was found (F(1,19)=14.92, p=0.001), such that (av-v) RTs were relatively faster in the distributed vs. focused attention condition (emphasized in Fig. 2b by the longer y axis relative to x axis distance of the sample mean data point). Of note, these relatively faster (av-v) RTs during distributed attention were not accompanied by any decrements in accuracy, i.e. there was no speed-accuracy tradeoff.
Thus, congruent audiovisual stimuli resulted in overall better detection performance compared to visual stimuli alone (both accuracy and RT), and distributed audiovisual attention enhanced this stimulus congruency facilitation by improving performance (RT) relative to focused visual attention.

Effects of attention on incongruent multisensory performance

Repeated measures ANOVAs similar to those for congruent blocks were conducted for incongruent blocks. These revealed no main effects of stimulus type ((av) vs. (v), F(1,19)=0.20, p=0.7) or attention (focused vs. distributed, F(1,19)=0.23, p=0.6) on accuracy d’ measures. Yet, a significant attention × stimulus type interaction was observed (F(1,19)=5.04, p=0.04, emphasized in Fig. 2c by the shorter y axis relative to x axis distance of the sample mean data point). Post-hoc t-tests showed that d' accuracy on incongruent (av) targets was significantly diminished relative to (v) targets during focused visual attention, revealing a stimulus incongruency interference effect (t(19)=2.13, p=0.046) (x axis asterisk on mean data point in Fig. 2c). Notably, however, this interference effect was resolved during distributed attention, such that incongruent (av) target accuracy did not differ from accuracy on (v) targets (t(19)=0.99, p=0.3). No significant main effect of attention or stimulus type, and no interaction between these factors, was observed in ANOVAs for target RTs in incongruent blocks (Fig. 2d). Thus, distributed attention to incongruent audiovisual stimuli resulted in improved detection performance (d’ measure) relative to focused attention, and notably without a speed-accuracy tradeoff.

Importantly, performance on visual alone trials that served as a baseline measure (horizontal zero line: Fig. 2) did not differ as a function of condition, as evaluated in a repeated measures ANOVA with block type (congruent vs. incongruent) and attention (focused vs. distributed) as factors. The main effect of block type did not reach significance for either visual accuracy (F(1,19)=0.83, p=0.4) or RT measures (F(1,19)=3.24, p=0.09). Similarly, there was no main effect of type of attention on visual performance alone (accuracy: F(1,19)=1.41, p=0.3; RT: F(1,19)=2.84, p=0.1). Lastly, performance on auditory alone (a) targets, which only occurred in the distributed attention conditions, did not significantly differ in d’ or RT measures across congruent vs. incongruent block types.

Event-related Potential (ERP) Responses

Effects of attention on congruent multisensory processing

Behaviorally, we found that distributed audiovisual attention improved detection performance relative to focused visual attention for congruent audiovisual stimuli via more rapid RTs (Fig. 2b). As both manipulations incorporated attention to the visual modality, we investigated whether the visual constituent of the congruent (av) stimulus was differentially processed under distributed vs. focused attention. Visual constituent processing was obtained at occipital sites by subtracting the auditory alone ERP from the audiovisual ERP within each attention block (Calvert et al., 2004, Molholm et al., 2004). An ANOVA with attention type as a factor conducted on the (av-a) difference waves revealed significantly reduced signal amplitudes at a latency of 130–140 ms in the distributed relative to focused attention condition (F(1,19)=4.65, p=0.04). A similar effect of attention was also observed at the 160–190 ms latency range at more lateral occipital sites (F(1,19)=5.26, p=0.03, Fig. 3a (positive µV plotted below horizontal axis) and b). These observed (av-a) differences were not driven by differences in auditory alone ERPs, which were non-significant across the two attention manipulations at these occipital sites.
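The extraction of a constituent signal by subtraction, followed by measurement of the mean amplitude in a latency window, can be sketched as below. The subtraction treats the audiovisual ERP as the sum of its unisensory parts plus any interaction, which is a simplifying assumption of this illustration. The sketch further assumes epochs sampled at 1024 Hz that begin 100 ms before stimulus onset; function names are hypothetical.

```python
def difference_wave(multisensory, unisensory):
    """Point-by-point subtraction, e.g. (av - a) for the visual constituent."""
    return [m - u for m, u in zip(multisensory, unisensory)]


def mean_amplitude(wave, start_ms, end_ms, sfreq=1024, pre_ms=100):
    """Mean amplitude between two post-stimulus latencies (inclusive)."""
    # Sample 0 of the epoch is assumed to lie pre_ms before stimulus onset.
    first = round((start_ms + pre_ms) * sfreq / 1000)
    last = round((end_ms + pre_ms) * sfreq / 1000)
    window = wave[first:last + 1]
    return sum(window) / len(window)


# Hypothetical flat ERPs in microvolts, used only to show the call pattern.
av_erp = [2.0] * 512
a_erp = [0.5] * 512
visual_constituent = difference_wave(av_erp, a_erp)
print(mean_amplitude(visual_constituent, 130, 140))  # 1.5
```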

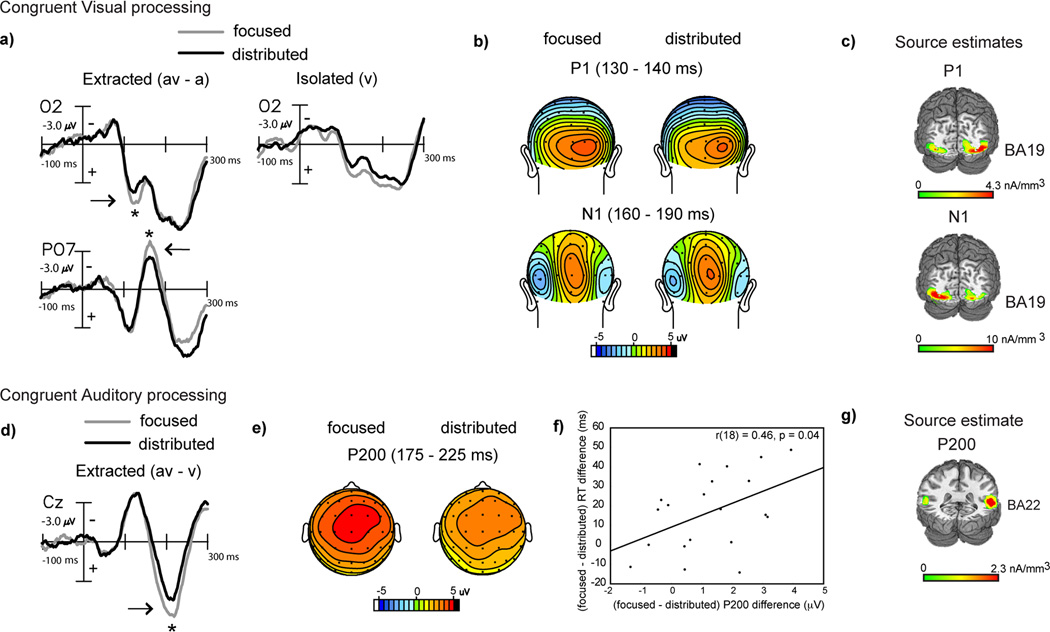

Figure 3.

Grand-averaged difference waves (n=20) depicting multisensory processing during the congruent trials compared for the focused and distributed attention conditions. a) Extracted processing for the visual constituent of multisensory stimulation (av-a) at occipital sites (O2 and PO7) showing significant amplitude differences at 130–140 ms and 160–190 ms, with corresponding topographical maps in (b) and source estimates in (c). Corresponding ERPs elicited to isolated visual targets are also shown in (a) for reference, to the right of the extracted difference waves. d) Extracted processing for the auditory constituent of multisensory stimulation (av-v) showing attention related differences at 175–225 ms latency (P200 component) at a medial central site (Cz) (positive voltage plotted below horizontal axis). e) Topographical voltage maps corresponding to the P200 component difference. f) Positive neuro-behavioral correlations between P200 modulation and RT differences across the two attention conditions. g) Current source estimates for the P200 component.

Source estimates of the extracted visual processing signal at 130–140 ms and at 160–190 ms modeled within the (av-a) difference waves under focused attention, showed neural generators in extrastriate visual cortex (in the region of BA 19: Fig. 3c, MNI co-ordinates of the peak of the source clusters in Table 2). We, thus, observed that these two difference wave components respectively resembled the P1 and N1 components commonly elicited in the visual evoked potential in their timing, topography and location of occipital source clusters (Gomez Gonzalez et al., 1994, Di Russo et al., 2002, 2003). Thus, distributed audiovisual attention was associated with reduced visual constituent processing compared to focused visual attention, which is consistent with observations of sensory processing under unimodal divided attention (Desimone & Duncan, 1995, Desimone, 1998, Kastner & Ungerleider, 2001, Beck & Kastner, 2009, Reddy et al., 2009). ERPs elicited to isolated (v) targets under focused visual vs. distributed audiovisual attention provide confirmation that unimodal visual target processing was indeed reduced in the latter condition (Fig. 3a right column).

Table 2.

MNI coordinates of the peak of the source clusters as estimated in LAURA at relevant component latencies identified in the extracted visual (av-a) and extracted auditory (av-v) difference waveforms for congruent and incongruent blocks. All sources were modeled for difference waves in the focused visual attention condition.

| Block type | Difference wave | Latency (ms) | x (mm) | y (mm) | z (mm) |
|---|---|---|---|---|---|
| Congruent | (av-a) | 130–140 | ±29 | −71 | −1 |
| | (av-a) | 160–190 | ±27 | −75 | −4 |
| | (av-v) | 175–225 | ±56 | −33 | +7 |
| Incongruent | (av-a) | 110–120 | ±30 | −71 | −2 |
| | (av-v) | 110–120 | ±58 | −35 | +4 |

In contrast to the visual modality that was attended in both focused and distributed attention conditions, the auditory modality was only attended to in the distributed condition. To compare auditory processing of the congruent (av) stimulus during distributed attention vs. when auditory information was task-irrelevant (i.e., during focused visual attention), we analyzed the auditory constituent at fronto-central electrode sites, where an auditory ERP is typically observed. This was accomplished by subtracting the visual alone ERP from the audiovisual ERP for each attention condition (Calvert et al., 2004, Busse et al., 2005, Fiebelkorn et al., 2010). (The visual alone ERPs were not different at fronto-central sites under the two types of attention.) An ANOVA with attention type as a factor conducted on the (av-v) difference ERPs showed a significant early positive component difference at 175–225 ms (P200) (F(1,19)=14.3, p=0.001), which was larger when the auditory information was task-irrelevant relative to levels in the distributed attention condition (Fig. 3d, e). This difference in auditory constituent processing was positively correlated with the relative multisensory RT improvement for distributed vs. focused attention observed in Fig. 2b (r(18)=0.46, p=0.04, Fig 3f), revealing that reduced (av-v) neural processing under distributed attention was associated with better (av-v) behavioral performance.
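As a check on the reported neurobehavioral correlation, a Pearson coefficient can be converted to a t statistic with n − 2 degrees of freedom. The worked values below assume that a standard two-tailed test of the Pearson correlation was used, which the text does not state explicitly.

```latex
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}
  = \frac{0.46\,\sqrt{18}}{\sqrt{1-0.46^{2}}}
  \approx \frac{1.952}{0.888}
  \approx 2.20,
\qquad \mathrm{df} = n - 2 = 18
```

This value exceeds the two-tailed critical value of approximately 2.10 for 18 degrees of freedom at an alpha of 0.05, in line with the reported p=0.04.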

The neural generators of the grand-averaged P200 difference wave component were modeled in the focused visual attention condition, which contained greater signal amplitude but similar scalp topography as the P200 component in the distributed condition. The source estimates revealed that the P200 component could be accounted for by bilateral current sources in the region of the superior temporal gyrus (STG, BA 22: Fig. 3g, MNI co-ordinates of the peak of the source cluster provided in Table 2). Though this P200 resembled the P2 component ubiquitously found in the auditory evoked response (reviewed in Crowley and Colrain, 2004), its localization to STG, a known site for multisensory integration (Calvert 2001, Calvert et al., 2004, Beauchamp, 2005, Ghazanfar and Schroeder, 2006), indicates a polysensory contribution to this process.

Effects of attention on incongruent multisensory processing

Behaviorally, we found that distributed attention improved performance relative to focused attention for incongruent audiovisual stimuli via recovery of accuracy interference costs (Fig. 2c). Parallel to the ERP analysis for congruent stimuli, we first analyzed the visual constituent of incongruent (av) stimulus processing using (av-a) difference waves obtained within the focused and distributed attention blocks. Early extracted visual processing signals, compared by ANOVAs with attention type as a factor, differed at occipital sites during the latency range of 110–130 ms with significantly reduced amplitudes in the distributed relative to the focused attention (av-a) difference waves (F(1,19)=4.43, p=0.04) (Fig. 4a, b); auditory alone ERPs did not differ at these sites. Source estimates of this difference wave component revealed neural generators in extrastriate visual cortex (BA 19) (Fig. 4c, MNI co-ordinates in Table 2), overlapping the source estimates for the similar latency component in the congruent extracted visual difference wave. Again, early visual constituent processing of the (av) stimulus was reduced under distributed relative to focused visual attention. Additionally, this result was consistent with early sensory processing of isolated (v) targets, which showed reduced processing under distributed audiovisual vs. focused visual attention (Fig. 4a right column).

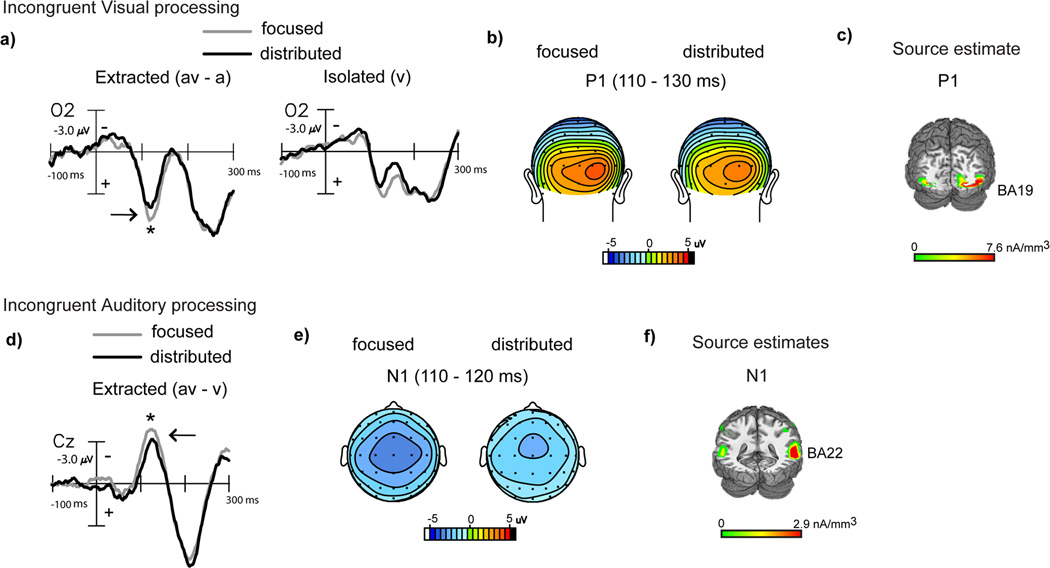

Figure 4.

Grand-averaged difference waves (n=20) depicting multisensory processing during the incongruent trials compared for the focused and distributed attention conditions. a) Extracted processing for the visual constituent of multisensory stimulation (av-a) at occipital site (O2) showing significant amplitude differences at 110–130 ms, with corresponding topographical maps in (b) and source estimates in (c). Corresponding ERPs elicited to isolated visual targets are also shown in (a) for reference, to the right of the extracted difference waves. d) Extracted processing for the auditory constituent of multisensory stimulation (av-v) showing attention related differences at 110–120 ms at a medial central site (Cz), with corresponding topographical maps in (e) and source estimates in (f).

The extracted auditory constituent reflected in the (av-v) waves in the setting of audiovisual incongruency, compared by ANOVAs with attention type as a factor, showed significant amplitude differences in early auditory processing at 110–120 ms latency (F(1,19)=4.97, p=0.04) (Fig. 4d, e); visual alone ERPs did not significantly differ at these sites. When modeled for inverse source solutions, this difference wave component localized to the middle temporal gyrus (BA 22) adjacent to auditory cortex (BA 42) (Fig. 4f, MNI co-ordinates of the peak of the source cluster in Table 2). We observed that this component resembled the auditory N1 in latency, topography and approximate source generators. Of note, despite the overall similarity in waveforms across congruent and incongruent stimulus processing, the attention-related component differences during incongruent processing emerged at distinct latencies, and even earlier (110–120 ms) than processing differences during congruent processing (175–225 ms). Yet, consistent with results for auditory constituent processing of congruent (av) stimuli, processing of the incongruent auditory constituent signal was also observed to be relatively decreased during distributed audiovisual vs. focused visual attention. In this case, however, neurobehavioral correlations for the auditory N1 latency (av-v) processing difference vs. (av-v) multisensory accuracy improvement under distributed relative to focused attention trended towards but did not reach significance (r(18)=0.39, p=0.09).
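As a quick check on how a correlation such as r(18)=0.39 maps onto a p-value, the sketch below converts r to a t statistic and integrates the Student's t density numerically. It uses only the Python standard library; the function names are illustrative, and the numerical integration is a stand-in for whatever statistics package was actually used.

```python
import math


def t_from_r(r, df):
    """Convert a Pearson r with df = n - 2 into the equivalent t statistic."""
    return r * math.sqrt(df / (1.0 - r * r))


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value via Simpson's rule on the t density over [0, |t|].

    `steps` must be even for Simpson's rule.
    """
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3.0  # probability mass between 0 and |t|
    return 1.0 - 2.0 * area


t_stat = t_from_r(0.39, 18)
p_value = two_tailed_p(t_stat, 18)
print(f"t(18) = {t_stat:.2f}, two-tailed p = {p_value:.3f}")
```

With 20 participants (18 degrees of freedom), r=0.39 corresponds to a t of roughly 1.8, which falls between the two-tailed critical values for p=0.10 and p=0.05, in line with the trend-level result reported above.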

DISCUSSION

In the present study, we investigated how processing of semantically congruent and incongruent audiovisual stimuli is influenced by the allocation of attentional focus to either a single sensory domain (visual) or distributed across the senses (auditory and visual). Behavioral findings showed that congruent audiovisual detection performance was enhanced relative to isolated visual detection during focused visual attention, and that attention distributed across both modalities further facilitated audiovisual performance via faster response times. Performance on incongruent audiovisual stimuli, in contrast, suffered a decrement relative to visual stimuli under focused visual attention, but remarkably this accuracy decrement was resolved under distributed attention. Further, event-related potential recordings consistently revealed that processing of the visual and auditory constituents of the audiovisual stimuli was markedly reduced during distributed relative to focused attention, whether the sensory modality in the focused condition was task-relevant (visual) or task-irrelevant (auditory). Thus, these results demonstrate a novel association between improved behavioral performance and increased neural efficiency, as reflected by reduced auditory and visual processing during distributed audiovisual attention.

Previous studies using elementary auditory (tone) and visual (shape/grating) stimulus pairings have shown that perceptual performance involving multisensory stimuli is improved relative to unimodal stimuli (Giard and Peronnet, 1999, Fort et al., 2002, Molholm et al., 2002, Talsma et al., 2007, Van der Burg et al., 2011). Performance gains were consistently observed for audiovisual vs. unimodal stimuli when the auditory and visual constituents of the audiovisual stimulus belonged to the same object or had prior object categorization, but not otherwise (Fort et al., 2002, Degerman et al., 2007, Talsma et al., 2007). Studies with more complex naturalistic stimuli such as pairings of animal pictures and animal sounds (Molholm et al., 2004) and auditory speech paired with facial lip movements (Senkowski et al., 2008, Schroeder et al., 2008) have further shown that multisensory stimuli containing conflicting auditory and visual parts have a negative or null performance impact relative to unimodal performance. In the majority of studies, with the exception of two (Degerman et al., 2007, Talsma et al., 2007), multisensory performance was investigated without any manipulation of the focus of attention. The two exceptions compared performance when attention was focused unimodally or divided across an auditory and a visual task; yet their observations were made using arbitrary associations of elementary auditory and visual stimuli. Our study is unique in its investigation of selective attention either focused on a modality or distributed across the senses, and notably for inherently congruent and incongruent stimuli.

The surprising novel behavioral finding in our study is that distributing attention across both auditory and visual domains not only enhances performance for congruent (av) stimuli, but also resolves interference for incongruent (av) stimuli. Although such interference resolution was unexpected, we posit that it results from efficient top-down regulation of automatic bottom-up processing when attention is distributed. During focused visual attention to incongruent (av) stimuli, the concurrent and conflicting irrelevant auditory stream may capture (bottom-up) attention in a detrimental manner (Busse et al., 2005, Fiebelkorn et al., 2010, Zimmer et al., 2010a,b). Top-down monitoring of both sensory streams during distributed attention may minimize such interruptive bottom-up capture and may even actively suppress the interfering stream, leading to better performance. Such a regulatory mechanism may also apply for congruent (av) stimuli, wherein exclusive focus on the visual modality may weaken the automatic spatio-temporal and semantic audiovisual binding, while distributed top-down attention regulation may optimally facilitate it.

The EEG data revealed that distributed audiovisual relative to focused visual attention led to reduced neural processing for both the visual and auditory constituents of the (av) stimuli. The visual constituent showed reduced ERP amplitudes during distributed attention at early visual P1 and N1 latencies; the visual P1-like attention effect was elicited for both congruent and incongruent (av) processing, while the N1 effect was only observed for congruent (av) processing. Congruent (av) stimuli have previously been noted to generate a visual N1-like effect (Molholm et al., 2004); however, the differential modulation of early visual sensory processing by distributed vs. focused attention is a novel finding. This modulation is not unexpected, as it is consistent with the well-documented finding that limited attentional resources within a modality, as during distributed relative to focused visual attention, are associated with reduced neural responses (Lavie, 2005).

Parallel to the findings for visual processing, the auditory constituent neural signals were found to be reduced during distributed attention, at 200 ms peak latencies for congruent (av) processing and at 115 ms peak latencies for incongruent processing. Additionally, for congruent stimuli, the amplitude reduction observed for the P200 component during distributed attention was directly correlated with the faster (av) reaction times evidenced in this condition. The P200 localized to superior temporal cortex (a known site for multisensory integration: Calvert, 2001, Beauchamp, 2005); thus its neurobehavioral correlation indicates a polysensory contribution to the behavioral findings.

Notably, however, in the analysis for auditory constituent processing, the auditory signal under distributed audiovisual attention was compared to the signal during focused visual attention when the auditory information was task-irrelevant. The reduction in auditory constituent processing is a surprising finding given that attentional allocation is known to be associated with enhanced sensory processing. However, these neural results are consistent with the current behavioral findings and can be explained by viewing top-down attention as a dynamic regulatory process. An interfering, concurrent auditory stimulus in the case of incongruent (av) stimuli has been shown to capture bottom-up attention such that auditory neural processing is enhanced (Busse et al., 2005, Fiebelkorn et al., 2010, Zimmer et al., 2010a,b). We hypothesize that distributed top-down attention reduces this bottom-up capture by the interfering auditory stream and/or may even suppress the interfering stream, resulting in reduced early auditory processing and a resolution of behavioral interference effects as observed here.

Of note, we found all neural processing differences to be amplitude rather than latency modulations. That no significant latency differences were found in our comparisons may be attributed to our focus on early multisensory processing (0–300 ms), which has been shown to be rapid and convergent within unisensory cortices (Schroeder and Foxe, 2002, 2005), with modulation mechanisms based on neural response enhancement/suppression (Ghazanfar and Schroeder, 2006). Also, as a focused auditory attention manipulation was not included in the study, the current findings may be specific to comparisons of distributed audiovisual attention versus focused visual attention; modality generalization needs to be pursued in future research.

The two prior neurobehavioral studies that manipulated attention (focused unimodally or divided across auditory and visual tasks) found evidence for enhanced early multisensory ERP processing (Talsma et al., 2007) and enhanced multisensory-related fMRI responses in superior temporal cortex (Degerman et al., 2007) under divided attention. These enhancements were associated with null or negative multisensory performance (under divided relative to unimodal attention) in the former and latter study, respectively. Although these findings appear contrary to our results, these studies differ from the current investigation in: (1) the use of elementary auditory and visual stimuli with no inherent (in)congruencies, and (2) the manipulation of attention being divided across distinct auditory and visual tasks, in contrast to selective attention directed at a single task being distributed across modalities, as in the current study. These crucial study differences may be manifest in the different underlying neural changes and behavioral consequences. To note, consistent with these studies and contrary to ours, a prior behavioral study that used a task design similar to ours did not find any significant performance differences for semantically incongruent audiovisual pairings processed under unisensory vs. multisensory attention goals (Mozolic et al., 2008). This study, however, employed a two-alternative forced-choice response scheme, which is different from and more cognitively complex than the detection scheme in the present experiment. It is possible, then, that any interference resolution under distributed attention of the kind we observe may be annulled in the Mozolic et al. study by the additional conflict introduced at the level of decision making to choose the appropriate response.

Many crossmodal studies to date have reported that multisensory attention is associated with enhancements in neural processing (reviewed in Talsma et al., 2010). However, we posit that distributed audiovisual attention, which was beneficial for multisensory behavior relative to focused visual attention, is characterized by reduced sensorineural processing. Such associations between improved performance and reduced neural processing have been more commonly observed in the perceptual learning literature. Recent ERP and fMRI studies have shown that perceptual training associated with improved behavioral performance results in reduced neural processing in perceptual (Ding et al., 2003, Mukai et al., 2007, Alain & Snyder, 2008, Kelley and Yantis, 2010) and even working memory tasks (Berry et al., 2010), and that individuals with trained attentional expertise exhibit reduced responses to task-irrelevant information (Mishra et al., 2011). These training-induced plasticity studies interpret such data as a reflection of increased neural efficacy that supports improved behavioral performance. Overall, our findings show that distributing attention across the senses can be beneficial in a multisensory environment, and further demonstrate novel neural underpinnings for such behavioral enhancements in the form of reduced processing within unisensory auditory and visual cortices, as well as polysensory temporal regions.

ACKNOWLEDGEMENTS

This work was supported by the National Institutes of Health Grant 5R01AG030395 (AG) and the Program for Breakthrough Biomedical Research grant (JM). We would like to thank Jacqueline Boccanfuso, Joe Darin and Pin-wei Chen for their assistance with data collection.

AUTHOR CONTRIBUTIONS

J.M. and A.G. conceptualized the study. J.M. performed data collection and analysis. J.M. and A.G. wrote the paper.

REFERENCES

- Alain C, Snyder JS. Age-related differences in auditory evoked responses during rapid perceptual learning. Clinical neurophysiology. 2008;119:356–366. doi: 10.1016/j.clinph.2007.10.024.

- Beauchamp MS. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Current opinion in neurobiology. 2005;15:145–153. doi: 10.1016/j.conb.2005.03.011.

- Beck DM, Kastner S. Top-down and bottom-up mechanisms in biasing competition in the human brain. Vision research. 2009;49:1154–1165. doi: 10.1016/j.visres.2008.07.012.

- Berry AS, Zanto TP, Clapp WC, Hardy JL, Delahunt PB, Mahncke HW, Gazzaley A. The Influence of Perceptual Training on Working Memory in Older Adults. PLoS ONE. 2010;5:e11537.

- Van der Burg E, Talsma D, Olivers CNL, Hickey C, Theeuwes J. Early multisensory interactions affect the competition among multiple visual objects. NeuroImage. 2011;55:1208–1218. doi: 10.1016/j.neuroimage.2010.12.068.

- Busse L, Roberts KC, Crist RE, Weissman DH, Woldorff MG. The spread of attention across modalities and space in a multisensory object. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:18751–18756. doi: 10.1073/pnas.0507704102.

- Calvert GA. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cerebral cortex. 2001;11:1110–1123.

- Calvert GA, Spence C, Stein BE. The Handbook of Multisensory Processing. 2004.

- Crowley KE, Colrain IM. A review of the evidence for P2 being an independent component process: age, sleep and modality. Clinical neurophysiology. 2004;115:732–744. doi: 10.1016/j.clinph.2003.11.021.

- Degerman A, Rinne T, Pekkola J, Autti T, Jääskeläinen IP, Sams M, Alho K. Human brain activity associated with audiovisual perception and attention. NeuroImage. 2007;34:1683–1691. doi: 10.1016/j.neuroimage.2006.11.019.

- Desimone R. Visual attention mediated by biased competition in extrastriate visual cortex. Philosophical transactions of the Royal Society of London. Series B, Biological sciences. 1998;353:1245–1255. doi: 10.1098/rstb.1998.0280.

- Desimone R, Duncan J. Neural Mechanisms of Selective Visual Attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Ding Y, Song Y, Fan S, Qu Z, Chen L. Specificity and generalization of visual perceptual learning in humans: an event-related potential study. Neuroreport. 2003;14:587–590. doi: 10.1097/00001756-200303240-00012.

- Fiebelkorn IC, Foxe JJ, Molholm S. Dual mechanisms for the cross-sensory spread of attention: how much do learned associations matter? Cerebral cortex. 2010;20:109–120.

- Fort A, Delpuech C, Pernier J, Giard MH. Early auditory-visual interactions in human cortex during nonredundant target identification. Brain research. Cognitive brain research. 2002;14:20–30. doi: 10.1016/s0926-6410(02)00058-7.

- Gazzaley A, Cooney JW, McEvoy K, Knight RT, D’Esposito M. Top-down enhancement and suppression of the magnitude and speed of neural activity. Journal of cognitive neuroscience. 2005;17:507–517. doi: 10.1162/0898929053279522.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends in cognitive sciences. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. Journal of cognitive neuroscience. 1999;11:473–490. doi: 10.1162/089892999563544.

- Gomez Gonzalez CM, Clark VP, Fan S, Luck SJ, Hillyard SA. Sources of attention-sensitive visual event-related potentials. Brain topography. 1994;7:41–51. doi: 10.1007/BF01184836.

- Grave de Peralta Menendez R, Gonzalez Andino S, Lantz G, Michel CM, Landis T. Noninvasive localization of electromagnetic epileptic activity. I. Method descriptions and simulations. Brain topography. 2001;14:131–137. doi: 10.1023/a:1012944913650.

- Guthrie D, Buchwald JS. Significance Testing of Difference Potentials. Psychophysiology. 1991;28:240–244. doi: 10.1111/j.1469-8986.1991.tb00417.x.

- Kastner S, Ungerleider LG. The neural basis of biased competition in human visual cortex. Neuropsychologia. 2001;39:1263–1276. doi: 10.1016/s0028-3932(01)00116-6.

- Kelley TA, Yantis S. Neural Correlates of Learning to Attend. Frontiers in Human Neuroscience. 2010;4:216. doi: 10.3389/fnhum.2010.00216.

- Lavie N. Distracted and confused?: selective attention under load. Trends in cognitive sciences. 2005;9:75–82. doi: 10.1016/j.tics.2004.12.004.

- Macmillan NA, Creelman CD. Detection theory: A user’s guide. New York: Cambridge University Press; 1991.

- McCarthy G, Wood CC. Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalography and clinical neurophysiology. 1985;62:203–208. doi: 10.1016/0168-5597(85)90015-2.

- Michel CM, Thut G, Morand S, Khateb A, Pegna AJ, Grave de Peralta R, Gonzalez S, Seeck M, Landis T. Electric source imaging of human brain functions. Brain research. Brain research reviews. 2001;36:108–118. doi: 10.1016/s0165-0173(01)00086-8.

- Mishra J, Zinni M, Bavelier D, Hillyard SA. Neural Basis of Superior Performance of Action Videogame Players in an Attention-Demanding Task. Journal of Neuroscience. 2011;31:992–998. doi: 10.1523/JNEUROSCI.4834-10.2011.

- Molholm S, Ritter W, Javitt DC, Foxe JJ. Multisensory visual-auditory object recognition in humans: a high-density electrical mapping study. Cerebral cortex. 2004;14:452–465.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain research. Cognitive brain research. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Mozolic JL, Hugenschmidt CE, Peiffer AM, Laurienti PJ. Modality-specific selective attention attenuates multisensory integration. Experimental brain research. 2008;184:39–52. doi: 10.1007/s00221-007-1080-3.

- Mukai I, Kim D, Fukunaga M, Japee S, Marrett S, Ungerleider LG. Activations in visual and attention-related areas predict and correlate with the degree of perceptual learning. The Journal of neuroscience. 2007;27:11401–11411. doi: 10.1523/JNEUROSCI.3002-07.2007.

- Murray MM, Foxe JJ, Higgins BA, Javitt DC, Schroeder CE. Visuo-spatial neural response interactions in early cortical processing during a simple reaction time task: a high-density electrical mapping study. Neuropsychologia. 2001;39:828–844. doi: 10.1016/s0028-3932(01)00004-5.

- Reddy L, Kanwisher NG, VanRullen R. Attention and biased competition in multi-voxel object representations. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:21447–21452. doi: 10.1073/pnas.0907330106.

- Di Russo F, Martínez A, Hillyard SA. Source analysis of event-related cortical activity during visuo-spatial attention. Cerebral cortex. 2003;13:486–499.

- Di Russo F, Martínez A, Sereno MI, Pitzalis S, Hillyard SA. Cortical sources of the early components of the visual evoked potential. Human brain mapping. 2002;15:95–111. doi: 10.1002/hbm.10010.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Current opinion in neurobiology. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Cognitive Brain Research. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends in cognitive sciences. 2008;12:106–113. doi: 10.1016/j.tics.2008.01.002.

- Senkowski D, Saint-Amour D, Gruber T, Foxe JJ. Look who’s talking: the deployment of visuo-spatial attention during multisensory speech processing under noisy environmental conditions. NeuroImage. 2008;43:379–387. doi: 10.1016/j.neuroimage.2008.06.046.

- Talsma D, Doty TJ, Woldorff MG. Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cerebral cortex. 2007;17:679–690.

- Talsma D, Senkowski D, Soto-Faraco S, Woldorff MG. The multifaceted interplay between attention and multisensory integration. Trends in cognitive sciences. 2010;14:400–410. doi: 10.1016/j.tics.2010.06.008.

- Talsma D, Woldorff MG. Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. Journal of cognitive neuroscience. 2005;17:1098–1114. doi: 10.1162/0898929054475172.

- Zimmer U, Itthipanyanan S, Grent-’t-Jong T, Woldorff MG. The electrophysiological time course of the interaction of stimulus conflict and the multisensory spread of attention. European Journal of Neuroscience. 2010a;31:1744–1754. doi: 10.1111/j.1460-9568.2010.07229.x.

- Zimmer U, Roberts KC, Harshbarger TB, Woldorff MG. Multisensory conflict modulates the spread of visual attention across a multisensory object. NeuroImage. 2010b;52:606–616. doi: 10.1016/j.neuroimage.2010.04.245.