Abstract

Background

Although research participation is essential for clinical investigation, few quantitative outcome measures exist to assess participants’ experiences. To address this, we developed and deployed a survey at 15 NIH-supported clinical research centers to assess participant-centered outcomes; we report responses from 4,961 participants.

Methods

Survey questions addressed core aspects of the research participants’ experience, including their overall rating, motivation, trust, and informed consent. We describe participant characteristics, responses to individual questions, and correlations among responses.

Results

Respondents broadly represented the research population in sex, race, and ethnicity. Seventy-three percent awarded top ratings to their overall research experience and 94% reported no pressure to enroll. Top ratings correlated with feeling treated with respect, being listened to, and having access to the research team (R2=0.80-0.96). White participants more often trusted researchers (88%) than did non-white participants collectively (80%) (p<0.0001). Many participants felt fully prepared by the informed consent process (67%) and wanted to receive research results (72%).

Conclusions

Our survey demonstrates that a majority of participants at NIH-supported clinical research centers rate their research experience very positively and that participant-centered outcome measures identify actionable items for improvement of participants’ experiences, research protections, and the conduct of clinical investigation.

Introduction

Human subjects’ participation in research studies is vital to advancing medical science. Optimizing participants’ experiences while simultaneously ensuring that studies are conducted safely and ethically is critically important to the successful conduct of clinical research. Modern patient-centered approaches to selecting outcome measures look less to patient ‘satisfaction,’ which is a relative concept, dependent on the individual’s construct and perspective,1 and instead favor asking patients for their perceptions of what actually occurred, collecting actionable data enabling the care team to design specific interventions. Decades of empiric research investigating patients’ experiences in hospital settings using standardized, validated surveys that measure patients’ perceptions of their clinical care have led to performance improvement programs that have had a major impact on improving clinical care.2 As a result, such surveys have been incorporated into hospital accreditation and hospital reimbursement programs.3 In contrast, although intense interest has been expressed about whether clinical research studies are conducted according to high bioethical standards and what motivates research participants to volunteer,4-8 we are unaware of any validated surveys that obtain empiric participant-centered outcomes to judge the effectiveness of current practices or to make improvements based on participants’ experiences and perspectives. For example, using the patient-centered orientation described above, rather than asking how satisfied a participant was with the consent process, one can ask whether she or he understood the consent discussions and whether the participant’s experiences during the study matched her or his expectations developed during the recruitment and consent process.9

To address the deficiencies in measures used and approach to assessing the research participant experience, we first rigorously developed a standardized Research Participant Perception Survey (RPPS) based on themes from focus groups of research participants and research professionals.10 We then deployed the survey to research participants at 15 NIH-supported clinical research centers in the United States and validated the tool based on returned responses.9 The goal of the current study was to obtain outcome data from the survey that can be used to inform the public about participation in research studies, enhance participants’ experiences and protections, and improve the conduct of clinical research through continuous performance improvement. We recently reported a brief summary of select aspects of our study;11 the present publication reports the comprehensive and detailed description of the research.

Methods

Participating Institutions

The following institutions participated in the fielding of the survey: Baystate Medical Center, Boston University, Clinical Center at the National Institutes of Health, Duke University, Feinstein Institute for Medical Research, Johns Hopkins University, Oregon Health & Science University, Stanford University, The Rockefeller University, The University of Rochester, Tufts New England Medical Center, University Hospitals of Cleveland/Case Western Reserve University, University of Texas Southwestern Medical Center, Vanderbilt University, and Yale University.

The Questionnaire

The RPPS design, questions, and response scales have been reported previously,9 and are aligned with the structure and standards used in the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey.3 Briefly, the RPPS questionnaire included 77 questions addressing the full continuum of the research participation experience, from the time when the volunteer first learned about the research study, through the consent process and study conduct, until the completion of participation. Eleven of the questions were designed to assess participant characteristics, including race, ethnicity, primary language, previous research participation, whether they participated in the research protocol as a healthy volunteer or as a disease-affected participant (protocol type), and their motivation for joining and remaining in the study.9 Ethical, regulatory, and privacy board approvals of the survey fielding were obtained at each site as required.9

Survey Distribution and Validation

The RPPS survey was distributed to 18,890 research participants at 15 NIH-supported clinical research centers (13 sites with Clinical and Translational Science Awards (CTSAs), one General Clinical Research Center (GCRC), and the NIH Clinical Center); 92% (17,203) of mailed surveys were deliverable. Survey reliability and validity were assessed based on 4,961 returned surveys and included tests of face and content validity, a robust assessment of survey and item completion, and psychometric analysis.9 The 29% response rate was evaluated for representativeness by comparison with the HCAHPS survey upon which the RPPS was modeled, and by comparing the characteristics of the response sample with those of the overall survey sample. The survey sample at each institution consisted of either a random sample of the unselected total available sample of research participants from the two years prior to fielding, or a subset of participants that excluded specific population(s) (most commonly mental health, substance abuse, or HIV studies) according to the restrictions placed by local IRBs, privacy boards, or leadership.9 Ten of 15 participating institutions (representing 80% of the response sample) placed no restriction or only a single restriction on the inclusion of participants in the survey dataset. Assessment of non-responders through telephone contact was considered but was rejected because it would compromise the uniformity of response mode, because investigators believed it would violate participants’ autonomy to decline, and because most institutions prohibited it based on privacy considerations.

Question Scoring

“Top-Box” Scoring

The scoring for actionable and overall questions was performed in alignment with HCAHPS standards using the “top-box” response and “positive” scores. The “top box” response is the optimal or most positive response(s) option for a given question. The “positive” score is the percentage of all responses in a sample, after excluding “not applicable” or blank responses, that are “top box” responses.12
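The “positive” score defined above can be illustrated with a minimal sketch. The function name `positive_score`, the response labels, and the sample answers are hypothetical and are not taken from the RPPS or HCAHPS materials; the sketch only assumes that blank and “not applicable” responses are dropped before the percentage is computed, as the definition states.

```python
from typing import Collection, Iterable, Optional


def positive_score(
    responses: Iterable[Optional[str]],
    top_box: Collection[str],
    excluded: Collection[str] = ("not applicable",),
) -> Optional[float]:
    """Percentage of valid responses that are "top box" responses.

    `top_box` and `excluded` are expected in lowercase; responses are
    normalized (stripped and lowercased) before comparison.
    """
    valid = []
    for r in responses:
        if r is None:
            continue  # missing answer counts as blank
        cleaned = r.strip().lower()
        if not cleaned or cleaned in excluded:
            continue  # blank or "not applicable" is excluded from the denominator
        valid.append(cleaned)
    if not valid:
        return None  # score is undefined when no valid responses remain
    hits = sum(1 for r in valid if r in top_box)
    return 100.0 * hits / len(valid)


# Hypothetical answers to a frequency question whose top box is "always".
answers = ["Always", "Usually", "", "Always", "Not applicable", None, "Never", "Always"]
score = positive_score(answers, top_box={"always"})
print(f"positive score: {score:.1f}%")
```

For a question with more than one optimal option, such as the 0 to 10 overall rating, the same function can be called with `top_box={"9", "10"}`.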

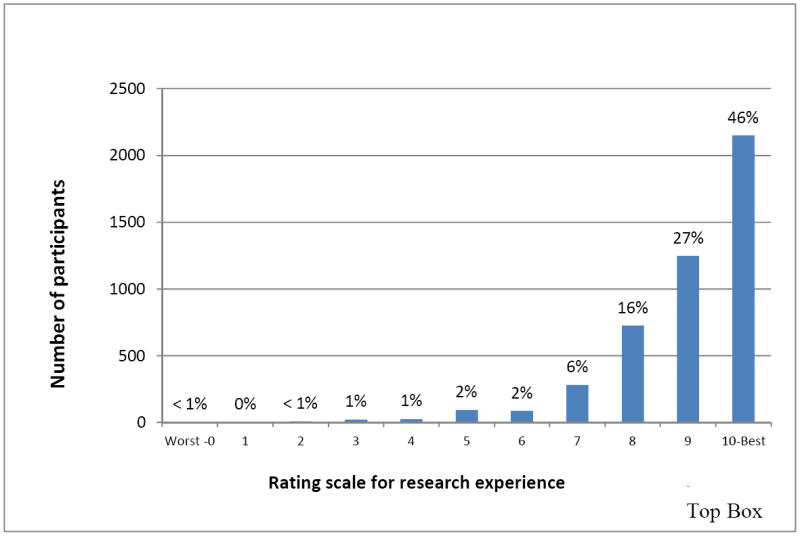

Overall Experience

Research participants were asked to rate their overall experiences on a scale from 0 (worst) to 10 (best), and to indicate whether they would recommend joining a research study to friends or family members.3,9 The “top-box” response for the overall rating was defined as either a 9 or 10, based on evidence that this definition reduces sensitivity to patient response tendency.13

Actionable Questions

Fifty-five questions were considered actionable (i.e., asking whether, and how often, specific events or activities happened). Actionable questions included whether participants felt they exercised autonomy, understood the components of informed consent and other critical information, and felt respected and valued by the research team.9 Actionable questions were first analyzed after dichotomizing the responses into “top-box” scores versus all other responses. To provide more detail, some responses were further analyzed based on an ordinal scale.

Motivation Questions

Questions addressing motivation to join, stay in, or leave a research study asked participants to use a four-point scale to rate the importance of each of 12 to 15 possible factors affecting their decisions. The rating “Very Important” was the “top-box” response for dichotomized analyses of these questions. Mean rating scores were used descriptively to compare rankings of factors between subgroups. Subgroups for these analyses were defined by whether the participant enrolled in a study that did or did not require a disease/disorder for eligibility, or did or did not involve a study drug or procedure.
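As an illustration of ranking by mean rating score, the sketch below uses the numeric coding given in the Statistical Methods section (“Very important” = 1 through “Not important at all” = 4, so a lower mean indicates greater importance). The function name `rank_motivators` and the sample ratings are hypothetical, and ties are not handled specially; this is a sketch of the idea rather than the study's analysis code.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Coding from the Statistical Methods section: lower score = more important.
SCORES = {
    "very important": 1,
    "somewhat important": 2,
    "not very important": 3,
    "not important at all": 4,
}


def rank_motivators(ratings: Dict[str, List[str]]) -> List[Tuple[int, str, float]]:
    """Return (rank, motivator, mean score) tuples, most important first."""
    means = {
        name: mean(SCORES[answer.lower()] for answer in answers)
        for name, answers in ratings.items()
        if answers  # skip motivators with no ratings
    }
    ordered = sorted(means.items(), key=lambda item: item[1])
    return [(rank, name, score) for rank, (name, score) in enumerate(ordered, start=1)]


# Hypothetical ratings from three respondents in one subgroup.
sample = {
    "To help others": ["Very important", "Very important", "Somewhat important"],
    "To earn money": ["Not very important", "Somewhat important", "Not important at all"],
    "Concern about the topic": ["Very important", "Somewhat important", "Somewhat important"],
}
ranking = rank_motivators(sample)
for rank, name, score in ranking:
    print(rank, name, round(score, 2))
```

Running the function separately on each subgroup's ratings yields per-subgroup rankings that can be compared side by side.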

Dimensions

To analyze broad themes and facilitate summarizing the results, related actionable questions were grouped into validated conceptual domains or dimensions. Three dimensions have elements in common with perception surveys of medical care (Respect for Patient Preferences, Education/Information/Communication, and Coordination of Care).4 Two novel dimensions, Informed Consent and Trust, captured information fundamental to clinical research participation.9

Statistical Methods

The main descriptive statistics for all respondents and for various subgroups were frequencies of individual questions, or cross-tabulations of pairs of questions. To compare the proportions of “top box” responses for a question (the outcome) between two groups, Fisher’s exact test was used. If the groups defined by the second question retained their original ordinal scale, logistic regression was used to compute a test for trend in the proportions, and if more than two groups were defined by the second question but the groups were unordered (e.g., race), the extension of Fisher’s exact test was used (test of homogeneity). To assess the correlation between two variables, both on an ordinal scale, Spearman’s nonparametric correlation was computed. For sets of possible motivators for joining or remaining in a study, motivators were ranked (either overall or within subgroups) by comparing their mean scores, where a response of “Very important” was scored as ‘1,’ “Somewhat important” as ‘2,’ “Not very important” as ‘3,’ and “Not important at all” as ‘4.’ To assess which small set of questions or factors best predicted the primary outcome of participants’ “overall experience” (using the 0 to 10 scale), forward stepwise multiple regression was used, with all actionable research participant experience questions asked on a four-point scale. Items entered the model if p<0.05 and were removed if p>0.10. All p-values are two-sided, with p<0.05 considered statistically significant.
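For readers unfamiliar with Fisher’s exact test, the following is a minimal pure-Python sketch of the two-sided test for a 2×2 table of “top box” versus other responses in two groups. It assumes the common convention of summing the probabilities of all tables that are no more likely than the observed one; the function name and the counts are hypothetical, and the sketch does not cover the extension to more than two groups or the software actually used in the study.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups; columns are "top box" and "other" responses.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x top-box responses in group 1,
        # holding all row and column totals fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum every table that is as likely as, or less likely than, the observed one.
    # The small relative tolerance guards against floating-point ties.
    p = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= observed * (1 + 1e-7)
    )
    return min(p, 1.0)


# Hypothetical counts: group A has 44 top-box and 6 other responses,
# group B has 40 top-box and 10 other responses.
p_value = fisher_exact_two_sided(44, 6, 40, 10)
print(f"two-sided p = {p_value:.4f}")
```

Exact integer arithmetic via `math.comb` keeps the sketch dependency-free; for large samples a statistics library would normally be preferred.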

Results

Demographics

A total of 4,961 completed surveys were received from the 15 participating centers. The ethnic composition of the aggregate sample was 5% Hispanic. White participants made up the largest racial group (85%), followed by African-Americans (12%), Asians (3%), Native Americans or Alaskan Natives (2%), and Native Hawaiians or Pacific Islanders (1%). The demographics of the response set have been previously reported.9 The racial and ethnic characteristics of the response sample were comparable to those of the overall research population to whom the survey was mailed (mailing sample), based on data from the eight institutions able to provide data on race and ethnicity for their entire sample population (Table 1). Gender was reported from 4 of the 15 centers; participants from these 4 centers represented approximately 20% of both the mailing and response samples. Females made up 61% of the mailing sample and 60% of the response sample from these centers. Thirty-seven percent of respondents reported participating as healthy volunteers; the remainder participated based on having a disease or disorder being studied. Forty-six percent of participants received a new drug or device or experienced a study procedure.9

Table 1.

Demographics of research participants to whom the survey was sent compared to those who responded.*

| Participant characteristics | Mailed sample* (N) | Mailed sample* (%) | Response sample for the centers providing ‘mailing sample’ data (N) | Response sample for the centers providing ‘mailing sample’ data (%) |
|---|---|---|---|---|
| Ethnicity | | | | |
| Hispanic | 784 | 6.70% | 120 | 6.70% |
| Non-Hispanic | 10,848 | 93.30% | 1,684 | 93.30% |
| Race | | | | |
| American Indian or Alaskan Native | 98 | 0.7% | 35 | 1.5% |
| Asian | 474 | 3.3% | 79 | 3.4% |
| Black or African American | 1,509 | 10.4% | 210 | 9.2% |
| Native Hawaiian/Pacific Islander | 45 | 0.3% | 13 | 0.6% |
| White | 10,909 | 74.8% | 1,958 | 85.3% |
| More than one race | 827 | 5.7% | | |
| Unknown/missing | 716 | 4.9% | | |
| Gender | | | | |
| Female | 1,991 | 54.9% | 553 | 56.8% |
| Male | 1,507 | 41.5% | 392 | 40.2% |
| Missing | 130 | 3.6% | 29 | 3.0% |

\*Data are from 6 centers that provided the data on ethnicity, 8 centers that provided data on race, and 4 centers that provided data on gender.

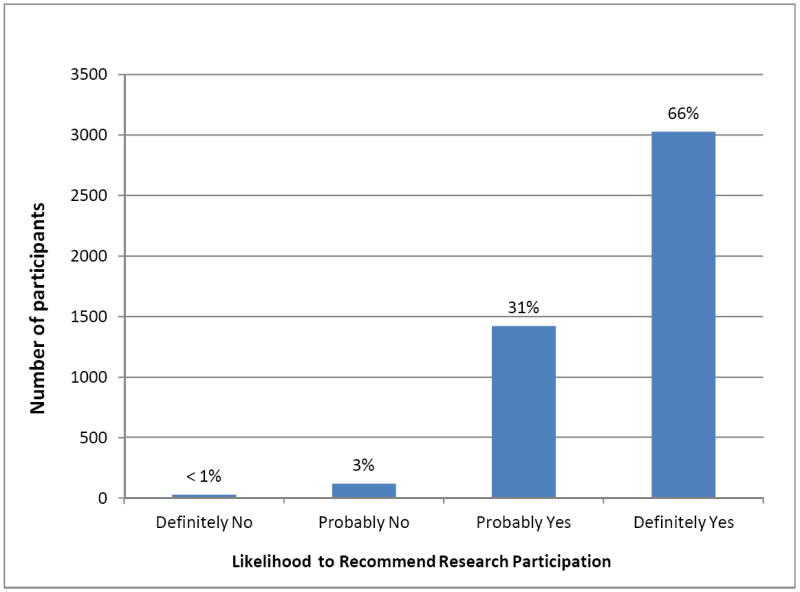

Overall Experience and Willingness to Recommend Participation to Others

As we previously reported,11 in aggregate, 73% of participants (range across centers, 61-81%) rated their overall research experience very highly (“9” or “10”) (Figure 1) and 66% reported they would “definitely” recommend research participation to friends or family (Figure 2). Participants’ overall ratings did not differ based on whether they participated as healthy volunteers or disease-affected individuals (p=0.09), whether their protocols involved investigational agents or procedures (p=0.92), or how they learned about the study (p=0.27). Participants were more likely to rate their overall experiences very highly when they: trusted the investigators and nurses, felt treated with respect by the investigators and nurses, felt that investigators and nurses listened to them, received understandable answers from investigators and nurses in response to questions, and were able to meet with the principal investigator as much as they wanted (all p<0.0001 for trend; Table 2). In a multiple regression analysis, participants’ answers to the question “How often were you treated with courtesy/respect by the investigator/doctor?” accounted for 82% of the variance in participants’ overall ratings of their research experiences, with an adjusted R2 value of 0.81. Collectively, the answers to six questions incorporating the above themes increased the adjusted R2 to 0.96 (Table 3).

Figure 1.

Participants’ ratings of their overall research experience (N=4961)

Figure 2.

Participants’ ratings of their likelihood to recommend research participation to family or friends (N=4961).

Table 2.

Relationship of “Top Box” rating of the overall research experience and respondent characteristic or responses to specific actionable questions (N=3180-4961)

| Respondent characteristic or question response option (percentage) | Percentage with a particular characteristic or question response option who rated their overall research experience “Top Box”* | P-value |
|---|---|---|
| Sample Characteristics | | |
| Study limited to participants with certain disease | | |
| Yes (63%) | 73 | Exact P=0.09 |
| No (37%) | 74 | |
| Study involved drug or new device/procedure | | |
| Yes (49%) | 74 | Exact P=0.92 |
| No (51%) | 74 | |
| Dimension: Communication/Information/Education | | |
| Research doctor listened carefully | | |
| Always (86%) | 79 | Trend P<0.0001 |
| Usually (11%) | 41 | |
| Sometimes/Never (3%) | 26 | |
| Nurse listened carefully | | |
| Always (97%) | 79 | Trend P<0.0001 |
| Usually (1%) | 35 | |
| Sometimes/Never (1%) | 18 | |
| Research doctor answered questions so I could understand | | |
| Always (87%) | 79 | Trend P<0.0001 |
| Usually (11%) | 38 | |
| Sometimes/Never (2%) | 31 | |
| Nurse answered questions so I could understand | | |
| Always (91%) | 78 | Trend P<0.0001 |
| Usually (7%) | 33 | |
| Sometimes/Never (1%) | 21 | |
| Dimension: Respect for Participant Preferences | | |
| Principal investigator treated me with courtesy and respect | | |
| Always (94%) | 77 | Trend P<0.0001 |
| Usually (5%) | 23 | |
| Sometimes/Never (1%) | 26 | |
| Research nurse treated me with courtesy and respect | | |
| Always (96%) | 76 | Trend P<0.0001 |
| Usually (4%) | 19 | |
| Sometimes/Never (<1%) | 19 | |
| Met with principal investigator as much as I wanted to | | |
| Always (61%) | 84 | Trend P<0.0001 |
| Usually (28%) | 60 | |
| Sometimes/Never (11%) | 50 | |
| Dimension: Trust | | |
| Had confidence and trust in the principal investigator | | |
| Always (90%) | 78 | Trend P<0.001 |
| Usually (8%) | 33 | |
| Sometimes/Never (1%) | 27 | |
| Had confidence and trust in the research nurse | | |
| Always (90%) | 78 | Trend P<0.0001 |
| Usually (9%) | 32 | |
| Sometimes/Never (2%) | 20 | |
| Dimension: Informed Consent | | |
| Felt pressure from the research staff to join | | |
| Never (94%) | 75 | P<0.001 |
| Not Never** (6%) | 46 | |
| Risks included in the informed consent form | | |
| Always (81%) | 80 | P<0.001 |
| Not Always*** (19%) | 49 | |
| Study details included in the informed consent form | | |
| Yes (80%) | 80 | P<0.001 |
| No (20%) | 52 | |
| Had enough time before signing consent | | |
| Yes (79%) | 79 | P<0.001 |
| No (21%) | 49 | |
| Informed consent form understandable | | |
| Always (78%) | 79 | P<0.001 |
| Not Always*** (22%) | 54 | |
| Prepared for what to expect by informed consent form | | |
| Completely (67%) | 83 | P<0.001 |
| Mostly + Somewhat + Not at all (33%) | 53 | |

\* “Top-Box” ratings were either a “9” or “10” on a 10-point scale, with 10 being best.

\*\* “Not Never” combines responses “Sometimes” + “Usually” + “Always.”

\*\*\* “Not Always” combines responses “Usually” + “Sometimes” + “Never.”

Table 3.

Final multiple regression model with “overall research participant experience” as the outcome

| Items included in model* | R2 | Adjusted R2 for each additional question in the model** |
|---|---|---|
| Treated with courtesy and respect by the investigator or research doctor | 0.816 | 0.809 |
| Prepared for what happened by information and discussions provided before participation | 0.896 | 0.888 |
| Research doctor or investigator listened carefully | 0.939 | 0.932 |
| Prepared for what to expect by informed consent documents | 0.950 | 0.942 |
| Knew how to reach research team | 0.961 | 0.953 |
| Able to reach member of research team when needed | 0.968 | 0.959 |

\* Item phrases summarize the content of the question and not its exact wording in the questionnaire.

\*\* Adjusted R2 serves as an indicator of the ability of a subset of items in the questionnaire to explain overall research participant experiences (adjusted R2 = 0.959, F = 4.44, p<0.046).
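The text does not state how the adjusted R2 values in Table 3 were computed. As a reminder of the conventional definition, and assuming the standard formula was used, the adjustment penalizes R2 for the number of predictors p in a model fitted to n observations (both symbols are introduced here for illustration and are not reported in the table):

```latex
R^2_{\mathrm{adj}} = 1 - \left(1 - R^2\right)\,\frac{n - 1}{n - p - 1}
```

Because the penalty grows with p, adding an item to the model raises the adjusted value only when the gain in R2 outweighs the loss of a degree of freedom.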

Informed Consent

Nearly all participants reported experiencing no pressure either to join or to stay in the study, and virtually all participants understood they could leave the study if they wanted (Table 2). Approximately 80% of participants felt that informed consent documents and discussions provided understandable information that explained the study, including the risks. However, only 67% of respondents felt “completely prepared” for what to expect in the study by the consent form. Participants’ feelings about the quality of the informed consent process showed a strong positive relationship to their overall research experience (Table 2).

Motivation to Join and Remain in a Study

The motivation to join a research study most often rated as ‘very important’ was “To help others” (64%), followed by “Concern about the topic” (56%). These remained the top motivations regardless of participant or study type (Table 4). The next most important motivations for participants with a disease or in a therapeutic study were “To find out more about my disease” and “To gain access to new treatment,” whereas healthy volunteers and those not receiving experimental therapies rated “Because of a center’s reputation” and “To obtain education/learning” as the next most important motivations. Only 14% of participants rated “To earn money” as ‘Very important,’ with healthy volunteers ranking it 6th in importance; participants with specific diseases or participating in therapeutic studies ranked it 12th. “To obtain free health care” was “Very important” to only 12% of participants. Among motivations to remain in a study, participants identified “Feeling valued as a partner” and “Perceived benefits” as important factors (Table 4).

Table 4.

Ranked weighted averages of participants’ ratings of the factors influencing their decisions to join or to stay in a research study (N= 4000-4300)

| Factors influencing decision | Join: healthy volunteer | Join: disease-affected volunteer | Join: study involves drug, device, or procedure (No) | Join: study involves drug, device, or procedure (Yes) | Remain: healthy volunteer | Remain: disease-affected volunteer | Remain: study involves drug, device, or procedure (No) | Remain: study involves drug, device, or procedure (Yes) |
|---|---|---|---|---|---|---|---|---|
| To help others | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 |
| Concern about the topic | 2 | 2 | 2 | 2 | 2 | 3 | 2 | 2 |
| Because of center’s reputation | 3 | 6 | 4 | 5 | 4 | 7 | 4 | 7 |
| To obtain education/learning | 4 | 5 | 3 | 6 | 5 | 5 | 3 | 6 |
| To find out more about my disease | 7 | 3 | 5 | 4 | 8 | 1 | 6 | 4 |
| To gain access to new treatment | 8 | 4 | 6 | 3 | 10 | 6 | 8 | 5 |
| Because no other options available | 11 | 7 | 11 | 7 | 13 | 10 | 12 | 10 |
| To obtain free healthcare | 9 | 10 | 10 | 9 | 12 | 12 | 13 | 12 |
| Because of prior positive experience | 5 | 8 | 7 | 8 | 7 | 11 | 9 | 11 |
| To earn money/payment | 6 | 12 | 8 | 12 | 9 | 15 | 11 | 15 |
| Because of family influence | 10 | 11 | 9 | 11 | 14 | 14 | 14 | 14 |
| Because of caregiver encouragement | 12 | 9 | 12 | 10 | 15 | 13 | 15 | 13 |
| Because of relationship with the team | - | - | - | - | 11 | 9 | 10 | 9 |
| Because of improved health | - | - | - | - | 6 | 4 | 7 | 3 |
| Because of feeling valued | - | - | - | - | 3 | 8 | 5 | 8 |

Sharing Research Study-Related Data with Participants

As we previously reported,11 twenty-three percent of participants reported receiving a summary of research results. Of those who did not receive a summary, 85% indicated that they would have liked to have received one. Similarly, 65% of all participants wanted to receive the results of their routine lab studies. When asked to rate items that “Would be important in a future study,” 62% of respondents rated as “Very important” the “Sharing of routine test results with me or my doctor” and 72% gave the same rating to having a “Summary of the overall research results shared with me.”

Trust

As we previously reported,11 overall, 86% of respondents trusted the research team completely. Of these, many felt that they were treated with courtesy and respect (99%), were treated as valued partners (79%), and were listened to carefully by investigators (93%) or research coordinators (95%). Based on historical reports of human protections violations involving minority populations14 and a general perception of persisting mistrust of medical research by minority populations15,16 we analyzed trust data of subgroups by race and ethnicity. Among the 5 racial/ethnic groups analyzed, whites had a somewhat higher level of trust for the research team (88% “top-box” responses; p<0.0001) than did the 4 non-white groups collectively, whose percentages did not differ significantly (p=0.88), ranging narrowly from 78 to 82% (Table 5).

Table 5.

Subgroup analyses for trust and confidence in the research team

| Race/Ethnic Group (n=4407) | Percentage with “top-box” Overall Experience rating responding “Always” to having trust/confidence in both investigator and nurse* | P-value |
|---|---|---|
| White (n=3503) | 88 | p<0.0001 |
| Asian (n=120) | 79 | p=0.88 across Non-White groups |
| African-American (n=465) | 80 | |
| More than one race (n=111) | 78 | |
| Hispanic (n=208) | 82 | |
| All respondents | 86 | |

\* Responses were dichotomized into ‘always’ had trust and confidence in the research team, or ‘not always’.

“Top-Box” response rates among non-white groups were not significantly different from each other (p = 0.88, Fisher’s exact test), but were lower as a group than the “Top-Box” response rate of whites (p<0.0001).

Generalizability of Findings

The generalizability of the results of our study may be limited by the response rate and the types of institutions that participated. The response rate in our study, 29%, is similar to the ~33% national response rate in the same year for the HCAHPS hospital survey, which is used to judge the quality of institutional performance as the basis for reimbursement by the Centers for Medicare and Medicaid Services (CMS),17 but somewhat lower than the rates for mailed surveys (35 and 38%) in the validation studies of the HCAHPS survey.18,19 We did not attempt to contact non-responders using financial incentives and telephone contacts, as these methods have been demonstrated to introduce positive bias.18,19 In fact, HCAHPS makes a downward adjustment of the favorable response rates of telephone surveys to better match them to mail surveys.19 Moreover, our population was similar in gender, age and education to the mailed response population in the large HCAHPS validation study, in which consistency of survey mode (e.g., mailed surveys) was more important in minimizing bias than were patient-mix adjustments to account for nonresponse bias.9,18

In an attempt to detect potential response bias, we examined whether the centers differed in their overall ratings according to response rate. The percentages of participants rating their overall experiences as very positive in the two institutions with the highest response rates (74 and 70%, respectively) were 75 and 69%, which are very similar to the favorable ratings of 74 and 67% in the institutions with the lowest response rates (18 and 23%, respectively), as well as very similar to the overall rate for the entire study population of 73%. When dichotomized into groups with response rates above and below the mean value, the positive overall ratings were 75 and 71%, respectively. Responders from the institutions with above average responses were somewhat more likely than those in the lower responding group to recommend joining a research study (69 vs. 62%).

We also examined whether later responders to the two-wave mailing were different from earlier responders based on a presumption that late responders may share some characteristics of non-responders. Approximately 77% of respondents submitted their responses after the first request and the remainder after the second. The very favorable scores for overall ratings were 75 and 67% in those responding to the initial and subsequent request, respectively, and the likelihood of recommending participation to others was 67 and 62%, respectively. Thus, based on these internal data, whereas responses varied slightly by institutional response rate and timing of responses, the broad conclusions of our study are the same across these variables.

To further examine the generalizability of our findings, we compared the demographics of the respondents to those of the sample populations. We obtained gender, age, ethnicity and race data on the mailed research population sample from 4, 6 and 8 of our 15 participating centers respectively (some centers did not have all variables available) representing 62-77% of the total mailed sample, and compared them with the demographics of the response sample from those same institutions. The results are shown in Table 1 and demonstrate very similar ethnicity and gender distributions in those receiving the survey compared to those responding to the survey. The racial data are also similar; a somewhat higher percentage of white participants responded than were mailed the survey, but this higher response rate may reflect, at least in part, a smaller percentage of “More than one race” or “Unknown/missing” responses for race in the response sample.

Our study was conducted primarily at major academic medical centers that focus on conducting clinical research and that were funded through the NIH GCRC and CTSA programs and the NIH Intramural Program. Thus, the results may not be generalizable beyond this cohort. However, since 61 CTSA institutions are now broadly distributed across the U.S., they represent an important segment of clinical research conducted in the U.S. to which the work may be generalizable.

Discussion

Our study provides comprehensive outcome data on how 4,961 participants from 15 different NIH-supported clinical research centers perceived their research experiences. We found that nearly three-quarters of respondents rated overall research experiences very high, and that two-thirds would definitely recommend participating in research studies to friends and family members. We also found that two-thirds of respondents felt fully prepared by the consent process for their participation, and nearly all respondents felt free from pressure to join the study and knew that they were free to leave the study at will. While these data are in general heartening with regard to most participants’ experiences, we consider it troubling that approximately one-third of participants did not feel fully prepared by the informed consent process and approximately one-fifth did not fully understand the consent document. This information can be used to inform process improvement, and the survey can then be redeployed to measure the efficacy of the interventions using these participant-centered measures.

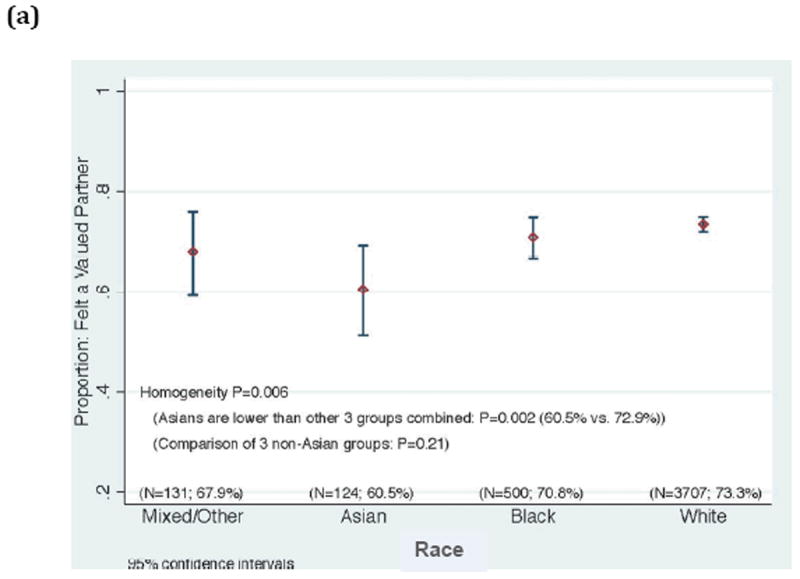

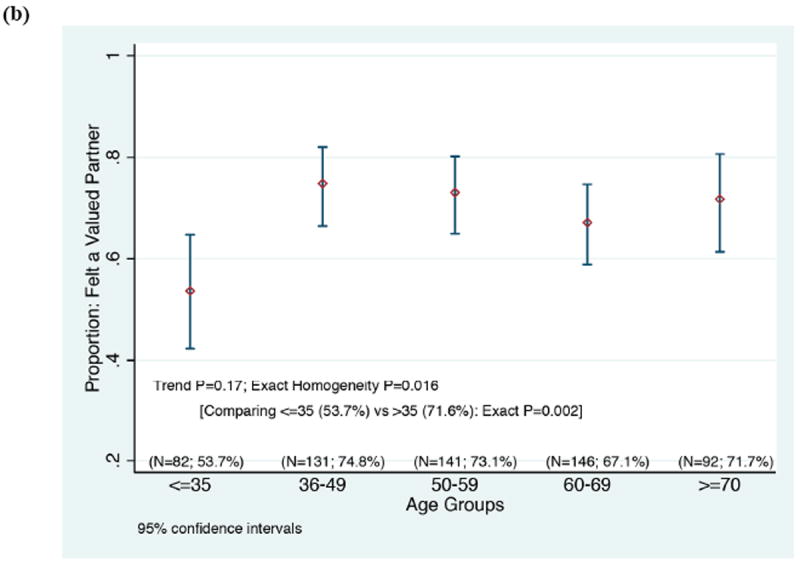

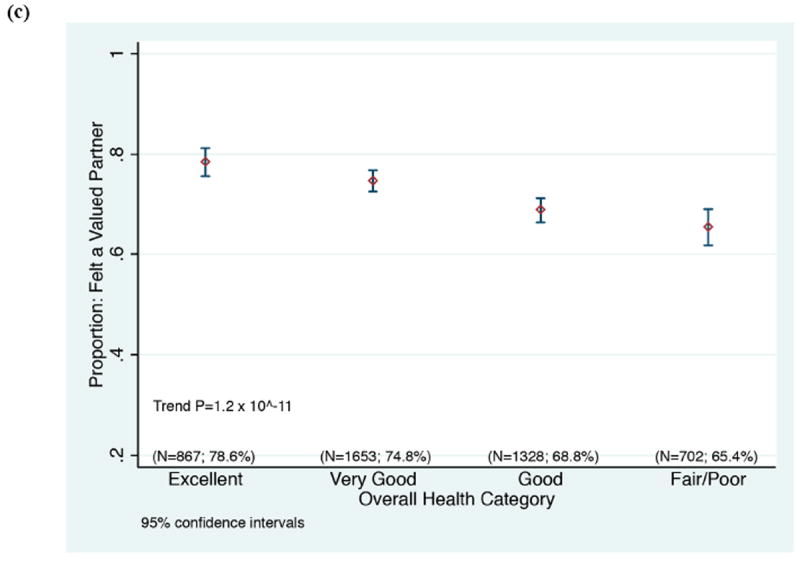

Based on the multiple regression analysis, we hypothesize that the greatest benefit in improving participants’ perceptions will come from investigators and staff demonstrating respect for participants as valued partners in the research endeavor, listening carefully to them, sharing research data with them, making sure that they know how to reach members of the research team, being available when they make contact, and making sure that participants fully understand what to expect when enrolling in a study (Table 3). Since feeling valued as a partner in the research undertaking was highly correlated as an individual question with participants’ ratings of their overall experience across education and protocol type, we note that younger participants, Asian participants, and participants in poorer health who gave their experiences high overall ratings were less likely than other groups to feel like valued partners (Figure 3). Targeted additional research is needed to validate whether the underpinnings of the positive experiences in these groups are indeed different.

Figure 3.

Correlation between rating the overall experience with an optimal, top box score (“9” or “10”), and rating “Feeling like a valued partner in research” with a top box score (“always”), according to a) race, b) participant age, and c) participant’s self-rating of his/her overall health.

Our data also indicate that altruism is a major motivation for research participants across race, education, and protocol type, providing support for similar conclusions from previous smaller studies.4,5,20,21 Of note, even participants whose diseases were being treated as part of the study ranked altruism as their highest motivation, although they also considered personal benefit very important, a combination that has been termed “conditional altruism.”10,21 We conclude that focusing on participant altruism provides an important way to engage the public in the research partnership and improve both participation rates and research experiences.

A large majority of participants want to receive information about the results of the study, perhaps reflecting their desire to be considered valued members of the research team and to assess the impact of their altruistic action. While returning aggregate study results to participants raises a number of potentially complex issues of logistics and participant education, and the return of personal research results is often limited by the regulatory restrictions of the Clinical Laboratory Improvement Act, we believe that it deserves serious attention as standard policy.22-25 In recent years, regulators have required that aggregate clinical trial data be shared on public websites;26 however, only recently has systematic access for the participant been entertained.27 Providing even basic interim and/or final study summaries, signed by the lead investigator, would demonstrate respect for participants’ contribution to, and investment in, the research.

Given the concerns expressed in the Institute of Medicine’s 2010 report about the public’s trust in the research enterprise,28 the relatively high percentage of participants expressing trust in research team members is reassuring. Nonetheless, the difference in trust experienced by white participants versus non-white racial groups as a whole raises important questions that require further study. Interestingly, the level of trust among African-American participants was similar to that of other non-white participants, despite concerns that mistrust of medical research is especially prevalent among African-Americans.15 The increasing consideration given recently to patient-centered outcomes29 and to community participation in clinical research30 further indicates the need for performance improvement in clinical investigation. Similarly, concerns about conducting research among the under- and uninsured may also implicate trust, both trust in individual investigators and trust in systems.31,32 Although our study was unable to analyze responses by socioeconomic status (SES) proxies (education, income, or insurance status), future studies should explore the role of trust in differences in research participation experiences among lower SES and safety-net populations. This might be particularly important as researchers examine clinical research conducted among community provider organizations, as recruitment and retention practices may be very different outside of the major NIH-supported institutions. The increasing consideration given to patient-centered outcomes and community participation in the clinical research endeavor signals both a need and an opportunity for using participant-centered outcomes to drive performance improvement in clinical investigation.

Given the demonstrable value of empiric evidence in science, it is surprising that there have been so few previous attempts to obtain broad-based data from research participants using validated survey instruments. One might have thought that the intense focus on the ethical principles, rules, and oversight of research involving human subjects after the Nuremberg Code in 1947,33 the description of unethical human research studies by Beecher in 1966,34 and the public outcry in 1972 in response to revelations about the Tuskegee Public Health Service Study14 would have stimulated an attempt to learn systematically about research participants’ perceptions first-hand. Klitzman and Appelbaum have explained the decision of the U.S. Department of Health, Education, and Welfare in the 1970s to institute a “prospective regulatory approach” through IRB review as reflecting the need for immediate action in response to the abuses that were identified. This prospective approach led to a focus on process indicators, such as properly produced and executed informed consent forms, as well as procedures for monitoring and auditing of studies. These measures provide valuable safeguards against unethical human investigation, but they do not assess whether the desired outcome has been achieved as perceived by the research participant. To address this deficiency, they called for instituting retrospective analysis based on “objective, validated questionnaires” to assess “how well subjects understood the study or whether they were distressed by the research procedures.”35 We believe that this need remains unmet, and that our study and the developed survey contribute to progress on this important goal.

Our study has several limitations. As with all survey research, non-response bias may affect the results. We could not assess the potential impact of non-response bias directly because of privacy concerns. Our survey completion response rate was, however, comparable to that of typical hospital surveys with demonstrated utility in patient-centered process improvement,9 and our assessments of late responders and of high- and low-responding centers did not reveal any strong trends toward non-response bias. Another limitation may be that individuals overstated their altruistic motives to appear more socially acceptable. To minimize this effect, we chose an anonymous survey format and a mode of administration (i.e., mail rather than telephone or face-to-face methods) that were intended to encourage candor and were consistent with analogous patient-care surveys.

Since our study was conducted at NIH-supported clinical research centers, the results may not be broadly representative of clinical research conducted throughout the U.S. These institutions tend to focus on clinical investigation and provide resources to support such research. For example, many participating centers were accredited by the Association for the Accreditation of Human Research Protection Programs9 and 80% of CTSAs utilize Research Subject Advocates to enhance informed consent and other participant protections.36 Since the survey instrument we developed is now publicly available (http://www.nationalresearch.com/research-participant-survey/), future studies can address this important issue directly. Our study originated from a bilateral collaboration between the NIH Clinical Center and the Rockefeller University General Clinical Research Center prior to the establishment of the NIH Clinical and Translational Science Award (CTSA) program, but the charge to the CTSAs to improve the clinical research enterprise, and the resources made available through the CTSA program to support this project, were extremely important in enabling the study. The recent Institute of Medicine report reviewing the CTSA program30 emphasized the need for developing a “learning health care system,”37 and we believe that the participant outcomes obtained with the use of our survey are an important component of a “learning clinical research system.” Broad participation by the 61 currently funded CTSAs and other institutions in using and refining this survey questionnaire would provide both robust benchmarking data and opportunities to identify and disseminate best practices. These are vital elements in continuous performance improvement of the clinical research enterprise, the ultimate goal of this research.

Acknowledgments

Supported in part by grants UL1 TR000043, UL1-TR000157, UL1TR000128, UL1TR000093, 1-U54-AI108332-01, UL1TR000445, UL1 RR024145, UL1 TR001064, L1 TR000073, UL1TR000439, UL1TR001105, UL1 RR024160, UL1-RR-024128, and UL1-TR-00111 from the National Center for Research Resources (NCRR) and the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health.

The authors thank the following individuals for their collaboration in executing the study:

Hal Jenson, MD, MBA, and Marybeth Kennedy, RN, Baystate Medical Center, Tufts CTSA, Springfield, Massachusetts

Kimberly Lucas-Russell, MPH, Boston University, Boston, Massachusetts

Wesley Byerly, PharmD, Laura Beskow, PhD, MPH, and Jennifer Holcomb, MA, Duke University School of Medicine, Durham, North Carolina

Cynthia Hahn, Feinstein Institute for Medical Research, Manhasset, New York

Julie Mitchell, Oregon Health Sciences University, Portland, Oregon

Nicholas Gaich, Stanford University, SPECTRUM, Stanford, California

Elizabeth Martinez, BSN, and Cheryl Dennison Himmelfarb, PhD, RN, The Johns Hopkins University Medical Center, Baltimore, Maryland

Nancy Needler, Eric P. Rubinstein, JD, MPH, University of Rochester, Rochester, New York

Veronica Testa, RN, Tufts New England Medical Center, Boston, Massachusetts

Andrea Nassen, RN, MSN, University of Texas Southwestern Medical Center, Dallas, Texas

Carol Fedor, RN, ND, Valerie Wiesbrock, MA, University Hospitals of Cleveland, Cleveland, Ohio

Kirstin Scott, MPH, and Jan Zolkower, MSHL, Vanderbilt University, Nashville, Tennessee

Jean Larson, RN, Yale University, New Haven, Connecticut

Michael Murray, PhD, Sarah Fryda, and Sarah Winchell, NRC Picker, Inc, Toronto, Ontario, Canada, and Oshkosh, Wisconsin

Footnotes

Conflict of Interest

National Research Corporation Picker, Inc. provided survey fielding, validation, and reporting expertise as part of a contract to the Rockefeller University. Dr. Yessis was formerly employed by NRC Picker during the design of the research. Rockefeller University has granted a royalty-free license to National Research Corporation Picker to administer the survey commercially. Neither Dr. Yessis, nor any of the authors nor their hosting institutions has any financial interest in National Research Corporation Picker, Inc. (formerly NRC Picker, Inc.) or any future commercial survey.

References

- 1.Crow R, Gage H, Hampson S, et al. The measurement of satisfaction with healthcare: implications for practice from a systematic review of the literature. Health Technol Assess. 2002;6(32):1–244. doi: 10.3310/hta6320.

- 2.Isaac T, Zaslavsky AM, Cleary PD, Landon BE. The relationship between patients’ perception of care and measures of hospital quality and safety. Health Serv Res. 2010 Aug;45(4):1024–1040. doi: 10.1111/j.1475-6773.2010.01122.x.

- 3.Centers for Medicare and Medicaid Services. HCAHPS: Patients’ Perspectives of Care Survey, 2011. [May 25, 2011]; http://www.cms.gov/HospitalQualityInits/30_HospitalHCAHPS.asp.

- 4.Madsen SM, Mirza MR, Holm S, Hilsted KL, Kampmann K, Riis P. Attitudes towards clinical research amongst participants and nonparticipants. J Intern Med. 2002 Feb;251(2):156–168. doi: 10.1046/j.1365-2796.2002.00949.x.

- 5.Hallowell N, Cooke S, Crawford G, Lucassen A, Parker M, Snowdon C. An investigation of patients’ motivations for their participation in genetics-related research. J Med Ethics. 2010 Jan;36(1):37–45. doi: 10.1136/jme.2009.029264.

- 6.Lee LM, Yessis J, Kost RG, Henderson DK. Managing clinical risk and measuring participants’ perceptions of the clinical research process. In: Ogibene FO, Gallin JI, editors. Principles and Practice of Clinical Research. 3rd ed. Boston: Elsevier; 2012. pp. 573–588.

- 7.Ryan KJ, Brady JV, Cooke RE, et al. The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects. 1979 http://www.hhs.gov/ohrp/humansubjects/guidance/belmont.html.

- 8.Stunkel L, Grady C. More than the money: a review of the literature examining healthy volunteer motivations. Contemp Clin Trials. 2011 May;32(3):342–352. doi: 10.1016/j.cct.2010.12.003.

- 9.Yessis JL, Kost RG, Lee LM, Coller BS, Henderson DK. Development of a Research Participants’ Perception Survey to Improve Clinical Research. Clin Transl Sci. 2012 Dec;5(6):452–460. doi: 10.1111/j.1752-8062.2012.00443.x.

- 10.Kost R, Lee L, Yessis J, Coller B, Henderson D. Assessing Research Participants Perceptions of their Clinical Research Experiences. Clinical and Translational Science. 2011;4(6):403–413. doi: 10.1111/j.1752-8062.2011.00349.x.

- 11.Kost RG, Lee LM, Yessis J, Wesley RA, Henderson DK, Coller BS. Assessing Participant-Centered Outcomes to Improve Clinical Research. NEJM. 2013 Dec 5;369(23):2179–2181. doi: 10.1056/NEJMp1311461.

- 12.CMS.gov. CAHPS® Hospital Survey. [November 25, 2012]; Summary Analyses. http://www.hcahpsonline.org/SummaryAnalyses.aspx.

- 13.Damiano P, Elliott M, Tyler M, Hays R. Differential use of the CAHPS® 0–10 global rating scale by Medicaid and commercial populations. Health Serv Outcomes Res Method. 2004 Dec 01;5(3-4):193–205.

- 14.Emanuel E, Couch R, Arras J, Moreno J, Grady C. Part I – Scandals and Tragedies of Research with Human Participants: Nuremberg, the Jewish Chronic Disease Hospital, Beecher, and Tuskegee. In: Emanuel E, Couch R, Arras J, Moreno J, Grady C, editors. Ethical and Regulatory Aspects of Clinical Research. Baltimore: Johns Hopkins University Press; 2003. pp. 1–23.

- 15.Freimuth V, Quinn S, Thomas S, et al. African Americans’ views on research and the Tuskegee Syphilis Study. Soc Sci Med. 2001;52(5):797–808. doi: 10.1016/s0277-9536(00)00178-7.

- 16.Wendler D, Kington R, Madans J, et al. Are racial and ethnic minorities less willing to participate in health research? PLoS Med. 2006 Feb;3(2):e19. doi: 10.1371/journal.pmed.0030019.

- 17.CMS. Summary of HCAHPS Survey Results January 2010 to December 2010 Discharges. 2011 http://www.hcahpsonline.org/files/HCAHPS%20Survey%20Results%20Table%20(Report_HEI_October_2011_States).pdf.

- 18.Elliott MN, Zaslavsky AM, Goldstein E, et al. Effects of Survey Mode, Patient Mix, and Nonresponse on CAHPS® Hospital Survey Scores. Health Services Research. 2009;44(2p1):501–518. doi: 10.1111/j.1475-6773.2008.00914.x.

- 19.de Vries H, Elliott MN, Hepner KA, Keller SD, Hays RD. Equivalence of mail and telephone responses to the CAHPS Hospital Survey. Health Serv Res. 2005 Dec;40(6 Pt 2):2120–2139. doi: 10.1111/j.1475-6773.2005.00479.x.

- 20.Sugarman J, Kass NE, Goodman SN, Perentesis P, Fernandes P, Faden RR. What patients say about medical research. IRB. 1998 Jul-Aug;20(4):1–7.

- 21.McCann SK, Campbell MK, Entwistle VA. Reasons for participating in randomised controlled trials: conditional altruism and considerations for self. Trials. 2010;11:31. doi: 10.1186/1745-6215-11-31.

- 22.Das S, Bale SJ, Ledbetter DH. Molecular genetic testing for ultra rare diseases: models for translation from the research laboratory to the CLIA-certified diagnostic laboratory. Genet Med. 2008;10(5):332–336. doi: 10.1097/GIM.0b013e318172838d.

- 23.Siegfried JD, Morales A, Kushner JD, et al. Return of Genetic Results in the Familial Dilated Cardiomyopathy Research Project. J Genet Couns. 2013;22(2):164–174. doi: 10.1007/s10897-012-9532-8.

- 24.Partridge AH, Winer EP. Sharing study results with trial participants: time for action. J Clin Oncol. 2009 Feb 20;27(6):838–839. doi: 10.1200/JCO.2008.20.0865.

- 25.Dressler LG, Smolek S, Ponsaran R, et al. IRB perspectives on the return of individual results from genomic research. Genet Med. 2012;14(2):215–222. doi: 10.1038/gim.2011.10.

- 26.Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database--update and key issues. N Engl J Med. 2011 Mar 3;364(9):852–860. doi: 10.1056/NEJMsa1012065.

- 27.Pfizer. [Jan 27, 2014];Pfizer press release. 2014 http://www.pfizer.com/news/press-release/press-release-detail/pfizer_expands_clinical_trial_data_access_policy_and_launches_data_access_portal.

- 28.Institute of Medicine (US) Forum on Drug Discovery, Development, and Translation. Transforming Clinical Research in the United States: Challenges and Opportunities: Workshop Summary. Washington (DC): National Academies Press (US); 2010. Available from: http://www.ncbi.nlm.nih.gov/books/NBK50892/

- 29.PCORI. Patient Centered Outcomes Research Institute. [July 6, 2013];2013 http://www.pcori.org/research-we-support/pcor/

- 30.Institute of Medicine. The CTSA Program at NIH: Opportunities for Advancing Clinical and Translational Research. Washington, DC: The National Academies Press; 2013. p. 178.

- 31.Shea JA, Micco E, Dean LT, McMurphy S, Schwartz JS, Armstrong K. Development of a revised Health Care System Distrust scale. J Gen Intern Med. 2008 Jun;23(6):727–732. doi: 10.1007/s11606-008-0575-3.

- 32.Armstrong K, McMurphy S, Dean LT, et al. Differences in the patterns of health care system distrust between blacks and whites. J Gen Intern Med. 2008 Jun;23(6):827–833. doi: 10.1007/s11606-008-0561-9.

- 33.Shuster E. Fifty years later: the significance of the Nuremberg Code. N Engl J Med. 1997 Nov 13;337(20):1436–1440. doi: 10.1056/NEJM199711133372006.

- 34.Beecher HK. Ethics and Clinical Research. New England Journal of Medicine. 1966;274:1354–1360. doi: 10.1056/NEJM196606162742405.

- 35.Klitzman R, Appelbaum PS. Research ethics. To protect human subjects, review what was done, not proposed. Science. 2012 Mar 30;335(6076):1576–1577. doi: 10.1126/science.1217225.

- 36.Kost RG, Reider C, Stephens J, Schuff KG. Research subject advocacy: program implementation and evaluation at clinical and translational science award centers. Acad Med. 2012 Sep;87(9):1228–1236. doi: 10.1097/ACM.0b013e3182628afa.

- 37.Institute of Medicine, Committee on the Learning Health Care System in America. Best Care at Lower Cost: The Path to Continuously Learning Health Care in America. Smith M, Saunders R, Stuckhardt L, McGinnis JM, editors. Washington, DC: The National Academies Press; 2013. http://www.iom.edu/Reports/2012/Best-Care-at-Lower-Cost-The-Path-to-Continuously-Learning-Health-Care-in-America.aspx.