Abstract

Purpose

Cone-Beam Computed Tomography (CBCT) is one of the primary imaging modalities in radiation therapy, dentistry, and orthopedic interventions. While CBCT provides crucial intraoperative information, it is bounded by a limited imaging volume, resulting in reduced effectiveness. This paper introduces an approach allowing real-time intraoperative stitching of overlapping and non-overlapping CBCT volumes to enable 3D measurements on large anatomical structures.

Methods

A CBCT-capable mobile C-arm is augmented with a Red-Green-Blue-Depth (RGBD) camera. An off-line co-calibration of the two imaging modalities results in co-registered video, infrared, and X-ray views of the surgical scene. Then, automatic stitching of multiple small, non-overlapping CBCT volumes is possible by recovering the relative motion of the C-arm with respect to the patient based on the camera observations. We propose three methods to recover the relative pose: RGB-based tracking of visual markers that are placed near the surgical site, RGBD-based simultaneous localization and mapping (SLAM) of the surgical scene which incorporates both color and depth information for pose estimation, and surface tracking of the patient using only depth data provided by the RGBD sensor.

Results

On an animal cadaver, we show stitching errors as low as 0.33 mm, 0.91 mm, and 1.72 mm when the visual marker, RGBD SLAM, and surface data are used for tracking, respectively.

Conclusions

The proposed method overcomes one of the major limitations of CBCT C-arm systems by integrating vision-based tracking and expanding the imaging volume without any intraoperative use of calibration grids or external tracking systems. We believe this solution to be most appropriate for 3D intraoperative verification of several orthopedic procedures.

I. INTRODUCTION

Intraoperative 3D X-ray Cone-Beam Computed Tomography (CBCT) during orthopedic and trauma surgeries has the potential to reduce the need for revision surgeries1 and improve patient safety. Several works have emphasized the advantages that C-arm CBCT offers for guidance in orthopedic procedures for head and neck surgery2,3, spine surgery4, and Kirschner wire (K-wire) placement in pelvic fractures.5,6 Other medical specialties, such as angiography7, dentistry8 or radiation therapy9, have reported similar benefits when using CBCT. However, commonly used CBCT devices exhibit a limited field of view of the projection images, and are constrained in their scanning motion. The limited view results in reduced effectiveness of the imaging modality in orthopedic interventions due to the small volume reconstructed.

For orthopedic traumatologists, restoring the correct length, alignment, and rotation of the affected extremity is the goal of any fracture management strategy regardless of the fixation technique. This can be difficult with the use of conventional fluoroscopy with limited field of view and lack of 3D cues. For instance, it is estimated that malalignment (> 5° in the coronal or sagittal plane) is seen in approximately 10%, and malrotation (> 15°) in up to approximately 30% of femoral nailing cases.10,11

Intraoperative stitching of 2D fluoroscopic images has been investigated to address these issues.12 Radio-opaque markers attached to surgical tools were used to perform the stitching. Trajectory visualization and total length measurement were the features most frequently used by the surgeons in the stitched view. Overall, the outcome of 2D stitching was reported as promising for future development. Similarly, X-ray translucent references were employed and positioned under the bone for 2D X-ray mosaicing.13,14 An alternative approach used optical features acquired from an adjacent camera to recover the stitching transformation.15 The aforementioned methods only addressed stitching in 2D, and their generalization to 3D stitching has remained a challenge.

Orthopedic sports-related and adult reconstruction procedures could benefit from stitched 3D intraoperative CBCT. For example, high tibial and distal femoral osteotomies are utilized to shift contact forces in the knee in patients with unilateral knee osteoarthritis. These osteotomies rely on precise correction of the mechanical axis to achieve positive clinical results. Computer-aided navigation systems and optical trackers have been shown to help achieve the desired correction in similar procedures;16–18 however, they impose changes to the workflow, e.g. by requiring registration and skeletally fixed reference bases. Moreover, navigation systems are complex to set up, and rely on preoperative patient data that is outdated. Intraoperative CBCT has the potential to provide a navigation system for osteotomies about the knee while integrating well with the conventional surgical workflow.

Another promising use for intraoperative CBCT in orthopedics is for comminuted fractures of the mid femur. Intraoperative 3D CBCT has the potential to verify length, alignment, and rotation and to reduce the need for revision surgery due to malreduction.1 In Fig. 1 the difficulty in addressing rotational alignment in mid-shaft comminuted femur fractures and the clinical impact of misalignment is demonstrated. Fig. 2 demonstrates the anatomical landmarks used to estimate the 3D position of the bone. Traditionally, to ensure proper femoral rotation, the contralateral leg is used as a reference: First, an AP radiograph of the contralateral hip is acquired, and particular attention is paid to anatomical landmarks such as how much of the lesser trochanter is visible along the medial side of the femur. Second, the C-arm is translated distally to the knee and then rotated ~90° to obtain a lateral radiograph of the healthy knee with the posterior condyles overlapping. These two images, the AP of the hip and lateral of the knee, determine the rotational alignment of the healthy side. To ensure correct rotational alignment of the injured side, an AP of the hip (on the injured side) is obtained, attempting to reproduce the AP radiograph acquired of the contralateral side (a similar amount of lesser trochanter visible along the medial side of the femur). This ensures that the position of both hips is similar. The C-arm is then moved distally to the knee of the injured femur and rotated ~90° to a lateral view. This lateral image should match that of the healthy side. If they do not match, rotational correction of the femur can be performed, attempting to obtain a lateral radiograph of the knee on the injured side similar to that of the contralateral side. 
This procedure motivates the need for intraoperative 3D imaging with large field of view, where leg length discrepancy and malrotation can be quantified intraoperatively and compared with the geometric measurements from the preoperative CT scan of the contralateral side. CBCT can also be used in robot-assisted intervention and provide navigation for continuum robots used for minimally invasive treatment of pelvic osteolysis19 and osteonecrosis of the femoral head.20

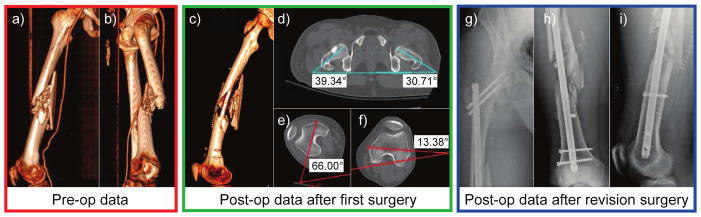

FIG. 1.

Difficulties arise in addressing rotational alignment in long bone fractures - The 3D preoperative CT scan of the right femur of a patient with a ballistic fracture of the femoral shaft is shown in (a–b). As seen in these images, due to the significant comminution, there are few anatomical cues as to the correct rotational alignment of the bone. (c) shows the postoperative CT of the same femur after reduction and placement of a cephalomedullary nail. The varus/valgus alignment appears to be restored (see Fig. 2); however, significant rotational malalignment is present with excessive external rotation of the distal aspect of the femur. Axial cuts from the postoperative CT scan are shown in (d–f). As shown in (d), the hips are in relatively similar position (right hip ~ 10° externally rotated vs. the left). However, in (e), the operative right knee is over 40° more externally rotated than the healthy contralateral side in (f). Figures (g–i) show the anteroposterior (AP) view of the right hip, AP view of the right femur, and the lateral postoperative radiographs after revision cephalomedullary nailing with correction of the rotational deformity. The revision surgery includes removal and correct replacement of the intramedullary nail.

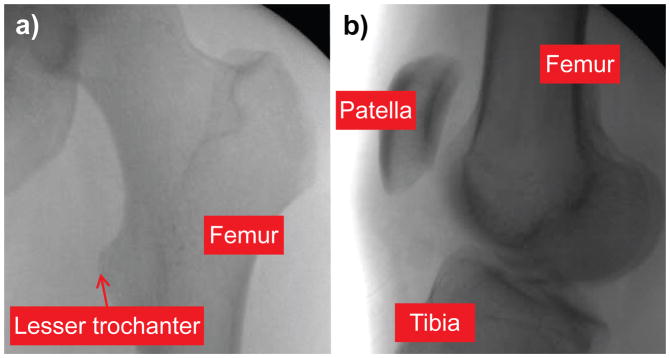

FIG. 2.

Contralateral images for guidance in rotational alignment - (a) and (b) are intraoperative fluoroscopic images from the revision surgery; AP view of the contralateral hip and lateral view of the contralateral knee. These images were utilized to guide rotational alignment of the fractured femur. By visualizing landmarks on these radiographs and understanding the change in angulation of the C-arm, the surgeon can estimate the rotational alignment of the healthy femur and attempt to recreate this alignment on the operative side.

To produce larger volumes, panoramic CBCT is proposed in Ref. 9 by stitching overlapping X-ray images acquired from the anatomy. Reconstruction quality is ensured by requiring sufficient overlap of the projection images, which in turn increases the X-ray dose. Moreover, the reconstructed volume is vulnerable to artifacts introduced by image stitching. An automatic 3D image stitching technique is proposed in Ref. 21. Under the assumption that the orientational misalignment is negligible, and sub-volumes are only translated, the stitching is performed using phase correlation as a global similarity measure, and Normalized Cross Correlation (NCC) as the local cost. Since NCC depends only on information in the overlapping area of the 3D volumes, sufficient overlap between 3D volumes is imperative. To reduce the X-ray exposure, Lamecker et al.22 incorporated prior knowledge from statistical shape models to perform 3D reconstruction.

To optimally support the surgical intervention, our focus is on CBCT alignment techniques that do not require a change of workflow or additional devices in the operating theater. To avoid excessive radiation, we assume that no overlap between CBCT volumes exists.23 These constraints motivate our work and lead to the development of three novel methods presented in this manuscript. We also discuss and compare the results of this work to the technique proposed in Ref. 24 as the first self-contained system for CBCT stitching. To avoid the introduction of additional devices, such as computer or camera carts, we co-register the X-ray source to a color and depth camera, and track the C-arm relative to the patient based on the Red-Green-Blue-Depth (RGBD) observations.25–28 This allows the mobile C-arm to remain self-contained, and independent of additional devices or the operating theater. Additionally, the image quality of each individual CBCT volume remains intact, and the radiation dose is linearly proportional to the size and number of individual CBCT volumes.

II. EXPERIMENTAL SETUP AND DATA ACQUISITION

A. Experimental Setup

Our system is composed of a mobile C-arm, ARCADIS Orbic 3D, from Siemens Healthineers and an Intel Realsense SR300 RGBD camera. The SR300 is relatively small (X = 110.0 ± 0.2 mm, Y = 12.6 ± 0.1 mm, Z = 3.8–4.1 mm), and integrates a full-HD RGB camera as well as an infrared projector and infrared camera, which enable the computation of depth maps. The SR300 is designed for short ranges from 0.2 m to 1.2 m for indoor use. Access to raw RGB and infrared data is possible using the Intel RealSense SDK. The C-arm is connected via Ethernet to the computer for CBCT data transfer, and the RGBD camera is connected via powered USB 3.0 for real-time frame capturing.

B. CBCT Volume and Video Acquisition

To acquire a CBCT volume, the patient is positioned under guidance of the lasers. Then, the motorized C-arm orbits 190° around the center visualized by the laser lines, and automatically acquires a total of 100 2D X-ray images. Reconstruction is performed using a maximum-likelihood expectation-maximization iterative reconstruction method29, resulting in a cubic volume with 512 voxels along each axis and an isotropic voxel size of 0.2475 mm. For the purpose of reconstruction, we use the following geometrical parameters provided by the manufacturer: source-to-detector distance: 980.00 mm, source-to-iso-center distance: 600.00 mm, angle range: 190°, detector size: 230.00 mm × 230.00 mm.
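The quoted acquisition parameters fix the size of a single reconstructed volume, which is what limits the field of view. The following is a small arithmetic sketch using only the numbers stated above; the function names are illustrative, and the detector footprint at the iso-center assumes an ideal cone-beam geometry with the object centered at the iso-center.

```python
# Acquisition parameters as quoted in the text (manufacturer-provided).
SOURCE_TO_DETECTOR_MM = 980.0
SOURCE_TO_ISOCENTER_MM = 600.0
DETECTOR_SIDE_MM = 230.0
VOXELS_PER_AXIS = 512
VOXEL_SIZE_MM = 0.2475


def volume_side_mm(voxels: int, voxel_size_mm: float) -> float:
    """Edge length of the cubic reconstructed volume."""
    return voxels * voxel_size_mm


def magnification(sdd_mm: float, sid_mm: float) -> float:
    """Geometric magnification of an object placed at the iso-center."""
    return sdd_mm / sid_mm


def detector_footprint_at_isocenter_mm(
    detector_mm: float, sdd_mm: float, sid_mm: float
) -> float:
    """Detector side length scaled back to the iso-center plane."""
    return detector_mm / magnification(sdd_mm, sid_mm)


if __name__ == "__main__":
    side = volume_side_mm(VOXELS_PER_AXIS, VOXEL_SIZE_MM)
    footprint = detector_footprint_at_isocenter_mm(
        DETECTOR_SIDE_MM, SOURCE_TO_DETECTOR_MM, SOURCE_TO_ISOCENTER_MM
    )
    print(f"volume edge: {side:.2f} mm, footprint at iso-center: {footprint:.1f} mm")
```

Under these assumptions a single volume spans roughly 127 mm per axis, which is considerably shorter than a femur and motivates acquiring and stitching several volumes.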

III. GEOMETRIC CALIBRATION AND RECONSTRUCTION WITH THE RGBD AUGMENTED C-ARM

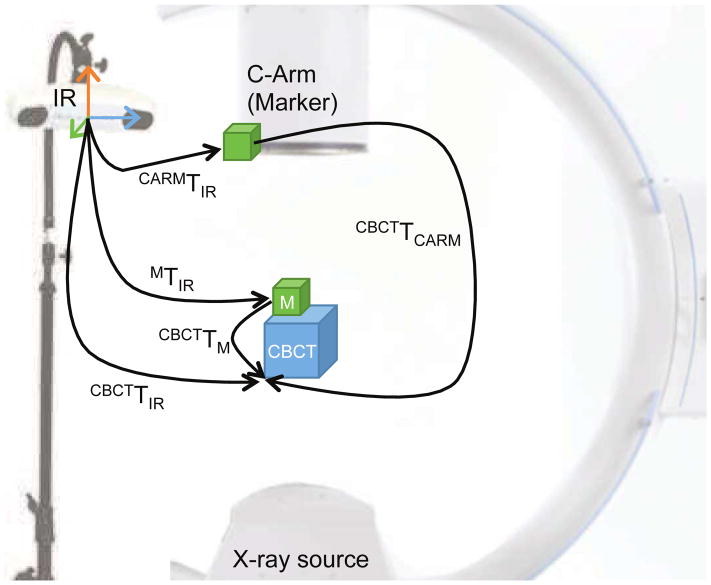

To stitch non-overlapping CBCT volumes, the transformation between individual volumes needs to be recovered. In this work, we assume that only the relative relationship between CBCT volumes is of interest, and that the patient does not move during the CBCT scan. In contrast to previous external tracking systems, our approach relies on a 3D color camera providing depth (D) information for each red, green, and blue (RGB) pixel. To keep the workflow disruption and limitation of free workspace minimal, the RGBD camera is mounted on the C-arm gantry close to the detector as shown in Fig. 5.

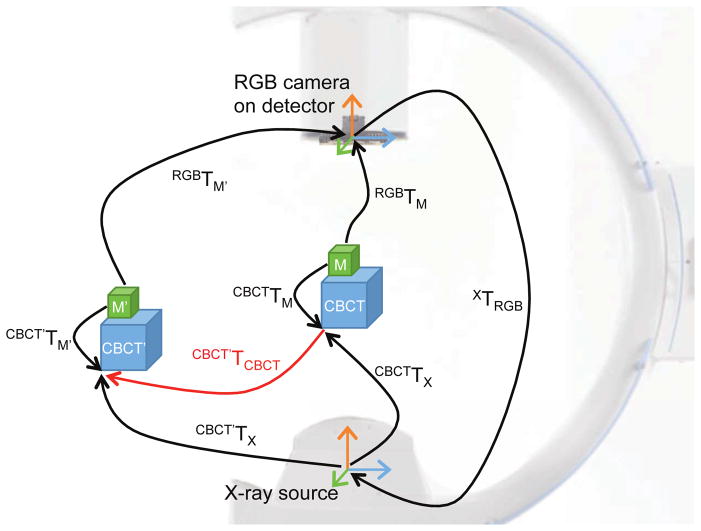

FIG. 5.

The relative displacement of CBCT volumes (${}^{\mathrm{CBCT}'}T_{\mathrm{CBCT}}$) is estimated from the tracking data computed using the camera mounted on the C-arm. This requires the calibration of camera and X-ray source (${}^{\mathrm{X}}T_{\mathrm{RGB}}$), and the known relationship of X-ray source and CBCT volume (${}^{\mathrm{CBCT}}T_{\mathrm{X}}$). The pose of the marker is observed by the camera (${}^{\mathrm{RGB}}T_{\mathrm{M}}$), while the transformation from marker pose to CBCT volume (${}^{\mathrm{CBCT}}T_{\mathrm{M}}$) is computed once and assumed to remain constant.

After a one-time calibration of the RGBD camera to the X-ray source, CBCT reconstruction is performed. Next, we use the calibration information and the 3D patient data to perform vision-based CBCT stitching.

a. RGB Camera, Depth Sensor, and X-ray Source Calibration

To simultaneously calibrate and estimate relationships between RGB, depth, and X-ray camera, we designed a radiopaque checkerboard that can also be detected by the color camera and in the infrared image of the depth sensor. This hybrid checkerboard, shown in Fig. 3, allows for offline calibration of the imaging sources which takes place when the patient is not present. Due to the rigid construction of the RGBD camera on the C-arm gantry, the relative extrinsic calibration will remain valid as long as the RGBD camera is not moved on the C-arm. To calibrate the RGB camera and depth sensor, we deploy a combination of automatic checkerboard detection and pose estimation30 and non-linear optimization31, resulting in camera projection matrices $P_{\mathrm{RGB}}$ and $P_{\mathrm{D}}$. In this camera calibration approach, the checkerboard is assumed to be at $Z = 0$ at each pose. This assumption reduces the calibration problem from $x_i = P_i X_i$ to $x_i = H_i \tilde{X}_i$, where $x_i$ are the image points in homogeneous coordinates for the $i$-th pose, $P_i$ is the camera calibration matrix, $X_i = [X_i, Y_i, Z_i, 1]^\top$ are the checkerboard corners in 3D homogeneous coordinates, $H_i$ is a $3 \times 3$ homography, and $\tilde{X}_i = [X_i, Y_i, 1]^\top$. $H_i$ is solved for each pose of the checkerboard, and then used to estimate the intrinsic and extrinsic parameters of the cameras. The output of this closed-form solution is then used as initialization for a non-linear optimization approach32 that minimizes a geometric cost based on maximum likelihood estimation. The radial distortion is modeled inside the geometric cost using a degree 4 polynomial. The general projection matrices can be solved for all $i \in \{1, \dots, N\}$ valid checkerboard poses $\mathrm{CB}_i$, with $N \geq 3$. For each of the checkerboard poses we obtain the transformations from camera origin to checkerboard origin ${}^{\mathrm{CB}_i}T_{\mathrm{RGB}}$, and from depth sensor origin to checkerboard origin ${}^{\mathrm{CB}_i}T_{\mathrm{D}}$.
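The per-pose homography that results from the planar ($Z = 0$) assumption can be estimated linearly from corner correspondences. The following is a minimal sketch of a direct linear transform (DLT) in NumPy, given as an illustration rather than as the calibration code used in this work. The function names are hypothetical, coordinate normalization and lens distortion are omitted for brevity, and the subsequent steps (intrinsics from several homographies, non-linear refinement) are not shown.

```python
import numpy as np


def estimate_homography(board_xy: np.ndarray, image_xy: np.ndarray) -> np.ndarray:
    """Estimate H such that image ~ H @ [X, Y, 1]^T for planar board points.

    board_xy: (n, 2) checkerboard corners on the Z = 0 plane (n >= 4).
    image_xy: (n, 2) detected corners in pixel coordinates.
    """
    rows = []
    for (X, Y), (u, v) in zip(board_xy, image_xy):
        # Each correspondence contributes two linear constraints on H.
        rows.append([-X, -Y, -1.0, 0.0, 0.0, 0.0, u * X, u * Y, u])
        rows.append([0.0, 0.0, 0.0, -X, -Y, -1.0, v * X, v * Y, v])
    A = np.asarray(rows, dtype=float)
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H: np.ndarray, board_xy: np.ndarray) -> np.ndarray:
    """Project planar board points into the image with homography H."""
    pts = np.column_stack([board_xy, np.ones(len(board_xy))])
    proj = pts @ H.T
    return proj[:, :2] / proj[:, 2:3]


if __name__ == "__main__":
    # Synthetic corner grid with 12.655 mm spacing and an arbitrary homography.
    xs, ys = np.meshgrid(np.arange(5) * 12.655, np.arange(4) * 12.655)
    board = np.column_stack([xs.ravel(), ys.ravel()])
    H_true = np.array([[1.2, 0.1, 30.0], [-0.05, 1.1, 20.0], [1e-4, 2e-4, 1.0]])
    image = apply_homography(H_true, board)
    H_est = estimate_homography(board, image)
    print(np.abs(apply_homography(H_est, board) - image).max())
```

In practice the linear estimate only serves as an initialization, as stated above, and the reprojection error is then minimized over all poses jointly.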

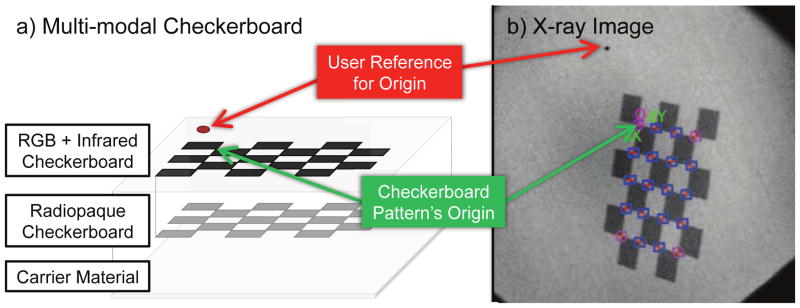

FIG. 3.

Checkerboard is designed to be fully visible in RGB, depth, and X-ray images.

Thin metal sheets behind black checkerboard squares make the pattern visible in X-ray as they cause a low contrast between different checkerboard fields and the surrounding image intensities. After labeling the outer corners of the checkerboard, the corner points within this rectangle are detected automatically. The user reference point shown in Fig. 3 is used to ensure consistent labeling of checkerboard corners with respect to the origin. The checkerboard poses CBiTX and camera projection matrix PX can then be estimated similarly to an optical camera. In contrast to a standard camera, the X-ray imaging device provides flipped images to give the medical staff the impression that they are looking from the detector towards the source. Therefore, the images are treated as if they were in a left-hand coordinate frame. An additional pre-processing step needs to be deployed to convert the images to their original form. This pre-processing step includes a 90° counterclockwise rotation, followed by a horizontal flip of the X-ray images.
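The pre-processing step described above is a fixed image transform. A minimal NumPy sketch, assuming the X-ray image is available as a 2D array and that the rotation is applied before the flip as stated (the function name is illustrative):

```python
import numpy as np


def restore_xray_orientation(image: np.ndarray) -> np.ndarray:
    """Rotate 90 degrees counterclockwise, then flip horizontally."""
    rotated = np.rot90(image, k=1)  # np.rot90 rotates counterclockwise for k > 0
    return np.fliplr(rotated)


if __name__ == "__main__":
    demo = np.array([[1, 2], [3, 4]])
    print(restore_xray_orientation(demo))
```

Because the two operations do not commute, the order matters: applying the flip first would yield a different image.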

Finally, for each checkerboard pose $\mathrm{CB}_i$ the RGB camera, depth sensor, and X-ray source poses are known, allowing for a simultaneous optimization of the three transformations: RGB camera to X-ray source ${}^{\mathrm{X}}T_{\mathrm{RGB}}$, depth sensor to X-ray source ${}^{\mathrm{X}}T_{\mathrm{D}}$, and RGB camera to depth sensor ${}^{\mathrm{D}}T_{\mathrm{RGB}}$.33 This process concludes the one-time calibration step required at the time of system setup.

b. CBCT Reconstruction

We hypothesize that the relationship between each X-ray projection image and the CBCT volume (${}^{\mathrm{CBCT}}T_{\mathrm{X}}$) is known by means of reconstruction. To obtain accurate and precise projection matrices, we performed a CBCT volume reconstruction and verified the X-ray source extrinsics using 2D/3D registration. This registration was initialized by the projection matrices provided by the C-arm factory calibration. Next, an updated estimate of the projection geometry was computed, and the final projection matrices were constructed based on the known X-ray source geometry (intrinsics) and the orbital motion (extrinsics).

If the orbital C-arm motion deviates from the assumed path, it leads to erroneous projection matrices. Utilizing 2D/3D registration based on Digitally Reconstructed Radiographs, NCC similarity cost,34 and Bound Constrained By Quadratic Approximation optimization,35 we verified the X-ray source extrinsics for each projection image. It is important to note that CBCT-capable C-arms are regularly re-calibrated when used in the clinical environment. In cases where the internal calibration of the C-arm differs slightly from the true scan trajectory, the volumetric reconstruction will exhibit artifacts. In the presence of small artifacts, it is still possible to measure geometric information such as lengths and angles. However, in case of significant deviation, the prior hypothesis of a known relationship between X-ray source origin and CBCT is no longer valid, and the C-arm must be re-calibrated.

IV. VISION-BASED STITCHING TECHNIQUES FOR NON-OVERLAPPING CBCT VOLUMES

After calibration of the cameras and X-ray source, the intrinsics and extrinsics of each imaging device are known. The calibration allows us to track the patient using the RGB camera or depth sensor and to apply this transformation to the CBCT volumes. In Sections IV A-IV C we introduce stitching using visual markers, 3D color features, and surface depth information.

To measure the leg length and the anatomical angles of a femur bone, two CBCT scans from the two ends of the bone are sufficient. Therefore, in the following sections we only discuss the stitching of two non-overlapping CBCT volumes. However, all the proposed solutions can also be deployed to stitch larger numbers of overlapping or non-overlapping CBCT volumes.

A. Vision-based Marker Tracking Techniques

This tracking technique relies on flat markers with a high contrast pattern that are easily detected in an image. The pose can be retrieved as the true marker size is known.36 In the following we investigate two approaches: first, a cubical marker is placed near the patient and the detector is above the bed. Second, an array of markers is attached under the bed and the detector is below the bed while the C-arm is re-positioned. These two marker strategies are shown in Fig. 4. The underlying tracking method is similar among these two methods, but each of the two approaches has its advantages and limitations which are discussed in Sec. VII.

FIG. 4.

Tracking using visual marker is performed by (a) placing a cubical visual marker near the patient, or (b) attaching an array of markers below the surgical bed.

a. Visual Marker Tracking of Patient

To enable visual marker tracking, we deploy a multi-marker strategy and arrange markers on all sides of a cube, resulting in an increased robustness and pose-estimation accuracy. The marker cube is then rigidly attached to the anatomy of interest and tracked using the RGB stream of the camera.

After performing the orbital rotation and acquiring the projection images for the reconstruction of the first CBCT volume, the C-arm is rotated to a pose for which ${}^{\mathrm{CBCT}}T_{\mathrm{X}}$ is known (see Sec. III). Ideally, this pose is chosen to provide an optimal view of relative displacement of the marker cube, as the markers are tracked based on the color camera view. The center of the first CBCT volume is defined to be the world origin, and the marker cube M is represented in this coordinate frame based on camera to X-ray source calibration:

$$ {}^{\mathrm{CBCT}}T_{\mathrm{M}} = {}^{\mathrm{CBCT}}T_{\mathrm{X}} \; {}^{\mathrm{X}}T_{\mathrm{RGB}} \; {}^{\mathrm{RGB}}T_{\mathrm{M}} \tag{1} $$

The transformations are depicted in Fig. 5. The surgical table or the C-arm is repositioned to acquire the second CBCT volume. During this movement, the scene and the marker cube are observed using the color camera, allowing for the computation of the new pose of the marker cube ${}^{\mathrm{RGB}}T_{\mathrm{M}'}$. Under the assumption that the relationship between CBCT volume and marker (Eq. 1) did not change as the marker remained fixed to the patient for the duration between two CBCT scans, the relative displacement of the CBCT volumes is expressed as:

$$ {}^{\mathrm{CBCT}'}T_{\mathrm{CBCT}} = {}^{\mathrm{CBCT}}T_{\mathrm{X}} \; {}^{\mathrm{X}}T_{\mathrm{RGB}} \; {}^{\mathrm{RGB}}T_{\mathrm{M}'} \; \left({}^{\mathrm{CBCT}}T_{\mathrm{M}}\right)^{-1} \tag{2} $$
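Reading the chain of Fig. 5 as a product of 4 × 4 homogeneous matrices, marker-based stitching reduces to two matrix compositions: the marker pose expressed in the first CBCT frame, and the same chain evaluated with the marker pose observed after repositioning. The sketch below assumes that every transform is rigid and that ${}^{A}T_{B}$ maps coordinates from frame B to frame A; the helper names are hypothetical and the numeric poses in the usage example are synthetic.

```python
import numpy as np


def rigid(rot_z_deg: float, translation) -> np.ndarray:
    """Build a 4x4 rigid transform from a rotation about z and a translation."""
    a = np.deg2rad(rot_z_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def invert_rigid(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform without a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    Ti = np.eye(4)
    Ti[:3, :3] = R.T
    Ti[:3, 3] = -R.T @ t
    return Ti


def marker_in_cbct(cbct_T_x, x_T_rgb, rgb_T_m) -> np.ndarray:
    """Marker pose in the CBCT frame via X-ray source and RGB camera."""
    return cbct_T_x @ x_T_rgb @ rgb_T_m


def relative_cbct_displacement(cbct_T_x, x_T_rgb, rgb_T_m, rgb_T_m_new) -> np.ndarray:
    """Transform from the first CBCT frame to the second CBCT frame."""
    cbct_T_m = marker_in_cbct(cbct_T_x, x_T_rgb, rgb_T_m)
    cbct_new_T_m = marker_in_cbct(cbct_T_x, x_T_rgb, rgb_T_m_new)
    return cbct_new_T_m @ invert_rigid(cbct_T_m)


if __name__ == "__main__":
    # Synthetic example: the volumes are displaced by 210 mm along x.
    cbct_T_x = rigid(15.0, (0.0, 0.0, 600.0))
    x_T_rgb = rigid(-5.0, (40.0, 10.0, 0.0))
    calib = cbct_T_x @ x_T_rgb
    cbct_T_m = rigid(30.0, (20.0, -35.0, 5.0))
    truth = rigid(0.0, (210.0, 0.0, 0.0))
    rgb_T_m = invert_rigid(calib) @ cbct_T_m
    rgb_T_m_new = invert_rigid(calib) @ truth @ cbct_T_m
    print(relative_cbct_displacement(cbct_T_x, x_T_rgb, rgb_T_m, rgb_T_m_new))
```

The same two functions apply unchanged when the marker array is mounted under the surgical table, since only the observed marker poses differ.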

b. Visual Marker Tracking of Surgical Table

In many orthopedic interventions, the C-arm is used to validate the reduction of complex fractures. This is mostly done by moving the C-arm rather than the injured patient. Consequently, we hypothesize that the patient remains on the surgical table and only the relationship between table and C-arm is of interest, which has also been assumed in previous work.15

A pre-defined array of markers is mounted on the bottom of the surgical table, which allows the estimation of the pose of the C-arm relative to the table. While re-arranging the C-arm to acquire multiple CBCT scans, the C-arm detector is positioned under the bed where the RGBD camera observes the array of markers. Again, this allows for the estimation of ${}^{\mathrm{RGB}}T_{\mathrm{M}'}$ and, thus, stitching.

B. RGBD Simultaneous Localization and Mapping for Tracking

RGBD devices allow for fusion of color and depth information and enable scale recovery of visual features. We aim at using RGB and depth channels concurrently to track the displacement of patient relative to a C-arm during multiple CBCT acquisitions.

Simultaneous Localization and Mapping (SLAM) has been used in the past few decades to recover the pose of a sensor in an unknown environment. The underlying method in SLAM is the simultaneous estimation of the pose of perceived landmarks, and updating the position of a sensing device.37 An RGBD SLAM was introduced in Ref. 38, where the visual features are extracted from 2D frames, and the depth associated with those features is later computed from the depth sensor in the RGBD camera. These 3D features are then used to initialize a RANdom SAmple Consensus (RANSAC) method to estimate the relative poses of the sensor by fitting a 6 DOF rigid transformation.39

RGBD SLAM enables the recovery of the camera trajectory in an arbitrary environment without prior models; rather, SLAM incrementally creates a global 3D map of the scene in real-time. We assume that the global 3D map is rigidly connected to the CBCT volume, which allows for the computation of the relative volume displacement using Eq. 3, where $f_{\mathrm{RGB}}$ and $f_{\mathrm{RGB}'}$ are sets of features in the RGB and RGB′ frames, $\pi$ is the projection operator, $d$ is the dense depth map, and $x$ is the set of 2D feature points.

| (3) |
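The step that gives RGBD features their metric scale is the lifting of 2D feature points to 3D with the depth map, i.e. the inverse of the projection operator. The following is a minimal sketch under a pinhole model without distortion, assuming that the depth value is the z-coordinate along the optical axis and that the intrinsic matrix `K` is known from calibration; the function name and the numeric intrinsics are illustrative only.

```python
import numpy as np


def back_project(pixels_uv: np.ndarray, depth: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Lift 2D feature points to 3D camera coordinates.

    pixels_uv: (n, 2) pixel coordinates of the features.
    depth:     (n,) depth along the optical axis at each feature.
    K:         (3, 3) pinhole intrinsic matrix.
    """
    uv1 = np.column_stack([pixels_uv, np.ones(len(pixels_uv))])
    # Rays with unit z-component: K^{-1} [u, v, 1]^T for every feature.
    rays = uv1 @ np.linalg.inv(K).T
    return rays * depth[:, None]


if __name__ == "__main__":
    K = np.array([[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]])
    uv = np.array([[320.0, 240.0], [920.0, 240.0]])
    z = np.array([500.0, 500.0])
    print(back_project(uv, z, K))
```

The resulting 3D feature sets from two frames can then be passed to a robust rigid fit such as the RANSAC scheme mentioned above.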

C. Surface Reconstruction and Tracking Using Depth Information

Surface information obtained from the depth sensor in an RGBD camera can be used to reconstruct the patient’s surface, which simultaneously enables the estimation of the sensor trajectory. KinectFusion provides a dense surface reconstruction of a complex environment and estimates the pose of the sensor in real-time.40 Our goal is to use the depth camera view and observe the displacement, track the scene, and consequently compute the relative movement between the acquisition of CBCT volumes. This tracking method involves no markers, and the surgical site is used as reference (real-surgery condition).

KinectFusion relies on a multi-scale Iterative Closest Point (ICP) with a point-to-plane distance function and registers the current measurement of the depth sensor to a globally fused model. The ICP incorporates points from both the foreground as well as the background and estimates rigid transformations between frames. Therefore, a moving object with a static background causes unreliable tracking. Thus, multiple non-overlapping CBCT volumes are only acquired by re-positioning the C-arm instead of the surgical table.

Transformations similar to those shown in Fig. 5 are used to compute the relative CBCT displacement ${}^{\mathrm{CBCT}'}T_{\mathrm{CBCT}}$, where D defines the depth coordinate frame, ${}^{\mathrm{D}'}\hat{T}_{\mathrm{D}}$ is the relative camera pose computed using KinectFusion, $V_{\mathrm{D}}$ and $V_{\mathrm{D}'}$ are vertex maps at frames D and D′, and $N_{\mathrm{D}}$ is the normal map at frame D:

| (4) |
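The point-to-plane distance used by the ICP step can be illustrated on already-associated vertices. The sketch below evaluates the mean squared point-to-plane residual for a candidate rigid transform. It is not the KinectFusion implementation: data association, the multi-scale pyramid, and the linearized pose update are omitted, and the function name is hypothetical.

```python
import numpy as np


def point_to_plane_error(T: np.ndarray, src_vertices: np.ndarray,
                         dst_vertices: np.ndarray, dst_normals: np.ndarray) -> float:
    """Mean squared point-to-plane residual for corresponding vertices.

    T:            (4, 4) candidate rigid transform applied to the source vertices.
    src_vertices: (n, 3) vertices of the current depth frame.
    dst_vertices: (n, 3) corresponding vertices of the reference model.
    dst_normals:  (n, 3) unit normals at the reference vertices.
    """
    src_h = np.column_stack([src_vertices, np.ones(len(src_vertices))])
    moved = (src_h @ T.T)[:, :3]
    # Signed distance of each moved point to the tangent plane of its match.
    residuals = np.einsum("ij,ij->i", moved - dst_vertices, dst_normals)
    return float(np.mean(residuals ** 2))


if __name__ == "__main__":
    plane = np.array([[x, y, 0.0] for x in range(3) for y in range(3)], dtype=float)
    normals = np.tile([0.0, 0.0, 1.0], (len(plane), 1))
    lift = np.eye(4)
    lift[2, 3] = 2.0   # offset along the normal is penalized
    slide = np.eye(4)
    slide[0, 3] = 5.0  # sliding within the plane is not penalized
    print(point_to_plane_error(lift, plane, plane, normals))
    print(point_to_plane_error(slide, plane, plane, normals))
```

The second case shows why flat, featureless surfaces constrain the pose only weakly: motion within the tangent plane leaves the cost unchanged.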

V. RELATED WORK AS REFERENCE TECHNIQUES

To provide a reasonable reference for our vision-based tracking techniques, we briefly introduce an infrared tracking system to perform CBCT volume stitching (Sec. VA). This section concludes with a brief overview of our previously published vision-based stitching technique24 (Sec. VB) put in context of our chain of transformations.

A. Infrared Tracking System

In the following we first discuss the calibration of the C-arm to the CBCT coordinate frame, and subsequently the C-arm to patient tracking using this calibration.

a. Calibration

This step includes attaching passive markers to the C-arm and calibrating them to the CBCT coordinate frame. This calibration later allows us to close the patient, CBCT, and C-arm transformation loop and estimate relative displacements. The spatial relation of the markers on the C-arm with respect to the CBCT coordinate frame is illustrated in Fig. 6 and is defined as:

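$$ {}^{\mathrm{CBCT}}T_{\mathrm{Carm}} = {}^{\mathrm{CBCT}}T_{\mathrm{IR}} \; \left({}^{\mathrm{Carm}}T_{\mathrm{IR}}\right)^{-1} \tag{5} $$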

FIG. 6.

An infrared tracking system is used for alignment and stitching of CBCT volumes. This method serves as reference standard for the evaluation of vision-based techniques.

The first step in solving Eq. (5) is to compute ${}^{\mathrm{CBCT}}T_{\mathrm{IR}}$. This estimation requires at least three marker positions in both CBCT and IR coordinate frames. Thus, a CBCT scan of another set of markers (M in Fig. 6) is acquired and the spherical markers are located in the CBCT volume. Here, we attempt to directly localize the spherical markers in the CBCT image instead of X-ray projections.41 To this end, a bilateral filter is applied to the CBCT image to remove noise while preserving edges. Next, weak edges are removed by thresholding the gradient of the CBCT, while strong edges corresponding to the surface points on the spheres are preserved. The resulting points are clustered into three partitions (one cluster per sphere), and the centroid of each cluster is computed. Then an exhaustive search is performed in the neighborhood around the centroid with the radius of ±(r + δ), where r is the sphere radius (6.00 mm) and δ is the uncertainty range (2.00 mm). The sphere center is localized by a least-squares minimization using its parametric model. Since the sphere size is provided by the manufacturer, we avoid using classic RANSAC or Hough-like methods as they also optimize over the sphere radius. We then use the non-iterative least-squares method suggested in Ref. 42 and solve for ${}^{\mathrm{CBCT}}T_{\mathrm{IR}}$ based on singular value decomposition. Consequently, we can close the calibration loop and solve Eq. 5 using ${}^{\mathrm{CBCT}}T_{\mathrm{IR}}$, and ${}^{\mathrm{Carm}}T_{\mathrm{IR}}$ which is directly measured from the IR tracker.
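Once the sphere centers are known in both coordinate frames, a rigid transform between the two point sets can be obtained in closed form with a singular value decomposition. The sketch below is a generic SVD-based least-squares fit written for illustration; it is not taken from Ref. 42, the function name is hypothetical, and the point coordinates in the usage example are synthetic.

```python
import numpy as np


def fit_rigid_transform(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares rigid transform T such that dst ~ R @ src + t.

    src, dst: (n, 3) corresponding points, n >= 3 and not collinear.
    Returns a 4x4 homogeneous matrix.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection, which can occur for planar or noisy data.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = dst_c - R @ src_c
    return T


if __name__ == "__main__":
    # Three synthetic sphere centers in the tracker frame (mm).
    ir_points = np.array([[0.0, 0.0, 0.0], [50.0, 0.0, 0.0], [0.0, 30.0, 0.0]])
    a, b = np.deg2rad(30.0), np.deg2rad(20.0)
    Rz = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, np.cos(b), -np.sin(b)], [0.0, np.sin(b), np.cos(b)]])
    R_true, t_true = Rz @ Rx, np.array([10.0, -5.0, 100.0])
    cbct_points = ir_points @ R_true.T + t_true
    print(fit_rigid_transform(ir_points, cbct_points))
```

With exactly three markers the fit has no redundancy, so localization errors of the sphere centers propagate directly into the estimated transform.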

b. Tracking

The tracking stream provided for each marker configuration allows for computing the motion of the patient. After the first CBCT volume is acquired, the relative patient displacement is estimated before the next CBCT scan is performed.

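Considering the case where the C-arm is re-positioned (from the Carm to the Carm′ coordinate frame) to acquire CBCT volumes (CBCT and CBCT′ coordinate frames), and the patient is fixed on the surgical table, the relative transformations from the IR tracker to the CBCT volumes are defined as follows:

$$ {}^{\mathrm{CBCT}}T_{\mathrm{IR}} = {}^{\mathrm{CBCT}}T_{\mathrm{Carm}} \; {}^{\mathrm{Carm}}T_{\mathrm{IR}}, \qquad {}^{\mathrm{CBCT}'}T_{\mathrm{IR}} = {}^{\mathrm{CBCT}'}T_{\mathrm{Carm}'} \; {}^{\mathrm{Carm}'}T_{\mathrm{IR}} \tag{6} $$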

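The relation between the C-arm and the CBCT is fixed, hence ${}^{\mathrm{CBCT}}T_{\mathrm{Carm}} = {}^{\mathrm{CBCT}'}T_{\mathrm{Carm}'}$. We can then define the relative transformation from CBCT to CBCT′ as:

$$ {}^{\mathrm{CBCT}'}T_{\mathrm{CBCT}} = {}^{\mathrm{CBCT}'}T_{\mathrm{IR}} \; \left({}^{\mathrm{CBCT}}T_{\mathrm{IR}}\right)^{-1} \tag{7} $$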
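The calibration and tracking loop of Fig. 6 can also be written as matrix products. The sketch below assumes rigid 4 × 4 transforms with the convention that ${}^{A}T_{B}$ maps frame B to frame A, a stationary patient, and a fixed C-arm-to-CBCT relation; the helper names are hypothetical and the poses in the usage example are synthetic.

```python
import numpy as np


def rigid(rot_z_deg: float, translation) -> np.ndarray:
    """Build a 4x4 rigid transform from a rotation about z and a translation."""
    a = np.deg2rad(rot_z_deg)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a), np.cos(a), 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def invert_rigid(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform using the transpose of its rotation."""
    Ti = np.eye(4)
    Ti[:3, :3] = T[:3, :3].T
    Ti[:3, 3] = -T[:3, :3].T @ T[:3, 3]
    return Ti


def calibrate_cbct_to_carm(cbct_T_ir, carm_T_ir) -> np.ndarray:
    """One-time calibration: C-arm marker frame to CBCT frame."""
    return cbct_T_ir @ invert_rigid(carm_T_ir)


def cbct_displacement(cbct_T_carm, carm_T_ir, carm_new_T_ir) -> np.ndarray:
    """Relative transform from the first to the second CBCT volume."""
    cbct_T_ir = cbct_T_carm @ carm_T_ir
    cbct_new_T_ir = cbct_T_carm @ carm_new_T_ir  # fixed C-arm to CBCT relation
    return cbct_new_T_ir @ invert_rigid(cbct_T_ir)


if __name__ == "__main__":
    cbct_T_ir = rigid(10.0, (100.0, 50.0, -20.0))
    carm_T_ir = rigid(-20.0, (300.0, 0.0, 1500.0))
    cbct_T_carm = calibrate_cbct_to_carm(cbct_T_ir, carm_T_ir)
    truth = rigid(0.0, (0.0, 0.0, 210.0))
    carm_new_T_ir = invert_rigid(cbct_T_carm) @ truth @ cbct_T_carm @ carm_T_ir
    print(cbct_displacement(cbct_T_carm, carm_T_ir, carm_new_T_ir))
```

Only the two tracked C-arm poses change between scans; the calibration matrix is reused as long as the markers remain fixed on the C-arm.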

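To consider patient movement, markers (coordinate frame M in Fig. 6) may also be attached to the patient (e.g. screwed into the bone), and tracked in the IR tracker coordinate frame. ${}^{\mathrm{CBCT}}T_{\mathrm{M}}$ is then defined as:

$$ {}^{\mathrm{CBCT}}T_{\mathrm{M}} = {}^{\mathrm{CBCT}}T_{\mathrm{Carm}} \; {}^{\mathrm{Carm}}T_{\mathrm{IR}} \; \left({}^{\mathrm{M}}T_{\mathrm{IR}}\right)^{-1} \tag{8} $$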

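Assuming that the transformation between CBCT and marker is fixed during the intervention (${}^{\mathrm{CBCT}}T_{\mathrm{M}} = {}^{\mathrm{CBCT}'}T_{\mathrm{M}'}$) and combining Eq. 6 and Eq. 8, volume poses in the tracker coordinate frame are defined as:

$$ {}^{\mathrm{CBCT}}T_{\mathrm{IR}} = {}^{\mathrm{CBCT}}T_{\mathrm{M}} \; {}^{\mathrm{M}}T_{\mathrm{IR}}, \qquad {}^{\mathrm{CBCT}'}T_{\mathrm{IR}} = {}^{\mathrm{CBCT}'}T_{\mathrm{M}'} \; {}^{\mathrm{M}'}T_{\mathrm{IR}} \tag{9} $$

Solving Eq. 9 leads to recovery of the CBCT displacement using Eq. 7.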

B. Two-dimensional Feature Tracking

In this approach, the positioning laser in the base of the C-arm is used to recover the 3D depth scales of feature points observed in RGB frames, and consequently stitch the sub-volumes. The details for estimating the frame-by-frame transformation are discussed in Ref. 24.

VI. EXPERIMENTS AND RESULTS

In this section, we report the results of our vision-based methods to stitch multiple CBCT volumes as presented in Sec. IV. The same experiments are performed using the methods outlined in Sec. V, namely using a commercially available infrared tracking system, and our previously published technique.24 Finally, we compare the results of the aforementioned approaches to image-based stitching of overlapping CBCT volumes.

A. Calibration Results

The calibration of the RGBD/X-ray system is achieved using a multi-modal checkerboard (see Fig. 3), which is observed at multiple poses using the RGB camera, depth sensor, and the X-ray system. We use a 5 × 6 checkerboard where each square has a side length of 12.655 mm. The radiopaque metal checkerboard pattern is attached to the black-and-white pattern that is printed on paper. Therefore, the distance between these two checkerboards is equal to the thickness of a sheet of paper, which is considered negligible. For the purpose of stereo calibration we can then assume all three cameras (RGB, infrared, and X-ray cameras) observe the same pattern. A total of 72 image triplets (RGB, infrared, and X-ray images) were recorded for the stereo calibration. Images with high reprojection errors or significant motion blurring artifacts were discarded from this list for a more accurate stereo calibration.

The stereo calibration between the X-ray source and the RGB camera was eventually performed using 42 image pairs with the overall mean error of 0.86 pixels. The RGB and infrared cameras were calibrated using 59 image pairs, and an overall reprojection error of 0.17 pixels was achieved. The mean stereo reprojection error d̄repro is defined as:

| (10) |

where N is the total number of image pairs, M is the number of checkerboard corners in each frame, {x ↔ x′} are the vectors of detected checkerboard corners among the image pairs, and x̂ and x̂′ are vectors containing the projection of the 3D checkerboard corners in each of the images.

The stereo calibration was repeated twice while performing the stitching experiments. The mean translational and rotational changes in the stereo extrinsic parameters were 1.42 mm and 1.05°, respectively.
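A change between two extrinsic calibrations can be summarized by one translational and one rotational number. The sketch below shows one common way to do this, assuming the translational change is the Euclidean distance between the translation vectors and the rotational change is the angle of the relative rotation; the text does not specify the exact metric that was used, and the function name is hypothetical.

```python
import numpy as np


def extrinsic_change(T_a: np.ndarray, T_b: np.ndarray) -> tuple[float, float]:
    """Translational (input units) and rotational (degrees) change between poses."""
    dt = np.linalg.norm(T_b[:3, 3] - T_a[:3, 3])
    # Relative rotation taking the orientation of T_a to that of T_b.
    R_rel = T_a[:3, :3].T @ T_b[:3, :3]
    # The rotation angle follows from the trace; clip guards against round-off.
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    return float(dt), float(np.degrees(np.arccos(cos_angle)))


if __name__ == "__main__":
    a = np.deg2rad(1.05)
    T_first = np.eye(4)
    T_second = np.eye(4)
    T_second[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                        [np.sin(a), np.cos(a), 0.0],
                        [0.0, 0.0, 1.0]]
    T_second[:3, 3] = [1.42, 0.0, 0.0]
    print(extrinsic_change(T_first, T_second))
```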

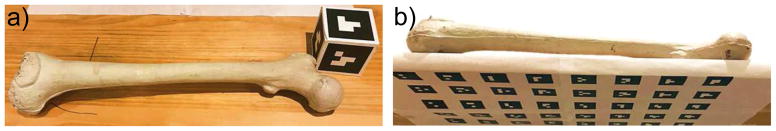

B. Stitching Results

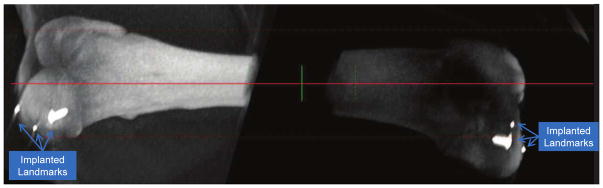

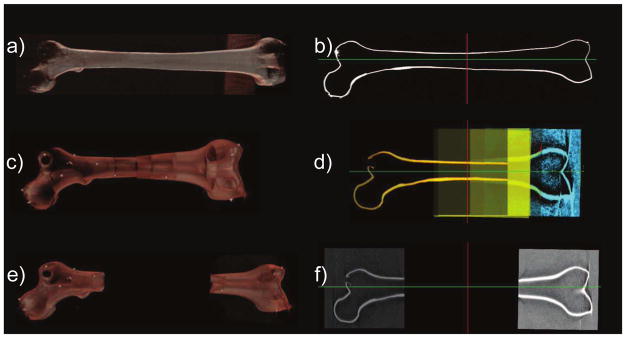

Our vision-based tracking methods were all tested and evaluated on an animal cadaver (pig femur). For these experiments, we performed the stitching of CBCT volumes with each method individually under realistic surgery conditions. The C-arm was translated for the acquisition of multiple CBCT volumes while the detector was in the AP orientation. The geometric relation of the AP view to the C-arm was estimated using an intensity-based 2D/3D registration with a target registration error of 0.29 mm. Subsequently, we measured the absolute distance between the implanted landmarks inside the animal cadaver and compared the results to a ground truth acquired from a CT scan. The CT scan had an isotropic voxel size of 0.5 mm. The outcomes of these experiments were compared to an infrared-based tracking approach (baseline method), as well as an image-based stitching approach. Stitching errors for all proposed methods are reported in Table I. The stitching error is defined as the difference in the distance between landmarks on opposite sides of the bone (femoral head and knee sides). For each pair of BB landmarks, the error is computed as $\left|\,\lVert \mathbf{p}_F^{(S)} - \mathbf{p}_K^{(S)} \rVert - \lVert \mathbf{p}_F^{(G)} - \mathbf{p}_K^{(G)} \rVert\,\right|$, where $\mathbf{p}$ denotes a landmark position, and superscripts (S) and (G) refer to measurements from stitching and the ground-truth data, respectively. The subscripts (F) and (K) refer to landmarks on the femoral head and the knee side of the femur, respectively. The standard deviation is also reported, based on the variation in these stitching errors over all pairs of BB landmarks. Fig. 7 shows the non-overlapping stitching of the CBCT volumes of the pig femur.
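The stitching error described in this paragraph can be sketched as follows. The sketch assumes that landmark positions are given as 3D coordinates in millimeters and that the reported spread is the population standard deviation; the function names and array layout are illustrative and not taken from the original implementation.

```python
import numpy as np


def stitching_errors(f_stitched, k_stitched, f_truth, k_truth):
    """Per-pair stitching error in the unit of the input coordinates.

    Each argument has shape (P, 3): one row per landmark pair, with the
    femoral-head landmark (F) and knee-side landmark (K) measured in the
    stitched volume (S) and in the ground-truth CT (G).
    """
    d_stitched = np.linalg.norm(
        np.asarray(f_stitched, float) - np.asarray(k_stitched, float), axis=-1
    )
    d_truth = np.linalg.norm(
        np.asarray(f_truth, float) - np.asarray(k_truth, float), axis=-1
    )
    # Absolute difference between stitched and ground-truth distances.
    return np.abs(d_stitched - d_truth)


def summarize(errors):
    """Mean and standard deviation (assumed population SD) of the errors."""
    errors = np.asarray(errors, float)
    return float(errors.mean()), float(errors.std())
```

Because only inter-landmark distances enter the metric, the stitched volume and the CT scan do not need to share a coordinate frame.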

TABLE I.

Errors are computed by measuring the average of the absolute distances between 8 radiolucent landmarks implanted into the femur head, greater trochanter, patella, and the condyle. The residual distances are measured between the opposite sides of the femur (hip to knee). Errors in angular measurements for the tibio-femoral (TF) and lateral-distal femoral (LDF) angles are reported in the last two columns. Each method is tested twice on the animal cadaver. The C-arm translation was nearly 210 mm to acquire each non-overlapping CBCT volume. The first four rows present the results using the vision-based methods suggested in this paper. We then present the registration errors using external trackers as well as image-based stitching of overlapping CBCT volumes with the NCC similarity measure. Note that the results of stitching using 2D features (Sec. VB) are not presented in this table, as measurements on a similar animal specimen were not reported in Ref. 24. All errors are measured by comparing the stitching measurements with the measurements from a complete CT of the porcine specimen as ground truth.

| Tracking Method | Stitching Error (mm), mean ± SD | Absolute Distance Error (%), mean | TF Error, mean ± SD | LDF Error, mean ± SD |
|---|---|---|---|---|
| Marker Tracking of Patient (Sec. IV A-a) | 0.33 ± 0.30 | 0.14 | 0.6° ± 0.2° | 0.5° ± 0.3° |
| Marker Tracking of Surgical Bed (Sec. IV A-b) | 0.62 ± 0.21 | 0.26 | 0.7° ± 0.3° | 2.2° ± 0.4° |
| RGBD-SLAM Tracking (Sec. IV B) | 0.91 ± 0.59 | 0.42 | 0.5° ± 0.2° | 0.6° ± 0.4° |
| Surface Data Tracking (Sec. IV C) | 1.72 ± 0.72 | 0.79 | 1.0° ± 0.7° | 3.1° ± 1.9° |
| Infrared Tracking (Sec. VA) | 1.64 ± 0.87 | 0.73 | 0.3° ± 0.1° | 2.4° ± 0.8° |
| Image-based Registration | 9.27 ± 2.11 | 6.52 | 1.2° ± 0.5° | 2.7° ± 1.5° |

FIG. 7.

Parallel projection through two CBCT volumes acquired from an animal cadaver to create a DRR-like visualization.

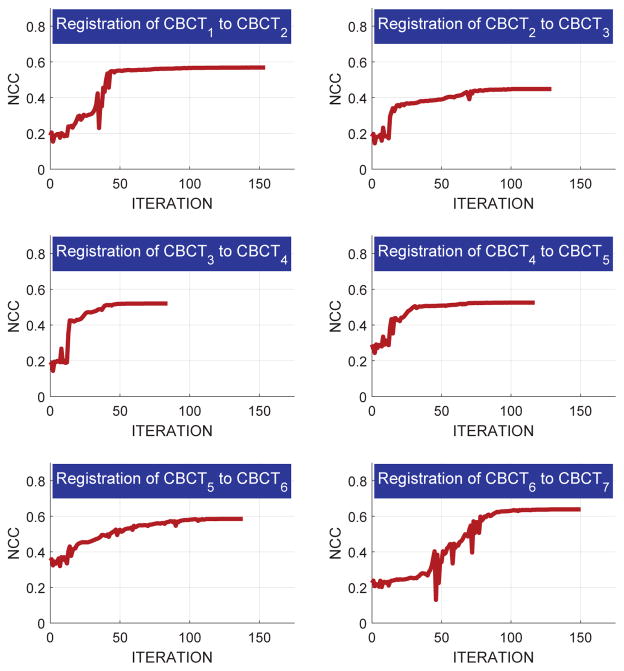

The lowest tracking error of 0.33 ± 0.30 mm is achieved by tracking the cubical visual marker attached to the patient. Marker-less stitching using RGBD-SLAM exhibits submillimeter error (0.91 mm), while tracking using only depth cues results in a higher error of 1.72 mm. The alignment of CBCT volumes using an infrared tracker also has errors larger than a millimeter. The stitching of overlapping CBCT volumes yielded a substantially higher error (9.27 mm) than every other method in Secs. IV and V. Fig. 8 shows the convergence of the NCC registration cost when stitching using image information. The NCC similarity cost between the (k)-th and (k + 1)-th CBCT is defined as:

| (11) |

where Ωk,k+1 is the common spatial domain of the mean normalized volumes CBCT(k) and CBCT(k+1), (R, t) are rotation and translation parameters, and σk and σk+1 are the standard deviations of the CBCT intensities. In this experiment, seven CBCT scans were acquired to image the entire phantom. Consecutive CBCT scans were acquired with 50.0 mm to 60.0 mm of in-plane C-arm translation in between to ensure nearly half-volume overlap (the CBCT volume size along each dimension is 127 mm). The optimization never reached the maximum iteration limit, which was set to 500. Image-based registration was performed on the original volumes, with no filtering or down-sampling of the images.
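As an illustration of the similarity measure, the sketch below evaluates a standard normalized cross-correlation on two intensity arrays. It assumes that both volumes have already been resampled onto their common domain Ωk,k+1 for a candidate (R, t); the resampling step and the optimizer are omitted, and the function name is hypothetical. The normalization follows the textbook NCC definition and may differ in detail from Eq. (11).

```python
import numpy as np


def ncc(overlap_a, overlap_b):
    """Normalized cross-correlation of two equally shaped intensity arrays.

    overlap_a and overlap_b hold the intensities of CBCT(k) and CBCT(k+1)
    sampled on the same grid inside the common domain (an assumption of
    this sketch). The result lies in [-1, 1].
    """
    a = np.asarray(overlap_a, float)
    b = np.asarray(overlap_b, float)

    # Mean-normalize each volume over the overlap region.
    a = a - a.mean()
    b = b - b.mean()

    sigma_a, sigma_b = a.std(), b.std()
    if sigma_a == 0.0 or sigma_b == 0.0:
        # A constant region carries no structure to correlate.
        return 0.0
    return float(np.mean(a * b) / (sigma_a * sigma_b))
```

The guard for constant regions reflects the difficulty noted for homogeneous anatomy: when the overlap contains little intensity variation, the measure provides weak guidance to the optimizer.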

FIG. 8.

Optimization of the NCC similarity cost for registering multiple overlapping CBCT volumes acquired from a femur phantom.

In Table I, we also report the angles between the mechanical and the anatomical axes of the femur (Tibio Femoral Angle), as well as the angle between the mechanical axis and the knee joint line (Lateral-distal Femoral Angle) using the vision-based stitching methods. The results indicate minute variations among different methods.
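A minimal sketch of how such an angle and its error could be computed from landmark coordinates is shown below. It assumes each axis is defined by two 3D points and treats the axes as undirected lines, so the returned angle lies between 0° and 90°; the choice of anatomical landmarks that define each axis is not specified here and is left to the caller.

```python
import numpy as np


def angle_between_axes(p0, p1, q0, q1):
    """Angle in degrees between the line p0-p1 and the line q0-q1."""
    u = np.asarray(p1, float) - np.asarray(p0, float)
    v = np.asarray(q1, float) - np.asarray(q0, float)

    # Lines have no direction, so the sign of the dot product is ignored.
    cos_angle = abs(np.dot(u, v)) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0))))


def angular_error(angle_stitched_deg, angle_truth_deg):
    """Absolute difference between stitched and ground-truth angles."""
    return abs(angle_stitched_deg - angle_truth_deg)
```

For example, `angular_error(angle_between_axes(...), angle_from_ct)` would yield one entry of the TF or LDF error columns, where `angle_from_ct` is a hypothetical ground-truth value measured in the CT scan.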

These methods are also evaluated on a long radiopaque femur phantom. The stitched volumes are shown in Fig. 9, and the stitching errors for each method are reported in Table II.

FIG. 9.

(a–b) are the volume rendering and a single slice from a CT scan of a femur phantom. (c–d) are the corresponding views of the volume using image-based registration. The image-based registration uses seven overlapping CBCT volumes and results in a significantly shorter total length of the bone (results in Table II). This incorrect alignment is due to an insufficient amount of information in the overlapping region, especially for volumes acquired from the shaft of the bone. The shaft of the bone is a homogeneous region where the registration optimizer converges to local optima. (e–f) are the corresponding views of a non-overlapping stitched volume using RGBD-SLAM.

TABLE II.

The errors on a long femur phantom are reported in the same way as the measurements in Table I. The length from the femur neck to the intercondylar fossa of the dry phantom is approximately 369 mm. To measure the distance errors, a total of 12 landmarks are attached to the femur (6 metal beads on each end). Stitching with each method is repeated three times, and all errors are computed by comparing the measurements to the ground-truth measurements in a CT scan of the phantom.

| Tracking Method | Stitching Error (mm), mean ± SD | Absolute Distance Error (%), mean | TF Error, mean ± SD | LDF Error, mean ± SD |
|---|---|---|---|---|
| Marker Tracking of Patient (Sec. IV A-a) | 0.59 ± 0.37 | 0.20 | 0.8° ± 0.3° | 2.9° ± 0.5° |
| Marker Tracking of Surgical Bed (Sec. IV A-b) | 0.66 ± 0.18 | 0.23 | 0.7° ± 0.4° | 2.3° ± 0.8° |
| RGBD-SLAM Tracking (Sec. IV B) | 1.01 ± 0.41 | 0.38 | 0.8° ± 0.6° | 0.9° ± 0.6° |
| Surface Data Tracking (Sec. IV C) | 2.53 ± 1.11 | 0.87 | 1.9° ± 0.9° | 4.1° ± 2.2° |
| Infrared Tracking (Sec. VA) | 1.76 ± 0.99 | 0.61 | 1.1° ± 0.3° | 2.7° ± 0.6° |
| 2D Feature Tracking (Sec. VB)24 | 1.18 ± 0.28 | 0.62 | - | - |
| Image-based Registration | 68.6 ± 22.5 | 23.4 | 3.9° ± 2.0° | 5.2° ± 1.7° |

VII. DISCUSSION AND CONCLUSION

In this work, we presented three vision-based techniques to stitch non-overlapping CBCT volumes intraoperatively. Our system design allowed for tracking of the patient or C-arm movement with minimal increase of workflow complexity and without introduction of external tracking systems. We attached an RGB and depth camera to a mobile C-arm, and deployed computer vision techniques to track changes in C-arm pose and, consequently, stitch the sub-volumes. The proposed methods employ visual marker tracking, RGBD-based SLAM, and surface tracking by fusing depth data to a single global surface model. These approaches estimate the relative CBCT volume displacement based on only RGB, a combination of RGB and depth, or only depth information. As a result, stitching is performed with lower dose, linearly proportional to the size of non-overlapping sub-volumes. We anticipate our methods to be particularly appropriate for intraoperative planning and validation for long bone fractures or joint replacement interventions, where multi-axis alignment and absolute distances are difficult to visualize and measure from the 2D X-ray views.
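The way a tracked camera pose translates into a relative CBCT volume displacement can be sketched as a composition of rigid transforms. The sketch assumes 4 × 4 homogeneous matrices, a camera pose expressed as a camera-to-world transform at each acquisition, and a fixed CBCT-to-camera calibration; these frame conventions and all names are assumptions made for illustration, and the derivations in Sec. IV remain the reference.

```python
import numpy as np


def relative_volume_transform(T_world_cam1, T_world_cam2, T_cam_cbct):
    """Transform mapping points of CBCT volume 2 into the frame of volume 1.

    T_world_cam1, T_world_cam2: camera-to-world poses at the two
        acquisitions (from marker, SLAM, or surface tracking).
    T_cam_cbct: fixed CBCT-to-camera transform from the offline calibration.
    """
    T_world_cam1 = np.asarray(T_world_cam1, float)
    T_world_cam2 = np.asarray(T_world_cam2, float)
    T_cam_cbct = np.asarray(T_cam_cbct, float)

    # Pose of each CBCT volume in the common (world or patient) frame.
    T_world_cbct1 = T_world_cam1 @ T_cam_cbct
    T_world_cbct2 = T_world_cam2 @ T_cam_cbct

    # Chain volume 2 -> world -> volume 1.
    return np.linalg.inv(T_world_cbct1) @ T_world_cbct2
```

Under these assumptions, no image overlap is needed: the relative placement of the two volumes follows from the tracked poses and the one-time calibration alone.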

The RGBD camera is mounted on the C-arm using a rigid construction to ensure that it remains fixed with respect to the image intensifier. Previous studies showed the validity of the one-time calibration of an optical camera on the C-arm.25 However, due to mechanical sagging of the C-arm, the stereo extrinsic parameters between the RGBD device and the X-ray source are subject to small changes when the C-arm rotates to different angles.43

During the re-arrangement of the C-arm and the patient for the next CBCT acquisition, the vision-based tracking results are recorded. For this re-arrangement we consider the clinically realistic scenario of a moving C-arm and a static patient. However, as extensively discussed in Sec. IV, for marker-based methods the movement of the patient relative to the C-arm is recorded; hence, there are no limitations on the allowed motions.

We performed the validation experiments on an animal cadaver, and compared the non-overlapping stitching outcome to an infrared tracking system and image-based registration using overlapping CBCT volumes. In these experiments we used a CT scan of the animal cadaver as the ground-truth data. The visual marker-based tracking achieved the lowest tracking error (0.33 mm) among all methods. The high accuracy is due to the multi-marker strategy, which avoids tracking at shallow angles. The RGBD camera has a larger field of view than the X-ray imaging device. Therefore, the marker can be placed in the overlapping camera views. For example, when imaging the femoral head and the condyle, the visual marker can be placed near the femoral shaft. The marker only needs to remain fixed with respect to the patient while the C-arm is re-positioned and need not be present for the CBCT acquisitions. Therefore, certain clinical constraints, such as changes to the scene, draping, patient movement, or the presence of surgical tools in the scene, are not limiting factors.

Visual marker tracking of the patient (Sec. IV A-a) requires an additional marker to be introduced directly into the surgical scene. Doing so is beneficial, as C-arm displacements are tracked with respect to the patient, suggesting that patient movements on the surgical bed will be accurately reflected in the stitching outcome. Yet, this approach increases setup complexity, as sterility and appropriate placement of the marker must be ensured. On the other hand, tracking of the surgical table with an array of visual markers under the bed (Sec. IV A-b) does not account for patient movement on the bed. However, it has the benefit of not requiring any additional markers attached to the patient. Furthermore, since the array of markers is larger than the cube marker, the tracking accuracy will not decrease significantly for larger displacements of the C-arm. This consistent tracking quality using the array of markers is seen when comparing the stitching errors in Tables I and II, where the error only increases from 0.62 mm to 0.66 mm. The standard deviation for this tracking method is also the lowest among all tracking approaches shown in the tables.

Stitching based on tracking with RGB and depth information together has an error of 0.91 mm, and tracking based solely on depth information has an error of 1.72 mm. In a clinically realistic scenario, the surgical site comprises drapes, blood, exposed anatomy, and surgical tools, which allow the extraction of a large number of useful color features from a color image. The authors believe that a marker-less RGBD-SLAM stitching system can use the aforementioned color information, as well as the depth information from the co-calibrated depth camera, to provide reliable CBCT image stitching for orthopedic interventions.

The angular errors in Tables I and II indicate a larger range of errors for LDF angles than for TF angles. TF angular error is most affected by the translational component along the shaft of the bone. Lower TF errors therefore indicate lower errors in leg length. On the other hand, LDF angular errors correlate with in-plane malalignment.

The use of external infrared tracking systems to observe the displacement of patients is widely accepted in clinical practice, but such systems are usually not deployed to automatically align and stitch multiple CBCT volumes. Major disadvantages of external tracking systems are the introduction of additional hardware to the operating room and the accumulation of tracking errors when tracking both the patient and the C-arm.

A prior method for stitching of CBCT volumes uses an RGB camera attached near the X-ray source.24 In this method, all image features are approximated to be at the same depth from the camera base. Hence, only a very limited number of features close to the laser line are used for tracking. This contributes to poor tracking when the C-arm is rotated as well as translated.

The stitching errors of the vision-based methods are also compared to image-based stitching of overlapping CBCT volumes in Tables I and II. The image-based approach yielded high errors for both the animal cadaver (9.27 mm) and the dry bone phantom (68.6 mm) because of insufficient and homogeneous information in the overlapping region. The errors are lower when registering the porcine specimen due to the shorter length of the bone and the presence of soft tissue in the overlapping region.

In Fig. 8 we showed the cost for registering multiple overlapping CBCT volumes. The NCC similarity measure reached higher values (0.6 ± 0.04) when registering CBCT volumes acquired from the two ends of the bone, which had more dominant structures, and yielded lower similarity scores at the shaft of the phantom. The results of image-based registration in Tables I and II and Fig. 9 show high stitching errors when using only an image-based solution, as the registration converged to local optima at the shaft of the bone.

We also avoided stitching of projection images because of the potential parallax effect, which causes incorrect stitching; as a result, the lengths and angles between the anatomical landmarks would not be preserved in the stitched volume.
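The parallax effect can be illustrated with a simple pinhole projection model. In the sketch below, the source-to-detector distance, the landmark depths, and the source shift are hypothetical numbers chosen only for illustration; the point is that the image shift caused by translating the source depends on the depth of the structure.

```python
def projected_shift(source_detector_distance, lateral_shift, depth):
    """Image-plane shift of a point at a given depth from the X-ray source.

    Pinhole model: a point at lateral offset x and depth z projects to
    u = source_detector_distance * x / z, so moving the source laterally
    by lateral_shift moves the projection by the value returned here.
    All quantities share one length unit.
    """
    return source_detector_distance * lateral_shift / depth


# Hypothetical geometry: two landmarks at different depths, same source shift.
shift_near = projected_shift(1000.0, 100.0, 500.0)  # landmark close to the source
shift_far = projected_shift(1000.0, 100.0, 800.0)   # landmark close to the detector
parallax = shift_near - shift_far  # nonzero: no single 2D offset aligns both
```

Since structures at different depths shift by different amounts, a single 2D translation of one projection image cannot align all anatomy with the next image, which is why distances measured across stitched projections are unreliable.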

The benefits of using cameras with a C-arm for radiation and patient safety, scene observation, and augmented reality have been emphasized in the past. This work presents a 3D/3D intraoperative image stitching technique using a similar opto-X-ray system. Our approach does not limit the working space, and only requires minimal additional hardware, namely the RGBD camera near the C-arm detector. The C-arm remains mobile, self-contained, and independent of the operating room. Further studies are underway to evaluate the effectiveness of CBCT stitching for interlocking and hip arthroplasty procedures on cadaver specimens. Finally, we plan to integrate the RGBD sensor into the gantry of the C-arm to avoid accidental misalignments.25

Considerations for Clinical Deployment

The success of clinical translation for each of the proposed vision-based stitching solutions depends on the requirements of the surgery. While our visual marker-based approach yielded very low stitching errors, it increased the setup complexity by requiring external markers to be fixed to the patient during C-arm re-arrangement. Conversely, RGBD-SLAM tracking allowed for increased flexibility, as no external markers are required. However, this flexibility came at the cost of slightly higher stitching errors. Yet, in angular measurements RGBD-SLAM outperformed the marker-based tracking and yielded smaller errors. The stitching errors for both marker-based and marker-less methods were well below 1.00 cm, which is considered "well tolerated" for leg length discrepancy in the orthopedic literature.44 An angular error greater than 5° in any plane is considered malrotation in the orthopedic literature.10 All methods proposed in this manuscript exhibited angular errors below this threshold.
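The two tolerances quoted above can be written as a simple acceptance check. The sketch below compares mean errors only and ignores the reported standard deviations, which is a simplifying assumption; the function and constant names are illustrative, and the example values are the means from Tables I and II.

```python
LEG_LENGTH_TOLERANCE_MM = 10.0  # 1.00 cm leg length discrepancy (Ref. 44)
ANGULAR_TOLERANCE_DEG = 5.0     # malrotation threshold (Ref. 10)


def within_tolerance(stitching_error_mm, angular_errors_deg):
    """True if the mean stitching and angular errors are below both limits."""
    length_ok = stitching_error_mm < LEG_LENGTH_TOLERANCE_MM
    angles_ok = all(a <= ANGULAR_TOLERANCE_DEG for a in angular_errors_deg)
    return length_ok and angles_ok


# Mean values for the animal cadaver (Table I): stitching, (TF, LDF).
marker_patient = within_tolerance(0.33, (0.6, 0.5))
rgbd_slam = within_tolerance(0.91, (0.5, 0.6))
# Mean values for image-based registration on the phantom (Table II).
image_based_phantom = within_tolerance(68.6, (3.9, 5.2))
```

Under this check, the marker-based and RGBD-SLAM results pass, whereas image-based registration on the phantom fails both the length and the angular criterion.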

Acknowledgments

The authors want to thank Wolfgang Wein and his team from ImFusion GmbH for the opportunity of using ImFusion Suite, and Gerhard Kleinzig and Sebastian Vogt from SIEMENS for their support and making an ARCADIS Orbic 3D available for this research. Research in this publication was supported by NIH under Award Number R01EB0223939, Graduate Student Fellowship from Johns Hopkins Applied Physics Laboratory, and Johns Hopkins University internal funding sources.

Footnotes

Conflict of interest: The authors have no conflicts to disclose.

Contributor Information

Javad Fotouhi, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA.

Bernhard Fuerst, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA.

Mathias Unberath, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA.

Stefan Reichenstein, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA.

Sing Chun Lee, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA.

Alex A. Johnson, Department of Orthopaedic Surgery, Johns Hopkins Hospital, Baltimore, USA

Greg M. Osgood, Department of Orthopaedic Surgery, Johns Hopkins Hospital, Baltimore, USA

Mehran Armand, Department of Mechanical Engineering, Johns Hopkins University, Baltimore, USA and Johns Hopkins University Applied Physics Laboratory, Laurel, USA.

Nassir Navab, Computer Aided Medical Procedures, Johns Hopkins University, Baltimore, USA and Computer Aided Medical Procedures, Technical University of Munich, Munich, Germany.

References

- 1. Carelsen B, Haverlag R, Ubbink DTh, Luitse JSK, Goslings JC. Does intraoperative fluoroscopic 3d imaging provide extra information for fracture surgery? Archives of Orthopaedic and Trauma Surgery. 2008;128:1419–1424. doi: 10.1007/s00402-008-0740-5.

- 2. Daly MJ, Siewerdsen JH, Moseley DJ, Jaffray DA, Irish JC. Intraoperative cone-beam ct for guidance of head and neck surgery: assessment of dose and image quality using a c-arm prototype. Medical physics. 2006;33:3767–3780. doi: 10.1118/1.2349687.

- 3. Mirota Daniel J, Uneri Ali, Schafer Sebastian, et al. SPIE Medical Imaging. International Society for Optics and Photonics; 2011. High-accuracy 3d image-based registration of endoscopic video to c-arm cone-beam ct for image-guided skull base surgery; pp. 79640J–79640J.

- 4. Schafer S, Nithiananthan S, Mirota DJ, et al. Mobile c-arm cone-beam ct for guidance of spine surgery: image quality, radiation dose, and integration with interventional guidance. Medical physics. 2011;38:4563–4574. doi: 10.1118/1.3597566.

- 5. Fischer Marius, Fuerst Bernhard, Lee Sing Chun, et al. Preclinical usability study of multiple augmented reality concepts for k-wire placement. International Journal of Computer Assisted Radiology and Surgery. 2016:1–8. doi: 10.1007/s11548-016-1363-x.

- 6. Fotouhi Javad, Fuerst Bernhard, Lee Sing Chun, et al. Interventional 3d augmented reality for orthopedic and trauma surgery. 16th Annual Meeting of the International Society for Computer Assisted Orthopedic Surgery (CAOS); 2016.

- 7. Unberath Mathias, Aichert André, Achenbach Stephan, Maier Andreas. Consistency-based respiratory motion estimation in rotational angiography. Medical Physics. 2017;44 doi: 10.1002/mp.12021.

- 8. Pauwels Ruben, Araki Kazuyuki, Siewerdsen JH, Thongvigitmanee Saowapak S. Technical aspects of dental CBCT: state of the art. Dentomaxillofacial Radiology. 2014;44 doi: 10.1259/dmfr.20140224.

- 9. Chang Jenghwa, Zhou Lili, Wang Song, Clifford Chao KS. Panoramic cone beam computed tomography. Medical Physics. 2012;39:2930–2946. doi: 10.1118/1.4704640.

- 10. Ricci William M, Bellabarba Carlo, Lewis Robert, et al. Angular malalignment after intramedullary nailing of femoral shaft fractures. Journal of orthopaedic trauma. 2001;15:90–95. doi: 10.1097/00005131-200102000-00003.

- 11. Jaarsma RL, Pakvis DFM, Verdonschot N, Biert J, Van Kampen A. Rotational malalignment after intramedullary nailing of femoral fractures. Journal of orthopaedic trauma. 2004;18:403–409. doi: 10.1097/00005131-200408000-00002.

- 12. Kraus Michael, von dem Berge Stephanie, Schoell Hendrik, Krischak Gert, Gebhard Florian. Integration of fluoroscopy-based guidance in orthopaedic trauma surgery a prospective cohort study. Injury. 2013;44:1486–1492. doi: 10.1016/j.injury.2013.02.008.

- 13. Messmer Peter, Matthews Felix, Wullschleger Christoph, Hügli Rolf, Regazzoni Pietro, Jacob Augustinus L. Image fusion for intraoperative control of axis in long bone fracture treatment. European Journal of Trauma. 2006;32:555–561.

- 14. Chen Cheng, Kojcev Risto, Haschtmann Daniel, Fekete Tamas, Nolte Lutz, Zheng Guoyan. Ruler Based Automatic C-Arm Image Stitching Without Overlapping Constraint. Journal of Digital Imaging. 2015:1–7. doi: 10.1007/s10278-014-9763-3.

- 15. Wang Lejing, Traub Joerg, Weidert Simon, Heining Sandro Michael, Euler Ekkehard, Navab Nassir. Parallax-free intra-operative X-ray image stitching. Medical Image Analysis. 2010;14:674–686. doi: 10.1016/j.media.2010.05.007.

- 16. Hankemeier S, Hufner T, Wang G, et al. Navigated open-wedge high tibial osteotomy: advantages and disadvantages compared to the conventional technique in a cadaver study. Knee Surgery, Sports Traumatology, Arthroscopy. 2006;14:917–921. doi: 10.1007/s00167-006-0035-8.

- 17. Siewerdsen Jeffrey H. Cone-beam ct with a flat-panel detector: from image science to image-guided surgery. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment. 2011;648:S241–S250. doi: 10.1016/j.nima.2010.11.088.

- 18. Dang H, Otake Y, Schafer S, Stayman JW, Kleinszig G, Siewerdsen JH. Robust methods for automatic image-to-world registration in cone-beam ct interventional guidance. Medical physics. 2012;39:6484–6498. doi: 10.1118/1.4754589.

- 19. Wilkening Paul, Alambeigi Farshid, Murphy Ryan J, Taylor Russell H, Armand Mehran. Development and experimental evaluation of concurrent control of a robotic arm and continuum manipulator for osteolytic lesion treatment. IEEE Robotics and Automation Letters. 2017;2:1625–1631. doi: 10.1109/lra.2017.2678543.

- 20. Alambeigi Farshid, Wang Yu, Sefati Shahriar, Gao Cong, Murphy Ryan J, Iordachita Iulian, Taylor Russel H, Khanuja Harpal, Armand Mehran. A curved-drilling approach in core decompression of the femoral head osteonecrosis using a continuum manipulator. IEEE Robotics and Automation Letters. 2017;2:1480–1487.

- 21. Emmenlauer Mario, Ronneberger Olaf, Ponti Aaron, et al. XuvTools: free, fast and reliable stitching of large 3D datasets. Journal of microscopy. 2009;233:42–60. doi: 10.1111/j.1365-2818.2008.03094.x.

- 22. Lamecker H, Wenckebach TH, Hege H-C. Atlas-based 3D-Shape Reconstruction from X-Ray Images. Pattern Recognition, 2006. ICPR 2006. 18th International Conference on; 2006. pp. 371–374.

- 23. Yigitsoy Mehmet, Fotouhi Javad, Navab Nassir. Hough space parametrization: Ensuring global consistency in intensity-based registration. International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer; 2014. pp. 275–282.

- 24. Fuerst Bernhard, Fotouhi Javad, Navab Nassir. Vision-based intraoperative cone-beam ct stitching for non-overlapping volumes. International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer; 2015. pp. 387–395.

- 25. Navab N, Heining SM, Traub J. Camera Augmented Mobile C-Arm (CAMC): Calibration, Accuracy Study, and Clinical Applications. Medical Imaging, IEEE Transactions on. 2010;29:1412–1423. doi: 10.1109/TMI.2009.2021947.

- 26. Lee Sing Chun, Fuerst Bernhard, Fotouhi Javad, Fischer Marius, Osgood Greg, Navab Nassir. Calibration of rgbd camera and cone-beam ct for 3d intra-operative mixed reality visualization. International journal of computer assisted radiology and surgery. 2016;11:967–975. doi: 10.1007/s11548-016-1396-1.

- 27. Fotouhi Javad, Fuerst Bernhard, Johnson Alex, et al. Pose-aware c-arm for automatic re-initialization of interventional 2d/3d image registration. International Journal of Computer Assisted Radiology and Surgery. 2017:1–10. doi: 10.1007/s11548-017-1611-8.

- 28. Fotouhi Javad, Fuerst Bernhard, Wein Wolfgang, Navab Nassir. Can real-time rgbd enhance intraoperative cone-beam ct? International Journal of Computer Assisted Radiology and Surgery. 2017:1–9. doi: 10.1007/s11548-017-1572-y.

- 29. Oehler M, Buzug TM, et al. Statistical image reconstruction for inconsistent ct projection data. Methods of information in medicine. 2007;46:261–269. doi: 10.1160/ME9041.

- 30. Geiger Andreas, Moosmann Frank, Car Ömer, Schuster Bernhard. Automatic camera and range sensor calibration using a single shot. Robotics and Automation (ICRA), 2012 IEEE International Conference on; IEEE; 2012. pp. 3936–3943.

- 31. Zhang Zhengyou. A flexible new technique for camera calibration. IEEE Transactions on pattern analysis and machine intelligence. 2000;22:1330–1334.

- 32. Marquardt Donald W. An algorithm for least-squares estimation of nonlinear parameters. Journal of the society for Industrial and Applied Mathematics. 1963;11:431–441.

- 33. Svoboda Tomáš, Martinec Daniel, Pajdla Tomáš. A convenient multi-camera self-calibration for virtual environments. PRESENCE: Teleoperators and Virtual Environments. 2005;14:407–422.

- 34. Lewis JP. Fast normalized cross-correlation. Vision interface. 1995;10:120–123.

- 35. Powell Michael JD. The bobyqa algorithm for bound constrained optimization without derivatives. University of Cambridge; Cambridge: 2009. Cambridge NA Report NA2009/06.

- 36. Kato Hirokazu, Billinghurst Mark. Marker tracking and hmd calibration for a video-based augmented reality conferencing system. Augmented Reality, 1999.(IWAR’99) Proceedings. 2nd IEEE and ACM International Workshop on; IEEE; 1999. pp. 85–94.

- 37. Smith Randall, Self Matthew, Cheeseman Peter. Autonomous robot vehicles. Springer; 1990. Estimating uncertain spatial relationships in robotics; pp. 167–193.

- 38. Endres Felix, Hess Jürgen, Engelhard Nikolas, Sturm Jürgen, Cremers Daniel, Burgard Wolfram. An evaluation of the rgb-d slam system. Robotics and Automation (ICRA), 2012 IEEE International Conference on; IEEE; 2012. pp. 1691–1696.

- 39. Fischler Martin A, Bolles Robert C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM. 1981;24:381–395.

- 40. Newcombe Richard A, Izadi Shahram, Hilliges Otmar, et al. Kinectfusion: Real-time dense surface mapping and tracking. Mixed and augmented reality (ISMAR), 2011 10th IEEE international symposium on; IEEE; 2011. pp. 127–136.

- 41. Yaniv Ziv. Localizing spherical fiducials in c-arm based cone-beam ct. Medical physics. 2009;36:4957–4966. doi: 10.1118/1.3233684.

- 42. Somani Arun K, Huang Thomas S, Blostein Steven D. Least-squares fitting of two 3-D point sets. PAMI, IEEE Transactions on. 1987:698–700. doi: 10.1109/tpami.1987.4767965.

- 43. Fotouhi Javad, Alexander Clayton P, Unberath Mathias, Taylor Giacomo, Lee Sing Chun, Fuerst Bernhard, Johnson Alex, Osgood Greg M, Taylor Russell H, Khanuja Harpal, et al. Plan in 2-d, execute in 3-d: an augmented reality solution for cup placement in total hip arthroplasty. Journal of Medical Imaging. 2018;5:021205. doi: 10.1117/1.JMI.5.2.021205.

- 44. Maloney William J, Keeney James A. Leg length discrepancy after total hip arthroplasty. The Journal of arthroplasty. 2004;19:108–110. doi: 10.1016/j.arth.2004.02.018.