Abstract

Importance

To support treatment assignment, mechanistic biomarkers should be selectively sensitive to specific interventions. Here, we examine whether different components of reinforcement learning in humans satisfy this necessary precondition. We focus on pharmacological manipulations of dopamine and serotonin that form the backbone of first-line management of common mental illnesses such as depression and anxiety.

Objective

To perform a meta-analysis of pharmacological manipulations of dopamine and serotonin and examine whether they show distinct associations with reinforcement learning components in humans.

Data Sources

Ovid MEDLINE/PubMed, Embase, and PsycInfo databases were searched for studies published between January 1, 1946 and January 19, 2023 (repeated April 9, 2024, and October 15, 2024) investigating dopaminergic or serotonergic effects on reward/punishment processes in humans, according to PRISMA guidelines.

Study Selection

Studies reporting randomized, placebo-controlled, dopaminergic or serotonergic manipulations on a behavioral outcome from a reward/punishment processing task in healthy humans were included.

Data Extraction and Synthesis

Standardized mean difference (SMD) scores were calculated for the comparison between each drug (dopamine/serotonin) and placebo on a behavioral reward or punishment outcome and quantified in random-effects models for overall reward/punishment processes and four main subcategories. Study quality (Cochrane Collaboration’s tool), moderators, heterogeneity, and publication bias were also assessed.

Main Outcome(s) and Measure(s)

Performance on reward/punishment processing tasks.

Results

In total, 68 dopamine and 39 serotonin studies in healthy volunteers were included (Ndopamine=2291, Nplacebo=2284; Nserotonin=1491, Nplacebo=1523). Dopamine was associated with an increase in overall reward (SMD=0.18, 95%CI [0.09,0.28]) but not punishment function (SMD=-0.06, 95%CI [-0.26,0.13]). Serotonin was not meaningfully associated with overall punishment (SMD=0.22, 95%CI [-0.04,0.49]) or reward (SMD=0.02, 95%CI [-0.33,0.36]). Importantly, dopaminergic and serotonergic manipulations had distinct associations with subcomponents. Dopamine was associated with reward learning/sensitivity (SMD=0.26, 95%CI [0.11,0.40]), reward discounting (SMD=-0.08, 95%CI [-0.14,-0.01]) and reward vigor (SMD=0.32, 95%CI [0.11,0.54]). By contrast, serotonin was associated with punishment learning/sensitivity (SMD=0.32, 95%CI [0.05,0.59]), reward discounting (SMD=-0.35, 95%CI [-0.67,-0.02]), and aversive Pavlovian processes (within-subject studies only; SMD=0.36, 95%CI [0.20,0.53]).

Conclusions and Relevance

Pharmacological manipulations of both dopamine and serotonin have measurable associations with reinforcement learning in humans. The selective associations with different components suggest that reinforcement learning tasks could form the basis of selective, mechanistically interpretable biomarkers to support treatment assignment.

Introduction

Dopamine and serotonin are two critical neuromodulators in the brain, both playing central roles in clinical and basic neuroscience. Clinically, they are the targets of different antidepressants, which are equally effective in treating mood disorders1. Yet, basic neuroscience suggests they serve distinct functions in behavior, particularly in reinforcement learning (RL). If dopamine and serotonin indeed have distinct effects in RL, as current theories predict, this could help identify new mechanisms to guide much-needed optimized treatments. Conversely, if they prove indistinguishable in humans, prevailing neuroscientific models would require substantial revision. Resolving this question in humans is therefore critical.

RL describes how rewards and losses guide behavior and is strongly related to depression. It is impaired in individuals who are currently depressed2,3, and has substantial face validity as a core mechanism leading to or maintaining depression4. RL is also intricately linked to the neuromodulator systems dopamine and serotonin as the brain appears to use these to broadcast key RL signals5,6.

However, a key challenge here lies in reconciling two seemingly contradictory bodies of research. On one hand, antidepressants targeting dopamine or serotonin appear similarly effective in treating depression1. On the other hand, substantial neuroscience research indicates that dopamine and serotonin play distinct roles in RL5–8. One possible resolution is that different antidepressants engage different components of RL. Such selectivity could potentially explain why individuals who do not respond to serotonergic medications may respond to more dopaminergic medications. Indeed, dopaminergic antidepressants are second-line options when first-line serotonergic treatments fail9,10. As a necessary precondition for this, manipulating different neuromodulators must demonstrably affect distinct RL processes in humans, a question that remains unresolved. If validated, RL-based measures could serve as markers to guide treatment choices and inform the development of novel therapies. Thus, adopting an RL framework to clarify the cognitive roles of dopamine and serotonin represents a critical first step toward identifying candidate mechanisms driving the therapeutic effects of these antidepressants.

RL is, however, not a single process but a collection of mechanisms that are hypothesized to involve dopamine and serotonin in distinct ways11. Basic neuroscience highlights that dopamine is a key modulator of appetitive processes, particularly reward learning, reward discounting and reward response vigor6,11–13. Serotonin, while more complex, is often linked to aversive processes such as punishment learning, or inhibition in the face of punishments8,14. However, human studies on these neuromodulators in RL have yielded mixed and sometimes conflicting results15,16. These inconsistencies hinder our understanding of dopamine and serotonin in human behavior and the development of optimized treatments for mood and anxiety disorders. Therefore, we performed a meta-analysis of the effects of pharmacological manipulations of dopamine and serotonin on RL. Given the limited number of studies, we increased power by a) considering pharmacological manipulations broadly, not only antidepressants; and b) focusing on healthy participants, as patient studies are few. Our first aim was to ask whether dopamine and serotonin alter overall reward and punishment processing. Our second aim was to identify whether they affect specific RL subcomponents. Based on theoretical frameworks and a systematic review of the literature, we identified and focused the meta-analysis on the following subcomponents of RL.

Reward and punishment learning

A key component of RL involves trial-and-error learning from rewards and punishments via prediction errors (the difference between expected and actual outcomes)6. Dopamine has been extensively implicated in signaling reward prediction errors6,17. Some theories also postulate that dopamine dips may drive punishment learning18, while computational models suggest this is under serotonin’s control14,19. Recent animal studies have, however, also implicated serotonin in reward learning, with serotonin either responding to reward-predicting cues or, conversely, suppressing reward learning5,20. To examine these processes in humans, studies mostly employ probabilistic instrumental learning tasks.

Pavlovian bias

Choices can be driven by reflexive Pavlovian biases, where appetitive biases prompt approach toward rewards and aversive biases trigger inhibition in response to punishment, even when suboptimal21. Influential models propose that serotonin promotes aversive Pavlovian biases, i.e., inhibition in aversive contexts8,22–24. Human studies often use go/no-go tasks where these biases interfere with optimal actions or Pavlovian-instrumental transfer tasks21,25.

Reward discounting

Decision-making also requires balancing rewards against costs. Current theories predict that dopamine signals benefits over costs, while serotonin may promote willingness to wait or exert effort for larger rewards by modulating perceived costs. Both mechanisms thus contribute to reduced reward discounting26–30. These processes are studied using explicit-choice tasks that contrast small rewards that are immediate or require little effort with larger rewards that are delayed or require more effort.

Reward response vigor

Motivation involves deciding how vigorously actions need to be executed to obtain rewards. Dopamine is theorized to signal average reward rates, enhancing response vigor31,32. The role of serotonin is less defined, though an early computational theory suggests it may also modulate long-term reward rates14. Tasks in this domain assess reaction times or effort exertion relative to reward magnitude or average reward rate.

Overall, this meta-analysis aims to provide a clearer understanding of how dopamine and serotonin shape RL in humans, contributing to both theoretical and clinical advances.

Methods

Systematic review

We conducted a preregistered systematic review and meta-analysis (PROSPERO:CRD42022363747) according to PRISMA guidelines33 (eAppendix in the Supplement). The Ovid MEDLINE/PubMed, Embase, and PsycInfo databases were searched for articles published between January 1, 1946, and January 21, 2023. The search was repeated on April 9, 2024 and October 15, 2024 for additional articles published since 2023. Titles and abstracts were searched for the terms (dopamine* or seroton* or tryptophan* or tyrosine*) and (reward* or motivat* or punish* or reinforce* or decision* or effort* or learn* or incentive* or volition or choice*) and (participa* or subject* or individ* or human* or healthy* or investigat* or experiment*), and the search was restricted to articles in English and in humans. The search strategy is described in detail in eTable1. In addition to database searches, one study was identified through a relevant review article, and one was suggested by an expert in the field.

We included studies that 1) manipulated either dopamine or serotonin, 2) tested healthy human volunteers (18-65 years old), 3) used a randomized, placebo-controlled design, and 4) reported a behavioral outcome from a reward and/or punishment processing task. We only included samples that 5) did not overlap with other included datasets and 6) contained sufficient information to calculate standardized mean difference (SMD) scores between placebo and drug conditions (see Supplement).
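To make criterion 6 concrete, the following is a minimal sketch of how a between-subject SMD and its sampling variance can be computed from group means, standard deviations, and sample sizes. It assumes the common Hedges' g form with a small-sample correction; the exact procedures used in this meta-analysis, including the within-subject conversions, are described in the Supplement and may differ. The function name `hedges_g` is illustrative.

```python
import math


def hedges_g(mean_drug, sd_drug, n_drug, mean_pla, sd_pla, n_pla):
    """Return (g, variance) for a between-subject drug-vs-placebo comparison.

    Assumption: Hedges' g with the usual small-sample correction. This is an
    illustrative choice, not necessarily the formula used in the paper.
    """
    df = n_drug + n_pla - 2
    # Pooled standard deviation across the two groups.
    pooled_sd = math.sqrt(
        ((n_drug - 1) * sd_drug ** 2 + (n_pla - 1) * sd_pla ** 2) / df
    )
    # Cohen's d: positive values mean higher scores under drug than placebo.
    d = (mean_drug - mean_pla) / pooled_sd
    # Small-sample correction factor.
    j = 1 - 3 / (4 * df - 1)
    g = j * d
    # Large-sample approximation to the variance of d, scaled by j squared.
    var_d = (n_drug + n_pla) / (n_drug * n_pla) + d ** 2 / (2 * (n_drug + n_pla))
    return g, j ** 2 * var_d


if __name__ == "__main__":
    g, var_g = hedges_g(1.0, 1.0, 20, 0.5, 1.0, 20)
    half_width = 1.96 * math.sqrt(var_g)
    print(f"g={g:.3f}, 95% CI [{g - half_width:.3f}, {g + half_width:.3f}]")
```

A study that reports only a test statistic rather than group means and standard deviations would need a different conversion, which is why the inclusion criterion is phrased in terms of sufficient information.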

Meta-analysis

Effect sizes were calculated for behavioral outcomes relating to reward and punishment learning/sensitivity, aversive and appetitive Pavlovian bias, reward vigor, and reward discounting. To evaluate overall reward and punishment processes, we consolidated the components into two main categories. The overall reward category combined reward learning/sensitivity, appetitive Pavlovian bias, reward vigor, and reward discounting, with the discounting direction reversed so that a positive SMD represents increased reward weighting. The overall punishment category combined punishment learning/sensitivity and aversive Pavlovian bias. We also identified risk attitude and model-based learning/flexibility as two additional RL subcomponents. However, since these are not straightforward to combine with the other subcomponents, we considered them separately (see Supplement).

Pharmacologically, we pooled data from both agonists and antagonists, assuming symmetrical effects for drugs that upregulate or downregulate a neuromodulator. We recoded antagonist effects as if they were agonist effects. For example, if a study reported that a dopamine antagonist reduced reward vigor, we recoded this effect to represent an agonist increasing reward vigor. Some dopamine studies used single low doses of agonists/antagonists intended to specifically target presynaptic autoreceptors. These effects were interpreted contrary to their typical activity profile (e.g., a low-dose agonist was coded as an antagonist), as suggested by the literature (see Supplement). Thus, for a low-dose dopamine agonist that, for example, reduced reward learning, we first classified it as an antagonist reducing reward learning, and then recoded it as an agonist increasing reward learning, maintaining a consistent directionality across studies. To assess the impact of this interpretation, we also conducted sensitivity analyses using the original drug activity profiles.
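The recoding rule described above can be sketched in a few lines. This is an illustration of the stated logic only; the function and argument names are hypothetical and are not taken from the authors' analysis code.

```python
def effective_action(nominal_action, low_dose_presynaptic=False):
    """Return the drug action ('agonist' or 'antagonist') used for coding.

    A single low dose assumed to target presynaptic autoreceptors is
    interpreted contrary to the drug's nominal activity profile.
    """
    if nominal_action not in ("agonist", "antagonist"):
        raise ValueError("nominal_action must be 'agonist' or 'antagonist'")
    if low_dose_presynaptic:
        return "antagonist" if nominal_action == "agonist" else "agonist"
    return nominal_action


def recode_to_upregulation(smd, nominal_action, low_dose_presynaptic=False):
    """Express a drug-vs-placebo SMD as the effect of upregulating the system.

    Assumes symmetrical effects: an antagonist effect is sign-flipped so that
    it reads as the corresponding agonist effect.
    """
    action = effective_action(nominal_action, low_dose_presynaptic)
    return smd if action == "agonist" else -smd


if __name__ == "__main__":
    # A dopamine antagonist that reduced reward vigor (SMD = -0.2) is recoded
    # as an agonist increasing reward vigor.
    print(recode_to_upregulation(-0.2, "antagonist"))
    # A low-dose agonist that reduced reward learning is first treated as an
    # antagonist and then flipped to an agonist increasing reward learning.
    print(recode_to_upregulation(-0.3, "agonist", low_dose_presynaptic=True))
```

In this sketch, the sensitivity analysis based on the original drug activity profiles corresponds to calling `recode_to_upregulation` with `low_dose_presynaptic=False` for every study.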

Both within- and between-subject designs were included. The detailed procedures for calculating between- and within-subject SMDs, including the conversion procedures, are described in the Supplement, with comparisons of different effect size conversions for within-subject studies depicted in eFigure1. Random-effects models were fitted in R (version 4.3.3) using the metafor34 package to examine the association of upregulating dopamine and serotonin, relative to placebo, with overall reward and punishment processes and their subcomponents. Heterogeneity, publication bias, moderators and study quality were also assessed (see Supplement).
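For readers unfamiliar with random-effects pooling, the sketch below shows the general idea in plain Python. It uses the DerSimonian-Laird estimator of between-study variance for brevity; this is an assumption made for illustration, as this section does not state which estimator was used (metafor's default is restricted maximum likelihood). All names are hypothetical.

```python
import math


def random_effects_dl(effects, variances):
    """Pool study SMDs with a DerSimonian-Laird random-effects model.

    Illustrative only: the paper's models were fitted with metafor in R, and
    the estimator used there may differ from the one shown here.
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q and the DerSimonian-Laird between-study variance tau^2.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to each study's sampling variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    # I^2: percentage of total variability attributed to heterogeneity.
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    return {
        "smd": pooled,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "tau2": tau2,
        "q": q,
        "i2": i2,
    }


if __name__ == "__main__":
    # Hypothetical study-level SMDs and variances, not data from the paper.
    result = random_effects_dl([0.10, 0.35, 0.25, 0.05], [0.04, 0.06, 0.03, 0.05])
    print(result)
```

The returned `i2` value is the kind of heterogeneity summary referred to in the Results, where low-to-moderate and substantial heterogeneity are distinguished.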

Results

Data from 107 studies were included (Ndopamine=2291, Nplacebo=2284; Nserotonin=1491, Nplacebo=1523; Figure 1; eTable2). Risk of bias was generally high for both randomization and allocation concealment, but low for blinding of outcome assessment (eFigure2).

Figure 1. Flow Diagram of Study Selection and Inclusion.

Note that the full text of 313 studies was assessed for eligibility as 9 studies reported data both for dopamine and serotonin conditions. Abbreviations: DA: dopamine; 5-HT: serotonin.

Meta-analysis results

Dopamine

Overall, dopamine had a small positive association with reward processes (SMD=0.18, 95%CI [0.09, 0.28]) but a negligible negative association with overall punishment processes (SMD=-0.06, 95%CI [-0.26, 0.13]; eFigure3). Analyses according to the original drug activity profile changed inference for the overall reward process (eFigure4).

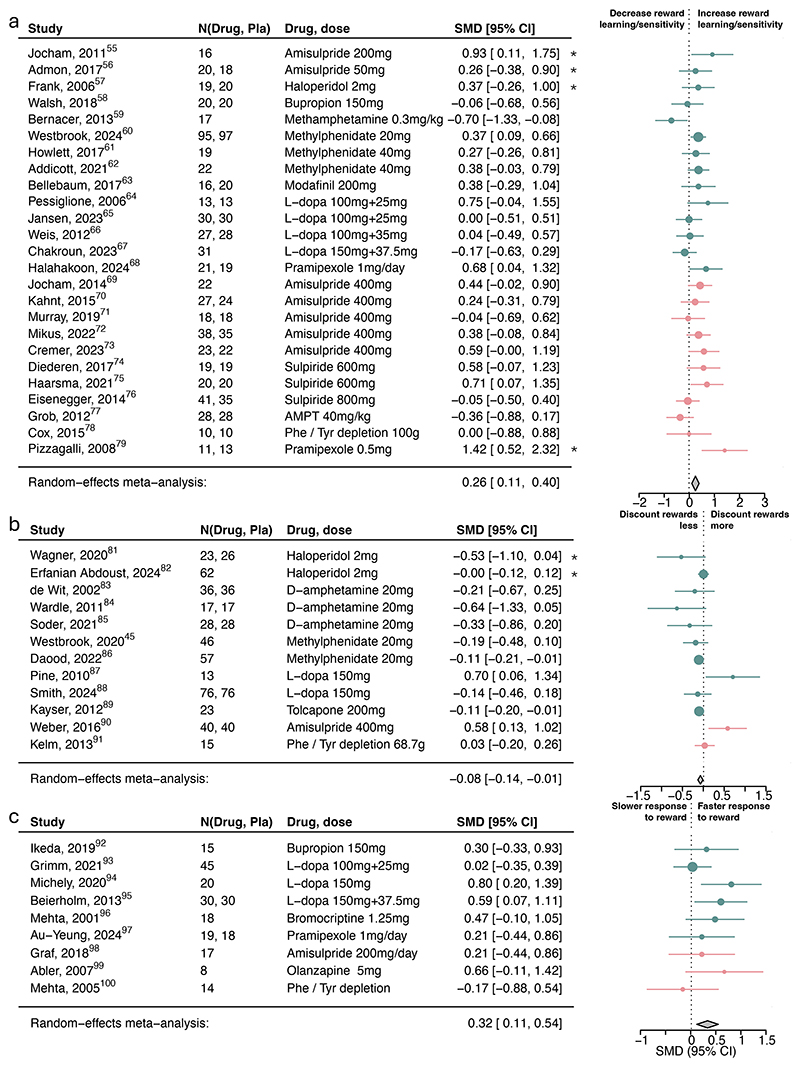

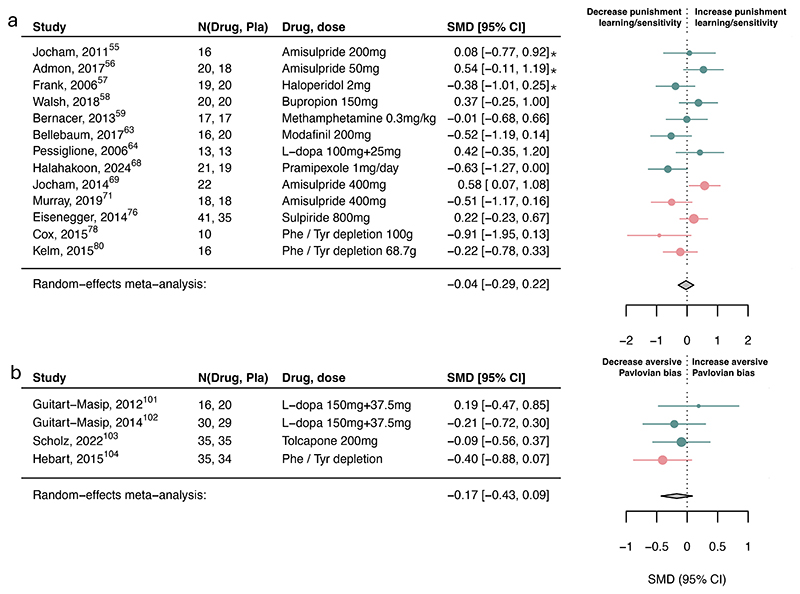

Dopamine increased reward learning/sensitivity (SMD=0.26, 95%CI [0.11, 0.40]; Figure 2a) and reward vigor (SMD=0.32, 95%CI [0.11, 0.54]; Figure 2c) and decreased reward discounting (SMD=-0.08, 95%CI [-0.14, -0.01]; Figure 2b), but was not associated with any other subcomponents (Figure 3; eFigure5). Analyses according to the original drug activity profile influenced the inference of dopamine on reward learning/sensitivity and reward discounting but not on other subcomponents (eFigure6). We also explored the associations with computational learning rate and sensitivity parameters, but there were far fewer studies reporting these and no clear pattern emerged (eFigure7).

Figure 2. Association of dopamine upregulation with reward subcomponents.

Standardized mean differences (SMDs) of the association between upregulating dopamine versus placebo and a) reward learning/sensitivity, b) reward discounting, and c) reward response vigor. Pla indicates placebo. We recoded antagonist effects as if they were agonists (pink SMD point estimates and 95% CI). Green SMD point estimates and 95% CI indicate studies that were coded as original agonists. Asterisks indicate studies that used a low dose of an agonist or antagonist. These effects were interpreted contrary to their typical activity profile (e.g., a low-dose agonist acting antagonistically), as suggested by the literature.

Figure 3. Association of dopamine upregulation with punishment subcomponents.

Standardized mean differences (SMDs) of the association between upregulating dopamine versus placebo and a) punishment learning/sensitivity and b) aversive Pavlovian bias. We recoded antagonist effects as if they were agonists (pink SMD point estimates and 95% CI). Green SMD point estimates and 95% CI indicate studies that were coded as original agonists. Asterisks indicate studies that used a low dose of an agonist or antagonist. These effects were interpreted contrary to their typical activity profile (e.g., a low-dose agonist acting antagonistically), as suggested by the literature.

There was low-to-moderate heterogeneity across all overall processes and main subcomponents (see Supplement). Substantial heterogeneity was only found in the risk attitude and model-based/flexibility subcomponents, with outlier exclusion resolving risk attitude heterogeneity, resulting in a significant positive dopamine association (eFigure8).

Serotonin

Overall, serotonin did not show associations with reward (SMD=0.02, 95%CI [-0.33, 0.36]) or punishment processes (SMD=0.22, 95%CI [-0.04, 0.49]; eFigure9).

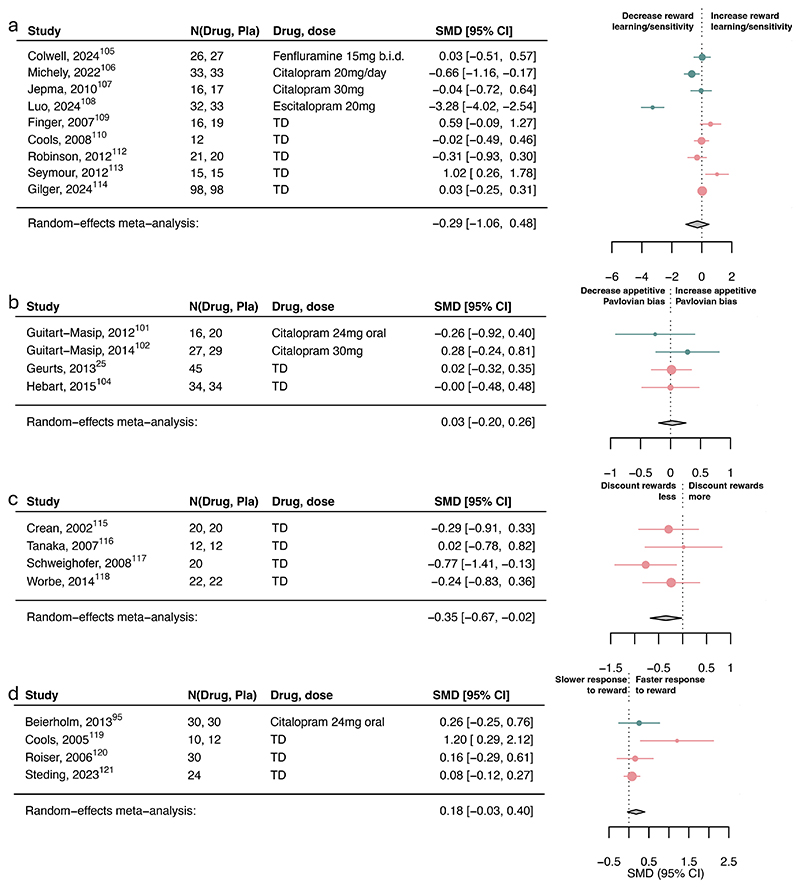

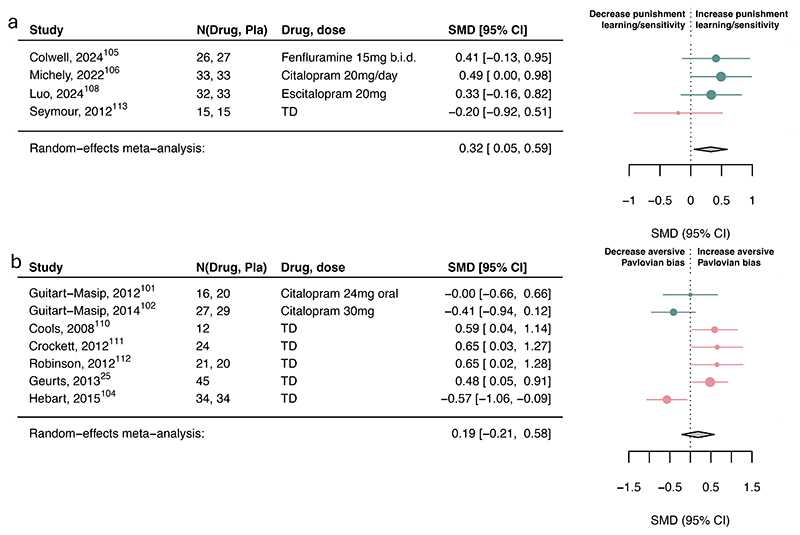

Serotonin had a small-to-moderate association with reward discounting (SMD=-0.35, 95%CI [-0.67, -0.02]; Figure 4c) and punishment learning/sensitivity (SMD=0.32, 95%CI [0.05, 0.59]; Figure 5a) but not with reward learning/sensitivity, reward vigor, Pavlovian biases (Figures 4-5) or other subcomponents (eFigure10).

Figure 4. Association of serotonin upregulation with reward subcomponents.

Standardized mean differences (SMDs) of the association between upregulating serotonin versus placebo and a) reward learning/sensitivity, b) appetitive Pavlovian bias, c) reward discounting, and d) reward response vigor. Pla indicates placebo. We recoded antagonist effects as if they were agonists (pink SMD point estimates and 95% CI). Green SMD point estimates and 95% CI indicate studies that were coded as original agonists. TD: tryptophan depletion.

Figure 5. Association of serotonin upregulation with punishment subcomponents.

Standardized mean differences (SMDs) of the association between upregulating serotonin versus placebo and a) punishment learning/sensitivity and b) aversive Pavlovian bias. We recoded antagonist effects as if they were agonists (pink SMD point estimates and 95% CI). Green SMD point estimates and 95% CI indicate studies that were coded as original agonists. TD: tryptophan depletion.

However, three within-subject studies had to be excluded in the aversive Pavlovian domain due to missing information needed to combine with between-subject studies. Examining the six within-subject studies separately, serotonin was associated with a small-to-medium increase in aversive Pavlovian processes (SMD=0.36, 95%CI [0.20, 0.53]; eFigure11). Exploring learning rate and sensitivity computational parameters, serotonin was associated with increased punishment learning rates and decreased punishment sensitivity (eFigure12). However, this finding is based on a limited number of studies.

There was substantial heterogeneity in overall reward/punishment categories and the reward learning/sensitivity and aversive Pavlovian subcomponents. Removing identified outliers explained some of this variance and revealed a significant increase of overall punishment processes by serotonin (SMD=0.32, 95%CI [0.10, 0.54]; see Supplement; eFigure13).

Publication bias and moderator analyses

Egger’s test suggested significant publication bias in the overall dopamine reward domain (z=2.28, p=0.02) but not in any of the other overall domains (dopamine punishment: z=-0.79, p=0.43; serotonin reward: z=0.07, p=0.94; serotonin punishment: z=-0.09, p=0.93; eFigure14).
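As background on the test reported above, the following sketch implements the classical regression form of Egger's test: each study's standardized effect (SMD divided by its standard error) is regressed on its precision (one over the standard error), and an intercept far from zero indicates funnel plot asymmetry. This is a simplified illustration under stated assumptions; the z statistics reported here suggest a meta-regression-based variant (such as the one available through metafor's `regtest`), which this sketch does not reproduce. Names are hypothetical.

```python
import math


def egger_regression(effects, std_errors):
    """Classical Egger regression for funnel plot asymmetry.

    Regresses effect / SE on 1 / SE by ordinary least squares and returns the
    intercept, its standard error, and the t statistic (k - 2 degrees of
    freedom). Illustrative only; not the variant used in the paper.
    """
    k = len(effects)
    if k < 3 or k != len(std_errors):
        raise ValueError("need at least three studies with matching SEs")
    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    x_bar = sum(x) / k
    y_bar = sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must differ between studies")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance and the standard error of the intercept.
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (k - 2)
    se_intercept = math.sqrt(s2 * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return {"intercept": intercept, "se": se_intercept, "t": t_stat}


if __name__ == "__main__":
    # Hypothetical data in which smaller studies (larger SE) show larger effects.
    ses = [0.10, 0.20, 0.25, 0.50]
    smds = [0.30, 0.25, 0.45, 0.60]
    print(egger_regression(smds, ses))
```

The requirement that standard errors vary across studies is one reason such tests are only informative when enough studies are available.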

Dopamine

Only the reward and punishment learning/sensitivity and reward discounting categories contained more than 9 studies, allowing a test for funnel plot asymmetry35. None of these showed significant publication bias (reward learning/sensitivity: z=1.49, p=0.14; punishment learning/sensitivity: z=-1.42, p=0.15; reward discounting: z=0.057, p=0.95; eFigure15).

Drug type significantly moderated the reward discounting results (R²=13.88%, I²=6.45%, p=0.03), with original agonist studies decreasing reward discounting (SMD=-0.10, 95%CI [-0.16, -0.03]; 10 studies), while studies that originally used antagonists showed no meaningful association (SMD=0.14, 95%CI [-0.06, 0.35]; 2 studies). None of the other moderators had an effect (see Supplement).

Serotonin

The model-based learning/flexibility subcomponent was the only one with sufficient studies to conduct a funnel plot asymmetry analysis, which revealed no significant asymmetry (z=1.45, p=0.15; eFigure15).

No meaningful moderators were identified for serotonin (see Supplement).

Discussion

This meta-analysis reveals distinct roles for dopamine and serotonin in modulating RL processes in humans. Dopamine increased reward learning and sensitivity, and reward vigor. A different pattern of effects was observed for serotonin: promoting learning from punishments, and possibly amplifying aversive Pavlovian biases. Both serotonin and dopamine increased the value of rewards when contrasted with costs of time or effort. These findings contribute to two key domains: advancing neuroscience theories of dopamine and serotonin in humans, and informing clinical applications. We begin by addressing the theoretical implications and conclude with the clinical relevance.

The differing associations of dopamine and serotonin are broadly consistent with theoretical expectations. The most influential theory of dopamine posits that dopamine signals reward prediction errors, driving reward learning6,36. Supporting this, we found that boosting dopamine promotes reward learning/sensitivity in humans. Unlike preclinical studies5,20, however, we found no serotonin association with reward learning, reinforcing the idea that dopamine and serotonin serve dissociable functions here. Instead, serotonin was selectively associated with punishment learning, consistent with theories and human research linking serotonin to aversive processing8,37, and highlighting an opposing role to dopamine in learning14.

We also provide preliminary evidence for a dissociation of serotonin and dopamine in Pavlovian biases. Specifically, serotonin, but not dopamine, was associated with enhanced aversive biases in within-subject studies. This resonates with findings in depressed patients treated with serotonergic antidepressants38, and supports theories suggesting serotonin facilitates inhibition specifically in punishment contexts8,22–24. However, this effect is not consistently observed, potentially owing to high measurement noise in these tasks39,40, underscoring the need for psychometrically optimized tasks41.

Beyond learning, it is noteworthy that we found dopamine influencing reward vigor and discounting. This aligns with animal work suggesting that dopamine encodes a unified motivational signal, influencing both how energetically actions are taken (vigor) and decisions of whether to invest in effortful activity (value-based choice)32,42. At present, it is unclear whether the dopamine associations with vigor and discounting (especially effort-based discounting which supplementary exploratory analyses indicated might be particularly associated with dopamine) are related in humans as well. Notably, however, our findings converge with a rodent and clinical meta-analysis, where dopaminergic agents affected both reward discounting and vigor32,42–44.

Like dopamine, serotonin also diminished reward discounting. Although this might suggest that both have similar functions here, a more likely explanation is that they affect reward discounting through distinct mechanisms. Prior research suggests that dopamine reduces discounting by boosting the perceived benefits while serotonin may alter the perceived costs27,28,45. Although we were unable to dissociate the precise mechanism here, it is interesting that the serotonin studies exclusively covered delay discounting tasks (while dopamine additionally covered effort discounting tasks). This may suggest that serotonin increases the discount factor in RL models to promote a focus on long-term over short-term outcomes27,46.

Clinically, perhaps the most significant finding is that dopaminergic and serotonergic pharmacological manipulations had appreciably distinct associations. They engaged different components of RL in a manner which is measurable and identifiable with simple behavioral tasks. Hence, simple RL tasks may play a useful role in measuring treatment target engagement. This contrasts with symptom markers, which do not differentiate psychiatric medications47,48. Interestingly, our observed effect sizes, though modest, align with RL impairments observed in mood disorders2,3, dopamine effects in Parkinson’s44, and antidepressant efficacy1. Our results thus fulfill a key precondition for future work: if different antidepressants modulate distinct RL components, then RL-based biomarkers could guide more optimized treatments. This aligns with calls to use RL to understand antidepressant mechanisms and their clinical relevance49.

While individual-level precision requires further refinement, establishing even subtle, systematic group-level neuromodulatory RL associations is a crucial first step in guiding those efforts. A key question for future work is whether individual differences in baseline RL measures could help identify individuals more likely to benefit from one or the other type of intervention. This will require studies that simultaneously measure multiple RL domains, psychometrically robust tasks, and direct head-to-head comparisons of the treatments of interest.

Limitations

Several limitations warrant consideration. First, few studies reported computational RL outcomes, forcing us to rely on broad measures. While we attempted to examine learning rate and sensitivity separately, this proved challenging because few studies reported these parameters. The two are also hard to disentangle (indeed, serotonin increased punishment learning rates but decreased punishment sensitivity; eFigure12), and both are sensitive to task design50. Despite this, meaningful variability is evident in broad measures.

Second, the lack of studies directly comparing dopamine and serotonin prevented us from examining this relationship. However, such comparisons will be crucial for drawing definitive conclusions about their differential effects. There is also a lack of pharmacological specificity in the dopamine domain. Some medications target both dopamine and noradrenaline, complicating interpretation.

Third, the association between dopamine and reward learning and discounting depended on the drug-binding interpretation, shifting from negligible to meaningful when low-dose presynaptic accounts were considered. This highlights the complexities of dopaminergic research, including evidence that the direction of effects may depend on baseline dopamine levels51. Such complexities might therefore have added noise to the effect size estimates.

Fourth, substantial heterogeneity was observed in the association between serotonin and reward learning, potentially due to the lack of common tasks across studies. This suggests the meta-analytic effect may be fragile, underscoring the need for improved task standardization and psychometrically robust tasks. Several other factors may have contributed to heterogeneity, such as feedback saliency52 or menstrual cycle53, which future research needs to assess.

Fifth, most serotonin studies used tryptophan depletion (TD), a method debated as a mild serotonin manipulation54. However, our findings show meaningful associations even in TD-only analyses (reward discounting, aversive Pavlovian processes), supporting their validity despite this debate.

We also pooled within- and between-subject data to increase power, applying effect size corrections of within-subject studies where feasible. When not possible, inflated variance of effect sizes would be the likely consequence, reducing our power to detect significant effects.

Finally, all studies were done in healthy controls. Ultimately, however, head-to-head comparisons in patients across tasks will be required to establish the utility of RL processes for clinical applications.

Conclusions

Despite methodological heterogeneity, there is evidence that dopamine and serotonin affect RL, and that they do so by altering specific, distinct processes. These insights are valuable as they set forth candidate mechanisms for understanding the differentiating effects of treatments targeting different neuromodulators. Critically, the selective associations are observable in highly scalable human behavioral tasks, suggesting that such assessments may provide fertile ground for the development of differentially sensitive biomarkers.

Supplementary Material

Key points.

Question

Do pharmacological manipulations of dopamine and serotonin affect components of reinforcement learning in humans?

Findings

Upregulating dopamine is associated with increased reward learning/sensitivity and reward response vigor, and decreased reward discounting. Upregulation of serotonin is associated with increased punishment learning/sensitivity and decreased reward discounting.

Meaning

Pharmacological manipulations of dopamine and serotonin have dissociable associations with different components of reinforcement learning. This forms a necessary basis for the development of selective markers for treatment assignment.

Acknowledgments

We thank the Applied Computational Psychiatry group for helpful comments and discussion on an earlier draft of the manuscript. We also thank the authors who provided raw data for the articles in these analyses. Some of these data were provided (in part) by the Radboud University, Nijmegen, The Netherlands. For the purpose of Open Access, the author has applied a CC BY public copyright license to any Author Accepted Manuscript version arising from this submission. This research was funded by Wellcome Trust grants (221826/Z/20/Z and 226790/Z/22/Z) and a Carigest S.A. donation to QJMH. We received support from the UCLH NIHR BRC. MB was supported by the Office for Life Sciences and the National Institute for Health and Care Research (NIHR) Mental Health Translational Research Collaboration, hosted by the NIHR Oxford Health Biomedical Research Centre, and by the NIHR Oxford Health Biomedical Research Centre. The views expressed are those of the authors and not necessarily those of the UK National Health Service, the NIHR, or the UK Department of Health and Social Care. The funders had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication. AM and QJMH had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Footnotes

Financial disclosures

QJMH has received fees and options for consultancies for Aya Technologies and Alto Neuroscience. MB has received consulting fees from J&J, Engrail Therapeutics, CHDR, and travel expenses from Lundbeck. He was previously employed by P1vital Ltd.

Additional information

The preregistration can be found at https://www.crd.york.ac.uk/PROSPERO/display_record.php?RecordID=363747 and open data and code to replicate all analyses are available online: https://github.com/huyslab/DA5HT_MetaAnalysis_public.
