Abstract

Objective:

To assess the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS) physical laparoscopic simulator for construct and predictive validity and for its educational utility.

Summary Background Data:

MISTELS is the physical simulator incorporated by the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) in their Fundamentals of Laparoscopic Surgery (FLS) program. MISTELS’ metrics have been shown to have high interrater and test-retest reliability and to correlate with skill in animal surgery.

Methods:

Over 200 surgeons and trainees from 5 countries were assessed using MISTELS in a series of experiments to assess the validity of the system and to evaluate whether practicing MISTELS basic skills (transferring) would result in skill acquisition transferable to complex laparoscopic tasks (suturing).

Results:

Face validity was confirmed through questioning 44 experienced laparoscopic surgeons using global rating scales. MISTELS scores increased progressively with increasing laparoscopic experience (n = 215, P < 0.0001), and residents followed over time improved their scores (n = 24, P < 0.0001), evidence of construct validity. Results in the host institution did not differ from 5 beta sites (n = 215, external validity). MISTELS scores correlated with a highly reliable validated intraoperative rating of technical skill during laparoscopic cholecystectomy (n = 19, r = 0.81, P < 0.0004; concurrent validity). Novice laparoscopists were randomized to practice/no practice of the transfer drill for 4 weeks. Improvement in intracorporeal suturing skill was significantly related to practice but not to baseline ability, career goals, or gender (P < 0.001).

Conclusion:

MISTELS is a practical and inexpensive inanimate system developed to teach and measure technical skills in laparoscopy. This system is reliable, valid, and a useful educational tool.

McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS) is a physical laparoscopic simulator that is the basis for the manual skills component of Society of American Gastrointestinal and Endoscopic Surgeons’ Fundamentals of Laparoscopic Surgery program. It is an effective educational tool and provides metrics that are highly reliable and a valid measure of technical skills in laparoscopy.

Multiple pressures have stimulated the development of curricula to teach fundamental technical skills to surgeons in a laboratory setting. These include reduced resident work hours, increasing costs of operating room time, and the public and payers’ focus on medical errors and the ethics of learning basic skills on patients. In response to these demands, laparoscopic simulators have been developed using inanimate box trainers or computer-based virtual reality platforms.1,2 The goals of these simulator-based curricula are to provide an opportunity to learn and practice basic skills in a relaxed and inexpensive environment to attain a basic level of technical facility that can be transferred from the laboratory to the operating room environment.

Laparoscopy has been an area where simulator curricula have attracted much interest because unique skills had to be learned not only by surgeons in training but also by surgeons in practice. This latter group had to develop a strategy to acquire novel skills and incorporate these skills into their clinical practice. Since simulator training requires an investment in both the equipment and time required for training, it is important that this investment be justified by providing proof of the value of simulators.

The process to develop and prove the value of the MISTELS (McGill Inanimate System for Training and Evaluation of Laparoscopic Skills) physical laparoscopy simulator has followed a stepwise progression. First, the skills unique to laparoscopic surgery were identified, modeled into exercises that could be carried out in a physical simulator, and a measuring system (metrics) was developed for each exercise, providing a quantitative and objective assessment of performance based on efficiency and precision. Next, the metrics were evaluated for reliability and validity. The most important aspect of validity assessment was to evaluate the relationship of technical skill measured in the simulator to skill in the operating room. If this relationship was found to be robust, simulator performance could then be used to predict performance in the operating room. Once these steps had been completed, the simulator system could then be assessed as a means to verify that laparoscopic technical skill had reached a level thought necessary for the safe performance of basic laparoscopic surgery. In other words, we sought to determine whether the MISTELS score could be used as a summative assessment tool to separate a group that was considered competent from one that would not be considered competent purely from the technical-skill point of view. If so, the sensitivity, specificity, positive and negative predictive values could be determined.

The purpose of this paper is to review the process used to develop the MISTELS physical laparoscopy simulator and to summarize the data accumulated over a series of experiments to prove its value as an effective tool to teach and evaluate the fundamental skills required in laparoscopic surgery. These new data are put in context with previously published preliminary data on the MISTELS system.

METHODS

Development of the MISTELS Program

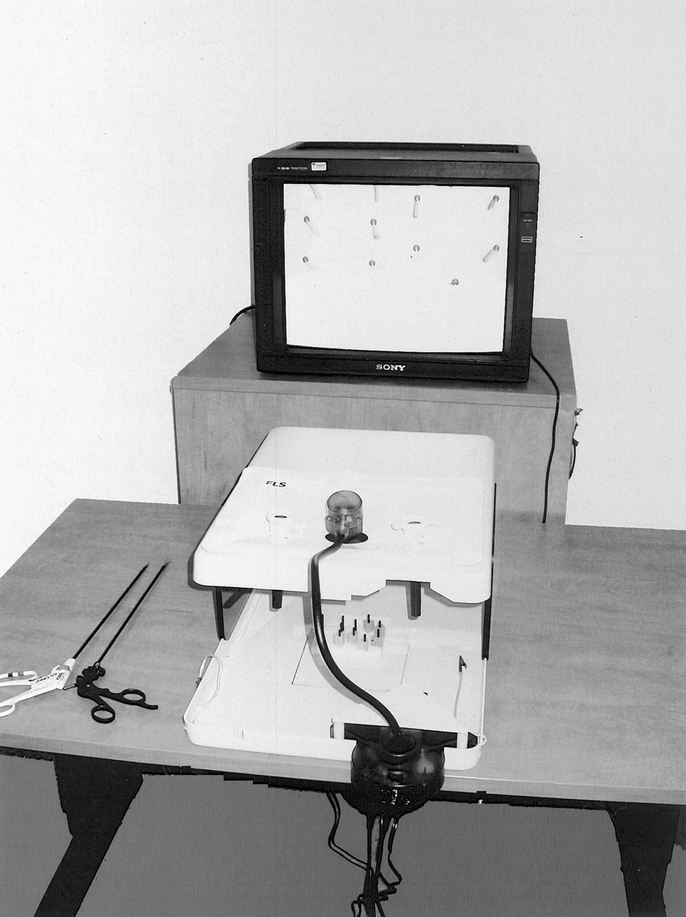

MISTELS is the acronym for the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills. This system evolved from the program we originally described.3 Briefly, MISTELS consists of 5 exercises performed in an endotrainer box. The surgeon works through 2 trocars placed in fixed positions through an opaque cover on a trainer box. The optical system was originally a 0-degree 10-mm laparoscope supported on a ring stand at a fixed position and focal distance from the target area. This was later replaced by a gooseneck camera (Flexcam iCam Digital NTSC; ClearOne Communications, Salt Lake City, UT), with both USB and S-video outputs, which allows the image to be displayed on a laptop monitor or any monitor/TV with an S-video input. The height of the endotrainer box can be varied to optimize the comfort for the surgeon. The relative positions of the trocars and the monocular optical system are standardized (Fig. 1).

FIGURE 1. MISTELS Box with gooseneck camera. Camera projects image on a monitor. Pneumatic table can be positioned at comfortable working height.

To develop the standardized tasks used in the trainer box, a panel of 5 experienced laparoscopic surgeons reviewed a series of videotapes of laparoscopic cholecystectomy, appendectomy, inguinal hernia repair, and Nissen fundoplication. They then listed the differences between performing surgery laparoscopically and performing it by an open approach. The principal differences included a novel optical system providing magnified monocular vision and affecting depth perception, the requisite long instruments, which diminish tactile feedback and amplify tremor, and the need to work through trocars with fixed access in the abdominal wall, resulting in both a fulcrum effect and decreased degrees of freedom. In addition, facility with devices specific to minimally invasive surgery (MIS), such as an endoloop and a knot pusher, must be acquired. These devices must be directed in precise fine movements using both hands. Based on these findings, specific goals were identified to guide the development of the educational task modules.

The specific domains that were selected for training and evaluation included (1) depth perception using a monocular imaging system; (2) visual-spatial perception; (3) use of both hands in a complementary manner; (4) securing of a tubular structure using a pretied looped suture (endoloop); (5) precise cutting while the nondominant hand provides counter traction; (6) suturing using an intracorporeal knot (IC); and (7) suturing using an extracorporeal knot (EC). Two other exercises were described in the original report (placement and fixation of a prosthetic mesh over a defect and clipping and dividing a tubular structure). Although these 2 exercises proved to be of educational benefit, their value as an evaluative tool was limited, and they contributed significant additional cost in consumable supplies. They were therefore dropped from MISTELS.

Description of MISTELS Tasks

These have been described in detail previously.3,4 The candidate first watches a brief video or CD-ROM demonstrating proper performance of all 5 tasks then completes the 5 tasks in sequence.

Task 1 (Transfer)

A series of 6 plastic rings are picked up in turn by a grasping forceps from a pegboard on the surgeon's left, transferred in space to a grasper in the right hand, then placed around a post on the corresponding right-sided pegboard. After all rings are transferred from the left to right, the process is reversed, requiring transfer from the right to left hand. This task was designed to develop depth perception and visual-spatial perception in a monocular viewing system and the coordinated use of both the dominant and nondominant hands. It also replicates the important action of transferring and positioning a needle between needle holders when suturing. This exercise is timed, and a penalty score is assessed whenever a ring is dropped outside the surgeon's view.

Task 2 (Cutting)

In this exercise, a 4-×-4-inch gauze is suspended by alligator clips. The surgeon is required to cut a precise circular pattern from the gauze along a premarked template. It requires precision and the use of the nondominant hand to provide appropriate traction to the material and to position the gauze so that the dominant hand holding endoscopic scissors may cut it accurately. Any deviation of the cut from the premarked template results in a penalty. This exercise teaches the concept of traction and the need to use the nondominant hand to provide a convenient working angle for the dominant hand, working through the constraints of fixed trocar positions.

Task 3 (Ligating Loop)

In MIS, a ligating loop is a convenient tool to securely control a hollow tubular structure, such as a blood vessel, cystic duct, or appendix. It must be placed accurately to avoid encroaching on adjacent tissues and must be secured tightly to ensure that it will neither allow leakage nor become displaced. The surgeon must introduce the ligating loop through a trocar, then control the tubular structure (foam appendage) using a grasping forceps while the pretied loop is cinched precisely on a previously marked line on the appendage. In this exercise, a penalty is applied if the knotted loop is loose or if the loop is not accurately placed on the target line.

Tasks 4 and 5 (Suturing With Intracorporeal and Extracorporeal Knots)

It is essential that a surgeon doing laparoscopic surgery be able to place a stitch and tie a knot. In this exercise, a 00 silk suture with a curved needle is introduced through the trocar and positioned properly using the needle holders. A stitch is then placed through target points on either side of a slit penrose drain, and the suture is tied using either an intracorporeal (IC, instrument) tie (Task 4), or an extracorporeal (EC) tying technique with the aid of a knot pusher (Task 5). In these exercises, penalty scores are applied if the needle is not passed precisely through the target dots and if the stitch is not tied sufficiently tightly to approximate the edges of the slit and close the defect. Additional penalty is applied if the knot slips when tested and if the penrose drain is avulsed from the underlying block to which it is attached by 2-sided tape, indicating that uncontrolled upward force was applied.

Metrics

Each exercise is scored for efficiency (time) and precision (penalty). A cutoff time is assigned for each task. Time score (efficiency) is calculated by subtracting the actual time taken to complete the task from the cutoff time. A penalty score is applied for errors or lack of precision, and the penalty score is subtracted from the efficiency score to yield a final score for each task. Consequently, higher scores are better. To make each task result in scores in an equivalent range, a target score was identified by reviewing the results of the raw scores for a group of excellent chief residents and fellows. Scores were then normalized by dividing the score for each exercise by the target score, creating an equivalent range of scores for each task and for the total score. Thus, a score of 100 for any task or the total score would be considered excellent.
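The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the scoring software used in the study: the function name and the cutoff, time, penalty, and target values are hypothetical, and the scaling by 100 is an assumption inferred from the statement that a score of 100 would be considered excellent.

```python
def normalized_task_score(cutoff_s, time_s, penalty, target_score):
    """Normalized score for one task, following the rule described in the text.

    raw score  = (cutoff time - completion time) - penalty
    normalized = raw score / target score, scaled so the target maps to 100
    (the x100 scaling is an assumption; the text only says 100 is excellent).
    """
    efficiency = cutoff_s - time_s   # time score: faster completion scores higher
    raw = efficiency - penalty       # precision errors reduce the score
    return 100.0 * raw / target_score


# Hypothetical values, for illustration only (not taken from the study).
example = normalized_task_score(cutoff_s=300, time_s=120, penalty=20, target_score=200)
print(example)
```

The sketch applies no floor at zero when a task runs past the cutoff time, since the text does not say how such cases are handled.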

MISTELS as an Evaluation Tool

To assess the validity of the MISTELS metrics, a series of individual experiments were performed involving more than 250 subjects representing surgeons and trainees from more than 20 medical institutions in 5 countries on 3 continents. All MISTELS performances since the development of the system in 1996 have been entered prospectively in a computerized database. The host institution and level of training of the surgeon at the time of testing were recorded. Of the more than 1000 test scores, only the initial performance of an individual on MISTELS was used for the purpose of the validation studies, unless specifically stated otherwise. This was done to avoid a learning effect when MISTELS was used to assess laparoscopic skill.

Validation

Validation required a series of experiments all designed to provide evidence that the scores measured in the MISTELS system reflect the technical skills they purport to measure.

Construct Validity

Demonstration of construct validity is a process whereby the score in the test instrument (MISTELS) is compared with a construct that can be reasonably used as a surrogate for technical skill in the operating room. One hypothesis was that as a surgeon advances through the course of a residency program, into a laparoscopic fellowship, and then into clinical practice, he or she would become increasingly skilled in the technical aspects of laparoscopy. A total of 215 candidates from 5 countries were evaluated in the 5 MISTELS tasks. They were divided into 3 groups based on their laparoscopic experience. The junior group comprised 82 postgraduate year (PGY) 1 to 2 residents with relatively little experience in independent performance of laparoscopic surgery, the intermediate group included 66 PGY 3 to 4 residents, and the senior group included 67 chief general surgery residents, laparoscopic surgery fellows, and attending surgeons who practice laparoscopic surgery. Differences between these groups were tested for significance for each task and total score using Kruskal-Wallis ANOVA. The groups were also evaluated with respect to whether they had achieved the mean total score for the senior group. Differences in the percentage of each group who had performed at this criterion level were tested for significance using χ². To further investigate construct validity, 23 general surgery residents who were evaluated with MISTELS during different years of their residency were identified from the database. Scores for the first and second trials were compared using the Wilcoxon signed rank test to evaluate whether MISTELS scores increased, as would be expected as residents acquired increasing skill and experience in laparoscopic surgery.

External Validity

To assess the generalizability of the MISTELS scoring system, evaluators at 5 beta test sites were trained to administer the test. Scores for 135 McGill surgeons were then compared with 80 from other institutions. Surgeons were stratified into junior, intermediate, and senior groups. Multiple regression analysis was performed to independently account for the effects of institution and level of training on MISTELS score.

Predictive/Concurrent Validity

Definitive evidence of validity requires proof of the relationship between scores obtained in the MISTELS and laparoscopic technical skill measured in a live operating room environment. Because no widely accepted gold standard of laparoscopic technical performance in the operating room exists, we developed a global assessment tool for use during dissection of the gallbladder from the liver bed during laparoscopic cholecystectomy (Vassiliou et al, unpublished observations). Domains evaluated on a 5-point scale included depth perception, bimanual dexterity, efficiency, tissue handling, and overall competence. The scores for the 5 domains were summed to obtain a total score from 0 to 25. Evaluations were carried out in the operating room by 2 trained observers. This scoring system was found to have excellent interrater reliability (>0.80) and construct validity. In the current study, 19 subjects were assessed in the operating room within 2 weeks of being assessed in MISTELS. Intraoperative evaluators were unaware of the subjects’ MISTELS scores. Multiple regression was used to evaluate the relationship between total MISTELS score and mean total intraoperative assessment score, while controlling for PGY level.

MISTELS as an Educational System

Twenty McGill University medical students rotating through a surgical service agreed to participate in the study. After completing a questionnaire and viewing an instructional video, subjects were randomized to a practice or a nonpractice (control) group, using assignment envelopes generated by a team not associated with the study. At baseline, all subjects were scored as they placed 1 EC and 1 IC stitch. The practice group undertook 4 weekly sessions of 10 peg transfer tasks, with all subjects achieving criterion pegboard transfer scores (mean level for senior group on their first attempt) by the 38th iteration of the task (mean number of trials to criterion score = 14). The control group did not have access to the simulator. In the fourth week, all subjects performed the IC and EC stitch again and were rescored.

Wilcoxon signed rank test was used to determine improvement within each group, with Mann-Whitney U test used to evaluate differences between the 2 groups. Multiple regression was used to predict the change from baseline to final IC and EC knot-tying score. Covariates entered into the model included practice group, gender, interest in a surgical career, and baseline IC or EC score.

RESULTS

Construct Validity

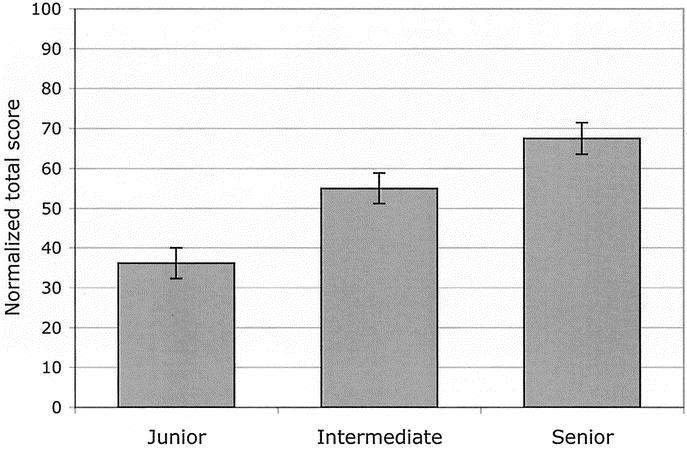

A comparison of junior residents (PGY1-2), intermediate residents (PGY3-4), and seniors (PGY5 residents, fellows, and practicing laparoscopic surgeons) showed a stepwise improvement in each task and the total normalized MISTELS score (Table 1 and Fig. 2), with a significant difference between groups for each comparison (n = 215, P < 0.0001).

TABLE 1. MISTELS Scores for Each Task and for Total Score

FIGURE 2. Comparison of MISTELS total scores between juniors (PGY1-2), intermediate level residents (PGY 3–4), and seniors (PGY 5, fellows, and practicing laparoscopic surgeons); n = 215, P < 0.0001.

The mean total score (normalized) for the senior group was 67.5. Only 2.4% (2/82) of juniors and 29% (19/66) of intermediate trainees reached this level. In contrast, 61% (41/67) of senior subjects reached this level. Differences were significant (χ² = 62; P < 0.0001).
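As a check on the arithmetic, the reported statistic can be reproduced from the counts given above. The sketch below computes a Pearson χ² statistic for the 3 × 2 table of group by criterion attainment. It assumes that no continuity correction was applied, and the function name is illustrative.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of group and attainment.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


# Rows: junior, intermediate, senior.
# Columns: reached the senior mean score, did not reach it.
counts = [[2, 80], [19, 47], [41, 26]]
chi2 = chi_square_independence(counts)
print(round(chi2, 1))
```

With these counts the statistic comes to about 62 on 2 degrees of freedom, in line with the value reported.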

Twenty-three McGill residents had repeated MISTELS scores recorded in the database. Sixteen residents were PGY1-2 and 7 were PGY3 or above during their first trial. The mean (SD) time between the first 2 trials was 1.6 (0.8) years. The mean (SD) total score increased from 50 (21) on the first trial to 68 (16) on the second (P < 0.0002). The mean (SD) percent increase was 74% (107). Junior residents increased by 102% (118), compared with 11% (18) for nonjunior residents (P = 0.011).

External Validity

Multiple regression analysis revealed that only training level (P < 0.0001), and not test site (P = 0.87), independently predicted total MISTELS score.

Concurrent/Predictive Validity

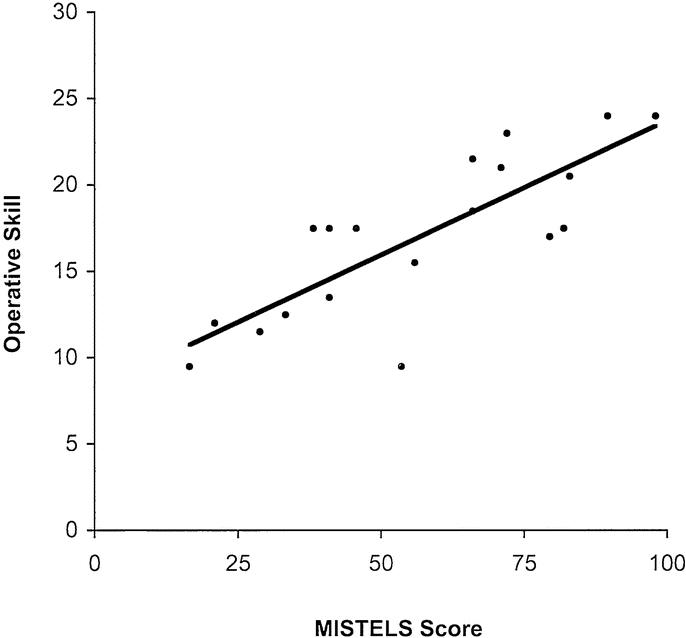

The total MISTELS score correlated highly with intraoperative measurements of technical skill in laparoscopy (r = 0.81; P < 0.001). Thus, about 66% of the variance of technical skill in the operating room (r²) can be predicted by knowledge of performance in the MISTELS inanimate assessment system. This provides evidence of concurrent/predictive validity (Fig. 3). On multiple regression analysis, both training level (junior/intermediate/senior; P = 0.005) and MISTELS score (P = 0.003) were independent predictors of intraoperative technical skill.

FIGURE 3. Linear regression analysis of operative skill measured by a validated global assessment scale during laparoscopic cholecystectomy versus MISTELS score. (n = 19, r = 0.81, P < 0.001).
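The step from the correlation coefficient to the share of variance explained is simply the square of r. The sketch below shows that calculation together with a plain Pearson correlation function. The paired scores are hypothetical and serve only to exercise the function; they are not data from this study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Reported correlation between MISTELS score and intraoperative score.
reported_r = 0.81
variance_explained = reported_r ** 2  # roughly 0.66, the "about 66%" in the text

# Hypothetical paired scores, for illustration only.
simulator_scores = [40, 55, 60, 72, 85]
operative_scores = [10, 14, 13, 18, 21]
r = pearson_r(simulator_scores, operative_scores)
print(round(variance_explained, 2))
```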

MISTELS as an Educational System

The mean IC knot tying score improved significantly in the group who practiced pegboard transfer (from 12 ± 16 to 51 ± 29, P = 0.0125) but not in the control group (1 ± 3 to 7 ± 14, P = 0.11). The mean improvement in IC knot tying score was greater in the pegboard transfer group compared with controls (38 ± 33 versus 7 ± 13, P = 0.019). In contrast, mean EC tying scores improved significantly in the control group (5 ± 10 to 36 ± 26, P = 0.011), but not in the practice group (31 ± 29 to 50 ± 30, P = 0.11). However, there was no significant difference in the magnitude of improvement in EC knot tying between the 2 groups (19 ± 30 in practice group versus 31 ± 26 in the control group, P = 0.35).

To account for the differences at baseline, a multiple regression analysis was performed. The only significant predictor of improvement in IC-tying score was pegboard transfer practice (P = 0.006); baseline IC score (P = 0.20), gender (P = 0.48), and career interest (P = 0.10) were not significant. In contrast, none of these variables significantly predicted the change in EC knot-tying score.

DISCUSSION

Simulation training should provide the opportunity to learn and practice the basic skill set unique to laparoscopy. These skills can then be applied in the context of knowledge, judgment, and experience to ensure safe conduct of laparoscopic surgery. An essential tenet of educational theory is that learning be accompanied by evaluation both for formative (feedback) and summative (final assessment) purposes. The feedback can be used to create directed learning programs to enhance skill specifically in the areas that are deficient, then to verify that the criterion skill level has been attained.

An inanimate simulator is attractive in that it is inexpensive, portable, reproducible, and flexible. The MISTELS system meets these objectives. One advantage of this over computer-based virtual reality systems is that it allows the trainee to use whatever instruments he or she uses in the operating room. It provides haptic feedback that is absent from most VR systems and is certainly less expensive. It provides the flexibility of allowing the trainee to use the same training system to practice additional skills; for example, cannulation, continuous sutures, and anastomosis between tubular structures. To do this in a computer-based system would be very expensive, and current models of the behavior of sutures are very primitive.

To be an effective educational tool, the metrics associated with the simulator must provide meaningful information to the student. For these data to be useful for evaluation they must be shown to be reliable and valid. The MISTELS has gone through a rigorous process of reliability and validity testing. The data presented in the current paper add to this previous work, which will now be summarized.

Reliability Testing

Reliability refers to the consistency of the test. It must be consistent when administered by different testers (interrater reliability); it must be consistent when the same student is being evaluated on different occasions, provided no new learning has occurred between tests (test-retest reliability); and it must be internally consistent (between individual test items and the overall score). We have previously shown that the interrater and test-retest reliabilities (n = 12) for the total scores were both excellent, with intraclass correlation coefficients of 0.998 (95% CI, 0.985–1.00) and 0.892 (95% CI, 0.665–0.968), respectively. Cronbach's α was calculated as a measure of internal consistency of the tasks and was excellent at 0.86 (Vassiliou et al, unpublished observations). Internal consistency could not be improved with the deletion of any task. The demonstration of reliability is mandatory before an evaluation can be shown to be valid.
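For readers unfamiliar with the internal consistency statistic, Cronbach's α can be computed from a subjects-by-tasks matrix of scores as k/(k − 1) × (1 − Σ item variances / variance of total scores). The sketch below is a generic implementation under that standard definition, using sample variances. The demonstration data are hypothetical and are not MISTELS scores.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of per-subject score lists (one value per task)."""
    k = len(scores[0])                                   # number of tasks (items)
    item_vars = [variance(item) for item in zip(*scores)]
    total_var = variance([sum(subject) for subject in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical data: 3 subjects, 3 perfectly consistent tasks.
demo = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0]]
alpha = cronbach_alpha(demo)
print(round(alpha, 2))
```

The function assumes at least 2 tasks and some variation in total scores; otherwise the ratio is undefined.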

Validity Testing

To prove the value of the MISTELS simulator, a series of individual experiments were performed involving a large number of subjects representing varying backgrounds of training, laparoscopic experience, geographic location, and ethnic diversity. Evaluators were trained in person, and their ability to accurately measure performance was verified. When a subject was tested on more than 1 occasion, only the first score was used for validity studies to avoid the confounding effect of learning. Proof of validity requires a series of independent studies to ensure that measurements in the simulator measure what they are supposed to measure (ie, technical skills in laparoscopy). We have previously published a comprehensive review of the validation of available laparoscopic simulators.1

Face Validity

The process of developing the MISTELS tasks followed a structured plan. Skills specific to laparoscopy were identified by experienced laparoscopic surgeons, then modeled in a trainer box. Metrics were developed to reward efficiency and precision. Measurements of precision or errors were based on evaluation of the end product, rather than style (motion analysis). Although motion analysis has been shown to be a valid indicator of skill and has construct validity,5 it does not provide feedback to the student that can be used effectively for educational purposes.

Once the MISTELS skills and metrics were developed, a questionnaire was administered to members of the SAGES FLS committee. After performing the MISTELS tasks, they were asked to identify the skills required to complete each task and to identify any skills fundamental to laparoscopic surgery that were not represented in these tasks. The skills that were found to not be effectively covered in MISTELS included initial trocar placement and cannulation of a tubular structure. In addition, although dissection skill was not directly measured, the cutting task requires the skill of traction essential for dissection. Fine control of instrument tips, required for effective performance of each task, also contributes to skill at dissection. The high correlation between MISTELS total score and dissection of the gallbladder from the liver bed during laparoscopic cholecystectomy provides further evidence that this skill is reflected in the MISTELS program. A cannulation drill is lacking in MISTELS but can be easily added to the training box simulator. Such a task is in the process of being finalized and evaluated for reliability and validity.

Construct Validity

Demonstration of construct validity is a process whereby the score in the test instrument (MISTELS) is compared with a construct that can be reasonably assumed to correlate with technical skill in the operating room. In this study, we showed that for each task there were highly significant differences in MISTELS score between groups that would be expected to differ in their laparoscopic skills (junior resident versus intermediate level residents versus chief residents/fellows/practicing laparoscopists).

This larger data set adds to previous evidence for construct validity. In the paper first describing MISTELS,3 we correlated performance scores with year of residency training in 30 subjects. We found that as residents advanced through their training program, mean scores improved and variance decreased. A later study showed that the frequency distributions of MISTELS scores for juniors and seniors overlap very little, providing additional evidence for construct validity.6 When MISTELS performance was followed in 23 residents as they progressed through their residency, significant improvement was found over time. Since these residents did not practice on MISTELS between their 2 assessments, these improvements reflected increased skill acquired in the operating room, again providing evidence of construct validity. This confirms what we previously found in a smaller group of residents who were tested on 3 tasks 2 years apart.7

Concurrent Validity

Concurrent validity refers to the relationship between concurrent measurements of the test score and another construct that reflects the measure being evaluated (technical skill). Significant correlation was found between MISTELS performance in 12 PGY3 general surgery residents and performance of similar tasks developed and measured in a live animal model.4 In another study, general surgery residents who had high clinical in-training evaluations of technical skill also had better scores in MISTELS.8

Predictive Validity

In an ideal simulator, scores measured would be able to predict performance in the operating room. This is the best proof of validity of the simulator's metrics. In order for predictive validity to be tested, it is first necessary to develop a means to measure laparoscopic skills in the operating room. A global assessment tool to measure laparoscopic technical skill during dissection of the gallbladder from the liver bed during laparoscopic cholecystectomy was created. This was shown to be reliable and to have construct validity (Vassiliou et al, unpublished observations). Evaluations between 2 trained observers had a reliability of >0.80 (the level required for high-stakes evaluation) and correlated with evaluations by the supervising attending surgeon and the resident doing the case. There was a significant difference in the measured performance of junior residents and seniors. In the present study, we compared the intraoperative assessment of laparoscopic skill using this validated measure, to MISTELS scores assessed within 2 weeks of the intraoperative assessment. The high correlation (r = 0.81) between these 2 measures provides the strongest evidence for MISTELS validity.

MISTELS as an Assessment Tool

If MISTELS is to be considered for use as a summative assessment tool, its specificity and sensitivity need to be described. Receiver operating characteristic curves for MISTELS have been generated, showing that a cumulative score of 270 (equivalent to a mean score of 54 across the 5 tasks) maximizes sensitivity, specificity, and positive and negative predictive values (each >0.80). These values were also described for various other cutoff scores.6
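To make these quantities concrete, the sketch below computes sensitivity, specificity, and positive and negative predictive values for a given pass/fail cutoff. It assumes that a "positive" result means scoring at or above the cutoff and that the reference standard is a competent/not competent label. The scores and labels are hypothetical and are not data from the cited study.

```python
def diagnostic_metrics(scores, competent, cutoff):
    """Sensitivity, specificity, PPV, and NPV for a pass/fail cutoff score."""
    tp = fp = tn = fn = 0
    for score, is_competent in zip(scores, competent):
        passed = score >= cutoff          # assumption: at or above cutoff passes
        if passed and is_competent:
            tp += 1
        elif passed and not is_competent:
            fp += 1
        elif not passed and is_competent:
            fn += 1
        else:
            tn += 1
    return {
        "sensitivity": tp / (tp + fn),    # competent subjects who pass
        "specificity": tn / (tn + fp),    # non-competent subjects who fail
        "ppv": tp / (tp + fp),            # passing subjects who are competent
        "npv": tn / (tn + fn),            # failing subjects who are not competent
    }


# Hypothetical cumulative scores and reference labels, for illustration only.
scores = [310, 295, 280, 260, 275, 240, 220, 200]
competent = [True, True, True, True, False, False, False, False]
metrics = diagnostic_metrics(scores, competent, cutoff=270)
print(metrics)
```

Repeating the calculation over a range of cutoffs and plotting sensitivity against 1 − specificity gives the receiver operating characteristic curve. The sketch does not guard against empty categories, which would cause a division by zero.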

MISTELS as an Educational System

A simulator is of greatest value if a student using that device enhances skill with practice. Improvement in skill should not only be measurable in the simulator but also be transferable to the real world. MISTELS has been evaluated as an educational tool. A controlled trial was carried out in which 12 PGY3 residents were randomized to weekly practice in all MISTELS tasks or to no practice. After 6 weeks, the MISTELS-trained residents not only performed better in MISTELS but were significantly more skillful and efficient when asked to suture 2 loops of bowel together using either intracorporeal (82% improvement compared with no practice group) or EC tying techniques (16% improvement compared with no practice group).4

In the present study, novices practiced a very basic task (peg transfer) in MISTELS, and after 40 iterations they improved their mean IC-tying score to a criterion level equivalent to that of the mean score for PGY5 residents in their first trial. At baseline, 65% of the subjects could not even complete the task, and only 10% achieved the mean junior resident level score. By learning the fundamental laparoscopic skills required to do the transferring drill (depth perception, eye-hand coordination, control of instruments, working with a fulcrum effect, etc), medical students developed the skill set to do the more complex suturing drill. This evidence of transferability demonstrates the value of MISTELS in technical skill acquisition.

CONCLUSION

MISTELS is a physical simulator designed to teach and measure technical skills fundamental to performance of laparoscopic surgery. This program has been described in detail, and the metrics associated with MISTELS have been extensively evaluated and shown to be reliable and valid. Practice using MISTELS results in enhanced technical skills that can be translated from the inanimate laboratory to the patient. MISTELS is an inexpensive, portable, and flexible system. To maximize the educational benefit of simulation in technical skills, such a program should be coupled with an educational curriculum teaching the knowledge and judgment fundamental to laparoscopic surgery. The SAGES has incorporated MISTELS as the manual skills component of its Fundamentals of Laparoscopic Surgery program. This comprehensive program puts together the cognitive and technical aspects of laparoscopy in a standardized educational curriculum, accompanied by a validated assessment tool that can verify learning.

Discussions

Dr. Bruce D. Schirmer (Charlottesville, Virginia): I wish to congratulate Dr. Fried and his colleagues from McGill on what I think is a landmark paper in quantifying skills testing for minimally invasive surgery.

I have 2 questions. Dr. Fried, what is your recommendation for the applicability and the use of this testing system in the FLS program for surgical training programs? Second, could an appropriate regulatory body in surgery adapt such a testing system to document competence in laparoscopic manual skills?

Dr. Gerald M. Fried (Montreal, Quebec, Canada): Many different program directors and fellowship directors are looking at ways to ensure that people are being trained up to a criterion level of performance.

The MISTELS system and the FLS program offer a standardized curriculum, like we heard with the thoracic surgery program this morning, so that people don't have to reinvent the wheel in terms of the process of training people.

The other important issue is the ability to measure the knowledge, judgment, and skill components of what people are actually learning. So not only do people take the training program but they can be measured against their peers. We actually have been able to define mean values and 95% confidence intervals for different levels of training; we can also establish criterion levels of performance that we want our residents to achieve in their program.

The second issue with respect to the regulatory board is really not something that I can answer. All I can say is that we have taken great pains to validate and ensure that the measurements are reliable, that they live up to those criteria that have been established for high stakes evaluation. I think that it is probably more appropriate for us as a body to ensure that we train ourselves and assess ourselves properly. If we do that within our professional organizations, we won't need to get outside regulators to implement less valid assessment strategies.

Dr. Thomas R. Gadacz (Augusta, Georgia): Dr. Fried, this is a very nice paper and an excellent presentation. Can these relatively inexpensive models that you have described be taken out of the training area and used at home? Some of the major factors are time and convenience. Can your residents practice at home with these models?

Dr. Gerald M. Fried (Montreal, Quebec, Canada): Thank you, Dr. Gadacz. I think that is a good point.

One of the things we have done in other studies in the past is to utilize the same exercises in a very inexpensive mirrored-type box trainer. We have a number of these available at McGill. The residents can sign them out like they can a textbook, take them home and practice their exercises, which has been useful.

The next level would be that the MISTELS/FLS boxes, which are now commercially available through SAGES, can be attached to a laptop, so you don't have to have a whole laparoscopic set-up. You can make sure that there are several of them around the various training institutions so that trainees have access to them. It is a little bit more expensive than a textbook, but it is much more easily available than, for instance, a virtual reality system would be.

Dr. Frank G. Moody (Houston, Texas): This is a superb piece of work because you bothered to validate the technique that you used and it is simple, and I imagine that it is relatively cheap. Maybe you can tell us about the cost of the box.

What do you recommend in terms of setting up a skills lab as these more advanced types of tools become available that could possibly work even better as a training device? What is needed in terms of the space, equipment, and personnel, etc?

Dr. Gerald M. Fried (Montreal, Quebec, Canada): I think that the cost of the FLS system is in the range of about $3000, including the objects, all the tools, and the didactic portion of the program. This price includes the opportunity to go to a test center and complete the assessment component.

I am a big believer at this point in time of the benefit of physical trainers as opposed to virtual reality trainers, because they are less expensive, much more easily modified, they have haptics, and they allow you to compare the use of different instruments.

For instance, if you get a new needle holder and you want to compare it to the currently available one, you could just try it out in your box. If you want to do a cannulation exercise, you can create a hollow tube and you can practice cannulating it with a cholangiogram catheter. It is enormously flexible.

I would think that at this point in time this is where I would put my energy and resources—until the point where the virtual reality based systems are more affordable and more adaptable. Right now the virtual reality systems probably belong in centers where they can be evaluated in the same way as the physical systems have been.

Dr. Nathaniel J. Soper (Chicago, Illinois): I would like to congratulate Dr. Fried and his group on amplifying the results of research that they have done now for several years. Clearly there are lots of pressures to move training out of the training room and into a laboratory, and only by validating these skills can we do this. I have a couple of questions for you, Dr. Fried.

Only about two-thirds of the variance of the intraoperative performance can be predicted from the MISTELS scores, as you showed in this elegant paper. What accounts for the other third?

You talked a little bit about the comparison between these training boxes and virtual reality simulators. Have you compared training on VR simulators to the video-training simulator in terms of operative performance, or compared to those who have not had any training whatsoever?

Finally, on a more mundane level, one of the problems with this system that I see is that it requires a trained proctor to actually be physically present to score performance, measuring the accuracy of cutting, recording elapsed time, etc. And that adds time, cost and difficulty to the system. Are you working on an automated scoring system such as motion analysis or something similar? And about how long does it take to run somebody through all 5 tasks?

Dr. Gerald M. Fried (Montreal, Quebec, Canada): Thank you, Dr. Soper. Your first question is a very interesting one. I would be very disappointed if 100% of performance in the operating room could be predicted on the basis of watching someone do these structured tasks. In fact, having about two-thirds of the variance predictable is actually quite remarkable. I expect that the other third is probably related to patient factors and anatomic recognition, which is not learned here. Some of the variance relates to the patient's disease, inflammation, confidence of the resident in an operating room environment compared to a lab, and procedural knowledge, none of which are measured. So I think that those factors account for at least one-third of the variance in what we are measuring in the operating room.

The comparison of a VR system to a physical simulator is also a very interesting question. A lot of energy has gone into studying VR systems. Right now the current state of the art in virtual reality training systems is they are an abstract representation of the skills that we have represented physically.

The haptics are very primitive. If at all present, they are very expensive. If you look at suturing, which is the skill that people look to learn the most in the training system, the behavior of the stitch is very poorly represented by the computer algorithms.

They are very inflexible in terms of the equipment, and the systems themselves are not intuitive. As a result, there is a learning curve just to understand how the systems work. I think at this point in time there are a lot of benefits of the physical system.

One of the problems with the physical systems, as you have stated, is that it ideally requires someone to be there to supervise. There is a lot of evidence that having a trained preceptor is probably the best educational model, in fact, rather than have people learn independently and perhaps incorrectly.

We have 2 VR systems in our lab, and we find that residents sometimes actually learn bad habits that give them good scores. It is a little like a video game; they learn shortcuts. These shortcuts can reinforce bad habits. Certainly people can go to the lab and they can practice in the system without having anybody there once someone has shown them how to do the various drills. They could measure certain aspects of performance themselves. That being said, if we are going to use it for an evaluation system for certification, you are going to need to have a proctor there.

We are working on a physical training system that will have sensors built into the box that will allow self-scoring. I think that will be an exciting addition, but at a substantially increased cost. So we have to figure out again what the cost-benefit ratio is.

We have not yet started a prospective trial comparing training or no training and looking at outcomes in the operating room because we have just recently developed an outcome measure in the operating room that we feel is reliable and valid.

What we want to do is to make sure that the evaluation in the operating room is generalizable; and, if so, we would like to construct a multicenter trial comparing virtual reality training, physical reality training, and no training, and look at performance in the operating room.

It takes about 45 minutes to run through the MISTELS system. Probably it would take you about 20 minutes, because you are very good. It takes me about 45 minutes. It just depends on how quick you are and how good your skill level is.

Your final question related to motion analysis, which is another interesting tool that is being used. Our feeling was that we wanted to have a measure that was intuitive and understandable to a student. If you tell them when they tie their knot that their knot wasn't square and it slipped, or that they didn't completely close the slit in the drain, they understand that. If you tell them that they have problems with depth perception, you could then feed them back into the lab and get them to practice those skills until they get up to a level that will conquer their problems with depth perception.

But if you tell them that their angle path length is very long, what does that mean? Although it is a valid measure of skill, it is not as useful as an educational model. We prefer to use something that is more intuitive that can relate in a feedback model as an educational tool tied to remedial exercises to enhance performance.

Dr. Jeffrey P. Gold (New York, New York): This is a really very important contribution, because not only have you described the technique for teaching, but you have also described the technique for measuring and begun to do some important investigation on the acquisition of technical operative skills. We have learned that it takes residents a variable amount of time and effort to pick up technical skills. Some acquire them faster than others. Unfortunately, some don't master them at all.

I am wondering, based upon your experience, how wide a spectrum of first measured skills you saw in your medical student group or in your junior resident group, and how different the rate of acquisition of the skills was. Were you able to predict in any way which students or residents would move along quickly or which progress more slowly with greater technical challenges?

I very much enjoyed your presentation and look forward to seeing more from your group.

Dr. Gerald M. Fried (Montreal, Quebec, Canada): One of the things that we learned from this work is the importance of developing criterion levels of performance which should be the goal of the educational system, as opposed to a specific length of time either in the lab or on a rotation. Our goal was to first identify those levels and then use that as the target level for training. The time to learn is variable. In that study of medical students, each one of them achieved the criterion level of performance within a relatively short period of time.

We have had one resident who we had followed over the residency program, earlier on in our simulator research, who never improved. This person was independently dropped from the program on the basis of lack of acquisition of technical skills. It is the only real outlier who I could say was untrainable.

We have not been able to predict, or we don't have enough data yet to allow us to predict, long-term skills or performance based on early data. We have a number of people now that we have tracked as they have gone through the program, and every one of them has significantly improved their performance as measured in the MISTELS. I think almost everybody is trainable, although the amount of time that it takes to train is quite variable.

Dr. Lawrence W. Way (San Francisco, California): Dr. Fried, I think that this is a fine work in creating a structured system to measure these things that we all consider to be basic skills. But I think it is premature to think that we actually have the pot of gold somewhere within our sight as far as being able to biopsy one's technical abilities and make big predictions based upon these limited types of observations, because the extent to which they really represent a practicing surgeon is limited. To me it is comparable to what we always did with a needle holder and sutures, tying on our bed sheets, picking up peas with forceps off the kitchen table, and that sort of thing, which could be studied in the same way.

Now, if one looks at the literature from the vast amount of studies in the acquisition of procedural skills in other domains, such as athletics, which we often compare ourselves with, what appears to be the case is that people use different talents at different stages of learning. And as Dr. Gold just said, the learning goes over a long period, and it is much more complex than is represented by something that is sort of manual dexterity, which is what we are looking at here.

So although this is a good start, I think it is a little early to be giddy about our possibility of maybe predicting a full career from what we can do in our simulation boxes right now. When I put it that way, it is hard for you to disagree. But I am really just trying to say that we should exercise some caution before we get too overblown about it.

Dr. Gerald M. Fried (Montreal, Quebec, Canada): Dr. Way, I expect that you would be very unhappy to have a resident come to the operating room to do an open hernia repair who can't tie a knot. In fact, I am sure that you want that person practicing those basic skills on their bed posts and lab coats until they can tie their knots automatically and hold their needle holders appropriately.

We are not measuring competence in the operating room with MISTELS. We are saying that there is a basic skill set and we have tried to identify some of those basic skills that are different in laparoscopy than open surgery. Before someone comes to the operating room who is going to operate on my mother, I want to make sure that at the very basic level he or she has mastered the fundamental skill set. Those skills should be acquired in the laboratory. They shouldn't be learned in the operating room.

I am tired of seeing people come in to the OR and put their instruments right through the liver because they haven't yet learned cues for depth perception. Or their hands are all over the place because they are not used to the amplification of movement of an instrument placed through a trocar.

What we are saying here is that there is a basic skill set; that skill set is different for laparoscopy. There is an opportunity to learn these skills in an environment that is much more comfortable, under less pressure than the OR, and does not put our patients at risk. Once they have acquired the fundamental skills, then the judgment, the knowledge, the interpretation of what is seen must be learned in order for someone to be competent.

I am not saying that MISTELS is a measure of a person's ability or competence as a surgeon, but it is a measure of a skill set that they need to obtain. If they don't have basic skills, they will never be competent.

Footnotes

Supported by an unrestricted educational grant by Tyco Healthcare, Canada.

Address correspondence to: Gerald M. Fried, MD, 1650 Cedar Avenue, #L9.309, Montreal, Quebec, Canada H3G 1A4. E-mail: gerald.fried@mcgill.ca.

REFERENCES

1. Feldman LS, Sherman V, Fried GM. Using simulators to assess laparoscopic competence: ready for widespread use? Surgery. 2004;135:28–42.

2. Fried GM. Simulators for laparoscopic surgery: a coming of age. Asian J Surg. 2004;27:1–3.

3. Derossis AM, Fried GM, Abrahamowicz M, et al. Development of a model for training and evaluation of laparoscopic skills. Am J Surg. 1998;175:482–487.

4. Fried GM, Derossis AM, Bothwell J, et al. Comparison of laparoscopic performance in vivo with performance measured in a laparoscopic simulator. Surg Endosc. 1999;13:1077–1081.

5. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2003;236:458–464.

6. Fraser SA, Klassen DR, Feldman LS, et al. Evaluating laparoscopic skills: setting the pass/fail score for the MISTELS system. Surg Endosc. 2003;17:964–967.

7. Derossis AM, Antoniuk M, Fried GM. Evaluation of laparoscopic skills: a 2-year follow-up during residency training. Can J Surg. 1999;42:293–296.

8. Feldman LS, Hagarty SE, Ghitulescu G, et al. Relationship between objective assessment of technical skills and subjective in-training evaluations in surgical residents. J Am Coll Surg. 2004;198:105–110.