Abstract

Background:

As the attitude to adverse events has changed from the defensive “blame and shame culture” to an open and transparent healthcare delivery system, it is timely to examine the nature of human errors and their impact on the quality of surgical health care.

Methods:

The approach of the review is generic rather than specific, and the account is based on the published psychologic and medical literature on the subject.

Conclusions:

Rather than detailing the various “surgical errors,” this review discusses categories of error committed within the surgical setting by surgeons as front-line operators. The important components of safe surgical practice identified include organizational structure with strategic control of healthcare delivery, teamwork and leadership, evidence-based practice, proficiency, continued professional development of all staff, availability of wireless health information technology, and well-embedded incident reporting and adverse events disclosure systems. In our quest for the safest possible surgical health care, there is a need for prospective observational multidisciplinary (surgeons and human factors specialists) studies as distinct from retrospective reports of adverse events. There is also a need for research to establish the ideal system architecture for anonymous reporting of near-miss and no harm events in surgical practice.

The psychologic basis of human errors as they affect surgical practice is reviewed, and a broad categorization of errors that may occur in the surgical setting and components of a holistic defense system to ensure safe practice are outlined.

The title of the Institute of Medicine's report “To Err Is Human”1 is a partial quotation of a famous line from Alexander Pope's poem An Essay on Criticism (1711). The other half of the line reads “to forgive divine,” which has been persistently overlooked by all stakeholders of health care.

The propensity for error is so intrinsic to human behavior and activity that scientifically it is best considered as inherently biologic, since faultless performance and error result from the same mental process. All the available evidence clearly indicates that human errors are random unintended events. Indeed, although we can predict the probability of specific errors by well-established Human Reliability Assessment techniques,2 the actual moment at which an error occurs cannot be predicted, and certainly there is no “aura” to warn that an error is about to happen in any medical setting. From the behaviorist perspective, error is the flip side of correct human performance, itself the product of cognitive ability and the level of psychomotor skill, which in professions requiring dexterity and eye-hand coordination (such as surgery) determines safe and optimal execution (proficiency). In this context, one has to differentiate between “innate ability or aptitude,” which an individual is born with and brings to particular tasks, and “skill” in execution, which is acquired by training and reinforcement.

Errors may or may not have a consequence (when the error translates into an accident or adverse event). The nature of the error is the same irrespective of outcome: whether there is no consequence or a consequence (adverse event) depends on chance and on the prevailing circumstances, which are external to the actor and which Reason3,4 attributes to latent defects within the system in which the individual operates. There are 2 scenarios in which an error does not result in an adverse event: near-miss (close call) and no harm events. A near-miss occurs when an error is realized just in the nick of time and abortive action is instituted to cut short its translation. In the no harm scenario, the error is not recognized and the deed is done but, fortunately for the actor, the expected adverse event does not occur. The distinction between the 2 is important and is best exemplified by reactions to administered drugs in allergic patients. A prophylactic injection of cephalosporin may be stopped in time because it suddenly transpires that the patient is known to be allergic to penicillin (near-miss). If this vital piece of information is overlooked and the cephalosporin administered, the patient may fortunately not develop an anaphylactic reaction (no harm event).
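The three possible outcomes of an error described above can be expressed as a small decision rule. The following is only an illustrative sketch: the names `Outcome` and `classify_error` and the two boolean inputs are assumptions introduced here, not terminology from the literature cited.

```python
from enum import Enum


class Outcome(Enum):
    NEAR_MISS = "near-miss"
    NO_HARM = "no harm event"
    ADVERSE_EVENT = "adverse event"


def classify_error(intercepted: bool, harm_occurred: bool) -> Outcome:
    """Classify an error that has already been committed.

    intercepted:   the error was recognized in time and the action aborted.
    harm_occurred: the action was completed and the patient was harmed.
    (Both parameters are hypothetical labels chosen for this sketch.)
    """
    if intercepted:
        # Error caught before it translated into an accident.
        return Outcome.NEAR_MISS
    if harm_occurred:
        # Error went unrecognized and the expected harm followed.
        return Outcome.ADVERSE_EVENT
    # Error went unrecognized, but by chance no harm followed.
    return Outcome.NO_HARM


# Cephalosporin example: injection stopped once the penicillin allergy is recalled.
print(classify_error(intercepted=True, harm_occurred=False).value)
# Allergy overlooked, drug given, no anaphylactic reaction.
print(classify_error(intercepted=False, harm_occurred=False).value)
```

Note that the error itself is identical in all three branches; only interception and chance separate the outcomes.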

Problems With Expert Evaluation of Adverse Events Caused by Human Error

The outcome of any human activity is used by society as the yardstick of performance,5 based on expert opinion or more structured root cause analysis. Since these verdicts are based on retrospective assessment, they are inevitably subject to hindsight bias, first documented by Fischhoff.6,7 The severity of the adverse event further influences the verdict (outcome severity bias). The study in anesthesia reported by Caplan et al8 documented an inverse relationship between outcome severity and the judgments of the expert anesthesiologists on the appropriateness of care. Thus, although they may satisfy the need of society to establish what went wrong, these retrospective expert evaluations of adverse events do not constitute a robust approach to the study of human error. One of the reasons for this is that, in the analysis of adverse events, there is often insufficient information on the dilemmas, uncertainties, and demands that faced the practitioner involved in the accident. These reports may therefore provide an incomplete picture of the evolution of the adverse event. However, there is some evidence that hindsight bias can be reduced by experience in accident reconstruction.9,10 The problem of hindsight bias in medicolegal cases is particularly relevant to surgical practice and has been insufficiently studied. Judges are also influenced by outcome severity and judge iatrogenic injury cases resulting in long-term disability more harshly than cases in which the same injuries (errors) are compatible with full recovery. In their turn, medical experts involved in these cases (prosecution and defense) can only provide considered opinions gained from retrospective analysis of the case notes. A Dutch report11 has identified 3 problems relating to medical expert reporting: 1) the medical experts may push up standards (or indeed lower them when acting for the defense), 2) experts often provide different/contrasting opinions, and 3) their judgments are influenced by their knowledge of the outcome. Another report has suggested that information on outcome should be withheld from experts providing reports.12 The feasibility of this recommendation in such medicolegal cases is suspect.

Positive Side of Errors

Errors under controlled conditions have a positive effect on learning and are thus important in training and acquisition of skills,13 as the trainee is able to appreciate the cause of the error, how to correct it and, more importantly, how to avoid it. This well-known observation was confirmed by a study14 that compared 2 trainee groups: error training versus error-avoidant training. In subsequent testing, the error-training subjects outperformed the error-avoidant group. Technical errors enacted by trainee surgeons within skills laboratories on both physical and virtual reality simulation models constitute an essential component of the gain in proficiency for the execution of component surgical tasks such as suturing, tissue approximation, and anastomosis, provided the necessary feedback is given.15,16 Skills laboratories are nowadays recognized as essential adjuncts to the clinical apprenticeship system.

Performance at the Coal Face (Sharp End)

Performance at the sharp end has been studied and reported by Cook and Woods,17 who outline 3 categories of factors that affect performance of front-line operators: 1) knowledge in context, how knowledge relevant to the situation is recalled; 2) mind-set, how individuals focus on one perspective/part of a complex and changing environment, including how they shift their attention over time as the situation evolves; and 3) interacting goals, how individuals balance or make tradeoffs between interacting conflicting goals.

Knowledge-in-context factors include incorrect (buggy) knowledge, inert knowledge, and oversimplifications. Possession of knowledge is not enough for expertise since this requires organization such that it can be activated and applied as and when the situation demands.18 The related problem is known as inert knowledge (knowledge not activated and used when required). Expertise is dependent on situation-relevant knowledge that is accessible under the conditions in which the task is performed and underpins the ability of the practitioner to manage varying and difficult situations.

Although simplifications are considered useful as they aid the understanding of complex biologic pathways/processes, they can, when lacking essential details (oversimplifications), lead to misconceptions and hence to errors. The adverse effect of oversimplifications among medical students and doctors has been documented.19

Mind-set problems include defects in attention control (distraction), loss of situation awareness,20 and fixation (cognitive lock up) with failure to revise the situation as it evolves/changes. Situation awareness refers to the cognitive processes involved in forming and changing mind-set. In the medical context, situation awareness involves the identification of an evolving situation, which may lead to an adverse event unless abortive or corrective action is taken.

Fixation (cognitive lockup) relates to the fact that accidents evolve rather than confront the operator out of the blue. Hence, the proficient expert surgeon makes initial decisions with the full realization that these may change if or as the situation evolves and thus is able to revise his or her strategy as dictated by the situation through an updating feedback process.21 Cognitive lockup occurs when this revision process breaks down and the surgeon remains fixed on an erroneous assessment of the situation and fails to revise the strategy in time to avoid a disaster.22,23 In surgery, it is often occasioned by stress such as operation on a life-threatening emergency or an unexpected life-threatening complication during a planned elective intervention.

Garden path problems24 are common in laparoscopic surgery, where the surgeon's interface with the operative situation is limited to the image display of the operative field. For example, the surgeon may mistake a right hepatic duct for the cystic duct and thus be led up “the garden path” toward the enactment of an iatrogenic bile duct injury. These errors reflect a failure of the problem-solving process.

Errors in the Surgical Setting

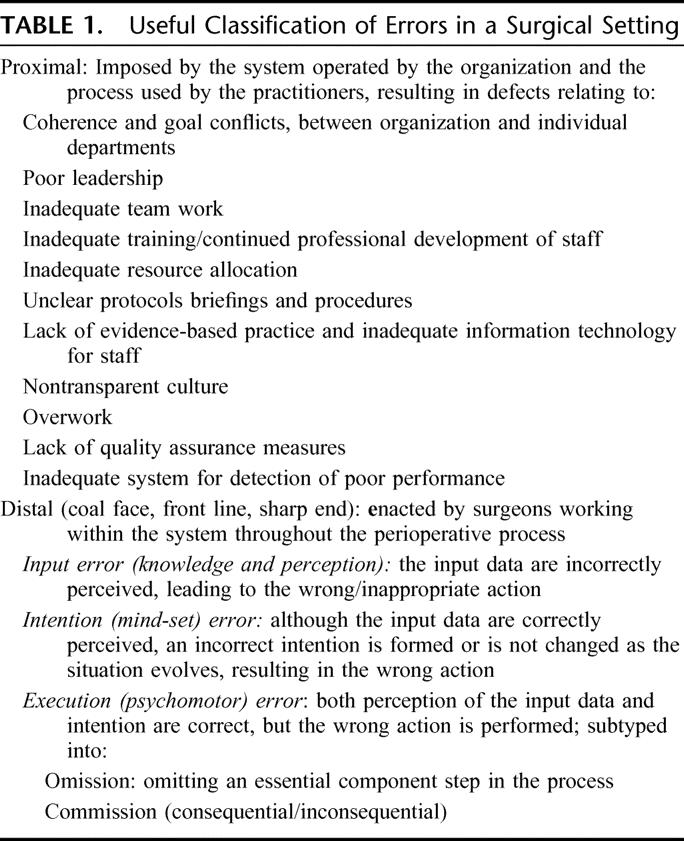

In the first instance, it is important to stress that errors and their nature are the same in all human activities/professions. Thus, it is more appropriate to refer to errors in the medical/surgical setting, as there are few or no “medical” errors, ie, errors that are exclusively specific to healthcare delivery with no equivalent in other professions. Nonetheless, it is useful for practical purposes to distinguish 2 broad categories of surgical errors: proximal and distal (coal face, front line, sharp end). The former relate to the system and process and the latter to the individual practitioners operating within the system (Table 1). Only factors relevant to errors in the operating room are discussed, since surgeons share all other distal errors they may commit with physicians in other disciplines.

TABLE 1. Useful Classification of Errors in a Surgical Setting

Surgical Errors in the Operating Room

Extrapolation from reported studies1,25–28 indicates that 40% to 50% of hospital errors are enacted in the OR. However, there are certain qualifications, especially as these studies have overlooked the importance of the “intensivity factor” (the opportunities or encounters for an error to occur) as the denominator. These absolute figures may thus give a misleading verdict on error/incident rates and probabilities in the OR setting. Consider an average general surgical OR suite with 10 operating rooms, in each of which an average of 4 operations are performed in a day (conservative estimate). If we assume that, aside from the steps of the operation itself, these patients from the time of induction of anesthesia to exit from the recovery ward receive a median of 21 “treatment events” (based on personal observation of medium severity operations), these 40 patients would have collectively undergone 840 treatment activities in 1 day. If we compare the opportunity for error affecting these patients undergoing surgery with that in a surgical ward of 40 patients convalescing from surgery, the opportunity for error in the ward would be much less. Expressed as a ratio of incidents/number of treatment activities, my assumption (since there are no data) is that the risk of an error in the 2 settings would be little different, although the absolute number of adverse events is likely to be higher in the OR. The effect of the intensivity factor is likewise operative in intensive care units. One study documented that intensive care entails 178 activities per patient per day and reported an average of 1.2 errors per patient per day.29 This works out to a safety ratio of 0.955 compared with a civilian airline ratio of 0.98.
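The arithmetic behind the intensivity factor can be laid out in a few lines. This is a minimal sketch using only the figures quoted above; the function names are illustrative assumptions. It computes the plain errors-per-activity rate for the intensive care figures and does not attempt to reproduce the 0.955 safety ratio, whose derivation is not given in the text.

```python
def treatment_activities(rooms: int, operations_per_room: int,
                         events_per_patient: int) -> int:
    """Total treatment activities per day: the denominator (intensivity factor)."""
    return rooms * operations_per_room * events_per_patient


def incidents_per_activity(incidents: float, activities: float) -> float:
    """Incident rate normalized by the number of opportunities for error."""
    return incidents / activities


# OR suite example: 10 rooms x 4 operations x 21 treatment events per patient.
or_activities = treatment_activities(10, 4, 21)
print(or_activities)  # 840

# Intensive care figures quoted in the text: 1.2 errors and 178 activities
# per patient per day.
icu_rate = incidents_per_activity(1.2, 178)
print(f"{icu_rate:.4f} errors per activity")
```

Comparing settings on incidents per activity, rather than on absolute incident counts, is the point of the argument: a busy OR suite can generate more incidents than a ward while carrying a similar risk per treatment activity.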

Indeed, observational and time-motion studies in the OR have been few. One such study by a multidisciplinary team of human factors specialists and surgeons involving continuous recording of observations in the OR of 10 complex operations identified problems in communication, information flow, workload, and competing tasks as having a measurable negative impact on team performance and patient safety in all these cases.30

The OR is the classic zone of conflicting goals between the individual practitioners, surgical teams, and the institution. The problem arises when the organization, as is usually the case, is geared to maximizing efficiency and productivity of the OR suite without providing adequate OR personnel resources. Conflicts also arise in relation to targets for reducing “turn-around” times, which may impact on safety. The subject of safe and sustainable OR activity, in relation to the number of interventions that can be carried out in a safe system and the resources required, needs to be addressed by prospective observational and time-motion studies. The goals of such teams must furthermore be in line with those of the institution such that conflicts are avoided. Within the National Health Service, targets imposed on Hospital Trusts to reduce waiting times for elective surgery have certainly raised problems. An example of goal conflict between team and organization was documented by a survey on attitudes to teamwork and safety in the operating room in 17 hospitals, all within the National Health Service in Scotland.31 The results of this postal survey show that, while the teams working in the ORs placed a clear priority on patient safety against all other goals, eg, waiting lists, cost cutting, etc, some members felt that this was not fully endorsed by the administration.

The manner and procedures by which an organization reacts to adverse incidents, near-misses, and overt disasters will materially influence how the practitioners at the coal face deliver health care. A transparent reporting and disclosure policy shared by all specifically avoids covert defensive medical practice with its inevitable increased costs from unnecessary investigations, specialist consultations, and delays. The impact of adverse events in the surgical setting on the various stakeholders (organization, society, legal) varies. Limelight (newsworthy) errors hit the headlines and account for the majority of litigations, eg, wrong site surgery (identification errors), misappropriation (leaving instruments, etc, inside patients), and technical operative errors. Cinderella errors, eg, resuscitation, prophylaxis errors, situation awareness, etc, are probably more important in the context of healthcare safety because of their greater relative frequency (although data are not available). The concern in this context is that defense measures and protocols are rightfully used against limelight errors, but defense systems against Cinderella errors may be less robust.

Components of Safe Surgical Practice

In recent years, the systems approach to safe healthcare delivery, based on human factors engineering, has dominated the published literature.32–35 While its importance is acknowledged, by itself it does not provide the entire solution, certainly as far as errors in the surgical setting are concerned, for the simple reason that surgeons in operating on their patients constitute the actual treatment and this human activity is much more complex than flying civilian airplanes or manning complex nuclear plants. Furthermore, all Boeing jumbo jets are the same, but patients undergoing surgery for the same disease differ markedly from each other. Error-free delivery of health care is a utopia; what is realizable is error-tolerant health care (one that prevents or reduces the risk of translation of errors into harm to our patients). In achieving this, all 3 elements (system, process, and individual practitioners) must be up to scratch.36 This holistic paradigm has to be the answer to achieving the safest possible health care, reaching the ALARP region (as low as reasonably practicable), bearing in mind that health care is a far more dangerous profession (1 death per 1000 encounters) than either the scheduled airlines or nuclear industries with 1 death per 100,000 encounters.

Safe surgical practice is a top-down process whose components include: organizational structure with strategic control of healthcare delivery, teamwork and leadership, evidence-based practice, continued professional development of all staff (training and education), availability of health information technology, proficiency, and well-embedded incident reporting and disclosure systems.

Organizational Structure and Strategic Control of Healthcare Delivery

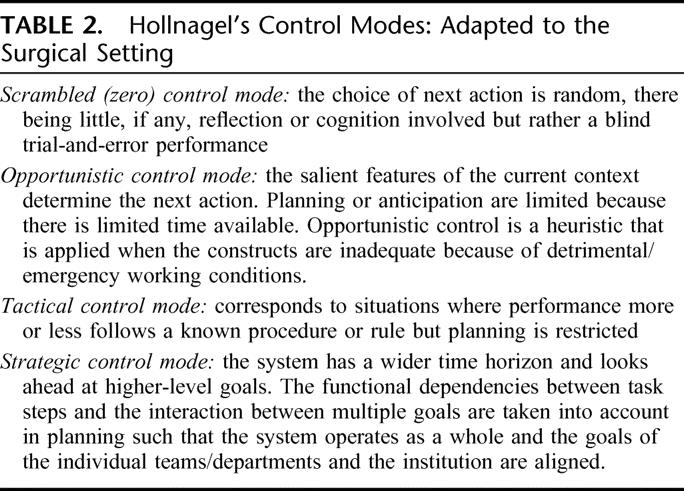

Modern cognitive science is based on functional models proposed by Neisser37 and Hollnagel,38 the originator of the Contextual Control Model used extensively in aviation but which is relevant to healthcare practice. This model outlines 4 types of control modes: scrambled, opportunistic, tactical, and strategic, each associated with a particular type of human performance (Table 2). In daily life, humans usually function in Hollnagel's opportunistic and tactical control modes in an “equilibrium condition between feedback and feed-forward, which corresponds to an efficient use of the available resources.” Human cognitive performance in the healthcare setting varies from the opportunistic mode in life-threatening emergency situations to the tactical control mode in elective health care. However, the strategic control where the goals of individual teams or departments are aligned with those of the institution is rarely encountered largely because of different priorities, defects in the organizational structure, and inadequate resources. In my view, strategic control is what the medical profession should aim for because it effectively integrates the individual activity of the practitioners with the system and the organization, a holistic approach with active patient participation.

TABLE 2. Hollnagel's Control Modes: Adapted to the Surgical Setting

Teamwork and Leadership

Although the importance of teamwork in all high-risk professions has been widely recognized, team training, especially at the multiprofessional level, has been largely overlooked in surgical training programs. Teamwork in health care involves a significant shift from individual provider toward collective responsibility to patient care. Teamwork does not threaten leadership; instead, it strengthens it by flattening the apex of the hierarchy pyramid and broadening its base with recognized benefits: 1) team spirit and interactive learning/development, 2) more effective resource management, 3) improved communication, and 4) improved performance and hence quality of care.39

Effective leadership of such medical teams is distinguished by 3 attributes: ability to direct and function in context, integrity, and professional standing. The most appropriate type of teamwork and leadership in medicine is akin to that of Crew Resource Management, now in its fifth version (which includes error management) and used so effectively by civilian airlines.40,41 It trains senior staff to listen to subordinate staff (concerns, advice, clarification matters) and to consider their input and perspective in the decision- and action-making process. Three processes operative in such an integrated team approach are of direct relevance to operating room practice: clear procedures, checklists, and briefings and debriefings. The Crew Resource Management model is also the basis for team training in the management of critical situations such that every member of the team knows exactly what to do and how.

Surgical Proficiency

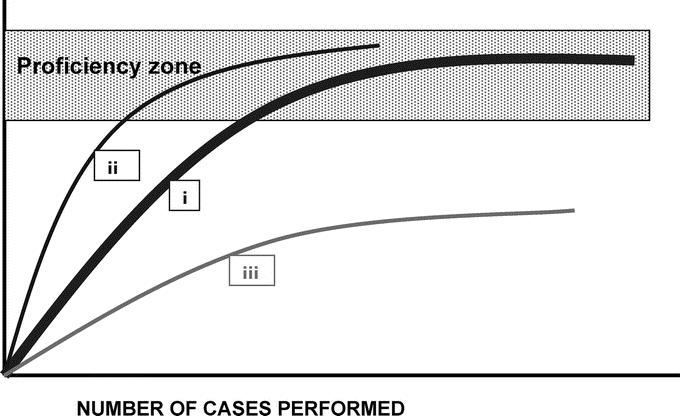

The word proficient is synonymous with expert in most dictionaries. In the surgical context, proficiency refers to expert independent execution of treatment (operation). Surgical proficiency is best modeled by a zone rather than a sharp threshold, since surgeons bring different levels of innate abilities to the task (average, above average, below average).42 In this model, the proficiency zone represents what society expects of fully trained surgeons: an outcome that varies from one surgeon to another within very narrow limits defined by the upper and lower thresholds. For any given operation, some surgeons will perform at the top end (at the upper threshold of the proficiency zone); the performance of the majority of surgeons (as judged by clinical outcome) for the same operation will be within the zone (acceptable standards of care); but none should be below the lower threshold. The proficiency-gain curve represents the course (with time and number of procedures performed) by which an individual surgeon reaches the proficiency zone, when he or she is able to perform the operation consistently with a good norm-referenced outcome (Fig. 1).

FIGURE 1. Model of surgical proficiency zone (indicated by the shaded area): the proficiency-gain curves are specific to individual surgeons and to individual operations and indicate the number of interventions (of a specific nature) performed to reach proficient faultless execution in the automatic unconscious mode when the surgeon is able to perform the operation consistently well without having to think about it. The curve (i) represents the majority (with normal innate attributes for manual and hand-eye coordination tasks). The curve (ii) represents the naturally gifted surgeons (with above average innate attributes) who become master surgeons and perform at the top of the proficiency zone, whereas the curve (iii) represents the few who never reach proficient execution. The only scientific method for studying these proficiency-gain curves is by techniques involving observational clinical human reliability assessment (OCHRA).

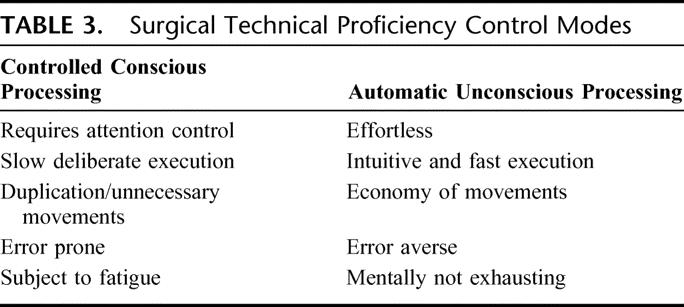

Unfortunately, in the surgical literature, the proficiency-gain curve is usually and most inappropriately referred to as the learning curve. There are 2 reasons why this terminology should be dropped. The first is because it is inappropriate on semantic grounds: learning refers only to cognitive knowledge (eg, language, steps of the operation), whereas proficiency refers to the ability to execute the procedure well, a process by which the individual passes from the controlled conscious processing of the trainee to the automatic unconscious mode of the expert (Table 3). Obviously, the transition from one to the other with training and experience is ultimately dependent on the innate abilities for psychomotor eye-hand coordination skills that individuals bring to operative surgery and which account for the different slopes of the curve shown in Figure 1, between “master” and “average” fully competent surgeons. The second reason for avoiding this terminology relates to the connotation that lawyers put on it in cases of litigation: that surgeons “learn” at the expense of patient outcome. The acquisition of proficiency in the execution of an operation can be studied by methodology adapted from Human Reliability Assessment techniques.43 The various published reports on “learning curves” for specific operations based exclusively on incidence of iatrogenic injuries and morbidity rates, and reaching conclusions/recommendations on the “x” number of operations required for acquisition of proficiency in the execution of an operation, lack both science and validity. The truth is that the proficiency-gain curve is specific to the individual as it is to the intervention.

TABLE 3. Surgical Technical Proficiency Control Modes

Proficiency also entails early detection and correction of underperformance by the “impaired physician” (mental health or substance abuse) or inept surgeon, before undue harm is done. Although there is now a clear obligation on all practitioners to report all instances of impairment among colleagues, an organizational system based on internal structured continuous appraisal of all staff is more effective in the early detection of both categories of underperformance and should replace exclusive reliance on whistleblowers. In some countries, this appraisal system is used as the basis for revalidation of doctors in the various professions.44,45 Revalidation is essentially a process that demonstrates that doctors remain fit to practice. Rather than posing a threat, it is primarily concerned with confirming that good doctors are providing good medical care and provides support for their continued professional development.44 In safeguarding patients, it identifies weaknesses and impairments in certain doctors (a small percentage) and attempts to correct these in a safe and supportive environment. Revalidation equally protects doctors from unfounded criticisms of their practice. It should pose no threats to the proficient surgeon.

Access to Medical Information Technology at the Coal Face

It seems obvious that access to health information technology at the coal face of medical practice is of value in improving quality of care and patient safety, although there seem to have been no prospective studies to confirm this widely prevalent view. The subject has been recently reviewed46 by a detailed scrutiny of the published English-language literature. The review was based on 257 studies that met the inclusion criteria, 25% of which were from only 4 academic institutions that used their own internally developed systems. Only 9 studies reported on commercially available systems. The 3 benefits identified by this review were: increased adherence to guideline-based care (evidence-based practice), enhanced surveillance and monitoring, and decreased medication errors. The authors also found evidence for increased efficiency as a consequence of decreased utilization of care but were unable to confirm cost benefit because of limited data. The findings of this review stress 3 key issues: the need for homogeneous systems for medical information technologies, their availability by wireless systems, and their more widespread usage in hospitals, all essential if we are to confirm their effectiveness in improving patient safety and quality of care.

Incident Reporting Systems

There are 2 issues that need clarification: 1) the mandatory reporting of all adverse events to the appropriate Hospital Safety Committee with their disclosure to patients and relatives; and 2) the anonymous reporting of near-misses and no harm events.47–55 The importance of near-misses and no harm events stems from the documented observation of their frequency: they occur 300 to 400 times more often than actual adverse events and thus enable quantitative analysis and modeling.54 The limited experience in health care with anonymous incident reporting systems, largely in anesthesia, critical care, primary and emergency care, and neonatology indicates their general acceptability and fewer barriers to data collection than actual morbidity, allowing complete evaluation and feedback to formulate prophylactic strategies.

The crucial issue for anonymous incident reporting that would be applicable to surgery is the appropriate choice of system architecture, one that would lend itself to widespread adoption and ease of use by surgeons. The subject of systems architectures is well reviewed by Johnson.56 Unfortunately, there has been no reported evaluation of the relative merits of the various architectures used. The simplest is the local oversight reporting architecture. This is confidential rather than anonymous and, in the case of hospitals, such systems report to the Hospital Safety Management Committee. The second is the simple monitoring architecture, exemplified by the Swiss Confidential Incident Reporting in Anesthesia System.53 The limitation of this web-based system is that it lacks a central manager and therefore precludes follow-up investigations. Its advantages include simplicity and general acceptability. The third category, known as regulated monitoring architecture, uses an external agency, which on receipt of the contribution can go back to the contributor for clarification of the nature of the incident. The fourth category is known as the gatekeeper architecture, and this is used by all major national systems. It involves a greater degree of managerial complexity. The contributions are initially sent to a local manager, who may take remedial action before passing them on to the national gatekeeper. In turn, the gatekeeper decides on the importance of each incident before allocating investigative resources to it. The last category, the devolved architecture, avoids the use of a gatekeeper and instead uses local supervisors, who decide on the importance of an incident and report to the Safety Management Group for allocation of investigative resources.
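The 5 architectures described above can be tabulated as a simple data structure to make their differences easier to compare. This is a sketch only: the class and field names are assumptions introduced for illustration, and a field is left as `None` wherever the description above does not state the property.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Architecture:
    name: str
    report_route: str
    # True/False only where stated in the text; None means not stated.
    follow_up_with_contributor: Optional[bool]


ARCHITECTURES = [
    Architecture("local oversight",
                 "Hospital Safety Management Committee", None),
    Architecture("simple monitoring",
                 "web-based system without a central manager", False),
    Architecture("regulated monitoring",
                 "external agency", True),
    Architecture("gatekeeper",
                 "local manager, then national gatekeeper", None),
    Architecture("devolved",
                 "local supervisors, then Safety Management Group", None),
]


def supports_follow_up(architectures: List[Architecture]) -> List[str]:
    """Names of architectures explicitly described as allowing follow-up."""
    return [a.name for a in architectures
            if a.follow_up_with_contributor is True]


print(supports_follow_up(ARCHITECTURES))  # ['regulated monitoring']
```

A table of this kind also shows how many properties remain unreported for each architecture, which is consistent with the lack of any comparative evaluation.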

Error Disclosure to Patients and Relatives

Errors in hospital care are ultimately the responsibility of the organization and thus relate as much to the organization as to the individual provider(s) involved in the accident. The systems approach necessitates 1) a hospital safety policy/error investigation team, whose remit should include near-miss and no harm incidents as well as actual adverse events, and 2) a system disclosure team with members fully trained in the correct nondefensive empathic disclosure of errors/accidents to patients and/or relatives.57 The disclosure has to be full. A reported study comparing operative team members' and patients' perceptions of error and its disclosure to patients, based on interviews of team members (surgeons, nurses, anesthesiologists) and patients, showed that, while team members and patients agreed on what constitutes an error, there was discordance in the perception of what should be reported. Whereas most patients strongly advocated full disclosure of errors (what happened and how), team members preferred to disclose only what happened.

The general recommendation is that the disclosure team should exclude the practitioner involved in the adverse event for obvious reasons, eg, emotional state, partiality, etc. Studies have confirmed that the manner of disclosure of the adverse event caused by medical error influences the response to the disclosure from patients and relatives. One such Internet-based study in Germany involving 1017 participants confirmed the severity of the outcome as the most important single factor in the choice of action (including sanctions against the provider) but also showed that an honest and empathic disclosure significantly decreases the probability of retaliatory action.58

CONCLUSION

Although the problem of adverse events to our patients resulting from human error has remained center stage and has been extensively reported in the last 8 years, we are still some way off resolving it. More scientific and prospective observational studies are needed, with less emphasis on retrospective chest-beating reports. Clinicians have to work closely with human factors specialists in future studies. Key areas that merit investigation include an optimal and standardized architecture for reporting both adverse events and near-miss/no harm incidents, and standardized medical information technologies available on tap through easily accessible, portable electronic devices. Studies based on near-miss/no harm incidents are likely to provide more useful and prophylactic information on errors in the medical setting than root cause analysis of actual adverse events. The “systems” approach by itself will not suffice, as patients are simply not machines and the proficiency of the individual practitioners must be guaranteed: the two are complementary. Revalidation should pose no threat to any good doctor, and surgeons would do well to embrace it. Whatever solution is reached, hospital health care must operate in Hollnagel's strategic mode, with no conflict between the goals of the individual teams and those of the organization: the two must be perfectly aligned.

Footnotes

Reprints: Alfred Cuschieri, Department of Surgery, Division of Medical Sciences, Scuola Superiore S'Anna di Studi Universitari, P.zza dei Martiri della Liberta' n.33, Pisa 56127, Italy. E-mail: alfred.cuschieri@sssup.it.

REFERENCES

- 1.Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press, 2000.

- 2.Kirwan B. A Guide to Practical Human Reliability Assessment. London: Taylor & Francis, 1994.

- 3.Reason J. Human Error. Cambridge, MA: Cambridge University Press, 1990.

- 4.Reason J. Education and debate. Human error: models and management. BMJ. 2000;320:768–770.

- 5.Lipshitz R. Either a medal or a corporal: the effects of success and failure on the evaluation of decision making and decision makers. Org Behav Hum Decis Proc. 1989;44:380–395.

- 6.Fischhoff B. Hindsight ≠ foresight: the effect of outcome knowledge on judgment under uncertainty. J Exp Psychol. 1975;1:288–299.

- 7.Fischhoff B. For those condemned to study the past: heuristics and biases in hindsight. In: Kahneman D, Slovic P, Tversky A, eds. Judgment Under Uncertainty: Heuristics and Biases. Cambridge, MA: Cambridge University Press, 1982.

- 8.Caplan R, Posner K, Cheney F. Effect of outcome on physician judgments of appropriateness of care. JAMA. 1991;265:1957–1960.

- 9.Henriksen K, Kaplan H. Hindsight bias, outcome knowledge and adaptive learning. Qual Saf Health Care. 2003;12(suppl 2):ii46–ii50.

- 10.Hertwig R, Fanselow C, Hoffrage U. Hindsight bias: how knowledge and heuristics affect reconstruction of the past. Memory. 2003;11:357–377.

- 11.Girard RW, Stolker CJ. The physician as an expert in medical negligent cases. Ned Tijdschr Geneeskd. 2003;147:819–823.

- 12.Hugh TB, Tracey GB. Hindsight bias in medical legal expert reports. Med J Aust. 2002;176:277–278.

- 13.Arnold B, Roe R. User errors in human-computer interaction. In: Frese M, Ulich E, Dzida W, eds. Human Computer Interaction in the Work Place. Amsterdam: Elsevier, 1987:203–220.

- 14.Frese M, Brodbeck F, Heinbokel T, et al. Errors in training computer skills: on the positive function of errors. Human-Computer Interaction. 1991;6:77–93.

- 15.Schijven MP, Jakimowicz JJ, Broeders IA, et al. The Eindhoven laparoscopic cholecystectomy training course. Improving operative room performance using virtual reality training: results from first EAES accredited virtual reality training curriculum. Surg Endosc. 2005;19:1220–1226.

- 16.Tang B, Hanna GB, Carter F, et al. Competence assessment of laparoscopic operative and cognitive skills: objective structured clinical examination (OSCE) or observational clinical human reliability assessment (OCHRA). World J Surg. 2006;30:527–534.

- 17.Cook RI, Woods DD. Operating at the ‘sharp end:' the complexity of human error. In: Bogner MS, ed. Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum, 1994.

- 18.Bransford J, Sherwood R, Vye N, et al. Teaching and problem solving: research foundations. Am Psychologist. 1986;41:1078–1089.

- 19.Feltovich PJ, Spiro RJ, Coulson R. The nature of conceptual understanding in biomedicine: the deep structure of complex ideas and the development of misconceptions. In: Evans D, Patel V, eds. Cognitive Science in Medicine: Biomedical Modeling. Cambridge, MA: MIT Press, 1989.

- 20.Gopher D. The skill of attention control: acquisition and execution of attention strategies. In: Attention and Performance, vol. 14. Hillsdale, NJ: Lawrence Erlbaum, 1991.

- 21.Woods DD. The alarm problem and directed attention in dynamic fault management. Ergonomics. 1995;38:2371–2393.

- 22.De Keyser V, Woods DD. Fixation errors: failures to revise situation assessment in dynamic and risky systems. In: Colombo AG, Saiz de Bustamante A, eds. System Reliability Assessment. The Netherlands: Kluwer Academic, 1990:231–251.

- 23.Gaba DM, DeAnda A. The response of anesthesia trainees to simulated critical incidents. Anesth Analg. 1989;68:444–451.

- 24.Johnson PE, Moen JB, Thompson WB. Garden path errors in diagnostic reasoning. In: Bolec L, Coombs MJ, eds. Expert System Applications. New York: Springer-Verlag, 1988.

- 25.Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse effects and negligence in hospitalized patients. N Engl J Med. 1991;324:370–376.

- 26.Gawande A, Thoma E, Zinner M, et al. The incidence and nature of surgical adverse events in Colorado and Utah. Surgery. 1999;126:66–75.

- 27.Wilson RM, Runciman WB, Gibberd RW, et al. The Quality in Australian Health Care Study. Med J Aust. 1995;163:458–471.

- 28.Baker GR, Norton PG, Flintoft V, et al. The Canadian adverse events study: the incidence of adverse events among hospital patients in Canada. Can Med Assoc J. 2004;170:1678–1686.

- 29.Donchin Y, Gopher D, Olin M, et al. A look into the nature of human error in the intensive care unit. Crit Care Med. 1995;23:294–300.

- 30.Christian CK, Gustafson ML, Roth EM, et al. A prospective study of patient safety in the operating room. Surgery. 2006;139:159–173.

- 31.Flin R, Yule S, McKenzie L, et al. Attitudes to teamwork and safety in the operating theatres. Surgeon. 2006;4:145–151.

- 32.Shojania KG, Duncan BW, McDonald KM, et al. Making healthcare safer: a critical analysis of patient safety practices [Evidence Report/Technology Assessment No. 43; AHRQ Publication No. 01-E058]. Rockville, MD: Agency for Healthcare Research and Quality, 2001.

- 33.Leape LL. A systems analysis approach to medical error. J Eval Clin Prac. 1997;3:213–222.

- 34.Weinstein L. A multifaceted approach to improve patient safety, prevent medical errors and resolve the professional liability crisis. Am J Obstet Gynecol. 2006;194:1160–1165.

- 35.MacMillan DK. The quality management system as a tool for improving stakeholder confidence. Qual Assur. 2000;8:201–204.

- 36.Cuschieri A. Reducing errors in the operating room. Surg Endosc. 2005;19:1022–1027.

- 37.Neisser U. Cognition and Reality: Principles and Implications of Cognitive Psychology. San Francisco: Freeman, 1976.

- 38.Hollnagel E. Context, cognition, and control. In: Waern Y, ed. Co-operation in Process Management: Cognition and Information Technology. London: Taylor & Francis, 1998.

- 39.Ingram H, Desombre T. Teamwork in health care: lessons from the literature and from good practice around the world. J Manag Med. 1999;13:51–58.

- 40.Helmreich RL. The evolution of crew resource management training in commercial aviation. http://www.psy.utexas.edu/helmreich

- 41.Helmreich RL. On error management: lessons from aviation. BMJ. 2000;320:781–785.

- 42.Cuschieri A. Lest we forget the surgeon. Semin Laparosc Surg. 2003;10:141–148.

- 43.Tang B, Hanna GB, Joice P, et al. Identification and categorization of technical errors by Observational Clinical Human Reliability Assessment (OCHRA) during laparoscopic cholecystectomy. Arch Surg. 2004;139:1215–1220.

- 44.Du Boulay C. Revalidation for doctors in the United Kingdom: the end or the beginning? BMJ. 2000;320:1490.

- 45.Dauphinee WD. Revalidation of doctors in Canada. BMJ. 1999;319:1188–1190.

- 46.Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:742–752.

- 47.Barach P, Small D. Reporting and preventing medical mishaps: lessons from non-medical near miss reporting systems. BMJ. 2000;320:759–763.

- 48.Beckmann U, West LF, Groombridge GJ, et al. The Australian Incident Monitoring Study in Intensive care: AIMS-ICU. The development and evaluation of an incident reporting system in Intensive Care. Anaesth Intens Care. 1996;24:314–319.

- 49.Billings C. Incident Reporting Systems in Medicine and Experience With the Aviation Safety Reporting System. National Patient Safety Foundation, 1998.

- 50.Flanagan JC. The critical incident technique. Psychol Bull. 1954;51:327–358.

- 51.Gaba D. Anaesthesiology as a model for patient safety in health care. BMJ. 2000;320:785–788.

- 52.Maass G, Cortezzo M. Computerizing incident reporting at a community hospital. J Qual Improve. 2000;26:361–373.

- 53.Staender S. Critical Incidents Reporting System (CIRS): Critical Incidents in Anaesthesiology, Department of Anaesthesia, University of Basel, Switzerland, 2000 http://www.medana.unibas.ch/cirs/

- 54.Flanagan JC. The critical incident technique. Psychol Bull. 1954;51:327–358.

- 55.Khare RK, Uren B, Wears RL. Capturing more emergency department errors via an anonymous web-based reporting system. Qual Manag Health Care. 2005;14:91–94.

- 56.Johnson C. Architecture for incident reporting http://www.dcs.gla.ac.uk/∼johnson

- 57.Laing BA. A system of medical error disclosure. Qual Saf Health Care. 2002;11:64–68.

- 58.Schwappach DLB, Koeck CM. What makes error unacceptable? A factorial survey on the disclosure of medical errors. Int J Qual Health Care. 2004;16:317–326.