Abstract

Objective

Glioblastoma multiforme (GBM) is a grade IV brain tumour with very low life expectancy. Physicians and oncologists urgently require automated techniques in clinics for brain tumour segmentation (BTS) and survival prediction (SP) of GBM patients to perform precise surgery followed by chemotherapy treatment.

Methods

This study aims at examining the recent methodologies developed using automated learning and radiomics to automate the process of SP. Automated techniques use pre-operative raw magnetic resonance imaging (MRI) scans and clinical data related to GBM patients. All SP methods submitted for the multimodal brain tumour segmentation (BraTS) challenge are examined to extract the generic workflow for SP.

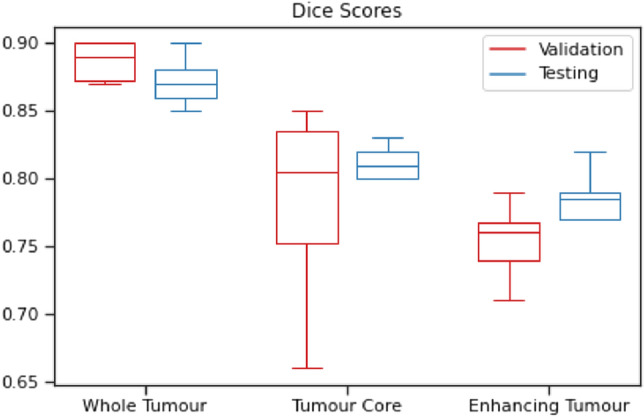

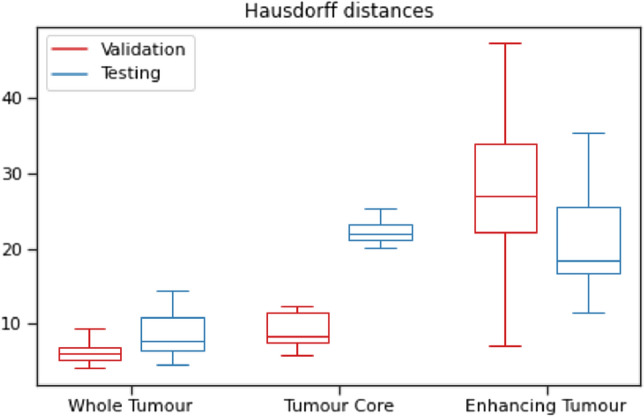

Results

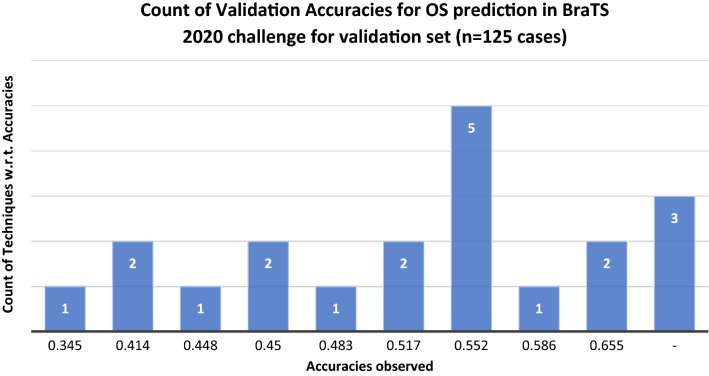

The maximum accuracies achieved by 21 state-of-the-art different SP techniques reviewed in this study are 65.5 and 61.7% using the validation and testing subsets of the BraTS dataset, respectively. The comparisons based on segmentation architectures, SP models, training parameters and hardware configurations have been made.

Conclusion

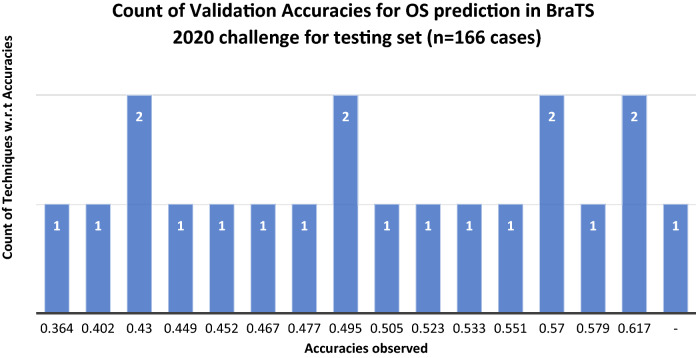

The limited accuracies achieved in the literature led us to review the various automated methodologies and evaluation metrics to find out the research gaps and other findings related to the survival prognosis of GBM patients so that these accuracies can be improved in future. Finally, the paper provides the most promising future research directions to improve the performance of automated SP techniques and increase their clinical relevance.

Keywords: Glioblastoma multiforme, 3D MRI scans, Brain tumour segmentation, Survival prediction, Deep learning, Machine learning, Radiomics

Introduction

Uncontrollable growth of abnormal cells in the brain is termed a brain tumour [1]. According to a study conducted in the United States, 23 people out of every 100,000 diagnosed yearly were found to have brain tumours related to the central nervous system (CNS) [2]. Based on aggressiveness and malignancy, tumours are categorised as benign (non-cancerous) or malignant (cancerous) in the medical field, as shown in Fig. 1. Primary brain tumours are those tumours that develop from the same brain tissue or nearby underlying tissues. Primary tumours can be either benign or malignant. Secondary or metastatic tumours are generally malignant tumours that originate elsewhere and rapidly expand towards brain tissues. There are about 120 types of brain tumours [3].

Fig. 1.

Categories of a brain tumour based on aggressiveness and origin location

Gliomas are the deadliest and aggressive malignant tumours that originate from the glial cells present in the brain. Over 60% of brain tumours found in adults are gliomas. Astrocytomas, ependymomas, GBM, medulloblastomas, and oligodendrogliomas are all various types of gliomas. The World Health Organization (WHO) has categorised gliomas into four grades based on the tumour's malignancy, aggressiveness, infiltration, recurrence, and other histology-based characteristics. Also, molecular gliomas, including the examination of isocitrate dehydrogenase (IDH) mutation status for diffuse astrocytomas and GBM, as well as 1p/19q co-deletion for oligodendroglioma, were included in the most recent WHO classification. Low-grade gliomas (LGGs) are classified as grade I or grade II gliomas, whereas high-grade gliomas (HGGs) are classified as grades III or grade IV gliomas. Their grade level defines the aggressive nature of gliomas. Gliomas of grade I are benign and slow-growing tumours. It is more likely that gliomas of grade II may regrow and expand over time. Grades III and IV, on the other hand, are extraordinarily lethal, and the survival rate is poor [4]. The survival duration of glioma patients is significantly dependent on the tumour grade [5]. The projected 5-year and 10-year relative survival rates for patients with malignant brain tumours are 35.0 and 29.3%, respectively [2]. HGG patients have shorter survival times than LGG patients. Based on the appearance of gliomas, the whole cancerous area is divided into subcomponents, such as necrosis (NCR), enhancing tumour (ET), non-enhancing tumour (NET), and peritumoural edema (ED). The tumour core (TC) of glioma is composed of NCR, ET, and NET. The Whole Tumour (WT) of glioma is composed of NCR, ET, NET and ED. It is important to note that LGG does not contain ET in most situations, but HGG does, as shown in Fig. 2.

Fig. 2.

Grades of glioma a raw LGG patient’s MRI, b annotated LGG patient’s MRI, c raw HGG patient’s MRI, d annotated patient’s MRI [6]

Regardless of histological type, most brain tumours produce cerebral ED, which is a significant cause of death and disability in patients. ED from a brain tumour develops when plasma-like fluid penetrates the brain's extracellular space through defective capillary connections in tumours [7]. Gliomas of high grade can expand quickly and infiltrate widely. GBM is a deadly kind of cancer that may develop in the spinal cord and brain. GBM may strike at any age, although it is more common in elderly adults. It may aggravate migraines, vomiting, nausea, and seizures. It may be significantly harder to cure, and recovery is frequently impossible to achieve. Therapies can halt the growth of cancer cells and relieve symptoms. It varies significantly in terms of position, size, and shape [8].

Despite advances in medical treatments related to brain tumours, the median survival duration of GBM patients remains 12–16 months [2]. The complete resection of the cancerous brain region followed by chemoradiation therapy is the gold standard to cure brain tumours [9, 10]. The location of glioma and its growth rate determine its impact on the neurological system. Gliomas have varying prognoses based on their anatomic location, histology, and molecular features [11]. It has posed a severe threat to people's lives; hence, early identification and treatment are essential [12]. The research related to SP of GBM patients is crucial for their treatment planning. Unfortunately, the clinical data required for the SP model are minimal; therefore, the best methodologies are required to handle the available data. Numerous factors, such as tumour histology, symptoms, tumour position, patient's age and molecular features, are used by oncologists to determine a patient's prognosis and an appropriate brain tumour treatment approach [13]. Patients are routinely followed up with post-operative MRI scans due to their poor overall survival (OS). MRI-based biomarkers can allow doctors to detect tumour growth patterns during its progression. Researchers believe that looking at the imaging characteristics of pre-operative MRI scans associated with a patient’s survival is an excellent starting point towards finding such biomarkers [6].

Motivation and key objectives

There are tremendous opportunities and challenges in OS prediction due to the high availability of complex and high-dimensional data. So, a need arises to study the literature of SP based on pre-operative MRI scans and clinical data. This work aims to provide an idea about the latest methodologies used to predict the survival time of GBM patients. The focus is only on the BraTS 2020 dataset because the maximum number of patients fall in this version. The techniques used in earlier BraTS challenges have already been reviewed in some studies. Thus, this paper focuses on reviewing only the techniques based on the 2020 version of the BraTS dataset [14].

The key objectives of this work are as follows:

To study the challenges faced in performing automated OS prediction using pre-operative MRI scans and clinical data.

To provide a generic workflow of OS prediction techniques used for GBM patients only using the BraTS dataset.

To understand the evaluation metrics used in comparing the performance of automated techniques designed specifically for OS prediction.

To provide meaningful information to the readers and young researchers about the BTS and OS prediction using pre-operative MRI scans.

The paper has been divided into eight sections. Section 1, as above, introduces us to the various issues of the problem under study. Section 2 describes the need for automated MRI analysis to predict OS of GBM patients, followed by the challenges faced in automating MRI analysis. Section 3 delves into the specifics of the BraTS 2020 challenge's BTS and SP dilemmas, followed by details of the dataset. Section 4 examines the generic workflow, covering all the details required to understand the recent BTS and SP multiproblem solutions, followed by tabulated comparisons. Section 5 explains the assessment metrics used in comparing the performance of submitted solutions. The limitations of existing techniques used for SP are highlighted in Sect. 6. Section 7 concludes the paper with a discussion, while Sect. 8 provides the future research directions.

Magnetic resonance image analysis for brain tumour treatment planning

Due to its non-invasiveness and superior soft-tissue resolution, structural MRI is often used in brain tumour research. However, a single structural MRI is inadequate to segregate all tumour sub-regions due to imaging artefacts and challenges associated with diverse tumour sub-regions. Multimodal MRI (mMRI) provides additional information about various sub-regions of gliomas. T1-weighted MRI (T1), T2-weighted MRI (T2), T1-weighted MRI with contrast enhancement (T1ce), and T2-weighted MRI with fluid-attenuated inversion recovery (T2 FLAIR) are all examples of mMRI scans as shown in Fig. 3 [15]. The TC defines a majority of the tumour, which is generally removed. Areas of T1ce hyperintensity define the ET compared to T1 and healthy White Matter (WM) areas in T1ce. The appearance of the NCR and NET in T1ce is typically less intense than in T1 because it encompasses the TC and the ED. The WT shows the whole scope of the cancerous brain region, which is typically represented by a hyper-intense FLAIR signal [6].

Fig. 3.

Different multimodal MRI scans a FLAIR b T1 c T1ce d T2 [6]

The first phase toward treatment is delineating the tumour and its components, also known as tumour area segmentation simultaneously on several modalities [16]. This stage is carried out by radiologists in a clinical setting manually, which becomes hectic with the rising number of patients. The accuracy of tumour structure segmentation is critical for treatment planning. Due to the scattered tumour borders and the partial volume distortion in MRI, it may be difficult to separate the discrete regions of the tumour using different imaging methods. A vast quantity of three-dimensional (3D) data make manual segmentation time-consuming and subject to inter-rater and intra-rater variances.

Additionally, human perception of the imagery is non-replicable and highly dependent on experience. As a result, segmentation problems must be performed using automated computer-aided approaches to decrease the radiologists' workload and improve overall accuracy [17]. With the increasing incidence of gliomas in the population and the development of MRI in clinical analysis, pre-operative MRI-based SP may provide necessary assistance to clinical treatment planning. Early diagnosis of a brain tumour can also increase the chances of a patient's survival. BTS is essential for brain tumour prognosis, clinical judgement, and follow-up assessment. Automated image-based analysis can be used to determine molecular markers and prognosis without requiring an intrusive biopsy [18].

Need for automated techniques

In order to overcome the problem of manual tumour annotations performed by radiologists, computer-aided glioma segmentation is very much needed [19]. For the experts, the accuracy of categorical estimates for SP varies from 23 to 78% [20]. At the same time, there are specific difficulties, such as variability in image capturing mechanism and the lack of a robust prognostic model. Sub-regions with biological characteristics coexist within the tumour, such as NCR, NET, ET, and ED, which mMRI scans can highlight. It is still difficult to separate tumour sub-regions since such regions have a wide range of shapes and appearances. Doctors may diagnose and treat patients more accurately by automatically segmenting the tumour sub-regions based on pre-operative MRI scans. Automation can decrease the requirement for a physician with extensive training and expertise and most probably the time required for MRI scan analysis [21].

Challenges in magnetic resonance image analysis

The computer-assisted analysis makes it possible for a human specialist to detect the tumour within a shorter period and preserve the results. Computerized analysis demands sufficient data and suitable working procedures. Radiofrequency emissions produced by thermal mobility of the ions in the patient's body and the coils and electronic circuits in the MRI scanner are responsible for the poor signal-to-noise ratio (SNR) and anomalies in raw MRI images. Image contrasts are reduced due to random fluctuation due to signal-dependent data biases. MRI non-uniformity refers to a non-significant variation in the intensity of the MRI signals. If the sample is not uniform, it may be due to radiofrequency coils or the acquisition pulse sequencing. MRI equipment collects unwanted information like skull, fat, or skin during brain scanning. Due to the diversity of MRI machine set-ups, the intensity profile of MRI scans may vary [14]. There are extremely few publicly available scans of brain tumours suitable for computer-aided analysis. The gathering of MRI scans from multiple institutions raises concerns about privacy and confidentiality. Another significant difficulty in medical image analysis is class imbalance. It may be challenging to generate imagery for an abnormal category since abnormal instances are rarer than regular ones [6].

Review methodology

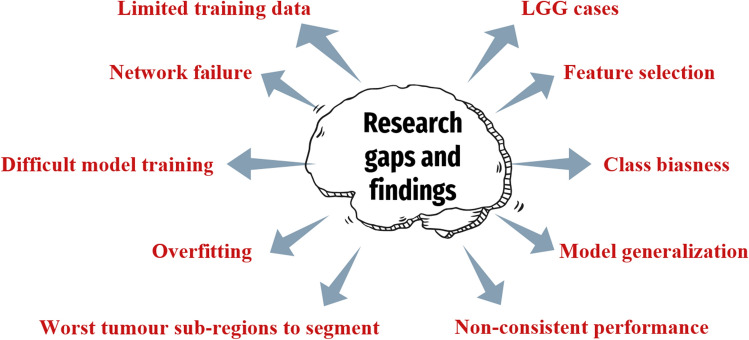

The literature review is focused mainly on studying the latest techniques proposed in the year 2020–2021 using the 2020 version of the BraTS dataset. The dataset is available at https://www.med.upenn.edu/cbica/brats2020/data.html. The criteria employed for inclusion or exlcusion of the research articles are described in Fig. 4. The Google scholar was used to find the required articles for this study. Initially, 1290 papers were obtained by conducting the search with keywords “brats”, “brain tumor”, “segmentation”, “survival” and “prediction”. Then, 163 papers were selected on the basis of 2020 version of the BraTS dataset. Further, 69 papers were selected on the basis of the abstract of the manuscripts. Lastly, 21 suitable papers finally met the inclusion criteria for this study.

Fig. 4.

Search criteria for selection of articles for the current study

The use of 3D MRI scans to segment gliomas aids in diagnosing and treating glioma patients. The BraTS challenge has been held yearly since 2012 as part of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) to assess current segmentation methods for pre-operative MRI-based BTS. The challenge makes medical data of GBM patients freely accessible for academic research purposes. This dataset contains a vast collection of pre-operative mMRI scans and clinical data with corresponding expert-derived ground-truth annotations across four MRI modalities [6, 22–25]. A considerable amount of research in BTS has been performed using the BraTS dataset [26–28]. The data are gathered from various sources, organisations, and scanners, including both LGGs and HGGs. The dataset contains training, validation, and testing data. For training data, segmentation ground-truth and patient’s survival information are provided. Validation and testing data, on the other hand, are devoid of segmentation indicators. Researchers can submit their results to the Centre for Biomedical Image Computing and Analytics (CBICA) Online Image Processing Portal to evaluate validation and test data performance.

The BraTS challenge 2020 consists of three tasks: segmentation of glioma brain tumour sub-regions, prediction of OS, and algorithmic uncertainty in tumour segmentation. The objective of the BTS task is to define the glioma and its internal components, including the WT, ET and TC. The SP task predicts the patient's survival time using the characteristics derived from MR imaging and clinical data. In recent years, the BraTS challenge has encouraged researchers to submit automated BTS and SP methods for evaluation and discussion using a publicly accessible mMRI dataset [6]. In the computer-aided diagnosis of gliomas, accurate BTS and SP are two crucial yet difficult objectives. Traditionally, these two activities were carried out independently, with little consideration given to their relationship. In order to extract robust quantitative imaging characteristics from a glioma image, it is necessary to accurately segment the three main sub-regions of the tumour image, viz. ET, TC and WT. Researchers think that these operations should be carried out in a cohesive framework to maximise the benefit.

Dataset

The objective of the BraTS 2020 challenge was to evaluate novel BTS and SP algorithms using pre-operative mMRI scans and clinical data. Various clinical techniques and scanners were used to collect data from a range of institutions [6, 22, 23]. In each patient's MRI scans, T1, T2, T1ce, and FLAIR (nifti files) are co-registered to the anatomical template of the T1 modality of the same patient. All patients in the training set are labelled (seg nifti file) for the three tumour tissues, i.e., ET, ED, and NCR/NET. All patient scans are skull-stripped and interpolated to isotropic resolution. One to four evaluators segmented the images using the same labelling technique to prepare the ground-truth annotations. The annotations were validated by experienced neuroradiologists [24, 25]. The details are summarized in Table 1.

Table 1.

BraTS 2020 data for brain tumour segmentation

| Data | Training | Validation | Testing | MRI voxel spacing | MRI dimensions |

|---|---|---|---|---|---|

| BraTS pre-operative MRI scans | 369 (293 HGG, 76 LGG) | 125 | 166 |

There were 236 patient data fields for patient survival statistics, including ID, age, OS (in days), and surgery status. GTR surgery was performed on 119 of the 236 data points, while the remainder underwent Subtotal Resection (STR) or Not Applicable (NA) operation. Out of 119 data with GTR status, one patient is alive; therefore, the datum used for survival training is 118. Based on this decreased number of observations, researchers created models to predict patient’s OS. The probability of survival days is divided into three categories: short term, mid-term, and long term. However, only patients with resection status GTR are considered for evaluation. The performance of proposed models can be assessed using validation and testing data. The details are highlighted in Table 2.

Table 2.

BraTS 2020 data for overall survival prediction

| Data | Training | Validation | Testing |

|---|---|---|---|

| Clinical data (.csv data) | 236 (119 GTR, 89 short, 60 mid and 87 long term survivors) | 29 | 107 |

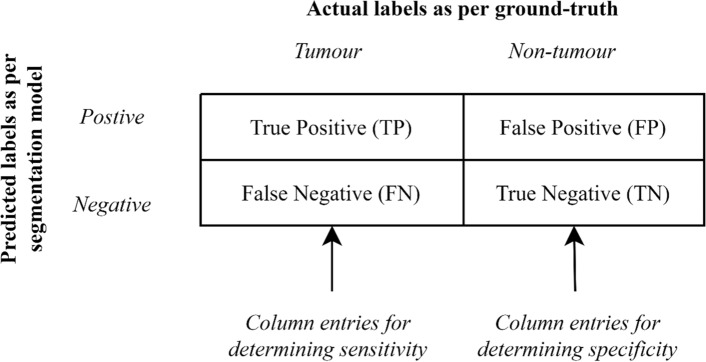

Brain tumour segmentation

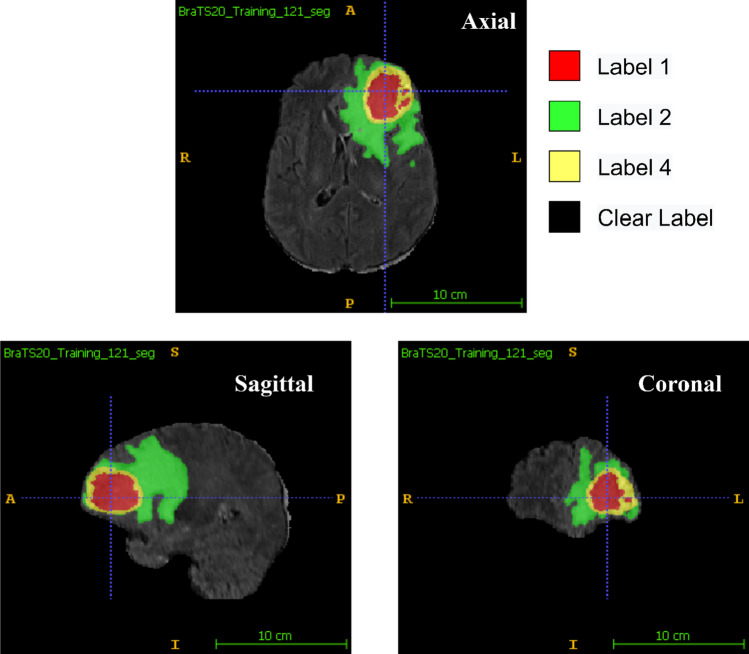

Researchers solve the BTS task using clinically obtained training data by creating an algorithm and generating segmentation labels of the different glioma sub-regions. ET, TC, and WT are the three sub-regions that are being examined in BraTS 2020 BTS. As illustrated in Fig. 5, the provided segmentation labels have values of 1 for NCR and NET (red colour), 2 for ED (green colour), 4 for ET (yellow colour), and 0 for background (black colour). Researchers submit their segmentation labels for assessment as a single multi-label file in nifti (.nii.gz) format to CBICA Image Processing Portal.

Fig. 5.

MRI scans with segmentation labels in multiple views [6]

Survival prediction

The segmentation labels generated after performing BTS can be used to extract imaging/radiomic properties which can be used further to train machine learning (ML) and deep learning (DL) models to forecast the OS of GBM patients. It is not essential to limit the parameters to just volumetric features only. Researchers can even use intensity, histological, textural, spatial, glioma diffusion attributes and histogram-based features along with clinical features like patient’s age and resection status. The SP task requires a prediction of OS for patients with gross total resection (GTR). The prediction should be the number of days, and validation of the algorithm is dependent on the accuracy of categorization into three categories, viz. long survivors (greater than 15 months), short survivors (less than 10 months), and mid-survivors (within 10 and 15 months). The prediction results obtained by researchers are submitted as a Comma Separated Value (CSV) file, including the subject ids to CBICA's Image Processing Portal.

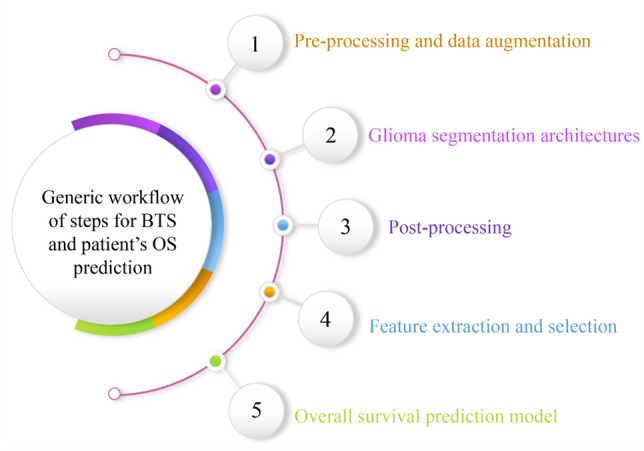

Generic workflow for brain tumour segmentation and survival prediction

Various end-to-end approaches for BTS and SP have been proposed in the literature. In some manner, all of these approaches assert their supremacy and usefulness above the others. In order to motivate researchers to present their automated BTS and OS prediction models, the BraTS challenge is organised yearly. It has been observed that the automated approaches accomplish the objectives of BTS and SP by following a similar set of steps. This section explains the generic workflow followed to predict the OS of patients, as shown in Fig. 6. SP models use pre-operative MRI scans and.csv data, including patient survival information.

Fig. 6.

The generic workflow for brain tumour segmentation and overall survival prediction

Pre-processing and data augmentation

Deep convolutional neural networks (DCNNs) are data operating algorithms. These algorithms require vast data in order to come up with accurate conclusions. Pre-processing and data augmentation are essential since such big datasets are seldom available. In addition to standardising input data, pre-processing techniques can also enhance critical data inside the original input scan, such as cropping the area of interest when it includes redundant or misleading information.

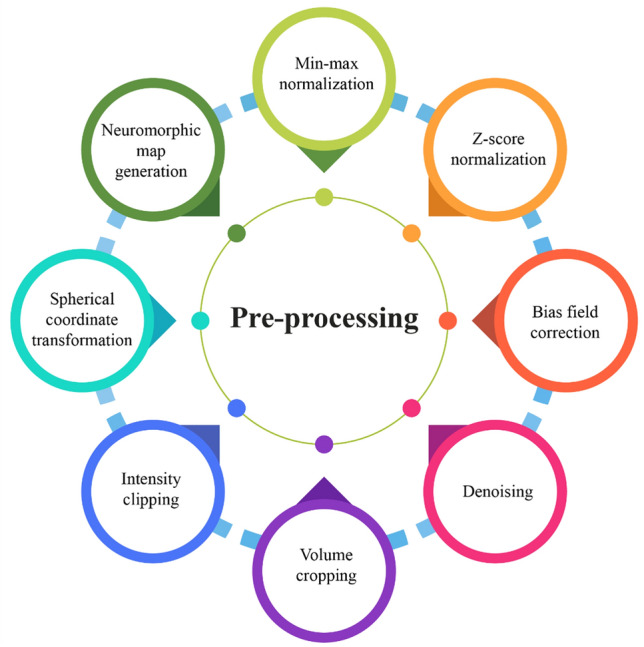

Pre-processing

The BraTS 2020 challenge organisers provided pre-operative MRI scans and ground-truth annotations for the training set. To minimise variability in patient scans induced by imaging procedures, the coordinators supplied data that had been co-registered to the T1 anatomical template of individual patients, normalised to 1 mm isotropic resolution, then skull-stripped. Pre-processing improves network performance and training. As shown in Fig. 7, the authors [29–46] employed the following pre-processing methods to account for intensity inhomogeneity throughout the dataset.

Min–max normalization

Fig. 7.

Types of pre-processing used in BraTS 2020 SP techniques

One of the most frequent methods for normalising data is min–max normalisation. ‘0’ and ‘1’ are the minimum and maximum values for each characteristic, respectively, and all other values are between 0 and 1.

value (image) = only the volume's brain area.

Although min–max normalisation ensures that all features have the same scale, it does not handle outliers well. Before feeding the MRIs as input for model training, González et al. [29], Parmar et al. [30], and Carmo et al. [31] normalised each MRI sequence in the range .

-

2.

z-score normalization

Researchers use z score intensity normalisation to minimise intensity variation within MRIs of different patients and variations among multiple modalities of the same patient. z score normalisation is a data normalisation method that eliminates the outlier problem. According to the formula given below, z score normalisation ensures intensity with a zero mean (mean) and a unit standard deviation (std) for each volume (considering only non-zero voxels):

value (image) = only the volume's brain area.

If the parameter ‘value’ is the same as the mean of all feature values, it is normalised to ‘0’; if it is less than the mean, it is a negative number; and if it is greater than the mean, it is a positive number. The original feature's standard deviation decides the magnitude of the negative and positive values. If the standard deviation of abnormal data is big, the normalised values will be closer to ‘0’. It deals with outliers but does not generate normalised data on the same scale. Researchers used z score normalization to set each MRI volume's mean and unit standard deviation before these volumes were fed into training models [29–42].

-

3.

Bias field correction

In MRI scans, low-frequency bias field signals are very smooth that cause MRI corruption, especially when the MRI scanners are obsolete. There are differences in the magnetic field strength of MRI scans captured with different procedures and scanners across various institutions. Segmentation architectures, textural analysis, and classification techniques that rely on the grey-level values of image pixels will not provide satisfactory results. It is necessary to correct the bias field signals before submitting raw MRI data to automated algorithms. Agravat et al. [33], Patel et al. [35], and Soltaninejad et al. [40] used N4ITK Insight Toolkit [44] to reduce bias field in all available structural MRIs.

-

4.

Denoising

MRI scans are often corrupted by Gaussian noise produced by the random thermal motion of electrical components and decreases image quality and reliability. Noise reduction can be achieved using a variety of noise filtering methods in order to improve image analysis. Agravat et al. [33] used denoising as a pre-processing step before training the segmentation architecture.

-

5.

Volume cropping

Patel et al. [35] firmly cropped all MRI scans to eliminate empty voxels outside the brain area. The MRI data used for training and generating inference have been pre-truncated to a size of from the original image's centre point MRI volumes were similarly cropped by Akbar et al. [42] and González et al. [29] from the central point.

-

6.

Intensity clipping

Pang et al. [43] reduced the amount of background region by using intensity trimming. Carmo et al. [31] also clipped MRI sequences within the interval

-

7.

Spherical coordinate transformation

Russo et al. [45] proposed spherical coordinate transformation as a pre-processing stage to improve segmentation results. Pre-training weights for future training stages were achievable due to the utilisation of spherical transformation in the first cascade pass. Extreme augmentation was also added via the spherical coordinate transformation. It was a practical step since it provides invariance for rotation and scaling. However, such invariance has disadvantages, mainly when dealing with WT segmentation; it introduces many false-positive areas. As a result, the authors employed a Cartesian model to filter away false-positive areas discovered via spherical pre-processing.

-

8.

Neuromorphic map generation

Han et al. [46] used a neuromorphic inspired pre-processing technique for tumour segmentation. It followed the concept of attention-based learner combined with Convolutional Neural Network (CNN) to generate saliency maps which aided in better image analysis. This pre-processing technique mimicked the brain's visual cortex and enabled attention-based learning as a pre-determined method for predicting target object regions. During the BraTS 2019 challenge, the neuromorphic attention module demonstrated the effectiveness of training 3D objects with a 2D UNet constraint. The previous 2D UNet with neuromorphic attention module used three channels of incoming image data instead of the initial four channels [47]. Based on the generated saliency maps, this study analysed four-channel MRI data as three-channel input scans. The goal was to include neuromorphic characteristics into the input MRI data.

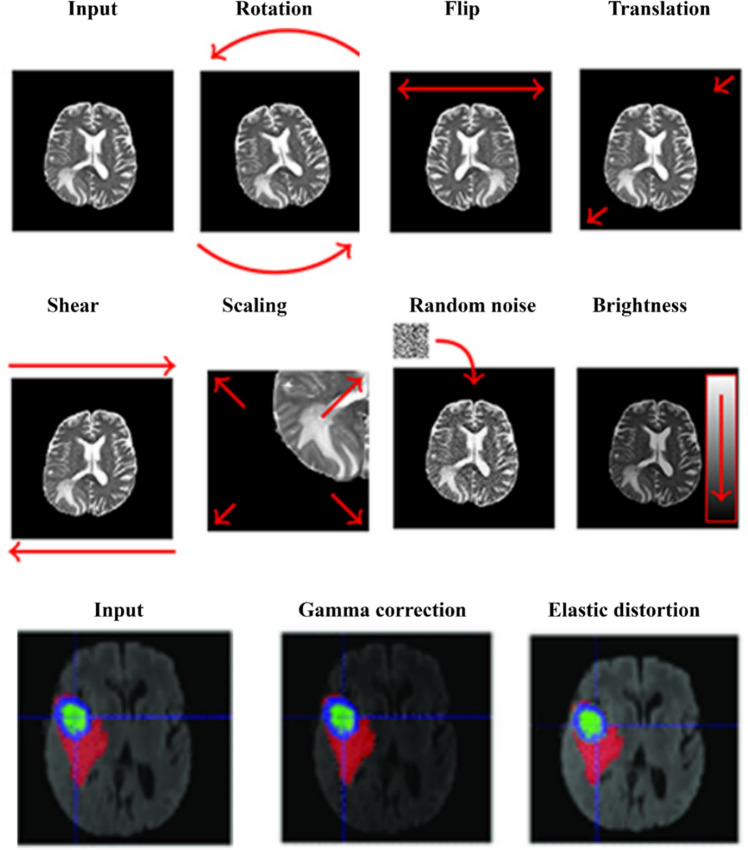

Data augmentation

Data augmentation, also known as artificial data generation, is a common approach used for improving the generalisation capabilities of Deep Neural Networks (DNNs). Data of good quality are hard to collect, and acquiring new examples is also very time-consuming and expensive. Medical image analysis, especially tumour delineation, is affected by this problem [48]. Several data augmentation techniques were used by researchers to increase the number of training samples which included random axis mirror flips [29, 30, 33–38, 49], intensity scaling and intensity shifting [31, 34, 37], isotropic scaling, per-channel gamma corrections [35], rotations [35–37, 43, 49], random scaling [38], elastic transformations, and zoom-in and zoom-out [49]. There are many ways of augmenting MRI scans, as illustrated in Fig. 8.

Fig. 8.

Glioma segmentation architectures

ML approaches, particularly DL architectures, have been applied recently in the medical domain to improve diagnostic and treatment procedures by building automated systems to categorise patients, regression-based predictions, and semantic segmentation applications [51]. However, due to data availability constraints, automation in medical applications is a big challenge. An unbalanced dataset is a common problem in medical imaging. For example, the cancer area is small relative to a healthy brain and MRI scan background. Creating a good DL structure, in general, is challenging, but it is now essential to automatically process data of the vast number of patients. Significantly, a deep structure can generate a wide range of features without the researchers' input. ML models are suitable for tiny datasets, but researchers need significant characteristics to train such models. ML/DL limitations have been addressed in many ways. Standard techniques for overcoming the imbalanced data problem in segmentation problems include the Weighted cross-entropy loss (WCEL) function, the generalized dice loss (GDL) function, and balanced patch selection [52]. Pre-processing techniques, such as volume cropping, data denoising and augmentation, have also led to improving the performance.

The accuracy of automated diagnostic systems has been improved by using several different models [53–57]. 3D MRI with DNNs is now widely used to diagnose brain tumours because of their valuable and precise performance. Artificial neural networks (ANNs), such as fully convolutional neural networks (FCNNs) and their ensembles, contribute to the medical field by automatically segmenting the tumour's sub-regions. Accurate BTS algorithms are needed more when the machine observes volumetric MRI scans in three dimensions rather than the actual two-dimensional (2D) perspective of a human interpretation. Even though mMRI provides complete information, it is still difficult to differentiate all sub-regions due to false image features. DL-based approaches often outperform standard ML methods for image semantic segmentation [23].

In their work [58], the authors covered the fundamental, generative, and discriminative approaches for BTS. DNN has recently gained popularity for the segmentation of radiological scans. DeepMedic [27], UNet [59], V-Net [60], SegNet [61], ResNet [62], and DenseNet [63] are the examples of CNN that generate semantic segmentation maps. UNet is generally acknowledged as a standard backbone architecture to perform semantic segmentation in medical imaging. UNet is an encoder–decoder architecture that reduces feature maps to half on the encoder path and doubles feature maps on the decoder path. The skip connections between UNet's parallel stages aid in feature reconstruction.

Due to the volumetric structure of MRIs, organs are often scanned like 3D entities and subsequently segmented using 3D CNN-based architectures. Myronenko et al. [56] (BraTS 2018 winner) proposed a variational autoencoder-based 3D encoder–decoder architecture for BTS. Isenee et al. [64] (second in BraTS 2018 challenge) utilised a modified version of the basic UNet [59] using diligent training and data augmentation throughout the model training and testing phase. The performance improvement was observed when Leaky Rectifier Linear Unit (ReLU) was utilised instead of the ReLU activation function. The BraTS 2019 winner, Jiang et al. [55], employed a two-stage cascaded UNet architecture to segment brain tumours. Different tricks for 3D BTS, such as sampling of patient’s input data, random patch size-based training, semi-supervised learning, architecture depth, learning warm-up, ensembling, and multiproblem learning, were used.

Model training and hyperparameter tuning are essential issues related to the high resource consumption by these models. 2D Neural Networks (NNs) have fewer parameters than 3D models and can be trained quickly, which allows for better hyperparameter tuning. Due to the absence of depth information, the 2D UNet performs poorly in 3D segmentation. As a result of class imbalance and increasing computing expenses, 3D UNet-based models often face many difficulties. Many recent studies have utilised ensemble models that average the output probabilities of separate models to enhance generalisation power and segmentation performance by providing uncertainty estimate for each voxel in the vision [55, 56, 64–67]. Due to different weights and hyperparameters used for model optimisation, individually trained models vary in one way or the other. As a result, ensembles have certain disadvantages like training time, memory constraints, and increased model complexity. New versions of the UNet were developed by Isensee et al. [54, 64] to show that a well-trained UNet can outperform complex ensemble approaches. Many later-year submissions that attempted to utilise the modified 3D UNets or ensembles of 3D UNets [55, 56, 68] seem to be inspired by Isensee's work. The glioma segmentation architectures used in BraTS 2020 SP task to segment tumour sub-regions are classified in Fig. 9.

Fig. 9.

Different types of networks used for glioma segmentation

Single networks

Using a three-layer deep 3D UNet encoder–decoder framework, Agravat et al. [33] presented a tumour segmentation architecture with deep connections to increase network depth. It enabled the gradient to flow straight to the previous layers, allowing the classification layer to watch the preceding levels closely. The layers' extensive connections further enhanced the difficulty in identifying patterns. An unbalanced dataset caused problems with BTS with a higher proportion of non-tumourous slices than tumourous slices, which reduced network accuracy. The 3D patch-based input ensured that the network did not overlearn the background voxels. The network was trained using a mix of dice loss and focal loss functions to enhance its performance to address this class imbalance. A smaller subcomponent size (NCR and ET) contributed to the failure of the network, especially in LGG cases.

Using an accumulated encoder (AE), Chato et al. [69] developed a modified 3D UNet consisting of three layers of encoder/decoder modules with concatenation links. AE included ReLU, accumulated block (AB), and max-pooling. To enhance the quality of low-level features, the AB developed two feature maps depending on element-wise addition. After a last max-pooling layer, two branches of convolutions generated these feature maps. After clipping the training patch, channel normalization (CN) [70] was applied to prevent overfitting and solve the class imbalance problem. In order to trigger the training procedure with network convergence and performance improvement, the mini-batch method was employed. After two convolutional layers, each encoder unit had batch normalization (BN) [71], a ReLU, and a max-pooling layer.

Anand et al. [32] suggested 3D FCNN with encoding and decoding pathways. Dense blocks and Transition Down blocks were used in the encoding route. A limited number of output feature maps were chosen for each convolutional layer to avoid the parameter explosion. The spatial dimension of the feature maps was decreased using the transition down blocks in the network. Dense blocks and Transition Up blocks make up the network's decoding or up-sampling route. The reversed convolution layers were used in the Transition Up blocks to move sample feature maps up in the hierarchy. The features from the encoding portion were combined with the upsampled features as input for the dense blocks in the decoding phase. With complicated cases, the suggested network did not perform well. The trained network was fine-tuned, and hard mining was conducted on tough instances [72]. With the help of DSC, a threshold-based selection of complicated instances was made.

Parmar et al. [30] showed that an increase in batch size might lead to smaller patches with less contextual information. Contrarily, a bigger patch size may provide more contextual information, resulting in smaller batch size, raising the stochastic gradient variance, and reducing the amount of optimization achieved. Batch pools with varying patch sizes were created. The model could learn global information from the most prominent patch and relevant texture from the minor patch with the same parameters by employing various cropping and padding stages between the convolution layers. Patching would also enable a less powerful Graphical Processing Unit (GPU) to handle the big image. The down block of the UNet framework [54] lowered patch size and increased channel length. Overfitting was eliminated by using the down block, which transmitted data from front to end. The position information was reconstructed using the down block outputs, which were then combined in the up block. Based on the probabilistic matrix, the patch size and number of channels were determined. Setting appropriate threshold values for each class helped to accomplish this goal.

Glioma segmentation was solved by Pang et al. [43] by using a 2D encoder-decoder arrangement. The encoder portion utilised five Feature Mining Units (FMUs) and four downsample modules to encode the input data. With the use of this structure, the input data may be cleaned of the noise while retaining the crucial features that made segmentation possible. Each of the convolution kernels utilised in the FMU interacted via skip connections; the characteristic channel information collected from these processes was spliced together [73–75]. All convolution operations were combined with BN operations [71] and ReLU activation functions. The decoder's FMU had the same structure as the encoder's. At the same time, the output datum of each FMU in the decoder was up-sampled to the original size of the image to maintain fundamental patterns. As a result, incorrect information was eliminated, and only appropriate data persisted [76, 77].

Suter et al. [78] used the usual ground-truth labelling for cerebrospinal fluid (CSF), WM and grey matter (GM) acquired using FSL software [79] to train a nnUNet-framework [80] for locating healthy areas. All images were subjected to an MRI modality-specific piece-wise linear intensity transformation based on these healthy tissue labelling to complement the healthy tissue intensity and map to a set intensity range for consistent grey-value binning. Only the WM and GM labelling were utilised, and CSF segmentation was frequently compromised by insufficient skull-stripping. The N4 method [44] was used to correct the bias field in all of the images.

To extract the local characteristics of the tumour, Zhao et al. [38] developed a segmentation then prediction (STP) architecture trained on patches. A global branch retrieved the WT's global characteristics to forecast survival days. An encoder and a decoder were used in the segmentation module. Eight convolutional blocks and three downsampling layers comprised the encoder part. There was a downsampling layer between every two convolutional blocks. Because of the limited GPU memory, the group normalization (GN) [81] was substituted with the IN. An output layer followed three pairs of upsampling stages and convolutional blocks in the segmentation decoder. Convolutions were used in an upsampling layer, followed by trilinear interpolation and skip connections between the encoder-decoder paths. The number of channels in each convolutional block was decreased to half of the channels in the preceding block. The output layer included convolutional kernels and three channels to generate the ET, WT, and TC segmentation masks.

Patel et al. [35] hypothesised that networks using conventional convolutions were less capable of producing correct segmentation labels for glioma sub-regions. As a result, the authors suggested inserting modified selective kernel (SK) blocks [82] into the recommended UNet. An attention mechanism allowed the network to automatically change its receptive field to accommodate spatial information collected at various scales. Due to the limitations of GPU memory, the suggested framework learned both anatomical context and complex representations. Downsampling and upsampling of feature maps were achieved via max-pooling with trilinear interpolation, which further helped in decreasing the network's memory footprint. Deep supervision [67, 83] was used to promote quicker network convergence. The proposed SK blocks may be broken down into three operations: (1) split, (2) channel selection, and (3) spatial attention. A channel attention technique was used to enable the network to alter the receptive field of the SK block adaptively. A primary spatial attention mechanism, comparable to the convolutional block attention module [84], was used to make the network concentrate more on prominent image regions. The channel datum was aggregated using the max and average pooling methods across the channel dimension.

Dai et al. [36] used the UNet attention framework (UNet-AT) for BTS, which made use of attention-gated blocks' capacity to focus more on semantic information to improve segmentation performance. Further, there was no need to increase effort for fine-tuning the model parameters and pre-process the data. IN was selected at random for the UNet-AT. The activation function is Leaky-ReLU with a leakiness of 0.01.

McKinley et al. [37] used DeepSCAN, a 3D-to-2D FCNN that performed well in the 2019 BraTS challenge [66] and was trained using uncertainty-aware loss. The instances were classified based on confidently segmented core or a poorly segmented or missing core. It was assumed that every tumour had a core. Thus, the criterion for classifying core tissue was lowered when the core, as delineated by the classifier, was poorly defined or absent. The network started with 3D convolutions to compress a non-isotropic 3D patch to 2D. In the bottleneck, a shallow encoder/decoder system utilised densely linked dilated convolutions. This architecture was extremely similar to the authors' BraTS 2018 proposal. The main changes were that the authors utilised IN rather than BN and included a basic local attention block across dilated dense blocks.

Cascaded networks

Using the DeepLabv3+ [85, 86] model, Miron et al. [34] developed a version of a two-stage cascaded asymmetric UNet in which decoders kept track of the segmentation region contour to add more regularisation. Large dimensions were used to protect global information. Due to memory constraints, a two-branch decoder with interpolation and deconvolution was employed on the first level of the cascaded model. Model inputs were initial volumes concatenated along with segmentation maps produced from the first step. Two different convolutions were applied to the segmentation and outline of the segmentation region as a regularisation step. Shorter decoders were able to retain more information throughout the upsampling process. When needed, residual additive connectors were added. In order to extract features at different resolutions, the encoder was changed by adding atrous convolutions having varying dilation rates. A Spatial Pooling unit was introduced in the encoder to make use of the dilated convolutions. After combining the five feature maps, the decoder received them as a single data stream. There were two output tuples at this point: one acquired via upsampling and the other through transposed convolutions.

In contrast to conventional Cartesian space imagery and volumes, Russo et al. [45] presented a new technique for feeding DCNN with spherical dimension converted input data to improve feature learning. The DCNN used as a baseline for the proposed approach was developed from the work of Myronenko [56], built on a variational auto-encoder (VAE) UNet with modified input shape and loss function depending on the kind of transformation employed in the pre-processing phase. The VAE consisted of a UNet comprising two decoder divisions: a segmentation decoding branch used to get the ultimate segmentation and an extra decoder used to regularise the shared encoder. For COVID-19 severity evaluation and progression prediction [87], the lesion encoder (LE) architecture was suggested. The original LE used the UNet structure [59], which included an encoder and decoder inspired by the EfficientNet [88]. The decoder translated the lesion feature maps to the input MRI size and produced the segmentation maps while the encoder learnt and recorded the lesion features in the input images.

Tumour- EfficientDet (T-EDet), a variation of EfficientNet [88], was suggested by Carmo et al. [31]. Using a pre-trained EfficientNet [88] with D4 variant, the calculations of backbone weights and features were the starting point for EfficientDet. Because the initial network was developed with a 3-channel input, a significant issue emerged with varying input channels. The 4-channel (4 modalities) input was converted into a 3-channel input using an adaptation convolution. The Bi-directional Feature Pyramid Network (BiFPN) altered the characteristics obtained from the pre-trained EfficientNet to get its initial features. A transposed convolution accompanied by BN and the same swish activation [89] was employed to adapt this representation to the size of the input MRI data. This new representation was given to the segmentation architecture. It comprised three blocks of depth-wise convolutions [90], batch norm, and swish, coupled with a final convolution to reduce the number of classes. In isolation and then as the backbone of the planned multiproblem experiment, the UNet 3D architecture based on the work of Carmo et al. [91] was utilised. In terms of training technique, several hyperparameters comparable to Isensee's work [54] were used. The primary experiment included the end-to-end refining of segmentations generated by a solid pre-trained UNet 3D backbone model, as well as the inclusion of an attention-based SP branch.

Self-ensemble network

Pei et al. [39] presented a technique based on 3D reUNet DL. The reUNet was made up of a standard residual ResUNet and a self-ensemble model. The problem of gradient vanishing stopped with the use of the self-ensemble model and the ResUNet model. For OS prediction, the tumour segmentation probabilities derived from 3D Self-ensemble ResUNet (srUNet) were utilised. Even though DL-based techniques performed well in semantic segmentation, a large number of training samples were needed.

Parallel network

Soltaninejad et al. [40] presented a segmentation method that consisted of two major branches with varying degrees of resolution. Based on the technique described in Newell et al. [92], the native resolution branch was an encoder-decoder architecture with residual blocks. In the last layer, the activation function was changed with a sigmoid function. The native branch dealt with better resolution small-sized MRI patches. For segmentation, another branch with a broader view but a poorer resolution was added. In order to match features from the larger receptive field with the native branch, these features were trimmed. As a final step, the characteristics from both branches were combined and presented to the final layer for segmentation. In order to create the delineation heatmaps from the features, two convolution layers were utilised for each path. In order to train the network, several loss functions were employed, such that each branch learned relevant characteristics together with the final layer. The output was sent through a Sigmoid function which used stochastic gradient descent to learn the network's topology to train the network. For each class, a separate model was trained against all other labels to identify patterns.

Ensemble network

When it comes to the BTS task, González et al. [29] presented an ensemble of seven models with 3D and 2D input methods, all based on asymmetric UNet (AUNet). According to the 2.5D model, each modality's low-level characteristics were extracted separately using a multi-input approach. Multi-view 2.5D Inception block was designed to combine features from various perspectives of a 3D visual and accumulate multi-scale features into a single 3D image. Five layers were used in the enhanced 3D UNet to extract additional semantic information, with the encoding route having twice the number of convolutional blocks as the decoding path. There were four 2.5D Multi-View Inception blocks and a modified 3D UNet architecture that included residual blocks, instance normalization (IN) [93], transpose convolutions for performing upsampling, and additive skip connections within encoding and decoding paths in the multi-input module.

Ali et al. [41] suggested a combination of 2D and 3D approaches to maximise their advantages. The authors also showed that if hyperparameter tuning was done effectively, 2D models might attain comparable or even better performance than 3D models. Several axial, sagittal, and coronal views were used to train the model to compensate for the absence of depth information in 2D models. A combination of 2D and lightweight 3D models was used to train the ensemble model introduced by Asra et al. [94] to extract both 2D and 3D benefits. The 3D dilated multi-fiber network (DMFNet) [53] model was selected because fewer parameters could be learnt more rapidly than other 3D complicated models. MultiFiber (MF) and dilated convolutions were used with group convolutions to decrease parameters and preserve prediction precision. The information was sent using a multiplexer between the fibres by combining two convolutions with the four-channel input. The spatial information of brain tumours was then captured using dilated convolutions. Convolutions were performed with multiple dilation rates to check how many pixels were missed throughout the process. An ensemble of these two models was constructed in order to make use of both.

Zhang et al. [95] focused on utilising the brain parcellation-based knowledge, coarse-to-fine approach, and ensembling to enhance UNet segmentation performance. Four UNets were trained independently to perform BTS. UNet1 investigated how distinct tumour areas were more apparent in various MRI modalities. UNet2 focused on developing a brain parcellation prototype using a T1 MRI as input. Brain parcellations plus mMRI images were used to train UNet3. Utilizing cropped MRI as well as brain parcellations as input, UNet4 resulted in coarse-to-fine segmentation. The final output was achieved via a majority vote of both coarse and fine segmentation. Table 3 summarises the specifics of segmentation architecture used to segment tumour sub-regions before performing feature extraction.

Table 3.

Summary of segmentation architectures for BraTS 2020 SP problem

| Authors | A | B | C | D | E | F | G | H |

|---|---|---|---|---|---|---|---|---|

| González et al. [29] | Ensemble | 2.5D, 3D | AUNet | 5 | Inception block (2.5D), residual block (3D) | IN | Multi-input strategy | Five-fold cross-validation |

| Agravat et al. [33] | Single | 3D | Encoder-Decoder FCNN | 3 | Dense module | BN | Deep supervision | – |

| Miron et al. [34] | Cascaded | 3D | Two-stage cascaded asymmetrical UNet | 3 | Residual additive connections, spatial pooling module, Atrous convolutions with dilations | GN | Regularization by contouring | – |

| Chato et al. [69] | Single | 3D | UNet AE | 3 | Accumulated block | CN, BN | Information fusion strategy | – |

| Anand et al. [32] | Single | 3D | FCNN | 3 | Dense blocks, transition up/down blocks | – | Hard mining on complex cases | Stratified sampling |

| Parmar et al. [30] | Single | 3D | UNet | 5 | Downblock/Upblock | IN | Patch selection strategies | Stratified sampling |

| Soltaninejad et al. [40] | Parallel | 3D | Encoder-Decoder | 2 | Residual block | – | Multi-resolution method | – |

| Pang et al. [43] | Single | 2D | Encoder-Decoder | 4 | Residual units | BN | More abundant features | – |

| Ali et al. [41] | Ensemble | 2D, 3D | 2D UNet and 3D DMFnet | 3 | Group convolution with MF, dilated convolutions | – | Utilized 2D and 3D benefits | – |

| Russo et al. [45] | Cascaded | 3D | DCNN | 3 | ResNet block in variational autoencoder | – | Spherical coordinate transformed input adds extreme augmentation, variational autoencoder-based UNet | – |

| Akbar et al. [42] | Parallel | 2D | MobileNetV1/V2 | 3 | Removed classification part, additional layers to produce the ratio of tumour sub-regions | BN | Focused on DL-based feature extraction rather than segmentation | Stratified sampling, fivefold cross-validation |

| Marti Asenjo et al. [49] | Single | 3D | EffUNet | 5 | EfficientNet block in encoder | – | Deep supervision | – |

| Carmo et al. [31] | Cascaded | 3D | T-EDet | 5 | D4 variation of EfficientNet, bi-directional feature pyramid network (BiFPN), multiATTUNet | BN, GN | Modification of 2D semantic segmentation EfficientNet model, MultiATTUNet architecture | – |

| Han et al. [46] | Single | 2D | UNet | 4 | Neuromorphic attention-based learner | BN | Neuromorphic saliency map | – |

| Pei et al. [39] | Self-ensemble | 3D | srUNet | 4 | Regular ResUNet and srUNet model | – | Self-ensemble avoids gradient disappearance and regularises training | – |

| Suter et al. [78] | Single | 3D | nnUNet-framework | 5 | Piece-wise linear intensity transform, grey-level binning | IN | Identified healthy region labels for CSF, WM, GM | Five-fold cross-validation |

| Zhao et al. [38] | Single | 3D | Encoder–decoder | 4 | Residual connection | IN | STP with local and global branch | Five-fold cross-validation, self-training |

| Patel et al. [35] | Single | 3D | UNet | 5 | Selective kernel block with attention mechanism, residual bottleneck block | GN | The network adjusts its receptive field via an attention mechanism, deep supervision | – |

| Dai et al. [36] | Single | 3D | UNet-AT | 5 | Attention-gated block | IN | Self-training model again with the training set and previously generated pseudo annotations for the validation set | – |

| McKinley et al. [37] | Single | 3D-to-2D | DeepSCAN | – | Dilated dense block with attention | IN | Separate cases based on confidently segmented/ vaguely segmented/ missing core | – |

| Zhang et al. [95] | Ensemble | 3D | UNet | 4 | Brain parcellation model using T1 MRI | – | Use of brain parcellation, coarse-to-fine strategy | – |

A Network type, B Input dimension, C Segmentation backbone, D Depth/levels/layers, E Modifications, F Normalization, G Uniqueness, H Sampling

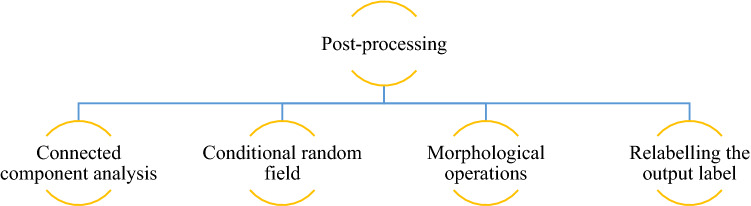

Post-processing

Several post-processing techniques for eliminating false positives and improving segmentation results have been suggested. Traditional post-processing techniques, like threshold- or region-growing methods, focus on isolated regions or pixels by utilising manually defined thresholds. Recently, post-processing techniques such as Connected Component Analysis (CCA), Conditional Random Fields (CRF), and Gaussian Markov Random Fields (MRF) have been utilised to infer pixel pairings given previous information such as pixel intensity distributions and geographic distance. Recently, the researchers coupled CRF to NN models in an end-to-end learning manner for image segmentation issues [96]. The post-processing shown in Fig. 10 increased the DSC and HD95 of the ET, WT, and TC classes considerably.

Fig. 10.

Post-processing techniques used by SP techniques submitted in BraTS 2020 challenge

Connected component analysis

CCA cluster voxels depending on connectivity and voxel intensity levels are comparable. The highly tiny linked components are removed from the result since they are false positives due to misleading segmentation findings. Agravat et al. [33] chose CCA to eliminate the tumour, which had a volume of less than one thousand voxels. To post-process the segmented labels, Anand et al. [32] utilised class-wise 3D CCA. Marti et al. [49] defined the primary tumour volume as the most considerable linked (solid) volume. Over the training dataset, the distance from each smaller area (secondary tumour volumes) that was not linked to the primary tumour was computed. After tumour segmentation, the secondary volumes situated at a distance more significant than the average range plus 1.5 times the normal deviation were deemed false-positive and; were therefore eliminated and categorised as background. Patel et al. [35] used CCA to minimise the frequency of false positives in any class with fewer than ten voxels. Zhao et al. [38] used CCA to enhance segmentation performance as well. Dai et al. [36] eliminated a tiny isolated WT/ET (just under ten voxels) out from prediction, modifying the TC size to match the size of the ET.

Conditional random field

The algorithm assumes the voxel category based on characteristics linked to a specific voxel, independent of the voxel's connection to other adjacent voxels. CRF analyses this connection and creates a graphical model to handle prediction dependencies. CRF was employed by Anand et al. [32] to smoothen the segmentation maps.

Morphological operations

Morphological operations were used to modify voxel values depending on their neighbourhood, taking into account the size and form of the voxel. After screening the spherical segmentation using the Cartesian filter, Russo et al. [45] utilised post-processing to improve ET segmentation. The scientists discovered that isolated voxels were responsible for numerous erroneous positive ET segmentations. The scientists used a binary opening operation to separate thin branches across ET sites before filtering out those with less than 30 voxels. Whenever the ET segmentation remained after these filters, the original was recovered and utilised as the final one. Alternatively, the ET partition was deleted, implying that there was no ET in the current volume.

Relabelling the output label

González et al.[29] established a threshold value that represents the smallest size of the ET area. When the overall amount of predicted ET voxels was less than the threshold, the label of all voxels in the ET area was substituted for one of the NCR regions. In the validation dataset, the threshold value was calculated to obtain maximum performance in this region. If the segmentation model did not identify a TC, the authors concluded that the identified WT area matched the TC and relabeled it as TC. ET was produced in the vicinity of the NCR, according to Agravat et al. [33]. Furthermore, in HGG, its size cannot be minimised. Because of its tiny size, ET was changed to NCR. Three hundred was the experimentally determined threshold for relabelling. As a post-processing step, Carmo et al. [31] used a particular threshold in ET segmentations, where segmented regions with less than 300 voxels were utterly wiped out. It was done to prevent false-positive predictions of the ET region, which may cause DSC to be zero in individuals without ET. According to Patel et al. [35], most LGG patients have no ET portion, and the inclusion of even a single misdiagnosed ET voxel in estimated label maps leads to a DSC of 0. To mitigate the severity of the penalty, the authors substituted all ET voxels by TC if the number of anticipated voxels was less than 50. Zhao et al. [38] discovered that if the segmented ET was less than 350 voxels, the authors suspected no ET in LGG instances and removed those identified voxels from its segmentation result.

Feature extraction and selection

Radiomics is studied to investigate the relationship between medical imaging aspects and underlying biological properties. It is a technique of extracting efficient quantitative characteristics from radiographic scans and developing prediction architectures that link image attributes to clinical outcomes. The fundamental concept behind radiomics is that intra-tumour imaging heterogeneity can be retrieved from the MRI scans. The tumourous areas estimated from the patient's MRI volume were then utilised to predict OS time.

González et al. [29] used four MRI modalities to derive radiomic characteristics from the whole brain and five sub-regions. WT, TC, ED, NCR, and ET are the feature extraction sub-regions. The Python library PyRadiomics [97] was used to extract all 2568 handcrafted features. The recursive feature elimination (RFE) method was employed to reduce features to prevent overfitting. Radiomics was also used to estimate two additional characteristics: the relative invasiveness coefficient (RIC) and relative necrosis coefficient (RNC). Agravat et al. [33] collected the patient's age, statistical data, and NCR shape for the SP model.

Miron et al. [34] retrieved radiomic characteristics from segmentation labels, which primarily defined the area of interest's 3D shape, size, and centre of mass. Each tumour sub-regions ratio to the total brain size and the WT were included. A new binary characteristic called ‘High Risk' was set, if the tumour was in the lobe with a higher risk probability or in the left hemisphere. A greater risk was assessed if the centre of mass was located within 128–150 slides (sagittal plane), 60–150 slides (coronal plane), or 0–41 slides (transversal plane).

Chato et al. [69] utilised a feature-level and decision-level information fusion approach for creating the OS prediction model. The intersection of two brain midplanes splits a brain volume into four or eight tiny sub-volumes to retrieve volumetric characteristics. Five volumetric features indicating the volume of a brain region/WT/ET/NCR-NET/ED were gathered from each tiny volume, plus the volume of the whole brain and the WT. The centroid, volume, diameter, orientation, principal axis length, surface area extent, and solidity of the tumour site were among the twenty-eight shape and location characteristics. Only GTR resection status was considered for evaluation. There were 119 samples with GTR resection status out of 236 samples in the BraTS 2020 training dataset. A dataset of 119 samples was insufficient to build a reliable OS prediction model. Thus, resection status was utilised as an additional non-imaging characteristic (i.e., GTR as ‘1’, STR or NA as ‘0’). The patient's age was utilised as another non-imaging characteristic since it was one of the variables considered by physicians to select an appropriate treatment strategy for glioma patients.

Anand et al. [32] extracted radiomic features such as mean, median, and entropy, as well as second-order features such as Gray Level Co-Occurrence Matrix (GLCM), Gray Level Run Length Matrix (GLRLM), Gray Level Dependence Matrix (GLDM), Gray Level Size Zone Matrix (GLSZM), and Neighboring Gray Tone Difference Matrix (NGTDM). A total of 1022 distinct characteristics were retrieved using a different mix of segmentation maps. Each characteristic's significant value was given using a forest of trees [98, 99].

Volume and shape characteristics were derived from the whole brain and tumour substructures by Parmar et al. [30]. The 2D/3D diameter, major axis, minor axis, elongation, spherical index, whole brain’s flatness, and tumour sub-regions were retrieved to identify interrelationships as shape characteristics. For matching segmentation labels, a clinical characteristic (age) was assigned.

For SP, Soltaninejad et al. [40] used statistical characteristics of already segmented tumour areas from the previous segmentation step. The volume sizes of the WT, TC, and ET that were normalised w.r.t. the total brain volume were considered. The mean intensities for each tumour tissue type, i.e., the WT, TC, and ET, was another set of characteristics. As a result, eight characteristics were utilised for OS prediction.

Pang et al. [43] extracted characteristics from MRI data by masking the ED, ET, and NCR-NET regions. The particular feature selection procedures included Cox univariate analysis, Cox multivariate analysis, and the ph hypothesis test. Minor axis length, sphericity derived from the segmentation result using the ED mask, and busyness extracted from the segmentation result using the ET area mask were among the selected attributes. In addition, the patient’s age was included in the final feature set. According to a bivariate study, the patient's age and survival time were substantially linked at 0.01. The Pearson correlation value was -0.353, which suggested that the patient's age can affect OS.

To estimate the OS time, Ali et al. [41] collected radiomic and image-based characteristics. The image-based characteristics were volume, surface area and ratios, and the WT and ET centre positions. The Laplacian of Gaussian (LoG) filters was used to extract radiomic characteristics. The input image's texture and intensity-based characteristics were retrieved and filtered using LoG filters with sigma values 1, 2, and 3. Fourteen shape-based characteristics (tumour volume, diameter, surface area), first-order features, and GLCM features were recovered from segmented tumours. The characteristics for the tumourous areas ET, ED, TC, and WT were extracted. Because of tumour overlapping, some features were common. The top-performing features were chosen using Random Forest (RF) Recursive Feature Elimination. The patients' age was included in the feature vector since it provided crucial information about their survival.

Individual lesion encoders were utilised by Russo et al. [45] to extract the latent variables of the input MRI scans, which were further used as input parameters to predict patient OS. A high-dimensional feature vector (d = 256) was generated for each MRI scan. Principal Component Analysis (PCA) regulated the feature dimensionality by selecting various principal components for subsequent analysis since the high-dimensional feature space tended to overfit. The age distribution seems normal; however, the OS distribution appears skewed, with most instances having an OS of fewer than 400 days. The tailed distribution of the OS values was modelled using a Tweedie distribution.

Akbar et al. [42] suggested modifying MobileNet V1 and MobileNet V2 as interchangeable feature extractors. The predictive architecture was fed with extracted features and patients’ age data. The MobileNet V1 design was changed by eliminating a portion of the categorization and linking it to many additional layers, resulting in three characteristics (the ratio of NEC, ED, and ET area to the overall area of the tumour). MobileNet V1 received a two-dimensional image with three channels that were a multiple of 32 in size. In contrast, the accessible dataset consisted of four cropped 3D images from the image's centre. T1ce, T2, and FLAIR images were utilised to modify. Axially sliced 3D MRI scans were obtained, yielding 128 2D image data of . The three images were stacked as three channels, and the 128 slices were converted into batch numbers for a single epoch. A dropout with a factor of 0.1 was employed to avoid overfitting [100]. Similar changes were made to the MobileNet V2 design, except that the output feature was used instead of the categorization layer. The same approach was used to input 3D MRI images to this architecture, as in the MobileNet V1 design. MobileNet V2-based modified network was selected based on the test findings.

Marti Asenjo et al. [49] created three distinct datasets for each patient's 2D anatomical plane, i.e. axial, sagittal, and coronal. The identification of low-level characteristics was enhanced by using a pre-trained EfficientNet network [88] based on ImageNet [101] as the UNet encoder Efficient UNet (EffUNet). This idea was to expand the number of layers and channels. The 3-channel decoder input layer was pre-trained on ImageNet, and further upgraded to a 4-channel configuration to accommodate all MRI modalities for each patient. The fourth layer was seeded with random weights drawn from a Gaussian distribution. The Laplacian and tumour gradient were vital in glioma tumour growth models [102, 103]. For all MRI sequences and the segmentation matrix, the Laplacian and the vector module of the 3D gradient were derived. Transformations were performed using MRI images (pixel values) and segmentation masks for a particular area, resulting in a binary matrix with ‘1’ within and ‘0’ outside the specified region. A complete tumour contains the whole of all three groups. NCR/NET volume, Gross Tumour Volume (GTV), and WT were also included in three ring-shaped structures. Following extraction, dimensionality reduction was required to select those characteristics with a higher connection to patient survival. Geometrical, statistical, locational, textural, and other characteristics were retrieved. Three variables (GTR, STR, and NA) were added as categorical and binary characteristics. Three distinct feature selection techniques were used: fscchi2 (ranking for classification using chi-square tests), fscmrmr (ranking for classification using Minimum Redundancy Maximum Relevance (MRMR) algorithm) and fsrftest (ranking for regression using F-tests). Each algorithm was then assigned the significance of each feature.

Han et al. [46] developed the neuromorphic saliency map to improve image analysis. An attention and saliency map was created to enhance tumour segmentation by simulating the visual brain and applying neuromorphic pre-processing. Several orientation-selective characteristics were used to build the neuromorphic NN. The orientation selectivity inspired by the visual cortex's "simple cell" enabled the resilience of abstract feature extraction [104]. The authors were able to generate a saliency map using the down-up resizing network. The saliency map proved successful at either eliminating noise or attracting attention. A new abstract image was created using UNet and a neuromorphic NN mixed network for BraTS 2019 [47]. It demonstrates the possibility of using a neuromorphic NN for image pre-processing or image post-processing.

Pei et al. [39] retrieved shape characteristics from segmentations produced and included non-radiomic variables like age. In the training phase, features were chosen based on their significance using an RF classifier based on survival risk. Data were randomly divided as training and validation in an 8:2 ratio in the training phase to determine the feature significance. The number of NC, ED, ET, shape elongation, flatness, most petite axis length, and surface area were among the 34 characteristics retrieved.

Suter et al. [78] retrieved features using the PyRadiomics library [105] for all four MRI modalities and the two segmentation labels WM and GM. The LoG filtered, intensity-based features were extracted for the original wavelet image. For tumour location characteristics, the images were registered using the MNI152 atlas [106]. Because the tumour's mass impact often altered the ventricles' location and shape, the authors used the Symmetric Normalization (SyN) [107] to reshape the afflicted regions appropriately. In the first stage, the authors eliminated any characteristics having a concordance index (cindex) less than 0.55. For all feature pairings having a correlation coefficient of 0.95 or above, the authors progressively eliminated the feature with the lowest c-index. The use of two priors was investigated. When the sequence prior was used, the characteristics were restricted to the T1ce and FLAIR MRI images. Features having an Intraclass Correlation Coefficient (ICC) (2, 1) of 0.85 or above were evaluated for robustness prior. These priors were evaluated both individually and together. Many ML techniques need or benefit from regularly distributed target variables. All sixteen feature selection and eleven ML technique combinations were examined. ReliefF (RELF), Fischer score (FSCR), chi-square score (CHSQ), joint mutual information (JMI), conditional infomax feature extraction (CIFE), double input symmetric relevance (DISR), mutual information maximization (MIM), conditional mutual information maximization (CMIM), interaction capping (ICAP), MRMR, and mutual information feature selection (MIFS) techniques were used for feature selection.

In their suggested STP model, Zhao et al. [38] used both local and global branches. The predictions from these two branches were combined to create the outcome. The segmentation module's output contains three local branch channels representing the ET, WT, and TC probabilities. As a result, the authors got three masks for the various tumour sub-regions. The authors were then given three bounding boxes, which were minimal cubes containing various sub-regions. The region of interest (ROI) alignment [108] was applied to the encoder outputs to get the features. The local tumour characteristics were obtained using 3D global average pooling.

Patel et al. [35] fed one whole image through the proposed network and retrieved 2048 characteristics from the bottleneck layer's end. This procedure was done for each of the eight mirror axis-flipped copies of the input and each of the five unique models in the proposed ensemble, yielding 40 different versions of these 2048 characteristics. The characteristics were average global pooled over batch and geographic dimensions to merge these 40 versions. Using this full feature set to train a model would significantly overfit, requiring dimensionality reduction using PCA. The authors also incorporated the age and volumetric features of tumour sub-regions in the suggested model. Patients with GTR were assessed; the authors chose to train the suggested cox model on a collection of 118 cases. The authors discovered experimentally that a model with ten main components outperformed all others on the validation set.

Based on the segmentation findings, Dai et al. [36] proposed a biophysics-guided architecture for SP. The authors created a map based on each patient’s tumour’s structure with four discrete values for different classes. Tumour heterogeneity and invasiveness was revealed by the tumour’s spatial distribution [109]. Instead of data-driven radiomic characteristics, the authors explored tumour growth biophysics modelling [110]. The RIC, defined as the ratio between the hypoxic TC and the infiltration front [111], was of particular interest. The authors used the biophysics-guided invasiveness feature [112], which used TC and WT to calculate RIC. In predictive modelling, this biophysics-guided feature outperformed the data-driven radiomics method. The tumour invasiveness was described using a RIC generated from the tumour structural map. The WT and TC first semi-axis length ratio was computed as the RIC in this research.

Zhang et al. [95] utilised the numbers 1, 2, and 3 to denote three resection statuses, viz. NA, GTR, and STR. In addition, three new techniques were used in the suggested model: (1) using brain parcellation to obtain more features, (2) integrating classifier and regressor models, and (3) selecting characteristics in addition to those obtained by Xue et al. [113]. The authors utilised brain parcellation to separate tissue labels, particularly CSF, WM, and GM. The surface area of each overlapping region between two brain structures was then computed (i.e., overlapped regions between ET and CSF, ET and WM, ET and GM, ED and CSF, ED and WM, and NCR/NET and GM). The technique for calculating surface area was the same as in Xue's study [113].

Overall survival prediction models

Various OS prediction techniques submitted in BraTS 2020 Challenge are briefly explained in this section under sub-sections of ML and DL models.

Machine learning models

Miron et al. [34] demonstrated that using ExtraTrees [114] on derived radiomic characteristics was highly sensitive to minor changes in the parameters, owing to the limited size of the training sample. The CSV file's age proved to be an essential characteristic. The most significant results were achieved when the depth of the trees was limited to just seven levels. Chato et al. [69] trained an OS time classification model using a basic NN ML technique. The Random Forest Regressor (RFR) was trained by Anand et al. [32] using the thirty-two most significant characteristics out of 1022. Parmar et al. [30] utilised age, volumetric, shape features for training the multi-fold RF classifier. Pang et al. [43] also utilised RF regression to estimate patient survival time. After identifying key characteristics, Ali et al. [41] utilised RFR with Grid search for SP. Patel et al. [35] used a cox proportional hazards model trained on in-depth characteristics derived from the proposed segmentation network to predict OS. A linear regressor with RIC and age as factors was employed by Dai et al. [36]. Agravat et al. [33] used five-fold cross-validation for training an RFR for OS prediction in instances with GTR. The RFR outperforms other cutting-edge methods that utilise linear regression and ANNs.

Soltaninejad et al. [40] used an RF model to extract the feature set from each Volume Of Interest (VOI). RF parameters (i.e. tree depth, the number of trees) were adjusted using five-fold cross-validation on the training dataset. The matching parameters, 50 trees of depth 10, gave the best generalisation and accuracy. Using the RF in the regression model, the predictions as the number of survival days were produced. To forecast OS values, Russo et al. [45] developed a Tweedie distribution-based Generalized Linear Model (GLM) [115], i.e., Tweedie Regressor. The resection status and age were essential OS predictors, and these were combined with the LesionEncoder DL features to feed into the Tweedie Regressor for OS prediction.

Suter et al. [78] investigated eleven ML methods, including automatic relevance determination regression (ARD), AdaBoost, decision tree (DT), extra tree(s), Gaussian processes, multi-layer perceptron (MLP), nearest neighbors, radius neighbours, passive-aggressive, RANSAC, RFs, stochastic gradient descent, support vector regression (SVR), linear, and Theil-Sen regression. On the training set, the effectiveness of all permutations was assessed using ten-fold stratified cross-validation.