Abstract

Background.

The Patient Generated Index (PGI) is an individualized measure of health-related quality of life. Previous work testing the PGI in the oncology setting identified threats to content validity arising from navigational and computational completion errors with the paper format.

Objective.

The purpose of this pilot study was to refine and evaluate the usability and acceptability of an electronic PGI (ePGI) prototype in the outpatient radiation oncology setting.

Methods.

This pilot study used adaptive agile web design, cognitive interview and survey methods.

Results.

Three iterations of testing and refining the ePGI were required. Fifteen patients completed the refined ePGI using touchscreen tablets with little or no coaching required. Nearly all participants rated the ePGI as “easy” or “very easy” to use, understand and navigate. Up to one-half stated they did not share this type of information with their clinician but felt the information on the ePGI would be useful to discuss when making decisions about their care. Eight clinicians participated, all of whom felt the ePGI was a useful tool to initiate dialogue about quality of life issues, reveal infrequent or unusual effects of treatment and assist with symptom management.

Discussion.

The pilot study indicates the ePGI may be useful at the point of care. Larger studies are needed to explore its influence on decision-making and on restructuring patient/provider communication.

Keywords: Quality of Life; Patient Generated Index; Electronic Survey; Patient Reported Outcome; Oncology; Patient Voice; Methodology

The concept of patient-centered care, in which patients are the “final arbiters in deciding what treatment and care they receive,” is core to efforts to transform health care in the U.S. (Crossing the Quality Chasm: A New Health System for the 21st Century, 2001). Within patient-centered care, the needs and values of the patient provide the basis for individualized care and decisions, yet all too often patients are unable to articulate or learn how treatment may affect what is most important to them (IOM). Communication and decision making become more meaningful when the impact of illness and its treatment on the individual is assessed from the patient perspective (Bowling, 1995; Lindblad, Ring, Glimelius, & Hansson, 2002; Patel, Veenstra, & Patrick, 2003).

Patients and clinicians are more likely to discuss health related quality of life (HRQL) issues when data are available to them. There is a need for patient relevant outcome measures that promote the patient perspective in health care discussions and decisions occurring during clinical interactions (Aburub, Gagnon, Rodríguez, & Mayo, 2016; Atkinson & Rubinelli, 2012; Hall, Kunz, Davis, Dawson, & Powers, 2015; McCleary et al., 2013).

The three-step patient-generated index (PGI; Ruta, Garratt, Leng, Russell, & MacDonald, 1994) provides a novel approach to the measurement of HRQL that accounts for individual values and preferences. First, patients identify the areas most important to them that are affected by cancer and its treatment. Second, they score each item for severity. Third, they prioritize the importance of the items. The PGI, historically available only in paper format, has been studied in cancer (Camilleri-Brennan, Ruta, & Steele, 2002; Martin, Camfield, Rodham, Kliempt, & Ruta, 2007; Tang, Oh, Scheer, & Parsa, 2014; Tavernier, Totten, & Beck, 2011; Tavernier, Beck, Clayton, & Pett, 2011). A computerized version, however, has the capability to address the documented navigational and computation errors that threaten the content validity of the PGI (Tavernier, Totten, & Beck, 2011). The purpose of this study was to refine and evaluate the usability and acceptability of an electronic version of the PGI (ePGI) prototype in the outpatient radiation oncology setting.
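The three steps can be illustrated with a minimal scoring sketch. The scoring rule is not specified in this article, so the sketch assumes one commonly described scheme: each area is rated from 0 (worst) to 10 (best), a budget of priority points is distributed across the areas, and the index is the points-weighted mean rating rescaled to 0–100. The names `Area` and `pgi_index`, the rating scale, and the example values are all hypothetical and are not taken from the ePGI prototype.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str    # Step 1: an area named by the patient
    rating: int  # Step 2: assumed scale, 0 (worst) to 10 (best)
    points: int  # Step 3: priority points assigned to this area


def pgi_index(areas, max_rating=10):
    """Return a 0-100 index: the points-weighted mean rating, rescaled.

    This is an assumed scoring rule for illustration only.
    """
    if not areas:
        raise ValueError("at least one area is required")
    for area in areas:
        if not 0 <= area.rating <= max_rating:
            raise ValueError(f"rating out of range for {area.name!r}")
        if area.points < 0:
            raise ValueError(f"negative points for {area.name!r}")
    total_points = sum(area.points for area in areas)
    if total_points == 0:
        raise ValueError("no priority points were assigned")
    weighted = sum(area.rating * area.points for area in areas)
    return 100 * weighted / (total_points * max_rating)


# Hypothetical responses, not study data.
example = [
    Area("walking the dog", rating=3, points=5),
    Area("sleep", rating=6, points=3),
    Area("work", rating=8, points=2),
]
print(round(pgi_index(example), 1))  # prints 49.0
```

The range checks hint at how an electronic form could reject an out-of-range rating or an empty point allocation at entry time, which is the kind of computation error a paper form cannot catch.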

ePGI Prototype

Investigators developed a prototype based upon the three-step PGI (Ruta, 1994). The initial prototype consisted of five screens, with navigation and completion processes incorporated to address documented navigation and computation errors of the paper-and-pencil version (previous study).

Methods

Investigators obtained Institutional Review Board approval prior to beginning the study. Clinic liaisons assisted with identifying and inviting eligible participants; written consent was obtained by the investigators. The study occurred at a large outpatient radiation department, with data collected during routine appointments.

All full-time oncology physicians and registered nurses working in the radiation outpatient clinic were eligible and invited to participate. Investigators used convenience sampling methods to accrue adult patients who had been receiving radiation treatment at the study site for at least two weeks at the time of consent, were able to speak and read English, and were being seen by a consenting clinician.

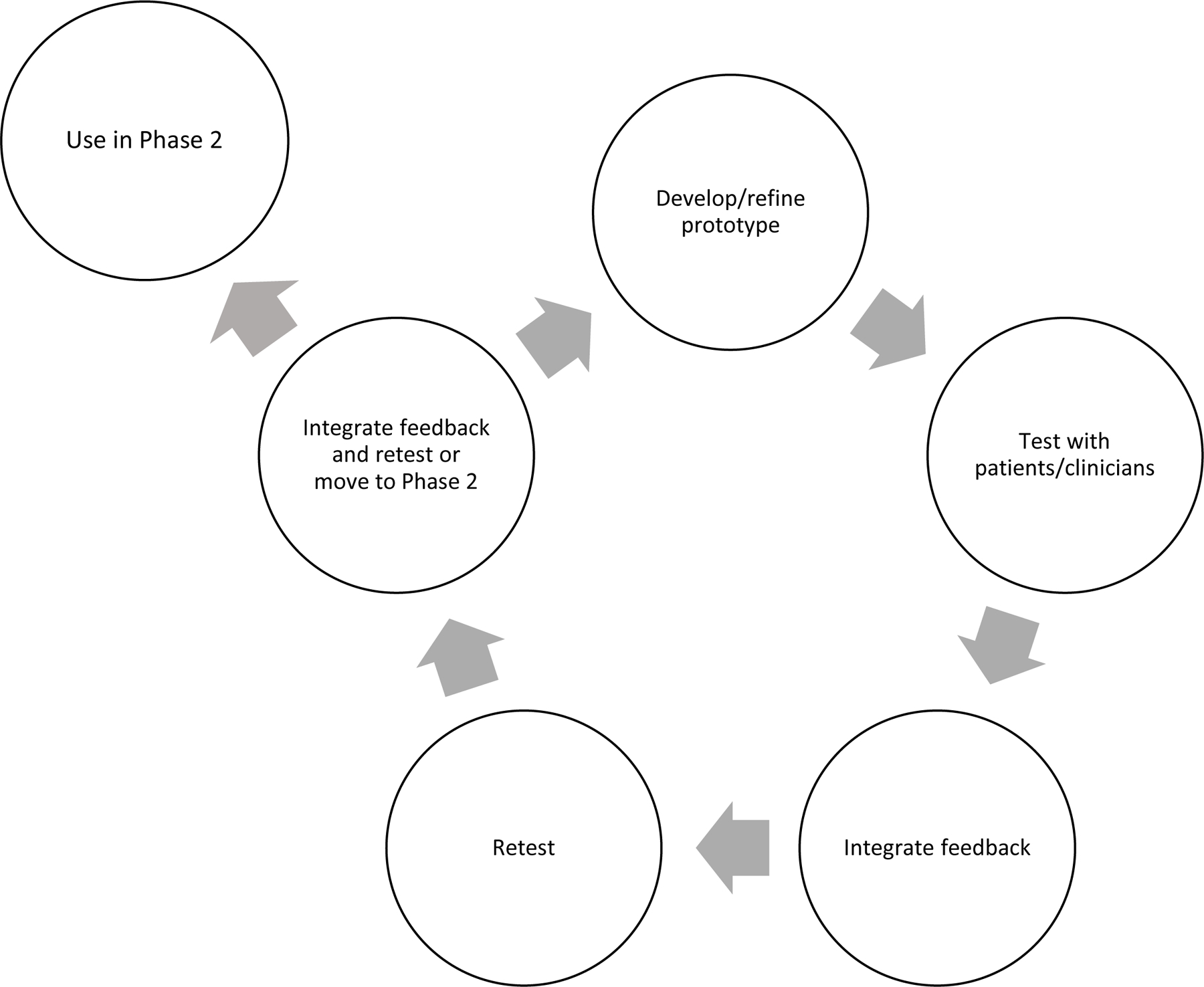

The study had two phases (Figure 1). In Phase 1, the investigators implemented an end-user adaptive agile design approach (Gustafson, 2011; Nielsen, 2000; Wolpin & Stewart, 2011) using cognitive interviews (Willis, 2005) and direct observation to examine and improve the usability (ease of use, understandability, navigational elements) and acceptability (interface layout and visual design) of the ePGI prototype (Health Literacy Online: A Guide for Simplifying the User Experience, 2016). The agile design specifically focused the development of the ePGI on the end user, testing each iteration until no further changes were suggested. In Phase 2, investigators used a survey and structured interview to evaluate patient, nurse and physician perceptions of the usability and acceptability of the ePGI at the point of care.

Figure 1.

Two-phase study using adaptive agile design

Measures

Phase 1 cognitive interviews (Table 1) focused on the comprehension, navigation, layout and difficulties experienced or observed during completion of the ePGI. Phase 2 interviews addressed using the ePGI at the point of care (Table 2). For Phase 2, the investigators developed a 19-item survey of previous computer use and of the acceptability and usability of the ePGI, loosely adapted from related survey questions (Basch et al., 2005; Carlson, Speca, Hagen, & Taenzer, 2001; Clark, Bardwell, Arsenault, DeTeresa, & Loscalzo, 2009). The ePGI and surveys were completed using tablet computers, and data were automatically stored on a secure server using Research Electronic Data Capture (REDCap).

Table 1.

Cognitive Interview Questions

1. Tell me what you like about the ePGI survey.
   - a. What didn’t you like?
2. For each screen:
   - a. Tell me what this screen is asking.
   - b. Was it easy or difficult to complete this screen?
     - i. What made it easy/difficult?
     - ii. What could make it easier?
     - iii. Probe for the specific elements of the screen:
       - 1. Entering words
       - 2. Clicking/touching radio buttons for ratings
       - 3. Selecting points
   - c. Tell me how you came up with your answer.
     - i. Is there anything that would have helped to make your answer easier?
   - d. Do you like the way this screen looks? Why or why not?
3. Was it easy to move between screens in the survey?
   - a. What made it easy/difficult?
   - b. What could make it easier?
4. Did you look at your answers from the previous week?
   - a. Why or why not?
   - b. If so, was it helpful?
     - i. Why or why not?
   - c. Did you understand how to look at your answers from the previous week?
     - i. What might make it easier to do/understand?
   - d. Did you make any changes to your answers this time?
5. Is there anything else you think should be changed in the survey to make it easier to complete?

Table 2.

Phase 2 Patient and Clinician Interview Questions

Patient Questions: We are going to talk about how you would use the information from this survey when you meet with your nurse or doctor.

1. Describe a typical weekly visit with your oncology doctor and nurse.
2. Do you usually talk about these ____ areas when you meet with your nurse/doctor?
   - a. If so, how is it part of the conversation?
   - b. If so, are they part of the planning of your care?
   - c. Tell me why not.
     - i. Do you wish it were part of your conversation? Why or why not?
3. Do you think about these areas when you make decisions about your health?
4. Do you think about these areas when you make decisions about the treatment you get for your cancer?
5. Would you want your doctor or nurse to see your survey?
6. How do you think it would help your doctor or nurse to know what is on your survey?
7. Is there anything you would need to know to be able to use your survey when you meet with your doctor or nurse?
8. Is there anything else you would like to add about how this survey may or may not be used while you get treatment for cancer?

Clinician Questions:

1. Describe a typical weekly “on treatment” visit.
   - a. What factors do you consider when planning care?
2. Tell me about your previous experiences using patient reported measures (provide examples if necessary).
   - a. Did you find them useful? Why or why not?
3. What are the benefits of using patient-reported outcomes in your practice?
4. What are the barriers to using patient-reported outcomes in your practice?
5. How do you define quality of life for your patient?
   - a. How do you routinely assess for patients’ quality of life in your practice now?
   - b. How do you usually document your assessment?

At this point, review ePGI and how it is completed using responses randomly selected from patient ePGI responses.

1. If you had this information available to you before you meet with this patient, would you review it?
   - a. Why or why not?
2. Reflecting back on the typical weekly visit you described, is the type of information in the ePGI ever discussed?
   - a. If so, describe an example and how/why you use the information.
3. How do you think using the ePGI would affect or change the interaction you have with your patients?
4. Do you think this information is useful to you?
   - a. Why or why not?
   - b. If so, how?
   - c. How would you use this in your practice?
5. Is there anything else you would like to add about using the ePGI or other patient reported outcomes in your clinical practice?

Procedure

Patients in Phase 1 completed the ePGI prototype using a touch screen tablet provided by the principal investigator. Participants were given only the instructions contained within the prototype. The investigator observed the patient and provided directions or answered questions only if the patient was unable to proceed, taking notes of hesitations, completion errors and verbalized difficulties for reference during the subsequent interview. Cognitive interviews were recorded and assessed the ease of use, understandability, navigational elements, interface layout, visual design and potential usefulness of the ePGI. Patient recommendations were incorporated into the next iteration, which was tested on the same and additional patients. The process was repeated until no difficulties completing the ePGI were observed and patients had no further suggestions for improving it.

In Phase 2, patients completed the ePGI on a computer tablet once a week for two consecutive weeks before seeing the clinician; the patient and treating clinician had the opportunity to share the ePGI results on the tablet during the visit. In the first week, patients answered survey questions about computer use and completed the ePGI. In the second week, patients completed the ePGI, the usability and acceptability survey questions, and the interview. Clinicians were interviewed individually at an agreed-upon time after all patients had completed the study.

Analysis

Notes and recordings of Phase 1 interviews were reviewed by the first author for content related to suggestions for improving the ePGI. All suggestions were discussed with a bio-informatics professional to resolve any conflicting feedback and then incorporated into the ePGI for testing in the next iteration. Recorded interview data from Phase 2 were coded and analyzed directly from the recordings using content analysis. Quantitative data were downloaded from REDCap and analyzed using Excel for Windows. Because this was a pilot study with a small sample, only descriptive analysis is reported.

Results

During Phase 1, three iterations of testing and refining the ePGI, involving seven patients, were required to obtain a usable ePGI: one that patients could complete easily, describe screen by screen in a way congruent with the investigators’ intent, and finish without further questions about completion or suggestions for improvement. Cognitive interview results led to prototype changes in screen content, spacing and language. A sixth screen providing a summary of patient responses and the overall HRQL index score was added based upon clinician feedback.

In Phase 2, 15 patients completed the ePGI and interview; 12 (80%) were women and 12 (80%) were older than 60 years of age. Fourteen patients completed the survey questions (Table 3); six (42%) had little or no computer touch screen experience, three of whom had never used any type of computer prior to the study. Patients required either no coaching or less than 30 seconds of coaching when completing the ePGI. Nearly all participants rated the ePGI as “easy” or “very easy” to use, navigate, understand, and follow instructions. Fewer than half stated they frequently shared the type of information they entered on the ePGI with their physician or nurse; however, most (n=8, 67%) felt the information on the ePGI would be useful in making decisions regarding their disease or treatment.

Table 3.

Frequency of Phase 2 ePGI Usability and Acceptability and Computer Use Responses (n = 14)

| Question | Easy/Very Easy | In-between | Difficult/Very Difficult | No response* |
|---|---|---|---|---|
| Usability and Acceptability Questions | n (%) | n (%) | n (%) | n |
| How easy or difficult was the survey to complete? | 12 (86) | 2 (14) | | |
| How easy or difficult was it to use the touch sensitive screen? | 13 (93) | 1 (7) | | |
| How understandable were the questions? | 12 (86) | 2 (14) | | |
| How easy or difficult was it to read the instructions on the screen? | 13 (93) | 1 (7) | | |
| How easy or difficult was it to follow the instructions on the screen? | 13 (93) | 1 (7) | | |
| How easy or difficult was it to find your way around the pages of the survey? | 14 (100) | | | |
| | Not at all/very little | So-so or a little | Quite a bit/very much | |
| How helpful was it to complete the survey? | 3 (25) | 4 (33) | 5 (42) | 2 |
| Was the amount of time it took to complete the survey acceptable? | 14 (100) | | | |
| How useful is the information you entered in the survey to the decisions you make about your health? | 3 (25) | 2 (17) | 8 (67) | 2 |
| Do you share this information with your nurse? | 6 (50) | 1 (7) | 5 (42) | 2 |
| Do you share this information with your doctor? | 4 (31) | 3 (23) | 6 (46) | 1 |
| How much did you enjoy using the survey? | 2 (18) | 9 (69) | 3 | |
| Computer Use Questions | | | | |
| How often do you use computers? | 3 (21) | 3 (21) | 8 (57) | |
| How much do you like computers? | 1 (8) | 4 (33) | 7 (58) | 2 |
| How much do you like to complete computer surveys? | 5 (42) | 4 (33) | 3 (25) | 2 |
| How much do you like to complete paper surveys? | 7 (50) | 4 (29) | 3 (21) | |
| How much do you like to complete face-to-face surveys? | 8 (57) | 6 (43) | | |

*Non-responders were not included in percentage calculations.
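The convention in this footnote, percentages computed over responders only, can be sketched in a few lines. The function name `summarize_row` and its arguments are hypothetical; the study itself reports using Excel, so this is only an illustration of the arithmetic, applied to the "How helpful was it to complete the survey?" row of Table 3.

```python
def summarize_row(counts, n_surveyed):
    """Return (percentages, no_response) for one survey question.

    counts maps each response category to the number of patients choosing it.
    Percentages use responders as the denominator, so non-responders are
    excluded, and are rounded to whole numbers.
    """
    responders = sum(counts.values())
    if responders == 0:
        raise ValueError("no responses recorded for this question")
    if responders > n_surveyed:
        raise ValueError("more responses than surveyed patients")
    percentages = {
        category: round(100 * n / responders) for category, n in counts.items()
    }
    return percentages, n_surveyed - responders


# Counts taken from the Table 3 row on helpfulness (14 patients surveyed).
helpful = {
    "Not at all/very little": 3,
    "So-so or a little": 4,
    "Quite a bit/very much": 5,
}
pct, no_response = summarize_row(helpful, n_surveyed=14)
print(pct, no_response)
```

For this row the sketch gives 25, 33 and 42 percent with two non-responders, matching the tabulated values.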

Patient interview responses provided additional detail to the survey findings. Among those who shared the ePGI results verbally or from the tablet with their doctor or nurse (n = 6 and 9, respectively) during the visit, it was the patient, not the clinician, who initiated the discussion. All patients stated they consider the areas they listed on the ePGI when making treatment decisions but are often unsure how the treatment will affect the areas identified. All patient participants also stated they would like to share the ePGI survey with clinicians even if they were uncertain whether or how it might help.

Eight radiation oncology clinicians (four nurses and four physicians) participated in the study. They all described the typical visit as clinician driven, focused on assessing for common side effects of radiation and those related to the anatomical features within the radiation field. Patient quality of life was defined predominantly (n = 6) as minimal symptomatology and the ability to provide self-care. Two clinicians defined it as enjoying one’s life, patient well-being and lack of bother. Five clinicians stated they were familiar with patient reported outcome measures but had not seen them widely used in practice. All eight clinicians articulated the lack of time and possible disruption of patient flow as significant barriers to using patient-reported outcomes in practice.

The predominant theme voiced by clinicians after a demonstration of the ePGI was the potential value of ePGI use in starting a dialogue about quality of life issues, revealing infrequent or unusual effects of treatment and assisting with symptom management. All clinicians felt the ePGI could be used in practice, provided the concerns of patient flow disruption, linking the ePGI to the patient’s electronic health record (EHR) and having adequate resources to respond to patient needs were addressed. All but one clinician stated patients did not typically share ePGI-type information with them during visits. Four clinicians were perplexed by some shared responses on the ePGI (Table 4), not understanding how the areas listed were related to the patient’s treatment. For example, a patient listed a pet as most important and affected by cancer and its treatment. The clinician expressed “total befuddlement on how to explore the answer or if I would be able to do anything about it” and did not pursue it further. In the patient interview, the patient described being unable to walk or train the dog because of feeling too tired and out of breath when walking, which illustrated the impact of fatigue on their life.

Table 4.

Frequency of Categories of Areas Affected by Cancer and Its Treatment Listed by Patients in Step 1 of ePGI

| Area | Frequency |
|---|---|
| Relationships (family, friends, pets) | 12 |
| Psychosocial (stress, depression, embarrassment, worry) | 12 |
| External activities (work, civic, church, travel) | 11 |
| Body image (appearance, embarrassment, ugly skin and incision) | 6 |
| Physical symptoms (sleep, pain, drug effects, energy, sexual) | 6 |
| Everyday activities | 2 |
| Health | 2 |
| Longevity of life | 1 |
| Concentration | 1 |

Discussion

This study is the first known use of an electronic platform for the Patient Generated Index, allowing ePGI completion from any internet-capable device. Responses support the acceptability and usability of the final prototype at the point of care by patients and clinicians. While clinician concerns about integrating patient reported outcomes into the EHR are understandable, examples of strategies to do so are described in the literature (Bennett, Jensen, & Basch, 2012; Berry et al., 2011; Chung & Basch, 2015; Lobach et al., 2016; McCleary et al., 2013; Wagner et al., 2015). Although the small pilot sample precludes generalization of findings, the perplexity felt by clinicians over patient responses suggests a lack of correspondence between how patients and clinicians view quality of life. Exploring these perplexities during the patient visit may reveal previously unassessed effects of treatment.

The ePGI seems a simple and practical approach to restructure patient-provider interactions and enhance meaningful dialogue at the point of care. Moreover, using a tool such as the ePGI allows for the expression of patient voice and personalized care. Further study evaluating the effects of the ePGI on communication and decision-making is needed.

Acknowledgements:

The authors thank Matthew M. Poppe, MD, Colleen Bruch, RN, MS, OCN® and Bernie LaSalle, BS for their assistance with this study.

Footnotes

The authors do not have any conflicts of interest to disclose.

Ethical Conduct of Research: Study was approved by University of Utah # IRB_00060729

Contributor Information

Susan S. Tavernier, Idaho State University College of Nursing, Meridian, Idaho.

Susan L. Beck, University of Utah, Salt Lake City, Utah.

References

- Aburub AS, Gagnon B, Rodríguez AM, & Mayo NE (2016). Using a personalized measure (Patient Generated Index (PGI)) to identify what matters to people with cancer. Support Care Cancer, 24(1), 437–445. doi: 10.1007/s00520-015-2821-7

- Atkinson S, & Rubinelli S (2012). Narrative in cancer research and policy: voice, knowledge and context. Critical Reviews in Oncology/Hematology, 84, Supplement 2(0), S11–S16. doi: 10.1016/S1040-8428(13)70004-0

- Basch E, Artz D, Dulko D, Scher K, Sabbatini P, Hensley M, … Schrag D (2005). Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol, 23(15), 3552–3561. doi: 10.1200/jco.2005.04.275

- Bennett AV, Jensen RE, & Basch E (2012). Electronic patient-reported outcome systems in oncology clinical practice. CA: A Cancer Journal for Clinicians, 62(5), 336–347. doi: 10.3322/caac.21150

- Berry DL, Blumenstein BA, Halpenny B, Wolpin S, Fann JR, Austin-Seymour M, … McCorkle R (2011). Enhancing Patient-Provider Communication With the Electronic Self-Report Assessment for Cancer: A Randomized Trial. Journal of Clinical Oncology, 29(8), 1029–1035. doi: 10.1200/jco.2010.30.3909

- Bowling A (1995). What things are important in people’s lives? A survey of the public’s judgements to inform scales of health related quality of life. Social Science & Medicine, 41(10), 1447–1462. doi: 10.1016/0277-9536(95)00113-L

- Camilleri-Brennan J, Ruta D, & Steele RC (2002). Patient generated index: New instrument for measuring quality of life in patients with rectal cancer. World Journal of Surgery, 26(11), 1354–1359. doi: 10.1007/s00268-002-6360-2

- Carlson LE, Speca M, Hagen N, & Taenzer P (2001). Computerized quality-of-life screening in a cancer pain clinic. J Palliat Care, 17(1), 46–52.

- Chung AE, & Basch EM (2015). Incorporating the patient’s voice into electronic health records through patient-reported outcomes as the “review of systems”. J Am Med Inform Assoc, 22(4), 914–916. doi: 10.1093/jamia/ocu007

- Clark K, Bardwell WA, Arsenault T, DeTeresa R, & Loscalzo M (2009). Implementing touch-screen technology to enhance recognition of distress. Psychooncology, 18(8), 822–830. doi: 10.1002/pon.1509

- Crossing the Quality Chasm: A New Health System for the 21st Century. (2001).

- Gustafson A (2011). Adaptive Web Design: Creating Rich Experiences with Progressive Enhancement. Chattanooga, TN: EasyReaders, LLC.

- Hall LK, Kunz BF, Davis EV, Dawson RI, & Powers RS (2015). The cancer experience map: an approach to including the patient voice in supportive care solutions. J Med Internet Res, 17(5), e132. doi: 10.2196/jmir.3652

- Health Literacy Online: A Guide for Simplifying the User Experience. (2016). Retrieved from https://health.gov/healthliteracyonline/

- Lindblad AK, Ring L, Glimelius B, & Hansson MG (2002). Focus on the individual--quality of life assessments in oncology. Acta Oncol, 41(6), 507–516.

- Lobach DF, Johns EB, Halpenny B, Saunders TA, Brzozowski J, Del Fiol G, … Cooley ME (2016). Increasing Complexity in Rule-Based Clinical Decision Support: The Symptom Assessment and Management Intervention. JMIR Med Inform, 4(4), e36. doi: 10.2196/medinform.5728

- Martin F, Camfield L, Rodham K, Kliempt P, & Ruta D (2007). Twelve years’ experience with the Patient Generated Index (PGI) of quality of life: a graded structured review. Quality of Life Research, 16(4), 705–715. doi: 10.1007/s11136-006-9152-6

- McCleary NJ, Wigler D, Berry D, Sato K, Abrams T, Chan J, … Meyerhardt JA (2013). Feasibility of Computer-Based Self-Administered Cancer-Specific Geriatric Assessment in Older Patients With Gastrointestinal Malignancy. The Oncologist, 18(1), 64–72. doi: 10.1634/theoncologist.2012-0241

- Nielsen J (2000). Why you only need to test with 5 users. Retrieved from nngroup.com website: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/

- Patel KK, Veenstra DL, & Patrick DL (2003). A Review of Selected Patient-Generated Outcome Measures and Their Application in Clinical Trials. Value in Health, 6(5), 595–603. doi: 10.1046/j.1524-4733.2003.65236.x

- Ruta D (1994). A new approach to the measurement of quality of life: The Patient-Generated Index. Medical Care Research and Review, 32, 1109–1126.

- Tang JA, Oh T, Scheer JK, & Parsa AT (2014). The current trend of administering a patient-generated index in the oncological setting: a systematic review. Oncol Rev, 8(1), 245. doi: 10.4081/oncol.2014.245

- Wagner LI, Schink J, Bass M, Patel S, Diaz MV, Rothrock N, … Cella D (2015). Bringing PROMIS to practice: brief and precise symptom screening in ambulatory cancer care. Cancer, 121(6), 927–934. doi: 10.1002/cncr.29104

- Willis GB (2005). Cognitive Interviewing: A tool for improving questionnaire design. Thousand Oaks, CA: Sage.

- Wolpin S, & Stewart M (2011). A deliberate and rigorous approach to development of patient-centered technologies. Semin Oncol Nurs, 27(3), 183–191. doi: 10.1016/j.soncn.2011.04.003