Highlights

- Voxel-level segmentation labels from DWI, the gold standard, are scarce.
- Our approach using image-level infarct core size obtains voxel-level segmentations.
- The target infarct core size can be estimated with CTP, a weak ground truth.
- Our approach outperforms nnUNet models trained on voxel-level data.
- Segmentations with our approach outperform the CTP estimations.

Keywords: CTA, CTP, Deep learning, Multiple-instance learning, Weak labels

Abstract

Acute ischemic stroke is a leading cause of death and disability in the world. Treatment decisions, especially around emergent revascularization procedures, rely heavily on size and location of the infarct core. Currently, accurate assessment of this measure is challenging. While MRI-DWI is considered the gold standard, its availability is limited for most patients suffering from stroke. Another well-studied imaging modality is CT-Perfusion (CTP) which is much more common than MRI-DWI in acute stroke care, but not as precise as MRI-DWI, and it is still unavailable in many stroke hospitals. A method to determine infarct core using CT-Angiography (CTA), a much more available imaging modality albeit with significantly less contrast in stroke core area than CTP or MRI-DWI, would enable significantly better treatment decisions for stroke patients throughout the world.

Existing deep-learning-based approaches for stroke core estimation face two problems: the trade-off between voxel-level segmentation and image-level labels, and the difficulty of obtaining large enough samples of high-quality DWI images. The former arises because algorithms can either output voxel-level labels, which are more informative but require significant effort by annotators, or image-level labels, which allow much simpler labeling of the images but result in less informative and interpretable output. The latter is a common issue that forces training either on small training sets using DWI as the target or on larger, but noisier, datasets using CT-Perfusion (CTP) as the target.

In this work, we present a deep learning approach including a new weighted gradient-based approach to obtain stroke core segmentation with image-level labeling, specifically the size of the acute stroke core volume. Additionally, this strategy allows us to train using labels derived from CTP estimations.

We find that the proposed approach outperforms segmentation approaches trained on voxel-level data and the CTP estimations themselves.

1. Introduction

Acute ischemic stroke (AIS) is a leading cause of death and disability worldwide, and treatment decisions, particularly for patients with the most disabling type of AIS, large vessel occlusions (LVOs), rely heavily on neuroimaging evaluations. In the last decade, minimally invasive endovascular thrombectomy procedures have been shown to dramatically reduce the disability caused by LVO AIS, and patient eligibility for this procedure is based on imaging assessments of the extent and location of irreversibly injured tissue, termed the “infarct core.” Patients who present for care without evidence of substantial injury are more likely to benefit than those with larger injuries, which affect more vital brain structures. At present, however, the optimal imaging technique to screen patients for the extent of infarct core at presentation remains undetermined.

The most accurate means of defining acute infarcted brain tissue is diffusion-weighted MRI (DWI). Practically, however, the 24/7 availability of an MRI scanner for an emergency evaluation is limited. As a result, many of the clinical trials of endovascular thrombectomy relied on CT perfusion (CTP). This contrast-based technique benefits from commercially available software that can perform automated post-processing and integrate those results seamlessly into clinical workflows. While CTP is much more available than 24/7 MRI-DWI, it is uncommon outside of major urban stroke centers, thereby limiting its utility as an effective screening modality. In a study examining administrative claims data, nearly 70% of patients diagnosed with AIS were treated at hospitals unable to perform CTP (Kim et al., 2021).

In this study, we develop a method to define and segment infarct core in AIS patients and validate its performance using MRI-DWI as a ground truth. We develop our algorithm using images from a much more widely available imaging technique (Kim et al., 2021), CT Angiogram (CTA), and use images that were acquired in the routine care of patients being emergently evaluated for possible AIS, to ensure the technique is tested in an appropriate and generalizable cohort. One of the major challenges with CTA is that it shows significantly less contrast in the stroke core area than CTP or MRI-DWI.

Recently published works (Hokkinen et al., 2021, Mäkelä et al., 2022), including ones from our group (Abdelkhaleq et al., 2021, Sheth et al., 2019), have shown that image computing pipelines leveraging deep convolutional neural networks have the potential to use CTAs to estimate the infarct core hypoperfused volumes either as an image-level volume or as a voxel-level segmentation. However, these approaches suffer from two significant drawbacks. The first is the trade-off between voxel-level segmentation and image-level labels: algorithms can either output voxel-level labels, which are more interpretable and informative but require a significant amount of effort by expert annotators, or image-level labels, which allow much simpler labeling of the images, and therefore larger datasets and simpler model updates, but result in less interpretable and informative algorithm output. The second is the difficulty of obtaining large enough samples of high-quality DWI images, the gold standard. This forces algorithm training either on small training sets using DWI as the target or on larger datasets using CTP as the target, which is not ideal, as CTP estimations introduce some errors, especially when voxel-level segmentation is used.

In this work, we present a deep learning model including a new weighted gradient-based approach to address both problems. We show that we can train our model using image-level labeling, i.e. the size of the acute stroke core volume, and obtain voxel-level segmentation. This strategy allows us to significantly reduce the error of training using labels derived from CTP estimations and therefore use larger training sets. This is achieved by training a symmetry-sensitive convolutional neural network (CNN) model that receives a CTA image as input and the total infarct core volume estimated with the CTP image as the target value to be learned. Since the difference between hemispheres is the key aspect used by stroke clinicians and CTP deconvolution algorithms (Barber et al., 2000, Konstas et al., 2009) to segment the stroke core, our CNN is designed to implicitly learn a distance between the feature representations of the two brain hemispheres. We then propose a new weighted gradient-based approach, inspired by but distinct from Grad-CAM (Selvaraju et al., 2017), and compute the most relevant distance feature maps with respect to the predicted stroke core volume. After a few postprocessing steps, this map can be used as a segmentation map for the stroke core. The segmentation map obtained is then evaluated against the manual segmentations from DWI images, which provide the best possible gold standard for stroke core, on an external dataset not used for training or validating the model. These results are compared to fully supervised nnU-Net models (Isensee et al., 2021), which have also been successfully used to segment stroke core on CT data (El-Hariri et al., 2022), and to segmentations derived from the CTP images using commercial software, RAPID.ai by iSchemaView, the current de facto standard in large stroke centers because of successful clinical trials (Albers et al., 2018, Nogueira et al., 2018). In addition to the voxel-level stroke maps, we evaluate these approaches on the image-level estimations and we further compare them to the image-level expert estimations on CTA.

Given the potential impact of this work on stroke care, we developed a web application allowing clinical and medical image computing researchers to estimate stroke core segmentations on CTA using the proposed model. It is available at https://glabapps.uth.edu/.

2. Previous work

Using image-level labels to output voxel-level label estimations has been attempted before, and it typically falls under the umbrella of multiple-instance learning (MIL) (Cheplygina et al., 2019, Quellec et al., 2017a). MIL scenarios are not unique to images: they occur whenever acquiring labels for individual samples is costly, while acquiring labels for groups of samples, also known as “bags”, is not.

Examples of inferring pixel-level labels from image-level labels with deep learning networks include techniques for retina images (Gondal et al., 2017, Quellec et al., 2017b, Zhao et al., 2019), histopathology or microscopy images (Jia et al., 2017, Kraus et al., 2016, Lerousseau et al., 2020, Lu et al., 2021, Xu et al., 2019, Xu et al., 2014, Zhang et al., 2022), natural images (Ahn and Kwak, 2018, Huang et al., 2018, Pinheiro and Collobert, 2015, Tang et al., 2020), and 3D brain vessel segmentation (Dang et al., 2022). However, little MIL work has been done in the field of acute stroke detection with 3D images.

Recently, approaches to estimate infarct core with CT and CTA brain images of acute ischemic stroke subjects have been developed. Whenever a voxel-level output was produced, the basic model employed consisted of variations of patch-based convolutional neural networks (CNN) or fully convolutional neural networks (F-CNN) similar to a U-Net architecture, typically with steps for the generation of an internal network representation encouraging the comparison between the two brain hemispheres. In two published works (Hokkinen et al., 2021, Mäkelä et al., 2022), authors proposed two models to segment infarct core using CTA as input and voxel-level labels generated from CTP-derived ground truth. The former used a 3D CNN with residual connections, a fully connected layer to generate the probability of stroke at the center of a 3D patch, and a patch-based strategy to generate the samples; the latter used an extended version of the same architecture with a hyperparameter tuning step. In both cases, the original and “left–right flipped” brain images have been used as two image channels to encourage the use of symmetry information between the two brain hemispheres in the convolutional filters.

Other groups trained pipelines including F-CNN segmentation networks on CT images using voxel-level ground truth either based on DWI (Kuang et al., 2021, Qiu et al., 2020) or CTP (El-Hariri et al., 2022). El-Hariri et al. (2022) used a recent auto-configuring F-CNN called nnU-net (Isensee et al., 2021) using the original and “left–right flipped” CT as two image channels. Qiu et al. (2020) used more complex processing involving also an F-CNN to generate an asymmetry map, hypoattenuation maps, cerebrospinal fluid maps, and lesion probability as an input for a final random forest classifier. Kuang et al. (2021) trained an end-to-end F-CNN using symmetric and non-symmetric inputs and atlas images, with the addition of attention gates and another output to estimate the ASPECT score on top of voxel-level stroke labels.

In our group’s work (Sheth et al., 2019), we developed a CNN model to estimate image-level dichotomous variables representing large and small stroke cores from CTA using CTP-based ground truth labels. The CNN model, called DeepSymNet-v1, receives the two hemispheres separately as input and learns convolutional filters shared between the two images, similarly to a Siamese network; the resulting feature maps are then subtracted by an L1-layer. The L1-layer activations are then used as input to other convolutional filters that learn a symmetry-sensitive representation. In another work (Abdelkhaleq et al., 2021), we used an extension of the model, called DeepSymNet-v2, that greatly reduces the complexity of the convolutional filters by avoiding the use of Inception layers, and includes a non-symmetric path, skip-connections, batch normalization, and other improvements in the pre-processing pipeline. While this was successfully tested using DWI-based ground truth, it is still only able to generate image-level labels. The same architecture was also successfully tested for large vessel occlusion detection with low-quality CTA images from mobile stroke units (Czap et al., 2022).

In this work, we present a further extension of the deep learning architecture, called DeepSymNet-v3, and a new weighted gradient-based approach to obtain stroke core segmentation with image-level labeling. To our knowledge, this is the first time that a machine learning-based model for acute stroke care trained on image-level labels is shown to generate a full segmentation of the stroke core.

3. Material

The imaging data and clinical variables were retrospectively acquired from the Memorial Hermann Hospital System under protocols HSC-MS-20–1266 and HSC-MS-19–0630 approved by the Committee for the Protection of Human Subjects at the University of Texas Health Science Center at Houston. The data acquisition has been carried out in accordance with the Declaration of Helsinki.

We included subjects with acute ischemic stroke or stroke mimics, i.e. subjects who underwent neuroimaging to evaluate for possible stroke but were ultimately diagnosed as having conditions other than stroke after detailed evaluation by the Vascular Neurology specialists. We included subjects with a readable quality CTA image who also had a CTP or DWI image to confirm the stroke core volume. We split our data into a training/validation set and a testing set as shown in Table 1.

Table 1.

Patient population.

| | Training/Validation Set | Testing set |
|---|---|---|
| N | 362 | 81 |
| Age, mean (std) | 65 (15) years | 64 (13) years |
| Sex, N | 183 males (51%), 168 females (46%), 11 N/A (3%) | 37 males (46%), 44 females (54%) |
| Ischemic Stroke, N | 227 yes (63%), 135 no (37%) | 81 yes, 0 no |
| Large Vessel Occlusion, N | 169 yes (47%), 193 no (53%) | 81 yes, 0 no |
| CTP Stroke Core Volume, N | | |
| 0 ml | 255 (70.4%) | 23 (28.3%) |
| > 0 & < 30 ml | 50 (13.8%) | 27 (33.3%) |
| ≥ 30 & ≤ 70 ml | 18 (4.9%) | 15 (18.5%) |
| > 70 ml | 39 (10.7%) | 6 (7.4%) |
| CTP N/A | 0 (0%) | 10 (12.3%) |
| DWI Stroke Core Volume, N | | |
| 0 ml | N/A | 0 (0%) |
| > 0 & < 30 ml | N/A | 40 (49.3%) |
| ≥ 30 & ≤ 70 ml | N/A | 19 (23.4%) |
| > 70 ml | N/A | 22 (27.1%) |
| Race, N | | |
| White | 125 (34.5%) | 32 (39.5%) |
| Black or Afr. American | 106 (29.2%) | 19 (23.4%) |
| Asian | 81 (22.3%) | 20 (24.6%) |
| Other | 39 (10.7%) | 10 (12.3%) |

Our testing set only included subjects with a large vessel occlusion (LVO) who had a successful endovascular thrombectomy. LVO was defined as an occlusion of the middle cerebral artery (M1 and M2 segments) or of the intracranial internal carotid artery. A successful endovascular thrombectomy was defined as a thrombolysis in cerebral infarction (TICI) score equal to or greater than “Grade 2B”, i.e. all cases that had substantial reperfusion. Subjects in our testing set had DWI images that allowed us to precisely estimate the stroke core map with two independent readers. The two readers were graduate students supervised by an interventional neurologist who specialized in stroke care with experience in evaluating clinical neuroimaging data. They used 3D Slicer (Kikinis et al., 2014) to create the segmented stroke core maps. DWI images were acquired 24–48 h after the stroke event. Because we included only subjects with a successful recanalization, the stroke core was not expected to have changed significantly between presentation and follow-up imaging. We excluded hemorrhagic transformations where bleeding occurred outside of the stroke core; patients with hemorrhagic transformation limited to petechial bleeding within the infarct core were included, as infarct core segmentation for these patients would remain accurate. This methodology is consistent with prior studies, which have used follow-up infarct volume imaging in patients with rapid reperfusion as a means to evaluate the accuracy of imaging modalities acquired at the patient’s initial presentation (Hoving et al., 2018).

4. Methods

This section starts by describing the pre-processing step of the various image modalities used as input to the models and to evaluate them. Then, we describe our convolutional neural network design, DeepSymNet-v3, that can be trained directly with image-level labels, followed by a description of the Weighted Gradient-based Segmentation (WGBS) step which allows us to convert the internal gradients of the network to a segmentation. Further, we describe three other existing approaches which we use as comparisons: (1) nnU-net, a model generation pipeline for fully supervised segmentation algorithms trained on segmentation maps; (2) RAPID.ai CTP, the commercial software used to generate stroke core maps from CTP data; (3) CTA-ASPECTS, a manual grading approach aimed at neuroradiologists to assess early CTA changes in acute ischemic stroke which is correlated with stroke core size.

4.1. Pre-processing

All images (CTA, CTP, and DWI) were first converted from DICOM to Nifti format, skull stripped, resampled, and finally linearly registered to a common image template with an affine transformation. The image template had a 146x182x133 resolution and a 1x1x1mm voxel size. All the segmentation maps on DWI and CTP used to train or validate the algorithms were drawn in the original patient image space and then moved to the common template space using the affine transformation identified in the DWI or CTP registration. Segmentation maps were resampled with nearest neighbor interpolation to avoid any change in their values, as opposed to CTA, CTP, and DWI images, which used bilinear interpolation. The description of the additional processing needed for each modality follows.

The registration of CTA images required Gaussian filtering (sigma = 1, kernel size = 9x9x9) to blur the vessels and parenchyma before their use in the registration algorithms. The transformation identified was then applied to the original, unblurred CTA image. CTA image voxels were clipped to 100 Hounsfield units (HU) and then normalized from 0 to 1 before their use in the machine learning models. The image template allowed us to split the two hemispheres along the sagittal plane in the middle of the image.
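The intensity clipping, rescaling, and hemisphere split described above can be sketched with NumPy. This is a minimal illustration, not the authors' pipeline. The text only states the 100 HU upper bound and the 0 to 1 range, so the lower clipping bound of 0 HU, the function names, the choice of the first array axis as the left-right axis, and the mirroring of the second half are all assumptions.

```python
import numpy as np

def normalize_cta(volume_hu, lower_hu=0.0, upper_hu=100.0):
    """Clip a CTA volume (Hounsfield units) and rescale it to [0, 1].

    upper_hu=100 follows the text; lower_hu=0 is an assumption.
    """
    clipped = np.clip(volume_hu.astype(np.float32), lower_hu, upper_hu)
    return (clipped - lower_hu) / (upper_hu - lower_hu)

def split_hemispheres(volume, lr_axis=0):
    """Split a template-registered volume at the mid-sagittal plane.

    lr_axis (the array axis running left-right) is an assumption.
    The second half is mirrored so both halves share one orientation,
    which is also an assumption.
    """
    mid = volume.shape[lr_axis] // 2
    first, second = np.split(volume, [mid], axis=lr_axis)
    return first, np.flip(second, axis=lr_axis)

# Toy volume with the template shape given in the text (146 x 182 x 133).
rng = np.random.default_rng(0)
cta = rng.uniform(-50, 300, size=(146, 182, 133))
norm = normalize_cta(cta)
half_a, half_b = split_hemispheres(norm)
```

Because the template has an even number of voxels along the assumed left-right axis, the two halves have identical shapes and can be fed to a shared-weight network path.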

The registration of CTP images could not proceed directly as they are 4D volumes showing the uptake of contrast agent as a function of time. Therefore, a mean CTP volume was first created by averaging each voxel across time which led to a 3D brain volume. Then, this mean CTP volume was registered to the common template.
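The temporal averaging step is a single reduction over the time axis. The sketch below assumes the time dimension is the last array axis; the function name is hypothetical.

```python
import numpy as np

def mean_ctp_volume(ctp_4d, time_axis=-1):
    """Average a 4D CTP series over time, giving a 3D volume for registration."""
    return ctp_4d.mean(axis=time_axis)

# Tiny 2 x 2 x 2 volume with 3 time points.
ctp = np.arange(2 * 2 * 2 * 3, dtype=np.float32).reshape(2, 2, 2, 3)
mean_vol = mean_ctp_volume(ctp)
```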

4.2. DeepSymNet-v3

DeepSymNet-v3 is a 3-dimensional convolutional neural network that receives the two CTA brain hemispheres as inputs and aims to learn the overall stroke core volume. The model is designed to be insensitive to the spatial location of the activations of the convolutional filters and, at the same time, to learn distances between hemispheres in feature space. This strategy enables learning from a weak label such as the stroke volume, whose underlying CTP map can have significant errors at voxel level, particularly at the boundaries. These errors can be introduced by the CTP image acquisition or the image processing itself (Mangla et al., 2014). In addition, the location of the CTA hypoperfused signal, indicating the presence of a stroke core, might not match exactly the boundary of the stroke core identified by CTP because of the different imaging timing during the injection of contrast (Estrada et al., 2022). While these errors are likely to influence the shape of the stroke, they are less likely to influence the overall stroke volume, particularly for medium and large strokes. As such, by using the overall stroke volume as a weak image-level label, the model can learn from a target that is less confounded by misrepresentations of the vascular territory affected by stroke.

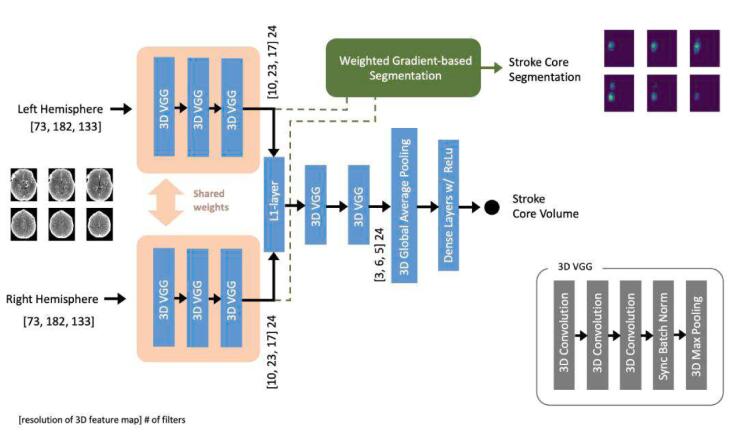

Fig. 1 shows the structure of the network. It receives the two hemispheres separately as input, and three 3D VGG blocks learn low-level features common to the whole brain by sharing the weights between the two data paths. This is similar to the structure of Siamese architectures (Bertinetto et al., 2016); however, instead of directly using a contrastive loss, the two paths are combined by an “L1-layer” that computes the element-by-element L1 difference between the two tensors, essentially computing the local L1 distance between the two feature maps while preserving the spatial information. Then, higher-level convolutional features are estimated by two 3D blocks, which learn higher-order filters from these feature-level distances, thereby avoiding the need to explicitly minimize a Euclidean distance metric immediately after the shared-weight path, which is what a contrastive loss would have forced. Finally, a 3D global average pooling layer, followed by a fully connected layer with ReLu activations and a single output neuron with linear activation, completes the network. These last layers combine the activations from the last 3D VGG blocks irrespective of their location, and the result is used to learn the overall stroke core volume with a simple mean squared error (MSE) loss function:

$$\mathrm{MSE} = \frac{1}{N} \sum_{n=1}^{N} \left( y_n - \hat{y}_n \right)^2$$

where $N$ is the number of samples, $y_n$ is the stroke volume predicted for sample $n$, and $\hat{y}_n$ is the stroke core volume estimated with CTP.
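To make the roles of the L1-layer, the global average pooling, and the MSE loss concrete, the following NumPy sketch applies them to toy tensors. It is an illustration under stated assumptions, not the DeepSymNet-v3 implementation: the channel-last layout `(I, J, S, K)`, the function names, and the toy values are hypothetical, and the convolutional blocks themselves are omitted.

```python
import numpy as np

def l1_layer(feat_a, feat_b):
    """Element-wise L1 distance between two feature tensors of equal shape.

    Spatial layout is preserved: the output has the same shape as the inputs.
    """
    return np.abs(feat_a - feat_b)

def global_average_pool(feat):
    """Average over the three spatial axes of a (I, J, S, K) tensor -> (K,).

    The result no longer depends on where in the volume an activation occurred.
    """
    return feat.mean(axis=(0, 1, 2))

def mse_loss(predicted, target):
    """Mean squared error between predicted and target volumes."""
    predicted = np.asarray(predicted, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return np.mean((predicted - target) ** 2)

# Toy feature maps for the two hemispheres (4 x 4 x 4 voxels, 2 filters).
feat_left = np.ones((4, 4, 4, 2))
feat_right = np.zeros((4, 4, 4, 2))
dist = l1_layer(feat_left, feat_right)
pooled = global_average_pool(dist)

# Two predicted volumes (ml) against two CTP-derived targets (ml).
loss = mse_loss([10.0, 30.0], [12.0, 26.0])
```

Because the pooled vector is location-independent, a regression target such as the total core volume can be learned even when the voxel-level CTP boundaries are imprecise.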

Fig. 1.

DeepSymNet-v3 architecture. The model is trained on stroke core volumes, i.e. image-level labels, and it generates stroke core segmentation maps, i.e. voxel-level labels.

Each 3D VGG block starts with 3 consecutive convolutional layers with a depth of 24 filters each with a kernel size of 3x3x3 and ReLu activations. Then, they are followed by a 2x2x2 3D max pooling layer with a 2x2x2 stride, which reduces the feature spatial resolution, and a Batch Normalization step synchronized across the 4 GPUs used for concurrent training.

Due to the lack of very deep layers, relatively simple convolutional filters, and global average pooling, the network has a total number of trainable parameters of ∼ 219,000. As a comparison, common 2D convolutional architectures such as Inception (Szegedy et al., 2016), ResNet (He et al., 2015), VGG-16 (Simonyan and Zisserman, 2015) or EfficientNet (Tan and Le, 2020) have tens of millions of parameters even if using 2D convolutions.

The model is trained without any form of pre-training using the Adam optimizer, with a learning rate of 0.001, a mini-batch size of 35, and early stopping with a maximum number of epochs of 200.

4.3. Weighted Gradient-based segmentation (WGBS)

Here, we introduce our strategy to generate a segmentation map by using a gradient-based technique. Our WGBS approach first generates a saliency map to estimate how the features computed before the L1-layer (which forces a local inter-hemisphere distance computation) are influenced by changes in the predicted stroke core output, followed by a post-processing step to identify the hemisphere most likely to be affected by stroke and generate the final segmentation. This last step is necessary as the L1-layer of the DeepSymNet-v3 introduces a left/right hemisphere ambiguity for the saliency maps leading to an increase of y. Without it, a mirror image of the stroke core would often appear in the contralateral hemisphere. The saliency map starts with a gradient-based operation inspired by Grad-CAM (Selvaraju et al., 2017), but the similarity stops there as the weighting strategy, pooling operations, and terms for computing the gradients are quite different from the original Grad-CAM that is tailored to classification problems.

Let $A$ be the convolutional layer before the L1-layer, $i, j, s$ the 3D brain coordinates, and $k$ the index of the 3D convolutional filter in $A$. We compute the average relevance $\alpha_k$ for each feature map $k$ as

$$\alpha_k = \frac{1}{Z} \sum_{i} \sum_{j} \sum_{s} \frac{\partial y}{\partial A^k_{i,j,s}}$$

where $Z$ is the total number of voxels in $A^k$. Note that in the classical Grad-CAM algorithm, $y$ is the activation before the softmax for a specific class. In our case, we can directly use the raw estimation of the hypoperfused volume, as the network does not compress it using a sigmoid-like function but rather uses a ReLu activation. Then, we weighted the saliency map $S^k$ for each feature map $k$,

And we estimate the hypoperfused area $S$ for each hemisphere as

$$S = \frac{1}{Z'} \sum_{k} S^k$$

where $Z'$ is the total number of convolutional filters in $S$.

Finally, we create the final segmentation map by projecting the estimated $S$ onto both hemispheres and picking the area with the lowest voxel values. This is similar to what is done by neuroradiologists when identifying hypoperfused areas with CTA-ASPECTS. The full process is described in Algorithm 1.

Algorithm 1: Python-style pseudocode for weighted gradient-based segmentation (WGBS)

    # I, J, S represent the 3D dimensions of the brain
    # "d:" in comments indicates the dimensionality of the tensor
    # mean(): global mean returning a 1-d variable
    # sum(): global sum returning a 1-d variable
    # max(): global maximum returning a 1-d variable
    # clip(var, minValue, maxValue): clip var to the [minValue, maxValue] range

    # Normalize S between 0 and 100, then smooth it.
    def normalize(S):
        # normalize
        res = clip(S, 0, max(S))  # d: same as S
        res = res / max(S)        # d: same as S
        res = res * 100           # d: same as S
        # smooth
        res = gauss_filt(res)     # d: same as S
        return res

    # Generate a segmentation map for hypoperfused areas.
    # Receives as input the brain CTA and the DeepSymNet-v3 network
    # trained with image-level stroke core sizes estimated with CTP.
    def createSegmentationMap(brain, deepSymNetv3):
        # split hemispheres into brain_left and brain_right.
        # Compute weighted saliency maps S
        # (named S_left and S_right) as previously described

        # find the hemisphere with the strongest S, named S_max
        if sum(S_right) > sum(S_left):
            S_max = normalize(S_right)  # d: IxJxS/2
        else:
            S_max = normalize(S_left)   # d: IxJxS/2

        # find the affected hemisphere based on the average voxel values
        # in the original CTA corresponding to the thresholded S_max
        v_right = mean(brain_right[S_max > 50])  # d: 1
        v_left = mean(brain_left[S_max > 50])    # d: 1

        if v_right < v_left:
            hypoperf_r = S_max              # d: IxJxS/2
            hypoperf_l = zeros(I, J, S/2)   # d: IxJxS/2
        else:
            hypoperf_r = zeros(I, J, S/2)   # d: IxJxS/2
            hypoperf_l = S_max              # d: IxJxS/2

        return hypoperf_l, hypoperf_r
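The hemisphere-selection part of Algorithm 1 can be written as runnable NumPy code once the saliency maps are given. The sketch below is a hedged illustration rather than the authors' code: it takes `s_left` and `s_right` as already computed inputs, omits the Gaussian smoothing step, and assumes that the four arrays share the same shape and orientation. The guards for an all-zero map and an empty mask are additions not described in the pseudocode.

```python
import numpy as np

def normalize_map(s):
    """Clip negative values and rescale a saliency map to [0, 100].

    Gaussian smoothing from Algorithm 1 is omitted in this sketch.
    """
    res = np.clip(s, 0, None)
    peak = res.max()
    if peak > 0:  # guard for an all-zero map (an addition, see lead-in)
        res = res / peak * 100.0
    return res

def select_hemisphere(s_left, s_right, brain_left, brain_right, threshold=50):
    """Return (hypoperf_l, hypoperf_r) following the logic of Algorithm 1."""
    # Keep the saliency map with the largest total activation.
    s_max = normalize_map(s_right if s_right.sum() > s_left.sum() else s_left)
    zeros = np.zeros_like(s_max)
    mask = s_max > threshold
    if not mask.any():  # nothing above threshold: return empty maps
        return zeros, zeros.copy()
    # The affected side is the one with lower mean CTA intensity in the mask.
    v_right = brain_right[mask].mean()
    v_left = brain_left[mask].mean()
    if v_right < v_left:
        return zeros, s_max
    return s_max, zeros

# Toy example: the saliency peak sits at one voxel, and the right
# hemisphere is darker (lower CTA value) at that location.
s_left = np.zeros((2, 2, 2))
s_left[0, 0, 0] = 2.0
s_right = np.zeros((2, 2, 2))
s_right[0, 0, 0] = 1.0
brain_left = np.full((2, 2, 2), 0.5)
brain_right = np.full((2, 2, 2), 0.5)
brain_right[0, 0, 0] = 0.1
hyp_l, hyp_r = select_hemisphere(s_left, s_right, brain_left, brain_right)
```

In the toy example the stronger saliency map comes from the left path, yet the map is assigned to the right hemisphere because its CTA intensity is lower, which illustrates how the second step resolves the left/right ambiguity.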

4.4. Stroke core estimation methods used as benchmark

In the following subsections we describe the other stroke core estimation approaches that we use to benchmark the proposed DeepSymNet-v3 + WGBS: two fully supervised segmentation networks trained on voxel-level labels (nnU-Net), the CBF maps generated from CTP with the deconvolution-based approach of the commercial software RAPID.ai, and expert-based stroke core estimation on CTA.

4.4.1. nnU-Net

nnU-net (Isensee et al., 2021) is a model selection approach for image segmentation with fully convolutional neural networks that uses a mix of pre-defined rules and autoML techniques to identify good strategies for sampling and pre-processing, as well as the U-Net model itself. The authors have tested this strategy on 23 public datasets, achieving excellent segmentation performance in all of them and sometimes reaching state-of-the-art performance. In El-Hariri et al. (2022), the nnU-net model was used to identify hypoperfused areas using brain CT in acute ischemic stroke patients.

As such, an nnU-net-based model is an excellent benchmark to estimate the performance of fully supervised segmentation algorithms trained on the segmentation maps themselves rather than on image-level labels, as DeepSymNet-v3 is.

We ran two experiments with nnU-net: one using the pre-processed CTA volume as a single-channel input, and another using a 2-channel input, where the first channel contained the pre-processed CTA volume and the second its “left–right flipped” version. The second experiment attempted to drive the Unet-like model selected by nnU-net to learn features leveraging the differences between hemispheres. Throughout the rest of the paper, we refer to the model generated by the second experiment as nnU-Net-Sym. Fully 3D convolutional layers were used, and the models were optimized using stochastic gradient descent with a learning rate of 0.01 and Nesterov accelerated gradients (Dogo et al., 2018) with a momentum of 0.99 and a weight decay of 3e-05. Training ran for a maximum of 1000 epochs with a batch size of 2. All parameters, except the choice of fully 3D convolutional layers, were automatically selected by the nnU-net model selection pipeline.
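The 2-channel input used for nnU-Net-Sym can be illustrated with a few lines of NumPy. This is a sketch of the idea only: the function name, the channel-first layout, and the choice of the first spatial axis as the left-right axis are assumptions, and the actual nnU-net data preparation is not reproduced here.

```python
import numpy as np

def make_symmetric_input(cta, lr_axis=0):
    """Stack a volume with its left-right mirrored copy as two channels.

    lr_axis (the spatial axis running left-right) is an assumption.
    Returns an array of shape (2, *cta.shape).
    """
    flipped = np.flip(cta, axis=lr_axis)
    return np.stack([cta, flipped], axis=0)

# Toy 4 x 3 x 2 volume.
cta = np.arange(24, dtype=np.float32).reshape(4, 3, 2)
two_channel = make_symmetric_input(cta)
```

With this layout, a convolutional filter sees each voxel alongside its contralateral counterpart, which is what allows the network to learn hemisphere differences.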

As in the original nnU-net paper, we used 5-fold-cross validation on the training/validation set using the stroke core map estimated with CTP and then estimated the final performance using the best-performing model weights on the test set.

4.4.2. RAPID.ai CTP stroke core maps

CTP stroke core maps are estimated with the RAPID.ai commercial software (by iSchemaView) using the rCBF < 30% maps, which is the most common definition in recent clinical trials. Relative cerebral blood flow, or rCBF, is computed as $\mathrm{rCBF} = \mathrm{CBF}_{\mathrm{voxel}} / \mathrm{CBF}_{\mathrm{control}}$. The software computed CBF with a deconvolution-based approach using singular value decomposition (SVD) (Konstas et al., 2009), followed by a proprietary post-processing approach on the rCBF. While the software did not allow us to export such a map as a 3D volume, it generated 2D reports containing all the axial slices. This allowed us to back-project the stroke core map into the original 3D volume.
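The rCBF < 30% criterion itself is a simple voxel-wise threshold, sketched below with NumPy. This is not the RAPID.ai implementation: the proprietary post-processing is not reproduced, and the function names, the scalar `cbf_control` reference value, and the explicit `brain_mask` are hypothetical. The volume conversion assumes the 1x1x1 mm voxels of the common template.

```python
import numpy as np

def core_mask_from_cbf(cbf, cbf_control, brain_mask, threshold=0.30):
    """Label voxels whose relative CBF falls below the threshold.

    cbf_control is a positive reference CBF value (how it is chosen is
    outside the scope of this sketch). brain_mask excludes background.
    """
    rcbf = cbf / cbf_control
    return (rcbf < threshold) & brain_mask

def volume_ml(mask, voxel_volume_mm3=1.0):
    """Convert a binary mask to a volume in millilitres (1 ml = 1000 mm^3)."""
    return mask.sum() * voxel_volume_mm3 / 1000.0

# Toy CBF map: relative values are 0.2, 0.8, 0.4 and 0.0 (background).
cbf = np.array([10.0, 40.0, 20.0, 0.0]).reshape(2, 2, 1)
brain_mask = np.array([True, True, True, False]).reshape(2, 2, 1)
core = core_mask_from_cbf(cbf, cbf_control=50.0, brain_mask=brain_mask)
core_volume = volume_ml(core)
```

Only the first voxel is labeled as core: the background voxel has zero flow but is excluded by the brain mask.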

We decided to use RAPID.ai as it has been used as the basis of patient selection for successful stroke clinical trials such as DEFUSE 3 (Albers et al., 2018) and DAWN (Nogueira et al., 2018).

4.4.3. Image-level expert estimation (CTA-ASPECTS)

The Alberta Stroke Program Early CT Score (ASPECTS) is a manual grading approach aimed at vascular neurologists and neuroradiologists to assess early CT changes in acute ischemic stroke, and it correlates with stroke core size (Barber et al., 2000, Haussen et al., 2016). It works by dividing the brain into 10 different areas in each hemisphere; each area is then evaluated by comparing it to the contralateral hemisphere and assigning a score. The maximum score is 10, with one point subtracted for each area where an ischemic change is suspected. While it was initially designed for standard CT, it was found to be more sensitive when used on CTA images (Coutts et al., 2004), as CTA has better contrast than CT in the infarct core area (Estrada et al., 2022). ASPECTS and CTA-ASPECTS are essentially the strategies used by neuroradiologists to obtain a proxy of acute stroke core size when no CTP or DWI imaging is available.

In this work, CTA-ASPECTS are assigned to each subject in the test set by an expert stroke neurologist who was blinded to the subject information and had only access to the CTA image. This score allows us to estimate the performance of an expert in adjudicating the degree of ischemic changes using a validated clinical metric.
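The scoring rule described in this subsection (start from 10 and subtract one point per region with suspected ischemic change) can be expressed as a short function. The function name and the region labels in the example are illustrative only; the grading of each region remains a manual, expert judgment.

```python
def aspects_score(affected_regions, n_regions=10):
    """Return n_regions minus the number of distinct affected regions."""
    affected = set(affected_regions)
    if len(affected) > n_regions:
        raise ValueError("more affected regions than regions scored")
    return n_regions - len(affected)

# Three regions with suspected ischemic change -> score of 7.
score = aspects_score(["M1", "M2", "insula"])
```

Lower scores indicate more extensive ischemic change, so varying a cut-off on this score yields the operating points used to compare the expert with the automated methods.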

5. Results

First, we evaluate the performance of the approaches that estimate the overall stroke core volume at image level, i.e. DeepSymNet-v3 on CTA, RAPID.ai on CTP, and a human expert on CTA with CTA-ASPECTS. The ground truth is generated by averaging the volumes estimated on DWI by the two readers. Table 2 shows the performance evaluated with the area under the ROC curve (AuRoC), the area under the precision/recall curve (AuPrC), and the sensitivity/specificity pair at the point on the ROC curve closest to a perfect classification. We only included subjects for which CTP data was available (n = 71). The performance has been evaluated using the stroke volume dichotomized at thresholds that can be used as clinical endpoints to decide whether to perform an endovascular thrombectomy (although the most used ones are likely to be 50 ml or 70 ml). The AuRoC and AuPrC for the human expert were obtained by varying the threshold of the CTA-ASPECTS. DeepSymNet-v3 outperforms the human expert on the same CTA data in both AuRoC and AuPrC at every threshold. When DeepSymNet-v3 is compared to RAPID.ai, which uses CTP as input, the performance is similar; however, DeepSymNet-v3 appears to have a slight advantage at thresholds up to 50 ml.
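As an illustration of how a sensitivity/specificity pair can be picked at the ROC point closest to a perfect classification, the sketch below scans every candidate cut-off and keeps the one with the smallest Euclidean distance to (sensitivity = 1, specificity = 1). The function name and the toy volumes are hypothetical, and dichotomizing the ground truth with `>=` is an assumption; the function also assumes both classes are present.

```python
import numpy as np

def closest_to_perfect(scores, labels):
    """Return (cut-off, sensitivity, specificity) nearest to the ideal ROC corner."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    best = None
    for thr in np.unique(scores):
        pred = scores >= thr
        sens = (pred & labels).sum() / labels.sum()
        spec = (~pred & ~labels).sum() / (~labels).sum()
        dist = np.hypot(1.0 - sens, 1.0 - spec)
        if best is None or dist < best[0]:
            best = (dist, thr, sens, spec)
    return best[1], best[2], best[3]

# Toy data: predicted volumes (ml) and DWI reference volumes (ml).
predicted = np.array([5.0, 20.0, 35.0, 60.0, 80.0, 10.0])
reference = np.array([8.0, 25.0, 40.0, 75.0, 90.0, 45.0])
labels = reference >= 30.0  # dichotomize the ground truth at 30 ml
cutoff, sens, spec = closest_to_perfect(predicted, labels)
```

In this toy example the selected cut-off is 35 ml, giving a sensitivity of 0.75 and a specificity of 1.0.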

Table 2.

Image-level core estimation using our approach (CTA input), RAPID.ai (CTP input), and expert adjudication with CTA input (CTA-ASPECTS).

| Ischemic core threshold | RAPID.ai on CTP: AuRoC | RAPID.ai on CTP: AuPrC | RAPID.ai on CTP: Sens/Spec | DeepSymNet-v3 on CTA: AuRoC | DeepSymNet-v3 on CTA: AuPrC | DeepSymNet-v3 on CTA: Sens/Spec | Expert on CTA (CTA-ASPECTS): AuRoC | Expert on CTA (CTA-ASPECTS): AuPrC | Expert on CTA (CTA-ASPECTS): Sens/Spec |
|---|---|---|---|---|---|---|---|---|---|
| 15 ml | 0.77 | 0.89 | 0.56/0.90 | **0.83** | **0.93** | 0.62/0.95 | 0.76 | 0.88 | 0.74/0.71 |
| 30 ml | 0.81 | 0.82 | 0.62/0.89 | **0.85** | **0.83** | 0.91/0.65 | 0.82 | 0.79 | 0.88/0.65 |
| 50 ml | 0.82 | **0.78** | 0.71/0.91 | **0.87** | **0.78** | 0.79/0.87 | 0.81 | 0.65 | 0.92/0.55 |
| 70 ml | 0.86 | **0.76** | 0.82/0.94 | **0.90** | 0.69 | 0.88/0.85 | 0.78 | 0.50 | 0.94/0.50 |

The external test set with valid CTP (n = 71) is used, and the final ground truth is the average of the two reader segmentations on DWI. AuRoC: Area under the ROC curve; AuPrC: Area under the precision/recall curve; Sens/Spec: Sensitivity/Specificity. For each row and metric (AuRoC or AuPrC), the best performing of the three methods is highlighted in bold.
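The image-level evaluation described above can be sketched as follows: reference volumes are dichotomized at a clinical cutoff, the predicted volumes are swept as a decision threshold, and the operating point closest to a perfect classification (sensitivity 1, specificity 1) is selected. This is a minimal sketch and not the authors' evaluation code; the volumes are invented, and treating "at or above the cutoff" as the positive class is an assumption.

```python
import math


def roc_points(scores, labels):
    """(fpr, tpr, threshold) triples obtained by sweeping a threshold over the scores."""
    pos = sum(1 for y in labels if y)
    neg = len(labels) - pos
    points = [(0.0, 0.0, math.inf)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and not y)
        points.append((fp / neg, tp / pos, thr))
    return points


def auroc(points):
    """Trapezoidal area under the ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]))


def closest_to_perfect(points):
    """Sensitivity, specificity and threshold of the point nearest to (FPR=0, TPR=1)."""
    fpr, tpr, thr = min(points, key=lambda p: math.hypot(p[0], 1.0 - p[1]))
    return tpr, 1.0 - fpr, thr


# Hypothetical reference (DWI) and predicted core volumes in ml.
reference_ml = [10, 20, 60, 80, 55, 5, 45, 90]
predicted_ml = [12, 35, 58, 70, 54, 8, 60, 95]
cutoff_ml = 50  # one of the clinical cutoffs mentioned in the text

labels = [v >= cutoff_ml for v in reference_ml]  # assumption: positive if volume >= cutoff
points = roc_points(predicted_ml, labels)
area = auroc(points)
sensitivity, specificity, threshold = closest_to_perfect(points)
print(area, sensitivity, specificity, threshold)
```

Repeating the procedure for each cutoff (15, 30, 50 and 70 ml) would yield one row of metrics per cutoff, in the same layout as Table 2.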

Then, we evaluate the voxel-level segmentation performance using the Dice coefficient and the 95% Hausdorff Distance (HD). The former is arguably the most used metric to evaluate segmentation algorithms, as it measures the voxel overlap between segmentation and ground truth while ignoring the true negatives in the background; however, it has some drawbacks when evaluating contours, especially of large strokes (Taha and Hanbury, 2015). The HD compares the largest distance between the contours of the estimated core maps and the ground truth, which counterbalances some of the drawbacks of Dice. All metrics are computed at subject level, i.e., each subject is given a Dice or HD value independently, and these values are then averaged over the whole test set. This process avoids giving more weight to subjects with large strokes; however, it also means that missing a very small stroke, say of < 2 ml, will significantly impact the overall scores. Because of this, our segmentation evaluation is stratified by stroke size.
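To make the two metrics and the subject-level averaging concrete, the sketch below computes Dice and a 95% HD on toy masks stored as sets of voxel indices, then averages Dice per subject with and without small lesions. It is an illustration under stated assumptions, not the evaluation code used in this work: the pooled, nearest-rank percentile is only one common 95% HD variant, and all masks, volumes and helper names are hypothetical.

```python
import math

NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def dice(pred, truth):
    """Dice overlap between two masks given as sets of (x, y, z) voxel indices."""
    if not pred and not truth:
        return 1.0  # convention assumed here when both masks are empty
    return 2.0 * len(pred & truth) / (len(pred) + len(truth))


def surface(mask):
    """Voxels of the mask with at least one 6-connected neighbour outside it."""
    return {(x, y, z) for (x, y, z) in mask
            if any((x + dx, y + dy, z + dz) not in mask for dx, dy, dz in NEIGHBOURS)}


def hd95(pred, truth, spacing=(1.0, 1.0, 1.0)):
    """95th percentile of the pooled symmetric surface distances (one common variant)."""
    a = [tuple(c * s for c, s in zip(v, spacing)) for v in surface(pred)]
    b = [tuple(c * s for c, s in zip(v, spacing)) for v in surface(truth)]
    if not a or not b:
        return math.inf  # undefined when one of the masks is empty
    dists = [min(math.dist(p, q) for q in b) for p in a]
    dists += [min(math.dist(q, p) for p in a) for q in b]
    dists.sort()
    return dists[max(0, math.ceil(0.95 * len(dists)) - 1)]  # nearest-rank percentile


def mean_dice(subjects, min_volume_ml=0.0):
    """Subject-level average Dice over subjects with core volume > min_volume_ml."""
    scores = [dice(s["pred"], s["truth"]) for s in subjects
              if s["volume_ml"] > min_volume_ml]
    return sum(scores) / len(scores)


def cube(x0, size):
    """Toy cubic lesion with `size` voxels per side, shifted by x0 along x."""
    return {(x0 + x, y, z) for x in range(size) for y in range(size) for z in range(size)}


large_truth, large_pred = cube(0, 4), cube(1, 4)  # prediction shifted by one voxel
small_truth, small_pred = cube(10, 1), set()      # small lesion missed entirely
subjects = [
    {"pred": large_pred, "truth": large_truth, "volume_ml": 40.0},  # hypothetical volumes
    {"pred": small_pred, "truth": small_truth, "volume_ml": 2.0},
]
print(dice(large_pred, large_truth), hd95(large_pred, large_truth))
print(mean_dice(subjects), mean_dice(subjects, min_volume_ml=7.5))
```

In this toy case, the single missed small lesion halves the subject-level average Dice, which is the effect that motivates the stratification by stroke size.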

Table 3 compares the segmentation performance of DeepSymNet-v3 + WGBS (trained only on an image-level label, i.e., stroke volume) to nnU-Net and nnU-Net-Sym, two supervised segmentation models trained on voxel-level maps. Note that all models used CTA data as input. DeepSymNet-v3 + WGBS outperformed both models on the full test set and after stratification. The improvement of DeepSymNet-v3 + WGBS over nnU-Net is statistically significant (p-value < 1.0e-03 for both networks) according to the Mann-Whitney U test.

Table 3.

Segmentation performance comparison (1).

| Test subset | N | nnU-Net on CTA: Avg. Dice Score (higher is better) | nnU-Net on CTA: Median 95% HD (lower is better) | nnU-Net-Sym on CTA: Avg. Dice Score (higher is better) | nnU-Net-Sym on CTA: Median 95% HD (lower is better) | DeepSymNet-v3 + WGBS on CTA: Avg. Dice Score (higher is better) | DeepSymNet-v3 + WGBS on CTA: Median 95% HD (lower is better) |
|---|---|---|---|---|---|---|---|
| All Test set | 81 | 0.14 | 27.9 | 0.14 | 25.8 | **0.26** | **25.2** |
| Test set excluding stroke core ≤ 7.5 ml | 69 | 0.16 | 27.4 | 0.16 | 25.4 | **0.30** | **22.8** |
| Test set excluding stroke core ≤ 15 ml | 58 | 0.18 | 27.0 | 0.18 | 25.3 | **0.35** | **21.6** |
| Test set excluding stroke core ≤ 30 ml | 41 | 0.22 | 22.0 | 0.21 | 24.3 | **0.42** | **20.2** |

The full external test set (n = 81) is used. Improvement of DeepSymNet-v3 + WGBS over nnU-net is statistically significant (p-value < 1.0e-03 for both networks) according to the Mann-Whitney U test.

For each row and metric, the best performing method is highlighted in bold.
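For readers unfamiliar with the Mann-Whitney U test used for the comparisons above, the following sketch computes the U statistic and a two-sided p-value from the large-sample normal approximation. It is a simplified illustration: it omits tie and continuity corrections, the per-subject Dice values are invented, and it does not reproduce the p-values reported in this paper. A statistics package should be preferred for real analyses.

```python
import math


def mann_whitney_u(x, y):
    """U statistic of sample x versus y and a two-sided normal-approximation p-value.

    Simplification: no tie or continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    # Count pairs where x wins; ties contribute one half.
    u = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u - mean_u) / sd_u
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))
    return u, p_two_sided


# Hypothetical per-subject Dice scores of two segmentation methods.
dice_method_a = [0.30, 0.35, 0.42, 0.26, 0.50]
dice_method_b = [0.14, 0.16, 0.18, 0.22, 0.10]
u_stat, p_value = mann_whitney_u(dice_method_a, dice_method_b)
print(u_stat, p_value)
```

The test compares the rank distributions of the per-subject scores, so it does not assume that Dice values are normally distributed.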

Table 4 compares the segmentation performance of DeepSymNet-v3 + WGBS to RAPID.ai, which uses CTP as input. We only included subjects for which CTP data was available (n = 71). In this case as well, DeepSymNet-v3 + WGBS outperformed RAPID.ai in all tests, and the difference is statistically significant (p-value < 1.0e-04) according to the Mann-Whitney U test. We also report the inter-reader performance on DWI segmentations. As expected, the error between readers is much lower than the error of even the best model (on CTA or CTP); however, their average Dice coefficient only ranges from 0.71 to 0.79.

Table 4.

Segmentation performance comparison (2).

| Test subset | N | RAPID.ai on CTP: Avg. Dice Score (higher is better) | RAPID.ai on CTP: Median 95% HD (lower is better) | DeepSymNet-v3 + WGBS on CTA: Avg. Dice Score (higher is better) | DeepSymNet-v3 + WGBS on CTA: Median 95% HD (lower is better) | Experts' Agreement on DWI: Avg. Dice Score (higher is better) | Experts' Agreement on DWI: Median 95% HD (lower is better) |
|---|---|---|---|---|---|---|---|
| All Test set (with valid CTP) | 71 | 0.12 | 28.0 | **0.25** | **24.9** | 0.71 | 5.4 |
| Test set (with valid CTP) excluding stroke core ≤ 7.5 ml | 60 | 0.14 | 25.7 | **0.28** | **24.6** | 0.75 | 4.9 |
| Test set (with valid CTP) excluding stroke core ≤ 15 ml | 50 | 0.15 | 25.7 | **0.32** | **24.0** | 0.77 | 4.5 |
| Test set (with valid CTP) excluding stroke core ≤ 30 ml | 34 | 0.19 | 23.2 | **0.41** | **21.8** | 0.79 | 4.2 |

The external test set with valid CTP (n = 71) is used. Improvement of DeepSymNet-v3 + WGBS over RAPID.ai is statistically significant (p-value < 1.0e-04) according to the Mann-Whitney U test. For each row and metric, the best performing method is highlighted in bold (experts' agreement is not considered as it is used as a reference).

Fig. 2 shows an example of the pre-processed CTA used as input to DeepSymNet-v3, the stroke core identified with CTP by RAPID.ai, WGBS with DeepSymNet-v3, the DWI volume used to generate the ground truth stroke core maps, and the final stroke core maps manually segmented that have been used to evaluate the algorithms. Figures for all subjects of the test set are available as supplementary materials.

Fig. 2.

Example segmentation. Estimations for the entire test dataset are available as supplementary material.

On a system with an AMD Epyc 2.4 GHz processor and an NVIDIA A100 GPU card, the DeepSymNet-v3 + WGBS model, including data loading, takes an average of 0.67 s (std 0.03) for a single CTA. If we include the generation of a full image report, the time goes up to an average of 6.5 s (std 0.5) for a single CTA. These benchmarks used a single CPU core and 5.6 GB of GPU memory, and were repeated 10 times each. They do not include the time required for rigid image registration. Our non-optimized FSL-based rigid image registration pipeline required < 1 min per subject; however, this time can be drastically reduced by using more recent image registration approaches.

6. Discussion and conclusions

In this work, we have presented an approach able to leverage the image-level labels from CTP to generate voxel-level segmentations.

In our experiments, we have shown that voxel-level estimations with DeepSymNet-v3 + WGBS outperform two fully supervised approaches, based on nnU-Net, trained directly on voxel-level labels (Table 3). At first sight, this seems counterintuitive, as a fully supervised method should always be better than or comparable to a semi-supervised MIL approach trained on the same data (Jia et al., 2017). Typically, only MIL methods combining bag-level labels with at least some instance-level labels can obtain performance higher than fully supervised approaches (Li et al., 2018, Shin et al., 2019). However, there could be instances in which a fully supervised loss is detrimental. This situation can arise in biomedical image computing when the pixel-level labels used to train algorithms contain a non-negligible amount of noise. In this scenario, avoiding training directly on the pixel-level labels can be beneficial, as hinted by Kandemir and Hamprecht (2015), who compared MIL instance-based feature engineering algorithms with a fully supervised approach on histopathology data and found that one approach, mi-Graph (Zhou et al., 2009), was able to outperform the fully supervised one. In our case, voxel labels from CTP are quite noisy, particularly at the edges of the ischemic stroke core, and can therefore be considered weak labels. However, the overall volume estimation is much less likely to suffer from these errors, as overestimations and underestimations of stroke borders tend to cancel out if the error is randomly distributed; the volume therefore provides a target that is less noisy than the voxels themselves. The reduced CTP error in image-level stroke volume is observed in the experiments in Table 2 and Table 4, where CTP has performance comparable to DeepSymNet-v3 on image-level estimation, but statistically significantly lower performance on voxel-level estimation.
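The argument that random border errors largely cancel out in the total volume can be illustrated with a toy simulation. The sketch below uses a 2D disk as a stand-in for the stroke core and flips the label of pixels near its border at random; it then compares the relative area error with the pixel-level disagreement (1 − Dice). The geometry, the band width and the flip probability are arbitrary assumptions chosen for illustration and are not derived from the CTP data in this study.

```python
import math
import random


def disk(radius):
    """Set of integer (x, y) pixels whose centre lies within `radius` of the origin."""
    r = math.ceil(radius)
    return {(x, y) for x in range(-r, r + 1) for y in range(-r, r + 1)
            if x * x + y * y <= radius * radius}


def noisy_border(truth, radius, band, flip_prob, rng):
    """Copy of `truth` where pixels within `band` of the border flip label at random."""
    ring = disk(radius + band) - disk(radius - band)
    noisy = set(truth)
    for pixel in sorted(ring):  # sorted for a reproducible draw order
        if rng.random() < flip_prob:
            noisy ^= {pixel}  # toggle membership: add if absent, remove if present
    return noisy


def dice(a, b):
    """Dice overlap between two pixel sets."""
    return 2.0 * len(a & b) / (len(a) + len(b))


rng = random.Random(0)
truth = disk(20)
noisy = noisy_border(truth, radius=20, band=2, flip_prob=0.5, rng=rng)

relative_area_error = abs(len(noisy) - len(truth)) / len(truth)
pixel_disagreement = 1.0 - dice(noisy, truth)
print(f"relative area error: {relative_area_error:.3f}")
print(f"1 - Dice:            {pixel_disagreement:.3f}")
```

Under these assumptions, the added and removed border pixels mostly offset each other in the area, while every flipped pixel still counts as a pixel-level error, so the image-level label is the cleaner of the two targets.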

Another reason behind the relatively good segmentation performance is that DeepSymNet-v3 internally learns a distance between groups of symmetric voxels at relatively high resolution via the L1-layer, and WGBS can quantify the most relevant voxels for such distance. This would not be possible if the gradient were capped by a sigmoid function at the final output, and it is further helped by the 3D global average pooling layer, which makes the activations insensitive to location, allowing the fully connected layer to combine the feature maps to obtain the stroke core volume.

We should note that in many automated segmentation systems for medical imaging, a “good” segmentation system will have better Dice and 95% HD scores than what we reported in our experiments. However, our experimental setup is particularly challenging because of the difficulty of the task itself, the use of subject-level average scores (which greatly penalizes missing small strokes), and the use of manual segmentations on DWI rather than on the original CTAs. Therefore, all of our performance considerations are made by comparing relative improvements, or lack thereof, with other methods able to generate a segmentation on the same test dataset, with the same test metrics. Having said this, automatic stroke core segmentation methods competing in the ISLES challenge (Hakim et al., 2021) achieved mean Dice scores ranging from 0.23 to 0.51, and all these methods had the significant advantage of using CTP data as input, similarly to RAPID.ai.

This work also has other limitations. Firstly, the error between readers on DWI data is lower than that of DeepSymNet-v3 + WGBS. However, this is to be expected, as the acute ischemic stroke core is much clearer on DWI than on CTA, which is used as input for our model (see Fig. 2 for an example). Secondly, WGBS assumes that the stroke is one-sided, which is true in the vast majority of cases but not always. Furthermore, we did not test any method trained on DWI as a target label, either at image or voxel level. While this is likely to achieve even better performance, it is beyond the scope of this work, as we want to show that we can train clinically useful models with the much more common CTP data, leaving the scarce and precious DWI estimations only as the external test set. Finally, the test set included only subjects with LVO and not with other types of ischemic stroke. However, the LVO population is the one most likely to benefit from the estimation of the stroke core during the acute phase.

If these results are confirmed with additional data from other sites, this model could be applied in stroke centers that do not have access to CTP or DWI imaging 24/7, which represent the majority of hospitals in the US, and the majority of patients suffering from acute ischemic stroke. Therefore, we developed a web application allowing clinical and medical image computing researchers to estimate stroke core segmentations on CTA using the proposed model. It is available at https://glabapps.uth.edu/.

Finally, we believe that the overarching idea of using segmentation volumes (or 2D areas) as image-level labels from which to extract voxel/pixel estimations can be extended to other image modalities and conditions, especially when the segmentation maps used as ground truth are particularly noisy. In future work, we will extend this idea to other segmentation problems and further validate the proposed approach for acute stroke care.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgment

This work is supported by the National Institutes of Health grant R01NS121154. We would like to thank the Memorial Hermann Health System for enabling the data collection effort.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.nicl.2023.103362.

Data availability

Data will be made available on request.

References

- Abdelkhaleq, R., Kim, Y., Khose, S., Kan, P., Salazar-Marioni, S., Giancardo, L., Sheth, S.A., 2021. Automated prediction of final infarct volume in patients with large-vessel occlusion acute ischemic stroke. Neurosurg. Focus 51, E13. 10.3171/2021.4.FOCUS21134.

- Ahn, J., Kwak, S., 2018. Learning Pixel-Level Semantic Affinity With Image-Level Supervision for Weakly Supervised Semantic Segmentation. Presented at the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4981–4990.

- Albers G.W., Marks M.P., Kemp S., Christensen S., Tsai J.P., Ortega-Gutierrez S., McTaggart R.A., Torbey M.T., Kim-Tenser M., Leslie-Mazwi T., Sarraj A., Kasner S.E., Ansari S.A., Yeatts S.D., Hamilton S., Mlynash M., Heit J.J., Zaharchuk G., Kim S., Carrozzella J., Palesch Y.Y., Demchuk A.M., Bammer R., Lavori P.W., Broderick J.P., Lansberg M.G. DEFUSE 3 Investigators, 2018. Thrombectomy for stroke at 6 to 16 hours with selection by perfusion imaging. N. Engl. J. Med. 2018;378:708–718. doi: 10.1056/NEJMoa1713973.

- Barber P.A., Demchuk A.M., Zhang J., Buchan A.M. Validity and reliability of a quantitative computed tomography score in predicting outcome of hyperacute stroke before thrombolytic therapy. ASPECTS Study Group. Alberta Stroke Programme Early CT Score. Lancet Lond. Engl. 2000;355:1670–1674. doi: 10.1016/s0140-6736(00)02237-6.

- Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.S., 2016. Fully-Convolutional Siamese Networks for Object Tracking, in: Hua, G., Jégou, H. (Eds.), Computer Vision – ECCV 2016 Workshops, Lecture Notes in Computer Science. Springer International Publishing, Cham, pp. 850–865. 10.1007/978-3-319-48881-3_56.

- Cheplygina V., de Bruijne M., Pluim J.P.W. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Med. Image Anal. 2019;54:280–296. doi: 10.1016/j.media.2019.03.009.

- Coutts S.B., Lev M.H., Eliasziw M., Roccatagliata L., Hill M.D., Schwamm L.H., Pexman J.H.W., Koroshetz W.J., Hudon M.E., Buchan A.M., Gonzalez R.G., Demchuk A.M. ASPECTS on CTA Source Images Versus Unenhanced CT. Stroke. 2004;35:2472–2476. doi: 10.1161/01.STR.0000145330.14928.2a.

- Czap A.L., Bahr-Hosseini M., Singh N., Yamal J.-M., Nour M., Parker S., Kim Y., Restrepo L., Abdelkhaleq R., Salazar-Marioni S., Phan K., Bowry R., Rajan S.S., Grotta J.C., Saver J.L., Giancardo L., Sheth S.A. Machine Learning Automated Detection of Large Vessel Occlusion From Mobile Stroke Unit Computed Tomography Angiography. Stroke. 2022;53:1651–1656. doi: 10.1161/STROKEAHA.121.036091.

- Dang V.N., Galati F., Cortese R., Di Giacomo G., Marconetto V., Mathur P., Lekadir K., Lorenzi M., Prados F., Zuluaga M.A. Vessel-CAPTCHA: An efficient learning framework for vessel annotation and segmentation. Med. Image Anal. 2022;75 doi: 10.1016/j.media.2021.102263.

- Dogo, E.M., Afolabi, O.J., Nwulu, N.I., Twala, B., Aigbavboa, C.O., 2018. A Comparative Analysis of Gradient Descent-Based Optimization Algorithms on Convolutional Neural Networks, in: 2018 International Conference on Computational Techniques, Electronics and Mechanical Systems (CTEMS). Presented at the 2018 International Conference on Computational Techniques, Electronics and Mechanical Systems (CTEMS), pp. 92–99. 10.1109/CTEMS.2018.8769211.

- El-Hariri H., Souto Maior Neto L.A., Cimflova P., Bala F., Golan R., Sojoudi A., Duszynski C., Elebute I., Mousavi S.H., Qiu W., Menon B.K. Evaluating nnU-Net for early ischemic change segmentation on non-contrast computed tomography in patients with Acute Ischemic Stroke. Comput. Biol. Med. 2022;141 doi: 10.1016/j.compbiomed.2021.105033.

- Estrada U.-M.-L.-T., Meeks G., Salazar-Marioni S., Scalzo F., Farooqui M., Vivanco-Suarez J., Gutierrez S.O., Sheth S.A., Giancardo L. Quantification of infarct core signal using CT imaging in acute ischemic stroke. NeuroImage Clin. 2022;34 doi: 10.1016/j.nicl.2022.102998.

- Gondal, W.M., Köhler, J.M., Grzeszick, R., Fink, G.A., Hirsch, M., 2017. Weakly-supervised localization of diabetic retinopathy lesions in retinal fundus images, in: 2017 IEEE International Conference on Image Processing (ICIP). Presented at the 2017 IEEE International Conference on Image Processing (ICIP), pp. 2069–2073. 10.1109/ICIP.2017.8296646.

- Hakim A., Christensen S., Winzeck S., Lansberg M.G., Parsons M.W., Lucas C., Robben D., Wiest R., Reyes M., Zaharchuk G. Predicting Infarct Core From Computed Tomography Perfusion in Acute Ischemia With Machine Learning: Lessons From the ISLES Challenge. Stroke. 2021;52:2328–2337. doi: 10.1161/STROKEAHA.120.030696.

- Haussen D.C., Dehkharghani S., Rangaraju S., Rebello L.C., Bouslama M., Grossberg J.A., Anderson A., Belagaje S., Frankel M., Nogueira R.G. Automated CT Perfusion Ischemic Core Volume and Noncontrast CT ASPECTS (Alberta Stroke Program Early CT Score): Correlation and Clinical Outcome Prediction in Large Vessel Stroke. Stroke. 2016;47:2318–2322. doi: 10.1161/STROKEAHA.116.014117.

- He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition. Arxiv. Org. 2015;7:171–180. doi: 10.3389/fpsyg.2013.00124.

- Hokkinen L., Mäkelä T., Savolainen S., Kangasniemi M. Evaluation of a CTA-based convolutional neural network for infarct volume prediction in anterior cerebral circulation ischaemic stroke. Eur. Radiol. Exp. 2021;5:25. doi: 10.1186/s41747-021-00225-1.

- Hoving J.W., Marquering H.A., Majoie C.B.L.M., Yassi N., Sharma G., Liebeskind D.S., van der Lugt A., Roos Y.B., van Zwam W., van Oostenbrugge R.J., Goyal M., Saver J.L., Jovin T.G., Albers G.W., Davalos A., Hill M.D., Demchuk A.M., Bracard S., Guillemin F., Muir K.W., White P., Mitchell P.J., Donnan G.A., Davis S.M., Campbell B.C.V. Volumetric and Spatial Accuracy of Computed Tomography Perfusion Estimated Ischemic Core Volume in Patients With Acute Ischemic Stroke. Stroke. 2018;49:2368–2375. doi: 10.1161/STROKEAHA.118.020846.

- Huang, Z., Wang, X., Wang, Jiasi, Liu, W., Wang, Jingdong, 2018. Weakly-Supervised Semantic Segmentation Network with Deep Seeded Region Growing, in: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Presented at the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7014–7023. 10.1109/CVPR.2018.00733.

- Isensee F., Jaeger P.F., Kohl S.A.A., Petersen J., Maier-Hein K.H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods. 2021;18:203–211. doi: 10.1038/s41592-020-01008-z.

- Jia Z., Huang X., Chang E.-I.-C., Xu Y. Constrained deep weak supervision for histopathology image segmentation. IEEE Trans. Med. Imaging. 2017;36:2376–2388. doi: 10.1109/TMI.2017.2724070.

- Kandemir M., Hamprecht F.A. Computer-aided diagnosis from weak supervision: A benchmarking study. Comput. Med. Imaging Graph Breakthrough Technol. Digital Pathol. 2015;42:44–50. doi: 10.1016/j.compmedimag.2014.11.010.

- Kikinis R., Pieper S.D., Vosburgh K.G. In: Intraoperative Imaging and Image-Guided Therapy. Jolesz F.A., editor. Springer; New York, NY: 2014. 3D slicer: A platform for subject-specific image analysis, visualization, and clinical support; pp. 277–289.

- Kim Y., Lee S., Abdelkhaleq R., Lopez-Rivera V., Navi B., Kamel H., Savitz S.I., Czap A.L., Grotta J.C., McCullough L.D., Krause T.M., Giancardo L., Vahidy F.S., Sheth S.A. Utilization and availability of advanced imaging in patients with acute ischemic stroke. Circ. Cardiovasc. Qual. Outcomes. 2021;14:e006989. doi: 10.1161/CIRCOUTCOMES.120.006989.

- Konstas A.A., Goldmakher G.V., Lee T.-Y., Lev M.H. Theoretic basis and technical implementations of CT perfusion in acute ischemic stroke, Part 2: technical implementations. Am. J. Neuroradiol. 2009;30:885–892. doi: 10.3174/ajnr.A1492.

- Kraus, O.Z., Ba, J.L., Frey, B.J., 2016. Classifying and segmenting microscopy images with deep multiple instance learning. Bioinformatics 32, i52–i59. 10.1093/bioinformatics/btw252.

- Kuang H., Menon B.K., Sohn S.I., Qiu W. EIS-Net: Segmenting Early Infarct and scoring ASPECTS Simultaneously on Non-contrast CT of Patients with Acute Ischemic Stroke. Med. Image Anal. 2021;101984 doi: 10.1016/j.media.2021.101984.

- Lerousseau M., Vakalopoulou M., Classe M., Adam J., Battistella E., Carré A., Estienne T., Henry T., Deutsch E., Paragios N. In: Medical Image Computing and Computer Assisted Intervention – MICCAI 2020, Lecture Notes in Computer Science. Martel A.L., Abolmaesumi P., Stoyanov D., Mateus D., Zuluaga M.A., Zhou S.K., Racoceanu D., Joskowicz L., editors. Springer International Publishing; Cham: 2020. Weakly supervised multiple instance learning histopathological tumor segmentation; pp. 470–479. 10.1007/978-3-030-59722-1_45.

- Li, Z., Wang, C., Han, M., Xue, Y., Wei, W., Li, L.-J., Fei-Fei, L., 2018. Thoracic Disease Identification and Localization With Limited Supervision. Presented at the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8290–8299.

- Lu M.Y., Williamson D.F.K., Chen T.Y., Chen R.J., Barbieri M., Mahmood F. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat. Biomed. Eng. 2021;5:555–570. doi: 10.1038/s41551-020-00682-w.

- Mäkelä T., Öman O., Hokkinen L., Wilppu U., Salli E., Savolainen S., Kangasniemi M. Automatic CT angiography lesion segmentation compared to CT perfusion in ischemic stroke detection: a feasibility study. J. Digit. Imaging. 2022 doi: 10.1007/s10278-022-00611-0.

- Mangla R., Ekhom S., Jahromi B.S., Almast J., Mangla M., Westesson P.-L. CT perfusion in acute stroke: Know the mimics, potential pitfalls, artifacts, and technical errors. Emerg. Radiol. 2014;21:49–65. doi: 10.1007/s10140-013-1125-9.

- Nogueira R.G., Jadhav A.P., Haussen D.C., Bonafe A., Budzik R.F., Bhuva P., Yavagal D.R., Ribo M., Cognard C., Hanel R.A., Sila C.A., Hassan A.E., Millan M., Levy E.I., Mitchell P., Chen M., English J.D., Shah Q.A., Silver F.L., Pereira V.M., Mehta B.P., Baxter B.W., Abraham M.G., Cardona P., Veznedaroglu E., Hellinger F.R., Feng L., Kirmani J.F., Lopes D.K., Jankowitz B.T., Frankel M.R., Costalat V., Vora N.A., Yoo A.J., Malik A.M., Furlan A.J., Rubiera M., Aghaebrahim A., Olivot J.-M., Tekle W.G., Shields R., Graves T., Lewis R.J., Smith W.S., Liebeskind D.S., Saver J.L., Jovin T.G., Trial Investigators D.A.W.N. Thrombectomy 6 to 24 Hours after Stroke with a Mismatch between Deficit and Infarct. N. Engl. J. Med. 2018;378:11–21. doi: 10.1056/NEJMoa1706442.

- Pinheiro, P.O., Collobert, R., 2015. From Image-Level to Pixel-Level Labeling With Convolutional Networks. Presented at the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1713–1721.

- Qiu W., Kuang H., Teleg E., Ospel J.M., Sohn S.I., Almekhlafi M., Goyal M., Hill M.D., Demchuk A.M., Menon B.K. Machine learning for detecting early infarction in acute stroke with non–contrast-enhanced CT. Radiology. 2020;294:638–644. doi: 10.1148/radiol.2020191193.

- Quellec G., Cazuguel G., Cochener B., Lamard M. Multiple-instance learning for medical image and video analysis. IEEE Rev. Biomed. Eng. 2017;10:213–234. doi: 10.1109/RBME.2017.2651164.

- Quellec G., Charrière K., Boudi Y., Cochener B., Lamard M. Deep image mining for diabetic retinopathy screening. Med. Image Anal. 2017;39:178–193. doi: 10.1016/j.media.2017.04.012.

- Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D., 2017. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization, in: 2017 IEEE International Conference on Computer Vision (ICCV). Presented at the 2017 IEEE International Conference on Computer Vision (ICCV), pp. 618–626. 10.1109/ICCV.2017.74.

- Sheth S.A., Lopez-Rivera V., Barman A., Grotta J.C., Yoo A.J., Lee S., Inam M.E., Savitz S.I., Giancardo L. Machine Learning-Enabled Automated Determination of Acute Ischemic Core From Computed Tomography Angiography. Stroke. 2019;50:3093–3100. doi: 10.1161/STROKEAHA.119.026189.

- Shin S.Y., Lee S., Yun I.D., Kim S.M., Lee K.M. Joint Weakly and Semi-Supervised Deep Learning for Localization and Classification of Masses in Breast Ultrasound Images. IEEE Trans. Med. Imaging. 2019;38:762–774. doi: 10.1109/TMI.2018.2872031.

- Simonyan, K., Zisserman, A., 2015. Very Deep Convolutional Networks for Large-Scale Image Recognition. 10.48550/arXiv.1409.1556.

- Szegedy, C., Ioffe, S., Vanhoucke, V., Alemi, A., 2016. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. ArXiv160207261 Cs.

- Taha A.A., Hanbury A. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Med. Imaging. 2015;15 doi: 10.1186/s12880-015-0068-x.

- Tan, M., Le, Q.V., 2020. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. ArXiv190511946 Cs Stat.

- Tang P., Wang X., Bai S., Shen W., Bai X., Liu W., Yuille A. PCL: proposal cluster learning for weakly supervised object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020;42:176–191. doi: 10.1109/TPAMI.2018.2876304.

- Xu, G., Song, Z., Sun, Z., Ku, C., Yang, Z., Liu, C., Wang, S., Ma, J., Xu, W., 2019. CAMEL: A Weakly Supervised Learning Framework for Histopathology Image Segmentation. Presented at the Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 10682–10691.

- Xu Y., Zhu J.-Y., Chang E.-I.-C., Lai M., Tu Z. Weakly supervised histopathology cancer image segmentation and classification. Med. Image Anal. 2014;18:591–604. doi: 10.1016/j.media.2014.01.010.

- Zhang, H., Meng, Y., Zhao, Y., Qiao, Y., Yang, X., Coupland, S.E., Zheng, Y., 2022. DTFD-MIL: Double-Tier Feature Distillation Multiple Instance Learning for Histopathology Whole Slide Image Classification. Presented at the Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 18802–18812.

- Zhao R., Liao W., Zou B., Chen Z., Li S. Weakly-supervised simultaneous evidence identification and segmentation for automated glaucoma diagnosis. Proc. AAAI Conf. Artif. Intell. 2019;33:809–816. doi: 10.1609/aaai.v33i01.3301809.

- Zhou, Z.-H., Sun, Y.-Y., Li, Y.-F., 2009. Multi-instance learning by treating instances as non-I.I.D. samples, in: Proceedings of the 26th Annual International Conference on Machine Learning, ICML ’09. Association for Computing Machinery, New York, NY, USA, pp. 1249–1256. 10.1145/1553374.1553534.
