Abstract

The scientific reform movement has proposed openness as a potential remedy to the putative reproducibility or replication crisis. However, the conceptual relationship among openness, replication experiments and results reproducibility has been obscure. We analyse the logical structure of experiments, define the mathematical notion of idealized experiment and use this notion to advance a theory of reproducibility. Idealized experiments clearly delineate the concepts of replication and results reproducibility, and capture key differences with precision, allowing us to study the relationship among them. We show how results reproducibility varies as a function of the elements of an idealized experiment, the true data-generating mechanism, and the closeness of the replication experiment to an original experiment. We clarify how openness of experiments is related to designing informative replication experiments and to obtaining reproducible results. With formal backing and evidence, we argue that the current ‘crisis’ reflects inadequate attention to a theoretical understanding of results reproducibility.

Keywords: reproducibility, replication, open science, metascience, experiment, statistical theory

1. Introduction

In a number of scientific fields, replication and reproducibility crisis labels have been used to refer to instances where many results have failed to be corroborated by a sequence of scientific experiments. This state of affairs has led to a scientific reform movement. However, this labelling is ambiguous between a crisis of practice and a crisis of conceptual understanding. Insufficient attention has been given to the latter, which we believe is a detriment to moving forward to conduct science better. In this article, we make theoretical progress towards understanding replications and reproducibility of results (henceforth, ‘results reproducibility’)1 by a formal examination of the logical structure of experiments.2

We view replication and reproducibility as methodological subjects of metascience. As we have emphasized elsewhere [10], these methodological subjects need a formal approach to properly study them. Therefore, our work here is necessarily mathematical; however, we make our conclusions relatable to the broader scientific community by pursuing a narrative form in explaining our framework and results within the main text. Mathematical arguments are presented in the appendices. Our objective is to build a strong, internally consistent, verifiable theoretical foundation to understand and to develop a precise language to talk about replication experiments and results reproducibility as well as to use this framework to study how openness in and of experiments is related to either of them. We advance mathematical arguments from first principles and proofs, using probability theory, mathematical statistics, statistical thought experiments and computer simulations. We ask the reader to evaluate our work within its intended scope of providing theoretical precision and nuanced arguments.

The following backdrop to motivate our research matters: a common concern voiced in the scientific reform literature and recent scholarly discourse regards various forms of scientific malpractice as potential culprits of reproducibility failures, and openness is sometimes touted as a remedy to alleviate such malpractices [11–15]. Some malpractice is believed to take place at the level of the scientist. For example, hypothesizing after the results are known involves presenting a post hoc hypothesis as if it were an a priori hypothesis, conditional on observing the data [16,17]. Another example is p-hacking, a statistically invalid form of performing inference to find statistically significant results [17–19]. Some is believed to operate at the community or institution level. For example, publication bias involves omitting studies with statistically non-significant results from publications and is primarily attributed to flawed incentive structures in scientific publishing [1,17]. Transparency in scientific practice in general and tools to promote openness in experimental reporting (such as preregistration, registered reports, and open laboratory books) in particular are often highlighted as potential remedies to curb such malpractice. Before we suspect malpractice of either kind and set out to correct the scientific record or demand reparations, however, it behoves the scientific community to gain a complete understanding of the factors that may account for a given set of results in a sequence of replication experiments. This way we can hope to understand what aspects of experiments need to be openly communicated and to what end.

If a result of an experiment is not reproduced by a replication experiment, before we reject it as a false positive or suspect some form of malpractice, we need to assess and account for: (i) sampling error, (ii) theoretical constraints on the reproducibility rate of the result of interest, conditional on the elements of the original experiment, and (iii) assumptions from the original experiment that were not carried over to the replication experiment. First of these is a well-known and widely understood statistical fact that describes why methodologically we can at best guarantee reproducibility of a result on average (i.e. in expectation). The second point about the theoretical limits of the reproducibility rate is not well understood, and we hope to address this oversight in this article. The last one has been brought up in individual cases but typically in an ad hoc manner, and we aim to provide a systematic approach for comprehensive evaluations of replication experiments. Since metascientific heuristics may lead us astray in these assessments, we need a fine-grained conceptual understanding of how experiments operate and relate to each other, and what role openness plays in facilitating replications or promoting reproducible results. Indeed a replication crisis and a reproducibility crisis are different things and should be understood on their own. We distinguish between replication experiments and results reproducibility, discuss precursors of each, and assess how openness of experiments relates to each separately.

In this article, we argue that ‘failed’ replications do not necessarily signify failures of scientific practice.3 Rather, they are expected to occur at varying rates due to the features of and differences in the elements of the logical structure of experiments. By using a mathematical characterization of this structure, we provide precise definitions of and clear delineation between replication, reproducibility and openness. Then, by using toy examples, simulations and cases from the scientific literature, we illustrate how our characterization of experiments can help identify what makes for replication experiments that can, in theory, reproduce a given result and what determines the extent to which experimental results are reproducible. In the next section, we define main notions that we use to build a logical structure of experiments which help us derive our theoretical results.

2. The logical structure of experiments

2.1. Definitions

The idealized experiment is a probability experiment: a trial with uncertain outcome on a well-defined set. A scientific experiment where inference is desired under uncertainty can be represented as an idealized experiment. The results from an experiment can be defended as valid only if the assumptions of the probability experiment hold. One useful set-up for us is as follows: given some background knowledge K (see table 1 for reference to all notation and terms introduced in this section) on a natural phenomenon, a scientific theory makes a prediction, which is in principle testable using observables, the data D. A mechanism generating D is formulated under uncertainty and is represented as a probability model MA under assumptions A. Given D, inference is desired on some unknown part of MA. The extent to which parts of MA that are relevant to the inference are confirmed by D is assessed by a fixed and known collection of methods S evaluated at D (similar descriptions for other purposes can be found in [10,21]).

Table 1.

Quick reference guide to notation and key terms.

| symbol | name | description | formal definition/result | |

|---|---|---|---|---|

| ξ | idealized experiment | scientific experiment represented as a probability experiment | definition 2.1 | |

| ξ′ | replication experiment | idealized experiment aiming to reproduce R from another experiment by generating D′ independent from D | appendix B, definition 2.2, result 2.9 | |

| K | background knowledge | state of scientific knowledge on the phenomenon of interest used to conceptualize, design and perform the experiment | appendix C, remark 4.1 | |

| MA | assumed model | assumed mechanism generating the data | appendix D, result 4.2 | |

| A | population assumptions | population characteristics independent from sampling design, such as finiteness and continuity | ||

| M | model specification | model properties that depend on researcher assumptions, such as sampling scheme | ||

| S | method | fixed and known set of methods for collecting and analysing data | ||

| Spre | pre-data methods | scientific assumptions made before collecting data and procedures implemented to obtain D | result 4.3 | |

| Spost | statistical methods | statistical procedures applied on D | appendix E, result 4.4 | |

| D | data | application of Spre to sample the population assuming MA | ||

| Ds | data structure | structural aspects of the data such as sample size and number and type of variables | appendix F result 4.5 | |

| Dv | data values | observed values that signify a fixed realization of the data | result 4.6 | |

| R | result | decision rule which maps the application of Spost to D onto the decision space such as choice of a model over others, a parameter estimate, or rejection of a null hypothesis | definition 2.3, 2.4 | |

| ϕ | true reproducibility rate | limiting frequency of reproduced results in a sequence of replication experiments | appendix A, appendix G, definition 2.5, | |

| ϕN | estimated reproducibility rate | sample frequency of reproduced results in a sequence of N replication experiments | result 2.7, result 5.1, remark 2.8 | |

| π-Open | openness | which elements of ξ are available to ξ′ | definition 2.6 |

Definition 2.1. —

The tuple ξ := (K, MA, S, D) is an idealized experiment.

Definition 2.1 of ξ captures some key distinct elements of experiments whose population characteristics can in principle be tested. These elements are not necessarily independent of each other. For example, K may inform and constrain the sets of plausible MA and S. Or it may be necessary for MA to constrain S.

MA includes the sampling design when sampling a population conforming A, which we assume to be independent of sampling design. For example, A may be the description of an infinite population of interest, which may be sampled in a variety of ways to yield distinct probability models MA for the data depending on the sampling scheme.

We distinguish two elements of S: Spre and Spost. Spre is the scientific methodological assumptions made before data collection and procedures implemented to obtain D. Spre captures assumptions in designing and executing an experiment such as experimental paradigms, study procedures, instruments and manipulations. Conditional on K and MA, Spre is reliable if the random variability in D is due only to sampling variability modelled by MA. Spost is the statistical methods applied on D. If inferential, Spost is reliable if it is statistically consistent. S is reliable if and only if Spre and Spost are reliable.

We also distinguish two elements of D: Ds and Dv. Ds is the structural aspects of the data, such as the sample size, number of variables, units of measurement for each variable, and metadata. Dv is the observed values, that is, a realization conforming Ds. Some statistical approaches to assess risk and loss focus on the reproducibility conditional on Dv, whereas others focus on averages over independent realizations of Dv.

Definition 2.1 of ξ allows us to scaffold other definitions as follows. An exact replication experiment ξ′ must generate D′ independent of D conditional on MA in the values but with the same structure Ds.

Definition 2.2. —

The tuple is an exact replication experiment of ξ if and is a random sample independent of Dv. If at least one of differs from (MA, S, Ds) or then ξ′ can at most be a non-exact replication experiment of ξ.

Definition 2.2 mathematically isolates ξ and ξ′ from R, the result of interest as formally defined in definition 2.3. That is, ξ′ does not need to have a specific aim to be performed or worked with as a mathematical object. The benefits of this isolation will become clear in §3, where an unconditional ξ and its non-exact ξ′ pair may become a ξ and its exact ξ′ pair, conditional on R.

Often, however, we would perform experiments with a specific aim and would like to see whether the result of ξ is reproduced in ξ′. Depending on the desired mode of statistical inference, example aims include hypothesis testing, point or interval estimation, model selection or prediction of an observable. Further, when augmented with an R, K′ must differ from K in a specific way. Encompassing all these statistical modes of inference, we introduce the notion of a result R, as a decision rule. For convenience, we assume that R lives on a discrete space here.

Definition 2.3. —

Let be the sample space and be the decision space. For sample size , the function is a result.

R is obtained by mapping the application of Spost on D on to the decision space. If ξ′ is aimed at reproducing R of ξ, it is conditional on R and leads us to the following connection between an idealized experiment and a result.

Definition 2.4. —

Let R and R′ be results from ξ and ξ′, respectively. R = ro is reproduced by R′ = rd if d = o, else R = ro is not reproduced.

In definition 2.4, reproducibility of R depends on the available actions r1, r2, …, rq. The size of q is case specific. Examples are as follows. In a null hypothesis significance test, q = 2: the null hypothesis and the alternative hypothesis. In a model selection problem, we entertain q models and choose one as the best model generating the data. In a parameter estimation problem for a continuous parameter, we build q arbitrary bins, and call a result reproduced if the estimate from ξ′ falls in the same bin as the result from ξ. How the bins are constructed in a problem affects the actual reproducibility rate of a result. However, for our purposes in this article, theoretical results hold for all cases regardless of this tangential issue.

The class of problems of interest to us here involves cases where, in a sequence of exact replication experiments, if S is reliable, we should expect a regularity in the results. That is, probability theory tells us that if the elements of an idealized experiment are well defined, then we should expect the results from a sequence of replication experiments to stabilize at a certain proportion, given the characteristics of an idealized experiment and the true data-generating mechanism. This notion is formalized in definition 2.5.

Definition 2.5. —

Let ξ(1), ξ(2), …, ξ(N) be a sequence of idealized experiments. The reproducibility rate

of a result, R = ro is a parameter of the sequence (I{C} = 1 if C, and 0 otherwise).

An advantage of definition 2.5 is that conditional on R = ro in ξ and a sequence of replication experiments ξ(1), ξ(2), …, ξ(N), the relative frequency of reproduced results ϕN converges to ϕ ∈ [0, 1] as N → ∞. So, we immediately have as a natural estimator of ϕ. Further, we are formally comforted to know that . That is, with high probability, the estimated reproducibility rate ϕN from a sequence of replication experiments will get closer to the true reproducibility rate of the original experiment ϕ.

Finally, we turn to the last of our key concepts: openness. Openness refers to the accessibility of all necessary information regarding the elements of ξ by another idealized experiment ξ*. This accessibility may be used for a variety of purposes. For example, Spost can be re-applied to D to verify R independently of ξ. In this capacity, openness facilitates the auditing of experimental results by way of screening off certain errors, including human and instrumental (e.g. data entry and programming errors), that may be introduced in the process of obtaining R initially. On the other hand, openness may be needed to perform an exact ξ′ by way of duplicating Spre to obtain D′ and Spost to obtain R′. In this capacity, openness makes exact ξ′ possible.

Openness is critically related to reproducibility since the degree to which information is transferred from ξ to ξ′ impacts the ϕ of a given result. However, not all elements of ξ need to be open for all purposes. Therefore, a nuanced understanding of openness requires evaluating it at a fixed configuration of the elements of ξ conditional on a specific purpose, rather than as a categorical judgement at the level of the whole experiment, as open or not. This leads us to think of openness element-wise, as in definition 2.6.

Definition 2.6. —

Let be the power set of elements of ξ and . ξ is π-Open for ξ* if π ⊂ K*, where ξ* is an idealized experiment that imports information from ξ.

A specific example of π-Open of definition 2.6 would be π ≡ (MA, Spre), where ξ* gets all the information about the assumptions, model and pre-data methods from ξ but no other information. Another example of π-Open is the special case where ξ has all its elements open, such that π ≡ (K, MA, S, D). In this case, for convenience, we say ξ is ξ-Open for ξ*.

2.2. Fundamental results on replications and reproducibility rate from first principles

Here, we present two results about reproducibility and some remarks, based on definitions 2.1–2.6. A well-formed theory of reproducibility requires results of these types: fundamental, mathematical and invoking a functional framework to study replications and reproducibility. They serve as theoretical benchmarks to check other results against. Technically oriented readers may refer to appendices A and B for a more detailed discussion and results complementary to the main argument.

We begin by noting that, given definition 2.5 and the discussion following it, it is not straightforward to say exactly what we gain if we were to update the estimated reproducibility rate based on the results obtained from performing more replications. Indeed, to understand the value of replication experiments in assessing the reproducibility of a result, a strong mathematical statement is required, which is our result 2.7.

Result 2.7. —

Let ξ(1), ξ(2), …, ξ(N) be a sequence of replication experiments with reproducibility rate ϕ given by definition 2.5. Then,

2.1 where ϕN is the sample reproducibility rate of result R = ro obtained from the sequence (proof in appendix A).

Result 2.1 is fundamental to study replications and reproducibility for a number of reasons:

-

1.

It provides a basis for building trust in the notion of reproducibility from replication experiments. Roughly, it says that if we perform replication experiments and estimate the reproducibility rate of ro by ϕN from these experiments, then we are guaranteed that deviations of ϕN from ϕ are going to get small and stay small.

-

2.

It is almost necessary to move forward theoretically. It immediately implies that if the assumptions of an original experiment are satisfied in its replication experiments, then we are adopting a statistically defensible strategy by continuing to perform replication experiments and updating ϕN as a proportion of successes to assess the reproducibility rate. Therefore, result 2.7 gives us a theoretical justification of why we should care about performing more replication experiments whose assumptions are satisfied and be interested in estimating the reproducibility rate based on those replication experiments alone. Further, violating the assumptions of ξ in replication experiments implies that ϕN converges to some ϕ defined by the flaws underlying a non-exact sequence of replications of ξ rather than the reproducibility rate of ro of interest.

-

3.

As we will detail in result 2.9, a theoretically fertile way to study replication experiments is by defining a sequence of experiments as a stochastic process. The results from such processes almost always require the solid foundation provided by result 2.7.

Remark 2.8. —

The reproducibility rate given in definition 2.5 has excellent properties as shown by result 2.7. However, we keep in mind that definition 2.5 is only one way to measure reproducibility. It is a counting measure which counts the reproduced results. Instead, a continuous measure as a degree of confirmation of a result might seem more proper to measure reproducibility. One has to be aware that just defining a reproducibility measure does not imply that it has desirable mathematical properties. It is easy to define meaningful continuous measures of reproducibility which might have pathological properties (e.g. that do not satisfy result 2.7), and these should be avoided (see appendix A for details).

In practice, Spost are functions of sample moments, such as the sample mean. In these cases, sometimes the Lindeberg–Lévy central limit theorem (CLT) and its extensions provide useful results about the properties of ξ(1), ξ(2), …. However, restricting Spost this way constrains the mathematical setting to study the statistical properties of ξ(1), ξ(2), … or results reproducibility. For example, working with the CLT is challenging when Spost cannot be formulated as a function of a fixed sample size or to discuss the properties of a sequence of replication experiments directly, without referring to Spost as a means to estimate a particular R.

We provide a broad setting without these limitations by assuming that K requires only minimal validity conditions on MA and S. Specifically, we let MA be any probability model, subject only to some mathematical regularity conditions such as continuity of distribution functions, the existence of the mean and the variance of the variable of interest. We also let Spost be the sample distribution function.4 With the generality provided by these assumptions, we obtain one of our main theoretical results.

Result 2.9. —

The sequence of idealized experiments ξ(1), ξ(2), … given by definition 2.5 is a proper stochastic process, seen as a joint function of random sample D and of each value in the support of data-generating mechanism, (see constructive proof in appendix B).

Result 2.9 is of fundamental importance to study results reproducibility mathematically because it allows us to apply the well-developed theory of stochastic processes to build a theory of results reproducibility. Two aspects of result 2.9 are noteworthy:

-

1.

When we obtain a random sample in ξ and perform inference using a fixed value of a statistic such as a threshold, the sequence ξ(1), ξ(2), … constitutes random variables independent of each other conditional on the true model generating the data. Obtaining the distributions implied by ξ helps us understand the statistical nature of replication experiments.

-

2.

ξ′ generates new data D′, and R′ is conditional on D′. That is, when inference is performed for a particular replication experiment, the data are fixed. Most generally, conditional on D′ if the empirical distribution function is R′, then the replication experiment estimates the model generating the data. Therefore, a replication experiment determines a sample-based estimate of a statistical model.

In the next section, we introduce a toy example as a running case study to instantiate our theoretical results on replications, reproducibility and openness.

3. A toy example

Our toy example involves an inference problem regarding a population of ravens, K. An infinite population of ravens where each raven is either black or white constitutes the population assumptions, A. Each uniformly randomly sampled raven can be identified correctly as black or white, which defines the pre-data methods, Spre. The result of interest, R, is to estimate the (unknown) population proportion of black ravens, p, or some function of it.

We consider six distinct sampling scenarios, which lead to six distinct MA, and thus six distinct idealized experiments. To avoid overly complicated mathematical notation, we denote the models by ξbin, ξnegbin, ξhyper, ξpoi, ξexp and ξnor. These models represent the binomial, negative binomial, hypergeometric, Poisson, exponential and normal probability distributions for the data-generating mechanism, respectively. In specific examples, we also vary Spost, the point estimator of the parameter of interest to take values as maximum likelihood estimate (MLE), method of moments estimate (MME) and posterior mode (i.e. Bayesian inference). We further vary Ds via the sample size (i.e. n ∈ {10, 30, 100, 200}). We use these idealized experiments to illustrate our results in the rest of the article.

These six idealized experiments make the following sampling assumptions. ξbin stops when n ravens are sampled. ξnegbin stops when w white ravens are sampled. ξhyper is a special case where the sampling has access only to a finite subset of the infinite population delineated by A. ξbin, ξnegbin and ξhyper are often called exact models, in the sense that their MA does not involve any limiting or approximating assumptions. On the other hand, ξpoi approximates ξbin, where a large sample of n ravens is sampled when the proportion of black ravens p is small. The larger the n and the smaller the p such that np remains constant, the better the approximation. ξexp has the same approximative characteristics and parameter as ξpoi. However, notably, ξexp records the time between observations instead of counting the ravens, so its Spre is different from all other experiments. Finally, ξnor approximates ξbin where a large sample of n ravens with intermediate proportion of black ravens, p, holds.

As the result of interest, R, these six idealized experiments aim to estimate either the proportion of black ravens, p, in the population or the rate of black ravens sampled, np → λ, a function of p, in the approximative models. Figure 1 shows distinctive elements of these six idealized experiments.

Figure 1.

Six idealized experiments ξbin, ξnegbin, ξhyper, ξpoi, ξexp, ξnor: The binomial, negative binomial, hypergeometric, Poisson approximation to binomial, exponential waiting times between Poisson events and normal approximation to binomial, respectively. All but ξhyper assume infinite population (A) of black and white ravens, with sampling designs resulting in distinct probability models (MA). ξhyper assumes sampling from a finite subset of the population. All experiments aim at performing inference on result (R), which reduces down to an estimate of either the population proportion of black ravens or the mean number of black ravens in the population.

In §4, we use these six idealized experiments to show that openness connects to reproducibility in a variety of ways and to reproduce a given result, and replication experiments do not need to be exact. We show that conditional on a given result from an original experiment, non-exact replication experiments can serve as valid exact replication experiments, if the inferential equivalence holds between the original and the replication. We further show that the true rate of reproducibility of a sequence of exact replication experiments and a sequence of non-exact replication experiments are distinct (except trivially) for a given result.

4. Element-wise openness and assessing the meaning of replications

Tools and procedures have been developed to help facilitate openness in science [11,14,17,22]. Guidelines may argue for making as much information available as possible about an experiment or leave it to intuition to guide which elements of an experiment are relevant and need to be shared for replication. We are interested in better understanding what does and does not need to be made available, in service of which objective, and under what conditions. We perceive two main issues: what openness means for performing meaningful replications and how it impacts results reproducibility. We first evaluate the former. Then we show that a uniform, wholesale framing of openness is not the remedy to the reproducibility crisis that some take it to be.

ξ has elements involving uncertainty, such as Dv taken as a random variable. Uncertainty modelled by probability is always conditional on the available background information [23], and thus, reproducibility of R is always conditional on K. That is, ξ′ must import sufficient information from ξ with respect to R of interest to assess whether R is reproduced in R′. A ξ′ that aims to reproduce a given result from ξ may be performed in a variety of ways depending on which elements of ξ are open.

In the context of our toy example, figure 2 shows a network structure of some possible ξ as a function of which elements of ξ are open. Specifically, we consider variations of the six experiments introduced in §3 for two Spost (MLE and posterior mode) and two Ds (n = 30 and n = 200) yielding 24 distinct ξ, each denoted by a node in each network in figure 2. Given 1 of these 24 as ξ, all possible 24 experiments are either exact or non-exact ξ′. We use definition 2.6 and specify π to assess the degree of openness in these experiments. When ξ is ξ-Open, the probability of exact replication is 1, and every node of the network is only connected to itself. If ξ is π-Open, where π is a proper subset of ξ, then ξ′ may be a non-exact replication of ξ in various ways because ξ′ needs to substitute in a value for elements that are not in π. Therefore, the probability of ξ′ being an exact replication of ξ is lower than when ξ is ξ-Open. In figure 2, we show the network structures that result from choosing non-open elements with equal probability among all substitutions considered for each element. The network complexity depends on the size of π. If it is large, the number of connections among the nodes in the network is small, and each connection is strong (e.g. strongest when all open). In contrast, if it is small, the number of connections among the nodes in the network is large because there are both multiple substitutions to be made and multiple possibilities for each, and each connection is weak (e.g. weakest when MA, Spost, Ds not open in figure 2). Hence, as the size of π decreases, it becomes less probable to perform an exact replication of ξ. By looking at which elements of ξ are open to start with, we can assess how the sequence ξ(1), ξ(2), … of replication experiments can be misinterpreted if the necessary elements were not open and/or got lost in translation. In the rest of this section, we organize our results by elements K, MA, S, D.

Figure 2.

For the models in the toy example, degrees of openness (as given by definition 2.6) are depicted in eight networks, each consisting of the same 24 idealized experiments. Each idealized experiment is represented by a node in each network. These 24 experiments are obtained by a 6 × 2 × 2 factorial design. The first factor, MA, takes six values: binomial, negative binomial, hypergeometric, Poisson, exponential and normal. The second factor, Spost, takes two values: MLE and posterior mode. The third factor, Ds, takes two values: n = 30 and n = 200. Connections between nodes represent potential substitutions of non-open elements of idealized experiments. As more elements of an idealized experiment are non-open, the probability of choosing an exact replication decreases, as indicated by increased connectivity in the network.

4.1. Background knowledge, K

Providing an exact description of what goes into K is notoriously difficult. K, which is more of a philosophical element of ξ, typically carries over much more than what can be immediately gleaned over by a transparent and complete description of MA, S and D. We understand K to contain theoretical assumptions, contextual knowledge, paradigmatic principles, a specific language and presuppositions inherent in a given field; in short, a lot of inherited cultural and historical meaning of the kind Feyerabend refers to as natural interpretations in the Against Method ([24], p. 49). As Feyerabend explains, such natural interpretations are not easy to make explicit or even sometimes be aware of and thus, being open about them might not be a matter of choice. However, observations gain meaning only against this backdrop, and experiments can only be interpreted correctly by using the same language used to design them in the first place. Within ξ, this tends to happen implicitly, whereas when performing ξ′, there is no guarantee that all the relevant information in K will carry over to K′.

By using the binomial experiment in our toy example, we can illustrate why K is an integral part of ξ and what role it plays for ξ′. In ξbin, our aim (R) is to estimate the proportion of black ravens (p) in an infinite population of ravens (A). MA samples n ravens. In the case of our Spre, we count black and white ravens by naked eye. In the case of our Spost, we use the maximum likelihood estimator of p. We set n = 100, which constitutes our Ds. This description of ξbin based on a specific configuration of MA, Spre, Spost, Ds could just as well be used to define an experiment in which scientists are interested in estimating the proportion of black swans in a population of black and white swans. While ξbin would still be mathematically well defined, its scientific content and context are not captured by any of these four elements. For that, we need K. Without K, we would have to consider an about black swans as an acceptable replication of ξbin about black ravens, based on the mathematical structure alone. K, then, communicates scientific meaning across experiments.

As a more practical example of the import of K, we consider a recent ‘failed’ replication experiment. Murre [25] attempted to replicate a classical experiment by Godden and Baddeley [26] on context-dependent memory. Context-dependent memory refers to the hypothesis that the higher the match between the context in which a memory is being retrieved and the context in which the memory was originally encoded, the more successful the recall is expected to be. In the abstract, Murre [25] summarizes the results of the replication experiment as follows: ‘Contrary to the original experiment, we did not find that recall in the same context where the words had been learned was better than recall in the other context.’ Does this suggest that the results of the original experiment were a false positive—as replication failures are commonly interpreted? There are many reasons to not jump to that conclusion including sampling error and the fact that the context of the replication was different from that of the original [26] experiment. Specifically, unlike the original, the replication was being filmed as part of a TV programme. We will set these obvious concerns aside for a moment to focus on another. Ira Hyman explains the issue in a Twitter thread [27]. Hyman indicates that the phenomenon of context-dependent memory is conditional on the distinctiveness of the encoding context. That is, if distinct contexts are used over multiple trials, the chances that the context will be remembered with the encoded information increases. When the context is not distinctive enough or remains constant over trials, the effect disappears. Another known boundary condition for the phenomenon is the outcome variable: past research has shown that this works for retrieval tasks (e.g. free recall) and not recognition. The Murre [25] replication did not carry over these contextual details and changed the design in a way to not instigate context-dependent memory. As a result, the differences between R and R′ become impossible to attribute to a single cause and fail to provide evidence that can confirm or refute the results of the original Godden and Baddeley [26] experiment. It is even questionable whether the Murre [25] experiment provided an appropriate test of the result of interest in the first place to be considered a meaningful or relevant replication.

This replication example on context-dependent memory appears to imply that a ξ′ is meaningful or relevant with respect to a specific result R. By definition 2.2 and its interpretation, however, we know that mathematically, it is more convenient to separate the definition of ξ′ from R. It follows that there are at least two aspects of assessing the meaning and relevance of a replication.

Firstly, while an operational definition of K is elusive, a useful way to think about K is ‘all the information in ξ that is not already in MA, S and D’. At the minimum, for ξ′ to be considered a meaningful replication of ξ, K′ must import some information in K regarding the immediate scientific context of ξ. For this to hold, there is no need to invoke the notion of R.

Second, to assess the reproducibility of a given R, K′ must import relevant information pertaining to R from ξ. That is, replication experiments unconditional and conditional on R are not the same objects. To emphasize the difference between them, we distinguish between in-principle and epistemic reproducibility of an R in remark 4.1 (for further details, see appendix C).

Remark 4.1. —

Let ξ be an idealized experiment and ξ′ be its exact replication. Conditional on R from ξ, K′ is necessarily distinct from K for epistemic reproducibility of R by R′, but not necessarily distinct for in-principle reproducibility of R by R′.

In practice, ξ′ can never be an exact replication of ξ in an ontological sense. The ξ is a one-time event that has already happened under certain conditions and ξ′ has to differ from ξ in some aspect. The best standard that ξ′ can purport to achieve is to capture relevant elements of ξ in such a way that performing inference about R while adhering to A and sampling the same population is possible within an acceptable margin of error. However, every experiment is embedded in its immediate social, historical and scientific context, making it a non-trivial task for scientists to include all the relevant K when they report the experiment in an article and make explicit all the natural interpretations used to assign meaning to its results. As such, designing and conducting replication experiments cannot be reduced to a clerical implementation of reported experimental procedures. A comprehensive understanding of K is increasingly critical as ξ′ diverges further away from ξ to be able to comprehend the nature and importance of the divergence for the interpretability of ξ′ and for results reproducibility. For ξ′ to serve their intended objective, information readily available from ξ′ needs to be supplemented by a careful historical and contextual examination of the relevant literature and the broader scientific background. Otherwise, ξ′ may differ from ξ in non-trivial ways impacting the meaning of the evidence obtained and changing the estimated reproducibility rate.

4.2. Model, MA

For ξ′ to be able to reproduce all possible R of ξ, MA must be specified up to the unknown quantities on which inference is desired. This specification must be transmitted to ξ′, such that MA and are identical for inferential purposes mapping to R. If an aspect of MA that has an inferential value mapping to R is not transmitted to ξ′ and this inferential value is lost, then R cannot be meaningfully reproduced by R′. On the other hand, given an inferential objective mapping to a specific R, the aspects of MA that are irrelevant to that inferential objective need not be transmitted to ξ′ to meaningfully reproduce R by R′. Counterintuitively, to meaningfully reproduce R by R′, MA and do not need to be identical, as given by result 4.2.

Result 4.2. —

MA and do not have to be identical in order to reproduce a result R by R′. Under mild assumptions, the requirement for R to be reproducible by R′ is that there exists a one-to-one transformation between MA and for inferential purposes mapping to R (proof and details in appendix D).

As an example of result 4.2, consider ξbin and ξnegbin in figure 1. Conditional on the objective of estimating p, the population proportion of black ravens, any of (ξbin, ξbin), (ξbin, ξnegbin), (ξnegbin, ξbin) and (ξnegbin, ξnegbin) can be effectively considered a pair (ξ, ξ′) of an idealized experiment and its (exact) replication. The reason is that the quantity of interest p is an identifiable parameter in both experiments, although MA and are not necessarily identical.5

In practice, when conducting a sequence of replication experiments, we would be interested in gauging the extent to which we can reproduce a specific result. Assuming that S are the same throughout all experiments, we expect the observed reproducibility rate of a sequence of experiments whose elements are chosen from ξbin, ξnegbin to converge on the same value, capturing the information on p, in the same way. However, result 4.2 does not imply that the (true) reproducibility rate of any two sequences of experiments involving any MA and are equal to each other. In fact, the (true) reproducibility rates of two sequences are not equal, when non-exact replications are involved.

Openness of MA to needs to be distinguished from the equivalence of MA and . In ξbin and ξnegbin, is not equivalent to MA. However, the binomial and the negative binomial models become equivalent with respect to a certain inferential objective that allows for reproducing a specific R, which is estimating p. To establish this compatibility, MA should be open to ξ′ but does not need to be assumed in ξ′. Specifically, to set to be the negative binomial model in ξ′ to reproduce the estimate of p in ξ, we need to know that ξ has used the binomial model. This ensures that ξ′ can use a model that has the same parameter p with the exact same meaning as in ξ and same population assumptions A such that the inferential equivalence holds. A model that has different population assumptions A from ξbin and ξnegbin is ξhyper. This difference matters for reproducing a specific R. ξhyper samples from an arbitrary finite subset of infinite population but still uses the same parameter p as ξbin and ξnegbin. The estimate of p in ξhyper will be biased due to differences in A. Without access to full specification of MA, this compatibility between MA and or lack thereof cannot be established.

This point is illustrated in many-analyst studies [28,29] in which a fixed D is independently analysed by multiple research teams who are provided D and a research question that puts a restriction on which R would be relevant for the purposes of the project. The teams were not, however, provided a MA, Spost or full specification of K. Teams used a variety of models differing in their assumptions about the error variance and the number of covariates (MA) to analyse D. The results differed widely with regard to reported effect sizes and hypothesis tests. So even when D was open, the lack of specification with regard to MA yielded largely inconsistent results. It is not because the same aspects of reality cannot be captured by different models but because researchers did not automatically agree on which aspects to capture in their models.

Taking stock, our ravens example is deliberately simple to help in our analysis. State-of-the-art models are often large objects. If MA is large, it might not always be clear which class of models can be drawn from to be equivalent to MA, and finding this class might be unfeasible. Then MA needs to be both open to and photocopied by ξ′ to be able to reproduce the results of interest. This point is particularly important to communicate to scientists who primarily engage in routine null hypothesis significant testing procedures and may not be conventionally expected to transparently report their models.6

4.3. Method, S

4.3.1. Pre-data methods, Spre

Spre comprises a wide range of procedural components in ξ that feeds into collection of Dv. Examples of Spre are determining types of observables, unobservables and constants; measurement and instrumentation choices; and sampling procedures such as random number generators used in computational methods.

Pertaining to mathematical features of the variables of interest, Spre may capture their types or a particular scaling. For example, a variable can be assumed discrete, continuous, or both discrete and continuous for mathematical convenience. This choice determines whether we are bound by a counting measure or a Lebesgue measure. A variable can also be assumed categorical, ordinal, interval or ratio. Some variables or parameters are scaled to the interval [0, 1] on the real line, to make their interpretation natural. All of these Spre choices affect MA and the consequent Spost.

Pertaining to operational features of the variables of interest, Spre may capture the method of observation and measurement instruments. In our toy example, a raven can be observed for its colour by naked eye (Spre), but another investigator may opt for a mechanical pigment test . What considerations should be given when making substitutions for Spre? One issue due to choices in operationalization is measurement error. Measurement error in observables, when not accounted for, might be a factor unduly exacerbating irreproducibility or inflating reproducibility [10,32,33]. Another issue arises due to arbitrary choice of experimental manipulations or conditions which might not be mathematically equivalent. For example, manipulations that are not tested for specificity may end up manipulating non-focal constructs or only weakly manipulate the focal construct (i.e. leading to small effect sizes) [34].

Even though knowing all these features is useful in understanding Spre, there is a caveat. All aspects of Spre must be fixed before realizing Dv, and it is challenging to assess a priori whether ξ and ξ′ using different Spre and , respectively, can be equivalent to each other. Due to these complexities and ambiguities surrounding Spre, openness of Spre seems to be the easiest way to obtain an equivalent in designing and performing ξ′. However, there are well-known examples to show that Spre and can be different and yet ξ and ξ′ can be equivalent conditional on R, which leads us to result 4.3.

Result 4.3. —

Spre and do not have to be identical to reproduce a result R.

As an example of result 4.3, consider models ξpoi and ξexp in figure 1. ξpoi has a good approximative model to the model in ξbin if we think of sampling ravens continuously from a population where black ravens are rare. We assume np → λ, where λ is the rate of sampling the black ravens (parameter of the Poisson model), and under this assumption, we focus on inference on λ. Now, as a thought experiment, let us assume that we do not have a device to count the number of black ravens past 1. However, we have a chronometer. As a result of using the model in ξpoi, we are, as a mathematical fact, also using the model ξexp, which measures the time between observing black ravens. Further, the two models have the same parameter, with the same interpretation. Therefore, if we were to measure the time between observing black ravens for a sample, then we can still perform inference on the rate of observing black ravens from the population. We note that ξbin, ξnegbin, ξhyper, ξpoi and ξnor operate under different assumptions, but are still counting ravens and interested in the number of black ravens. In contrast, ξexp is considerably different from these experiments. It is not counting ravens, but measuring time, which we would reasonably define as a continuous variable. While Spre in ξexp differs considerably from all other experiments in our toy example, the exponential experiment would serve as a meaningful ξ′ to reproduce R in any of them, at least approximately.

4.3.2. Statistical methods, Spost

Statistical methods, Spost, that are designed for a specific inferential goal, R, but do not return identical values when applied to a fixed D are common. Conversely, some statistical methods return identical values for a specific inferential goal, R, and they are mathematically equivalent conditional on D, even though they operate under distinct motivating principles. We have the following result.

Result 4.4. —

Spost and do not have to be identical to reproduce a result R by R′.

For the experiments ξbin and ξnegbin in our toy example, the MLE and the MME of p are numerically equivalent (appendix E). This equivalence holds even when the interpretation of probability differs between methods. For example, MLE and the posterior mode in Bayesian inference under uniform prior distribution on parameters are equivalent regardless of all else.

At the minimum, for ξ′ to be a meaningful replication of ξ conditional on R, the modes of inference should be equivalent. That is, the pair () should belong to one of: point estimators, interval estimators, hypothesis tests, predictions, or model selection. Further, Spost should be open to ξ′, but it does not need to be duplicated to establish equivalence. For example, to use MME to estimate p in ξ′, we need to know that ξ has used MLE or MME. This way, we can ensure that ξ′ will at least use a numerically equivalent estimator as the one used in ξ, even if not equivalent in principle. On the other hand, it is well known that a variety of Spost for the same mode of inference may yield different R. The many-analyst project by Silberzahn et al. [29] provides clear examples of this. Teams that were given a fixed D to analyse for a predetermined R (i.e. effect size as given by odds ratio) ended up implementing their choice of Spost. Even when their modelling assumptions matched, the results they reported varied. For instance, out of the teams that assumed a logistic regression model with two covariates, one pursued a generalized linear mixed-effects model with a logit link for Spost ([29], line 15 in table 3) and another pursued a Bayesian logistic regression ([29], line 16 in table 3). The confidence intervals around the effect size estimates reported by these two teams do not even overlap despite using a fixed D.

4.4. Data, D

4.4.1. Data structure, Ds

In statistics and philosophy of statistics, D′ is often seen as the new data of the old kind in the sense that Dv and are independent of each other, but Ds and are identical. However, conditional on R, we have result 4.5.

Result 4.5. —

Ds and do not have to be identical in order to reproduce a result R by R′.

As an example of result 4.5, we consider the models in ξpoi and ξexp in figure 1. Poisson model counts the black ravens as observable. It assumes that black ravens are observed with a constant rate. Exponential model measures the time between arrivals of black ravens. It also assumes that black ravens are observed with a constant rate. By referring to the unit of observations, we see that the data structures in ξpoi and ξexp are distinct. And yet, the unknown parameter about which inference is desired is the same, λ—the rate of black ravens appearing in continuous sampling (appendix F).

As another example, note that the stopping rules of ξbin and ξnegbin are different from each other. The stopping rule affects Ds because the maximum number of black ravens in ξbin is n, but in ξnegbin, it is the maximum number of black ravens in the population. And yet, the estimate of p is the same in both experiments.

Data sharing is sometimes viewed as a prerequisite for a reproducible science [8,13,35,36]. Our analysis suggests that this statement requires further qualification and calls for attention to Ds. Result 4.5 notwithstanding, changes in Ds are not trivial and they impact the true reproducibility rate. For example, ξ′ might be designed to have a larger sample size than that of ξ. In this case, the variance of the sampling distribution of the sample mean decreases linearly with the sample size, and hence, it would be different for ξ and ξ′. Typically, larger sample sizes are pursued to increase the statistical power of a hypothesis test in ξ′. While such ξ′ will indeed increase the power of a test, it also impacts the reproducibility rate. Counterintuitively, under some scenarios, this might play out as reproducing false results with increased frequency (see [10], for such counterintuitive results).

4.4.2. Data values, Dv

Having open access to Dv has no bearing on designing and performing a meaningful ξ′ or on the reproducibility of R. Conditional on R, ξ′ aims to reproduce R, not Dv. Therefore, reporting R from ξ is sufficient for ξ′ to assess whether R is reproduced by R′. However, information from ξ can be reported in a variety of ways and does not necessarily contain R. We show this with an example. We consider a model selection problem with three models M1, M2, M3, where ξ and ξ′ use some information criterion (IC) as Spost. Assume ξ reports selecting M1 as R. This is all ξ′ needs to import to know whether R is reproduced in R′. If R′ reports M1 as the selected model, then it is reproduced, else it is not. However, if which model is selected is not reported as R, ξ′ needs values of IC from ξ for all M1, M2, M3, so that ξ′ can redo the analysis of ξ to find out what R was. In the unlikely event that not even ICs are reported, ξ′ would need Dv to re-perform the whole analysis of ξ by applying Spost to D to calculate ICs and then to obtain R.

Result 4.6. —

ξ does not have to be Dv-Open in order for ξ′ to reproduce a result R.

That said, openness of Dv might facilitate auditing of R and vetting it for errors. There may be other benefits to open Dv such as enabling further research on Dv (e.g. meta-analyses). The distinction we draw matters particularly when there may be valid ethical concerns regarding data sharing [37]. Open Dv is best evaluated on its own merits as has been discussed extensively [38] but cannot be meaningfully appraised as a facilitator of replication experiments or precursor of results reproducibility. While some level of open scientific practices is necessary to obtain reproducible results, open data are not a prerequisite.

5. Exact versus non-exact replications: a simulation study on reproducibility rate

So far we have established that to reproduce R, all elements of ξ do not need to be open, and not all elements that are required to be open need to be duplicated for a meaningful ξ′. On the flip side, we also established that relatively simple openness considerations such as experimental procedures, hypotheses, analyses and data will not suffice to make ξ′ meaningful. The challenge in making π-openness useful for replication experiments is to clearly identify and delineate the elements of the idealized experiment. For example, proper K is difficult to define and communicate with precision. Also, MA is at times conflated with Spost and left opaque in reporting. As we discussed earlier, making K explicit and clearly specifying MA up to its unknowns is critical when designing ξ′.

Hitherto, we focused on replication experiments and only alluded to results reproducibility when needed. In this tack, we have mathematically isolated ξ from R and made some statements about ξ unconditional, and then conditional on R to emphasize their difference. Now that we turn our attention to explicitly drawing the link from replications to reproducibility, we condition R on ξ.

Given a sequence of exact replication experiments ξ(1), ξ(2), … and a result R from an original experiment ξ, do we expect to confirm R with high probability irrespective of the elements of ξ? The answer is ‘no’ as shown elsewhere [10,21]. The true reproducibility rate of a result is a function of not only the true model generating the data but also the elements of the idealized experiment. ξ may be characterized by a misspecified MA (e.g. omitted variables, incorrect formulation between variables and parameters), unreliable Spre (e.g. measurement error, confounded designs, non-probability samples), unreliable Spost (e.g. inconsistent estimators, violated statistical assumptions), errors in D (e.g. recording errors), or large noise-to-signal ratio (e.g. large error variance and small expected value). All of these lead to the mathematical conclusion that the true reproducibility rate ϕ is specific to each configuration of ξ and thus can take any value on [0, 1]. Therefore, ϕ tells us more about the experiment itself than some unobserved reality that is presumed to exist beyond it. Since we are now conditioning on ξ and questioning the reproducibility rate of R, the conclusion is that while a degree of openness may be able to address a ‘replication’ crisis by facilitating faithful replication experiments, it does not suffice to solve any alleged ‘reproducibility’ crisis.

Openness of elements of ξ facilitates ξ′, thereby allowing us to estimate ϕ of R by ϕN conditional on ξ. However, ϕ cannot be reasonably used as a target of scientific practices where each ξ is designed to maximize it. It does not make sense to think that a ξ that returns the highest reproducibility rate for a given R is scientifically most relevant or most rigorous experiment. For example, choosing an Spost that always returns the same fixed value regardless of Dv would yield ϕ = 1. In fact, ϕ can be made independent of what it would be under sampling error.7

A reasonable expectation from ξ′ is to deliver a scientifically relevant estimate of ϕ, given R. Openness plays an important role in this regard. In §4, we established that any non-open elements of ξ would need to be substituted for in ξ′, leading to a non-exact replication. The following result states how a sequence of non-exact replications alter the reproducibility rate.

Result 5.1. —

Assume a sequence ξ(1), ξ(2), …, ξ(J) of idealized experiments in which a result R is of interest. Then, the estimated reproducibility rate of R in this sequence converges to the mean reproducibility rate of R in J replication experiments. (See appendix G for proof.)

Result 5.1 states that the true reproducibility rate to which the estimated reproducibility rate of a sequence of non-exact replication experiments converges is the mean reproducibility rate of results from all experiments in the non-exact sequence and not the true reproducibility rate of a fixed original result. Hence, the reproducibility rate is a function of all elements of the idealized experiment, for both a fixed original experiment and all its replications. Each replication that is non-exact in a different way from others introduces variability, decreasing the precision of estimates given a fixed number of replications.

We illustrate the link between replication experiments and reproducibility rate with a simulation study. We consider a series of exact and non-exact replication experiments to analyse the variation in the reproducibility rate of a result as a function of the elements of ξ. We use sequences of two idealized experiments ξpoi and ξnor, which are approximate models to binomial from our toy example. For all conditions, we fix the true proportion of black ravens and the number of trials in the exact binomial model at 0.01 and 1000, respectively. These arbitrary choices make the true reproducibility rate distinct under ξpoi and ξnor. As R, we choose a point estimate for the location parameter of the probability model. For convenience, we assume that the parameter estimates of the original experiments are equal to the true value. After each replication experiment, we determine whether this result is reproduced by R′ based on whether it falls within some suitably scaled population standard deviation units of the true parameter value.

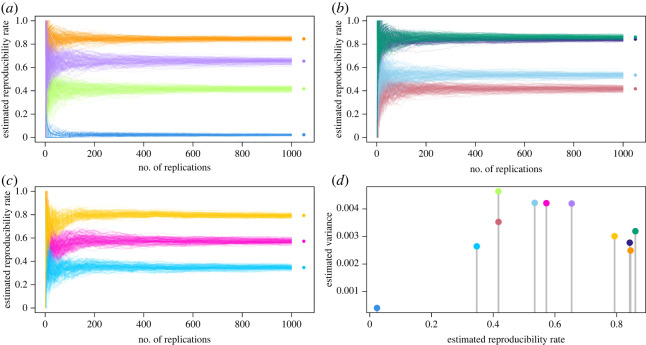

In exact replications, we vary MA, Spost, Ds of the idealized experiment, each element taking two values. This results in a 2 (MA) × 2 (Spost) × 2 (Ds) study design (eight conditions) for exact replications where (i) model assumed, MA ∈ {ξpoi, ξnor}, (ii) method as point estimate, Spost ∈ {MLE, posterior mode}, and (iii) sample size, Ds ∈ {30, 200}. When Spost is the posterior mode, we use conjugate priors: Gamma distribution with rate and shape parameters 5 (arbitrarily chosen) for ξpoi, and normal distribution with prior mean 10 and prior precision 1 for ξnor. Figure 3a,b shows 100 independent runs of a sequence of 1000 exact replication experiments under these conditions, for ξpoi and ξnor, respectively.

Figure 3.

Reproducibility rates of a true result in sequences of 1000 exact (a,b) and non-exact (c) replication experiments. Spost is varied as MLE and posterior mode. Ds is varied as n = 30 and n = 200. Each condition is colour coded and consists of 100 independent runs. (a) MA: Poisson. Orange; MLE, n = 200. Purple; posterior mode, n = 200. Light green; MLE, n = 30. Light blue; posterior mode, n = 30. (b) MA: Normal. Dark green; posterior mode, n = 200. Dark blue; MLE, n = 200. Pale blue; posterior mode, n = 30. Rose; MLE, n = 30. (c) Three cases of 1000 non-exact replication experiments where they are chosen uniformly randomly from the set of all eight idealized experiments (magenta), four idealized experiments with lowest reproducibility rates (aqua blue), and four idealized experiments with highest reproducibility rates (yellow). (a–c) Asterisks denote the mean of the reproducibility rates of 100 runs at step 1000, an estimate of the true reproducibility rate for the sequence of idealized experiments. (d) Variances of all 11 exact and non-exact sequences at step 50 of the simulation with respect to the estimated reproducibility rate (see text for interpretation).

In non-exact replications, we vary the set from which the replication experiment is uniformly randomly chosen from in each step. This results in additional three conditions: a set of all eight idealized experiments, a set of four idealized experiments with lowest reproducibility rates and a set of four idealized experiments with highest reproducibility rates. Figure 3c shows 100 independent runs of a sequence of 1000 non-exact replication experiments under these conditions.

We emphasize that all parameters of the simulation example in figure 3 are chosen so that the implications of differences between different models, methods and data structures make the link between replications and reproducibility explicit. It is certainly possible to choose these parameters to obtain any true reproducibility rate defined by a specific ξ since ϕ ∈ [0, 1].

Conditional on R, some conclusions from figure 3 are as follows.

-

1.

The true reproducibility rate depends on the true data-generating mechanism and the elements of the original experiment. Specifically, the true reproducibility rate in our simulation is a function of the true model generating the data, MA, and also Ds such as the sample size, and Spost such as the method of point estimation. This can be seen from exact replication sequences of eight idealized experiments in figure 3a,b, with the true reproducibility rate for each experiment indicated by stars.

-

2.

By weak law of large numbers, even if the true reproducibility rate is high (e.g. orange in figure 3a and green in figure 3b), the estimated reproducibility rate from a short sequence of exact replications has higher variance than the variance of the estimated reproducibility rate in a longer sequence. However, the estimated reproducibility rate from exact replications ultimately converges to the true reproducibility rate of an original result from a fixed ξ illustrating result 2.7.

-

3.

Estimated rate of reproducibility from a sequence of non-exact replications may be drastically different from the true reproducibility rate of an original result. The sequence of idealized experiments shown in pink in figure 3c is a sequence of non-exact replications for any of the eight original idealized experiments in figure 3a,b. For example, assume the original experiment we aim to replicate is ξpoi with Spost and Ds set to posterior mode and sample size n = 30, respectively. Blue sequences in figure 3a show that the true reproducibility rate of R (i.e. the estimate of location parameter) from these sequences of exact replication experiments is close to zero as shown by the convergence of 100 runs (i.e. blue star). If Spost and Ds were not open in this experiment, then we would have had to substitute for them and the pink sequences in figure 3c would serve as plausible replication experiments. In this case, we would estimate the reproducibility rate of R as approximately 60% (i.e. pink star).

-

4.

In a sequence of replication experiments, the set we choose the experiments from matters for true reproducibility rate. An original idealized experiment and its non-exact replications belonging to a set of idealized experiments that have true reproducibility rates close to each other for a given R yield an estimated reproducibility rate that is closer to the true value of the original experiment. For example, the yellow and blue sequences in figure 3c come from a set of four idealized experiments with the lowest and highest reproducibility rates among all eight experiments, respectively. The set of experiments sampled in the blue sequence is compared with the set of experiments sampled in the yellow sequence. The latter serves as a more relevant set of idealized experiments for replications of the orange and purple experiments in figure 3a, and the dark blue and green experiments in figure 3b, yielding a better reproducibility rate estimate for the original R. This pattern is an illustration of the broader theoretical result 5.1.

In practice, however, we do not have access to the true reproducibility rate of any idealized experiment to help determine our replication sets. We have to make our decision based on the elements of the idealized experiment instead, and that requires a thorough understanding of how each element of the idealized experiment impacts the reproducibility rate in a given situation.

-

5.

The variance of the estimated reproducibility rate of results in a sequence of non-exact replications can be higher or lower than the variance of the estimated reproducibility rate in a sequence of exact replications of the original experiment. The pattern of variances we observe in figure 3d is a direct consequence of nϕ following a binomial distribution and result 5.1. As a mathematical fact of the binomial distribution, its variance is maximum at ϕ = 0.5 and decreases as the probability of success, ϕ, gets closer to 0 or 1. Hence, we expect our estimates to vary greatly in a sequence of non-exact replication experiments with moderate true reproducibility rates. If a sequence of non-exact replications come from a homogeneous set of very high (or very low) true reproducibility rates, we expect our estimates to vary little. On the other hand, we expect highest variation in our estimates from exact replications if ϕ = 0.5 from the original experiment and from non-exact replications if they are highly heterogeneous in their true reproducibility rates.

In sum, the mere choice of the elements of ξ impacts both the level of the true reproducibility rate and the variance of the estimated reproducibility rate. Any divergence in ξ′ may move the estimated reproducibility rate away from the true value for an original result and increase the variance of its estimates. In appendix H, we provide a broader example for result 5.1 in the context of linear regression models, under a model selection (rather than parameter estimation) scenario, where both true and false original results are considered. This simulation study demonstrates a similar pattern of results to those presented in figure 3. Combined, simulation results confirm that reproducibility rate can take any value on [0, 1] depending on the elements of ξ even when the original experiment indeed captures a true result, there is no scientific malpractice, and meaningful replication experiments can be performed to reproduce R.

6. Discussion

In this article, we focused on scientific experiment as the critical unit of analysis, formalizing the logical structure of experiments towards building a theory of reproducibility. We clarified what makes for a meaningful replication experiment even when an exact replication experiment is not possible and established how openness of different elements of the idealized experiment contribute to it. We distinguished between the ability of a replication experiment to reproduce a result and the true reproducibility rate for that result. We showed that theoretically it is not possible to justify a desired level of reproducibility rate in a given line of research and to reach a high level of reproducibility rate via eliminating malpractice, requiring open procedures or data, or performing replication experiments.

We understand the potential lack of enthusiasm of the practitioner when they may find that the theory we develop does not have immediate application on their scientific practice. Our work is theoretical and is meant to present a framework to understand and study the objects and products of science. It is not meant to provide solutions to immediate problems scientists face. Practitioners often turn to theory for a clear answer to their difficulties in real-life studies. Our goal is not to provide these answers. We lay the groundwork that would potentially be needed to address such problems in the indefinite future, but the theoretical work is slow and incremental. In our simulations, we can create perfect conditions to illustrate our theoretical results because we set our own model parameters, and we know what our models are and what they mean, perfect transparency exists and there are no misunderstandings because every aspect of scientific objects is precisely known. All mathematical and statistical problems—excepting paper-and-pencil exact solutions—are primarily studied this way. Science as practised, on the other hand, is messy, ambiguous, loose, hard to define and communicate. There is no easy or direct translation of our work to myriad imperfections of the scientific practice. Our idealizations are removed from reality to make theoretical work possible in the first place. We are only laying the building blocks of such a theory to make practical implementations possible in the future. All this does not mean theory is currently of no practical relevance, however. In fact, we think that without a thorough understanding of mathematical implications of reproducibility and replications, we cannot be ready to interpret the results and solve problems that arise in practice.

With this constraint in mind, we discuss key theoretical insights from our findings.

6.1. Reproducibility and the search for truth

A layperson understanding of reproducibility to the effect that ‘if we observe a natural phenomenon, we should be able to reproduce it and if we cannot reproduce it, our initial observation must have been a fluke’ is exceedingly misleading. A statistical fact is that reproducibility is not simply a function of ‘truth’. This was illustrated in [21] and proved in [10]: true results are not perfectly reproducible, and perfectly reproducible results are not always true (see appendix I for proof). True reproducibility rate of a result and the variability in its estimator are determined by many factors including but not limited to the true data-generating mechanism: The degree of rigour of the original experiment as assessed by the extent to which its elements are individually reliable and internally compatible with each other, the degree to which replication experiments are faithful to the original and how any discrepancies impact the results, the degree of rigour of the replication experiment wherever it diverges from the original and how we determine for a result to be reproduced. Factors such as effect size, sampling error, missing background knowledge and model misspecification [39,40] could render true results difficult to reproduce.

As a useful reminder, sampling error might be masked by the choice of method and other elements of the idealized experiment. A false result could be 100% reproducible due to the choice of estimation method. Therefore, judgements of reproducibility cannot exclusively be used to make valid inference on the truth value of a given result (see also [41], for a computational model with a similar conclusion).

Even if some form of a perfect experiment that captures ground truth and its exact replications exist, it might take many epistemic iterations of theoretical, methodological and empirical research to achieve them (see [42], p. 45, for a detailed discussion on epistemic iteration). We cannot expect to skip the arduous iterative process of doing science and hope to arrive at a non-trivially reproducible science with procedural interventions. In most fields and stages of science, focusing on maximizing reproducibility seems like a fool’s errand. For meaningful scientific progress, at the minimum, we should take care to properly analyse the elements of the original experiment to assess how they might impact the true reproducibility rate and analyse the discrepancies of replication experiment(s) from the original to gauge how our reproducibility estimates may vary from the true value of the original result’s reproducibility. In the course of ‘normal science’ (borrowing terminology from [43]), reproducibility of a result is more likely to tell us something about the experiments that generated the result and its reproducibility rate estimates than the lawlikeness of some underlying phenomenon.

6.2. Defining reproducibility

One aspect of reproducibility that often gets overlooked: how we define and quantify a result and its reproducibility also determines the true reproducibility rate (see also [44] for a discussion of different statistical methods to assess reproducibility and their limitations). For example, in a null hypothesis significance test, we might call a ‘reject’ decision in a replication experiment a successfully reproduced result if the original experiment rejected the hypothesis. On the other hand, we might instead look at whether effect size estimate of the replication experiment falls within some fixed error around the point estimate from the original experiment. Everything else being equal, the true reproducibility rates are expected to be different between these two cases using different reproducibility criteria.

Our findings hold under mathematical definitions of a result (definition 2.3) and of reproducibility rate (definition 2.5). In the absence of such theoretical precision, we often resort to heuristic, common sense interpretations of terms. In appendix A, we present a detailed argument on why and how theoretical precision matters and provide an example of a plausible measure of reproducibility without desirable statistical properties. Such lax standards in definitions invite unwanted or strategic abuse of ambiguities when interpreting replication results when we have a limited understanding of what we should expect to observe. Our surprise at ‘failed’ replication results or delight in ‘successful’ ones may not be warranted, and what we observe could simply be a theoretical limitation imposed by our definitions rather than a reflection of the true signal that presumably exists in nature. For an extreme example, consider the following: we might call a result as reproduced if the replication effect size estimate falls on the real line. That would trivially give us a reproducibility rate.

Whenever we evaluate replications and estimate reproducibility, it is incumbent on us to understand how we define our results, how we determine reproducibility and how our measures should be expected to behave under specific conditions.

6.3. Reproducibility and openness

Open practices in science have been intuitively proposed as a key to solving the issues surrounding reproducibility of scientific results. However, a formal framework to validate this intuition has been missing and is needed for a clear discussion of reproducibility. The notion of idealized experiment serves as a theoretical foundation for this purpose. By using this foundation, we have distinguished the concepts of replication and reproducibility, showing how openness is related to meaningful replications. We have also distinguished between two types of reproducibility (appendix C). Whether elements from one experiment carry over to a replication experiment is only relevant to epistemic—as opposed to in-principle—reproducibility. In practice, however, resource constraints determine the availability and transferability of information between experiments. A realistic framework needs to provide a refined sense of which elements of an experiment need to be open to reproduce a given result, as opposed to simply saying ‘all of it’.