Abstract

Introduction:

Many cancer survivors report cognitive problems following diagnosis and treatment. However, the clinical significance of patient-reported cognitive symptoms early in survivorship can be unclear. We used a machine learning approach to determine the association between persistent self-reported cognitive symptoms two years after diagnosis and neurocognitive test performance in a prospective cohort of older breast cancer survivors.

Materials and Methods:

We enrolled breast cancer survivors with non-metastatic disease (n=435) and age- and education-matched non-cancer controls (n=441) between August 2010 and December 2017 and followed until January 2020; we excluded women with neurological disease and all women passed a cognitive screen at enrollment. Women completed the FACT-Cog Perceived Cognitive Impairment (PCI) scale and neurocognitive tests of attention, processing speed, executive function, learning, memory and visuospatial ability, and timed activities of daily living assessments at enrollment (pre-systemic treatment) and annually to 24 months, for a total of 59 individual neurocognitive measures. We defined persistent self-reported cognitive decline as clinically meaningful decline (3.7+ points) on the PCI scale from enrollment to twelve months with persistence to 24 months. Analysis used four machine learning models based on data for change scores (baseline to twelve months) on the 59 neurocognitive measures and measures of depression, anxiety, and fatigue to determine a set of variables that distinguished the 24-month persistent cognitive decline group from non-cancer controls or from survivors without decline.

Results:

Survivors and controls were aged 60–89 years. Thirty-three percent of survivors had self-reported cognitive decline at twelve months, and two-thirds of those reassessed at 24 months had persistent decline (n=60). Least Absolute Shrinkage and Selection Operator (LASSO) models distinguished survivors with persistent self-reported declines from controls (AUC=0.736) and from survivors without decline (n=147; AUC=0.744). The variables that separated groups were predominantly neurocognitive test performance change scores, including declines in list learning, verbal fluency, and attention measures.

Discussion:

Machine learning may be useful to further our understanding of cancer-related cognitive decline. Our results suggest that persistent self-reported cognitive problems among older women with breast cancer are associated with a constellation of mild neurocognitive changes warranting clinical attention.

Keywords: patient-reported outcomes, breast cancer, cancer-related cognitive decline, cancer-related cognitive impairment, machine learning, neuropsychology, self-reported cognition

Introduction

Cognitive problems are reported by up to half of breast cancer survivors following their diagnosis and treatment.1,2 These problems are commonly referred to as cancer-related cognitive decline (CRCD). Despite their frequency, the clinical significance of self-reported cognitive symptoms remains unclear.3 Part of the confusion is that there is no agreed-upon standard for measuring CRCD. There are sound reasons to define CRCD based on neurocognitive test performance, while patient-reported measures are also used successfully.4,5 These considerations are further complicated by observations that self-reported cognitive problems do not always correspond to deficits on neurocognitive measures6 and by conflicting reports about the extent to which self-reported cognitive problems are instead attributable to depression, anxiety, and fatigue.7,8 This uncertainty about the meaning of cognitive complaints may leave clinicians unsure of the appropriate actions to take when their patients, especially older patients, have cognitive complaints.

In older non-cancer populations, self-reported cognitive symptoms can be predictors of future cognitive decline.5,9–11 However, CRCD does not fit the mold of more established neurocognitive disorders of older age like Parkinson’s or Alzheimer’s disease, which have a generally established onset, course, neuropathology, and pattern of cognitive impairment. CRCD tends to be more subtle in the magnitude of its deficits and affects a broad range of cognitive domains.12,13 Therefore, researching CRCD using a “top-down” research frame of fitting data to preconceived models may have limited usefulness in defining clinically important cognitive problems. Machine learning uses a “bottom-up” approach, allowing the data to drive our understanding and offering an alternative for addressing the challenges in studying CRCD.14 Machine learning approaches have been applied with some success in identifying risk of cognitive decline in the setting of other neurocognitive disorders, but have not yet been used in the setting of CRCD.15,16

In this report, we used data from the large prospective Thinking and Living with Cancer (TLC) cohort of older breast cancer survivors and frequency-matched non-cancer controls to test the potential utility of machine learning for understanding the clinical meaning of self-reported cognitive problems. Among women with 24-month follow-up data, we describe how many survivors exhibited 12-month decline in self-reported cognition and whether that decline persisted to 24 months. We then used machine learning to determine whether changes in objective neurocognitive performance distinguish older survivors with self-reported cognitive problems from survivors who do not report cognitive decline and from non-cancer controls. Our goal in this study was exploratory: we sought to discover whether machine learning could reveal a pattern of neurocognitive decline associated with self-reported cognitive decline. Identifying such neurocognitive changes could help clarify the significance of self-reported cognitive difficulties in clinical practice and indicate which neuropsychological tests might be most sensitive to cancer-related cognitive problems in particular. This study is intended to illustrate the potential uses of machine learning approaches to inform clinical care of cancer survivors with CRCD and to highlight areas for future focus.

Methods

Study Design and Sample

We conducted a secondary analysis using data from the TLC multi-site prospective study of older breast cancer survivors and frequency-matched non-cancer controls.17 All Institutional Review Boards approved the protocol (NCT03451383). We included participants recruited between August 1, 2010 and December 31, 2017 and followed until January 29, 2020; the study is ongoing. Eligible breast cancer survivors were 60 years of age or older, newly diagnosed with primary nonmetastatic breast cancer, and able to complete assessments in English. Women with a history of stroke, head injury, major axis I psychiatric disorders, and neurodegenerative disorders were excluded. We also excluded women with a prior history of cancer if active treatment occurred in the five years prior to enrollment or included systemic therapy. Non-cancer controls had the same exclusion criteria as survivors and included friend and community controls frequency-matched to survivors by age, race, education, and recruitment site.

Screening exclusions included scores of <24 on the Mini-Mental State Examination (MMSE)18 and/or a reading level below the third-grade equivalent on the Wide Range Achievement Test, 4th edition (WRAT-4) Word Reading19 subtest. We excluded eight controls who scored >3 standard deviations (SD) above or below the control mean on baseline neurocognitive scores adjusted for age and education group. We also excluded data for survivors who experienced a recurrence (n=2) within six months before an evaluation. Participants were assessed at enrollment/baseline (post-surgery, pre-systemic therapy for survivors) and 12 and 24 months later using an interviewer-administered structured survey with standardized scales and neurocognitive testing.

Measures

Self-reported cognitive functioning was measured using the Functional Assessment of Cancer Therapy-Cognition (FACT-Cog) Perceived Cognitive Impairment (PCI-18) subscale.20,21 Based on previous reports,22,23 we defined clinically meaningful cognitive decline as a decline of 0.5 SD, based on the non-cancer controls’ FACT-Cog PCI scores from baseline to 12 months; this was equivalent to a decline of >3.7 points.
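Written out, the decline criterion is a simple threshold on a change score. The block below assumes PCI scores are oriented so that higher means fewer perceived problems (as on the FACT-Cog), so a decline is baseline minus follow-up; the value of the reference SD is only back-calculated from the 3.7-point threshold stated above, not a separately reported statistic.

```latex
\Delta_i = \mathrm{PCI}_{i,0} - \mathrm{PCI}_{i,12},
\qquad
\text{meaningful decline} \iff \Delta_i > 0.5\,\sigma_{\mathrm{ref}} = 3.7
\quad\Longrightarrow\quad
\sigma_{\mathrm{ref}} \approx \frac{3.7}{0.5} = 7.4 \text{ points}
```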

Neurocognitive data included 59 measures from objective neuropsychological tests and a test of Timed Instrumental Activities of Daily Living (TIADL).24–27 We also included factors that might affect cognitive symptoms in cancer survivors: the Center for Epidemiologic Studies-Depression Scale (CES-D)28 for depression symptoms; the State-Trait Anxiety Inventory (STAI)29 state version for anxiety symptoms; and the FACT-Fatigue (FACT-F)30 subscale for fatigue. All 62 measures are continuous and are summarized descriptively in Supplementary Table 2.

Groups

Our primary group of interest was survivors with persistent declines in FACT-Cog PCI scores (“persistent CRCD”). We first identified survivors whose FACT-Cog PCI scores declined from pre-systemic therapy to twelve months (i.e., declined after completing adjuvant chemotherapy and/or starting hormone therapy). To exclude those experiencing transient symptoms or later recovery,31 we defined the persistent CRCD group as only those with a sustained decline of >3.7 points on the PCI from pre-treatment to 24 months. The comparison groups were non-cancer controls and non-declining survivors.
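The group definitions above can be sketched as a small classification rule. This is a hypothetical helper, not the study's code: `pci_group` and its labels are names introduced here, it assumes higher PCI scores mean fewer perceived problems, and it does not model any additional eligibility rules (for example, whether the non-declining group also required a 24-month assessment).

```python
from typing import Optional

# Threshold from the text: a decline of more than 3.7 PCI points.
THRESHOLD = 3.7


def pci_group(baseline: float, month12: Optional[float],
              month24: Optional[float], threshold: float = THRESHOLD) -> str:
    """Classify one survivor's FACT-Cog PCI trajectory (hypothetical helper).

    Assumes higher PCI scores mean fewer perceived problems, so a decline is
    baseline minus follow-up. Returns one of: 'missing', 'non_declining',
    'unknown_24m', 'persistent_crcd', 'transient'.
    """
    if month12 is None:
        return "missing"  # groups need a 12-month score
    if baseline - month12 <= threshold:
        return "non_declining"  # no meaningful decline at 12 months
    if month24 is None:
        return "unknown_24m"  # declined at 12 months, no 24-month assessment
    if baseline - month24 > threshold:
        return "persistent_crcd"  # decline sustained from baseline to 24 months
    return "transient"  # declined at 12 months but recovered by 24 months


# Hypothetical example: a 6-point drop at 12 months, still 6 points at 24 months.
print(pci_group(60.0, 54.0, 54.0))  # persistent_crcd
```

Survivors labeled `transient` correspond to those who declined at 12 months but improved by 24 months and were therefore not counted as persistent CRCD.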

Statistical Approach

Demographic and clinical characteristics of the survivor groups and non-cancer controls were compared using one-way ANOVA for continuous variables and chi-squared tests for categorical variables.
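To make the categorical comparison concrete, the sketch below recomputes an uncorrected Pearson chi-squared test of independence for marital status, using the counts reported in Table 1 and only the Python standard library. That the published test was uncorrected is an assumption. A 2 × 3 table has 2 degrees of freedom, and in that special case the chi-squared survival function has the closed form exp(−x/2), so no statistics library is needed. Under these assumptions the statistic is about 13.3 with p ≈ 0.001, in line with the p value shown in Table 1.

```python
import math

# Marital status counts from Table 1 (rows: married; widowed/divorced/single).
# Columns: persistent CRCD, non-declining survivors, non-cancer controls.
observed = [
    [41, 97, 227],
    [19, 50, 214],
]


def chi_squared_independence(table):
    """Pearson chi-squared statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (obs - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


stat, dof = chi_squared_independence(observed)
# The closed-form p value below is valid only for exactly 2 degrees of freedom.
assert dof == 2
p_value = math.exp(-stat / 2)
print(f"chi2={stat:.2f}, df={dof}, p={p_value:.4f}")
```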

Defining the cognitive groups required FACT-Cog PCI scores at baseline and the 12-month follow-up, which led to missing data (n=85). Before the analyses, we examined reasons for missing data. Missing data were mostly due to missing one follow-up assessment while completing later assessments (n=76); other reasons were administrative/site-related losses (n=5) and death or drop-out (n=5). Women excluded from analyses due to missing data were similar to those included in sociodemographic, clinical, and psychosocial characteristics, including baseline cognitive scores. There were minor differences between those included and those missing: survivors and controls with missing PCI data had significantly fewer years of education than those included in the analyses (included vs. missing: 15.4 vs. 14.2 years in survivors and 15.7 vs. 13.8 years in controls; both p<.001; see Supplementary Section 1). However, because we focus on changes in neurocognitive and symptom variables, the impact of baseline differences may be less meaningful. Differences in changes in neurocognitive and symptom variables between those included and those missing are discussed further in Supplementary Section 1. In this work, we assumed that data were missing completely at random or missing at random, under which the complete-case analysis does not lead to biased estimation.32

Machine learning approaches were used to distinguish persistent CRCD (n=60) from (1) non-cancer controls (n=441) or (2) non-declining survivors (n=147). The predictor sets included change scores from baseline to 12 months for the 59 neurocognitive measures and three behavioral measures (anxiety, depression, fatigue). Additional analyses examined models using change scores from baseline to 24 months for the same predictors. Baseline PCI scores were included in all models. Approximately 1.4% of values among the 59 neurocognitive and three behavioral predictors were missing; these were imputed using nearest-neighbor imputation.33
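The predictor construction and imputation steps above can be sketched in pure Python. The text names nearest-neighbor imputation but not its settings, so everything here is an assumption for illustration: `change_scores` and `knn_impute` are hypothetical names, the distance is Euclidean over co-observed columns, and each missing cell gets the mean of its k nearest donors. Practical pipelines usually standardize columns first so that no single measure dominates the distance; that step is omitted for brevity.

```python
import math
from typing import List, Optional

Row = List[Optional[float]]


def change_scores(baseline: Row, month12: Row) -> Row:
    """12-month minus baseline for each measure; None if either value is missing."""
    return [None if b is None or f is None else f - b
            for b, f in zip(baseline, month12)]


def knn_impute(rows: List[Row], k: int = 2) -> List[Row]:
    """Fill each missing cell with the mean of that column among the k nearest rows.

    Distance is Euclidean over columns observed in both rows, rescaled by the
    number of shared columns. Donor rows must have the target column observed;
    each column is assumed to have at least one observed value.
    """
    def distance(a: Row, b: Row) -> float:
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf
        squared = sum((x - y) ** 2 for x, y in shared)
        return math.sqrt(squared * len(a) / len(shared))

    imputed = []
    for i, row in enumerate(rows):
        filled = list(row)
        for j, value in enumerate(row):
            if value is not None:
                continue
            donors = sorted(
                (distance(row, other), other[j])
                for m, other in enumerate(rows)
                if m != i and other[j] is not None
            )[:k]
            filled[j] = sum(v for _, v in donors) / len(donors)
        imputed.append(filled)
    return imputed


# Hypothetical change-score matrix (rows = participants, columns = measures).
data = [[1.0, 2.0, 3.0], [1.1, 2.1, None], [5.0, 6.0, 7.0], [1.2, 1.9, 3.2]]
print(knn_impute(data, k=2)[1])
```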

We evaluated four machine learning methods with cross-validation: (1) logistic regression with the least absolute shrinkage and selection operator (LASSO),34 (2) logistic regression with elastic net regularization (Elasticnet),35 (3) a tree-based ensemble method, random forest (RF),36 and (4) stochastic gradient boosting (SGB).37 We also included a backward variable selection (BVS) model without cross-validation (CV) as a conventional benchmark.
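For reference, the two penalized logistic models can be written as one objective. The parameterization below follows the one used by the glmnet R package; that the study's caret models used exactly this form is an assumption. Here y_i = 1 for persistent CRCD and 0 for the comparison group, x_i holds the change scores and baseline PCI score, the intercept is unpenalized, α = 1 gives the LASSO, and 0 < α < 1 gives the elastic net.

```latex
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\;
-\frac{1}{n}\sum_{i=1}^{n}\Big[\, y_i\,(\beta_0 + x_i^{\top}\beta)
  - \log\!\big(1 + e^{\beta_0 + x_i^{\top}\beta}\big)\Big]
+ \lambda\Big[\tfrac{1-\alpha}{2}\,\lVert\beta\rVert_2^{2} + \alpha\,\lVert\beta\rVert_1\Big]
```

The ℓ1 term can set coefficients exactly to zero, which is what makes the LASSO a variable selection method; the penalty weight λ is the tuning parameter chosen by cross-validation.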

For cross-validation, instead of splitting the entire dataset into one training and one validation set, we created leave-one-out cross-validation (LOOCV) sets to evaluate model performance. Within each LOOCV training set, we used five-fold cross-validation to tune the tuning/penalty parameters of the four machine learning methods (but not BVS) to maximize the area under the curve (AUC). We computed empirical means and 95% confidence intervals for the AUC, sensitivity, and specificity, using Youden’s index (the cut-off maximizing sensitivity + specificity − 1) to define the classification threshold. Finally, we graphed the receiver operating characteristic (ROC) curves with median AUC, sensitivity, and specificity. We ranked predictors by how often LASSO selected each one across the leave-one-out iterations. We performed all analyses with R version 3.5.1 using the randomForest and caret packages (R Foundation for Statistical Computing).37
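The two evaluation quantities above can be sketched in pure Python. This is an illustration under stated assumptions, not the authors' caret-based code: the AUC is computed as the Mann-Whitney probability that a randomly chosen case outscores a randomly chosen comparison participant (ties count one half), a participant is called positive when the score is at or above the cut-off, and ties in Youden's J keep the lowest cut-off. The function names and toy scores are hypothetical.

```python
from typing import Sequence, Tuple


def auc_mann_whitney(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(random positive scores higher than random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def youden_cutoff(scores: Sequence[float],
                  labels: Sequence[int]) -> Tuple[float, float, float]:
    """Return (cutoff, sensitivity, specificity) maximizing J = sens + spec - 1.

    A participant is classified positive when score >= cutoff; among tied J
    values the lowest cutoff is kept.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    best = None
    for c in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= c)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < c)
        sens, spec = tp / n_pos, tn / n_neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, c, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical predicted probabilities (1 = persistent CRCD, 0 = comparison group).
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(auc_mann_whitney(scores, labels), youden_cutoff(scores, labels))
# AUC 0.75; cut-off 0.6 with sensitivity 0.75 and specificity 0.75
```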

Results

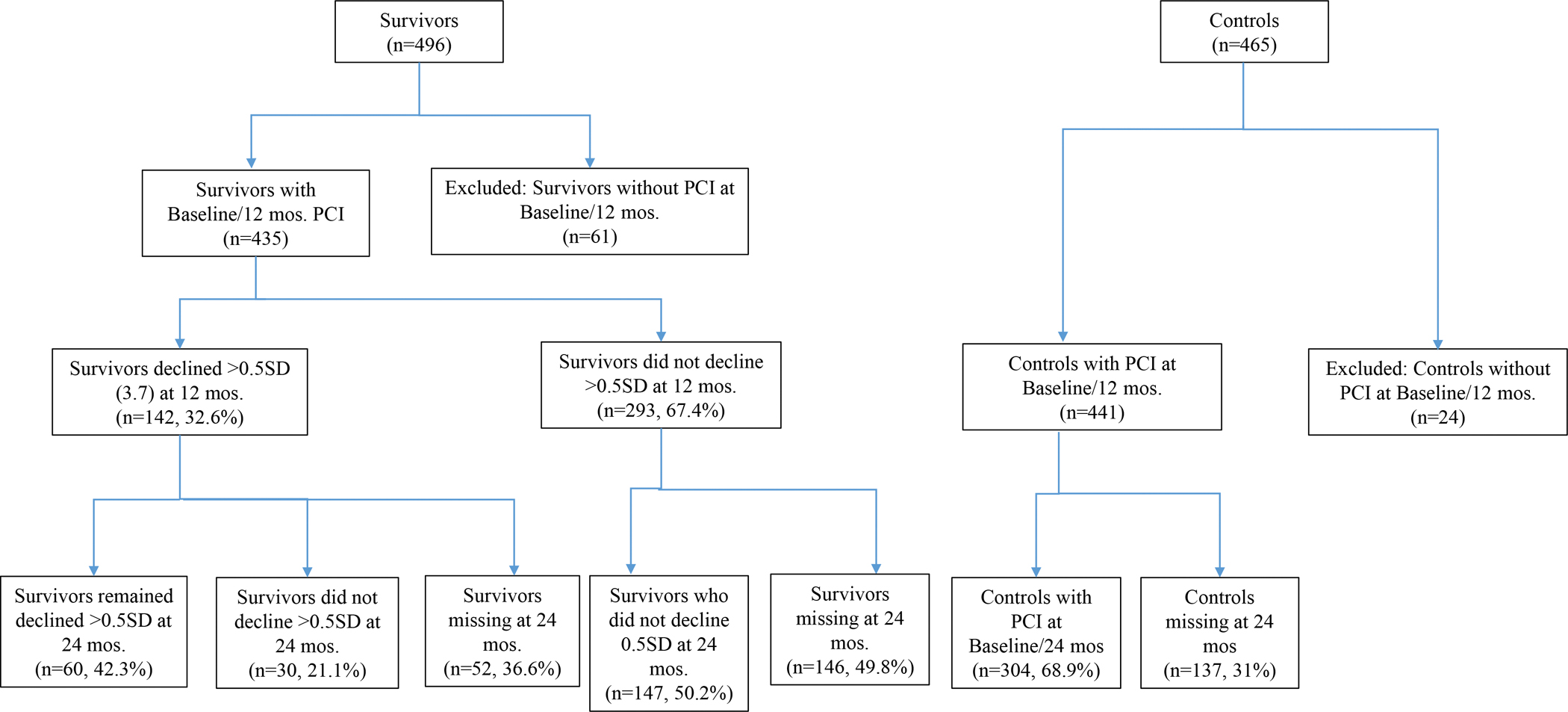

There were 435 survivors and 441 non-cancer controls included in this analysis (Figure 1). Among survivors, 67% (n=293) did not report cognitive decline and 33% (n=142) exhibited decline on the FACT-Cog PCI at 12 months. Among the 142 survivors with 12-month cognitive decline, 63% (n=90) completed a 24-month assessment: 33% (n=30) had improved cognitive scores and 67% (n=60) continued to exhibit declines (i.e., the persistent CRCD group; see Figure 2).

Figure 1:

Older Women with Non-metastatic Breast Cancer and Frequency-Matched Non-Cancer Controls Included in Analyses of Persistent Cognitive Decline.

The numbers of survivors and non-cancer controls are calculated among those who were eligible to participate in the study for at least two time points. Eligibility for multiple assessments was the same as enrollment eligibility and excluded women who had or developed a neurological disease (e.g., stroke, Parkinson’s disease). Characteristics of missing data and patterns of missing assessments by survivor and non-cancer control groups are summarized in Supplementary Section 1.

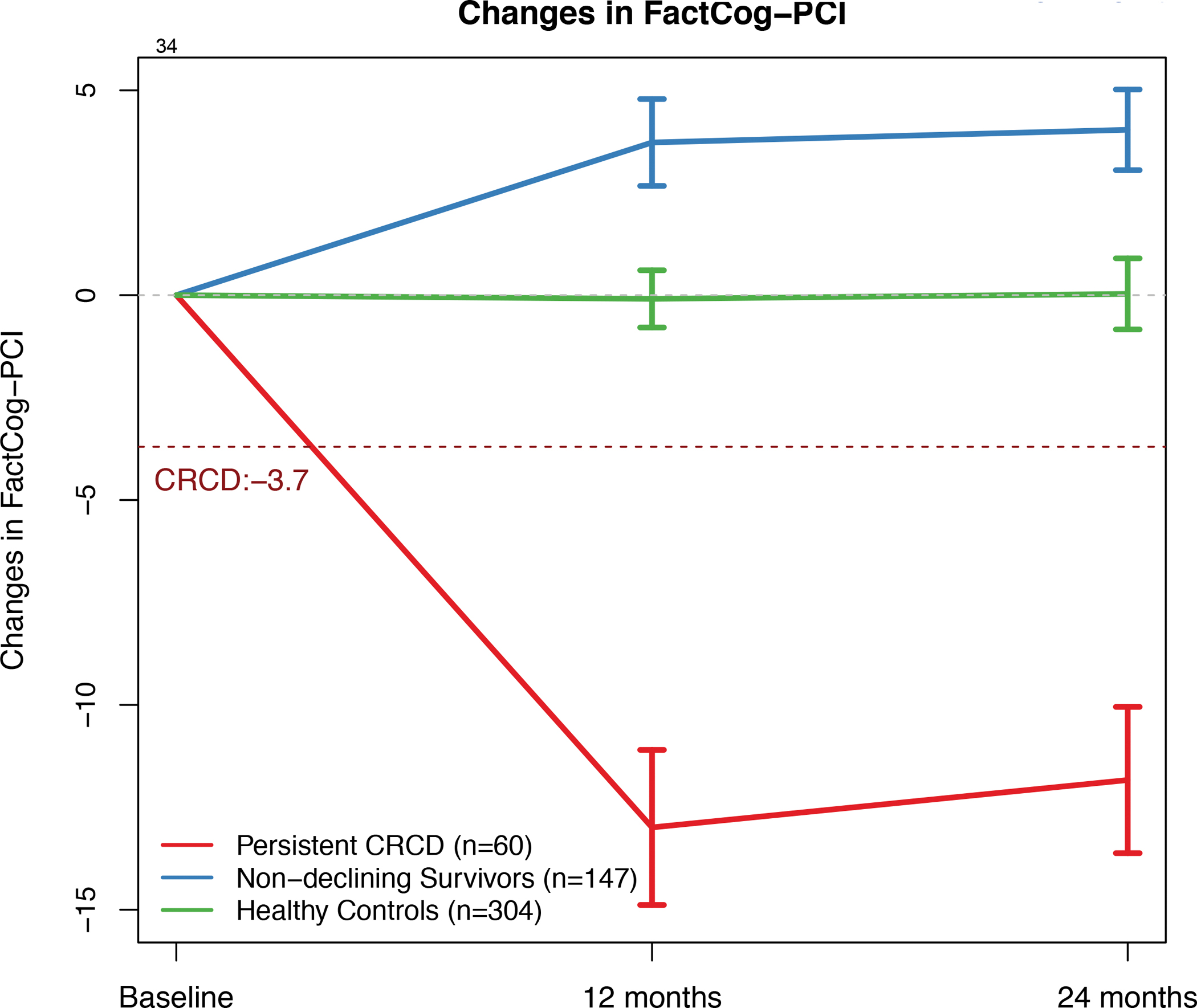

Figure 2:

FACT-Cog PCI Change Scores by Group over Time

Brackets represent 95% confidence intervals. Persistent CRCD is defined as 3.7+ points decline on the FACT-Cog Perceived Cognitive Impairment (PCI) scale from pre-systemic therapy baseline to 12 months, with persistence to 24 months.

The survivors and controls were comparable and were predominantly White (83%), had fifteen years of education on average, and had a mean age of 67 (Table 1). The non-declining survivor group tended to have higher levels of depression, and the two survivor groups exhibited significantly worse anxiety and fatigue symptoms compared to the control group. However, there were no significant differences in systemic therapy regimens between survivors in the persistent CRCD group and non-declining group; approximately 70% were treated with hormonal therapy only in each survivor group.

Table 1.

Demographic and Clinical Pre-systemic Therapy/Enrollment Characteristics of Older Women with Non-metastatic Breast Cancer by 24-month Cognitive Status and Frequency-Matched Non-Cancer Controls

| Characteristic | Survivors with persistent CRCD¹ (n=60) | Non-declining survivors¹ (n=147) | Non-cancer controls (n=441) | p value² |
|---|---|---|---|---|
| Sociodemographic factors, mean (SD) or % (n) | | | | |
| Mean age, years (SD), range | 67.8 (5.2), 60–84 | 67.7 (6.1), 60–98 | 67.7 (6.5), 60–90 | 0.992 |
| Race, % (n) | | | | 0.375 |
| White | 88.3 (53) | 80.3 (118) | 83.0 (365) | |
| Nonwhite | 11.7 (7) | 19.7 (29) | 17.0 (75) | |
| Marital status, % (n) | | | | 0.001 |
| Married | 68.3 (41) | 66.0 (97) | 51.5 (227) | |
| Widowed, divorced, single | 31.7 (19) | 34.0 (50) | 48.5 (214) | |
| Mean education, years (SD) | 15.8 (2.0) | 15.8 (2.1) | 15.7 (2.2) | 0.843 |
| Clinical factors | | | | |
| Treatment, % (n) | | | | 0.847 |
| Chemotherapy +/− hormonal therapy | 28.6 (16) | 27.2 (37) | - | |
| Hormonal therapy only | 71.4 (40) | 72.8 (99) | - | |
| AJCC v. 6 stage, % (n) | | | | 0.529 |
| 0 | 6.7 (4) | 10.9 (16) | - | |
| I | 70.0 (42) | 63.3 (93) | - | |
| II | 16.7 (10) | 21.8 (32) | - | |
| III | 6.7 (4) | 4.1 (6) | - | |
| Surgery type, % (n) | | | | 0.319 |
| BCS with/without RT | 68.3 (41) | 61.0 (89) | - | |
| Mastectomy | 31.7 (19) | 39.0 (57) | - | |
| ER status, % (n) | | | | 0.202 |
| Positive | 83.3 (50) | 89.7 (131) | - | |
| Negative | 16.7 (10) | 10.3 (15) | - | |
| HER2 status, % (n) | | | | 0.810 |
| Positive | 7.0 (4) | 8.0 (11) | - | |
| Negative | 93.0 (53) | 92.0 (126) | - | |
| Depression (≥16 on CES-D), % (n) | 5.0 (3) | 11.2 (16) | 5.5 (24) | 0.053 |
| Mean STAI³ score (SD) | 27.7 (6.5) | 28.5 (7.7) | 26.8 (5.7) | 0.024 |
| Mean FACT-F⁴ score (SD) | 43.9 (6.2) | 44.0 (7.7) | 46.2 (6.1) | <.001 |

¹ Persistent CRCD is defined as 3.7+ points decline on the FACT-Cog Perceived Cognitive Impairment (PCI) scale from pre-systemic therapy baseline to 12 months, with persistence to 24 months.

² p-values reflect overall comparisons among groups.

³ State-Trait Anxiety Inventory scores range from 20 to 80; higher scores reflect more anxiety.

⁴ FACT-Fatigue Subscale scores range from 0 to 52; higher scores reflect less fatigue.

AJCC=American Joint Committee on Cancer; BCS=Breast Conserving Surgery; RT=radiation therapy; ER=Estrogen Receptor; HER2=human epidermal growth factor receptor 2; CES-D=Center for Epidemiologic Studies Depression Scale; STAI = State Trait Anxiety Inventory, state version; FACT-F = Functional Assessment of Cancer Therapy – Fatigue Subscale; SD=standard deviation; CRCD=cancer-related cognitive decline

Machine Learning Classification of Persistent CRCD

We compared performance across the five approaches (the four machine learning methods plus the BVS benchmark) while considering their relative strengths and weaknesses (Supplementary Figures 1, 2 and Supplementary Table 4). LASSO and Elasticnet performed well, selected variables consistent with results from two-sample t-tests, and yielded clinically interpretable and quantifiable coefficients. Between the two, LASSO retained a smaller subset of variables among collinear predictors while preserving similar predictive performance. We considered this an important strength given the highly intercorrelated neurocognitive outcomes, so we focused on the LASSO findings. Among the less favorable results, RF did not separate the groups as well as the other methods, and SGB had better AUCs than the other approaches but raised concern for over-fitting, retaining on average >50 influential predictors. BVS also showed good AUCs, but it was included only as a benchmark and was not subjected to cross-validation.
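The contrast between LASSO and Elasticnet on collinear predictors can be seen in a toy example. The sketch below is an assumption-laden illustration, not the study's model: it uses squared-error loss instead of the logistic loss to keep the arithmetic transparent, the data are hypothetical, and `coordinate_descent` is a name introduced here for cyclic coordinate descent with soft-thresholding. With two identical predictors, the LASSO puts all the weight on one of them (which one depends on the update order, because the LASSO solution is not unique for exact duplicates), while the ridge part of the elastic net splits the weight evenly.

```python
from typing import List


def soft_threshold(z: float, gamma: float) -> float:
    """S(z, gamma) = sign(z) * max(|z| - gamma, 0)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def coordinate_descent(X: List[List[float]], y: List[float], lam: float,
                       alpha: float, sweeps: int = 500) -> List[float]:
    """Squared-error elastic net by cyclic coordinate descent (alpha=1 is the LASSO).

    Minimizes (1/(2n))||y - X b||^2 + lam * (alpha*|b|_1 + (1-alpha)/2*|b|_2^2),
    assuming every column of X satisfies mean(x_j**2) == 1.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # y - X @ beta, with beta starting at zero
    for _ in range(sweeps):
        for j in range(p):
            # Correlation of column j with the partial residual (excluding j).
            z = sum(X[i][j] * residual[i] for i in range(n)) / n + beta[j]
            new = soft_threshold(z, lam * alpha) / (1.0 + lam * (1.0 - alpha))
            delta = new - beta[j]
            if delta != 0.0:
                for i in range(n):
                    residual[i] -= X[i][j] * delta
                beta[j] = new
    return beta


x = [1.0, -1.0, 1.0, -1.0]
X = [[v, v] for v in x]  # two perfectly collinear (identical) predictors
y = [2.0 * v for v in x]
lasso = coordinate_descent(X, y, lam=0.5, alpha=1.0)
enet = coordinate_descent(X, y, lam=0.5, alpha=0.5)
print(lasso)  # [1.5, 0.0]
print(enet)   # both coefficients close to 7/9
```

This is also why a predictor missing from a LASSO model is not necessarily irrelevant: a correlated partner may have absorbed its weight.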

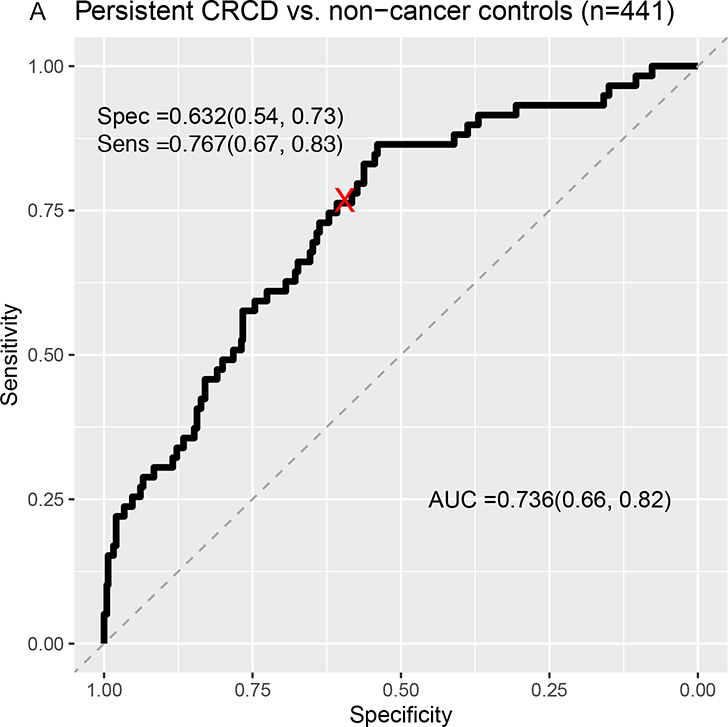

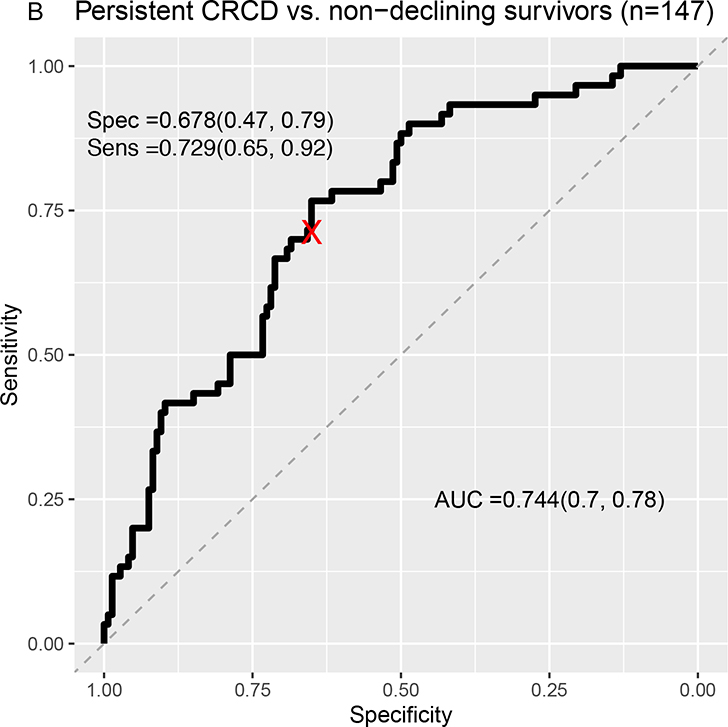

Classification separating survivors with persistent CRCD from non-cancer controls yielded an area under the curve (AUC) of .74 (LASSO sensitivity=.77; specificity=.63) (Figure 3A). There was similar separation of survivors with persistent CRCD from non-declining survivors (AUC=.74, LASSO sensitivity=.73; specificity=.68) (Figure 3B). The Elasticnet, BVS, and SGB analyses had better AUCs than those of LASSO, while RF did not show good separation (Supplementary Figures 1, 2 and Supplementary Table 4).

Figure 3:

Receiver Operating Characteristic (ROC) Curves From LASSO Machine Learning Models Classifying Persistent CRCD Using Baseline to 12-Month Change Scores.

A=Persistent CRCD (N=60) vs. Non-cancer controls (n=441); B=Persistent CRCD vs. Non-declining survivors (n=147).

The area under the ROC curve (AUC) and its 95% confidence interval (CI), sensitivity and its 95% CI, specificity and its 95% CI are presented. Rectangular 95% confidence regions for the ROC curve are partially displayed.

Persistent CRCD is defined as 3.7+ points decline on the FACT-Cog Perceived Cognitive Impairment (PCI) scale from pre-systemic therapy baseline to 12 months, with persistence to 24 months.

Several variables were consistently informative in classifying persistent self-reported CRCD from both comparison groups, including declines in Category Fluency, NAB Digit Span, NAB Driving Scenes, components of list learning tasks mainly representing learning efficiency, and the TIADL functional task (Table 3). Fatigue was informative in separating groups but only in models distinguishing persistent CRCD from controls; depression and anxiety did not contribute to separating the groups. Other machine learning variable selection approaches such as Elasticnet and BVS yielded similar top-ranked variables, including Category Fluency and Driving Scenes, while SGB and RF tended to select completion time measures across different tasks (See Supplementary Table 5).

Additional analyses using baseline-to-24-month change scores showed reduced but fair AUCs, especially in the model comparing persistent CRCD to controls (Supplementary Figure 3 and Supplementary Table 6). Similar tests emerged as influential in that model, including learning efficiency/accuracy measures and speed and accuracy scores on measures of daily functioning.

Discussion

This study is the first that we are aware of to apply machine learning to characterize clinically meaningful persistent cognitive declines reported by older breast cancer survivors. One-third of older breast cancer survivors reported clinically meaningful cognitive decline from pre-systemic therapy to twelve months later, and two-thirds of those reassessed at 24 months went on to exhibit persistent cognitive decline. Treatment exposure (i.e., chemotherapy with or without hormone therapy, or hormone therapy alone) was comparable between survivors with self-reported cognitive decline and survivors who did not report decline. LASSO models distinguished older breast cancer survivors with persistent cognitive decline from non-cancer controls and from non-declining survivors with moderate accuracy (AUCs of about 0.74) on the basis of a constellation of small neurocognitive declines from baseline to twelve months. Increased fatigue in survivors with persistent cognitive decline contributed to distinguishing them from controls, but depression and anxiety symptoms did not meaningfully contribute to the models. This is notable because it is commonly believed that self-reported cognitive problems are primarily driven by mood symptoms, and our data do not support this belief. It is not surprising that fatigue is associated with cognitive changes to some degree, as fatigue has long been known to affect cognitive performance even in non-clinical samples.38 Taken together, these exploratory results suggest that self-reported cognitive problems could signal subtle declines on some neurocognitive tests, and they contribute to a better understanding of cancer-related cognitive decline.

Our findings have important clinical considerations for older breast cancer survivors. First, similar to other studies of older survivors,39 self-reported cognitive decline was detected in a sizable number of older survivors, was not specific to a treatment type, and did not resolve for most of those survivors by 24 months. However, other investigations with largely younger survivors have found that functional domains including cognition tend to return to pre-cancer levels for most by twelve months.31,40–42 One explanation of the different age-based findings is that recovery after decline may be less likely in older vs. younger breast cancer survivors due to aging and losses in cognitive reserve and compensatory mechanisms.

Second, our machine learning approach extends the use of these methods from other neurocognitive disorders15,16,43 and provides several new insights into cancer-related cognitive decline that will require further investigation. LASSO models illustrated that self-reported cognitive problems could be related to the cumulative effects of multiple small neurocognitive deficits at the test level, and not to anxiety or depression. These findings may explain why prior studies, often using aggregated domain-level scores, had difficulty reconciling subjective and objective cognitive measures.6 Further, the tests that discriminated persistent CRCD reflected specific areas consistent with cognitive aging: learning efficiency, verbal fluency, attention, and task processing speed.44,45 Finally, while there was broad overlap in the types of tests identifying CRCD at twelve and 24 months, more daily functioning measures emerged by 24 months, suggesting that the types of cognitive difficulties experienced or noticed by older patients with CRCD may evolve over time.

With a limited sample size and a large number of predictors, as in our case, it is difficult to draw reliable and reproducible conclusions. With this in mind, we optimized models through five-fold CV and repeated the model-building process across LOOCV samples to evaluate whether the predictors selected in each optimized model were similar. BVS yielded relatively higher AUCs than LASSO and Elasticnet; this is not surprising because the five-fold CV within LOOCV samples was not implemented for BVS, so BVS results may generalize poorly (Supplementary Figures 1, 2, and Supplementary Table 4). In the presence of high multicollinearity, others have reported that stepwise selection, such as forward or backward selection, can produce inconsistent results if the model does not go through cross-validation, and that variance partitioning analyses may be unable to identify unique sources of variation.46 We do not describe the SGB or random forest results in detail because they were concerning for over-fitting and did not provide readily interpretable or explainable results.47
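The resampling scheme described above (LOOCV on the outside, five-fold CV on the remaining cases for tuning) and the selection-frequency ranking can be sketched as follows. This is a hypothetical outline, not the authors' implementation: the function names are introduced here, folds are formed by simple random shuffling (a real implementation might stratify folds by outcome), and the actual LASSO fitting step is left out. The key property is that the held-out participant never enters the folds used to choose the penalty.

```python
import random
from collections import Counter
from typing import Iterator, List, Sequence, Set, Tuple


def loocv_with_inner_folds(n: int, inner_k: int = 5,
                           seed: int = 0) -> Iterator[Tuple[int, List[List[int]]]]:
    """Yield (held_out, inner_folds) for each LOOCV iteration.

    inner_folds partitions the remaining n - 1 indices into inner_k folds used
    only for tuning, so the held-out case never influences the penalty choice.
    """
    rng = random.Random(seed)
    for held_out in range(n):
        train = [i for i in range(n) if i != held_out]
        rng.shuffle(train)
        folds = [train[f::inner_k] for f in range(inner_k)]
        yield held_out, folds


def selection_frequency(selected_sets: Sequence[Set[str]]) -> List[Tuple[str, int]]:
    """Rank predictors by how many LOOCV fits selected them (ties broken by name)."""
    counts = Counter(name for s in selected_sets for name in s)
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical predictor sets selected in three LOOCV fits.
fits = [{"fluency", "digit_span"}, {"fluency"}, {"fluency", "trails"}]
print(selection_frequency(fits))
```

Predictors selected in nearly every LOOCV fit are the stable ones; predictors that appear only occasionally are more likely to reflect sampling noise among correlated measures.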

Another potential implication of our results is that a “fine-toothed comb” approach may be needed to detect the subtle neurocognitive deficits in CRCD and other related cognitive disorders.48–50 This idea is supported by prior studies that have shown an association between learning inefficiencies and self-reported memory lapses in cancer survivors only after careful scrutiny of memory test performance.51 In fact, some of the influential tests that emerged in our analyses were so-called “process scores” reflecting errors in execution of the task rather than achievement, a focus in recent studies of methods identifying preclinical Alzheimer’s disease.49

Overall, our results suggest that clinicians should look for cognitive symptoms in older cancer survivors. With replication, our findings would support screening for self-reported cognitive problems and referring survivors with difficulties or declines to specialists for neuropsychological evaluation and potential interventions. This suggestion supports expanding geriatric assessments, traditionally used for treatment decision-making, to include cognitive screening to guide survivorship care.52,53 Several short, easy-to-administer self-report instruments are recommended for screening older survivors for cancer-related cognitive decline during survivorship visits, including the FACT-Cog PCI20 (18 items) and the PROMIS 8-item measure.5,55 One benefit of “working up” survivors with cognitive complaints is that there is growing evidence supporting intervention strategies such as walking, chair exercise, yoga, and other mindfulness approaches, strategies to improve sleep, and cognitive rehabilitation and training.40,55–59 These interventions may be especially useful in older survivors with CRCD who also have coexisting age-related illnesses like diabetes or cardiovascular disease that diminish organ system and cognitive reserve.60

This study applied machine learning to data from a national cohort to provide insights into the study and management of cognitive problems. However, several caveats should be considered in evaluating our results. First, our sample was highly educated and had limited racial/ethnic diversity. It will be critical to extend our results to other settings and populations. Second, additional analyses of models using neurocognitive and self-reported changes from baseline to 24 months yielded fair but weaker distinguishing power than the models of decline from baseline to twelve months. However, there was broad overlap in the types of neurocognitive tests that were most influential in the baseline-to-twelve-month and baseline-to-24-month models. Third, while we included a comprehensive set of neurocognitive measures, it will be important to replicate our results using an array of different tests, including more granular measures (e.g., continuous performance tests) that may be more sensitive to the types of subtle declines we observed across multiple domains.61,62 Fourth, our results rely on leave-one-out validation sets, which may inflate machine learning performance; no other large datasets of older breast cancer survivors were available for external validation. It will be important to perform external validation using data from an independent study as the field grows. Finally, we chose a machine learning approach that selects one best-fitting variable from any set of highly correlated variables (e.g., list-learning trials in a memory test), so variables that are absent from models are not necessarily irrelevant.

Overall, our findings suggest that patient-reported CRCD is common in older breast cancer survivors, can persist over time, and is reflected in a constellation of subtle neurocognitive changes following initiation of adjuvant treatment. While more research is needed to continue characterizing CRCD, clinical attention to patient-reported symptoms in older breast cancer survivors is warranted.

Supplementary Material

Table 2:

List of Top 10 Highest-Ranked Variables in Each LASSO Model and Baseline-to-12-Month Change Scores

| Variable | Model A: LASSO rank | Model A: Non-cancer controls (n=441), mean (SD) | Model A: Persistent CRCD¹ (n=60), mean (SD) | Model B: LASSO rank | Model B: Non-declining survivors (n=147), mean (SD) | Model B: Persistent CRCD (n=60), mean (SD) |
|---|---|---|---|---|---|---|
| Category Fluency Total | 1 | 0.07 (4.61) | −1.62 (4.71) | 1 | 0.38 (4.55) | −1.62 (4.71) |
| NAB List Learning Trial 3 | 2 | 0.26 (1.90) | −0.45 (1.95) | 5 | 0.18 (1.82) | −0.45 (1.95) |
| NAB Driving Scenes | 3 | −1.79 (7.05) | −3.88 (6.88) | 3 | −1.16 (7.61) | −3.88 (6.88) |
| NAB Digit Span Forward Total | 7 | 0.21 (1.99) | −0.53 (1.55) | 2 | 0.34 (1.91) | −0.53 (1.55) |
| PCI Score Baseline | 9 | 61.08 (21.39) | 68.20 (14.87) | 4 | 61.41 (21.41) | 68.20 (14.87) |
| TIADL Ingredients Can 2 Time² | 10 | 0.02 (12.41) | 2.50 (9.07) | 8 | −0.54 (13.40) | 2.50 (9.07) |
| FACIT-Fatigue | 4 | 1.26 (7.99) | −2.02 (8.90) | - | - | - |
| NAB List A Immediate Raw Score | 5 | 1.09 (4.57) | −0.30 (3.90) | - | - | - |
| COWAT A Intrusions² | 6 | −0.10 (0.74) | 0.75 (6.80) | - | - | - |
| NAB List Learning Long Delay Forced Choice False Alarms² | 8 | −0.55 (2.68) | 0.10 (2.30) | - | - | - |
| Trail Making Test B Time² | - | - | - | 6 | −7.03 (31.57) | 0.96 (33.54) |
| NAB List Learning Long Delay Forced Choice Hits | - | - | - | 7 | 0.13 (1.45) | 0.40 (1.37) |
| NAB Figure Drawing Copy | - | - | - | 9 | −0.49 (4.75) | 0.17 (4.43) |
| Category Fluency Perseverations² | - | - | - | 10 | 0.06 (1.23) | −0.17 (1.38) |

Dashes mark tests that were not top ranked for that model.

¹ Persistent CRCD is defined as 3.7+ points decline on the FACT-Cog Perceived Cognitive Impairment (PCI) scale from pre-systemic therapy baseline to 12 months, with persistence to 24 months.

² Higher scores indicate worse performance.

NAB = Neuropsychological Assessment Battery; PCI = Perceived Cognitive Impairment; TIADL = Timed Instrumental Activities of Daily Living; FACIT = Functional Assessment of Chronic Illness Therapy; COWAT = Controlled Oral Word Association Test; CRCD = cancer-related cognitive decline

Acknowledgements

We would like to thank the women in the TLC study for sharing their time and experiences; without their generosity, this study would not have been possible. We are also indebted to Sherri Stahl, Naomi Greenwood, Margery London, and Sue Winarsky, patient advocates with the Georgetown Breast Cancer Advocates, for their insights and suggestions on study design and methods. We thank the TLC study staff who contributed by ascertaining, enrolling, and interviewing women.

Funding statement:

This research was supported by the National Cancer Institute at the National Institutes of Health grants R01CA129769 and R35CA197289 to JM and a National Institute on Aging award R01AG068193 to JM and AJS. This study was also supported in part by the National Cancer Institute under Award K08CA241337 to KVD, and the National Cancer Institute at the National Institutes of Health grant P30CA51008 to Georgetown Lombardi Comprehensive Cancer Center for support of the Biostatistics and Bioinformatics Resource and the Non-Therapeutic Shared Resource. The work of AJS and BCM was supported in part by the National Institute on Aging at the National Institutes of Health grants P30AG010133, P30AG072976, and R01AG19771 and the National Cancer Institute at the National Institutes of Health grant R01CA244673. TAA was supported in part by the National Cancer Institute at the National Institutes of Health grants R01CA172119 and P30CA008748. JCR was supported by the National Cancer Institute at the National Institutes of Health grant R01CA172119. The work of JC was supported in part by the American Cancer Society Research Scholars grant 128660-RSG-15-187-01-PCSM and the National Cancer Institute at the National Institutes of Health grant R01CA237535. HJC was supported in part by the National Institute on Aging at the National Institutes of Health grant P30AG028716 for the Duke Pepper Center. KER is partially supported by the National Institute on Aging at the National Institutes of Health grant K01AG065485 and the Cousins Center for Psychoneuroimmunology. TNB was supported in part by the National Cancer Institute grant K01CA212056. ZMN is supported in part by the National Institutes of Health grant K12HD001441. SKP was supported in part by the American Cancer Society Research Scholars grant RSG-17-023-01-CPPB. The work of Paul Jacobsen was done while he was at Moffitt Cancer Center.

Footnotes

Declaration of Competing Interests

The authors have no competing interests.

Disclaimers

The views expressed do not represent the official position of the National Cancer Institute or any other funding agency.

References

- 1. Buchanan ND, Dasari S, Rodriguez JL, et al. Post-treatment neurocognition and psychosocial care among breast cancer survivors. Am J Prev Med. 2015;49(6):S498–S508. doi: 10.1016/J.AMEPRE.2015.08.013

- 2. Lange M, Licaj I, Clarisse B, et al. Cognitive complaints in cancer survivors and expectations for support: Results from a web-based survey. Cancer Med. 2019;8(5):2654–2663. doi: 10.1002/cam4.2069

- 3. Smidt K, Mackenzie L, Dhillon H, et al. The perceptions of Australian oncologists about cognitive changes in cancer survivors. Support Care Cancer. doi: 10.1007/s00520-016-3315-y

- 4. Wefel JS, Vardy J, Ahles T, Schagen SB. International Cognition and Cancer Task Force recommendations to harmonise studies of cognitive function in patients with cancer. Lancet Oncol. 2011;12(7):703–708. doi: 10.1016/S1470-2045(10)70294-1

- 5. Henneghan AM, Van Dyk K, Kaufmann T, et al. Measuring self-reported cancer-related cognitive impairment: recommendations from the Cancer Neuroscience Initiative Working Group. J Natl Cancer Inst. February 2021. doi: 10.1093/jnci/djab027

- 6. Costa DSJ, Fardell JE. Why are objective and perceived cognitive function weakly correlated in patients with cancer? J Clin Oncol. 2019;37(14):JCO.18.02363. doi: 10.1200/JCO.18.02363

- 7. Hutchinson AD, Hosking JR, Kichenadasse G, Mattiske JK, Wilson C. Objective and subjective cognitive impairment following chemotherapy for cancer: A systematic review. Cancer Treat Rev. 2012;38(7):926–934. doi: 10.1016/j.ctrv.2012.05.002

- 8. Pullens MJJ, De Vries J, Roukema JA. Subjective cognitive dysfunction in breast cancer patients: a systematic review. Psycho-Oncology. 2010;19(11):1127–1138.

- 9. Amariglio RE, Donohue MC, Marshall GA, et al. Tracking early decline in cognitive function in older individuals at risk for Alzheimer disease dementia: The Alzheimer’s Disease Cooperative Study Cognitive Function Instrument. JAMA Neurol. 2015;72(4):446–454. doi: 10.1001/jamaneurol.2014.3375

- 10. Ryan MM, Grill JD, Gillen DL. Participant and study partner prediction and identification of cognitive impairment in preclinical Alzheimer’s disease: Study partner vs. participant accuracy. Alzheimer’s Res Ther. 2019;11(1):1–8. doi: 10.1186/s13195-019-0539-3

- 11. Tomaszewski Farias S, Lau K, Harvey D, Denny KG, Barba C, Mefford AN. Early functional limitations in cognitively normal older adults predict diagnostic conversion to Mild Cognitive Impairment. J Am Geriatr Soc. 2017;65:1152–1158. doi: 10.1111/jgs.14835

- 12. Ono M, Ogilvie JM, Wilson J, et al. A meta-analysis of cognitive impairment and decline associated with adjuvant chemotherapy in women with breast cancer. Front Oncol. 2015;5:59. doi: 10.3389/fonc.2015.00059

- 13. Jim HSL, Phillips KM, Chait S, et al. Meta-analysis of cognitive functioning in breast cancer survivors previously treated with standard-dose chemotherapy. J Clin Oncol. 2012;30(29):3578–3587. doi: 10.1200/JCO.2011.39.5640

- 14. Jordan MI, Mitchell TM. Machine learning: Trends, perspectives, and prospects. Science. 2015;349(6245):253–260. doi: 10.1126/science.aac4520

- 15. Casanova R, Saldana S, Lutz MW, Plassman BL, Kuchibhatla M, Hayden KM. Investigating predictors of cognitive decline using machine learning. J Gerontol B Psychol Sci Soc Sci. 2020;75(4):733–742. doi: 10.1093/geronb/gby054

- 16. Ezzati A, Zammit AR, Harvey DJ, Habeck C, Hall CB, Lipton RB. Optimizing machine learning methods to improve predictive models of Alzheimer’s Disease. J Alzheimer’s Dis. 2019;71(3):1027–1036. doi: 10.3233/JAD-190262

- 17. Mandelblatt JS, Small BJ, Luta G, et al. Cancer-related cognitive outcomes among older breast cancer survivors in the Thinking and Living with Cancer Study. J Clin Oncol. 2018;36(32):3211–3222. doi: 10.1200/JCO.18.00140

- 18. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–198. doi: 10.1016/0022-3956(75)90026-6

- 19. Wilkinson GS, Robertson GJ. Wide Range Achievement Test (WRAT4). Lutz, FL: Psychological Assessment Resources; 2006.

- 20. Wagner LI, Sweet J, Butt Z, Lai J, Cella D. Measuring Patient Self-Reported Cognitive Function: Development of the Functional Assessment of Cancer Therapy-Cognitive Function Instrument. J Support Oncol. 2009;7(6):W32–39.

- 21. Wagner LI, Lai JS, Cella D, Sweet J, Forrestal S. Chemotherapy-related cognitive deficits: development of the FACT-Cog instrument. Ann Behav Med. 2004;27:S10.

- 22. Cheung YT, Foo YL, Shwe M, et al. Minimal clinically important difference (MCID) for the functional assessment of cancer therapy: Cognitive function (FACT-Cog) in breast cancer patients. J Clin Epidemiol. 2014;67(7):811–820. doi: 10.1016/j.jclinepi.2013.12.011

- 23. Janelsins MC, Heckler CE, Peppone LJ, et al. Cognitive complaints in survivors of breast cancer after chemotherapy compared with age-matched controls: An analysis from a nationwide, multicenter, prospective longitudinal study. J Clin Oncol. 2017;35:506–514. doi: 10.1200/JCO.2016.68.5826

- 24. Stern RA, White T. NAB, Neuropsychological Assessment Battery: Administration, scoring, and interpretation manual. 2003.

- 25. Benton AL. Neuropsychological Assessment. Annu Rev Psychol. 1994;45:1–23.

- 26. Reitan R, Wolfson D. The Halstead-Reitan Neuropsychological Test Battery. Tucson, AZ: Neuropsychology Press; 1985.

- 27. Owsley C, Sloane M, McGwin G, Ball K. Timed instrumental activities of daily living tasks: Relationship to cognitive function and everyday performance assessments in older adults. Gerontology. 2002;48(4):254–265. doi: 10.1159/000058360

- 28. Radloff LS. The CES-D Scale. Appl Psychol Meas. 1977;1(3):385–401. doi: 10.1177/014662167700100306

- 29. Renzi DA. State-Trait Anxiety Inventory. Meas Eval Couns Dev. 1985;18(2):86–89. doi: 10.1080/07481756.1985.12022795

- 30. Yellen SB, Cella DF, Webster K, Blendowski C, Kaplan E. Measuring fatigue and other anemia-related symptoms with the Functional Assessment of Cancer Therapy (FACT) measurement system. J Pain Symptom Manage. 1997;13(2):63–74. doi: 10.1016/S0885-3924(96)00274-6

- 31. Collins B, MacKenzie J, Tasca GA, Scherling C, Smith A. Persistent cognitive changes in breast cancer patients 1 year following completion of chemotherapy. J Int Neuropsychol Soc. 2014;20:370–379. doi: 10.1017/S1355617713001215

- 32. Ibrahim JG, Molenberghs G. Missing data methods in longitudinal studies: A review. Test. 2009;18(1):1–43. doi: 10.1007/s11749-009-0138-x

- 33. Beretta L, Santaniello A. Nearest neighbor imputation algorithms: A critical evaluation. BMC Med Inform Decis Mak. 2016;16(Suppl 3). doi: 10.1186/s12911-016-0318-z

- 34. Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B. 1996;58(1):267–288. doi: 10.1111/j.2517-6161.1996.tb02080.x

- 35. Natekin A, Knoll A, Michel O. Gradient boosting machines, a tutorial. Front Neurorobot. 2013;7:21. doi: 10.3389/fnbot.2013.00021

- 36. Liaw A, Wiener M. randomForest: Breiman and Cutler’s Random Forests for Classification and Regression. R package. 2018. doi: 10.1023/A:1010933404324

- 37. Kuhn M. The caret Package. http://topepo.github.io/caret/index.html. Accessed June 17, 2020.

- 38. Marcora SM, Staiano W, Manning V. Mental fatigue impairs physical performance in humans. J Appl Physiol. 2009;106(3):857–864. doi: 10.1152/japplphysiol.91324.2008

- 39. Mandelblatt JS, Clapp JD, Luta G, et al. Long-term trajectories of self-reported cognitive function in a cohort of older survivors of breast cancer: CALGB 369901 (Alliance). Cancer. 2016;122(22):3555–3563. doi: 10.1002/cncr.30208

- 40. Lange M, Joly F, Vardy J, et al. Cancer-Related Cognitive Impairment: An update on state of the art, detection, and management strategies in cancer survivors. Ann Oncol Off J Eur Soc Med Oncol. 2019:1–16. doi: 10.1093/annonc/mdz410

- 41. Ganz PA, Kwan L, Stanton AL, Bower JE, Belin TR. Physical and psychosocial recovery in the year after primary treatment of breast cancer. J Clin Oncol. 2011;29:1101–1109. doi: 10.1200/JCO.2010.28.8043

- 42. Wefel JS, Kesler SR, Noll KR, Schagen SB. Clinical characteristics, pathophysiology, and management of noncentral nervous system cancer-related cognitive impairment in adults. CA Cancer J Clin. 2015;65(2):123–138. doi: 10.3322/caac.21258

- 43. Ramsdale E, Snyder E, Culakova E, et al. An introduction to machine learning for clinicians: How can machine learning augment knowledge in geriatric oncology? J Geriatr Oncol. 2021;12(8):1159–1163. doi: 10.1016/j.jgo.2021.03.012

- 44. Park DC, Reuter-Lorenz P. The adaptive brain: aging and neurocognitive scaffolding. Annu Rev Psychol. 2009;60:173. doi: 10.1146/annurev.psych.59.103006.093656

- 45. Salthouse TA. Aging and measures of processing speed. Biol Psychol. 2000;54(1–3):35–54. doi: 10.1016/S0301-0511(00)00052-1

- 46. Morozova O, Levina O, Uusküla A, Heimer R. Comparison of subset selection methods in linear regression in the context of health-related quality of life and substance abuse in Russia. BMC Med Res Methodol. 2015;15:71. doi: 10.1186/s12874-015-0066-2

- 47. Petch J, Di S, Nelson W. Opening the black box: The promise and limitations of explainable machine learning in cardiology. Can J Cardiol. 2022;38:204–213. doi: 10.1016/j.cjca.2021.09.004

- 48. Kiselica AM. Empirically defining the preclinical stages of the Alzheimer’s continuum in the Alzheimer’s Disease Neuroimaging Initiative. Psychogeriatrics. 2021;21(4):491–502. doi: 10.1111/psyg.12697

- 49. Osuna J, Thomas K, Edmonds E, et al. Subtle cognitive decline predicts progression to Mild Cognitive Impairment above and beyond Alzheimer’s Disease risk factors. Arch Clin Neuropsychol. 2019;34(6):846. doi: 10.1093/arclin/acz035.14

- 50. Thomas KR, Bangen KJ, Weigand AJ, et al. Objective subtle cognitive difficulties predict future amyloid accumulation and neurodegeneration. Neurology. 2020;94(4):e397–e406. doi: 10.1212/WNL.0000000000008838

- 51. Gaynor AM, Ahles TA, Ryan E, et al. Initial encoding deficits with intact memory retention in older long-term breast cancer survivors. J Cancer Surviv. 2021. doi: 10.1007/s11764-021-01086-8

- 52. Mohile SG, Dale W, Somerfield MR, et al. Practical assessment and management of vulnerabilities in older patients receiving chemotherapy: ASCO guideline for geriatric oncology. J Clin Oncol. 2018;36(22):2326–2347. doi: 10.1200/JCO.2018.78.8687

- 53. Pergolotti M, Battisti NML, Padgett L, et al. Embracing the complexity: Older adults with cancer-related cognitive decline—A Young International Society of Geriatric Oncology position paper. J Geriatr Oncol. 2020;11(2). doi: 10.1016/j.jgo.2019.09.002

- 54. PROMIS Instrument Development and Validation Scientific Standards Version 2.0. 2013.

- 55. Zeng Y, Dong J, Huang M, et al. Nonpharmacological interventions for cancer-related cognitive impairment in adult cancer patients: A network meta-analysis. Int J Nurs Stud. 2020;104:103514. doi: 10.1016/j.ijnurstu.2019.103514

- 56. Mustian KM, Sprod LK, Janelsins M, Peppone LJ, Mohile S. Exercise recommendations for cancer-related fatigue, cognitive impairment, sleep problems, depression, pain, anxiety, and physical dysfunction: A Review. Oncol Hematol Rev. 2012;8(2):81–88.

- 57. Treanor CJ, Mcmenamin UC, O’Neill RF, et al. Non-pharmacological interventions for cognitive impairment due to systemic cancer treatment. Cochrane Database Syst Rev. 2016;2016(8):CD011325. doi: 10.1002/14651858.CD011325.pub2

- 58. Campbell KL, Zadravec K, Bland KA, Chesley E, Wolf F, Janelsins MC. The effect of exercise on cancer-related cognitive impairment and applications for physical therapy: systematic review of randomized controlled trials. Phys Ther. 2020;100(3):523–542. doi: 10.1093/ptj/pzz090

- 59. Carroll JE, Bower JE, Ganz PA. Cancer-related accelerated ageing and biobehavioural modifiers: a framework for research and clinical care. Nat Rev Clin Oncol. 2022;19(3):173–187. doi: 10.1038/s41571-021-00580-3

- 60. Magnuson A, Ahles T, Chen BT, Mandelblatt J, Janelsins MC. Cognitive function in older adults with cancer: assessment, management, and research opportunities. J Clin Oncol. May 2021:JCO2100239. doi: 10.1200/JCO.21.00239

- 61. Bernstein LJ, Catton PA, Tannock IF. Intra-individual variability in women with breast cancer. J Int Neuropsychol Soc. 2014;20(4):380–390. doi: 10.1017/S1355617714000125

- 62. Li Y. Bayesian hierarchical variance components modeling goes beyond conventional methods in elucidating greater intraindividual variabilities in vigilance and sustained attention in breast cancer survivors. In: International Cognition and Cancer Task Force Conference. Denver; 2020.
