Abstract

Purpose of review:

We comprehensively examined recent advancements in developing novel cognitive measures that could significantly enhance detection of outcome changes in Alzheimer’s disease (AD) clinical trials. Previously established measures were largely limited in their ability to detect subtle cognitive decline in preclinical stages of AD, particularly because of weak psychometric properties (including practice effects and ceiling effects) and the requirement of in-person visits, which impacted ascertainment.

Recent findings:

We present novel cognitive measures that were designed to exhibit reduced practice effects and stronger correlations with AD biomarkers. Additionally, we summarize recent efforts to develop remote testing protocols that aim to overcome the limitations and inconvenience of in-person testing, as well as digital phenotyping, which analyzes subtle forms of digital behavior indicative of cognitive phenotypes. We discuss each measure’s prognostic accuracy and potential utility in AD research while also commenting on its limitations. We also describe our study, the Development of Novel Measures for Alzheimer’s Disease Prevention Trials (NoMAD), which employs a parallel group design in which novel measures and established measures are compared in a clinical trial armature.

Summary:

Overall, we believe that these recent developments offer promising improvements in accurately detecting clinical and preclinical cognitive changes in the AD spectrum; however, further validation of their psychometric properties and diagnostic accuracy is warranted before these novel measures can be reliably implemented in AD clinical trials.

Keywords: Preclinical Alzheimer’s Disease, Cognition, Neuropsychology, Testing, Biomarkers

INTRODUCTION

Alzheimer’s disease (AD) is the most common cause of dementia, affecting over 5.8 million people in the US [1]. The neuropathology of AD – such as amyloid-beta (Aβ) plaques and tau tangles – can begin years to decades before clinical symptoms are apparent, although subtle cognitive decline can be present [2]. This preclinical phase has garnered much attention as a window for possible interventions, and yet, our ability to detect subtle cognitive decrements in preclinical AD is limited. Indeed, many of the gold-standard cognitive and functional tests in AD research were originally developed for the clinical population who already show significant cognitive changes. There is a pressing need for improved, validated testing in preclinical AD. This article will describe the issues with current gold-standard tests and various attempts at developing novel neuropsychological measures. We also illustrate methodological limitations of validating novel measures and present ways to address these issues.

LIMITATIONS OF ESTABLISHED TESTS

The need for novel measures of cognition comes in part from the significant practice effects observed in serial assessment with well-established tests. Practice effects are improvements in test performance due to repeated exposure to the test materials and paradigm. They are problematic first because they can be mistaken for improvements in cognition. Moreover, such gains in performance on a specific test do not transfer to other tasks or real-world scenarios. Consequently, tests impacted by practice effects may begin to measure test-taking skill rather than the cognitive capacity they are intended to assess. Further, in a clinical trial setting, improvements due to practice effects may obscure subtle cognitive decline in placebo groups, making it more difficult to identify improvements in an active treatment group. It is even possible for a practice effect to be mistaken for a treatment signal, as has been seen in the schizophrenia literature [3]. Goldberg et al. [4] analyzed data from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and found that cognitively normal older adults exhibited low to medium practice effects on widely used tests over the course of 6–12 months (effect sizes (ES) of 0.20–0.30). Other studies reported practice effects of similar magnitude [5,6]. Though seemingly modest, practice effects of this magnitude could obscure years of subtle cognitive decline in preclinical populations [4]. Many established cognitive tests do not apply findings from the cognitive science literature on how to reduce practice effects, and they lack approaches that reduce memorization of responses or strategies.
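To make the arithmetic behind this concern concrete, the following minimal Python sketch computes a standardized practice effect from two testing occasions and estimates how many years of steady decline a one-time practice gain could offset. The function names, the scores, and the 0.10 SD per year decline rate are illustrative assumptions, not values or methods taken from the cited studies.

```python
from statistics import mean, stdev


def practice_effect_size(baseline, retest):
    """Standardized mean change: retest gain divided by the baseline SD."""
    return (mean(retest) - mean(baseline)) / stdev(baseline)


def years_of_decline_masked(practice_gain_sd, decline_sd_per_year):
    """Years of steady decline that a one-time practice gain would offset."""
    if decline_sd_per_year <= 0:
        raise ValueError("decline_sd_per_year must be positive")
    return practice_gain_sd / decline_sd_per_year


# Hypothetical scores for five participants tested twice (not real data).
baseline = [20, 22, 25, 27, 30]
retest = [21, 23, 26, 28, 31]

es = practice_effect_size(baseline, retest)
print(f"practice effect size: {es:.2f}")

# Illustrative values only: a 0.25 SD practice gain against an assumed
# decline of 0.10 SD per year.
print(f"years masked: {years_of_decline_masked(0.25, 0.10):.1f}")
```

Under these assumed values, a practice gain in the range reported above would be enough to cancel out more than two years of decline on the same scale.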

Another weakness of established neuropsychological testing is the pronounced ceiling effects in healthy participants. Ceiling effects occur when most participants reach the maximum score on a test because its level of difficulty is low. Because ceiling effects reduce variability in the data, they prevent meaningful analysis. Ceiling effects are common in orientation questions (date, year, location, etc.) used in many cognitive tests, including the Mini-Mental State Examination (MMSE) and the Alzheimer’s Disease Assessment Scale-Cognitive Subscale (ADAS-Cog). In the ADNI data set, roughly 80% of healthy controls and 42% of individuals with mild cognitive impairment (MCI) score 10/10 on the MMSE orientation items [7]. Functional measurements, designed primarily for individuals with late MCI or AD, are also susceptible to ceiling effects; for instance, a majority of individuals in preclinical stages score zero (i.e., no functional impairment) on the Functional Activities Questionnaire (FAQ), a commonly used informant-based questionnaire [8]. These ceiling effects in neuropsychological and functional testing limit the measures’ ability to identify subtle cognitive or functional decline.
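A ceiling effect can be screened for by checking what share of a sample sits at the maximum score. The short Python sketch below shows one way to do this; the function name and the scores are hypothetical and are not drawn from ADNI.

```python
def ceiling_proportion(scores, max_score):
    """Fraction of participants scoring at (or above) the test maximum."""
    if not scores:
        raise ValueError("scores must not be empty")
    at_ceiling = sum(1 for s in scores if s >= max_score)
    return at_ceiling / len(scores)


# Hypothetical orientation scores (0-10) for ten participants, not real data.
orientation_scores = [10, 10, 9, 10, 10, 8, 10, 10, 10, 10]
print(ceiling_proportion(orientation_scores, max_score=10))
```

A high proportion signals that the measure has little room to register differences among the best-performing participants.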

Traditional tests also have relatively weak correlations with AD biomarkers. In cross-sectional studies, cognitive differences between Aβ+ and Aβ- individuals are typically small or undetectable using established tests [9–11]. In longitudinal studies, Aβ+ individuals decline faster than Aβ- individuals, but this deficit manifests only over the course of years. For instance, one study found that the difference in decline between Aβ+ and Aβ- groups was 0.07 to 0.15 z-scores per year [12]. Tau aggregation is thought to be more closely related to cognitive impairment than Aβ [13–15], but even less is known about the association between established measures and tau. Given that both tau PET and plasma p-tau217 may yield high predictability of preclinical and prodromal AD [16], future validation studies of neuropsychological tests should include tau measures to provide convergent evidence for validity.

In sum, there is an acute need for new neuropsychological tests that are supported by cognitive science literature to reduce practice effects and ceiling effects and improve sensitivity to AD biomarkers. In addition, these tests must retain good validity and test-retest reliability and must demonstrate usefulness in a clinical trial setting.

NOVEL MEASURES

Recent advances in neuropsychological test construction target the problematic psychometric properties of previously established measures.

Serial Assessments

Many new computerized assessments have been designed for serial administration. One such test is the Computerized Preclinical Alzheimer’s Cognitive Composite (cPACC). The cPACC is a web-based battery intended to be a digital equivalent of the original paper-and-pencil PACC, the current gold standard in preclinical AD testing. The PACC consists of 4 subtests and assesses orientation, episodic memory, working memory, and processing speed. A digital version of the PACC is desirable because it could standardize administration, eliminate the need for in-person assessment, and allow for easier data transfer and analysis. In the cPACC, episodic memory is tested by the Face Name Hobby Recall (a matching task with a delay component) and Grid Locations (a visual learning task). Two nonverbal tasks assess working memory: a symbol line task, in which participants recall the ordering of a string of symbols, and a visual patterns task, in which participants are shown an array of boxes and asked to recall the order in which boxes turned black. Speeded matching, a computerized analog of the WAIS-R digit symbol coding task, measures processing speed. A validation study [17] indicated that cPACC scores were moderately associated with the original PACC (r = .61). Participants who scored above and below 25 on the MMSE had significantly different scores across all measures, implying some sensitivity to cognitive changes. Nonetheless, the cPACC had weak test-retest reliability on some subtests (r = .36, r = .48, and r = .45 on symbol line, grid locations delayed recall, and visual patterns, respectively), and there were significant practice effects on episodic memory tasks (Cohen’s d = .49 and Cohen’s d = .61 on Grid Locations delayed recall and Face Name Hobby delayed recall, respectively). No alternate forms were available to compensate for such practice effects.
While the cPACC’s web-based administration may have some benefits, such as reducing the need for in-person testing, it also demonstrated problems with reliability and practice effects, and its correlation with the PACC was only moderate. Further studies are also needed to establish the cPACC’s association with AD biomarkers.
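Test-retest reliability of the kind reported above is commonly summarized as the correlation between scores from two administrations of the same test. The Python sketch below computes a Pearson correlation from first principles; the data are hypothetical, and the cited validation study may have used a different estimator.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between paired scores from two visits."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be paired and contain at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical subtest scores for the same five participants at two visits.
visit_1 = [12, 15, 9, 20, 17]
visit_2 = [14, 13, 12, 19, 18]
print(f"test-retest r = {pearson_r(visit_1, visit_2):.2f}")
```

A value near 1 means participants keep their rank order across visits; values such as those reported for some cPACC subtests indicate that much of the score variance is not stable.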

The CogState Brief Battery (CBB) is a commercially available, computerized measure designed to minimize cultural and language biases by using playing cards as stimuli. It consists of a simple reaction time task (Detection task), a choice reaction time task (Identification task), a one-back working memory task (One-Back task), and a continuous recognition visual learning task (Learning task). The CBB assesses attention/vigilance, processing speed, working memory, and visual learning, and uses alternate forms to reduce practice effects. The CBB demonstrated good validity across neuropsychiatric disorders, such as schizophrenia and dementia associated with HIV [18]. A more recent study [19] added the Face-Name Associative Memory Exam (FNAME) and the Mnemonic Similarity Task (MST), two tasks that recruit medial temporal regions [20,21], to create the Computerized Cognitive Composite (C3) specifically for use in AD and MCI. The C3 correlates with the PACC and Aβ status, though the PACC proved more sensitive to Aβ status than the C3. The C3 exhibits adequate test-retest reliability (ICC = .626) [22], but suffers from moderate practice effects (increases of .25 standard deviations between visits). The C3 has the advantage of standardized digital administration, a brief format (roughly 15 minutes), and reduced cultural bias [19,23], but it does not address other psychometric issues of established tests, such as practice effects and relatively low correlations with biomarkers.

The NIH Toolbox Cognitive Battery (NIHTB-CB) is another brief computerized battery. It consists of 7 subtests that assess attention and executive functions, language, processing speed, working memory, and episodic memory. It has been well validated across a wide age range in cognitively healthy people, showing expected age effects and good test-retest reliability [24,25]. The NIHTB-CB composite has a small practice effect (d = 0.29), comparable to Goldberg’s aforementioned finding for well-established tests (d = 0.25) [4]. A delayed recall trial (sometimes added to the NIHTB-CB to further tailor the assessment to AD-related impairment) has accuracy similar to that of the PACC in classifying cognitively normal (CN), MCI, and AD individuals. Studies evaluating the NIHTB-CB’s sensitivity to AD biomarkers are limited, and only a weak association was noted with tau pathology (and no association with Aβ) [26]. Similar to CogState’s CBB, the NIHTB-CB is valid and advantageous in some respects, with its brevity and its simple, standardized administration. However, psychometrically, the NIHTB-CB does not present significantly improved sensitivity or reduced practice effects compared with established paper-and-pencil tests, such as the PACC. Moreover, factor analyses indicate a fluid and a crystallized component, which do not necessarily map onto the cognitive domains known to be compromised in AD. Further studies are needed to clarify its association with AD biomarkers.

Single time-point assessments

Other novel tests have been primarily used as single time-point assessments. Mormino et al. [27] have focused on tests that specifically engage the hippocampus, an area of the brain affected early in AD [28,29]. The study used an associative memory task [30], in which participants were asked to pair concrete nouns (e.g., violin) with famous people or places (e.g., Ronald Reagan, Golden Gate Bridge), and assessed learning with cued recall. To our knowledge, no data on test-retest reliability, practice effects, or associations with other neuropsychological tests have been published. Mormino et al. also administered the widely used and previously validated Mnemonic Similarity Task (MST) [31] to test the precision of pattern separation learning. In this task, participants learned objects with forced encoding and were subsequently asked to categorize test items as new foils, similar lures, or old objects. The MST is thought to be particularly dependent on dentate function. This task has alternate forms and is robust to practice effects [32], making it also suitable for serial assessments. Worse performance on the associative memory task and MST correlated with higher levels of AD biomarkers, such as CSF Aβ42/Aβ40 and Aβ+ status, though associations were small [27]. Both tasks were more sensitive to Aβ than the Logical Memory test from the Wechsler Memory Scale. While more biomarker and validation studies are needed, especially for the associative memory task, these tasks demonstrate the value of targeting the hippocampus in preclinical AD testing.

Another promising novel measure, the Loewenstein-Acevedo Scale for Semantic Interference and Learning (LASSI-L), uses a challenging word learning task to detect subtle cognitive impairment. The LASSI-L employs a proactive semantic interference paradigm in which learning and recall of one list of words (List A) interferes with the ability to learn another set of words from the same semantic categories (List B). This task also assesses the degree of retroactive interference (List B’s interference with recall of List A). The performance score is based on the presence of both proactive and retroactive interference, as well as the ability to overcome proactive interference, a capacity unique to the LASSI-L. MCI individuals are more susceptible to both types of interference than cognitively normal individuals; indeed, the LASSI-L classified cognitively normal vs. MCI individuals with 90% accuracy [33,34]. Worse performance also correlated with higher amyloid burden and atrophy of the medial temporal lobe [34–36]. Test-retest reliability was high (r = 0.60 to r = 0.89) [35], and although no information is available regarding practice effects, creating alternate forms seems feasible. Overall, the LASSI-L is an improvement over established tests, with higher sensitivity and correlation with biomarkers. Its usefulness in clinical trials remains to be tested.

The Need to Assess the Novel Measures

Taken together, the recent development of novel cognitive measures may allow for improved diagnostic accuracy and an enhanced ability to detect subtle cognitive changes over time. Nonetheless, it is unclear whether novel measures exhibit significant advantages over established measures in terms of psychometric properties and associations with AD biomarkers. Further studies are needed to validate their utility in AD clinical trials and should include more direct comparisons with established measures using a parallel group design. Most validation studies have employed a design in which each participant receives both novel and established tests so that performance can be correlated, but such a design could lead to test interference, decreased power, and increased subject fatigue.

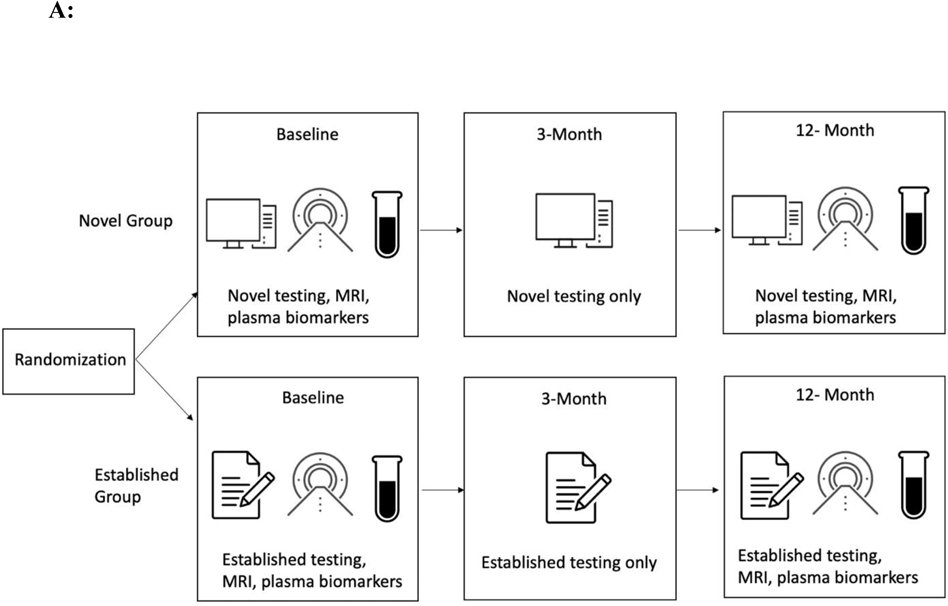

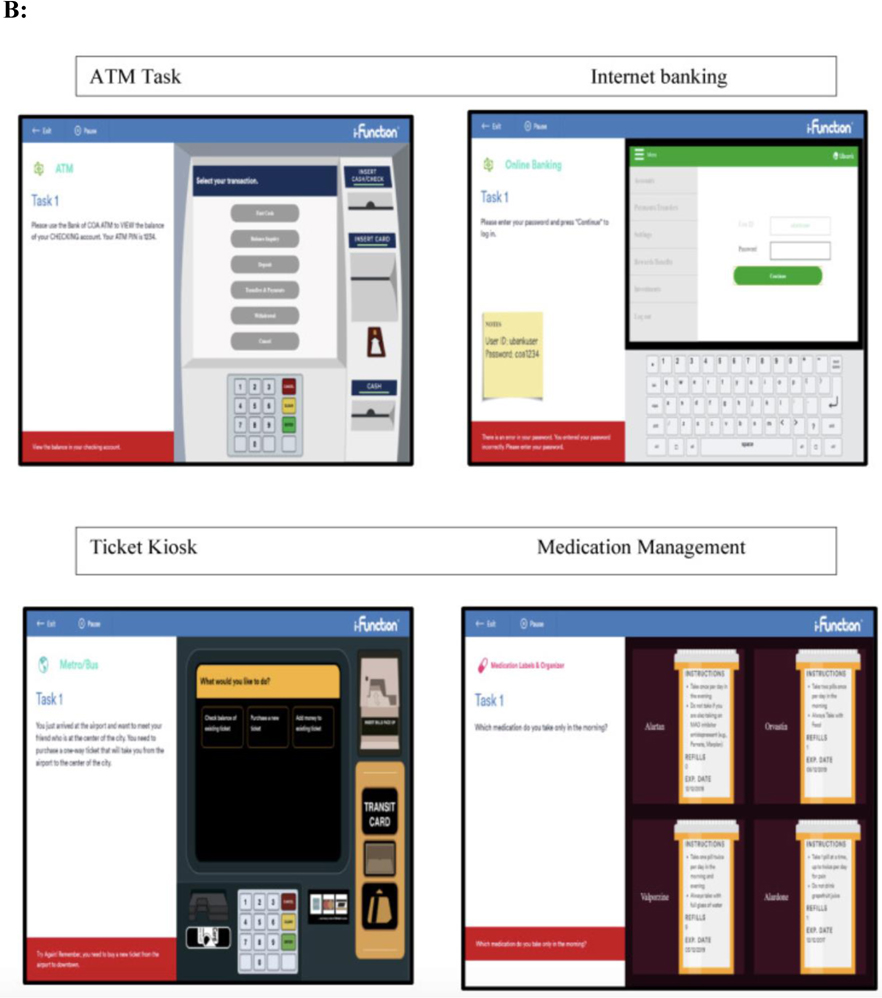

We aimed to address these limitations by conducting a randomized parallel group study that is consistent with the structure of a clinical trial (see Figure 1A). In an ongoing multisite study entitled Development of Novel Measures for Alzheimer’s Disease Prevention Trials (NoMAD), 320 participants will be randomized to two clinical trial armatures, one group receiving novel cognitive measures and the other receiving established measures [33]. The novel measures include the No Practice Effect (NPE) battery, which is specifically designed to reduce practice effects according to the cognitive science literature, using three equivalent alternate forms and components that attenuate learning of study protocols (e.g., forced encoding on recognition memory, interference trials on the N-Back) [37]. Executive function, processing speed, and episodic memory are assessed (see Table 1). The Miami Computerized Functional Assessment Scale (CFAS) is another novel measure that is designed to measure functions related to instrumental activities of daily living (IADLs). The CFAS uses computerized simulations of IADLs, such as using an ATM or a ticket kiosk (see Figure 1B). Accuracy and speed in completing the tasks are used to determine functional abilities. This method measures functioning based on participants’ behaviors, and therefore could eliminate subjectivity and bias that could arise from informant measures. The well-established battery consists of the PACC, ADAS-Cog, and FAQ. NoMAD is currently ongoing, and we aim to test whether the novel measures exhibit a significant advantage over established measures in terms of psychometric properties, practice effects, and association with AD biomarkers (plasma phosphorylated tau and neurofilament light) and genetic markers (APOE status). Findings from this study will inform the utility of the novel NPE and CFAS measures as reliable measures of cognitive changes in AD clinical trials.
Additionally, findings from NoMAD will indicate whether this validation strategy is viable and whether the tests themselves show promise in terms of validation parameters. If so, this methodology should be implemented in future studies of this type.
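As a rough illustration of what allocation to two parallel groups involves, the Python sketch below shuffles participant identifiers and alternates assignment between two arms. It is a generic example that assumes simple equal allocation; the function name, arm labels, and seed are hypothetical, and the actual NoMAD randomization procedure is not described here.

```python
import random


def randomize_parallel_groups(participant_ids, arms=("novel", "established"), seed=0):
    """Shuffle participants, then alternate arms so group sizes stay balanced."""
    rng = random.Random(seed)  # fixed seed only so the example is reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: arms[i % len(arms)] for i, pid in enumerate(ids)}


# Hypothetical participant IDs 1..320, matching the planned sample size.
assignment = randomize_parallel_groups(range(1, 321))
novel_n = sum(1 for arm in assignment.values() if arm == "novel")
print(novel_n, len(assignment) - novel_n)
```

Because each participant completes only one battery, between-group comparisons of practice effects and biomarker associations are not affected by interference between the novel and established tests.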

Fig 1A.

Study Design. Participants are randomized into the novel or established group. The novel group completes the NPE battery and the CFAS at baseline, 3 months, and 12 months. The established group completes the PACC, ADAS-Cog, and FAQ at baseline, 3 months, and 12 months. Both groups undergo MRI and have plasma biomarkers drawn at baseline and 12 months.

Table 1:

No Practice Effect (NPE) measures in NoMAD study

| Domain Assessed | Assessment |
|---|---|
| Executive function/working memory | N-Back; Letter-Number Span; Brown-Peterson Paradigm |
| Processing speed | Digit Symbol |
| Verbal fluency | Letter Fluency; Semantic Fluency |
| Episodic memory | Word Recognition Memory Immediate Recall; Word Recognition Memory Delayed Recall |

Fig. 1B.

Miami Computerized Functional Assessment Scale (CFAS). Participants complete computerized simulations of four everyday activities: using an ATM machine, using an online banking system, operating a ticket kiosk, and managing medications.

REMOTE TESTING

Remote testing is an innovative approach that may improve diagnostic accuracy and the tracking of cognitive changes over time. Smartphones and tablets have become ubiquitous, which has led to the advancement of smartphone- and tablet-based remote testing for preclinical AD. Remote testing has multiple advantages. It is highly scalable and cost-effective, allowing access to much larger samples than in-clinic testing can provide. It also lends itself to more frequent testing, leading potentially to more reliable data (as it might reduce the influence of “bad days” or skewed item pools). Further, the fact that participants may complete tests in an environment familiar to them, as opposed to a memory clinic, may increase ecological validity and reduce anxiety associated with the “white-coat effect”. Remote testing is not without its challenges, however. Its feasibility must be demonstrated, as compliance with remote testing protocols can be difficult to monitor and attrition can be high [38]. A lack of researcher control over the testing environment, which may include distractions, could also lead to poor reliability. Finally, most published smartphone measures still lack rigorous validation. Nonetheless, the development and validation of various remote measures offer opportunities for more effective and accurate data collection. Here we discuss some noteworthy trends and studies in the field of remote testing.

Remote adaptation of in-person tests

Telehealth versions of many well-established tests have been validated and include the Telephone MMSE, the Telephone Montreal Cognitive Assessment, and the video-conferenced Rowland Universal Dementia Assessment Scale [39–42]. In general, these tests correspond closely to in-person tests and demonstrate good feasibility. Some of the aforementioned novel digital tests have also been adapted for telehealth administration. The NIH Toolbox has been administered using videoconferencing and showed no significant difference from in-person testing [43]. Cogstate has not published any data on remote protocols, but a study of its digital battery (in-clinic but unsupervised) demonstrated no significant differences between unsupervised and supervised scores [44]. Some measures from the previously described No Practice Effect (NPE) battery were also adapted for smartphone use to allow for remote administration. NoMAD’s parallel group design allows for direct comparison of the smartphone measures with both established and novel in-clinic measures.

Burst Testing

A second category of remote testing uses a method called “burst” testing, wherein participants complete multiple brief assessments daily for a given span of time. These scores are then averaged to give a more representative measure of cognition. This design comes as a response to the inherent variability of humans’ everyday cognitive function [45], which single time-point tests fail to capture. In a study supporting this design’s validity, Sliwinski et al. [46] used smartphones with participants aged 25–65 to deliver five daily tests of working memory and perceptual speed for a period of 2 weeks. The study found excellent reliability and feasibility. In Hassenstab et al.’s burst testing study [47], participants downloaded an app onto their own smartphones and completed four brief batteries a day for 7 days. The batteries included a Grids Test (spatial memory), a Symbols Test (processing speed), and a Prices Test (associative memory). Preliminary results suggest excellent reliability (r = .90 to .98) and a wide range of correlations with well-established in-person measures (r = .24 to .74) [47]. Associations with biomarkers remain to be established.
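The logic of averaging across a burst can be sketched in a few lines of Python. The example below averages one participant’s session scores and uses the Spearman-Brown prophecy formula to project how reliability grows with the number of sessions. The Spearman-Brown step is our illustrative assumption (it treats sessions as parallel measurements) and is not a method attributed to the cited studies; the single-session reliability of 0.50 and the scores are hypothetical.

```python
from statistics import mean


def burst_score(session_scores):
    """Average one participant's scores across all sessions in a burst."""
    return mean(session_scores)


def spearman_brown(single_session_reliability, n_sessions):
    """Projected reliability of the mean of n parallel sessions."""
    r = single_session_reliability
    return n_sessions * r / (1 + (n_sessions - 1) * r)


# Hypothetical scores from one day of four brief sessions.
print(burst_score([41, 37, 44, 38]))

# Four sessions a day for 7 days gives 28 sessions; r = 0.50 is assumed.
print(f"{spearman_brown(0.50, 28):.3f}")
```

Even a modestly reliable single session can, under these assumptions, yield a highly reliable aggregate, which is consistent in spirit with the high reliabilities reported for burst designs.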

Short-Term Serial Testing

Other researchers have used remote administration to effectively evaluate learning ability, leveraging high-volume testing to measure improvement over time. Decrements in learning ability have been linked to preclinical AD, with some arguing that preclinical AD is primarily characterized by learning deficits. One study of 94 individuals used a Face-Name Associative Memory Exam (FNAME) to measure learning ability over the course of one year [48]. Tasks were completed every month using a mobile tablet. Aβ+ individuals performed significantly worse than Aβ- individuals after the 4th testing session. In contrast, no difference was observed in well-established measures (administered at baseline and again at 1 year). Although the task suffered from ceiling effects, this method shows promise in identifying learning differences at a much shorter timescale than in-person tests, which is important for early and accurate detection of cognitive change. Another, more rigorous, learning paradigm called the Online Repeated Cognitive Assessment (ORCA) was developed by Lim et al. [49]. Participants were asked to match 50 Chinese characters to their meaning for 25 minutes a day for 6 days. This task, previously validated in a pilot study [50], was highly difficult and was free from ceiling effects. Aβ+ individuals scored significantly worse than Aβ- individuals as soon as the second day of testing, with the gap continuing to widen over the course of 6 days. Alternate forms using different stimuli are in development, though currently unavailable. How this test would be used in a clinical trial remains to be seen, not least because of the rigor of its testing regimen. Nevertheless, this study speaks to the value of high-volume, high-difficulty testing to reveal subtle cognitive deficits. It is also possible that another rigorous verbal learning test administered for the same number of hours might yield similar results and have similar drawbacks.

Digital Phenotyping

The last trend in novel testing discussed here is digital phenotyping. Digital phenotyping uses data from devices to capture subtle behaviors that may be indicative of different cognitive phenotypes. The data can be collected actively, while participants engage in cognitive activities (e.g., assessments), or passively, by monitoring phone use or other device data in everyday life. The Digital Neuro Signature (DNS), developed by Altoida, is an augmented reality task in which participants hide objects and then are asked to retrieve them in specific orders. Using machine learning, the DNS assesses global cognitive function by measuring the speed and accuracy of performance, as well as over 100 subtle behaviors, such as grip strength, purposeless screen touch, and gait. A large cohort study (n = 548) showed the DNS to have high prognostic accuracy (0.94), sensitivity (0.91), and specificity (0.82) [51]. The DCTClock, a digital adaptation of the common paper-and-pencil clock drawing test, also uses an active data collection approach. In the original clock drawing test, participants are asked to draw the face of a clock showing a specific time, and clinicians evaluate the end result. The DCTClock uses a digital pen to track behaviors throughout the test, such as pauses and unnecessary pen strokes, in addition to the final product. Scores have been shown to correlate well with well-established measures, such as the PACC, and with AD biomarkers, such as Aβ and tau imaging [52]. Apple, Evidation, and Eli Lilly, in contrast, have collaborated to passively track elements of phone use in healthy, MCI, and AD individuals [53]. Phone usage patterns differed between cognitively healthy and cognitively impaired people, with impaired individuals showing slower typing, greater reliance on helper apps, and fewer total messages, to list a few metrics. Other passive phenotyping studies examine movement and activity patterns to identify cognitive decline.
One study installed a GPS data logger into each participant’s vehicle, and captured driving performance (using speed, acceleration, and other measures) and the spatiotemporal characteristics of car trips over the course of a year [54]. Driving behavior on its own was highly accurate (F1 = .82) in identifying preclinical AD, as defined by the presence of CSF biomarkers. When age and APOE status were added to the model, the F1 score rose to .96. Another study used a wearable ankle device to measure gait and errors in motor planning, and found striking differences across cognitive groups with large effect sizes (Cohen’s d = 1.17–4.56) [55]. Still other studies use Smart Home technologies and other wearable devices to monitor cognitive status [56]. These studies show promise for passive digital phenotyping and signal a possible avenue for remote testing to pursue.
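The accuracy metrics quoted in this section (sensitivity, specificity, and the F1 score) all derive from the same four counts in a classification table. The Python sketch below shows the standard definitions; the counts are hypothetical and do not reproduce any of the cited studies.

```python
def classification_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, precision, and F1 from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of true cases that were detected
    specificity = tn / (tn + fp)  # share of non-cases correctly ruled out
    precision = tp / (tp + fp)    # share of positive calls that were correct
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical counts: 50 biomarker-positive and 50 biomarker-negative people.
metrics = classification_metrics(tp=40, fp=10, tn=40, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Because F1 ignores true negatives, it is best read alongside specificity when the groups being classified are unbalanced.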

CONCLUSION

In preclinical AD, common paper-and-pencil tests are limited by practice effects and ceiling effects, in addition to barriers associated with in-person testing. Many new cognitive measures, in the form of novel in-person testing, remote testing, and digital phenotyping, aim to address these weaknesses. Most of these new measures are digitally administered, signaling a new era in neuropsychological measurement. Overall, we believe that these novel tests and deployment methods appear promising. However, whether they are in fact “better” (i.e., more sensitive to biomarkers and to subtle cognitive decline or improvement) than established tests remains unproven.

BULLET POINTS.

Well-established cognitive tests in preclinical AD are limited by ceiling effects, practice effects, and low associations with biomarkers.

Novel in-person tests used for both one-time and serial assessment have shown some promising results, but further validation is needed due to inconsistent findings and a lack of direct comparison with established measures.

The NoMAD study uses an innovative clinical trial armature to validate its new cognitive and functional measures, which are designed to reduce practice effects.

Novel remote measures, which use different paradigms such as adaptation of in-person tests, burst testing, or high-volume learning, have shown early-stage success.

Digital phenotyping, both active (monitoring behavior during an assessment) and passive (monitoring behavior in everyday life), uses machine learning and a variety of devices and paradigms to detect cognitive phenotypes.

Financial support and sponsorship:

This work is supported by the National Institute on Aging grant award R01AG051346 to TG.

Conflicts of interest:

HK reports support from NIH 5T32MH020004. DPD reports scientific advisory to Acadia, Genentech, and Sunovion. DPD has served on the DSMB for Green Valley. TG receives royalties from VeraSci for use of the BACS in clinical trials. SC has nothing to report.

REFERENCES:

- 1. 2020 Alzheimer’s disease facts and figures. Alzheimers Dement 2020.

- 2. Jack CR Jr., Holtzman DM: Biomarker modeling of Alzheimer’s disease. Neuron 2013, 80:1347–1358.

- 3. Goldberg TE, Goldman RS, Burdick KE, Malhotra AK, Lencz T, Patel RC, Woerner MG, Schooler NR, Kane JM, Robinson DG: Cognitive improvement after treatment with second-generation antipsychotic medications in first-episode schizophrenia: is it a practice effect? Arch Gen Psychiatry 2007, 64:1115–1122.

- 4. Goldberg TE, Harvey PD, Wesnes KA, Snyder PJ, Schneider LS: Practice effects due to serial cognitive assessment: Implications for preclinical Alzheimer’s disease randomized controlled trials. Alzheimers Dement (Amst) 2015, 1:103–111.

- 5. Calamia M, Markon K, Tranel D: Scoring higher the second time around: meta-analyses of practice effects in neuropsychological assessment. Clin Neuropsychol 2012, 26:543–570.

- 6. Machulda MM, Hagen CE, Wiste HJ, Mielke MM, Knopman DS, Roberts RO, Vemuri P, Lowe VJ, Jack CR Jr., Petersen RC: Practice effects and longitudinal cognitive change in clinically normal older adults differ by Alzheimer imaging biomarker status. Clin Neuropsychol 2017, 31:99–117.

- 7. Schneider LS, Goldberg TE: Composite cognitive and functional measures for early stage Alzheimer’s disease trials. Alzheimers Dement (Amst) 2020, 12:e12017. * This article describes some of the limitations of composite tests, a common trend in preclinical AD assessment. This article supports the need for improved preclinical AD testing.

- 8.Marshall GA, Rentz DM, Frey MT, Locascio JJ, Johnson KA, Sperling RA, Alzheimer’s Disease Neuroimaging I: Executive function and instrumental activities of daily living in mild cognitive impairment and Alzheimer’s disease. Alzheimers Dement 2011, 7:300–308. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Aizenstein HJ, Nebes RD, Saxton JA, Price JC, Mathis CA, Tsopelas ND, Ziolko SK, James JA, Snitz BE, Houck PR, et al. : Frequent amyloid deposition without significant cognitive impairment among the elderly. Arch Neurol 2008, 65:1509–1517. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Song Z, Insel PS, Buckley S, Yohannes S, Mezher A, Simonson A, Wilkins S, Tosun D, Mueller S, Kramer JH, et al.: Brain amyloid-beta burden is associated with disruption of intrinsic functional connectivity within the medial temporal lobe in cognitively normal elderly. J Neurosci 2015, 35:3240–3247.

- 11. Duke Han S, Nguyen CP, Stricker NH, Nation DA: Detectable Neuropsychological Differences in Early Preclinical Alzheimer’s Disease: A Meta-Analysis. Neuropsychol Rev 2017, 27:305–325.

- 12. Mormino EC, Papp KV, Rentz DM, Donohue MC, Amariglio R, Quiroz YT, Chhatwal J, Marshall GA, Donovan N, Jackson J, et al.: Early and late change on the preclinical Alzheimer’s cognitive composite in clinically normal older individuals with elevated amyloid beta. Alzheimers Dement 2017, 13:1004–1012.

- 13. Bejanin A, Schonhaut DR, La Joie R, Kramer JH, Baker SL, Sosa N, Ayakta N, Cantwell A, Janabi M, Lauriola M, et al.: Tau pathology and neurodegeneration contribute to cognitive impairment in Alzheimer’s disease. Brain 2017, 140:3286–3300.

- 14. Nelson PT, Alafuzoff I, Bigio EH, Bouras C, Braak H, Cairns NJ, Castellani RJ, Crain BJ, Davies P, Del Tredici K, et al.: Correlation of Alzheimer disease neuropathologic changes with cognitive status: a review of the literature. J Neuropathol Exp Neurol 2012, 71:362–381.

- 15. Hanseeuw BJ, Betensky RA, Jacobs HIL, Schultz AP, Sepulcre J, Becker JA, Cosio DMO, Farrell M, Quiroz YT, Mormino EC, et al.: Association of Amyloid and Tau With Cognition in Preclinical Alzheimer Disease: A Longitudinal Study. JAMA Neurol 2019, 76:915–924.

- 16. Leuzy A, Smith R, Cullen NC, Strandberg O, Vogel JW, Binette AP, Borroni E, Janelidze S, Ohlsson T, Jogi J, et al.: Biomarker-Based Prediction of Longitudinal Tau Positron Emission Tomography in Alzheimer Disease. JAMA Neurol 2021.

- 17. Calamia M, Weitzner DS, De Vito AN, Bernstein JPK, Allen R, Keller JN: Feasibility and validation of a web-based platform for the self-administered patient collection of demographics, health status, anxiety, depression, and cognition in community dwelling elderly. PLoS One 2021, 16:e0244962. * This feasibility and validation study for a digital version of the PACC had mixed results. This is an example of a novel test with both strengths and psychometric weaknesses.

- 18. Maruff P, Thomas E, Cysique L, Brew B, Collie A, Snyder P, Pietrzak RH: Validity of the CogState brief battery: relationship to standardized tests and sensitivity to cognitive impairment in mild traumatic brain injury, schizophrenia, and AIDS dementia complex. Arch Clin Neuropsychol 2009, 24:165–178.

- 19. Papp KV, Rentz DM, Maruff P, Sun CK, Raman R, Donohue MC, Schembri A, Stark C, Yassa MA, Wessels AM, et al.: The Computerized Cognitive Composite (C3) in an Alzheimer’s Disease Secondary Prevention Trial. J Prev Alzheimers Dis 2021, 8:59–67. * This trial validates the Computerized Cognitive Composite (C3), a brief, digital cognitive battery. The C3 shows promise as a neuropsychological testing tool.

- 20. Kirwan CB, Stark CE: Overcoming interference: an fMRI investigation of pattern separation in the medial temporal lobe. Learn Mem 2007, 14:625–633.

- 21. Vannini P, Hedden T, Becker JA, Sullivan C, Putcha D, Rentz D, Johnson KA, Sperling RA: Age and amyloid-related alterations in default network habituation to stimulus repetition. Neurobiol Aging 2012, 33:1237–1252.

- 22. Papp KV, Rentz DM, Maruff P, Sun C, Raman R, Donohue M, Schembri A, Stark C, Yassa M, Wessels A, Yaari R, Holdridge K, Aisen P, Sperling RA: Computerized Cognitive Composite (C3) Performance Differences between Aβ+ and Aβ- normal older adults screened for the A4 (Anti-Amyloid in Asymptomatic AD) Study. In Alzheimer’s Association International Conference: 2018.

- 23. Buckley RF, Sparks KP, Papp KV, Dekhtyar M, Martin C, Burnham S, Sperling RA, Rentz DM: Computerized Cognitive Testing for Use in Clinical Trials: A Comparison of the NIH Toolbox and Cogstate C3 Batteries. J Prev Alzheimers Dis 2017, 4:3–11.

- 24. Scott EP, Sorrell A, Benitez A: Psychometric Properties of the NIH Toolbox Cognition Battery in Healthy Older Adults: Reliability, Validity, and Agreement with Standard Neuropsychological Tests. J Int Neuropsychol Soc 2019, 25:857–867.

- 25. Weintraub S, Dikmen SS, Heaton RK, Tulsky DS, Zelazo PD, Slotkin J, Carlozzi NE, Bauer PJ, Wallner-Allen K, Fox N, et al.: The cognition battery of the NIH toolbox for assessment of neurological and behavioral function: validation in an adult sample. J Int Neuropsychol Soc 2014, 20:567–578.

- 26. Snitz BE, Tudorascu DL, Yu Z, Campbell E, Lopresti BJ, Laymon CM, Minhas DS, Nadkarni NK, Aizenstein HJ, Klunk WE, et al.: Associations between NIH Toolbox Cognition Battery and in vivo brain amyloid and tau pathology in non-demented older adults. Alzheimers Dement (Amst) 2020, 12:e12018. * This study describes the weak associations between AD biomarkers and the NIH Toolbox Cognition Battery. This assessment is an example of a novel test that may be useful but is still psychometrically flawed.

- 27. Trelle AN, Carr VA, Wilson EN, Swarovski MS, Hunt MP, Toueg TN, Tran TT, Channappa D, Corso NK, Thieu MK, et al.: Association of CSF Biomarkers With Hippocampal-Dependent Memory in Preclinical Alzheimer Disease. Neurology 2021, 96:e1470–e1481. * This article used tasks that tax the hippocampus to measure preclinical AD. It demonstrates the value of targeting specific brain regions in neuropsychological testing.

- 28. Braak H, Braak E: Neuropathological stageing of Alzheimer-related changes. Acta Neuropathol 1991, 82:239–259.

- 29. Braak H, Braak E: Staging of Alzheimer’s disease-related neurofibrillary changes. Neurobiol Aging 1995, 16:271–278; discussion 278–284.

- 30. Trelle AN, Carr VA, Guerin SA, Thieu MK, Jayakumar M, Guo W, Nadiadwala A, Corso NK, Hunt MP, Litovsky CP, et al.: Hippocampal and cortical mechanisms at retrieval explain variability in episodic remembering in older adults. Elife 2020, 9.

- 31. Stark SM, Yassa MA, Lacy JW, Stark CE: A task to assess behavioral pattern separation (BPS) in humans: Data from healthy aging and mild cognitive impairment. Neuropsychologia 2013, 51:2442–2449.

- 32. Stark SM, Kirwan CB, Stark CEL: Mnemonic Similarity Task: A Tool for Assessing Hippocampal Integrity. Trends Cogn Sci 2019, 23:938–951.

- 33. Crocco E, Curiel-Cid RE, Kitaigorodsky M, Gonzalez-Jimenez CJ, Zheng D, Duara R, Loewenstein DA: A Brief Version of the LASSI-L Detects Prodromal Alzheimer’s Disease States. J Alzheimers Dis 2020, 78:789–799.

- 34. Loewenstein DA, Curiel RE, Greig MT, Bauer RM, Rosado M, Bowers D, Wicklund M, Crocco E, Pontecorvo M, Joshi AD, et al.: A Novel Cognitive Stress Test for the Detection of Preclinical Alzheimer Disease: Discriminative Properties and Relation to Amyloid Load. Am J Geriatr Psychiatry 2016, 24:804–813.

- 35. Curiel Cid RE, Loewenstein DA, Rosselli M, Matias-Guiu JA, Pina D, Adjouadi M, Cabrerizo M, Bauer RM, Chan A, DeKosky ST, et al.: A cognitive stress test for prodromal Alzheimer’s disease: Multiethnic generalizability. Alzheimers Dement (Amst) 2019, 11:550–559.

- 36. Loewenstein DA, Curiel RE, Wright C, Sun X, Alperin N, Crocco E, Czaja SJ, Raffo A, Penate A, Melo J, et al.: Recovery from Proactive Semantic Interference in Mild Cognitive Impairment and Normal Aging: Relationship to Atrophy in Brain Regions Vulnerable to Alzheimer’s Disease. J Alzheimers Dis 2017, 56:1119–1126.

- 37. Bell SA, Cohen HR, Lee S, Kim H, Ciarleglio A, Andrews H, Rivera AM, Igwe K, Brickman AM, Devanand DP, et al.: Development of novel measures for Alzheimer’s disease prevention trials (NoMAD). Contemp Clin Trials 2021, 106:106425. ** This article describes novel measures designed to reduce practice effects, as well as the innovative structure of its validation study that uses the armature of a clinical trial. It addresses limitations of established tests and of common validation study designs.

- 38. Pratap A, Neto EC, Snyder P, Stepnowsky C, Elhadad N, Grant D, Mohebbi MH, Mooney S, Suver C, Wilbanks J, et al.: Indicators of retention in remote digital health studies: a cross-study evaluation of 100,000 participants. NPJ Digit Med 2020, 3:21.

- 39. Geddes MR, O’Connell ME, Fisk JD, Gauthier S, Camicioli R, Ismail Z, Alzheimer Society of Canada Task Force on Dementia Care Best Practices for C: Remote cognitive and behavioral assessment: Report of the Alzheimer Society of Canada Task Force on dementia care best practices for COVID-19. Alzheimers Dement (Amst) 2020, 12:e12111. * This review outlines the validation of many telehealth versions of accepted neuropsychological measures. In general, remote administration of many tests is valid and feasible.

- 40. Wong L, Martin-Khan M, Rowland J, Varghese P, Gray LC: The Rowland Universal Dementia Assessment Scale (RUDAS) as a reliable screening tool for dementia when administered via videoconferencing in elderly post-acute hospital patients. J Telemed Telecare 2012, 18:176–179.

- 41. Newkirk LA, Kim JM, Thompson JM, Tinklenberg JR, Yesavage JA, Taylor JL: Validation of a 26-point telephone version of the Mini-Mental State Examination. J Geriatr Psychiatry Neurol 2004, 17:81–87.

- 42. Pendlebury ST, Welch SJ, Cuthbertson FC, Mariz J, Mehta Z, Rothwell PM: Telephone assessment of cognition after transient ischemic attack and stroke: modified telephone interview of cognitive status and telephone Montreal Cognitive Assessment versus face-to-face Montreal Cognitive Assessment and neuropsychological battery. Stroke 2013, 44:227–229.

- 43. Rebchuk AD, Deptuck HM, O’Neill ZR, Fawcett DS, Silverberg ND, Field TS: Validation of a Novel Telehealth Administration Protocol for the NIH Toolbox-Cognition Battery. Telemed J E Health 2019, 25:237–242.

- 44. Perin S, Buckley RF, Pase MP, Yassi N, Lavale A, Wilson PH, Schembri A, Maruff P, Lim YY: Unsupervised assessment of cognition in the Healthy Brain Project: Implications for web-based registries of individuals at risk for Alzheimer’s disease. Alzheimers Dement (N Y) 2020, 6:e12043. * This article describes a large validation and feasibility study using the Cogstate Brief Battery. Findings were positive and support the usability of Cogstate’s Brief Battery.

- 45. Sliwinski MJ: Measurement-burst designs for social health research. Social and Personality Psychology Compass 2008, 2:245–261.

- 46. Sliwinski MJ, Mogle JA, Hyun J, Munoz E, Smyth JM, Lipton RB: Reliability and Validity of Ambulatory Cognitive Assessments. Assessment 2018, 25:14–30.

- 47. Hassenstab J: Presentation: Remote Assessment Approaches in the Dominantly Inherited Alzheimer Network (DIAN). In Alzheimer’s Association International Conference: 2020. * This abstract outlines the preliminary results from an ambulatory burst-testing paradigm. This is a key example of a new trend in preclinical AD testing.

- 48. Samaroo A, Amariglio RE, Burnham S, Sparks P, Properzi M, Schultz AP, Buckley R, Johnson KA, Sperling RA, Rentz DM, et al.: Diminished Learning Over Repeated Exposures (LORE) in preclinical Alzheimer’s disease. Alzheimers Dement (Amst) 2020, 12:e12132. * This article outlines a learning task and its association with AD biomarkers. This article supports the value of using short-term serial testing to test for learning differences.

- 49. Duff K, Hammers DB, Dalley BCA, Suhrie KR, Atkinson TJ, Rasmussen KM, Horn KP, Beardmore BE, Burrell LD, Foster NL, et al.: Short-Term Practice Effects and Amyloid Deposition: Providing Information Above and Beyond Baseline Cognition. J Prev Alzheimers Dis 2017, 4:87–92. ** In this article, remote testing and highly demanding tasks revealed learning differences in amyloid-positive and -negative groups at a very short time scale. This article shows the utility of assessing learning deficits as a sign of preclinical AD.

- 50. Baker JE, Bruns L Jr., Hassenstab J, Masters CL, Maruff P, Lim YY: Use of an experimental language acquisition paradigm for standardized neuropsychological assessment of learning: A pilot study in young and older adults. J Clin Exp Neuropsychol 2020, 42:55–65.

- 51. Buegler M, Harms R, Balasa M, Meier IB, Exarchos T, Rai L, Boyle R, Tort A, Kozori M, Lazarou E, et al.: Digital biomarker-based individualized prognosis for people at risk of dementia. Alzheimers Dement (Amst) 2020, 12:e12073. ** This remotely administered digital phenotyping task measures over 100 subtle behaviors and uses machine learning to assess global cognition and AD risk. This highlights the promise of digital phenotyping and remote testing.

- 52. Rentz DM, Papp KV, Mayblyum DV, Sanchez JS, Klein H, Souillard-Mandar W, Sperling RA, Johnson KA: Association of Digital Clock Drawing With PET Amyloid and Tau Pathology in Normal Older Adults. Neurology 2021, 96:e1844–e1854. * This article outlines the DCTClock, a digital version of a traditional clock drawing test that assesses subtle behaviors to identify digital phenotypes. This highlights a key development in the trend of digital phenotyping.

- 53. Chen R, Jankovic F, Marsinek N: Developing Measures of Cognitive Impairment in the Real World from Consumer-Grade Multimodal Sensor Streams. In 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. Anchorage AK; 2019.

- 54. Bayat S, Babulal GM, Schindler SE, Fagan AM, Morris JC, Mihailidis A, Roe CM: GPS driving: a digital biomarker for preclinical Alzheimer disease. Alzheimers Res Ther 2021, 13:115. * This article uses GPS driving data to identify preclinical AD with a high degree of success. This shows the promise of passive digital phenotyping to capture movement patterns.

- 55. Zhou H, Lee H, Lee J, Schwenk M, Najafi B: Motor Planning Error: Toward Measuring Cognitive Frailty in Older Adults Using Wearables. Sensors (Basel) 2018, 18.

- 56. Husebo BS, Heintz HL, Berge LI, Owoyemi P, Rahman AT, Vahia IV: Sensing Technology to Monitor Behavioral and Psychological Symptoms and to Assess Treatment Response in People With Dementia. A Systematic Review. Front Pharmacol 2019, 10:1699.