Abstract

We consider the problem of modeling conditional independence structures in heterogeneous data in the presence of additional subject-level covariates – termed Graphical Regression. We propose a novel specification of a conditional (in)dependence function of covariates – which allows the structure of a directed graph to vary flexibly with the covariates; imposes sparsity in both edge and covariate selection; produces both subject-specific and predictive graphs; and is computationally tractable. We provide theoretical justifications of our modeling endeavor, in terms of graphical model selection consistency. We demonstrate the performance of our method through rigorous simulation studies. We illustrate our approach in a cancer genomics-based precision medicine paradigm, where-in we explore gene regulatory networks in multiple myeloma taking prognostic clinical factors into account to obtain both population-level and subject-level gene regulatory networks.

Keywords: Directed acyclic graph, Non-local prior, Predictive network, Subject-specific graph, Varying graph structure

1. Introduction

Basic inferential problem.

Graphical models have been widely applied to investigate conditional (in)dependence structures in multivariate data in different domains and contexts. Specifically, for a p-dimensional random vector X = (X1, …, Xp), a graphical model for X is defined by a tuple: , consisting of a graph G and an associated probability distribution (e.g., Gaussian distribution for continuous variables), respecting the conditional independence encoded by G. In many scientific contexts, it is possible that the graph structure G of the primary variables of interest (X) can change as extraneous factors change (e.g., experimental conditions). In this paper, we propose methods for modeling the conditional independence structure of X given another m-dimensional random vector U = (U1,…, Um), termed external covariates. The model thus defined as is treated as a regression function of the external covariates U (or simply covariates hereafter); hence, we have graphical regression such that the conditional independence structure is allowed to vary arbitrarily with different realizations of U. Concisely stated, the main inferential goals are (i) evaluate how graph topology changes over different realizations of U; (ii) select covariate (sub-)sets in U that have major impact on the graph topology; and (iii) allow for prediction, at both the node and graph structure levels, in the support of U. The conceptual frameworks introduced in this paper are generally applicable to any class of graphical models (undirected or directed) – the methodology we develop particularly targets directed graphical models.

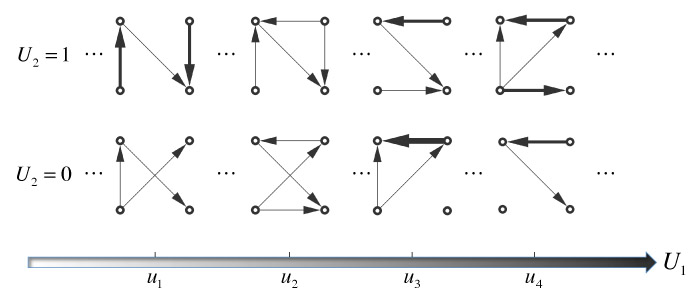

The concept is illustrated through a toy example in Figure 1, where the directed graphs have p = 4 nodes and m = 2 covariates of which U1 is continuous (horizontal axis) and U2 is binary (vertical axis). For each column, the two graphs have both common and differential edges that correspond to the binary variable. For each row, the graphs are “evolving” with respect to the values of U1. Figure 1 shows a snapshot of the “evolution” in the continuous space of U1 at four arbitrary values. The thickness of the edge is proportional to its strength (e.g., posterior probability/partial correlation).

Figure 1:

Toy example of directed graphs with four nodes and two external covariates. U1 is continuous and U2 is binary. The edge thickness is proportional to its strength of association.

Scientific motivation.

The scientific motivation of this work stems from the fact that cancer is a heterogeneous disease at different molecular, genomic and clinical levels (Heppner and Miller, 1983). Thus, the paradigm in cancer treatment has shifted from the traditional “one-size-fits-all” approach towards developing personalized treatments that account for individual variability in genes and in clinical and environmental characteristics for each person – termed precision medicine (De Bono and Ashworth, 2010). In the context of clinical genomics, the development and progression of cancer is driven by sub-networks within the functional regulatory networks of genes and their products, which undergo changes in response to different patient-specific characteristics such as clinical prognostic factors and/or environmental stress conditions (Luscombe et al., 2004; Bandyopadhyay et al., 2010). To this end, recovering both population-level and subject-level gene regulatory networks and monitoring the changes in gene regulatory relationships as a function of a given patient’s clinical characteristics may assist in developing personalized treatment regimes that target specific pathway disruptions – and is, perhaps one of the most pertinent and pressing quantitative problems in molecular biology. Statistically, the challenge is to integrate the additional patient-level information (e.g., prognostic factors) for assessing the conditional independence structures in multivariate genomic data (e.g., gene expression data), as alluded to in the previous paragraph (Section 5 provides a more detailed exposition).

Existing approaches and limitations.

Traditional graphical models (Lauritzen, 1996) typically assume i.i.d. sampling and thus do not account for subject-specific heterogeneity. However, several attempts have been made to model the conditional independence structure in this challenging setting. For external covariates with discrete support, Oates et al. (2014) and Yajima et al. (2015) developed group-specific directed acyclic graphs (DAGs) to estimate differential networks for several related groups. Similarly, Guo et al. (2011), Danaher et al. (2014) and Peterson et al. (2015) studied group-specific graphical models for the undirected case. Geiger and Heckerman (1996) studied a context-specific DAG model in which the space of one node is partitioned, and each subspace defines a different DAG structure. In a similar spirit, Nyman et al. (2014) developed a context-specific undirected graphical model in which the structure changes with discrete nodes. Liu et al. (2010) proposed a graph-valued regression that discretizes the continuous covariate space into a finite number of subspaces by classification and regression trees and fits a separate undirected graph for each subspace. In essence, the infinite dimensional problem is reduced to a finite dimensional one. However, the estimated graphs may be unstable and lack similarity for close covariates due to the separate graph estimation, as reported by Cheng et al. (2014). In summary, these approaches allow graph structure to vary with an external covariate/node which must be discrete. Kolar et al. (2010a) proposed a penalized kernel smoothing approach for conditional Gaussian graphical models in which the precision matrix varies with the continuous covariates. Cheng et al. (2014) developed a conditional Ising model for binary data where the dependencies are linear functions of additional covariates. Although the methods of Kolar et al. (2010a) and Cheng et al. (2014) allow the edge strength to vary with the covariates, neither approach deals with a graph structure that changes with the covariates. From a machine learning perspective, Zhou et al. (2010) and Kolar et al. (2010b) proposed interesting time-varying undirected graph algorithms for time series data. The graph structure is allowed to change over time by borrowing strength from “neighboring” time points via kernel smoothing. The graph estimation problem is essentially broken down to separate estimation for each time point, for which the joint distribution is not well-defined. It is not straightforward to extend their methods beyond univariate case due to the curse of dimensionality of kernel methods (Scott, 2008), that is, “neighboring” points quickly become “distant” as dimensionality increases. In addition, extending to discrete covariates is also unclear with kernel-based methods.

Brief outline of our method.

In this paper, we overcome the challenges described above and propose a novel class of graphical models, graphical regression (GR), which has six major axes of innovation. (1) Non-stationarity: we allow both the edge strength and the structure of the graph to vary simultaneously with multiple covariates, which can be either continuous, discrete or a mixture of both. This allows the edge strength to vary smoothly with continuous covariates, which encourages similar graphs for similar covariate realizations. (2) Sparsity in both edge and covariate selection: sparsity is a critical assumption in a high-dimensional setting where the number of parameters is much larger than the sample size. Here, we impose two levels of sparsity: (i) sparsity in edge selection and (ii) sparsity in the relationship between edges and covariates. We select relevant edges through a thresholding parameter and select relevant covariates for each edge through a spike-and-slab prior formulation. (3) Functional nonlinearity in edge-covariate relationships: the relationships between edges and covariates are allowed to be nonlinear and are modeled using spline-based semiparametric representations. This allows the model to distinguish the functional form of the relationships (linear vs. nonlinear) through suitable orthogonal basis decompositions. (4) Accounting for subject-specific heterogeneity: the regression formulation of GR conveniently allows us to construct graphs that are subject-specific, which is often more appropriate than single (population-level) graphs in some contexts. For example, due to tumor heterogeneity, even patients with the same type of cancer are expected to have different structural changes in their molecular signaling pathways that are determined by their specific clinical characteristics. Hence, personalized gene networks recovered by GR may assist in developing precision medicine (tailored therapies) for treatment. (5) Prediction: our regression-based framework allows us to predict network structures for new patients. In doing so, we do not require new node-level data (e.g., gene expressions); rather, new covariates (e.g., prognostic factor levels) will suffice. (6) Selection theoretical justifications: our thresholded prior formulations for the edge/covariate selection connects to the spike-and-slab prior, a commonly used prior for Bayesian variable selection. Moreover, the “slab” part is shown to be equivalent to a non-local prior (Johnson and Rossell, 2010). Non-local priors tend to shrink small effect to zero which is desirable in our setting where we are only interested in edges with moderate to strong strength/effect. We show under certain conditions that the proposed prior achieves model selection consistency.

In essence, GR can be thought of as an infinite dimensional generalization of an ordinary DAG model that admits a fairly wide class of models. Special cases of GR include ordinary, conditional, time-varying, group- and context-specific DAG models (see detailed list at the end of Section 2.1). We compare GR to alternative and existing methods with extensive simulation studies and establish its superiority in terms of both model selection and prediction. We apply GR to multiple myeloma gene expression data and construct a subject-specific graph by incorporating important prognostic factors. While some of our results are confirmed by the existing biological literature, several interesting findings are potential targets for future validation studies. Furthermore, we develop an interactive web application to visualize and interpret the varying graph structures. Finally, we discuss the possibilities as well as challenges of extension to the undirected case in Section 6.

We remark that there is also a rich machine learning literature on dynamic Bayesian networks for time series data and causal inference (DBNs, Ghahramani (1998); Murphy (2002); Song et al. (2009); Robinson and Hartemink (2010); Grzegorczyk and Husmeier (2011); Dondelinger et al. (2013)) which is orthogonal to this work. DBNs focus on modeling temporal networks between time points and leave the network structure within each time slice unmodeled or fixed, which in our case is explicitly modeled by GR.

2. Model

2.1. Graphical regression

A DAG, G = (V, E), contains a set of nodes V = {1,…,p} and a set of directed edges E ⊂ V × V that does not contain any directed cycles. We denote a directed edge from node j to node h by h ← j. A DAG model for X is a pair consisting of a graph G, for which the nodes V represent random variables X = XV and an associated probability distribution , respecting the conditional independence encoded by G. The joint distribution of X admits a convenient factorization , where pa(h) denotes the parent set of node h. Assuming that X follows a multivariate normal distribution, each conditional distribution can be modeled by a linear regression, with and βhj ≠ 0 if and only if j ∈ pa(h). We assume that X is arranged according to a prior ordering such that βhj = 0 for all h ≥ j, then pa(h) = {j ≥ h + 1: βhj ≠ 0}. Without an ordering, DAGs within the same Markov equivalence class (i.e. DAGs with the same conditional independence structure) cannot be distinguished. In GR, we consider DAG and assume that factorizes according to a DAG,

| (1) |

where pa(h∣U), depending on covariates U, is the parent set of node h in graph G(U). In what follows, we call U the external covariates (or simply the covariates) and X the nodes. Each conditional distribution is then modeled by

| (2) |

We define Bhj(U) as a conditional independence function (CIF) since the event I{βhj(U) = 0} determines the DAG structure which in turn encodes the conditional independence of X as a function of U. This is stated formally in the following lemma.

Lemma 1 (Functional Directed Pairwise Markov Property). Assuming the conditional distribution of X given U satisfies (1) and (2) and h < j. If I{βhj(U) = 0} = 1, then Xh ⫫ Xj∣Xpa(h), U.

The proof of lemma 1 follows from the fact that I{βhj(U) = 0} = 1 implies there is a missing edge between h and j given U, which in turn implies that Xh ⫫ Xj∣Xpa(h), U by the directed pairwise Markov property (Lauritzen, 1996). GR is a fairly flexible class of models and has at least five special cases:

-

(1)

If U is empty, then GR reduces to the case of the ordinary Gaussian DAG model.

-

(2)

If U is discrete (e.g., binary/categorical group indicator), then βhj(U) is group-specific and GR is a group-specific DAG model.

-

(3)

If U is taken to be the last node Xp, then GR can be interpreted as a context-specific DAG (Geiger and Heckerman, 1996) where the graph structure varies with Xp.

-

(4)

If the distribution of βhj (U) is absolutely continuous with respect to Lebesgue measure, then GR is a conditional DAG model in which the strength of the graph varies continuously with the covariates but the structure is constant.

-

(5)

If U is univariate time points, then GR can be used for modeling time-varying DAGs. Given the CIFs βhj (U), the likelihood of a GR can be expressed as

| (3) |

where β = (βhj), and x, u are the n samples of X, U. In the following sections, we detail a methodological framework with the aim of making inference on the CIFs and consequently on a graphical regression model .

2.2. Modeling the conditional independence function

We parameterize the CIF for a given edge h ← j as a product of two component functions, thresholding function,

| (4) |

where the two components are (1) a smooth function θhj(·) of U to allow for both linear and nonlinear covariate effects and (2) a hard thresholding function, through a thresholding parameter thj to induce sparsity in the resulting graph structures. We discuss the need for and construction of the two components in detail below.

(1). Smooth function:

The parameterization of the CIF (βhj) for a given edge in terms of a smooth function encapsulates the fact that we expect the presence of an edge and its corresponding strength to vary similarly for similar covariate values. This fact is captured through the smoothness of θhj(·) that encourages similar graphs for close realizations of covariates. In principle, the smooth function θhj(·) can be either specified by a parametric (e.g., linear) function or modeled semiparametrically or nonparametrically; we choose the latter to achieve greater flexibility by letting the model choose the appropriate structural form. Specifically, suppose U = (Z, W) consists of discrete covariates (with appropriate coding) Z = (Z1,…, Zr) and continuous covariates W = (W1,…, Wq) with r + q = m. We construct θhj(·) through a structured additive model as

| (5) |

where φhjl is the effect between the discrete covariate Zl and the edge h ← j and fhjk(·) is the functional relationship between the continuous covariate Wk and the edge h ← j. We assume fhjk(·) to be smooth and do not specify a specific (parametric) functional form of fhjk(·). In principle, fhjk(·) can be modeled with any nonlinear functional representation, depending on the context of the application. For example, if the relationship follows a very localized (“spiky”) behavior, one could choose wavelets for fhjk(·); similarly if the relationship follows a periodic pattern, Fourier bases could potentially be used. However, in the absence of such information, we model fhjk(·) via spline-based semiparametric representations which allows graph structure to vary smoothly with external covariates. We defer the explicit formulation to Section 2.3 after we introduce the thresholding function.

(2). Thresholding function:

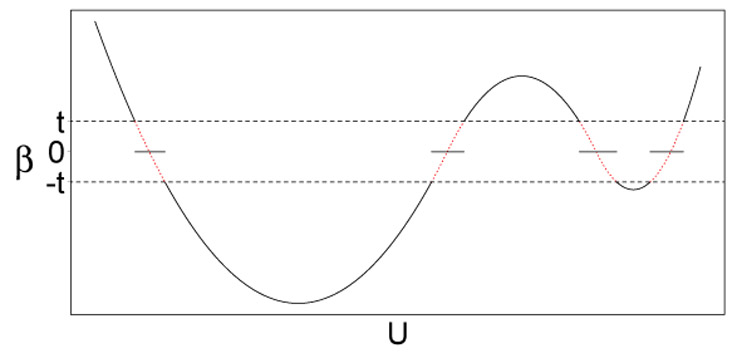

Now that we have defined θhj(U) as a smooth function of U, the solution is not sparse for any given realization of U (i.e., producing exact zeros). In other words, unconstrained estimation of θhj(·) might result in the graph structure having too many edges, some with very small magnitudes, which hinders both inference and interpretations (e.g., increased false positive edges). To account for this, we introduce an explicit thresholding mechanism through a parameter thj that admits sparsity, allowing the structure of βhj to vary with U. This edge-specific parameter can be interpreted as a minimum effect size of the CIF. The thresholding mechanism is conceptually depicted in Figure 2, where the solid curve represents β(U), the dashed lines indicate the threshold t and the dotted curves are the part of θ(υ) that is thresholded to zero. Note that we do not fix the threshold thj a priori but instead estimate it a posteriori by imposing a prior distribution, which we discuss in Section 2.4. Other thresholding functions including soft thresholding and nonnegative garrote thresholding have been studied in different contexts. A soft thresholding (Donoho and Johnstone, 1995) has advantage of continuous shrinkage over hard thresholding. However, it also introduces a constant bias/deviation. A nonnegative garrote thresholding (Gao, 1998) is a compromise between soft and hard thresholding. It is continuous like soft thresholding and has less bias than soft thresholding. Our choice of using hard thresholding in this paper is mainly motivated by the theoretical property of its connection to non-local prior as described in Section 3.

Figure 2:

Example plot of the conditional independence function (CIF). Solid curve represents the CIF β(U); dashed lines indicate the threshold t; dotted curves are thresholded to zero.

In theory, one can use any Bayesian variable selection technique to obtain a sparse estimator of βhj(U), for example, a spike-and-slab prior (George and McCulloch, 1993; Ishwaran and Rao, 2005). However, in order to allow the sparsity to vary with subject-specific external covariates U, we need to introduce a latent indicator for each potential edge and each subject, which would greatly increase the model complexity and hence reduce the scalability. For example, in our application with multiple myeloma dataset, the spike-and-slab construction would need n × p × (p–1)/2 = 173712 latent indicators whereas our model only needs p × (p — 1)/2 = 1128 thresholding parameters and this number can be further reduced to p = 48 (Section 2.4). In addition, while our model can perform graph structure prediction (Supplementary Material B), it is not straightforward to do so with spike-and-slab prior.

In the context of a single regression, the varying-coefficient model (VCM, Hastie and Tibshirani (1993)) can be viewed as a special case of the model defined by equations (2) and (4) with thj = 0 for all j = 1,…, p. In VCM, the regression coefficients vary smoothly with the covariates but the sparsity/structure of the model remains constant. We compare GR with VCM in simulation studies (Section 4).

2.3. Bayesian orthogonal basis p-splines

In this section, we specify the functional form of the relationship between the continuous covariates and edges. Our construction allows us to distinguish the functional form of the edge-covariate relationship, which generates more interpretable results in our application. In particular, we take a semiparametric approach and model the smooth function fhjk(·) by cubic b-splines , where wk denotes n samples of Wk, , represents the design matrix of the spline bases for Wk, and αhjk is the corresponding spline coefficient. We choose a large enough number of knots/bases to capture the local features in the functions. To prevent overfitting, we use p-splines (Eilers and Marx, 1996; Lang and Brezger, 2004) which restrict the influence of αhjk by a roughness penalty. P-splines can be treated as a mixed model or equivalently a Bayesian hierarchical model (Ruppert et al., 2003) by assuming αhjk ~ N(0, sK− with the singular penalty matrix K constructed from the second order differences of the adjacent spline coefficients. The coefficients that parameterize the constant and linear trends of fhjk(·) are in the null space of K and hence are not penalized. Also, notice that equation (4) is not identifiable because adding a constant to fhjk(·) and subtracting it from fhjk′(·) does not change the equation. Following Scheipl et al. (2012), we take a spectral decomposition of the covariance of ,

where uk is the orthonormal matrix of eigenvectors associated with positive eigenvalues in the diagonal matrix dk. Let be the orthogonal basis and , then has a proper normal distribution that is proportional to that of the partially improper prior of . Note that the prior of will be extended to a spike-and-slab prior in Section 2.4 for covariate selection.

In short, we have decomposed into a penalized part and an unpenalized part and reparameterized , where is the coefficient of the linear term wk, which allows us to put separate shrinkage/selection priors on and and to differentiate between linearly and nonlinearly varying effects (Section 2.4). The intercept term is merged into the global intercept μhj,

| (6) |

where θhj = (θ1hj,…,θnhj)T and zl are samples of Zl. In practice, we retain only the first several eigenvectors and eigenvalues that explain most (e.g., 99%) of the variability of fhjk(wk). This leads to a great dimension reduction of (for example, with 20 bases, the dimension of is usually reduced to around 10) and hence efficient computation. Note that this construction of the orthogonal spline basis requires specification of both the order and number of basis functions. We provide detailed discussion of the specification and sensitivity analyses to both in Supplementary Materials A and E, respectively.

2.4. Parameter priors and covariate selection

In this section, we detail a set of priors that are instrumental in defining an efficient computational approach for posterior sampling (Supplementary Material B). In Section 3, we outline the connection between these priors and the non-local priors (Johnson and Rossell, 2010, 2012) and ascertain their theoretical properties.

The CIF consists of a smooth function component and a thresholding component (as described in Section 2.2), which define three sets of parameters: (1) edge-covariate-specific parameters μhj, , and φhjl; (2) edge-specific parameter thj; and (3) node-specific parameter . Hereafter, we discuss the priors and hyper-priors for all the parameters of the two components with three objectives: (1) encourage sparsity in both graph structure and edge-covariate relationships; (2) improve computational efficiency; and (3) achieve the theoretical properties.

Priors for smooth function.

The smooth function is parameterized by the constant effect μhj; linear effect ; nonlinear effect ; and discrete effect φhjl for h = 1,…, p ‒ 1, j = h + 1,…,p, k = 1,…,q and l = 1,…,r. For simplicity, we first describe the prior for ; the priors for μhj, , φhjl are analogously defined later. We expand to be a product of a scalar ηhjk and a vector ξhjk with the same size as . The parameter expansion technique (Gelman et al., 2008) improves the mixing of the MCMC algorithm, which has also been observed by Scheipl et al. (2012) and Ni et al. (2015). We consider the case in which some of the covariates may contribute to only a few CIFs (i.e., we expect a sparse solution for ). The scalar ηhjk is assigned a spike-and-slab prior ηhjk ~ N(0, shjk) with shjk = γhjkνhjk with νhjk ~ IG(aν, bν) and γhjk ~ ρhδ1(γhjk) + (1 – ρh)δν0 (γhjk). The hyper-parameter v0 is a very small, pre-specified number. Such a setting implies that the binary variable γhjk indicates the importance of ηhjk and hence . If γhjk = 1 (slab), is non-zero, which suggests that there exists a nonlinear effect between the edge h ← j and the continuous covariate Wk. If γhj (spike), the effect of ηhjk is almost negligible and can be safely treated as zero (i.e., ), which in turn implies that there is no nonlinear effect between the edge h ← j and the covariate Wk. The variance parameter v0 can also be set to zero. Using a small but positive v0 was first proposed by George and McCulloch (1993) to exclude nonsignificant nonzero effects. It also allows us to avoid trans-dimensional MCMC which may mix poorly in moderate to high dimensional problem. Similar strategy is also used in recent Bayesian variable selection literature (Scheipl et al., 2012; Ročková and George, 2014). We assume a beta hyper-prior for ρh ~ Beta(aρ, bρ), which automatically adjusts for multiplicity (Scott et al., 2010). Finally, distributes ηhjk across the entries of and is assumed to follow a mixture of two normal distributions, with . The bimodal nature discourages small absolute values for and hence has better separation between zero and non-zero effects. The induced prior on is called the parameter-expanded normal-mixture-of-inverse-gamma (peNMIG) distribution (Scheipl et al., 2012). Similarly, we assume peNMIG priors for μhj, , φhjl. This allows us to investigate: (1) whether there exists an edge h ← j; (2) if true, whether the edge h ← j is constant or not; (3) if not, which set of covariates affect the edge; and (4) whether the relationship between the edge and a continuous covariate is linear or nonlinear.

Priors for thresholding.

The thresholding parameter thj controls the sparsity of the graphs. In the limit, as thj → 0 all nodes are fully connected for all subjects while as thj → ∞ all nodes are disconnected. We remark that thj is not fully identifiable. For example, when μhj, , , Φhjl all equal 0, thj can take any positive value since βhj is always zero. We resolve this issue by assuming thj = th, ∀j which enforces the identifiability of th as long as not all βhj’s are zero. We impose a uniform prior on th ~ U(0, tmax) (sensitivity analyses on the choice of tmax are provided in Supplementary Material E). In our experience, due to faster mixing, peNMIG needs only 1/4 of the iterations to achieve the same performance as the prior in Section 3. Therefore, we use peNMIG in the simulations and application.

We complete the prior specifications by assuming an inverse-gamma prior for residual variance . The choice of hyperparameters and a schematic of the complete hierarchical formulation of GR are provided in Supplementary Material A. We use an MCMC algorithm to sample all the parameters from their posterior distributions; details are given in Supplementary Mateiral B where we also discuss the process of using posterior samples for predictions. We consider two types of predictions, node and graph structure predictions. Node prediction, in which the goal is to impute the missing observation of some node Xh, is common in graphical models; whereas graph structure prediction, to the best of our knowledge, is novel. The idea is to predict the graph structure for a new subject based on the external covariates.

3. Theoretical properties

In this section, we first present a general result of the connection between our thresholded prior and the discrete mixture of a point mass at 0 and a non-local prior and then discuss the model selection consistency in Supplementary Material C. A non-local prior assigns a vanishing density to the neighborhood of the null hypothesis. In model selection, the density vanishes around 0 and therefore shrinks small effect to zero, which is appealing because we are interested in a parsimonious estimation of the graph (i.e., a sparse network). Several proposals of the theoretical properties of non-local priors have been published (Johnson and Rossell, 2010, 2012; Rossell and Telesca, 2015; Shin et al., 2015).

Consider a general hard thresholding mechanism, β = θI(∣θ∣ > t) for t ≥ 0, where and . The following proposition shows that with a fairly wide range of prior distributions, and , the marginal prior of β can be expressed as a discrete mixture of a point mass at 0 and a non-local prior.

Proposition 1. Suppose is bounded near 0 and Pr(t = 0) = 0. Then

where the latent variable ψ ~ Bernoulli(pψ) and ρψ = Et[Pr(∣θ∣ > t∣t∣)]. Moreover,

The proof is given in Supplementary Material C. It is straightforward to generalize proposition 1 to an independent multivariate case where , and β = (β1,…, βp) with βj = θjI(∣θj∣ > tj).

Corollary 1. Assume the conditions of proposition 1 apply. Then , where ψj ~ Bernoulli(ρψ) and ψ = (ψ1, …, ψp). Moreover, as αj → 0 for some j = 1, …, p.

One specific example of proposition 1 and corollary 1 is the exponential moment (eMOM) prior and the product eMOM (peMOM) prior, which under mild regularity conditions leads to Bayes factor consistency in a linear model when p ≤ n (Rossell and Telesca, 2015).

Example 1. Let and let be the Fréchet/inverse-Weibull distribution with density . Then is the eMOM prior with order d = 1, 2,… and is the peMOM prior. When d = 1, the normalizing constant and mixture weight .

Another example of corollary 1 is the modified peMOM prior, which provides model selection consistency even when p increases sub-exponentially with n (Shin et al., 2015).

Example 2. Let and , where . Then is the modified peMOM.

Our thresholding procedure extends the results in Rossell and Telesca (2015), where they prove the equivalence between the marginal density of truncation mixtures and nonlocal priors. In our construction, we threshold the parameter itself rather than truncate its density. Hence, the resulting marginal prior of Rossell and Telesca (2015) is a non-local prior while ours is a discrete mixture of the non-local prior and point mass at 0. The priors of thresholding/truncation parameters from the two approaches are also very different in order to achieve the same non-local prior. For example, to obtain eMOM, we assume to be inverse-Weibull while Rossell and Telesca (2015) sets , which is not analytically available. We discuss the circumstances under which the model selection consistency results for linear regression (Shin et al., 2015) can be applied to GR in Supplementary Material C.

4. Simulation studies

In this section, we empirically illustrate the performance of GR in terms of edge selection, covariate selection, node prediction and structure prediction. To the best of our knowledge, there are no alternative approaches that can perform DAG inference allowing graph structure to vary with both continuous and discrete covariates; therefore we compare GR to two types (Bayesian and non-Bayesian) of VCMs and to a group-specific DAG model (i.e., with categorical covariates), since both methods are a special case of GR as described in Sections 2.1 and 2.2. Throughout the simulations, we choose B = 20 spline bases and set the hyperparameters as v0 = 2.5 × 10−4, tmax = 1, (ασ, bσ) = (10−4, 10−4), (av, bv) = (5,100) and (aρ, bρ) = (0.5, 0.5) so that the priors have large variance. For Bayesian methods, we run 100,000 MCMC iterations, discard the first 50,000 iterations as burn-in and retain every 5th sample. For each scenario, we report the results on 50 simulated datasets. In addition, even though the focus of this paper is on DAG, we also compare GR to two state-of-the-art methods for group-specific undirected graphical models (see Supplementary Material D). We perform a sensitivity analysis on the number B of spline bases and all the hyperparameters tmax, (ασ, bσ), (aν, bν), (αω, bω) and v0 in Supplementary Material E, which shows that our method is robust to the choice of number of bases and hyperparameters.

4.1. Case I: single regression

We first compare GR with both Bayesian and frequentist VCM models in the single regression setting. Bayesian VCM (BVCM) is implemented using GR with t deterministically set to 0. For the frequentist approach, we choose recent work by Fan et al. 2014, iterative nonparametric independence screening (INIS). Since INIS only works on a univariate continuous covariate, we set q = 1 and r = 0.

Low-dimensional scenarios.

We set n = 200, p = 50 and generate the data from the following model

with one constant effect, one linearly varying effect and one nonlinearly (quadratically) varying effect. We draw (X1,…, X50, U) from N(0, I51). The true threshold t is set at 0.5 and 0. We also generate an independent test dataset with 200 samples for prediction.

We assess the performances in terms of prediction and of the selection of the true X’s. Specifically, we report the true positive rate (TPR), false discovery rate (FDR), Matthews correlation coefficient (MCC) and mean squared prediction error (MSPE) in Supplementary Material D. We do not report the area under the ROC curve because INIS does not have a tuning parameter that needs to be set by users. MCC is a balanced measure of binary classification that takes values between −1 (total disagreement) and +1 (perfect classification). GR performs the best in terms of both variable selection and prediction when t = 0.5. MCC is 0.960, 0.890 and 0.796 and the MSPE is 1.899, 1.927 and 6.941 for GR, BVCM and FDM, respectively. When t = 0, GR is still much better than INIS and comparable to BVCM (slightly lower MCC but also slightly lower MSPE). Note that the operating characteristics for INIS are calculated on the basis of only 2 or 3 simulated datasets (out of 50) because for the majority of the simulated datasets, INIS does not select any variables; this issue is probably due to relatively low signals and sample size.

High-dimensional scenarios.

We consider high-dimensional cases for which INIS is originally designed. We adopt the following data generating process (Fan et al., 2014):

We generate X1,…,Xp from a standard normal distribution and U from a standard uniform distribution. Six scenarios are considered: (1) n = 200, p = 50, t = 0.5; (2) n = 200, p = 50, t = 0; (3) n = 200, p = 200, t = 0.5; (4) n = 200, p = 200, t = 0; (5) n = 400, p = 1000, t = 0.5; and (6) n = 400, p = 1000, t = 0. Notice that scenario (6) is exactly the same as example 3 of Fan et al. (2014).

The operating characteristics are also reported in Supplementary Material D. In terms of selecting the X’s, GR outperforms BVCM and INIS for all cases. For example, when n = 200, p = 200 and the true model is VCM (i.e., t = 0), the values of MCC for GR, BVCM and INIS are 0.961, 0.903 and 0.826, respectively. The better MCC for GR is mostly due to its better control of false positives (with FDR = 0.037) through thresholding. However, we observe that in the high-dimensional setting (especially when n = 400, p = 1000), sometimes a low FDR is achieved at the price of a relatively low TPR because of an over-estimated thresholding parameter. As a result, the prediction is somewhat undermined. This may explain the unstable prediction performance of GR. We point out that the typical application of GR involves only a moderate number of variables (48 in our case study) and variable selection rather than prediction is of key interest in graphical models.

4.2. Case II: graphical model

The goal of this simulation study is to illustrate the selection performance of GR. INIS is not considered because of its poor performance in the single regression case and its inability to handle multivariate covariates and covariate selection. We consider two examples: (1) group-specific DAG (q = 0, r = 1) and (2) general GR (q, r > 0).

Group-specific DAG.

We first study the case where we only have one categorical covariate (i.e., q = 0, r = 1). As mentioned in Section 2.1, GR, in this case, is reduced to the group-specific DAG model. We set p = 20, n = 40 and assume two groups, z ∈ {0, 1}n, with equal size. The true thresholding parameter t is set to 0.5. We assume the sparsity of the edge-covariate relationship is 0.2 (i.e., γ ~ Bernoulli(0.2)). The non-zero covariate coefficient φ is generated from N(0, 0.72) for the first group. Then we add a normal noise level to φ for the second group. We vary the level of noise to achieve five scenarios with different percentages (10%, 30%, 50%, 80%, 100%) of differential edges. The error term ϵ is drawn from standard normal distribution. For comparison, we apply the Bayesian linear model selection method, SSVS (George and McCulloch, 1993) separately to each group.

In Figure 3, we plot the FDR and MCC of GR and SSVS for different scenarios. We do not show the TPR because it is nearly the same for the two methods (~0.6 across five scenarios). Overall, GR performs better than SSVS. This is expected since GR is a joint approach that pools the information from two groups while SSVS makes separate estimations for each group and is expected to lose efficiency. Not surprisingly, as the percentage of differential edges increases, the performance of SSVS becomes closer to that of GR. When the two groups have completely different edge sets, SSVS slightly outperforms GR.

Figure 3:

Matthews correlation coefficient (solid lines) and false discovery rate (dashed lines) are plotted against percentages of differential edges. The triangle markers represent GR; circle markers represent SSVS.

General GR.

We compare GR with BVCM in terms of both edge and covariate selection when covariates contain both continuous and discrete variables. We let n = 200, p = 50 and consider two scenarios: (i) q = r = 2 and (ii) q = r = 3. The sparsity of the edge-covariate relationship is assumed to be 0.015. The non-zero discrete covariate effect φ is generated from a standard normal distribution and the function f (·) of the significant continuous covariates is either linear or nonlinear with equal probability. Nonlinear functions are further randomly selected from f(x) = sin(8π/7x), f(x) = sin(4π/7x), f(x) = exp(x) – 1 and f(x) = x2 – 1. The continuous covariates w are drawn from a standard normal distribution and the discrete covariates z are randomly generated from {0, 1, 2}.

In Table 1, we calculate the TPR, FDR and MCC for the selection of continuous covariates (with prefix c), discrete covariates (with prefix d) and network edges (with prefix e). For both scenarios, the difference in covariate selection between GR and BVCM is almost negligible and can be explained by the trade-off between TPR and FDR. But in terms of edge selection, GR outperforms BVCM. For example, the eMCCs for GR and BVCM are 0.823 and 0.626. Moreover, GR is capable of predicting the network structure for new observations. We generate independent test data w* and z* with sample size 200. We show TPR, FDR and MCC for the predictive network (with prefix p) in Table 1. The performance is nearly the same as the estimation with the training data. On an AMD 2.2 GHz processor, the average computation times are 15.6 and 20.5 hours for scenarios (i) and (ii), which can be greatly reduced by parallelizing our algorithm via the factorization (2).

Table 1:

Networks with n = 200, p = 50. Prefixes: e-edge, c-continuous (covariates), d-discrete (covariates), p-predictive network. The numbers are calculated on the basis of 50 repetitions; standard deviations are given within parentheses.

|

q = r = 2 |

q = r = 3 |

|||

|---|---|---|---|---|

| GR | BVCM | GR | BVCM | |

| eTPR | 0.895 (0.031) | 0.985 (0.012) | 0.854 (0.029) | 0.988 (0.007) |

| eFDR | 0.120 (0.027) | 0.398 (0.030) | 0.180 (0.033) | 0.555 (0.038) |

| eMCC | 0.881 (0.024) | 0.755 (0.022) | 0.823 (0.023) | 0.626 (0.033) |

| cTPR | 0.967 (0.024) | 0.985 (0.017) | 0.955 (0.026) | 0.989 (0.012) |

| cFDR | 0.336 (0.056) | 0.303 (0.041) | 0.490 (0.039) | 0.467 (0.065) |

| cMCC | 0.797 (0.037) | 0.825 (0.026) | 0.691 (0.031) | 0.719 (0.051) |

| dTPR | 0.770 (0.068) | 0.826 (0.060) | 0.769 (0.039) | 0.828 (0.025) |

| dFDR | 0.128 (0.058) | 0.143 (0.078) | 0.169 (0.049) | 0.202 (0.080) |

| dMCC | 0.817 (0.053) | 0.838 (0.052) | 0.796 (0.035) | 0.809 (0.047) |

| pTPR | 0.889 (0.031) | - | 0.851 (0.029) | - |

| pFDR | 0.115 (0.027) | - | 0.175 (0.034) | - |

| pMCC | 0.880 (0.023) | - | 0.824 (0.023) | - |

Sensitivity analysis of ordering misspecification.

One assumption of our model is the knowledge of a prior ordering of the nodes. Here we investigate the sensitivity of our approach to the ordering misspecification. This analysis is performed following the criteria in Altomare et al. (2013) and Shojaie and Michailidis (2010). We apply GR to the data simulated under scenario (i) q = r = 2, assuming misspecified orderings. To quantify the distance between the misspecified ordering (used to fit the data) and the true ordering (used to generate the data), we compute the normalized Kendall’s tau distance which is the ratio of the number of discordant pairs over the total number of pairs. The Kendall’s tau lies between 0 and 1, with 0 indicating a perfect agreement of the two orderings and 1 indicating a perfect disagreement. Since GR is not able to learn the ordering, we will ignore the directions of the edges in reporting the graph structure learning performance in Table 2. The performance of GR under ordering misspecification can be also affected by the average number of v-structures across subjects for both the true ordering and the prior ordering which is also reported in Table 2. As expected, the performance deteriorates as the Kendall’s tau becomes larger. However, it remains reasonable (MCC= 0.74) when Kendall’s tau= 0.25 whereas for extreme scenario when Kendall’s tau= 1, MCC decreases to 0.51.

Table 2:

Sensitivity of misspecified ordering when q = r = 2. The columns under label “Misspecified ordering” are simulations with misspecified prior orderings; the last column reports the performance of GR using orderings estimated by order-MCMC. Directions of the edges are ignored. The standard error for each statistic is given in parentheses.

| Misspecified ordering |

order-MCMC |

||||

|---|---|---|---|---|---|

| Kendall’s tau | 0.00 | 0.25 | 0.51 | 1:00 | 0.15(0.05) |

| V-structures (prior) | 56 | 56 | 48 | 50 | 53(4) |

| V-structures (shared) | 56 | 37 | 18 | 0 | 41(7) |

| TPR | 0.895 (0.031) | 0.799(0.025) | 0.725(0.030) | 0.601(0.032) | 0.820(0.037) |

| FDR | 0.120 (0.027) | 0.286(0.028) | 0.399(0.031) | 0.509(0.031) | 0.232(0.062) |

| MCC | 0.881 (0.024) | 0.740(0.022) | 0.638(0.024) | 0.514(0.028) | 0.781(0.047) |

Although in our application the ordering is naturally retrieved from the reference signaling pathway, there are cases where such ordering is completely unknown or impractical to obtain. In these situations, the problem is usually decomposed into two sub-problems: (1) learn the ordering; and (2) given the ordering, learn the network structure. The first sub-problem can be solved by using an ordering learning algorithm such as order-MCMC (Friedman and Koller, 2003) which is an efficient MCMC algorithm over the ordering space with parameters and graph marginalized out. Here, we perform a simulation to study the performance of GR in conjunction with order-MCMC. We first apply order-MCMC (implemented by Kuipers and Moffa (2016)) to the data generated under scenario (i) for 100,000 iterations starting from a random ordering. Upon completion, the algorithm outputs a posterior sample of the orderings based on which we calculate the posterior mean ordering. Then we apply GR using the mean ordering as our prior ordering. The performance (Table 2) is quite reasonable compared to the results under the true ordering. For instance, the MCC of GR with order-MCMC (MCC=0.781) is between that of GR with the true ordering (MCC=0.881) and with slightly misspecified ordering (Kendall’s tau=0.25, MCC=0.740).

Scalability of number of covariates.

Our application considers m = 2 covariates. We conduct a simulation study (details provided in Supplementary Material D) to investigate the scalability of GR with respect to m = 6, 12, 18, 24. We find GR scales reasonably well in terms of both structure learning performance and computation time (scales linearly in m).

Comparison of different thresholding functions.

We focus on hard thresholding in this paper due to its appealing theoretical property. However, it is straightforward to extending our method to other thresholding functions such as soft thresholding and nonnegative garrote thresholding and it is interesting to empirically compare them. We carry out a simulation study for such comparison in Supplementary Material D. The results show very comparable performance among the three thresholding functions.

Sensitivity analysis of distribution misspecification.

We conduct a simulation study where the data are generated from a non-Gaussian distribution. The inference under GR is robust to distribution misspecification. Details are reported in Supplementary Material E.

5. Application

In the United States, it has been estimated that more than 26,000 new cases of multiple myeloma occurred and that multiple myeloma caused 11,000 deaths in 2015 (Siegel et al., 2015). Multiple myeloma is a late-stage malignancy of B cells in the bone marrow. According to the International Staging System (Greipp et al. 2005), multiple myeloma is classified into three stages by two important prognostic factors, serum beta-2 microglobulin (Sβ2M) and serum albumin: stage I, Sβ2M < 3.5 mg/L and serum albumin ≥ 3.5 g/dL; stage II, neither stage I nor III; and stage III, Sβ2M ≥ 5.5 mg/L. Multiple myeloma is known to exhibit great genomic heterogeneity and therefore many current research efforts have shifted their focus from traditional therapies to precision medicine for patients diagnosed with multiple myeloma on the basis of genomically defined pathways (Lohr et al., 2014). Our data consist of gene expressions and matched clinical information collected by the Multiple Myeloma Research Consortium, which can be downloaded from the Multiple Myeloma Genomic Portal (https://www.broadinstitute.org/mmgp/home). We focus on the genes mapped to the five critical signaling pathways identified by previous multiple myeloma studies (Boyd et al., 2011): The (1) Ras/Raf/MEK/MAPK pathway, (2) JAK/STAT3 pathway, (3) PI3K/AKT/mTOR pathway, (4) NF-κB pathway and (5) WNT/β-catenin pathway. After removing samples with missing values, we have n = 154 samples and p = 48 genes with q = 2 continuous covariates. Our goal is to reconstruct both population-level and subject-level gene regulatory networks, monitor the changes in gene regulatory relationships guided by prognostic factors and assess the contribution of prognostic factors to the varying networks. We obtain a prior ordering of genes from the reference signaling pathway for multiple myeloma (Boyd et al., 2011). In particular, we put gene i before gene j in the ordering if there is a directed path from gene j to gene i in the reference network. For genes that are not connected by a directed path, the ordering of them is arbitrary. We run two separate MCMCs, each with 500,000 iterations, discard the first 50% as burn-in and thin the chains by taking every 25th sample. The Markov chains exhibit good mixing by MCMC diagnostics. Model checking shows no significant evidence for lack of fit and model assumption violation. Details provided in Supplementary Material F.

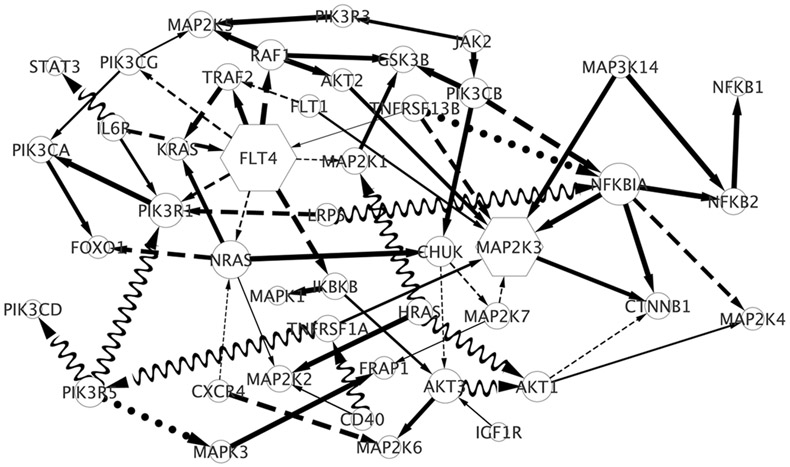

Population-level network.

The inferred network is shown in Figure 4. We find

-

(i)

38 positive constant edges/effects (solid lines): positive constant effect μhj > th, no linear effect and no nonlinear effect for all k = 1,…, q;

-

(ii)

20 negative constant effects (dashed lines): μhj < −th, , , ∀k = 1,…, q;

-

(iii)

2 linearly varying effects (dotted lines): and ∣θhj(wi)∣ > th for some k and i;

-

(iv)

9 nonlinearly varying effects (waves): and ∣θhj(wi)∣ > th for some k and i. The thickness of the solid or dashed line is proportional to its posterior probability and the node size is proportional to its degree. Some regulatory relationships are consistent with those reported in the existing biological literature. For example, NRAS/HRAS activating MAP2K2 is part of the well-known MAPK cascade, which participates in the regulation of fundamental cellular functions, including proliferation, survival and differentiation. Mutated regulation is a necessary step in the development of many cancers (Roberts and Der, 2007). We also observe that IL6R activates PIK3R1, which together with its induced PI3K/AKT pathway plays a key role in protection against apoptosis and the proliferation of multiple myeloma cells (Hideshima et al., 2001). Moreover, we find two driver/hub genes (degree≥ 8, hexagons in Figure 4), FLT4 and MAP2K3 with degrees 9 and 8, both of which play important roles in multiple myeloma. FLT4, also known as VEGFR3, is responsible for angiogenesis for multiple myeloma (Kumar et al., 2003) and MAP2K3 contributes to the development of multiple myeloma through MAPK cascades (Leow et al., 2013).

Figure 4:

The recovered gene regulatory network in Multiple Myeloma data integrating the prognostic factors: Sβ2M and serum albumin. The solid lines indicate positive constant effects; dashed lines indicate negative constant effects; dotted lines indicate linearly varying effects; waves indicate nonlinearly varying effects; the width of the solid or dashed line is proportional to its posterior probability; the node size is proportional to its degree. Hexagons are hub genes.

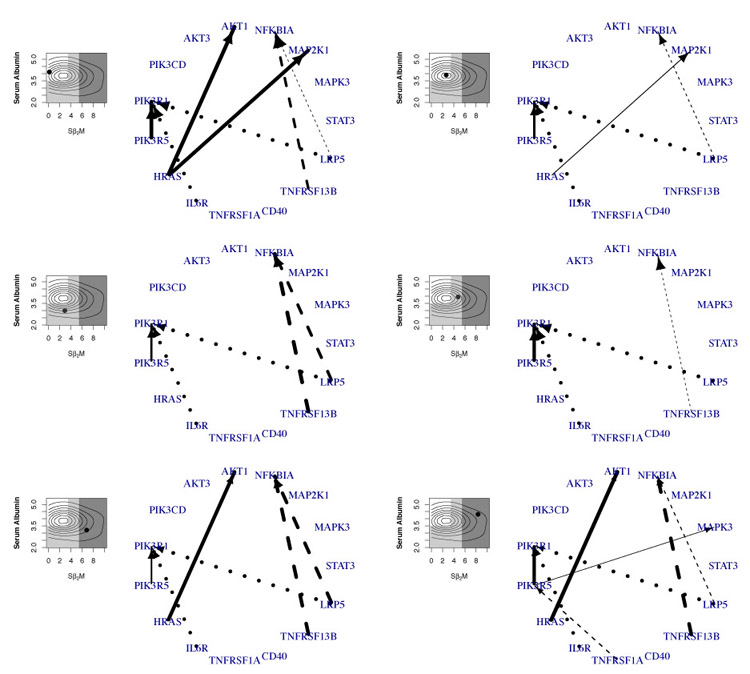

Subject-level network.

We provide six personalized (sub-)networks that correspond to different realizations of the covariates in Figure 5, two for each cancer stage. The prognostic factor levels are shown as black dots on the left and the background is color coded according to the cancer stage: white (stage I), grey (stage II) and black (stage III). Edges affected by Sβ2M are represented in solid lines; those affected by serum albumin are shown in dashed lines. The dotted lines are constant edges. These “personalized” networks at the patient-level can potentially aid in developing precision medicine strategies using drugs that target specific disruptions in these signaling pathways.

Figure 5:

Sub-networks with varying structure. The prognostic factor level is shown as the black dot on the left panel for each plot together with the kernel density (Sβ2M on the x-axis and serum albumin level on the y-axis). The background is color coded according to cancer stage: white (stage I), grey (stage II) and black (stage III). Edges affected by Sβ2M are represented in solid lines; those affected by serum albumin are dashed lines. The dotted lines are constant edges. The edge width is proportional to its posterior probability.

Using the R package shiny, we develop an interactive web application (https://nystat.shinyapps.io/MMCR-app) to visualize the varying structure of the network. The application interactively displays the varying sub-network in response to the user’s input of the desired values of Sβ2M and serum albumin; a feature enabled by graph structure prediction. We provide screenshots of the application in Supplementary Material G.

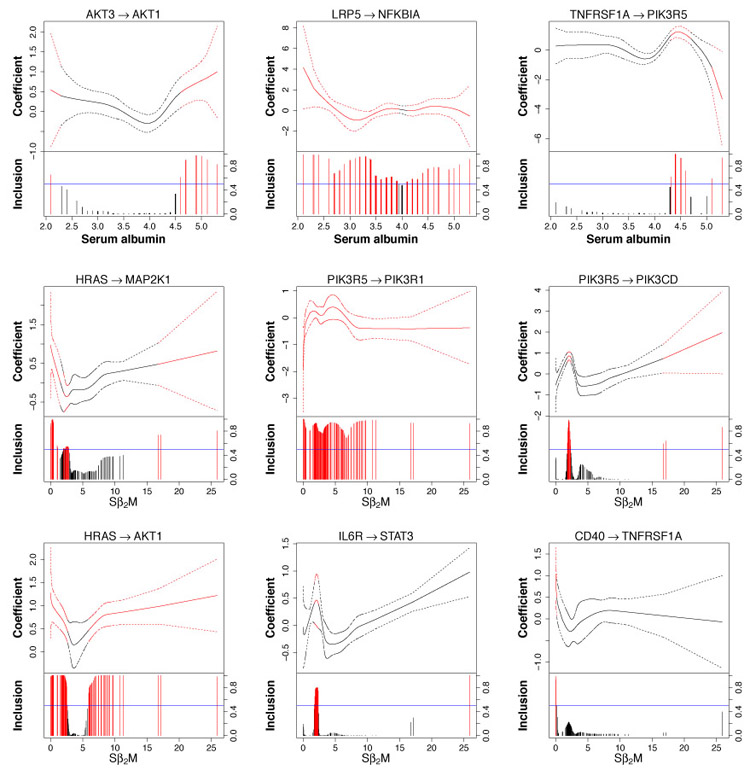

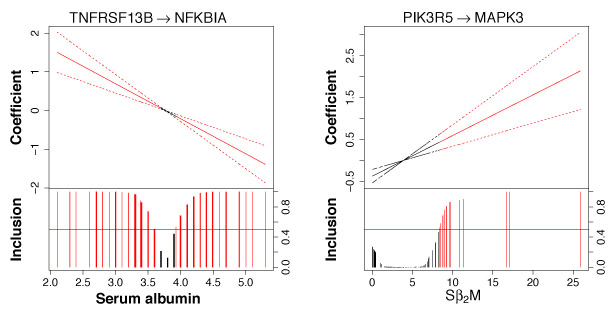

Varying gene regulatory relationships.

In Figure 6, we present 9 nonlinearly varying effects. Three of them (top panel of graphs) vary with serum albumin concentrations and the rest vary with Sβ2M. The fitted curve and 95% credible bands are shown in the top portion of each graph; posterior edge inclusion probabilities are shown in the bottom portion. Red lines and curves indicate that the effect is significant. Some of these regulatory relationships are noteworthy. For example, we observe an interesting trend between HRAS and AKT1 (bottom left graph in Figure 6). It has been found that RAS has the potential to activate the PI3K/AKT signaling pathway in multiple myeloma (Hu et al., 2003). Our study shows that HRAS activates AKT1 when Sβ2M< 2.64 or Sβ2M> 5.70 but the regulatory relationship is almost negligible when Sβ2M∈ (2.64, 5.70), which is close to the interval (3.5, 5.5) that is used for staging and corresponds to stage II multiple myeloma. In addition, we find some interesting connections for linearly varying effects (Figure 7). Many studies in multiple myeloma have revealed the importance of NF-κB activation, the inhibitor of which, NFKBIA, is degraded by TNFRSF (Silke and Brink, 2010). Our analysis (left graph in Figure 7) confirms the regulatory relationship between TNFRSF13B (a member of TNFRSF) and NFKBIA. Interestingly, the sign of the regulation switches at around the cutoff (3.5 g/dL) of serum albumin that distinguishes between stages I and II and, as expected, when serum albumin concentrations become higher, which suggests more advanced multiple myeloma, the inactivation becomes stronger.

Figure 6:

Nonlinearly varying effects. Top three graphs: CIFs vary with serum albumin concentrations. Bottom six graphs: CIFs vary with Sβ2M. For each graph, the fitted curve (solid) with 95% credible bands (dashed) are shown in the top portion and marginal posterior inclusion probabilities are shown in the bottom portion. Blue horizontal line is the 0.5 probability cutoff. Red lines and curves indicate significant coefficients.

Figure 7:

Linearly varying effects. Fitted curve (solid) with 95% credible bands (dashed) are shown in the top portion and marginal posterior inclusion probabilities are shown in the bottom portion. Blue horizontal line is the 0.5 probability cutoff. Red lines and curves indicate significant coefficients.

We assess the overall contribution of prognostic factors (Sβ2 M and serum albumin) on the varying networks across patients using a score defined in Supplementary Material F. The resulting scores are C1 = 6.5% and C2 = 5.9%, i.e., 6.5% (5.9%) of the total posterior edge inclusion probability is explained by Sβ2M (serum albumin) – thus Sβ2M has a marginally stronger effect on varying network topology than serum albumin.

6. Discussion

We have introduced a novel class of graphical models, GR, to estimate varying structure networks by incorporating additional covariates. We assume the relationships between edges and covariates are sparse and could be nonlinear. Our thresholded prior is equivalent to a mixture of a unit point mass and a non-local prior. Under the special case in which covariates are discrete, we show model selection consistency under such a prior. Empirically, GR exhibits good performance compared to VCM. We apply GR to multiple myeloma gene expression data, taking important prognostic factors as covariates, which reveals several interesting findings that include the patient-specific networks.

GR assumes a prior ordering of the nodes, which could be thought of as a limitation of our model. However, it is a common assumption of many recent DAG approaches (Shojaie and Michailidis, 2010; Altomare et al., 2013; Ni et al., 2015). Our sensitivity analysis shows reasonable performance of GR when there is moderate ordering misspecification. We provide a discussion on how to obtain a prior ordering in practice in Supplementary Material H including when the ordering is completely unknown a priori, one can first use an ordering learning algorithm and then apply GR given the estimated ordering. We test this strategy in simulation (Section 4.2) which shows satisfactory performance. Alternatively, one may want to fit an undirected graph instead. A straightforward way to apply GR for undirected graphs is through neighborhood selection (Meinshausen and Bühlmann, 2006): each node is regressed on all the other nodes instead of its parent set, and variable selection is applied to infer graph structure. The asymmetry is mediated by taking the union or intersection of the edges. However, although asymptotically it is a legitimate procedure, the asymmetry means that there is no well-defined joint distribution, hence posterior distribution, for finite samples. Therefore, we can obtain only a point estimator based on the marginal distribution of GR, but are not able to compute the posterior distribution. An alternative approach might be to focus on a decomposable graph. With this assumption, an undirected graph can be equivalently modeled as a DAG given a perfect ordering (Stingo and Marchetti, 2015). However, in the case of GR, it is not a trivial problem because the perfect ordering may change across subjects. We plan to address this issue in future work. Another direction of future work can be developing the theoretical property of GR when the covariates are continuous. One difficulty is the singularity of the joint distribution of θhj (w, z), which we hope to circumvent in the future.

Supplementary Material

Acknowledgements

V. Baladandayuthapani’s research was supported by grants from the National Cancer Institute (P30-CA016672, R01-CA160736, R01-CA194391, P50CA142509) and the National Science Foundation (1463233).

References

- Altomare D, Consonni G, and La Rocca L (2013). Objective bayesian search of gaussian directed acyclic graphical models for ordered variables with non-local priors. Biometrics, 69(2):478–487. [DOI] [PubMed] [Google Scholar]

- Bandyopadhyay S, Mehta M, Kuo D, et al. (2010). Rewiring of genetic networks in response to DNA damage. Science, 330(6009):1385–1389. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boyd KD, Davies FE, and Morgan GJ (2011). Novel drugs in myeloma: harnessing tumour biology to treat myeloma. In Multiple Myeloma, pages 151–187. Springer. [DOI] [PubMed] [Google Scholar]

- Cheng J, Levina E, Wang P, and Zhu J (2014). A sparse ising model with covariates. Biometrics. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Danaher P, Wang P, and Witten DM (2014). The joint graphical lasso for inverse covariance estimation across multiple classes. J R Stat Soc B, 76(2):373–397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- De Bono J and Ashworth A (2010). Translating cancer research into targeted therapeutics. Nature, 467(7315):543–549. [DOI] [PubMed] [Google Scholar]

- Dondelinger F, Lèbre S, and Husmeier D (2013). Non-homogeneous dynamic Bayesian networks with Bayesian regularization for inferring gene regulatory networks with gradually time-varying structure. Machine Learning, 90(2):191–230. [Google Scholar]

- Donoho DL and Johnstone IM (1995). Adapting to unknown smoothness via wavelet shrinkage. J Am Stat Assoc, 90(432):1200–1224. [Google Scholar]

- Eilers PHC and Marx BD (1996). Flexible smoothing with B-splines and penalties. Statistical Science, 11(2):89–121. [Google Scholar]

- Fan J, Ma Y, and Dai W (2014). Nonparametric independence screening in sparse ultra-high-dimensional varying coefficient models. J Am Stat Assoc, 109(507):1270–1284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedman N and Koller D (2003). Being bayesian about network structure. a bayesian approach to structure discovery in bayesian networks. Machine learning, 50(1-2):95–125. [Google Scholar]

- Gao H-Y (1998). Wavelet shrinkage denoising using the non-negative garrote. Journal of Computational and Graphical Statistics, 7(4):469–488. [Google Scholar]

- Geiger D and Heckerman D (1996). Knowledge representation and inference in similarity networks and bayesian multinets. Artificial Intelligence, 82(1):45–74. [Google Scholar]

- Gelman A, van Dyk DA, Huang Z, and Boscardin JW (2008). Using redundant parameterizations to fit hierarchical models. J Comp Graph Stat, 17(1):95–122. [Google Scholar]

- George EI and McCulloch RE (1993). Variable selection via gibbs sampling. Journal of the American Statistical Association, 88(423):881–889. [Google Scholar]

- Ghahramani Z (1998). Learning dynamic Bayesian networks. In Adaptive Processing of Sequences and Data Structures, pages 168–197. Springer. [Google Scholar]

- Greipp PR, San Miguel J, Durie BG, et al. (2005). International staging system for multiple myeloma. Journal of Clinical Oncology, 23(15):3412–3420. [DOI] [PubMed] [Google Scholar]

- Grzegorczyk M and Husmeier D (2011). Non-homogeneous dynamic Bayesian networks for continuous data. Machine Learning, 83(3):355–419. [Google Scholar]

- Guo J, Levina E, Michailidis G, and Zhu J (2011). Joint estimation of multiple graphical models. Biometrika, page asq060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hastie T and Tibshirani R (1993). Varying-coefficient models. J R Stat Soc B, 55(4):757–796. [Google Scholar]

- Heppner GH and Miller BE (1983). Tumor heterogeneity: biological implications and therapeutic consequences. Cancer and Metastasis Reviews, 2(1):5–23. [DOI] [PubMed] [Google Scholar]

- Hideshima T, Nakamura N, Chauhan D, et al. (2001). Biologic sequelae of interleukin-6 induced pi3-k/akt signaling in multiple myeloma. Oncogene, 20(42):5991–6000. [DOI] [PubMed] [Google Scholar]

- Hu L, Shi Y, Hsu J. h., Gera J, Van Ness B, and Lichtenstein A (2003). Downstream effectors of oncogenic ras in multiple myeloma cells. Blood, 101(8):3126–3135. [DOI] [PubMed] [Google Scholar]

- Ishwaran H and Rao JS (2005). Spike and slab variable selection: frequentist and Bayesian strategies. Annals of Statistics, pages 730–773. [Google Scholar]

- Johnson VE and Rossell D (2010). On the use of non-local prior densities in Bayesian hypothesis tests. J R Stat Soc B, 72(2):143–170. [Google Scholar]

- Johnson VE and Rossell D (2012). Bayesian model selection in high-dimensional settings. Journal of the American Statistical Association, 107(498):649–660. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kolar M, Parikh AP, and Xing EP (2010a). On sparse nonparametric conditional covariance selection. In ICML-10, pages 559–566. [Google Scholar]

- Kolar M, Song L, Ahmed A, and Xing EP (2010b). Estimating time-varying networks. The Annals of Applied Statistics, pages 94–123. [Google Scholar]

- Kuipers J and Moffa G (2016). Partition mcmc for inference on acyclic digraphs. Journal of the American Statistical Association, (just-accepted). [Google Scholar]

- Kumar S, Witzig T, Timm M, et al. (2003). Expression of vegf and its receptors by myeloma cells. Leukemia, 17(10):2025–2031. [DOI] [PubMed] [Google Scholar]

- Lang S and Brezger A (2004). Bayesian P-splines. J Comp Graph Stat, 13(1):183–212. [Google Scholar]

- Lauritzen SL (1996). Graphical models. Oxford University Press. [Google Scholar]

- Leow CC-Y, Gerondakis S, and Spencer A (2013). Mek inhibitors as a chemotherapeutic intervention in multiple myeloma. Blood cancer journal, 3(3):e105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu H, Chen X, Wasserman L, and Lafferty JD (2010). Graph-valued regression. In Advances in Neural Information Processing Systems, pages 1423–1431. [Google Scholar]

- Lohr JG, Stojanov P, Carter SL, et al. (2014). Widespread genetic heterogeneity in multiple myeloma: implications for targeted therapy. Cancer cell, 25(1):91–101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Luscombe NM, Babu MM, Yu H, et al. (2004). Genomic analysis of regulatory network dynamics reveals large topological changes. Nature, 431(7006):308–312. [DOI] [PubMed] [Google Scholar]

- Meinshausen N and Bühlmann P (2006). High-dimensional graphs and variable selection with the lasso. The Annals of Statistics, pages 1436–1462. [Google Scholar]

- Murphy KP (2002). Dynamic Bayesian networks. Probabilistic Graphical Models, M. Jordan, 7. [Google Scholar]

- Ni Y, Stingo FC, and Baladandayuthapani V (2015). Bayesian nonlinear model selection for gene regulatory networks. Biometrics. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nyman H, Pensar J, Koski T, Corander J, et al. (2014). Stratified graphical models-context-specific independence in graphical models. Bayesian Analysis, 9(4):883–908. [Google Scholar]

- Oates CJ, Korkola J, Gray JW, Mukherjee S, et al. (2014). Joint estimation of multiple related biological networks. The Annals of Applied Statistics, 8(3):1892–1919. [Google Scholar]

- Peterson C, Stingo FC, and Vannucci M (2015). Bayesian inference of multiple gaussian graphical models. Journal of the American Statistical Association, 110(509):159–174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roberts P and Der C (2007). Targeting the raf-mek-erk mitogen-activated protein kinase cascade for the treatment of cancer. Oncogene, 26(22):3291–3310. [DOI] [PubMed] [Google Scholar]

- Robinson JW and Hartemink AJ (2010). Learning non-stationary dynamic Bayesian networks. Journal of Machine Learning Research, 11(Dec):3647–3680. [Google Scholar]

- Ročková V and George EI (2014). EMVS: The EM approach to Bayesian variable selection. Journal of the American Statistical Association, 109(506):828–846. [Google Scholar]

- Rossell D and Telesca D (2015). Non-local priors for high-dimensional estimation. Journal of the American Statistical Association. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ruppert D, Wand MP, and Carroll RJ (2003). Semiparametric regression. Number 12. Cambridge university press. [Google Scholar]

- Scheipl F, Fahrmeir L, and Kneib T (2012). Spike-and-slab priors for function selection in structured additive regression models. J Am Stat Assoc, 107(500):1518–1532. [Google Scholar]

- Scott DW (2008). The curse of dimensionality and dimension reduction. Multivariate Density Estimation: Theory, Practice, and Visualization, pages 195–217. [Google Scholar]

- Scott JG, Berger JO, et al. (2010). Bayes and empirical-bayes multiplicity adjustment in the variable-selection problem. The Annals of Statistics, 38(5):2587–2619. [Google Scholar]

- Shin M, Bhattacharya A, and Johnson VE (2015). Scalable bayesian variable selection using nonlocal prior densities in ultrahigh-dimensional settings. arXiv preprint arXiv:1507.07106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shojaie A and Michailidis G (2010). Penalized likelihood methods for estimation of sparse high-dimensional directed acyclic graphs. Biometrika, 97(3):519–538. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Siegel RL, Miller KD, and Jemal A (2015). Cancer statistics, 2015. CA: a cancer journal for clinicians, 65(1):5–29. [DOI] [PubMed] [Google Scholar]

- Silke J and Brink R (2010). Regulation of tnfrsf and innate immune signalling complexes by trafs and ciaps. Cell Death & Differentiation, 17(1):35–45. [DOI] [PubMed] [Google Scholar]

- Song L, Kolar M, and Xing EP (2009). Time-varying dynamic Bayesian networks. In Advances in Neural Information Processing Systems, pages 1732–1740. [Google Scholar]

- Stingo F and Marchetti GM (2015). Efficient local updates for undirected graphical models. Statistics and Computing, 1(25):159–171. [Google Scholar]

- Yajima M, Telesca D, Ji Y, and Müller P (2015). Detecting differential patterns of interaction in molecular pathways. Biostatistics, 16(2):240–251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou S, Lafferty J, and Wasserman L (2010). Time varying undirected graphs. Machine Learning, 80(2–3):295–319. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.