PURPOSE:

Consent processes are critical for clinical care and research and may benefit from incorporating digital strategies. We compared an electronic informed consent (eIC) option to paper consent across four outcomes: (1) technology burden, (2) protocol comprehension, (3) participant agency (ability to self-advocate), and (4) completion of required document fields.

METHODS:

We assessed participant experience with eIC processes compared with traditional paper-based consenting using surveys and compared completeness of required fields, over 3 years (2019-2021). Participants who consented to a clinical trial at a large academic cancer center via paper or eIC were invited to either pre-COVID-19 pandemic survey 1 (technology burden) or intrapandemic survey 2 (comprehension and agency). Consent document completeness was assessed via electronic health records.

RESULTS:

On survey 1, 83% of participants (n = 777) indicated eIC was easy or very easy to use; discomfort with technology overall was not correlated with discomfort using eIC. For survey 2, eIC (n = 262) and paper consenters (n = 193) had similar comprehension scores. All participants responded favorably to at least five of six agency statements; however, eIC generated a higher proportion of positive free-text comments (P < .05), with themes such as thoroughness of the discussion and consenter professionalism. eIC use yielded no completeness errors across 235 consents versus 6.4% for paper (P < .001).

CONCLUSION:

Our findings suggest that eIC when compared with paper (1) did not increase technology burden, (2) supported comparable comprehension, (3) upheld key elements of participant agency, and (4) increased completion of mandatory consent fields. The results support a broader call for organizations to offer eIC for clinical research discussions to enhance the overall participant experience and increase the completeness of the consent process.

INTRODUCTION

The informed consent (IC) process is the foundation of research participant protection and is critical to both clinical care and research. There is consensus that the IC process may benefit from incorporating digital and automation strategies, but health care digitization should not exceed the technology capacity of participants and create additional burden.1 Participant technology burden associated with the use of digital tools in the IC process for clinical research (CR) is not well documented.

Studies have shown that enhancing the consent experience with introductory videos, visual aids, and testing can improve participant comprehension.2-4 However, most studies to date comparing electronic informed consenting (eIC) to paper have used focus groups,5 mock studies,6 nononcologic trials with small sample sizes (N = 35, 50, 120),7-9 or disease-specific oncology trials within a single sex and small sample size (N = 71).10 A recent review of research studies where paper-based IC processes were replaced with eIC for research did not consistently find that eIC was superior in facilitating participants' comprehension of information.11 Another systematic review of digital tools in the IC process found that digitization did not adversely affect outcomes and suggested that multimedia tools were desirable.12 To date, most studies comparing paper-based IC with eIC have been diverse in design and hard to summarize because of the lack of standardization. Additionally, these studies do not cover the high-volume and complex oncology clinical trials performed at major cancer centers.

IC discussion quality is typically assessed through the lens of information retention or communication of federally required elements. Less inquiry has been conducted into participants' subjective experience of IC as a quality metric, acknowledging their critical position as research stakeholders and assessing their ability to self-advocate in the decision-making process.13 Additionally, prospective study participants are at an inherent disadvantage as the consenting professional brokers the currency of knowledge.14 There is widespread consensus that the current paper-based IC process can be improved to be more participant centered in a way that facilitates research participants making their most informed, autonomous choice.15 Current literature is lacking in studies assessing potential differences in participant agency between eIC and paper-based consenting.

Few data are available that explicitly compare completion of mandatory CR consent document fields across eIC and paper. However, studies of surgical procedures have shown that standardizing the consent process through electronic means significantly reduced the disadvantages found in paper-based processes (illegibility, incomplete fields, and document loss) while increasing participant and staff satisfaction.16 A recent surgical consent study with a sample size of 200 showed that eIC decreased consenting error rates from 32% with paper to 1%.17 Another surgical consent completeness study found error rates of 10% to 40% across 160 paper-based cases versus zero across 29 eIC cases.18

To address these issues, we implemented an in-house developed eIC application for CR consenting in 2017 to support participants across the previously described four key outcomes. Our implementation was developed to digitalize the research participant consenting experience with an educational engagement model. It allows consenting professionals and participants to move through the consent document synchronously without bypassing required fields and displays the consent form in a series of easily navigable tiers. Participants self-select their preferred mode of consent and review the document in real time with their consenting professional on a tablet or laptop/desktop, either in person or via eIC-telemedicine with synchronous two-way video, voice, and screen share. eIC is enabled for CR participants who are English speaking and literate. Completed consents commit to the participant's electronic health record and MyMSK Patient Portal (PP); a paper version is available on request.

This paper describes our experiences across four key aims: (1) determine whether eIC poses added technology burden on oncology participants compared with paper-based consenting methods, (2) measure participant comprehension in the consent process via a standardized survey, (3) use participant feedback on the consent experience to establish indicators of participant agency from their unique perspective, and (4) assess eIC completion of required consent document fields versus paper.

METHODS

Setting

Memorial Sloan Kettering (MSK) is a high-volume National Cancer Institute (NCI)–designated Comprehensive Cancer Center that in 2020 had 22,822 inpatient admissions and 781,924 ambulatory visits. Of all CR participant consents in 2021 (32,696), 46% (15,056) were performed electronically. Use of eIC for CR consenting grew consistently from 2019 (6,434) through 2021 (15,056; a 134% increase).
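The two percentages in this paragraph follow directly from the reported consent counts. As a minimal sketch (variable names are illustrative and not from the study), the arithmetic can be reproduced as follows:

```python
# Consent counts as reported in the Setting paragraph.
total_consents_2021 = 32_696   # all CR participant consents in 2021
eic_consents_2021 = 15_056     # consents performed electronically in 2021
eic_consents_2019 = 6_434      # consents performed electronically in 2019

# Share of 2021 consents that used eIC.
eic_share_2021 = eic_consents_2021 / total_consents_2021

# Relative growth in eIC consents from 2019 to 2021.
eic_growth = (eic_consents_2021 - eic_consents_2019) / eic_consents_2019

print(f"eIC share of 2021 consents: {eic_share_2021:.0%}")
print(f"eIC growth, 2019 to 2021: {eic_growth:.0%}")
```

Note that the growth figure is relative to the 2019 baseline, so a 134% increase means 2021 volume was roughly 2.3 times the 2019 volume.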

Survey Approach and Design

We conducted a two-phase survey approach over 3 years using an iterative design methodology. This quality improvement approach allowed insight into participants' overall perception and general usability of eIC during survey 1. Those outcomes gave us confidence to explore more complex themes of comprehension and agency by focusing on two specific high-volume institutional protocols in survey 2.

Survey 1 assessed baseline participant experience with eIC and digital burden with technology via a five-question Likert scale-based survey assessing comfort with technology, how difficult or easy eIC was to use, what participants liked most about eIC, suggestions for improvement, and whether they would recommend eIC to another participant (Data Supplement). The survey was conducted over nine prepandemic months in 2019 (February-November). Digital burden was ascertained by first assessing the participant's comfort with technology in general and then asking their opinion on the eIC application's difficulty or ease of use for their consent discussion.

Survey 2 assessed comprehension of consent content and indicators of participant agency. Participants had prepandemic office visits and eIC-telemedicine visits during the pandemic, from December 2019 through May 2021, during which they consented to either of our two highest accruing institutional protocols: Storage and Research Use of Human Biospecimens and Genomic Profiling in Cancer Participants (ClinicalTrials.gov identifier: NCT01775072). A ten-item (four comprehension and six agency) survey with free-text response options tested comprehension of key elements of these nontherapeutic studies. Comprehension questions were tailored to each of the two protocols (Data Supplement), and scoring was from 0% to 100%. Agency statements prompted the participant to answer yes or no. Both protocols were chosen because they are pan-institutional and disease and stage agnostic and because they gave large sample sizes to compare eIC versus paper consenting. The Genomic Profiling protocol consent has a video component for both paper and eIC, which is shown to each participant as part of the consent discussion. The video contains an overview of the protocol and highlights key tenets of genetic testing, including information on New York State confidentiality of genetic test records.

Survey Delivery

Surveys were distributed electronically through participants' PP, with responses collected and managed using a Research Electronic Data Capture (REDCap) tool hosted at MSK.19 Electronic delivery was chosen because of logistical constraints and high use of the PP by both survey cohorts: 84% for survey 1 and 88% for survey 2. To preserve confidentiality, surveys were anonymous. Surveys were sent within 72 hours of participant consent to their primary protocol with no time limit for response. This research was deemed exempt by the MSK Institutional Review Board.

Surveys, including comprehension questions and agency statements, were developed and workshopped with patient education experts, each protocol's principal investigator, Institutional Review Board, CR operations, quality assurance, and with patients who provided their feedback in mock consent and survey sessions facilitated by MSK's Patient Family Advisory Council on Quality.

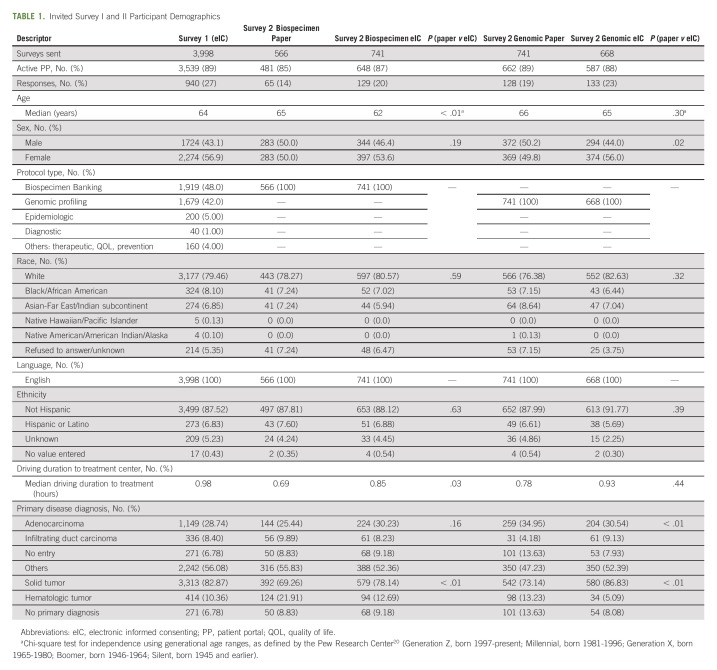

Participant Eligibility and Demographics

Eligible individuals sent PP invitations to participate were literate, English-speaking adults who had consented to a primary CR protocol. Remuneration was not offered. Surveys were not socialized in advance with potential participants during their primary protocol consent discussions. PP activity and demographic data are known for the sample to whom surveys were distributed, including age, sex, race, ethnicity, primary disease diagnosis, and zip code (Google Maps Application Programming Interface was used to calculate driving duration to the treatment location zip code; Table 1). However, data on actual respondents are unknown because of anonymization.

TABLE 1.

Invited Survey I and II Participant Demographics

Survey Distribution

Survey 1 was distributed to all first-time eIC application users consenting to any protocol in that timeframe. Consent sessions took place in-person using a tablet or laptop/desktop, and participants self-selected eIC for their primary clinical trial(s) discussion. Survey 2 participants self-selected eIC or paper to consent to either of two high-volume accruing nontherapeutic CR institutional protocols. Survey 2 was conducted intrapandemic, and consent sessions took place in person (using paper or eIC on a desktop or tablet) or via eIC-telemedicine.

Outcomes

We compared eIC with paper-based consenting across four primary outcomes: whether a digital tool such as eIC (1) imposes increased technology burden, (2) improves comprehension in the consent discussion, (3) affects indicators of participant agency, and (4) increases completion of required consent document fields.

Analysis

Survey responses and completeness data were analyzed through a mixed quantitative and qualitative approach. Wilcoxon rank sum was used to compare agency scores (sum of yes responses across six total agency statements) between eIC and paper groups. Agency score is not a validated measure and was developed by the investigators as described herein. Free-text responses were reviewed by two coders for keyword indicators of content and sentiment, assigned a valence (positive, negative, and neutral), and allocated into thematic categories. Comment valences were categorized into positive versus nonpositive (ie, negative or neutral) and assessed using chi-square tests.
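The agency comparison described above can be sketched as follows. This is an illustrative reconstruction only: the function names are hypothetical, the example responses are invented placeholders rather than study data, and the test uses a normal approximation with a tie correction and no continuity correction, which may differ from the software settings the authors used.

```python
import math
from collections import Counter

def agency_score(responses):
    """Sum of 'yes' answers across the six agency statements."""
    return sum(1 for r in responses if r == "yes")

def midranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def wilcoxon_rank_sum(x, y):
    """Two-sided rank-sum test via the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    w = sum(midranks(pooled)[:n1])          # rank sum of the first group
    mean_w = n1 * (n + 1) / 2
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w == 0:                          # every score identical
        return w, 1.0
    z = (w - mean_w) / math.sqrt(var_w)
    return w, math.erfc(abs(z) / math.sqrt(2))

# Hypothetical agency scores (0-6) for illustration; NOT study data.
eic_scores = [6, 6, 5, 6, 6, 4, 6, 5]
paper_scores = [6, 5, 6, 6, 6, 6, 5, 6]
rank_sum, p_value = wilcoxon_rank_sum(eic_scores, paper_scores)
print(f"rank sum = {rank_sum}, two-sided p = {p_value:.3f}")
```

Because agency scores take only seven possible values and most respondents score at or near the maximum, ties are heavy, which is why the variance includes the tie correction term.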

RESULTS

Usability and Technology Burden

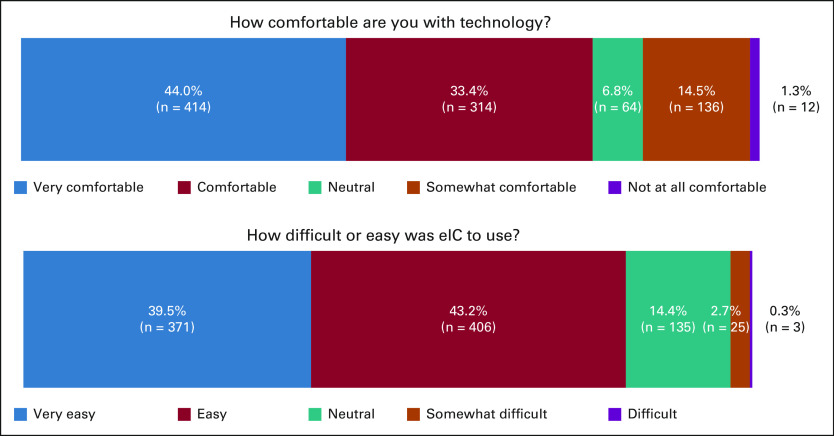

From survey 1, 940 of 3,539 invitees completed the initial five-question experience survey (27% response). Overall, 15.8% of participants expressed being not at all comfortable (1.3%) or somewhat comfortable (14.5%) with technology in general; however, only 3% of participants noted that eIC was difficult (0.3%) or somewhat difficult (2.7%) to use (Fig 1). Most respondents (n = 777; 83%) indicated electronic consenting was very easy (n = 371) or easy (n = 406) to use.

FIG 1.

Survey 1 participant results on eIC application usability and technology burden. eIC, electronic informed consenting.

Most respondents (896, 95%) indicated that they would recommend eIC to another research participant. Free-text feedback from 467 participants when asked, “What did you like most about eIC?,” partitioned into three distinct themes: (1) easy to use and convenient, (2) value in having the electronic version of the final consent available for reference, and (3) the video helped with their overall comprehension of the clinical trial.

Survey 1 Demographics

Survey 1 invitees consented to 17 different parent protocols, with majority representation (90%) from the Biospecimen Banking (48%) and Genomic Profiling (42%) protocols (the same primary protocols used for survey 2; Table 1). The median driving duration to treatment was 0.98 hours.

Consent Form Comprehension

From survey 2, there were 455 respondents (of 2,378) who completed the survey (19% response). The comprehension survey for the Biospecimen Banking protocol had 65 paper consenters with an average comprehension score of 65%, versus 129 eIC users, who averaged 66%. For the Genomic Profiling protocol, 128 paper consenters had an average comprehension score of 71% versus 133 eIC users who averaged 73%. Differences in comprehension scores were not statistically significant.

Survey 2 Demographics

Survey 2 invitees were primarily seen onsite and chose to consent via eIC or paper, although eIC-telemedicine may have been used. In chi-square tests of independence, proportions of invitees by race and ethnicity were shown to be similar across the eIC and paper invitees for both protocols (P > .05). However, primary disease type, driving duration (two-sample t test), and age ranges (defined generationally20) were not equally distributed among eIC and paper invitees to the Biospecimen Banking protocol (P < .01, P < .05, and P < .05, respectively). Primary disease diagnosis and sex of invitees were not equally distributed between eIC and paper invitees to the Genomic Profiling protocol (P < .01 and P < .05, respectively). Age, race, and ethnicity were found to be equally distributed when comparing all survey 1 invitees with all survey 2 invitees in a chi-square test of independence (P > .05); however, differences were noted in driving duration (two-sample t test), sex, primary diagnosis, and tumor type (P < .05; Table 1).

Although we did not poll participants on their access to required technology, invitees who were sent survey 1 and survey 2 had active PP usage of 84% and 88%, respectively, suggesting a high proportion of technology access. Within-protocol PP usage for survey 2 invitees: Biospecimen Banking, 85% of paper versus 87% of eIC; Genomic Profiling, 89% of paper versus 88% of eIC.

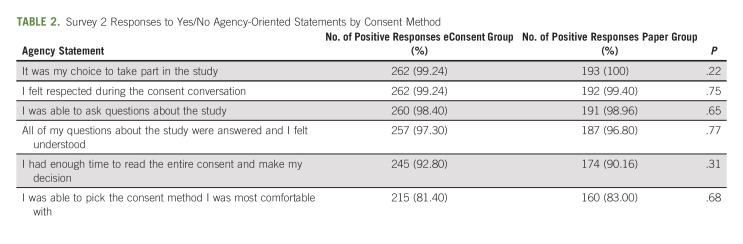

Responses to Agency-Oriented Statements

The 457 participant responses to the six agency statements in survey 2 are summarized in Table 2. Differences in agency statement responses between electronic and paper consenting methods were not statistically significant (median agency score = 6 across all groups; interquartile range = 0-1).

TABLE 2.

Survey 2 Responses to Yes/No Agency-Oriented Statements by Consent Method

Agency Free-Text Responses

One hundred fifty-eight of 262 eIC participants (62%) and 169 of 193 paper participants (87%) provided free-text responses to the agency statements. Among the total free-text responses (n = 296), 35% were determined to show positive sentiment toward the consent experience, 47% neutral, and 18% negative. For eIC, there were 44% positive comments, 15% negative, and 41% neutral comments. Paper consents generated 32% positive comments, 24% negative, and 44% neutral comments. Experiences with electronic consenting generated a significantly higher proportion of total positive comments than paper consenting (58 of 187 for eIC and 39 of 182 for paper), as compared with nonpositive comments (P < .05). Use of eIC also generated a significantly higher proportion of positive comments associated with the conduct (eg, kindness and professionalism) of the consenting professional (20 of 21 for eIC and 17 of 27 for paper) versus nonpositive comments, compared with the paper group (P < .01). Comments with positive agency sentiment included “I found the electronic version easier to follow along and read” and “It was conducted in an extremely professional way.” Examples of negative comments included “I was extremely uncomfortable signing an iPad with my finger” and “Elderly population may have difficulty with digital approach.”
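The two valence comparisons can be checked against the reported comment counts with a Pearson chi-square test on a 2 x 2 table. The sketch below is a minimal illustration, assuming no continuity correction (the text does not state whether one was applied); the function name is hypothetical.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (1 df, no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value

# Rows: eIC, paper. Columns: positive, nonpositive (negative or neutral).
# All comments: 58 of 187 positive for eIC, 39 of 182 positive for paper.
stat_all, p_all = chi_square_2x2(58, 187 - 58, 39, 182 - 39)

# Comments on consenter conduct: 20 of 21 positive for eIC, 17 of 27 for paper.
stat_conduct, p_conduct = chi_square_2x2(20, 21 - 20, 17, 27 - 17)

print(f"all comments:     chi2 = {stat_all:.2f}, p = {p_all:.4f}")
print(f"conduct comments: chi2 = {stat_conduct:.2f}, p = {p_conduct:.4f}")
```

Under these assumptions, both results fall below the thresholds reported in the text (P < .05 and P < .01, respectively). The conduct table has one expected count slightly below 5, so the chi-square approximation is less reliable there and an exact test would be a reasonable cross-check.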

Consent Method Completeness

Completion of eIC fields was 100% (0 deficiencies in 235 consents) and 94% for paper (15 deficiencies [6.4%] in 235 consents) across four protocols (both survey 2 protocols, another biospecimen protocol, and an institutional phase III therapeutic protocol). Paper consent form deficiencies included missing dates, required fields, and pages. A chi-square test of independence showed eIC to have a significant association with improved completeness versus paper (P < .001).
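Because one cell of the completeness table is zero, a reader may wish to confirm the reported association with an exact test. The sketch below applies a two-sided Fisher exact test to the counts reported above; this is a swapped-in cross-check, not the chi-square test the authors report, and the function name is hypothetical.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p value for the 2 x 2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    n = a + b + c + d
    row1 = a + b          # size of the first group
    col1 = a + c          # total count in the first column
    denom = comb(n, row1)

    def prob(x):
        # Probability that the first group holds x of the col1 events.
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    lo = max(0, row1 + col1 - n)
    hi = min(row1, col1)
    p_observed = prob(a)
    tolerance = 1 + 1e-9  # guards against floating-point near-ties
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= p_observed * tolerance)

# Rows: eIC, paper. Columns: deficient consents, complete consents.
p_completeness = fisher_exact_two_sided(0, 235, 15, 220)
print(f"two-sided Fisher exact p = {p_completeness:.2e}")
```

Under this cross-check the p value remains well below .001, consistent with the reported chi-square result.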

DISCUSSION

Survey 1 showed that our implementation of eIC was well tolerated, did not present a technology burden, and offered satisfactory functionality for participants in the consenting process. Discomfort with technology was not correlated with discomfort while using the eIC application. This suggests that the eIC application is approachable and accessible to participants even in the setting of technology aversion.

Survey 2 suggests that eIC supports equivalent comprehension of study details compared with a paper-based discussion process. Use of the informational video in the Genomic Profiling protocol regardless of consent medium may account for improved scores on the comprehension survey, and we note this for future exploration. This video is separate from the two-way video used during telemedicine.

Agency scores suggest a high degree of participant satisfaction and maintenance of a positive consent experience overall, regardless of consent medium or protocol. Scores suggest that even in cases when one or more agency-associated criteria were not met (eg, participant was not offered the choice of consent method), participants did not feel their overall experience was undermined. Noted areas for improvement are giving participants enough time to make their decision, adequate information regarding complex or detailed study elements, and consent medium choice. This study was a pilot to compare feedback on participants' eIC and paper consent experiences through the lens of agency. Further investigation is planned to establish a validated agency measure in this context.

Age, race, and ethnicity were equally distributed between survey 1 and survey 2 invitees, and race and ethnicity were equally distributed between eIC and paper groups for each protocol within survey 2. However, across all survey invitees during the timeframe of recruitment, unequal distributions were noted for primary disease diagnosis, tumor type, driving duration, and sex of invitees. These may be indicative of broader consent trends within the MSK CR population. Investigation of these differences is beyond the scope of the current study, but we note them for future exploration.

As a strength, MSK is well suited to explore the consenter-consented dynamic, agency, and accessibility in the CR encounter. Additionally, the scope and functionality of our eIC platform allows consenting to occur with participants in-person and virtually via a telemedicine platform. This further allows us to distribute surveys efficiently and automatically to a large cross-section of our demographic given the considerable number of participants who choose to participate in CR protocols. Moreover, invitees who were sent survey 1 mainly consented to two primary protocols (90%): Biospecimen Banking and Genomic Profiling. These two protocols were the sole focus of survey 2.

A potential limitation was that participants' responses may be influenced by recall bias since a time limit was not imposed for survey completion. Selection bias may also have been a factor since all surveys were electronic, and participants self-selected their preferred consent medium. Recognizing that electronic surveys provide some selection bias against those with limited technology ability/access, the authors believe that this was not a significant driver for exclusion given invitees' PP use. Survey burden was likely a driver for our observed response rates, with higher survey burden resulting in more dropouts and/or nonresponse. Survey 1 had lower survey burden with five opinion questions (27% response), whereas survey 2 had higher survey burden with a 10-question comprehension and agency assessment (19% response). Other limitations are that this study was conducted at a single center, and only two protocols were chosen to assess consent comprehension and agency.

Further investigation into participant self-advocacy, knowledge as a currency of power, and the locus of decision making as elements of the consent discussion is warranted. Data are also needed for more complex therapeutic trials where participants may be seeking life-saving treatment, and, therefore, interactions may be more psychoemotionally charged. We are probing further into digital literacy and technology barriers for participants from marginalized/vulnerable populations in our current investigations. Additionally, we are investigating health equity barriers and socioeconomic status drivers for CR consenting.

In conclusion, this implementation of eIC was well received by participants, did not impose an additional technology burden, afforded similar comprehension of key study elements, and maintained specified hallmarks of an agency-driven consent experience per our selected indicators. Completeness of the consent document was improved using eIC compared with paper.

PRIOR PRESENTATION

Presented in part at the 2022 ASCO Annual Meeting, Chicago, IL, June 3-7, 2022 and the 2020 ASCO Annual Meeting, virtual, May 29-31, 2020.

SUPPORT

This research was funded in part through the NIH/NCI Cancer Center Support Grant P30 CA008748.

AUTHOR CONTRIBUTIONS

Conception and design: Michael T. Buckley, Molly R. O'Shea, Collette Houston, Stephanie L. Terzulli, Joseph M. Lengfellner

Administrative support: Michael T. Buckley, Collette Houston, Joseph M. Lengfellner

Collection and assembly of data: Michael T. Buckley, Sangeeta Kundu, Suken Shah

Data analysis and interpretation: Michael T. Buckley, Molly R. O'Shea, Allison Lipitz-Snyderman, Gilad Kuperman, Suken Shah, Alexia Iasonos, Paul Sabbatini

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Digitalizing the Clinical Research Informed Consent Process: Assessing the Participant Experience in Comparison With Traditional Paper-Based Methods

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/op/authors/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians.

Alexia Iasonos

Stock and Other Ownership Interests: Bristol Myers Squibb/Sanofi

Consulting or Advisory Role: Intelligencia, Mirati Therapeutics

Paul Sabbatini

Honoraria: UpToDate

Research Funding: Bristol Myers Squibb (Inst), Ludwig Institute for Cancer Research (Inst)

No other potential conflicts of interest were reported.

REFERENCES

- 1. Mair FS, Montori VM, May CR. Digital transformation could increase the burden of treatment on patients. BMJ. 2021;375:n2909. doi: 10.1136/bmj.n2909.

- 2. Rowbotham MC, Astin J, Greene K, Cummings SR. Interactive informed consent: Randomized comparison with paper consents. PLoS One. 2013;8:e58603. doi: 10.1371/journal.pone.0058603.

- 3. Fink AS, Prochazka AV, Henderson WG, et al. Enhancement of surgical informed consent by addition of repeat back: A multicenter, randomized controlled clinical trial. Ann Surg. 2010;252:27–36. doi: 10.1097/SLA.0b013e3181e3ec61.

- 4. Grady C, Cummings SR, Rowbotham MC, et al. Informed consent. N Engl J Med. 2017;376:856–867. doi: 10.1056/NEJMra1603773.

- 5. Simon CM, Schartz HA, Rosenthal GE, et al. Perspectives on electronic informed consent from patients underrepresented in research in the United States: A focus group study. J Empir Res Hum Res Ethics. 2018;13:338–348. doi: 10.1177/1556264618773883.

- 6. Karunaratne AS, Korenman SG, Thomas SL, et al. Improving communication when seeking informed consent: A randomised controlled study of a computer-based method for providing information to prospective clinical trial participants. Med J Aust. 2010;192:388–392. doi: 10.5694/j.1326-5377.2010.tb03561.x.

- 7. Harmell AL, Palmer BW, Jeste DV. Preliminary study of a web-based tool for enhancing the informed consent process in schizophrenia research. Schizophr Res. 2012;141:247–250. doi: 10.1016/j.schres.2012.08.001.

- 8. Lindsley KA. Improving quality of the informed consent process: Developing an easy-to-read, multimodal, patient-centered format in a real-world setting. Patient Educ Couns. 2019;102:944–951. doi: 10.1016/j.pec.2018.12.022.

- 9. Blake DR, Lemay CA, Maranda LS, et al. Development and evaluation of a web-based assent for adolescents considering an HIV vaccine trial. AIDS Care. 2015;27:1005–1013. doi: 10.1080/09540121.2015.1024096.

- 10. McCarty CA, Berg R, Waudby C, et al. Long-term recall of elements of informed consent: A pilot study comparing traditional and computer-based consenting. IRB. 2015;37:1–5.

- 11. Chen C, Lee PI, Pain KJ, et al. Replacing paper informed consent with electronic informed consent for research in academic medical centers: A scoping review. AMIA Jt Summits Transl Sci Proc. 2020;2020:80–88.

- 12. Gesualdo F, Daverio M, Palazzani L, et al. Digital tools in the informed consent process: A systematic review. BMC Med Ethics. 2021;22:18. doi: 10.1186/s12910-021-00585-8.

- 13. Kim S, Jabori S, O’Connell J, et al. Research methodologies in informed consent studies involving surgical and invasive procedures: Time to reexamine? Patient Educ Couns. 2013;93:559–566. doi: 10.1016/j.pec.2013.08.018.

- 14. Holmes-Rovner M, Montgomery JS, Rovner DR, et al. Informed decision making: Assessment of the quality of physician communication about prostate cancer diagnosis and treatment. Med Decis Making. 2015;35:999–1009. doi: 10.1177/0272989X15597226.

- 15. Lentz J, Kennett M, Perlmutter J, Forrest A. Paving the way to a more effective informed consent process: Recommendations from the Clinical Trials Transformation Initiative. Contemp Clin Trials. 2016;49:65–69. doi: 10.1016/j.cct.2016.06.005.

- 16. Siracuse JJ, Benoit E, Burke J, et al. Development of a web-based surgical booking and informed consent system to reduce the potential for error and improve communication. Jt Comm J Qual Patient Saf. 2014;40:126–133. doi: 10.1016/s1553-7250(14)40016-3.

- 17. Reeves JJ, Mekeel KL, Waterman RS, et al. Association of electronic surgical consent forms with entry error rates. JAMA Surg. 2020;155:777–778. doi: 10.1001/jamasurg.2020.1014.

- 18. St John ER, Scott AJ, Irvine TE, et al. Completion of hand-written surgical consent forms is frequently suboptimal and could be improved by using electronically generated, procedure-specific forms. Surgeon. 2017;15:190–195. doi: 10.1016/j.surge.2015.11.004.

- 19. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 20. Dimock M. Defining Generations: Where Millennials End and Gen Z Begins. Pew Research Center; 2019. https://www.pewresearch.org/fact-tank/2019/01/17/where-millennials-end-and-generation-z-begins/