Summary

Macaque inferior temporal cortex neurons respond selectively to complex visual images, and recent work has shown that they are also reliably entrained by the evolving content of natural movies. To what extent does temporal continuity itself shape the responses of high-level visual neurons? We addressed this question by measuring how manipulating a movie’s temporal structure affected cells in face-selective regions of the macaque visual cortex. Sampling a 5-min movie at 1 s intervals, we measured neural responses to randomized, brief stimuli of different durations, ranging from 800 ms dynamic movie snippets to 100 ms static frames. We found that disrupting temporal continuity strongly altered neural response profiles, particularly in the early period after stimulus onset. The results suggest that models of visual system function based on discrete and randomized visual presentations may not translate well to the brain’s natural modes of operation.

eTOC Blurb

Although we experience the world as a continuous stream of information, our knowledge about the visual brain stems primarily from its responses to brief and isolated stimuli. Here we show in the macaque object pathway that selectivity to natural movie content is strongly determined by visual continuity and temporal context.

Introduction

We experience the world as a continually evolving sequence of events, scenes, and interactions. Humans and other animals use their vision to track and integrate a wide range of information, coordinating the inherent dynamics of the natural world with their own active sampling of important scene elements1,2. This integration provides a predictive context for interpreting the flow of incoming visual signals. What role might high-level visual neurons, such as those observed throughout the macaque inferior temporal cortex, play in such integration? For example, would the visual response profile of face-selective cortical neurons depend on the temporal continuity of a video? What might be the neural consequences of breaking up normal visual experience into discrete, randomized presentations?

It is difficult to make predictions on this point, since nearly all that is known about visual responses in the brain derives from the brief presentation of stimuli, often in highly reduced forms. For example, most studies of the macaque inferior temporal cortex have used isolated images of natural and artificial stimuli flashed briefly on the screen to study object selectivity among visual neurons3–7. By comparison, only a few single-unit studies have investigated how such neurons respond under more naturalistic conditions, for example when a subject is allowed to freely gaze within an evolving visual scene8–10. Thus, much more is known about how the brain responds to flashed presentations of isolated stimuli than how it responds under the continuity and complexity of everyday vision.

In some domains, researchers have studied the brain’s encoding of visual temporal sequences, such as in the processing of bodily actions4,11–14. These studies have linked the comprehension of bodily actions to the integration of form, movement, and location15, and in some cases to the generation of one’s own bodily actions11,16–18. Reductionistic investigations of sequence processing in the inferotemporal cortex have in some cases identified a marked temporal dependency, which has often been linked to Hebbian or other forms of statistical learning through repeated sequence presentation19–24. These studies provide evidence that object-selective neurons in the inferior temporal cortex exhibit significant temporal dependency in their responses.

A somewhat different facet of temporal integration involves the active sampling of a visual scene provided by the frequent redirection of gaze1,25,26. For humans and other primates, whose high-resolution vision is sharply concentrated in the fovea, the sequential gathering of important information across fixations is a central feature of visual cognition27. In normal vision, the temporal discontinuities introduced by this active sensing of the visual scene through gaze redirection are superimposed upon the temporal dynamics of actors and events in the external world. The stable perception of the world amid constantly shifting retinal stimulation is one of the enduring puzzles of visual neuroscience28–30.

Recently, the use of naturalistic stimulus paradigms has gained popularity and has begun to complement more conventional presentation methods (for a recent review, see Leopold and Park31). The human fMRI community has, in particular, taken up the use of movies as a new paradigm for tapping into various aspects of brain function32–48, with some monkey investigators following suit49–58. One single-unit study investigated responses in the macaque anterior fundus face patch to repeated 5-minute presentations of naturalistic movies full of dynamic social interaction8. Neurons in this area were strongly entrained by the movie timeline, with a high level of response repeatability across presentations of the same movie despite the free movement of the eyes. However, the role of temporal continuity in shaping neural responses to complex movie content is at present poorly understood. Although this question has not yet been studied in detail, it may be important, since our understanding of high-level vision is at present shaped strongly by the brain’s response to the presentation of discrete and isolated stimuli.

The current study addresses the role of temporal continuity in shaping neural responses in the ventral visual pathway, using a paradigm in which we systematically manipulated the temporal continuity of visual content drawn from a naturalistic movie. We recorded from cells in the macaque anterior fundus (AF) and anterior medial (AM) face patches, in which responses to both static images and dynamic movies have previously been studied3,8,54,59–68. The goal of the present study was not specifically related to face processing; instead, we used the well-characterized responses in these areas to understand the influence of visual continuity (the uninterrupted viewing of a stimulus) and temporal context (the effect of preceding meaningful visual content) on high-level visual responses. Our approach was to sample visual snippets from throughout a movie, thus breaking its natural continuity, and to observe whether neurons would maintain their selectivity when the movie content was delivered in discrete and randomized form. We found that this disruption of temporal continuity strongly and significantly affected neural responses to the movie content in both recorded areas and over multiple timescales. The most profound impact was on the response to the initial onset of the discrete presentation, for which neural responses were nearly uncorrelated with those observed in response to the same content in the intact movie.

Results

Six macaque monkeys (Macaca mulatta) viewed intact movies and randomized movie clips (“snippets”) as visual responses were recorded from single neurons in the anterior fundus (AF) and anterior medial (AM) face patches. These regions were defined functionally using fMRI63 and targeted using methods described previously59,60 (Figure 1a, see Methods). In total, we recorded from 163 neurons in the AF face patch (Subject T: 18; Subject R: 16; Subject S: 91; Subject SR: 38) and 72 neurons in the AM face patch (Subject D: 49; Subject M: 23). For the main analyses, we combined neurons across regions into a single population after determining that the results were similar in both regions. Corresponding analyses performed separately in the two face patches are presented in supplemental material. The animals viewed multiple repetitions of an intact 5-minute movie. They also viewed randomized short snippets and static images extracted from the movie at each of 300 time points spaced by one second (see Methods). Most of the analyses described below involve comparing the responses to 300 equivalent visual segments from the original, intact movie with either 800 ms movie snippets or 100 ms static images. Corresponding responses to two additional conditions (250 and 100 ms movie snippets) are also discussed. Five of the monkeys (T, R, S, D, M) were free to direct their gaze while viewing the continuous movie and randomized snippets. In addition, two of the monkeys (S and SR) viewed the same movie and snippets under controlled fixation.

Figure 1. Experimental Design.

a) Top row shows representative frames from the intact movie that was presented during the free viewing paradigm. Middle row depicts the results of the fMRI face-patch localizer task used to target the AF (left) and AM (right) face patches. The functional localizer is overlaid on a T1w image taken after the electrodes were implanted (see Methods). The bottom row depicts the spiking responses of one AF (left) and one AM (right) neuron to multiple trials of the intact movie. Each black dot marks the time of a spike (1 ms bins), and each row is a trial. The red line is the mean spike density function across all trials. b) Schematic of the creation of the individual snippets from the original movie, and the reconstructed response to those snippets. c) Schematic of the pseudorandomized task design used to collect the data within and across sessions. d) Schematic of the selection of response periods based on the individual snippet lengths. e) Schematic of the regression analysis between the snippet responses and the original movie. The green ellipse represents a hypothetical goodness-of-fit measure.

The recordings relied on the longitudinal recording capabilities of the implanted microwire bundles, since the data for individual neurons were amassed over 8–18 daily sessions of data collection8,59 (see Fig S1). Each of these sessions began with several minutes of conventional testing with flashed images from different stimulus categories, which was used both to establish each neuron’s basic selectivity and as a tool for “fingerprinting” to identify the same neurons across subsequent recording sessions. This testing was followed by all other conditions presented in randomized blocks (Figure 1c). We first investigated the effects of temporal continuity on responses to movie content during free viewing. In this condition, the intact movie was viewed between 8 and 24 times (median viewings per neuron: monkey T = 8, monkey R = 10, monkey S = 15, monkey M = 24, monkey D = 18). The randomized movie snippets were viewed between 7 and 37 times each (median viewings per neuron: 800 ms: T = 7, R = 10, S = 13, M = 13, D = 12; 250 ms: T = 7, R = 11, S = 16, M = 14, D = 17; 100 ms: T = 7, R = 9, S = 17, M = 13, D = 20; static image: T = 13, R = 17, S = 37, M = 33, D = 28).

Effects of manipulating temporal continuity during free viewing

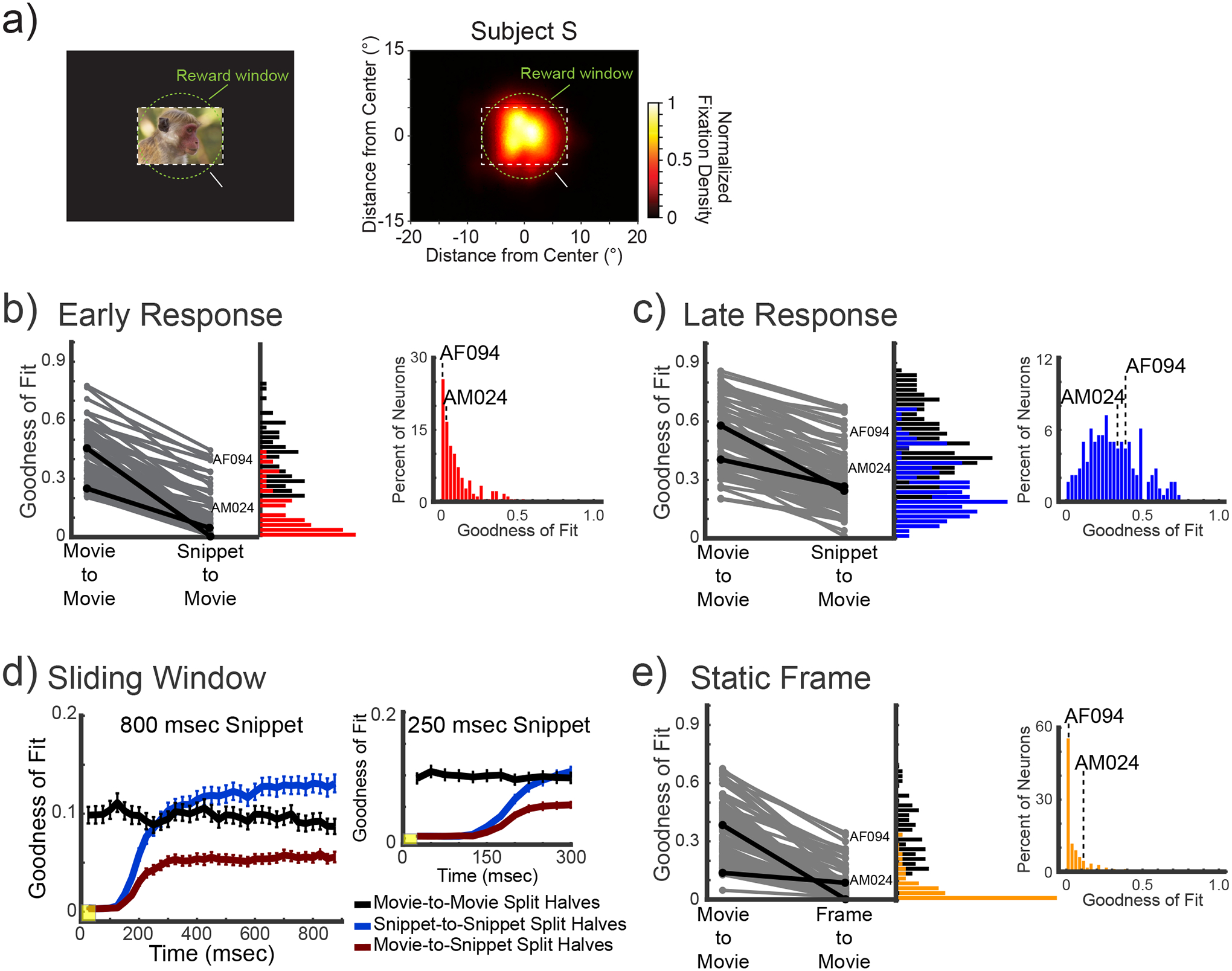

Previous work has shown that neurons in the areas we recorded are reliably entrained to the movie under conditions of free gaze, when the animal actively samples the content of the movie. We first asked whether, under these conditions, the neural responses depend on the temporal continuity of the movie. We found that breaking up the movie into snippets or frames and randomizing their presentation strongly influenced the stimulus response selectivity of neurons in these areas. The differences were most obvious in the early responses, from 100–300 ms following stimulus onset, but were also evident in the later response period to video snippets.

Figure 2 shows an example neuron (neuron AF094) responding to the same video content in continuous versus randomized snippet presentation. This neuron was typical in that it was face-selective and reliably entrained by the movie. Figure 2a shows the raw raster responses during several representative 800 ms snippets and the corresponding static frame presentations. These example rasters make both the repeatability across presentations and the effects of randomization evident. Figure 2b then shows the reconstructed time courses of the early onset responses (red, 100–300 ms following snippet onset) and the later responses (blue, 300–900 ms following snippet onset) to the 800 ms snippets, compared with the equivalent time periods from the continuous movie. The responses to the randomized presentation are reordered to match the native timeline of the movie (see Figure 1b, Methods). Both reconstructed time courses deviated notably from the spiking during the corresponding analysis windows of the continuous presentation (gray time courses).
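The reordering step can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each snippet presentation has already been reduced to a pair of (movie time point index, spike count in the analysis window); the function name `reconstruct_timeline` and this data layout are illustrative and are not taken from the study’s Methods. Repeated presentations of the same time point are averaged, and the result is indexed by the native movie timeline rather than by presentation order.

```python
from collections import defaultdict
from statistics import fmean


def reconstruct_timeline(presentations, n_timepoints=300):
    """Average randomized snippet responses back onto the native movie timeline.

    presentations: iterable of (timepoint_index, spike_count) pairs, listed in
    the randomized order in which the snippets were shown (hypothetical layout).
    Returns a list whose i-th entry is the mean spike count for movie time
    point i, or None if that time point was never presented.
    """
    by_timepoint = defaultdict(list)
    for timepoint, spike_count in presentations:
        by_timepoint[timepoint].append(spike_count)
    return [
        fmean(by_timepoint[t]) if by_timepoint[t] else None
        for t in range(n_timepoints)
    ]


# Example: four presentations of three time points, shown out of order.
shown = [(2, 4), (0, 1), (2, 6), (1, 3)]
print(reconstruct_timeline(shown, n_timepoints=4))  # [1.0, 3.0, 5.0, None]
```

Leaving unpresented time points as `None`, rather than filling them, keeps gaps in the reconstruction visible instead of silently interpolating.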

Figure 2. Representative response of a neuron from area AF.

Six representative time points (a) illustrate the diversity of responses across time points and contexts. We compared stimulus-driven responses during the intact movie (top row), 800 ms snippets (middle row) and 100 ms static center frames (bottom row). The rows of spiking data show the raster plots and computed average spike density function during the three conditions for the featured time points. The mean firing rate (Hz) to the static categorical images is presented next to the snippet responses, highlighting this neuron’s face responsiveness. This neuron’s average firing rate during the category task is shown in the rightmost inset. Responses to the category task were calculated over a 200 ms window starting at stimulus onset and averaged across all presentations within a given category. b) Average intact movie response (gray) shown together with the reconstructed spike count responses for the early response (red, top) and the late response (blue, bottom), using matching analysis windows relative to the center frame (see Figure 1b,d). c) Analysis of responses to static frames (orange), compared to the reconstructed response in the same format as b.

Figure 2c applies a similar analysis to spiking responses to the static single frames drawn from the movie, which showed little correspondence to the spiking activity in the continuous condition. Scatterplots showing the response correspondence for the 300 sample time points are shown to the right of each reconstruction. For this example neuron, the initial onset responses to both the snippet (red) and static frame (yellow) were uncorrelated with the responses to the equivalent visual content presented in the continuous condition (snippet: r = 0.0665, p = 0.2506; static frame: r = −0.031, p = 0.5933). The responses to content later in each snippet (blue) were affected by randomization but retained their correlation with the continuous responses (r = 0.55, p = 0.01e-23). Additional single unit examples from both areas are shown in Figure S2.

Across the population, neural selectivity was strongly affected by the fragmented and randomized presentation of the movie content. Compared with the responses to the same movie frames presented in their original format, the most prominent changes in selectivity were observed in the response directly following the onset of the snippet or static frame. In fact, for many visually responsive neurons, the selectivity measured following the onset of a snippet was statistically uncorrelated with that measured for the same frames during the native movie presentation. We quantified this relationship with a linear regression applied to the 300 samples compared across the corresponding conditions (see scatterplots in Figure 2). This analysis provided a goodness-of-fit measure (r²) that summarizes the similarity of responses to the same movie content in continuous and discontinuous presentation modes.
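For a regression with a single predictor, the r² of the ordinary least-squares fit equals the squared Pearson correlation between the two response vectors, so the goodness-of-fit measure can be computed directly from the 300 paired samples. The Python sketch below assumes that each condition has already been reduced to one mean response per movie time point; the function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length response vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length vectors with at least 2 samples")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    # Raises ZeroDivisionError if either vector is constant (r is undefined).
    return sxy / math.sqrt(sxx * syy)


def goodness_of_fit(movie_responses, snippet_responses):
    """r² of the least-squares line predicting snippet from movie responses.

    With a single predictor, this equals the squared Pearson correlation.
    """
    return pearson_r(movie_responses, snippet_responses) ** 2


# Perfectly proportional responses give r² = 1; unrelated ones give r² = 0.
print(goodness_of_fit([1, 2, 3, 4], [2, 4, 6, 8]))
print(goodness_of_fit([1, 2, 3, 4], [1, -1, -1, 1]))
```

Because r² discards the sign of r, a neuron whose snippet responses were inversely related to its movie responses would still score high; the scatterplots in Figure 2 show the direction of the relationship.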

Figures 3b and 3e show that for most neurons the visual selectivity to the initial presentation of the 800 ms snippets and static frames, respectively, corresponded poorly to that observed during the continuous condition (Fig 3b, mean r² = 0.11 ± 0.12; Fig 3e, mean r² = 0.06 ± 0.08). In both cases, the r² distribution dropped sharply relative to a control analysis in which split halves of the movie trials, using the same number of trials, were used to compute the r² distribution (paired-samples t-test; Fig 3b, t(103) = 25.99, p = 0.5e−48; Fig 3e, t(103) = 22.18, p = 0.5e−42). These findings demonstrate that the abrupt appearance of a static image or dynamic clip on a blank background elicits visual responses that differ markedly from the responses to the same content presented within the context of the movie (see Figure S3 for separate analyses of AF and AM). In addition to the 800 ms movie snippet featured above, analysis of the onset period for the independent 250 ms and 100 ms snippet presentations yielded similar results (Figure S3).
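A split-halves control of this kind can be sketched in Python under stated assumptions: the movie trials for one neuron are stored as a list of per-trial response vectors (one value per time point), the trials are shuffled and divided into two equal halves, and the r² between the two half-averages serves as the within-context benchmark. The paired t statistic across neurons is then computed from the per-neuron differences with n − 1 degrees of freedom. How trial counts were matched across conditions is specified in the study’s Methods; the helper names here are hypothetical.

```python
import math
import random
from statistics import fmean, stdev


def r_squared(x, y):
    """Squared Pearson correlation (equivalently, r² of a one-predictor linear fit)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def split_half_r2(movie_trials, rng):
    """Within-context control: r² between averages of two random halves of trials.

    movie_trials: list of per-trial response vectors, one value per time point.
    If the number of trials is odd, one trial is left out.
    """
    order = list(range(len(movie_trials)))
    rng.shuffle(order)
    half = len(order) // 2
    first = [movie_trials[i] for i in order[:half]]
    second = [movie_trials[i] for i in order[half:2 * half]]
    # Average across trials separately for each time point.
    mean_first = [fmean(values) for values in zip(*first)]
    mean_second = [fmean(values) for values in zip(*second)]
    return r_squared(mean_first, mean_second)


def paired_t(control_r2, test_r2):
    """Paired-samples t statistic and degrees of freedom across neurons."""
    diffs = [c - t for c, t in zip(control_r2, test_r2)]
    t_stat = fmean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
    return t_stat, len(diffs) - 1


rng = random.Random(0)
identical_trials = [[1.0, 2.0, 3.0, 4.0] for _ in range(4)]
print(split_half_r2(identical_trials, rng))
print(paired_t([3, 5, 7], [1, 2, 4]))
```

A p-value would additionally require the t distribution (for example from SciPy), which this standard-library sketch omits.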

Figure 3. Population Response changes across context and time.

a) Schematic of the free-viewing paradigm used during the movie-watching condition. Dotted areas represent the size of the stimulus (white) and reward windows (green). The reward window was not shown to the animals. The density plot shows the summed gaze positions during free viewing of the within-context movie. b) Paired comparison of within-context and between-context goodness-of-fit (r²) of neurons in the AF and AM face patches during the onset period. Neurons in the paired comparison were filtered by a within-context r² threshold of 0.2. The black lines highlight the example neurons presented in Figures 2 and S2b. The vertical histograms represent the density of r² values for the split-halves within-context (black) and between-context (red) comparisons for the full population. The horizontal histogram, on the right, shows the r² for all trials of the between-context regression for the full population. c) depicts the same data and analyses for the late period of the 800 ms snippets. d) shows the evolution of the goodness-of-fit for the within-context (black), out-of-context (blue) and between-context (red) regressions using a sliding window of 50 ms (yellow box) with 25 ms steps, for both the 800 ms and 250 ms snippets. Error bars represent the standard error of the mean of the population within each bin. e) shows the same set of comparisons as (b) and (c) for the response to the presentation of the static frames.

Later in the response to the snippet, this disruption of visual selectivity was less pronounced (Figure 3c). Comparing visual responses during this period to the native movie allowed us to assess the effects of temporal continuity itself. By analyzing responses beginning 300 ms after snippet onset, when the onset transients had largely subsided (see Figure 3d), we could focus on potential effects of temporal randomization on responses to the movie content. Individual neurons varied in the degree to which their late-period responses were disrupted, with some neurons moderately affected by temporal reordering (e.g. Figure 2, Figure S2a) and others showing little if any influence (e.g. Figure S2b, Figure S2c). Across the combined AF/AM population, the influence of temporal continuity was evident as a broad distribution of r² values (Figure 3c, mean r² = 0.31 ± 0.17), which differed markedly from the narrow r² distributions of the early responses (see Figure S3 for separate analyses of AF and AM). As above, the r² values were contrasted with those obtained from a within-session split-halves control condition. Importantly, responses to movie content late in the snippet period were also markedly disrupted by randomized presentation (paired samples t(148) = 30.78, p = 0.3e−66), though less so than during the early period (repeated-measures ANOVA, Context × Time Frame: main effect of Context, F(1, 179) = 510.46, p = 0.3e−53; main effect of Time Frame, F(1, 179) = 862.39, p = 0.2e−70; no interaction, F(1, 179) = 0.56, p = 0.45). Thus, the visually selective responses in these face-selective areas are also affected by temporal continuity expressed over longer timescales.

Figure 3d plots the temporal evolution of selectivity for the snippets (800 ms and 250 ms) relative to the same content presented in the native movie (see Methods). The running r² values show that the movie-to-snippet correlation is very low during the initial response period, climbing gradually and approaching its plateau after approximately 300 ms. The initially low r² values reflect the neural response to the abrupt onset of the visual snippet. After this initial onset period, the movie-to-snippet r² remains lower than the within-condition comparisons during the late period, reflecting the different temporal context resulting from snippet randomization. Note that the absolute r² magnitude of the time course is lower than in the other panels because the correlation in the running analysis is computed over brief, 50 ms time windows. These results demonstrate two clear response phases following snippet onset: an early onset period in which response selectivity is severely disrupted, and a later period in which the onset effects have subsided but the temporal reordering of the movie content continues to affect visual responses.
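The running analysis can be sketched in Python as follows, assuming (hypothetically) that each condition is stored as a per-time-point list of 1 ms spike counts aligned to the same reference, such as snippet onset. Spikes are summed within each 50 ms window, the window advances in 25 ms steps, and r² is computed across time points for each window position. Because each window contains relatively few spikes, the resulting r² values are noisier and lower in absolute terms than those from the longer early and late windows, in line with the caveat above. The function names and data layout are illustrative only.

```python
from statistics import fmean


def r_squared(x, y):
    """Squared Pearson correlation across time points."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def sliding_r2(cond_a, cond_b, window_ms=50, step_ms=25):
    """Running r² between two conditions.

    cond_a, cond_b: [time point][1 ms bin] spike counts (or trial-averaged
    counts), aligned to the same reference such as snippet onset.
    Returns a list of (window_start_ms, r²) pairs.
    """
    n_bins = min(len(row) for row in cond_a + cond_b)
    results = []
    for start in range(0, n_bins - window_ms + 1, step_ms):
        stop = start + window_ms
        # Spike count per time point within the current window.
        a = [sum(row[start:stop]) for row in cond_a]
        b = [sum(row[start:stop]) for row in cond_b]
        results.append((start, r_squared(a, b)))
    return results


# Three time points with constant rates; comparing a condition with itself.
toy = [[k] * 100 for k in (1, 2, 3)]
print(sliding_r2(toy, toy))
```

A window in which every time point has the same spike count has zero variance, so `r_squared` would raise `ZeroDivisionError`; a full analysis would need to decide how to treat such windows.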

Effect of manipulating temporal continuity during controlled fixation

The experiments above demonstrate that, when the monkey is allowed to freely direct its gaze, the response to visual content depends on whether it is seen in the context of a continuous movie or isolated within an extracted snippet. While this finding indicates that temporal continuity is critical for shaping high-level neural responses, one potential confound is that the animal’s gaze position may differ between the continuous and snippet conditions, possibly driving the context effects through different receptive field stimulation5–7,69–72. To test this possibility, we repeated the experiments above under conditions of controlled fixation. We recorded 55 neurons from the AF face patches of two animals (17 new neurons from Monkey S, and 38 neurons from task-naïve Monkey SR), focusing on the repeated presentation of the full-length 5-min movie and the randomized 800 ms snippets. In this condition, the animals were required to maintain their gaze continuously within 2 or 1.5 degrees of a central fixation point (Figure 4a, Figure S4a), ensuring similar visual positions of scene elements across trials and conditions.

Figure 4. Population Response during gaze control.

Data from the AF face patch while subjects maintained their gaze on a central fixation point. a) Schematic of the fixation paradigm used during the gaze control condition. Dotted areas represent the size of the stimulus (white) and fixation windows (green). The fixation window was not shown to the animals. Small inset windows show the summed density of gaze positions throughout the within-context movie presentation. b) and c) show the paired comparison of within-context and between-context goodness-of-fit (r²) of AF neurons during the early and late response periods, respectively, in the same format as Figure 3. d) shows the evolution of the goodness-of-fit for the within-context (black), out-of-context (blue) and between-context (red) regressions using a sliding window of 50 ms (yellow box) with 25 ms steps. Error bars represent the standard error of the mean of the population within each bin. e) Paired comparison of within- and between-context goodness-of-fit, in the same format as Figure 3b. The static frames were presented for 300 ms without ISI in this experiment.

The results from the controlled fixation condition were clear. Just as during the free viewing condition, the disruption of temporal continuity strongly and significantly altered the selectivity to specific visual content (Figure 4). As above, we measured this change as the decrease in goodness-of-fit between neural responses during the within-context (i.e. movie) and out-of-context (i.e. snippet) conditions, restricted to periods in which the animals were fixating continuously. For both the early period (100–300 ms; t(54) = 11.8, p = 0.15e−15; Figure 4b) and the later period (300–900 ms; t(54) = 13.501, p = 0.6e−18; Figure 4c) of the snippet response, responses were markedly affected by the removal of the video content from its natural temporal context. When we computed the sliding-window analysis as above (Figure 4d), we again found that the correspondence between the within- and out-of-context responses began to increase shortly after 100 ms into the snippets and plateaued around 400 ms, never reaching the r² of the within-context responses. In contrast to the free-viewing analysis, here the snippet-to-snippet comparison reached the highest overall values after an initial rising phase and then settled to a plateau at the same level as the movie-to-movie comparison. We interpret this increased correspondence as a brief, albeit potentially important, consequence of controlled fixation during the initial snippet onset period. Finally, as above, the strongest changes in response selectivity were observed for the flashed static frames extracted from the movie, even when blank periods between presentations of the static frames were eliminated (Figure 4e).
Thus, the nature and magnitude of the context effects closely resembled those measured during the original free-viewing condition for all comparisons, suggesting that differences in gaze position did not underlie the differences observed between continuous and discrete, randomized stimulus presentation. This conclusion was further supported by analysis of gaze position during the free viewing experiment described earlier (Figure S4).

Relationship to face selectivity

While neural face selectivity measured with static, isolated images did not figure prominently in the design of the present study, we confirmed that many of the neurons we tested in and around the AF and AM face patches preferred faces over other images (Figure 5a; see Figure S3 for separate analyses of AF and AM), in accordance with many previous studies3,62,73–75. Specifically, 88 of the 180 recorded neurons, or 49% of our free-viewing population, had a d’ greater than 0.65, a criterion similar to that used by previous studies73,75.
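The face-selectivity index can be sketched in Python with one common definition of d’, the difference between the mean face and non-face responses divided by the root mean of the two variances, d’ = (μ_face − μ_nonface) / √((σ²_face + σ²_nonface)/2), applied to per-presentation firing rates. The exact definition and response window used in this study are given in its Methods, so this formula, the use of sample variances, and the function names are assumptions; only the 0.65 criterion is taken from the text.

```python
import math
from statistics import fmean, variance


def face_dprime(face_rates, nonface_rates):
    """Assumed d' definition: mean difference over the root mean of the two variances."""
    pooled_sd = math.sqrt((variance(face_rates) + variance(nonface_rates)) / 2)
    return (fmean(face_rates) - fmean(nonface_rates)) / pooled_sd


def is_face_selective(face_rates, nonface_rates, criterion=0.65):
    """Apply the d' > 0.65 criterion quoted in the text."""
    return face_dprime(face_rates, nonface_rates) > criterion


# Hypothetical firing rates (Hz) for face and non-face presentations.
print(face_dprime([10, 12, 14], [4, 6, 8]))
print(is_face_selective([10, 12, 14], [4, 6, 8]))
```

Positive values indicate larger responses to faces, matching the sign convention described for Figure 5a.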

Figure 5. Interaction between face selectivity and context responses.

a) Selectivity of the combined face patch neurons to faces over scenes and objects. The left histograms depict the density of d’ values for all neurons. Values of d’ greater than zero represent larger responses to faces. The right heat maps show firing rates normalized to the maximum response of each cell, for all neurons within the populations, sorted by their d’ score. The blue bar beside the heat map marks neurons with significant d’ values, which would thus be categorized as face selective. b) and c) show the relationship between face selectivity and our goodness-of-fit (r²) measure for the early and late response periods for the different contexts. b) depicts the relationship, and lines of best fit, between d’ and the movie-to-movie (black; early: Pearson’s correlation = 0.1109, p = 0.1383; late: Pearson’s correlation = 0.0698, p = 0.3517) and snippet-to-snippet (blue; early: Pearson’s correlation = 0.3852, p = 0.9e−7; late: Pearson’s correlation = 0.1708, p = 0.0219) goodness-of-fit measures, for the early (left) and late (right) response periods. c) depicts the same comparison for the between-context goodness-of-fit and each neuron’s d’ score (red; early: Pearson’s correlation = 0.1706, p = 0.022; late: Pearson’s correlation = 0.0738, p = 0.3248). All tests were Bonferroni-corrected for multiple comparisons (nominal α = 0.05; corrected α = 0.0083).

To test whether a cell’s category selectivity bore on its dependence on temporal context during naturalistic stimulus presentation, we compared each neuron’s d’ score for face selectivity to its within-context goodness-of-fit (Figure 5b) and its between-context goodness-of-fit (Figure 5c). Face selectivity was not related to the within-context goodness-of-fit for the movies (Figure 5b, black data), but neurons with higher face selectivity showed somewhat higher within-context goodness-of-fit during the early phase of the snippets (Figure 5b, blue data). The between-context goodness-of-fit (Figure 5c) showed only a weak relationship with face selectivity d’ in the early and late response periods. Thus, while the majority of neurons were disrupted by temporal discontinuity regardless of their category selectivity, the early responses of face-selective neurons showed slightly greater preservation of selectivity during the snippets than those of other neurons.

Discussion

In the current study, we found that the responses of neurons in anterior face-selective regions of the macaque inferior temporal cortex differ depending on temporal context. Neurons responded differently to the same movie content when it was presented continuously versus when it was divided into brief snippets and shown out of context, in randomized presentation. The disruption in visual responses was particularly prominent in the period shortly following the onset of the snippet. Results were similar during free viewing and controlled fixation. In the following sections, we discuss how visual operations may differ between the continuous, free viewing of a movie and the brief, randomized presentation of its content. We also discuss the role of perceptual history in the establishment of spatial and temporal context during normal vision, as well as the bearing of these results on the activity of face-selective neurons.

Visual selectivity altered by abrupt onset

The most striking effect we observed following the presentation of snippets and static frames extracted from the movie occurred during the initial phase of the response. During the first 200 ms of activity following stimulus onset, the neural response profile to content extracted from the movie bore virtually no correspondence to the same neurons’ responses to that content appearing in the movie itself. Is this result to be expected? In some ways, the answer may be yes, since stimulus onsets have long been known to evoke nonspecific transients throughout the visual system76–78. However, nonspecific transients were not the only factor reshaping neural selectivity in the early snippet responses observed in the present study. For some neurons, the response to snippet content, including the onset, was markedly decreased compared to the same content in the full movie (e.g., response at 150.5 s in Fig. 2a). Likewise, the change in selectivity cannot be attributed to long response latencies. The average response latency for both populations (combined = 118 ms; AF = 115 ms; AM = 120 ms), calculated relative to static frame onset (see Methods), preceded the plateau observed in the goodness-of-fit time courses (Figures 3d, 4d). The low correspondence of the responses during the snippet onset period instead suggests that visual continuity itself is important.

Visual continuity is an aspect of natural experience that has been absent in most experimental assays of neural selectivity, but which evidently has a significant influence on neural responses. One interpretation of the differences observed between continuous and discrete presentation conditions invokes neural adaptation, or fatigue, which can affect neural processing at all levels of the visual system. Adaptation can have many manifestations, including alterations in stimulus feature tuning, and is usually studied through continued or repetitive presentation of stimuli79,80,81. In our study, the most straightforward way adaptation might affect responses is by diminishing response magnitudes during continuous presentation relative to discrete flashed presentation82,83. However, this was not the case. Instead, we found that response magnitudes were stronger overall during the visually continuous presentation (see Figure S5a & S5b). This finding, combined with the clear differences in stimulus selectivity between the two conditions, is not straightforwardly explained by neural fatigue. At the same time, it is likely that some form of adaptation contributes to the difference. To systematically investigate this topic, future studies will need to vary the temporal dynamics in the presentation of movie segments and to employ carefully designed stimuli whose specific adaptational influences on subsequent presentations can be assessed.

Although the neural populations recorded in this study were from two fMRI-defined face patches, our study did not specifically aim to investigate category selectivity, nor its disruption by abrupt presentation. Our use of natural movie stimuli differs from the more conventional use of isolated objects in that the images depicted scenes containing multiple individuals and objects within a complex spatial layout52. Because the movies were not designed to balance visual features or movie content, they are not well suited, for example, to systematically comparing the effects of temporal continuity for faces versus other categories. Thus, more work is required to understand whether the observed effects of temporal context are related to the complex scene content, or whether similar disruptions in tuning would be observed during continuous viewing of stimuli presented in isolation or in the absence of spatial context.

Nonetheless, it bears emphasis that our understanding of high-level feature and category selectivity derives almost exclusively from the presentation of flashed, isolated stimuli. Thus, if the response selectivity observed during flashed presentation does not represent the visual preferences of neurons under more continuous conditions, current notions of feature coding and category representation in the brain may be inaccurate.

Temporal integration of category-selective neurons

Beyond the striking changes in the early responses, there were also smaller but significant changes that emerged from the temporal reordering of scene content. These changes were observed in both the free viewing and controlled fixation conditions. As most studies to date have intentionally randomized stimuli that have no particular temporal relationship, relatively little is known about whether feature-selective neurons in the temporal cortex are sensitive to temporal or behavioral context. Some work, however, has suggested that where an animal is looking9,84,85 or what an animal has recently seen13 can strongly affect the responses of IT neurons. Such neurons have been shown to modify their responses based on contextual information, usually in the form of a cue or memory component9,86–93. Neurons in the superior temporal sulcus are associated with motion stimuli12,14,16,94–96, in particular biological motion, for which temporal continuity may be particularly important. Recently, it has been shown that selective neurons in macaque IT can acquire selectivity for temporal context when isolated stimuli are played in a learned temporal sequence22,23,97. Increasingly, researchers are using naturalistic stimuli as a complementary approach to conventional stimulus presentation (for a review, see Leopold and Park31).

Our results provide some insight into the time scales over which neurons integrate visual information, since we systematically varied the amount of temporal contextual information presented: brief movie snippets lasting 800, 250, and 100 ms, and static frames presented for 100 ms, in each case extracted from a longer movie. We found that a subset of neurons within both the AF and AM face patches was significantly affected by the temporal reordering of movie content, even in the late snippet period, suggesting that they integrate some types of visual information at least over time scales of seconds.

In the ventral stream, few previous studies have similarly addressed temporal integration, albeit with somewhat different methods. Hasson et al.34 used fMRI in a naturalistic viewing paradigm somewhat similar to the one we implemented to investigate the length of temporal receptive windows throughout the brain. They found that temporal receptive windows, the length of coherent temporal context necessary to reliably drive a portion of the brain, increased systematically from early visual cortex through the visual hierarchy into the prefrontal cortex. In human temporal cortex, they found that 4–8 s spans of coherent temporal information were required to create reliable and repeatable visual responses. Despite differences in methodology and experimental design, these findings are consistent with our results, likewise suggesting that neurons within the temporal lobe may incorporate more than one second of contextual information in determining their response to a given stimulus8,31,48,76–78.

Conclusions

It is interesting to consider that, during periods of visual free viewing, inferior temporal cortical neurons, including the face patch neurons reported here, enter a mode of operation marked by sequential target selection, continuity of visual content, attentional processes, and anticipation of events. In this exploratory mode, and in contrast to discrete modes of image presentation, basic visual responses may be modified by neuromodulatory inputs and by a range of brain areas involved in memory, spatial perception, motor planning, and executive function. As experimental work continues to explore the frontiers of natural visual experience, our portrait of visual brain organization may need to evolve and expand. A central feature of natural experience that is explicitly removed from most experimental approaches is temporal continuity. The present study demonstrates that, for populations of well-studied feature-selective neurons in the macaque high-level visual cortex, two aspects of temporal continuity, namely visual continuity (the uninterrupted viewing of a stimulus) and temporal context (the orderly progression of visual events in a scene), critically determine how the brain interprets its visual input at a given moment in time.

STAR Methods

RESOURCE AVAILABILITY

Lead contact

Further information and requests for data can be directed to either the lead contact, Brian E Russ (brian.russ@nki.rfmh.org), or the senior author, David A Leopold (leopoldd@mail.nih.gov).

Materials availability

The current study did not generate new unique reagents or materials.

Data and code availability

The data reported in this manuscript will be shared upon request by the lead contact or senior author.

Analysis code for the comparison of temporal context effects has been deposited on GitHub (https://github.com/beruss/Temporal_Context; doi:10.5281/zenodo.7419223).

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Five rhesus macaques (Macaca mulatta) participated in the current experiments: 2 females (aged 4–13 years at the time of the study) and 3 males (aged 4–10 years). All procedures were approved by the Animal Care and Use Committee of the National Institute of Mental Health and followed the guidelines of the United States National Institutes of Health.

METHOD DETAILS

Surgical procedures

In an initial surgery, each animal was implanted with a fiberglass head post for immobilizing the head during recording sessions. After behavioral training (described below), microwire bundle electrodes (Microprobes) and adjustable microdrives were implanted into the face patches in a second surgical procedure (see Figure 1a). The electrodes, microdrives, and surgical procedures have been described previously59,60. In three subjects (two female, one male), the electrodes were directed to the anterior fundus (AF) face patch: Subject T received bilateral 32-channel electrode bundles; Subject R received a 64-channel bundle in the right hemisphere; and Subject S and Subject SR received a 64-channel bundle in the left hemisphere. In two other subjects (both male), electrodes were directed to the anterior medial (AM) face patch: Subject M received one 64-channel microwire bundle in the left AM; Subject D was implanted bilaterally with 128-channel microwire bundles, with the left bundle located within AM and the right bundle just adjacent to AM.

Following electrode implantation, the subjects participated in a series of behavioral tasks. During this period, the subjects' access to liquid was controlled so that they were motivated to receive liquid reward in exchange for participating in the experimental tasks. If they did not receive sufficient liquid reward in a given session, they were given supplemental liquid in their home cage. Hydration and weight were monitored throughout the experimental period to ensure the animals' health and well-being.

Visual Stimuli and Behavioral Monitoring

Subjects viewed a range of intact movies, randomized snippets and static frames extracted from the movies, and flashed categorical images. Image presentation and gaze monitoring were under computer control, using a combination of custom-written QNX9 and Psychtoolbox98 code or NIMH MonkeyLogic100. Neurophysiological signals were recorded using TDT hardware and software. The subjects' horizontal and vertical eye positions were continuously monitored using a 60 Hz EyeLink (SR Research) system, which converted position to analog voltages that were passed to the neurophysiological and behavioral recording software. At the start of each session, the subject's eye position was centered and calibrated using a nine-point grid with 6° of visual angle separation. This calibration procedure was repeated throughout each session to ensure accurate calibration during all experimental trials. Blocks of intact movie presentation, randomized snippet presentation, and categorical image presentation were randomly interleaved (Figure 1c) and took the following form.

Intact Movie Presentation.

The monkeys viewed multiple repetitions of a 5 min movie containing a wide range of interactions, mostly between other monkeys8,52,53. The movies were presented on an LCD screen with a horizontal extent of 15 dva. Although there was no fixation requirement, subjects were required to maintain their gaze within the movie frame, for which they received a drop of juice every 2–2.5 s.

Randomized Snippet Presentation.

Content from the movie described above was broken down into short movie snippets and static frames, which the monkeys viewed in randomized order. The original 5 min movie was first divided into three hundred 1 s segments, which were subsequently used to generate snippets of different lengths. Snippets of 800, 250, and 100 ms were centered on the center frame of each 1 s segment. For example, the 800 ms snippet from the 151st second would present the movie frames from 150.10 to 150.90 s, whereas the 100 ms snippet from the same segment would display the frames from 150.45 to 150.55 s. The static frame condition presented the center frame for 100 ms. For each snippet length and for the static frame condition, all 300 stimuli were presented repeatedly and in randomized order (Figure 1b). Other aspects of the stimulus were the same as in the intact movie condition, except that a 200 ms blank black screen was now inserted between presentations. As above, the subjects were permitted to view the entire snippet content within the brief presentation period and were rewarded with a drop of juice and a 1.0 s break after completing approximately 2 s of trials, depending slightly on the snippet length. Following an inappropriate fixation break, the animal forfeited its juice for that period and incurred an additional 750 ms timeout, followed by repetition of the previous stimulus. Within each block of the snippet task, the stimulus duration was held constant until all 300 stimuli had been presented.
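The snippet timing described above can be sketched in Python as follows. This is a minimal illustration, assuming segments are numbered from 1 (so the 151st second spans 150–151 s) and that every snippet is centered on the segment midpoint; the names `snippet_window` and `block_order` are hypothetical and not taken from the authors' code.

```python
import random
from typing import List, Tuple

SEGMENT_S = 1.0   # the 5 min movie was divided into 1 s segments
N_SEGMENTS = 300  # 300 segments in total


def snippet_window(segment_number: int, duration_ms: float) -> Tuple[float, float]:
    """Return (start_s, end_s) of a snippet centered on the middle of a 1 s segment.

    segment_number is 1-based (hypothetical convention): segment 151 spans 150-151 s.
    """
    if not 1 <= segment_number <= N_SEGMENTS:
        raise ValueError(f"segment_number must be in 1..{N_SEGMENTS}")
    center_s = (segment_number - 1) * SEGMENT_S + SEGMENT_S / 2
    half_s = duration_ms / 1000.0 / 2
    return center_s - half_s, center_s + half_s


def block_order(rng: random.Random) -> List[int]:
    """One block: every segment presented once, in a random order."""
    order = list(range(1, N_SEGMENTS + 1))
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for duration in (800, 250, 100):
        start, end = snippet_window(151, duration)
        print(f"{duration} ms snippet from second 151: {start:.3f}-{end:.3f} s")
    print(block_order(random.Random(0))[:5])
```

Running the script prints the 800 ms window as 150.100-150.900 s and the 100 ms window as 150.450-150.550 s, matching the worked example in the text.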

Categorical Image Presentation.

In these blocks, the monkeys serially viewed brief image presentations drawn from four categories, with 40 images per category: monkey faces, human faces, objects, and natural scenes. The stimuli were randomized and presented at 12 dva for 100 ms with a 200 ms inter-stimulus interval. As above, the subjects were permitted to direct their gaze anywhere within the image. If gaze was maintained within the window for seven successive images, the subject received a liquid reward and a 1000 ms inter-trial break. An inappropriate fixation break led to forfeiture of the reward and an additional 750 ms timeout. This condition served the dual role of evaluating category selectivity and establishing a “fingerprint” for tracking the identity of single neurons across sessions60.

QUANTIFICATION AND STATISTICAL ANALYSIS

Longitudinal Neural Recording

Given the large number of conditions and presentations required in the current study, our recording paradigm hinged on the capacity to accurately record the same neurons across multiple sessions. This longitudinal tracking of individual cells required particular care in spike sorting and rules for determining the continuous isolation of neurons across sessions.

Spike Sorting.

Following each recording session, channels were cleaned and then submitted to an automatic spike sorting algorithm. The cleaning procedure, run separately on each bank of 16 channels sharing a connector, involved regressing out the first principal component across channels. In our experience, this procedure was important for combating movement- and chewing-related artifacts that were shared across channels. Following cleaning, the automatic sorting program wave_clus99,101 was applied to detect and sort action potentials on individual channels. Candidate spike waveforms were first identified as voltage deflections exceeding four standard deviations of the noise. These candidates were then sorted automatically in PCA space, yielding spike timestamps for between 0 and 4 usable waveforms from each microwire channel.
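The channel cleaning and threshold detection steps can be sketched with NumPy as below. This is a hedged illustration, not the authors' pipeline: it assumes each bank is a (16, n_samples) array of band-passed voltages, removes the first principal component by projecting it out of every channel, and estimates the noise standard deviation as median(|x|)/0.6745, the robust estimator described for wave_clus. The manuscript does not state which noise estimator or detection polarity was used, so the two-sided threshold here is also an assumption, and the function names are hypothetical.

```python
import numpy as np


def remove_first_pc(bank: np.ndarray) -> np.ndarray:
    """Regress the first principal component out of a (n_channels, n_samples) bank.

    Channels are treated as variables and samples as observations. The returned
    array is mean-centered per channel.
    """
    centered = bank - bank.mean(axis=1, keepdims=True)
    # First right singular vector = unit-norm time course of PC1 across channels.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    pc1 = vt[0]
    # Least-squares fit of each channel onto pc1 (unit norm), then subtract the fit.
    loadings = centered @ pc1
    return centered - np.outer(loadings, pc1)


def detect_threshold_crossings(trace: np.ndarray, k: float = 4.0) -> np.ndarray:
    """Sample indices where |trace| first exceeds k noise SDs (start of each run)."""
    # Assumption: robust noise SD estimate, median(|x|) / 0.6745.
    sigma = np.median(np.abs(trace)) / 0.6745
    above = np.abs(trace) > k * sigma
    previous = np.concatenate(([False], above[:-1]))
    return np.flatnonzero(above & ~previous)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shared = rng.normal(size=5000)  # synthetic artifact common to all channels
    bank = rng.normal(size=(16, 5000)) + 5.0 * shared
    cleaned = remove_first_pc(bank)
    r_before = np.corrcoef(bank[0], shared)[0, 1]
    r_after = np.corrcoef(cleaned[0], shared)[0, 1]
    print(f"channel 0 correlation with artifact: {r_before:.2f} -> {r_after:.2f}")

    trace = cleaned[0].copy()
    trace[1000:1003] = -20.0  # inject a large synthetic spike
    print(detect_threshold_crossings(trace)[:10])
```

In the synthetic example, the shared artifact dominates every raw channel and is largely removed after cleaning, after which the injected spike stands out against the remaining noise.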

Tracking Neurons Across Days.

Verifying the isolation of a given neuron across days required combined consideration of its spike waveform, as determined by the automated spike sorting algorithm99,101, and an independent comparison of its response fingerprint, or selectivity to the flashed images (see Figure S1 for an example of isolation and fingerprinting). A cell was judged to be the same as one isolated on a previous day if it (1) was recorded from the same channel, (2) had the same fingerprint signature of response selectivity, and (3) had a similar waveform. Of these three criteria, only the third allowed some flexibility, since small positional changes in the relationship between an electrode and a neuron are well known to alter the action potential shape. However, any neuron recorded from a different electrode or having a different selectivity fingerprint across days was considered to be a different neuron. Applying these criteria, we excluded from analysis neurons that were held for fewer than 75% of the sessions, as they had insufficient numbers of trials for our statistical analyses. All visually responsive neurons, defined as those giving statistically significant responses in any one of the conditions, were investigated for the effects of temporal continuity, with a separate analysis investigating the specific factor of face selectivity.
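The three identity criteria and the 75% inclusion rule could be encoded as below. This is only a sketch: the manuscript does not specify how fingerprints or waveforms were compared, so representing the fingerprint as mean responses to the 160 category images, comparing fingerprints and waveforms by Pearson correlation, and the thresholds `fp_min_r` and `wf_min_r` are all hypothetical choices. The waveform threshold is set looser to mirror the flexibility described above.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class UnitRecord:
    channel: int             # microwire channel the unit was sorted from
    fingerprint: np.ndarray  # e.g. mean responses to the 160 category images (assumption)
    waveform: np.ndarray     # mean spike waveform


def _pearson(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.corrcoef(a, b)[0, 1])


def same_neuron(a: UnitRecord, b: UnitRecord,
                fp_min_r: float = 0.8, wf_min_r: float = 0.7) -> bool:
    """Apply the three criteria in order; the thresholds are hypothetical."""
    if a.channel != b.channel:                             # (1) same channel, strict
        return False
    if _pearson(a.fingerprint, b.fingerprint) < fp_min_r:  # (2) same fingerprint, strict
        return False
    return _pearson(a.waveform, b.waveform) >= wf_min_r   # (3) similar waveform, looser


def include_neuron(sessions_held: int, sessions_total: int,
                   min_fraction: float = 0.75) -> bool:
    """Exclude neurons held for fewer than 75% of the sessions."""
    return sessions_held >= min_fraction * sessions_total
```

Checking the channel and fingerprint first reflects that those two criteria were treated as strict, while the waveform criterion only needs to be broadly similar.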

Data Analysis

The main data analysis compared the neural responses to the intact movie with those elicited by the same visual content presented during the randomized 800 ms snippets. Based on pilot analysis, we divided the response into two distinct time windows (Figure 1d). The first window covered spiking from 100–300 ms after snippet onset, targeting the contribution of the initial response. The second window focused on the delayed, or extended, response between 300 and 900 ms.

For each time window, we compared the mean spike count across repeated presentations of each of the 300 snippets to the mean spike count elicited by the corresponding frames in the intact movie. In the main analysis, we compared the two conditions using a linear regression, from which we derived the goodness of fit (r²) to assess the effects of context (see Figure 1e). We also reordered the randomized snippet responses into their original sequence in order to visualize any temporal disruption (see Figure 1b).

For the main analysis, the r² parameter provided insight into the disruption in visual selectivity introduced by randomized snippet presentation. For example, an r² value of 1.0 would indicate that a neuron's responses to the same visual content are perfectly linearly related across the continuous and randomized snippet presentation conditions (i.e., its stimulus selectivity is fully preserved), whereas an r² value of 0.0 would correspond to a complete disruption of stimulus selectivity, in which the neural responses to the specific content were uncorrelated. For intermediate values, r² indicates the tightness of the stimulus selectivity relationship across conditions, or the response variance explained.
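The regression-based goodness of fit can be sketched as follows. This is an illustrative NumPy version, not the authors' MATLAB code; it assumes one mean spike count per movie segment (300 values) for each condition, in the original movie order, and fits snippet responses as a linear function of movie responses. For a simple linear regression, r² equals the squared Pearson correlation, so the result does not depend on which condition is treated as the predictor. The function name `context_r2` is hypothetical.

```python
import numpy as np


def context_r2(movie_counts, snippet_counts) -> float:
    """r^2 of an ordinary least-squares line predicting snippet from movie responses.

    Both inputs hold one mean spike count per movie segment, in the same (original) order.
    """
    x = np.asarray(movie_counts, dtype=float)
    y = np.asarray(snippet_counts, dtype=float)
    if x.shape != y.shape or x.size < 3:
        raise ValueError("need matching arrays with at least 3 segments")
    slope, intercept = np.polyfit(x, y, 1)
    ss_res = np.sum((y - (slope * x + intercept)) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    if ss_tot == 0:
        raise ValueError("snippet responses are constant; r^2 is undefined")
    return float(1.0 - ss_res / ss_tot)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    movie = rng.poisson(5.0, size=300).astype(float)
    preserved = 0.5 * movie + 1.0       # weaker firing, same selectivity
    scrambled = rng.permutation(movie)  # selectivity destroyed
    print(context_r2(movie, preserved), context_r2(movie, scrambled))
```

A scaled copy of the movie responses yields an r² of 1, illustrating that r² measures preserved selectivity rather than identical firing rates, whereas a random permutation yields a value near 0.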

In addition to the 800 ms snippets, we also analyzed neural responses to equivalent snippets of 250 and 100 ms, as well as static images of the center frame presented for 100 ms. In those cases, the presentation times were too short to allow for a separate consideration of selectivity before and after the initial stimulus onset, thus a standard response window of 50 to 250 ms was used for evaluation of the onset response.
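Spike counting in these analysis windows might look like the sketch below. The window edges come from the text; the half-open interval convention, the function names, and the idea that movie responses are counted with the same windows aligned to the movie time at which the snippet content begins are illustrative assumptions rather than the authors' documented procedure.

```python
import numpy as np

# Windows in seconds relative to stimulus onset, taken from the text.
EARLY_800_S = (0.100, 0.300)    # initial response, 800 ms snippets
LATE_800_S = (0.300, 0.900)     # delayed/extended response, 800 ms snippets
ONSET_SHORT_S = (0.050, 0.250)  # 250 and 100 ms snippets and 100 ms static frames


def window_count(spike_times_s, onset_s: float, window) -> int:
    """Number of spikes in [onset + start, onset + end)."""
    rel = np.asarray(spike_times_s, dtype=float) - onset_s
    return int(np.count_nonzero((rel >= window[0]) & (rel < window[1])))


def mean_counts(spike_times_s, onsets_by_stimulus, window) -> np.ndarray:
    """Mean count per stimulus, averaged over that stimulus's repeated onsets."""
    return np.array([
        np.mean([window_count(spike_times_s, onset, window) for onset in onsets])
        for onsets in onsets_by_stimulus
    ])
```

With `onsets_by_stimulus` ordered by segment number, the output of `mean_counts` is already in the original movie sequence, which is the reordering used to visualize temporal disruption.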

We also calculated a gaze-position-dependent goodness of fit for the early and late response periods of the 800 ms snippets. For each epoch, we evaluated the dispersion of eye positions across presentations based on the Euclidean distance of each trial's mean gaze position from the mean gaze position during the within-context (intact) movie. Randomized snippet trials were then median split into clustered, or near, and spread-out, or far-away, groups according to the proximity of their mean gaze to that observed during continuous viewing of the same content. From these data, we calculated the goodness of fit of neural responses separately for the snippet trials in which gaze did or did not approximate that observed during the continuous movie.
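The gaze-based median split can be sketched as below. This is an assumption-laden illustration: it takes one mean gaze position (x, y) per snippet trial, uses the mean gaze across intact-movie viewings of the same content as the reference point, and performs the median split separately for each scene; the manuscript does not state whether the split was per scene or pooled across scenes, and the function names are hypothetical. Goodness of fit is computed here as the squared Pearson correlation.

```python
import numpy as np


def split_by_gaze(snippet_gaze, movie_gaze):
    """Median split of snippet trials by distance from the movie's mean gaze.

    snippet_gaze: (n_trials, 2) mean gaze per snippet trial for one scene and epoch.
    movie_gaze: (n_views, 2) mean gaze for the same content in the intact movie.
    Returns boolean masks (near, far); ties with the median go to 'near'.
    """
    reference = np.mean(np.asarray(movie_gaze, dtype=float), axis=0)
    dist = np.linalg.norm(np.asarray(snippet_gaze, dtype=float) - reference, axis=1)
    near = dist <= np.median(dist)
    return near, ~near


def near_far_r2(movie_means, trial_counts, trial_gaze, movie_gaze):
    """r^2 between movie responses and snippet responses on near vs. far trials.

    movie_means holds one mean count per scene from the intact movie; the other
    arguments are per-scene lists. Assumes each scene has trials on both sides
    of the median (otherwise the far mean is NaN).
    """
    near_means, far_means = [], []
    for counts, gaze, mgaze in zip(trial_counts, trial_gaze, movie_gaze):
        near, far = split_by_gaze(gaze, mgaze)
        counts = np.asarray(counts, dtype=float)
        near_means.append(counts[near].mean())
        far_means.append(counts[far].mean())
    r_near = np.corrcoef(movie_means, near_means)[0, 1]
    r_far = np.corrcoef(movie_means, far_means)[0, 1]
    return float(r_near ** 2), float(r_far ** 2)
```

Comparing the two returned values indicates how much of the selectivity change could be attributed to differences in where the animal was looking.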

We used the same response windows for each clip to calculate a Context Index, defined as the difference between the within-context response and the out-of-context response divided by their sum. Thus, if a cell responded strongly in the within-context condition and not at all in the out-of-context condition, the Context Index would equal 1, indicating a strong preference for the within-context condition. Conversely, a cell responding only in the out-of-context condition would have an index of -1. If the responses in both conditions were nearly equal, the Context Index would be close to 0, indicating no preference for either condition.
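The Context Index is a normalized contrast. A minimal sketch, assuming non-negative responses (e.g., mean spike counts from the same response window in both conditions) and using a hypothetical function name, is:

```python
import numpy as np


def context_index(within, out_of_context):
    """(within - out) / (within + out), elementwise; NaN where both responses are 0.

    Ranges from +1 (responds only within context) to -1 (responds only out of context).
    """
    within = np.asarray(within, dtype=float)
    out_of_context = np.asarray(out_of_context, dtype=float)
    total = within + out_of_context
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(total > 0, (within - out_of_context) / total, np.nan)


if __name__ == "__main__":
    print(context_index([10.0, 5.0, 0.0], [0.0, 5.0, 10.0]))
```

The example prints indices of 1, 0, and -1 for a within-context-only, an equal, and an out-of-context-only response, respectively. Returning NaN for silent neurons avoids assigning an arbitrary preference when the ratio is undefined.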

Neuronal latencies were estimated from responses to the static frames using the following procedure. We first calculated the mean and standard deviation of the firing rate during the 50 ms prior to stimulus onset. Then, for each of the 300 stimuli, we estimated a response onset from the average spike rate. Using a sliding window, we identified the time at which spiking activity changed (increased or decreased) by 3 standard deviations from the baseline firing rate and remained changed for a minimum of 15 ms. We then averaged the latencies obtained for all 300 stimuli to obtain the mean latency for a given neuron.
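The latency procedure can be sketched as follows. Several details are assumptions not specified in the manuscript: rates are trial-averaged and sampled in uniform bins (e.g., 1 ms) on a time axis in ms relative to static frame onset; the baseline mean and SD are pooled across all stimuli; and the 15 ms persistence requirement must be met by a run that stays entirely above or entirely below the 3 SD band. The function names are hypothetical.

```python
import numpy as np


def first_sustained(mask, min_len: int) -> int:
    """Index where the first run of >= min_len consecutive True values starts, or -1."""
    run = 0
    for i, flag in enumerate(mask):
        run = run + 1 if flag else 0
        if run == min_len:
            return i - min_len + 1
    return -1


def response_latency(rate, t_ms, base_mean, base_sd, k=3.0, min_ms=15.0) -> float:
    """First post-onset time (ms) at which rate leaves baseline by > k SD for >= min_ms."""
    rate = np.asarray(rate, dtype=float)
    t_ms = np.asarray(t_ms, dtype=float)
    post = t_ms >= 0
    r, t = rate[post], t_ms[post]
    min_len = max(1, int(round(min_ms / (t[1] - t[0]))))
    hits = []
    # Check sustained increases and sustained decreases separately.
    for mask in (r > base_mean + k * base_sd, r < base_mean - k * base_sd):
        idx = first_sustained(mask, min_len)
        if idx >= 0:
            hits.append(t[idx])
    return float(min(hits)) if hits else float("nan")


def mean_latency(rates, t_ms, k=3.0, min_ms=15.0) -> float:
    """Average latency over stimuli; rates has shape (n_stimuli, n_bins)."""
    rates = np.asarray(rates, dtype=float)
    t_ms = np.asarray(t_ms, dtype=float)
    pre = (t_ms >= -50) & (t_ms < 0)  # 50 ms before stimulus onset
    base_mean, base_sd = rates[:, pre].mean(), rates[:, pre].std()
    lats = [response_latency(r, t_ms, base_mean, base_sd, k, min_ms) for r in rates]
    return float(np.nanmean(lats))
```

Requiring a sustained run rather than a single supra-threshold bin keeps brief noise excursions from being mistaken for response onsets.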

Supplementary Material

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and Algorithms | | |
| MATLAB | MathWorks | https://uk.mathworks.com/products/matlab.html |
| Analysis Code | Custom MATLAB pipeline | https://github.com/beruss/Temporal_Context; doi:10.5281/zenodo.7419223 |
| Psychtoolbox | Brainard, D.H. (1997)98 | http://psychtoolbox.org |
| Wave_clus | Chaure, F.J., et al. (2018)99 | https://github.com/csn-le/wave_clus |
| NIMH MonkeyLogic | Hwang, J., et al. (2019)100 | https://monkeylogic.nimh.nih.gov/index.html |

Highlights.

Face patch neuronal responses depend on temporal continuity

Neural selectivity is disrupted by randomly presenting snippets and static frames

Onset responses to dynamic movie snippets are uncorrelated with original movie

Post-onset responses are moderately disrupted by temporal context.

Acknowledgements

This work was supported by the Intramural Research Program of the National Institute of Mental Health (ZIAMH002838, ZIAMH002898) to D.A.L. Functional and anatomical MRI scanning was carried out in the Neurophysiology Imaging Facility Core (NIMH, NINDS, NEI). This work utilized the computational resources of the NIH HPC Biowulf cluster (http://hpc.nih.gov).

Inclusion and Diversity

We support inclusive, diverse, and equitable conduct of research.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Declaration of Interests

The authors declare no competing interests.

REFERENCES:

- 1.Schroeder CE, Wilson DA, Radman T, Scharfman H, and Lakatos P (2010). Dynamics of Active Sensing and perceptual selection. Curr Opin Neurobiol 20, 172–176. 10.1016/j.conb.2010.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Melcher D (2001). Persistence of visual memory for scenes. Nature 412, 401–401. 10.1038/35086646. [DOI] [PubMed] [Google Scholar]

- 3.Tsao DY, Freiwald WA, Tootell RBH, and Livingstone MS (2006). A Cortical Region Consisting Entirely of Face-Selective Cells. Science 311, 670–674. 10.1126/science.1119983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Jellema T, and Perrett DI (2003). Perceptual History Influences Neural Responses to Face and Body Postures. J Cognitive Neurosci 15, 961–971. 10.1162/089892903770007353. [DOI] [PubMed] [Google Scholar]

- 5.Perrett DI, Hietanen JK, Oram MW, and Benson PJ (1992). Organization and functions of cells responsive to faces in the temporal cortex. Philosophical transactions of the Royal Society of London Series B, Biological sciences 335, 23–30. 10.1098/rstb.1992.0003. [DOI] [PubMed] [Google Scholar]

- 6.Desimone R, Albright T, Gross C, and Bruce C (1984). Stimulus-selective properties of inferior temporal neurons in the macaque. J Neurosci 4, 2051–2062. 10.1523/jneurosci.04-08-02051.1984. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Gross CG, Rocha-Miranda CE, and Bender DB (1972). Visual properties of neurons in inferotemporal cortex of the Macaque. Journal of Neurophysiology 35, 96–111. [DOI] [PubMed] [Google Scholar]

- 8.McMahon DBT, Russ BE, Elnaiem HD, Kurnikova AI, and Leopold DA (2015). Single-Unit Activity during Natural Vision: Diversity, Consistency, and Spatial Sensitivity among AF Face Patch Neurons. J Neurosci 35, 5537–5548. 10.1523/jneurosci.3825-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Sheinberg DL, and Logothetis NK (2001). Noticing Familiar Objects in Real World Scenes: The Role of Temporal Cortical Neurons in Natural Vision. J Neurosci 21, 1340–1350. 10.1523/jneurosci.21-04-01340.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Mosher CP, Zimmerman PE, and Gothard KM (2014). Neurons in the Monkey Amygdala Detect Eye Contact during Naturalistic Social Interactions. Curr Biol 24, 2459–2464. 10.1016/j.cub.2014.08.063. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Keysers C, and Perrett DI (2004). Demystifying social cognition: a Hebbian perspective. Trends Cogn Sci 8, 501–507. 10.1016/j.tics.2004.09.005. [DOI] [PubMed] [Google Scholar]

- 12.Nelissen K, Luppino G, Vanduffel W, Rizzolatti G, and Orban GA (2005). Observing Others: Multiple Action Representation in the Frontal Lobe. Science 310, 332–336. 10.1126/science.1115593. [DOI] [PubMed] [Google Scholar]

- 13.Singer JM, and Sheinberg DL (2010). Temporal Cortex Neurons Encode Articulated Actions as Slow Sequences of Integrated Poses. J Neurosci 30, 3133–3145. 10.1523/jneurosci.3211-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Vangeneugden J, Pollick F, and Vogels R (2009). Functional Differentiation of Macaque Visual Temporal Cortical Neurons Using a Parametric Action Space. Cereb Cortex 19, 593–611. 10.1093/cercor/bhn109. [DOI] [PubMed] [Google Scholar]

- 15.Jellema T, Maassen G, and Perrett DI (2004). Single Cell Integration of Animate Form, Motion and Location in the Superior Temporal Cortex of the Macaque Monkey. Cereb Cortex 14, 781–790. 10.1093/cercor/bhh038. [DOI] [PubMed] [Google Scholar]

- 16.Nelissen K, Borra E, Gerbella M, Rozzi S, Luppino G, Vanduffel W, Rizzolatti G, and Orban GA (2011). Action Observation Circuits in the Macaque Monkey Cortex. J Neurosci 31, 3743–3756. 10.1523/jneurosci.4803-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Rizzolatti G, Fogassi L, and Gallese V (2001). Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience 2, 661–670. 10.1038/35090060. [DOI] [PubMed] [Google Scholar]

- 18.Ferrari PF, Gallese V, Rizzolatti G, and Fogassi L (2003). Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. The European journal of neuroscience 17, 1703–1714. [DOI] [PubMed] [Google Scholar]

- 19.Miyashita Y (1988). Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature 335, 817–820. 10.1038/335817a0. [DOI] [PubMed] [Google Scholar]

- 20.Meyer T, and Olson CR (2011). Statistical learning of visual transitions in monkey inferotemporal cortex. Proc National Acad Sci 108, 19401–19406. 10.1073/pnas.1112895108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Meyer T, Ramachandran S, and Olson CR (2014). Statistical Learning of Serial Visual Transitions by Neurons in Monkey Inferotemporal Cortex. J Neurosci 34, 9332–9337. 10.1523/jneurosci.1215-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Schwiedrzik CM, and Freiwald WA (2017). High-Level Prediction Signals in a Low-Level Area of the Macaque Face-Processing Hierarchy. Neuron 96, 89–97.e4. 10.1016/j.neuron.2017.09.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Ramachandran S, Meyer T, and Olson CR (2017). Prediction suppression and surprise enhancement in monkey inferotemporal cortex. J Neurophysiol 118, 374–382. 10.1152/jn.00136.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Perrett DI, Xiao D, Barraclough NE, Keysers C, and Oram MW (2009). Seeing the future: Natural image sequences produce “anticipatory” neuronal activity and bias perceptual report. Q J Exp Psychol 62, 2081–2104. 10.1080/17470210902959279. [DOI] [PubMed] [Google Scholar]

- 25.Leszczynski M, and Schroeder CE (2019). The Role of Neuronal Oscillations in Visual Active Sensing. Frontiers Integr Neurosci 13, 32. 10.3389/fnint.2019.00032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Henderson JM (2003). Human gaze control during real-world scene perception. Trends Cogn Sci 7, 498–504. 10.1016/j.tics.2003.09.006. [DOI] [PubMed] [Google Scholar]

- 27.Mitchell JF, and Leopold DA (2015). The marmoset monkey as a model for visual neuroscience. Neurosci Res 93, 20–46. 10.1016/j.neures.2015.01.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Efron R (1967). THE DURATION OF THE PRESENT. Ann Ny Acad Sci 138, 713–729. 10.1111/j.1749-6632.1967.tb55017.x. [DOI] [Google Scholar]

- 29.Melcher D, and Colby CL (2008). Trans-saccadic perception. Trends Cogn Sci 12, 466–473. 10.1016/j.tics.2008.09.003. [DOI] [PubMed] [Google Scholar]

- 30.Wurtz RH, Joiner WM, and Berman RA (2011). Neuronal mechanisms for visual stability: progress and problems. Philosophical Transactions of the Royal Society B: Biological Sciences 366, 492–503. 10.1098/rstb.2010.0186. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Leopold DA, and Park SH (2020). Studying the visual brain in its natural rhythm. NeuroImage, 116790. 10.1016/j.neuroimage.2020.116790. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Bartels A, and Zeki S (2004). Functional brain mapping during free viewing of natural scenes. Human Brain Mapping 21, 75–85. 10.1002/hbm.10153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Hasson U, Nir Y, Levy I, Fuhrmann G, and Malach R (2004). Intersubject Synchronization of Cortical Activity During Natural Vision. Science 303, 1634–1640. 10.1126/science.1089506. [DOI] [PubMed] [Google Scholar]

- 34.Hasson U, Yang E, Vallines I, Heeger DJ, and Rubin N (2008). A Hierarchy of Temporal Receptive Windows in Human Cortex. J Neurosci 28, 2539–2550. 10.1523/jneurosci.5487-07.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Hasson U, Malach R, and Heeger DJ (2010). Reliability of cortical activity during natural stimulation. Trends in cognitive sciences 14, 40–48. 10.1016/j.tics.2009.10.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Huth AG, Nishimoto S, Vu AT, and Gallant JL (2012). A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron 76, 1210–1224. 10.1016/j.neuron.2012.10.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Hasson U, and Honey CJ (2012). Future trends in Neuroimaging: Neural processes as expressed within real-life contexts. NeuroImage 62, 1272–1278. 10.1016/j.neuroimage.2012.02.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Wang HX, Freeman J, Merriam EP, Hasson U, and Heeger DJ (2012). Temporal eye movement strategies during naturalistic viewing. Journal of Vision 12, 16. 10.1167/12.1.16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Çukur T, Nishimoto S, Huth AG, and Gallant JL (2013). Attention during natural vision warps semantic representation across the human brain. Nature Neuroscience 16, 763–770. 10.1038/nn.3381. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Vanderwal T, Kelly C, Eilbott J, Mayes LC, and Castellanos FX (2015). Inscapes: A movie paradigm to improve compliance in functional magnetic resonance imaging. Neuroimage 122, 222–232. 10.1016/j.neuroimage.2015.07.069. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Franchak JM, Heeger DJ, Hasson U, and Adolph KE (2016). Free Viewing Gaze Behavior in Infants and Adults. Infancy 21, 262–287. 10.1111/infa.12119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Vodrahalli K, Chen P-H, Liang Y, Baldassano C, Chen J, Yong E, Honey C, Hasson U, Ramadge P, Norman KA, et al. (2017). Mapping between fMRI responses to movies and their natural language annotations. NeuroImage, 1–9. 10.1016/j.neuroimage.2017.06.042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Finn ES, and Bandettini PA (2021). Movie-watching outperforms rest for functional connectivity-based prediction of behavior. Neuroimage 235, 117963–117963. 10.1016/j.neuroimage.2021.117963. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Finn ES, Glerean E, Khojandi AY, Nielson D, Molfese PJ, Handwerker DA, and Bandettini PA (2020). Idiosynchrony: From shared responses to individual differences during naturalistic neuroimaging. Neuroimage 215, 116828–116828. 10.1016/j.neuroimage.2020.116828. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Vanderwal T, Eilbott J, and Castellanos FX (2018). Movies in the magnet: Naturalistic paradigms in developmental functional neuroimaging. Dev Cogn Neuros-neth 36, 100600. 10.1016/j.dcn.2018.10.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Nastase SA, Goldstein A, and Hasson U (2020). Keep it real: rethinking the primacy of experimental control in cognitive neuroscience. Neuroimage 222, 117254. 10.1016/j.neuroimage.2020.117254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. van der Meer JN, Breakspear M, Chang LJ, Sonkusare S, and Cocchi L (2020). Movie viewing elicits rich and reliable brain state dynamics. Nature Communications, 1–14. 10.1038/s41467-020-18717-w.

- 48. Nentwich M, Leszczynski M, Russ BE, Hirsch L, Markowitz N, Sapru K, Schroeder CE, Mehta A, Bickel S, and Parra LC (2022). Semantic novelty modulates neural responses to visual change across the human brain. bioRxiv, 2022.06.20.496467. 10.1101/2022.06.20.496467.

- 49. Shepherd SV, Steckenfinger SA, Hasson U, and Ghazanfar AA (2010). Human-Monkey Gaze Correlations Reveal Convergent and Divergent Patterns of Movie Viewing. Curr Biol 20, 649–656. 10.1016/j.cub.2010.02.032.

- 50. Mantini D, Hasson U, Betti V, Perrucci MG, Romani GL, Corbetta M, Orban GA, and Vanduffel W (2012). Interspecies activity correlations reveal functional correspondence between monkey and human brain areas. Nat Methods 9, 277–282. 10.1038/nmeth.1868.

- 51. Mantini D, Corbetta M, Romani GL, Orban GA, and Vanduffel W (2013). Evolutionarily Novel Functional Networks in the Human Brain? J Neurosci 33, 3259–3275. 10.1523/jneurosci.4392-12.2013.

- 52. Russ BE, and Leopold DA (2015). Functional MRI mapping of dynamic visual features during natural viewing in the macaque. Neuroimage 109, 84–94. 10.1016/j.neuroimage.2015.01.012.

- 53. Russ BE, Kaneko T, Saleem KS, Berman RA, and Leopold DA (2016). Distinct fMRI Responses to Self-Induced versus Stimulus Motion during Free Viewing in the Macaque. J Neurosci 36, 9580–9589. 10.1523/jneurosci.1152-16.2016.

- 54. Park SH, Russ BE, McMahon DBT, Koyano KW, Berman RA, and Leopold DA (2017). Functional Subpopulations of Neurons in a Macaque Face Patch Revealed by Single-Unit fMRI Mapping. Neuron 95, 971–981.e5. 10.1016/j.neuron.2017.07.014.

- 55. Sliwa J, and Freiwald WA (2017). A dedicated network for social interaction processing in the primate brain. Science 356, 745–749. 10.1126/science.aam6383.

- 56. Ortiz-Rios M, Balezeau F, Haag M, Schmid MC, and Kaiser M (2021). Dynamic reconfiguration of macaque brain networks during natural vision. Neuroimage 244, 118615. 10.1016/j.neuroimage.2021.118615.

- 57. Sakon JJ, and Suzuki WA (2021). Neural evidence for recognition of naturalistic videos in monkey hippocampus. Hippocampus 31, 916–932. 10.1002/hipo.23335.

- 58. Ainsworth M, Sallet J, Joly O, Kyriazis D, Kriegeskorte N, Duncan J, Schüffelgen U, Rushworth MFS, and Bell AH (2021). Viewing Ambiguous Social Interactions Increases Functional Connectivity between Frontal and Temporal Nodes of the Social Brain. J Neurosci 41, 6070–6086. 10.1523/jneurosci.0870-20.2021.

- 59. McMahon DBT, Bondar IV, Afuwape OAT, Ide DC, and Leopold DA (2014). One month in the life of a neuron: longitudinal single-unit electrophysiology in the monkey visual system. J Neurophysiol 112, 1748–1762. 10.1152/jn.00052.2014.

- 60. McMahon DBT, Jones AP, Bondar IV, and Leopold DA (2014). Face-selective neurons maintain consistent visual responses across months. Proceedings of the National Academy of Sciences 111, 8251–8256. 10.1073/pnas.1318331111.

- 61. Koyano KW, Jones AP, McMahon DBT, Waidmann EN, Russ BE, and Leopold DA (2021). Dynamic Suppression of Average Facial Structure Shapes Neural Tuning in Three Macaque Face Patches. Curr Biol 31, 1–12.e5. 10.1016/j.cub.2020.09.070.

- 62. Freiwald WA, Tsao DY, and Livingstone MS (2009). A face feature space in the macaque temporal lobe. Nature Neuroscience 12, 1187–1196. 10.1038/nn.2363.

- 63. Tsao DY, Moeller S, and Freiwald WA (2008). Comparing face patch systems in macaques and humans. Proceedings of the National Academy of Sciences 105, 19514–19519. 10.1073/pnas.0809662105.

- 64. Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, and Tootell RBH (2003). Faces and objects in macaque cerebral cortex. Nature Neuroscience 6, 989–995. 10.1038/nn1111.

- 65. Freiwald WA, and Tsao DY (2014). Neurons that keep a straight face. Proceedings of the National Academy of Sciences 111, 7894–7895. 10.1073/pnas.1406865111.

- 66. Tsao DY, Schweers N, Moeller S, and Freiwald WA (2008). Patches of face-selective cortex in the macaque frontal lobe. Nature Neuroscience 11, 877–879. 10.1038/nn.2158.

- 67. Russ BE, Petkov CI, Kwok SC, Zhu Q, Belin P, Vanduffel W, and Ben Hamed S (2021). Common functional localizers to enhance NHP & cross-species neuroscience imaging research. Neuroimage 237, 118203. 10.1016/j.neuroimage.2021.118203.

- 68. Popivanov ID, Jastorff J, Vanduffel W, and Vogels R (2012). Stimulus representations in body-selective regions of the macaque cortex assessed with event-related fMRI. NeuroImage 63, 723–741. 10.1016/j.neuroimage.2012.07.013.

- 69. Maunsell JH, and Van Essen DC (1983). Functional properties of neurons in middle temporal visual area of the macaque monkey. I. Selectivity for stimulus direction, speed, and orientation. Journal of Neurophysiology 49, 1127–1147.

- 70. Maunsell JH, and Newsome WT (1987). Visual processing in monkey extrastriate cortex. Annual Review of Neuroscience 10, 363–401. 10.1146/annurev.ne.10.030187.002051.

- 71. Boussaoud D, Desimone R, and Ungerleider LG (1991). Visual topography of area TEO in the macaque. The Journal of Comparative Neurology 306, 554–575. 10.1002/cne.903060403.

- 72. Taubert J, Wardle SG, Tardiff CT, Patterson A, Yu D, and Baker CI (2022). Clutter Substantially Reduces Selectivity for Peripheral Faces in the Macaque Brain. J Neurosci 42, 6739–6750. 10.1523/jneurosci.0232-22.2022.

- 73. Aparicio PL, Issa EB, and DiCarlo JJ (2016). Neurophysiological Organization of the Middle Face Patch in Macaque Inferior Temporal Cortex. J Neurosci 36, 12729–12745. 10.1523/jneurosci.0237-16.2016.

- 74. Bell AH, Malecek NJ, Morin EL, Hadj-Bouziane F, Tootell RBH, and Ungerleider LG (2011). Relationship between Functional Magnetic Resonance Imaging-Identified Regions and Neuronal Category Selectivity. J Neurosci 31, 12229–12240. 10.1523/jneurosci.5865-10.2011.

- 75. Popivanov ID, Jastorff J, Vanduffel W, and Vogels R (2014). Heterogeneous Single-Unit Selectivity in an fMRI-Defined Body-Selective Patch. J Neurosci 34, 95–111. 10.1523/jneurosci.2748-13.2014.

- 76. Schiller PH, and Malpeli JG (1977). Properties and tectal projections of monkey retinal ganglion cells. J Neurophysiol 40, 1443. 10.1152/jn.1977.40.6.1443-s.

- 77. Müller JR, Metha AB, Krauskopf J, and Lennie P (2001). Information Conveyed by Onset Transients in Responses of Striate Cortical Neurons. J Neurosci 21, 6978–6990. 10.1523/jneurosci.21-17-06978.2001.

- 78. Oram MW, and Perrett DI (1992). Time course of neural responses discriminating different views of the face and head. J Neurophysiol 68, 70–84. 10.1152/jn.1992.68.1.70.

- 79. Feuerriegel D, Vogels R, and Kovács G (2021). Evaluating the Evidence for Expectation Suppression in the Visual System. Neurosci Biobehav Rev 126, 368–381. 10.1016/j.neubiorev.2021.04.002.

- 80. Kohn A, and Movshon JA (2004). Adaptation changes the direction tuning of macaque MT neurons. Nat Neurosci 7, 764–772. 10.1038/nn1267.

- 81. Jeyabalaratnam J, Bharmauria V, Bachatene L, Cattan S, Angers A, and Molotchnikoff S (2013). Adaptation Shifts Preferred Orientation of Tuning Curve in the Mouse Visual Cortex. PLoS One 8, e64294. 10.1371/journal.pone.0064294.

- 82. Williams NP, and Olson CR (2022). Contribution of individual features to repetition suppression in macaque inferotemporal cortex. J Neurophysiol 128, 378–394. 10.1152/jn.00475.2021.

- 83. Williams NP, and Olson CR (2022). Independent Repetition Suppression in Macaque Area V2 and Inferotemporal Cortex. J Neurophysiol. 10.1152/jn.00043.2022.

- 84. DiCarlo JJ, and Maunsell JH (2000). Form representation in monkey inferotemporal cortex is virtually unaltered by free viewing. Nature Neuroscience 3, 814–821. 10.1038/77722.

- 85. DiCarlo JJ, and Maunsell JHR (2003). Anterior inferotemporal neurons of monkeys engaged in object recognition can be highly sensitive to object retinal position. Journal of Neurophysiology 89, 3264–3278. 10.1152/jn.00358.2002.

- 86. Anderson B, Mruczek REB, Kawasaki K, and Sheinberg D (2008). Effects of Familiarity on Neural Activity in Monkey Inferior Temporal Lobe. Cereb Cortex 18, 2540–2552. 10.1093/cercor/bhn015.

- 87. Woloszyn L, and Sheinberg DL (2012). Effects of long-term visual experience on responses of distinct classes of single units in inferior temporal cortex. Neuron 74, 193–205. 10.1016/j.neuron.2012.01.032.

- 88. Miller EK, Li L, and Desimone R (1991). A neural mechanism for working and recognition memory in inferior temporal cortex. Science 254, 1377–1379. 10.1126/science.1962197.

- 89. Rolls ET, Baylis GC, Hasselmo ME, and Nalwa V (1989). The effect of learning on the face selective responses of neurons in the cortex in the superior temporal sulcus of the monkey. Exp Brain Res 76, 153–164. 10.1007/bf00253632.

- 90. Kourtzi Z, and DiCarlo JJ (2006). Learning and neural plasticity in visual object recognition. Curr Opin Neurobiol 16, 152–158. 10.1016/j.conb.2006.03.012.

- 91. Meyer T, Walker C, Cho RY, and Olson CR (2014). Image familiarization sharpens response dynamics of neurons in inferotemporal cortex. Nat Neurosci 17, 1388–1394. 10.1038/nn.3794.

- 92. Vinken K, Op de Beeck H, and Vogels R (2018). Face Repetition Probability Does Not Affect Repetition Suppression in Macaque Inferotemporal Cortex. J Neurosci 38, 0462–18. 10.1523/jneurosci.0462-18.2018.

- 93. Kuravi P, and Vogels R (2017). Effect of adapter duration on repetition suppression in inferior temporal cortex. Sci Rep 7, 3162. 10.1038/s41598-017-03172-3.

- 94. Nelissen K, Vanduffel W, and Orban GA (2006). Charting the Lower Superior Temporal Region, a New Motion-Sensitive Region in Monkey Superior Temporal Sulcus. J Neurosci 26, 5929–5947. 10.1523/jneurosci.0824-06.2006.

- 95. Polosecki P, Moeller S, Schweers N, Romanski LM, Tsao DY, and Freiwald WA (2013). Faces in Motion: Selectivity of Macaque and Human Face Processing Areas for Dynamic Stimuli. J Neurosci 33, 11768–11773. 10.1523/jneurosci.5402-11.2013.

- 96. Furl N, Hadj-Bouziane F, Liu N, Averbeck BB, and Ungerleider LG (2012). Dynamic and Static Facial Expressions Decoded from Motion-Sensitive Areas in the Macaque Monkey. J Neurosci 32, 15952–15962. 10.1523/jneurosci.1992-12.2012.

- 97. Feuerriegel D, Vogels R, and Kovács G (2021). Evaluating the Evidence for Expectation Suppression in the Visual System. Neurosci Biobehav Rev 126, 368–381. 10.1016/j.neubiorev.2021.04.002.

- 98. Brainard DH (1997). The Psychophysics Toolbox. Spatial Vision 10, 433–436.

- 99. Chaure FJ, Rey HG, and Quiroga RQ (2018). A novel and fully automatic spike-sorting implementation with variable number of features. Journal of Neurophysiology 120, 1859–1871. 10.1152/jn.00339.2018.

- 100. Hwang J, Mitz AR, and Murray EA (2019). NIMH MonkeyLogic: Behavioral control and data acquisition in MATLAB. J Neurosci Meth 323, 13–21. 10.1016/j.jneumeth.2019.05.002.

- 101. Quiroga RQ, Nadasdy Z, and Ben-Shaul Y (2004). Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Computation 16, 1661–1687. 10.1162/089976604774201631.



Data Availability Statement

The data reported in this manuscript will be shared upon request by the lead contact or senior author.

Analysis code for the comparison of temporal context effects has been deposited on GitHub (https://github.com/beruss/Temporal_Context; doi:10.5281/zenodo.7419223).