Abstract

As explanations are increasingly used to understand the behavior of graph neural networks (GNNs), evaluating the quality and reliability of GNN explanations is crucial. However, assessing the quality of GNN explanations is challenging as existing graph datasets have no or unreliable ground-truth explanations. Here, we introduce a synthetic graph data generator, ShapeGGen, which can generate a variety of benchmark datasets (e.g., varying graph sizes, degree distributions, homophilic vs. heterophilic graphs) accompanied by ground-truth explanations. The flexibility to generate diverse synthetic datasets and corresponding ground-truth explanations allows ShapeGGen to mimic the data in various real-world areas. We include ShapeGGen and several real-world graph datasets in a graph explainability library, GraphXAI. In addition to synthetic and real-world graph datasets with ground-truth explanations, GraphXAI provides data loaders, data processing functions, visualizers, GNN model implementations, and evaluation metrics to benchmark GNN explainability methods.

Subject terms: Databases, Decision making, Computer science, Statistics

Introduction

As graph neural networks (GNNs) are being increasingly used for learning representations of graph-structured data in high-stakes applications, such as criminal justice1, molecular chemistry2,3, and biological networks4,5, it becomes critical to ensure that the relevant stakeholders can understand and trust their functionality. To this end, previous work developed several methods to explain predictions made by GNNs6–14. With the increase in newly proposed GNN explanation methods, it is critical to ensure their reliability. However, explainability in graph machine learning is an emerging area lacking standardized evaluation strategies and reliable data resources to evaluate, test, and compare GNN explanations15. While several works have acknowledged this difficulty, they tend to base their analysis on specific real-world2 and synthetic16 datasets with limited ground-truth explanations. In addition, relying on these datasets and associated ground-truth explanations is insufficient as they are not indicative of diverse real-world applications15. To this end, developing a broader ecosystem of data resources for benchmarking state-of-the-art GNN explainers can support explainability research in GNNs.

A comprehensive data resource to correctly evaluate the quality of GNN explanations will ensure their reliability in high-stake applications. However, the evaluation of GNN explanations is a growing research area with relatively little work, where existing approaches mainly leverage ground-truth explanations associated with specific datasets2 and are prone to several pitfalls (as outlined by Faber et al.16). Further, multiple underlying rationales can generate the correct class labels, creating redundant or non-unique explanations. A trained GNN model may only capture one or an entirely different rationale. In such cases, evaluating the explanation output by a state-of-the-art method using the ground-truth explanation is incorrect because the underlying GNN model does not rely on that ground-truth explanation. In addition, even if a unique ground-truth explanation generates the correct class label, the GNN model trained on the data could be a weak predictor using an entirely different rationale for prediction. Therefore, the ground-truth explanation cannot be used to assess post hoc explanations of such models. Lastly, the ground-truth explanations corresponding to some of the existing benchmark datasets are not good candidates for reliably evaluating explanations as they can be recovered using trivial baselines (e.g., random node or edge as explanation). The above discussion highlights a clear need for general-purpose data resources which can evaluate post hoc explanations reliably across diverse real-world applications. While various benchmark datasets (e.g., Open Graph Benchmark (OGB)17, GNNMark18, GraphGT19, MalNet20, Graph Robustness Benchmark (GRB)21, Therapeutics Data Commons22,23, and EFO-1-QA24) and programming libraries for deep learning on graphs (e.g., Dive Into Graphs (DIG)25, Pytorch Geometric (PyG)26, and Deep Graph Library (DGL)27) in graph machine learning literature exist, they are mainly used to only benchmark the performance of GNN predictors and are not suited to evaluate the correctness of GNN explainers because they do not capture ground-truth explanations.

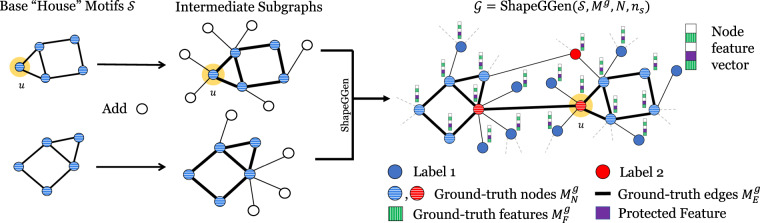

Here, we address the above challenges by introducing a general-purpose data resource that is not prone to ground-truth pitfalls (e.g., redundant explanations, weak GNN predictors, trivial explanations) and can cater to diverse real-world applications. To this end, we present ShapeGGen (Fig. 2), a novel and flexible synthetic XAI-ready (explainable artificial intelligence ready) dataset generator which can automatically generate a variety of benchmark datasets (e.g., varying graph sizes, degree distributions, homophilic vs. heterophilic graphs) accompanied by ground-truth explanations. ShapeGGen ensures that the generated ground-truth explanations are not prone to the pitfalls described in Faber et al.16, such as redundant explanations, weak GNN predictors, and trivially correct explanations. Furthermore, ShapeGGen can evaluate the goodness of any explanation (e.g., node feature-based, node-based, edge-based) across diverse real-world applications by seamlessly generating synthetic datasets that can mimic the properties of real-world data in various domains.

Fig. 2.

Overview of ShapeGGen graph dataset generator. ShapeGGen is a novel dataset generator for graph-structured data that can be used to benchmark graph explainability methods using ground-truth explanations. Graphs are created by combining subgraphs containing any given motif and additional nodes. The number of motifs in a k-hop neighborhood determines the node label (in the figure, we use a 1-hop neighborhood for labeling, and nodes with two motifs in its 1-hop neighborhood are highlighted in red). Feature explanations are some masks over important node features (green striped), with an option to add a protected feature (shown in purple) whose correlation to node labels is controllable. Node explanations are nodes contained in the motifs (horizontal striped nodes) and edge explanations (bold lines) are edges connecting nodes within motifs.

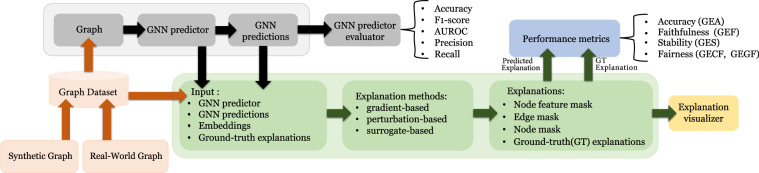

We incorporate ShapeGGen and several other synthetic and real-world graphs28 into GraphXAI, a general-purpose framework for benchmarking GNN explainers. GraphXAI also provides a broader ecosystem (Fig. 1) of data loaders, data processing functions, visualizers, and a set of evaluation metrics (e.g., accuracy, faithfulness, stability, fairness) to reliably benchmark the quality of any given GNN explanation (node feature-based or node/edge-based). We leverage various synthetic and real-world datasets and evaluation metrics from GraphXAI to empirically assess the quality of explanations output by eight state-of-the-art GNN explanation methods. Across many GNN explainers, graphs, and graph tasks, we observe that state-of-the-art GNN explainers fail on graphs with large ground-truth explanations (i.e., explanation subgraphs with a higher number of nodes and edges) and cannot produce explanations that preserve fairness properties of underlying GNN predictors.

Fig. 1.

Overview of GraphXAI. GraphXAI provides data loader classes for XAI-ready synthetic and real-world graph datasets with ground-truth explanations for evaluating GNN explainers, implementations of explanation methods, visualization functions for GNN explainers, utility functions to support new GNN explainers, and a diverse set of performance metrics to evaluate the reliability of explanations generated by GNN explainers.

Results

To evaluate GraphXAI, we show how GraphXAI enables systematic benchmarking of eight state-of-the-art GNN explainers on both ShapeGGen (in the Methods section) and real-world graph datasets. We explore the utility of the ShapeGGen generator to benchmark GNN explainers on graphs with homophilic vs. heterophilic, small vs. large, and fair vs. unfair ground-truth explanations. Additionally, we examine the utility of GNN explanations on datasets with varying degrees of informative node features. Next, we outline the experimental setup, including details about performance metrics, GNN explainers, and underlying GNN predictors, and proceed with a discussion of benchmarking results.

Experimental setup

GNN explainers

The GraphXAI defines an Explanation class capable of storing multiple types of explanations produced by GNN explainers and provides a graphxai.BaseExplainer class that serves as the base for all explanation methods in GraphXAI. We incorporate eight GNN explainability methods, including gradient-based: Grad29, GradCAM11, GuidedBP6, Integrated Gradients30; perturbation-based: GNNExplainer14, PGExplainer10, SubgraphX31; and surrogate-based methods: PGMExplainer13. Finally, following Agarwal et al.15, we consider random explanations as a reference: (1) Random Node Features, a node feature mask defined by an d-dimensional Gaussian distributed vector; (2) Random Nodes, a 1 × n node mask is randomly sampled using a uniform distribution, where n is the number of nodes in the enclosing subgraph; and (3) Random Edges, an edge mask drawn from a uniform distribution over a node’s incident edges.

Implementation details

We use a three-layer GIN model32 and a GCN model33 as GNN predictors for our experiments. We use a model comprising three GIN convolution layers with ReLU non-linear activation function and a Softmax activation for the final layer. The hidden dimensionality of the layers is set to 16. We follow an established approach for generating explanations8,15 and use reference algorithm implementations. We select top-k (k = 25%) important nodes, node features, or edges and use them to generate explanations for all graph explainability methods. For training GIN models, we use an Adam optimizer with a learning rate of 1 × 10−2, weight decay of 1 × 10−5, and the number of epochs to 1000. We use an Adam optimizer with a learning rate of 3 × 10−2, no weight decay, and 1500 training epochs for training GNN models. We set hyperparameters of GNN explainability models following the authors’ recommendations.

We use a fixed random split provided within the GraphXAI package to split the datasets. For each ShapeGGen dataset, we use a 70/5/25 split for training, validation, and testing, respectively. For MUTAG, Benzene, and Fluoride Carbonyl datasets, we use a 70/10/20 split throughout each dataset. Average performance is reported across each sample in the testing set of each dataset.

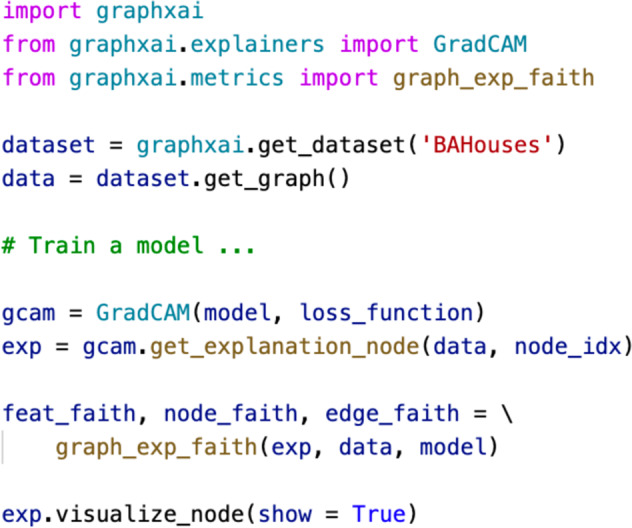

Performance metrics

In addition to the synthetic and real-world data resources, we consider four broad categories of performance metrics: (i) Graph Explanation Accuracy (GEA); (ii) Graph Explanation Faithfulness (GEF); (iii) Graph Explanation Stability (GES); and (iv) Graph Explanation Fairness (GECF, GEGF) to evaluate the explanations on the respective datasets. In particular, all evaluation metrics leverage predicted explanations, ground-truth explanations, and other user-controlled parameters, such as top-k features. Our GraphXAI package implements these performance metrics and additional utility functions within graphxai.metrics module. Figure 3 shows a code snippet for evaluating the correctness of output explanations for a given GNN prediction in GraphXAI.

Fig. 3.

Example use case of the GraphXAI package. An example of explaining a prediction in the GraphXAI package. With just a few lines of code, one can calculate an explanation for a node or graph, calculate metrics based on that explanation, and visualize the explanation.

Graph explanation accuracy (GEA)

We report graph explanation accuracy as an evaluation strategy that measures an explanation’s correctness using the ground-truth explanation Mg. In ground-truth and predicted explanation masks, every node, node feature, or edge belongs to {0, 1}, where ‘0’ means that an attribute is unimportant and ‘1’ means that it is important for the model prediction. To measure accuracy, we use Jaccard index34 between the ground-truth Mg and predicted Mp:

| 1 |

where TP denotes true positives, FP denotes false positives, and FN indicates false negatives. Most synthetic- and real-world graphs have multiple ground-truth explanations. For example, in the MUTAG dataset35, carbon rings with both NH2 or NO2 chemical groups are valid explanations for the GNN model to recognize a given molecule as mutagenic. For this reason, the accuracy metric must account for the possibility of multiple equally valid explanations existing for any given prediction. Hence, we define ζ as a set of all possible ground-truth explanations, where |ζ| = 1 for graphs having a unique explanation. Therefore, we calculate GEA as:

| 2 |

Here, we can calculate GEA using predicted node feature, node, or edge explanation masks. Finally, Eq. 1 quantifies the accuracy between the ground-truth and predicted explanation masks. Higher values mean a predicted explanation is more likely to match the ground-truth explanation.

Graph explanation faithfulness (GEF)

We extend existing faithfulness metrics2,15 to quantify how faithful explanations are to an underlying GNN predictor. In particular, we obtain the prediction probability vector using the GNN, i.e., , and using the explanation, i.e., , where we generate a masked subgraph by only keeping the original values of the top-k features identified by an explanation, and get their respective predictions . Finally, we compute the graph explanation unfaithfulness metric as:

| 3 |

where Kullback-Leibler (KL) divergence score quantifies the distance between two probability distributions, and the “||” operator indicates statistical divergence measure. Note that Eq. 3 is a measure of the unfaithfulness of the explanation. So, higher values indicate a higher degree of unfaithfulness.

Graph explanation stability (GES)

Formally, an explanation is defined to be stable if the explanation for a given graph and its perturbed counterpart (generated by making infinitesimally small perturbations to the node feature vector and associated edges) are similar15,36. We measure graph explanation stability w.r.t. the changes in the model behavior. In addition to similar output labels for and the perturbed , we employ a second level of check where the difference between model behaviors for and is bounded by an infinitesimal constant , i.e., . Here, refers to any form of model knowledge like output logits or embeddings . We compute graph explanation instability as:

| 4 |

where D represents the cosine distance metric, and are the predicted explanation masks for and , and represents a -radius ball around for which the model behavior is same. Eq. 4 is a measure of instability, and higher values indicate a higher degree of instability.

Counterfactual fairness mismatch

To measure counterfactual fairness15, we verify if the explanations corresponding to and its counterfactual counterpart (where the protected node feature is modified) are similar (dissimilar) if the underlying model predictions are similar (dissimilar). We calculate counterfactual fairness mismatch as:

| 5 |

where, Mp and are the predicted explanation mask for and for the counterfactual counterpart of . Note that we expect a lower GECF score for graphs having weakly-unfair ground-truth explanations because explanations are similar for both original and counterfactual graphs, whereas, for graphs with strongly-unfair ground-truth explanations, we expect a higher GECF score as explanations change when we modify the protected attribute.

Group fairness mismatch

We measure group fairness mismatch15 as:

| 6 |

where and are predictions for a set of graphs using the original and the essential features identified by an explanation, respectively, and SP is the statistical parity. Finally, Eq. 6 is a measure of group fairness mismatch of an explanation where higher values indicate that the explanation is not preserving group fairness.

Evaluation and analysis of GNN explainability methods

Next, we discuss experimental results that answer critical questions concerning synthetic and real-world graphs and different ground-truth explanations.

Benchmarking GNN explainers on synthetic and real-world graphs

We evaluate the performance of GNN explainers on a collection of synthetically generated graphs with various properties and molecular datasets using metrics described in the experimental setup. Results in Tables 1–5 show that, while no explanation method performs well across all properties, across different ShapeGGen node classification datasets (Table 6), SubgraphX outperforms other methods on average. In particular, SubgraphX generates 145.95% more accurate and 64.80% less unfaithful explanations than other GNN explanation methods. Gradient-based methods, such as GradCam and GuidedBP, perform the next best of all methods, with Grad producing the second-lowest unfaithfulness score and GradCAM achieving the second-highest explanation accuracy score. PGExplainer generates the least unstable explanations–35.35% less unstable explanations than the average instability across other GNN explainers. In summary, results of Tables 1–5 show that node explanation masks are more reliable than edge- and node feature explanation masks and state-of-the-art GNN explainers achieve better faithfulness for synthetic graph datasets as compared to real-world graphs.

Table 2.

Evaluation of GNN explainers on SG-Base graph dataset based on node explanation masks .

| Method | GEA (↑) | GEF (↓) | GES (↓) | GECF (↓) | GEGF (↓) |

|---|---|---|---|---|---|

| Random | 0.075 ± 0.002 | 0.638 ± 0.007 | 1.55 ± 0.004 | 1.01 ± 0.010 | 0.027 ± 0.002 |

| Grad | 0.194 ± 0.002 | 0.498 ± 0.007 | 0.745 ± 0.005 | 0.157 ± 0.004 | 0.068 ± 0.003 |

| GradCAM | 0.188 ± 0.001 | 0.620 ± 0.006 | 0.295 ± 0.005 | 0.029 ± 0.003 | 0.027 ± 0.002 |

| GuidedBP | 0.190 ± 0.001 | 0.653 ± 0.007 | 0.430 ± 0.004 | 0.074 ± 0.003 | 0.020 ± 0.002 |

| IG | 0.140 ± 0.002 | 0.672 ± 0.007 | 0.639 ± 0.004 | 0.114 ± 0.004 | 0.011 ± 0.001 |

| GNNExplainer | 0.103 ± 0.003 | 0.632 ± 0.007 | 0.431 ± 0.008 | 0.249 ± 0.007 | 0.028 ± 0.002 |

| PGMExplainer | 0.133 ± 0.002 | 0.622 ± 0.007 | 0.974 ± 0.001 | 0.798 ± 0.003 | 0.083 ± 0.003 |

| PGExplainer | 0.165 ± 0.002 | 0.635 ± 0.007 | 0.224 ± 0.004 | 0.005 ± 0.000 | 0.030 ± 0.002 |

| SubgraphX | 0.383 ± 0.004 | 0.344 ± 0.006 | 0.585 ± 0.004 | 0.225 ± 0.004 | 0.114 ± 0.004 |

Base GNN is a GCN33 as opposed to Table 1 which is based on explaining a GIN model32. Overall, explainer performance is very similar to that of the GIN with SubgraphX performing the best on faithfulness and accuracy metrics while gradient-based methods and PGExplainer typically perform best for fairness and stability.

Table 3.

Evaluation of GNN explainers on SG-Base graph dataset based on edge explanation masks .

| Method | GEA (↑) | GEF (↓) | GES (↓) | GECF (↓) | GEGF (↓) |

|---|---|---|---|---|---|

| Random | 0.135 ± 0.001 | 0.419 ± 0.007 | 1.167 ± 0.001 | 0.997 ± 0.002 | 0.064 ± 0.003 |

| GNNExplainer | 0.152 ± 0.002 | 0.302 ± 0.006 | 0.995 ± 0.001 | 0.957 ± 0.002 | 0.047 ± 0.003 |

| PGExplainer | 0.117 ± 0.002 | 0.171 ± 0.004 | 1.000 ± 0.000 | 1.000 ± 0.000 | 0.037 ± 0.003 |

| SubgraphX | 0.271 ± 0.003 | 0.548 ± 0.007 | 0.815 ± 0.005 | 0.290 ± 0.007 | 0.030 ± 0.002 |

Arrows (↑/↓) indicate the direction of better performance. SubgraphX method, on average, produces the most reliable edge explanations when evaluated across all five performance metrics. Note that of the explainers tested in this study, only the above four methods produce edge explanations.

Table 1.

Evaluation of GNN explainers on SG-Base graph dataset based on node explanation masks . Arrows (↑/↓) indicate the direction of better performance.

| Method | GEA (↑) | GEF (↓) | GES (↓) | GECF (↓) | GEGF (↓) |

|---|---|---|---|---|---|

| Random | 0.148 ± 0.002 | 0.579 ± 0.007 | 0.920 ± 0.002 | 0.763 ± 0.003 | 0.023 ± 0.002 |

| Grad | 0.193 ± 0.002 | 0.392 ± 0.006 | 0.806 ± 0.004 | 0.159 ± 0.004 | 0.039 ± 0.003 |

| GradCAM | 0.222 ± 0.002 | 0.452 ± 0.006 | 0.263 ± 0.004 | 0.010 ± 0.001 | 0.020 ± 0.002 |

| GuidedBP | 0.194 ± 0.001 | 0.557 ± 0.007 | 0.432 ± 0.004 | 0.067 ± 0.002 | 0.021 ± 0.002 |

| IG | 0.142 ± 0.002 | 0.545 ± 0.007 | 0.727 ± 0.005 | 0.110 ± 0.003 | 0.021 ± 0.002 |

| GNNExplainer | 0.102 ± 0.003 | 0.534 ± 0.007 | 0.431 ± 0.008 | 0.233 ± 0.006 | 0.027 ± 0.002 |

| PGMExplainer | 0.133 ± 0.002 | 0.541 ± 0.007 | 0.984 ± 0.001 | 0.791 ± 0.003 | 0.096 ± 0.004 |

| PGExplainer | 0.194 ± 0.002 | 0.557 ± 0.007 | 0.217 ± 0.004 | 0.009 ± 0.000 | 0.029 ± 0.002 |

| SubgraphX | 0.324 ± 0.004 | 0.254 ± 0.006 | 0.745 ± 0.005 | 0.241 ± 0.006 | 0.035 ± 0.003 |

SubgraphX far outperforms other methods in accuracy and faithfulness while PGExplainer is best for stability and counterfactual fairness. In general, gradient methods produce the most fair explanations across both counterfactual and group fairness metrics. See Tables 3–4 for results on edge and feature explanation masks.

Table 5.

Evaluation of GNN explainers for real-world molecular datasets with ground-truth explanations.

| Dataset | Method | GEA (↑) | GEF (↓) |

|---|---|---|---|

| Mutag | Random | 0.044 ± 0.007 | 0.590 ± 0.031 |

| Grad | 0.022 ± 0.006 | 0.598 ± 0.030 | |

| GradCAM | 0.085 ± 0.012 | 0.672 ± 0.029 | |

| GuidedBP | 0.036 ± 0.007 | 0.649 ± 0.030 | |

| Integrated Grad (IG) | 0.049 ± 0.010 | 0.443 ± 0.031 | |

| GNNExplainer | 0.031 ± 0.005 | 0.618 ± 0.030 | |

| PGMExplainer | 0.042 ± 0.007 | 0.503 ± 0.031 | |

| PGExplainer | 0.046 ± 0.007 | 0.504 ± 0.031 | |

| SubgraphX | 0.039 ± 0.007 | 0.611 ± 0.030 | |

| Benzene | Random | 0.108 ± 0.003 | 0.513 ± 0.012 |

| Grad | 0.122 ± 0.007 | 0.262 ± 0.011 | |

| GradCAM | 0.291 ± 0.007 | 0.551 ± 0.012 | |

| GuidedBP | 0.205 ± 0.007 | 0.438 ± 0.012 | |

| Integrated Grad (IG) | 0.044 ± 0.003 | 0.182 ± 0.010 | |

| GNNExplainer | 0.129 ± 0.005 | 0.444 ± 0.012 | |

| PGMExplainer | 0.154 ± 0.006 | 0.433 ± 0.012 | |

| PGExplainer | 0.169 ± 0.007 | 0.375 ± 0.012 | |

| SubgraphX | 0.371 ± 0.009 | 0.513 ± 0.012 | |

| Fl-Carbonyl | Random | 0.087 ± 0.007 | 0.440 ± 0.26 |

| Grad | 0.132 ± 0.010 | 0.210 ± 0.021 | |

| GradCAM | 0.005 ± 0.007 | 0.500 ± 0.026 | |

| GuidedBP | 0.089 ± 0.010 | 0.315 ± 0.024 | |

| Integrated Grad (IG) | 0.091 ± 0.007 | 0.174 ± 0.019 | |

| GNNExplainer | 0.094 ± 0.009 | 0.423 ± 0.026 | |

| PGMExplainer | 0.078 ± 0.008 | 0.426 ± 0.026 | |

| PGExplainer | 0.079 ± 0.009 | 0.372 ± 0.025 | |

| SubgraphX | 0.008 ± 0.002 | 0.466 ± 0.026 |

Arrows (↑/↓) indicate the direction of better performance. Integrated Gradient explanations obtain the lowest unfaithfulness score across all three datasets. Note that stability and fairness do not apply to these datasets because generating plausible perturbations for molecules is non-trivial, and they do not contain protected features.

Table 6.

Statistics of graphs generated using ShapeGGen data generator for evaluating different properties of GNN explanations.

| Dataset | SG-Base | SG-Heterophilic | SG-SmallEx | SG-Unfair |

|---|---|---|---|---|

| Nodes | 13150 | 13150 | 15505 | 13150 |

| Edges | 46472 | 46472 | 51782 | 46472 |

| Node features | 11 | 11 | 11 | 11 |

| Average node degree | 3.53 ± 0.02 | 3.53 ± 0.02 | 3.34 ± 0.02 | 3.53 ± 0.02 |

| Class 0 Nodes | 4382 | 4382 | 7777 | 4382 |

| Class 1 Nodes | 8768 | 8768 | 7728 | 8768 |

| Shape of the planted motif (S) | ‘house’ | ‘house’ | ‘triangle’ | ‘house' |

| Number of initial subgraphs (Ns) | 1200 | 1200 | 1300 | 1200 |

| Probability of subgraph connection (p) | 0.006 | 0.006 | 0.006 | 0.006 |

| Subgraph size (ns) | 11 | 11 | 12 | 11 |

| Number of classes (K) | 2 | 2 | 2 | 2 |

| Number of node features (nf) | 11 | 11 | 11 | 11 |

| Number of informative features (ni) | 4 | 4 | 4 | 4 |

| Class separation factor (sf) | 0.6 | 0.6 | 0.5 | 0.6 |

| Number of clusters per class (cf) | 2 | 2 | 2 | 2 |

| Protected feature noise factor (ϕ) | 0.5 | 0.5 | 0.5 | 0.75 |

| Homophily coefficient (η) | 1 | −1 | 1 | 1 |

| Number of GNN layers (L) | 3 | 3 | 3 | 3 |

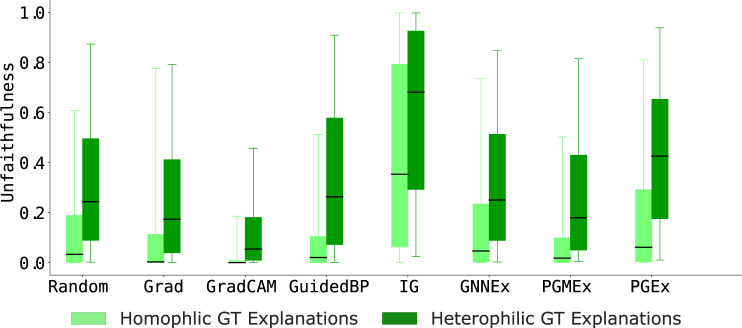

Analyzing homophilic vs. heterophilic ground-truth explanations

We compare GNN explainers by generating explanations on GNN models trained on homophilic and heterophilic graphs generated using the SG-Heterophilic generator. Then, we compute the graph explanation unfaithfulness scores of output explanations generated using state-of-the-art GNN explainers. We find that GNN explainers produce 55.98% more faithful explanations when ground-truth explanations are homophilic than when ground-truth explanations are heterophilic (i.e., low unfaithfulness scores for light green bars in Fig. 4). These results reveal an important gap in existing GNN explainers. Namely, existing GNN explainers fail to perform well on diverse graph types, such as homophilic, heterophilic and attributed graphs. This observation, enabled by the flexibility of ShapeGGen generator, highlights an opportunity for future algorithmic innovation in GNN explainability.

Fig. 4.

Unfaithfulness scores across eight GNN explainers on SG-Heterophilic graph dataset consisting of either homophilic or heterophilic ground-truth (GT) explanations. GNN explainers produce more faithful explanations (lower GEF scores) on homophilic graphs than heterophilic graphs, revealing an important limitation of existing GNN explainers.

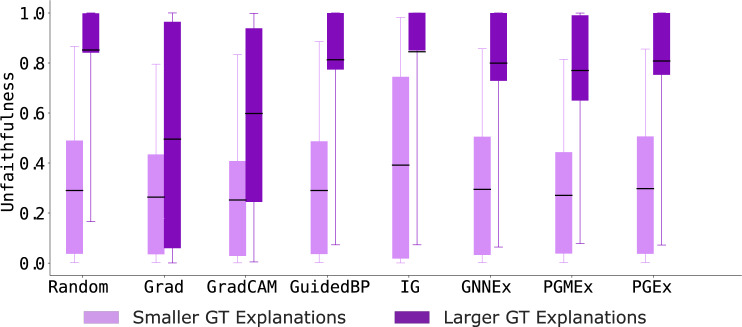

Analyzing the reliability of graph explainers to smaller vs. larger ground-truth explanations

Next, we examine the reliability of GNN explainers when used to predict explanations for models trained on graphs generated using the SG-SmallEx graph generator. Results in Fig. 5 show that explanations from existing GNN explainers are faithful (i.e., lower GEF scores) to the underlying GNN models when ground-truth explanations are smaller, i.e., S = ‘triangle’. On average, across all eight GNN explainers, we find that existing GNN explainers are highly unfaithful to graphs with large ground-truth explanations with an average GEF score of 0.7476. Further, we observe that explanations generated on ‘triangular’ (smaller) ground-truth explanations are 59.98% less unfaithful than explanations for ‘house’ (larger) ground-truth explanations (i.e., low unfaithfulness scores for light purple bars in Fig. 5). However, the Grad explainer, on average, achieves 9.33% lower unfaithfulness on large ground-truth explanations compared to other explanation methods. This limited behavior of existing GNN explainers has not been previously known and highlights an urgent need for additional analysis of GNN explainers.

Fig. 5.

Unfaithfulness scores across eight GNN explainers on SG-SmallEx graph dataset with smaller (triangle shapes) or (house shapes) ground-truth (GT) explanations. Results show that GNN explainers produce more faithful explanations (lower GEF scores) on graphs with smaller GT explanations than on graphs with larger GT explanations.

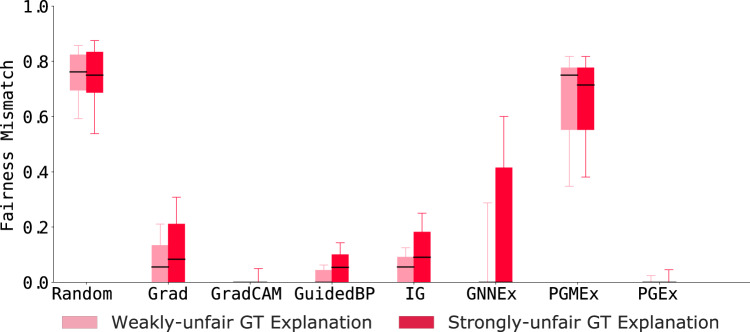

Examining fair vs. unfair ground-truth explanations

To measure the fairness of predicted explanations, we train GNN models on SG-Unfair, which generates graphs with controllable fairness properties. Next, we compute the average GECF and GEGF values for predicted explanations from eight GNN explainers. The fairness results in Fig. 6 show that GNN explainers do not preserve counterfactual fairness and are highly prone to producing unfair explanations. We note that for weakly-unfair ground-truth explanations (light red in Fig. 6), explanations Mp should not change as the label-generating process is independent of the protected attribute. Still, we observe high GECF scores for most explanation methods. For strongly-unfair ground-truth explanations, we find that explanations from most GNN explainers fail to capture (i.e., low GECF scores for dark red bars in Fig. 6) the unfairness enforced using the protected attribute and generate similar explanations even when we flip/modify the respective protected attribute. We see that GradCAM and PGEx explanations outperform other GNN explainers in preserving counterfactual explanations for weakly-unfair ground-truth explanations. In contrast, the PGMEx explainer preserves counterfactual fairness better than other explanation methods on strongly-unfair ground truth explanations. Our results highlight the importance of studying fairness in XAI as they can enhance a user’s confidence in the model and assist in detecting and correcting unwanted bias.

Fig. 6.

Counterfactual fairness mismatch scores across eight GNN explainers on SG-Unfair graph dataset with weakly-unfair or strongly-unfair ground-truth (GT) explanations. Results show that explanations produced on graphs with strongly-unfair ground-truth explanations do not preserve fairness and are sensitive to flipping/modifying the protected node feature.

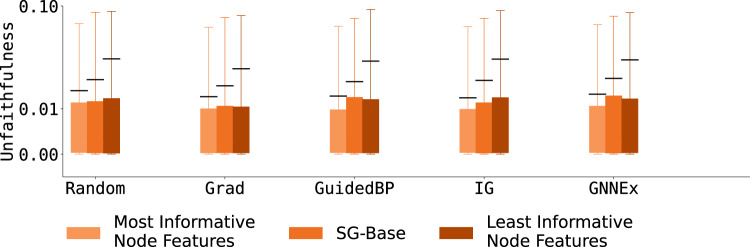

Faithfulness shift with varying degrees of node feature information

Using ShapeGGen’s support for node features and ground-truth explanations on those features, we evaluate explainers that generate explanations for node features. Results for node feature explanations on SG-Base are given in Table 4. In addition, we explore the performance of explainers under varying proportions of informative node features. Informative node features, defined in the ShapeGGen construction (Algorithm 1), are node features correlated with the label of a given node, as opposed to redundant features, which are sampled randomly from a Gaussian distribution. Figure 7 shows the results of experiments on three datasets, SG-MoreInform, SG-Base, and SG-LessInform. All datasets have similar graph topology, but SG-MoreInform has a higher proportion of informative features while SG-LessInform has a lower proportion of these informative features. SG-Base is used as a baseline with a proportion of informative features greater than SG-LessInform but less than SG-MoreInform. There are minimal differences between explainers’ faithfulness across datasets, however, unfaithfulness tends to increase with fewer informative node features. As seen in Table 4, the Gradient explainer shows the best faithfulness score across all datasets for node feature explanation. Still, this faithfulness is relatively weak, only 0.001 better than random explanation. These results show that the faithfulness of node feature explanations worsens under sparse node feature signals.

Table 4.

Evaluation of GNN explainers on SG-Base graph dataset based on node feature explanation masks .

| Method | GEA (↑) | GEF (↓) | GES (↓) | GECF (↓) | GEGF (↓) |

|---|---|---|---|---|---|

| Random | 0.281 ± 0.003 | 0.016 ± 0.001 | 0.997 ± 0.001 | 0.810 ± 0.005 | 0.023 ± 0.002 |

| Grad | 0.306 ± 0.002 | 0.015 ± 0.001 | 0.925 ± 0.003 | 0.259 ± 0.006 | 0.027 ± 0.003 |

| GuidedBP | 0.240 ± 0.003 | 0.016 ± 0.001 | 0.899 ± 0.004 | 0.275 ± 0.006 | 0.025 ± 0.002 |

| IG | 0.278 ± 0.003 | 0.016 ± 0.001 | 0.917 ± 0.004 | 0.119 ± 0.004 | 0.022 ± 0.002 |

| GNNExplainer | 0.281 ± 0.003 | 0.017 ± 0.001 | 0.999 ± 0.001 | 0.826 ± 0.005 | 0.023 ± 0.003 |

Arrows (↑/↓) indicate the direction of better performance. All GNN explainers produce highly faithful node feature explanations. However, the stability of these methods on node features is more similar to random explanations than is observed for node explanations in Table 1 and Table 2. Note that of the explainers tested in this study, only the above five methods produce node feature explanations.

Fig. 7.

Unfaithfulness scores across five GNN explainers that produce node feature explanations. Every GNN explainer is evaluated on three datasets whose network topology is equivalent to SG-Base and by varying the ratio between informative and redundant node features: most informative node features, control node features, and least informative node features. Results show that across all explainers, unfaithfulness decreases as the proportion of informative to redundant features increases, with explainers trained on the graph with the most informative node features having consistently lower unfaithfulness scores than explainers trained on graphs with the least informative node features.

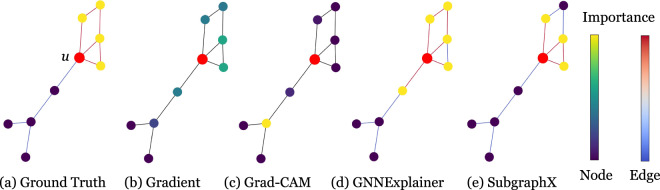

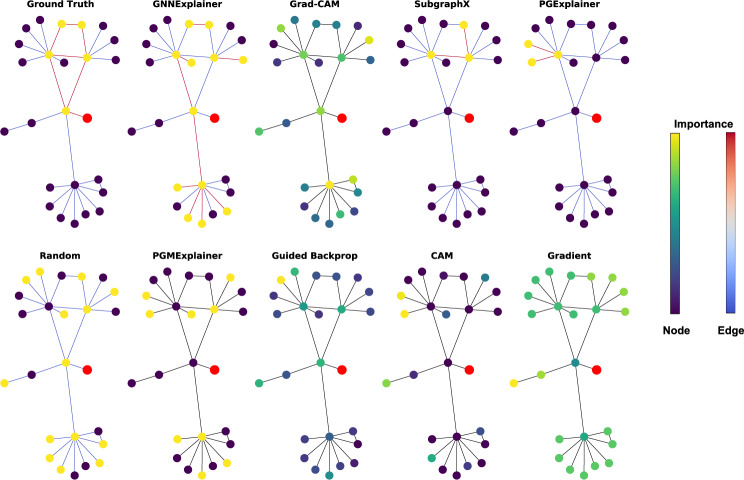

Visualization results

GraphXAI provides functions that visualize explanations produced by GNN explainability methods. Users can compare both node- and graph-level explanations. In addition, function implementations for visualization are parameterized, allowing users to change colors and weight interpretation. Functions are compatible with matplotlib37 and networkx38. Visualizations are generated by graphxai.Explanation.visualize_node for node-level explanations and graphxai.Explanation.visualize_graph functions for graph-level explanations. In Fig. 8, we show the output explanation from four different GNN explainers as produced by our visualization function. Figure 9 shows example outputs from multiple explainers in the GraphXAI package on a ShapeGGen -generated dataset.

Fig. 8.

Visualization of four explainers from the G-XAI Bench library on the BA-Shapes dataset. The visualization is for explaining the prediction of node u. We show the L + 1-hop around node u, where L is the number of layers of the GNN model predicting on the dataset. Two color bars indicate the intensity of attribution scores for the node and edge explanations. Note that edge importance is not defined for every method, so edges are set to black to indicate that the method does not provide edge scores. Visualization tools are a native part of the GraphXAI package, including user-friendly functions graphxai.Explanation.visualize_node and graphxai.Explanation.visualize_graph to visualize GNN explanations. The visualization tools in GraphXAI allow users to compare the explanations of different GNN explainers, such as gradient-based methods (Gradient and Grad-CAM) and perturbation-based methods (GNNExplainer and SubgraphX).

Fig. 9.

Example of a particularly challenging example in a ShapeGGen dataset. All explanation methods that output node-wise importance scores are shown, including the ground-truth explanation at the top of the figure. Importance and edge scores are highlighted by relative value across each explanation method, as shown by the scales at right in the figure. The central node, i.e., the node being classified in this example, is shown in red on each subgraph. Visualizations are generated by graphxai.Explanation.visualize_node, a function native to the graphxai package. Some explainers can capture portions of the ground-truth explanation, such as SubgraphX and GNNExplainer, but others attribute no importance to the ground-truth shape, such as CAM and Gradient.

Discussion

GraphXAI provides a general-purpose framework to evaluate GNN explanations produced by state-of-the-art GNN explanation methods. GraphXAI provides data loaders, data processing functions, visualizers, real-world graph datasets with ground-truth explanations, and evaluation metrics to benchmark the quality of GNN explanations. GraphXAI introduces a novel and flexible synthetic dataset generator called ShapeGGen to automatically generate benchmark datasets and corresponding ground truth explanations robust against known pitfalls of GNN explainability methods. Our experimental results show that existing GNN explainers perform well on graphs with homophilic ground-truth explanations but perform considerably worse on heterophilic and attributed graphs. Across multiple graph datasets and types of downstream prediction tasks, we show that existing GNN explanation methods fail on graphs with larger ground-truth explanations and cannot generate explanations that preserve the fairness properties of the underlying GNN model. In addition, GNN explainers tend to underperform on sparse node feature signals compared to more densely informative node features. These findings indicate the need for methodological innovation and a thorough analysis of future GNN explainability methods across performance dimensions.

GraphXAI provides a flexible framework for evaluating GNN explanation methods and promotes reproducible and transparent research. We maintain GraphXAI as a centralized library for evaluating GNN explanation methods and plan to add newer datasets, explanation methods, diverse evaluation metrics, and visualization features to our existing framework. In the current version of GraphXAI, we mostly employ real-world molecular chemistry datasets as the need for model understanding is motivated by experimental evaluation of model predictions in the laboratory, and it includes a wide variety of graph sizes (ranging from 1,768 to 12,000 instances in the dataset), node feature dimensions (ranging from 13 to 27 dimensions), and class imbalance ratios. In addition to the scale-free ShapeGGen dataset generator, we will include other random graph model generators in the next GraphXAI release to support benchmarking of GNN explainability methods on other graph types. Our evaluation metrics can be also extended to explanations from self-explaining GNNs, e.g., GraphMASK12 identifies edges at each layer of the GNN during training that can be ignored without affecting the output model predictions. In general, self-explaining GNNs also return a set of edge masks as an output explanation for a GNN prediction that can be converted to edge importance scores for computing GraphXAI metrics. We anticipate that GraphXAI can help algorithm developers and practitioners in graph representation learning develop and evaluate principled explainability techniques.

Methods

ShapeGGen is a key component of GraphXAI and serves as a synthetic dataset generator of XAI-ready graph datasets. It is founded in graph theory and designed to address the pitfalls (see Introduction) of existing graph datasets in the broad area of explainable AI. As such, ShapeGGen can facilitate the development, analysis, and evaluation of GNN explainability methods (see Results). We proceed with the description of ShapeGGen data generator.

Notation

Graphs

Let denote an undirected graph comprising of a set of nodes and a set of edges . Let denote the set of node feature vectors for all nodes in , where is an d-dimensional vector which captures the attribute values of a node v and denotes the number of nodes in the graph. Let be the graph adjacency matrix where element Auv = 1 if there exists an edge between nodes u and v and Auv = 0 otherwise. We use to denote the set of immediate neighbors of node u, . Finally, the function is defined as and outputs the degree of a node .

Graph neural networks

Most GNNs can be formulated as message passing networks39 using three operators: Msg, Agg, and Upd. In a L-layer GNN, these operators are recursively applied on , specifying how neural messages (i.e. embeddings) are exchanged between nodes, aggregated, and transformed to arrive at node representations in the last layer of transformations. Formally, a message between a pair of nodes (u, v) in layer l is defined as a function of hidden representations of nodes and from the previous layer: . In Agg, messages from all nodes are aggregated as: . In Upd, the aggregated message is combined with to produce u’s representation for layer l as . Final node representation is the output of the last layer. Lastly, let f denote a downstream GNN classification model that maps the node representation zu to a softmax prediction vector , where C is the total number of labels.

GNN explainability methods

Given the prediction f(u) for node u made by a GNN model, a GNN explainer outputs an explanation mask Mp that provides an explanation of f(u). These explanations can be given with respect to node attributes , nodes , or edges , depending on specific GNN explainer, such as GNNExplainer14, PGExplainer10, and SubgraphX31. For all explanation methods, we use a graph masking function MASK that outputs a new graph by performing element-wise multiplication operation between the masks (Ma, Mn, Me) and their respective attributes in the original graph , e.g. A′ = A ⊙ Me. Finally, we denote the ground-truth explanation mask as Mg that is used to evaluate the performance of GNN explainers.

ShapeGGen dataset generator

We propose a novel and flexible synthetic dataset generator called ShapeGGen that can automatically generate a variety of benchmark datasets (e.g., varying graph sizes, degree distributions, homophilic vs. heterophilic graphs) accompanied by ground-truth explanations. Furthermore, the flexibility to generate diverse synthetic datasets and corresponding ground-truth explanations allows us to mimic the data generated by various real-world applications. ShapeGGen is a generator of XAI-ready graph datasets supported by graph theory and is particularly suitable for benchmarking GNN explainers and studying their limitations.

Flexible parameterization of ShapeGGen

ShapeGGen has tunable parameters for data generation. By varying these parameters, ShapeGGen can generate diverse types of graphs, including graphs with varying degrees of class imbalance and graph sizes. Formally, a graph is generated as: = ShapeGGen , where:

S is the motif, defined as a subgraph, to be planted within the graph.

Ns denotes the number of subgraphs used in the initial graph generation process.

p represents the connection probability between two subgraphs and controls the average shortest path length for all possible pairs of nodes. Ideally, a smaller p value for larger Ns is preferred to avoid low average path length and, therefore, the poor performance of GNNs.

ns is the expected size of each subgraph in the ShapeGGen generation procedure. For a fixed S shape, large ns values produce graphs with long-tailed degree distributions. Note that the expected total number of nodes in the generated graph is N × ns.

K is the number of distinct classes defined in the graph downstream task.

nf represents the number of dimensions for node features in the generated graph.

ni is the number of informative node features. These are features correlated to the node labels instead of randomly generated non-informative features. The indices for the informative features define the ground-truth explanation mask for node features in the final ShapeGGen instance. In general, larger ni results in an easier classification and explanation task, as it increases the node feature-level ground-truth explanation size.

sf is defined as the class separation factor that represents the strength of discrimination of class labels between features for each node. Higher sf corresponds to a stronger signal in the node features, i.e., if a classifier is trained only on the node features, a higher sf value would result in an easier classification task.

cf is the number of clusters per class. A larger cf value increases the difficulty of the classification task with respect to node features.

is the protected feature noise factor that controls the strength of correlation r between the protected features and the node labels. This value corresponds to the probability of “flipping” the protected feature with respect to the node’s label. For instance, results in zero correlation (r = 0) between the protected feature and the label (i.e. complete fairness), results in a positive correlation (r = 1), and results in a negative correlation (r = −1) between the label and the protected feature.

η is the homophily coefficient that controls the strength of homophily or heterophily in the graph. Positive values (η > 0) produce a homophilic graph while negative values (η < 0) produce a heterophilic graph.

L is the number of layers in the GNN predictor corresponding to the GNN’s receptive field. For the purposes of ShapeGGen, L is used to define the size of the GNN’s receptive field and thus the size of the ground-truth explanation generated for each node.

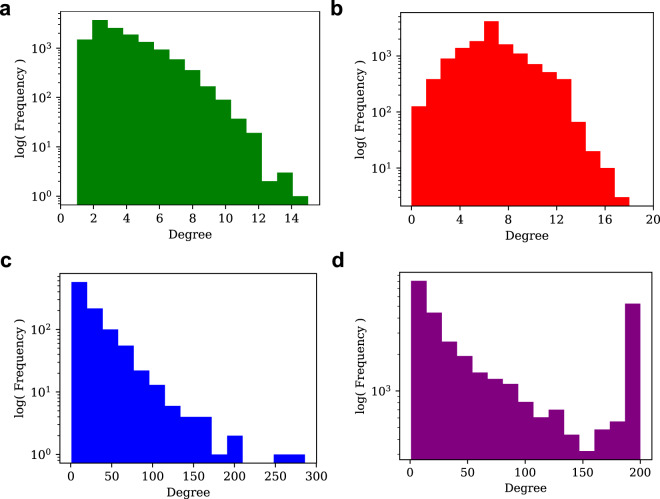

This wide array of parameters for ShapeGGen allows for the generation of graph instances with vastly differing properties Fig. 10.

Fig. 10.

Comparison of degree distribution for (a) ShapeGGen dataset (SG-Base), (b) random Erdös-Rényi graph (p = 5 × 10−4) graph, (c) German Credit dataset, and (d) Credit Defaulter dataset. All plots are shown with a log scale of frequency for the y-axis. SG-Base and both real-world graphs show a power-law degree distribution commonly observed in real-world datasets exhibiting preferential attachment properties. Datasets generated by ShapeGGen are designed to present power-law degree distributions to match real-world dataset topologies, such as those observed in German Credit and Credit Defaulter. The degree distribution of SG-Base is much different than the binomial distribution exhibited in Erdös-Rényi graph (b), an unstructured random graph model.

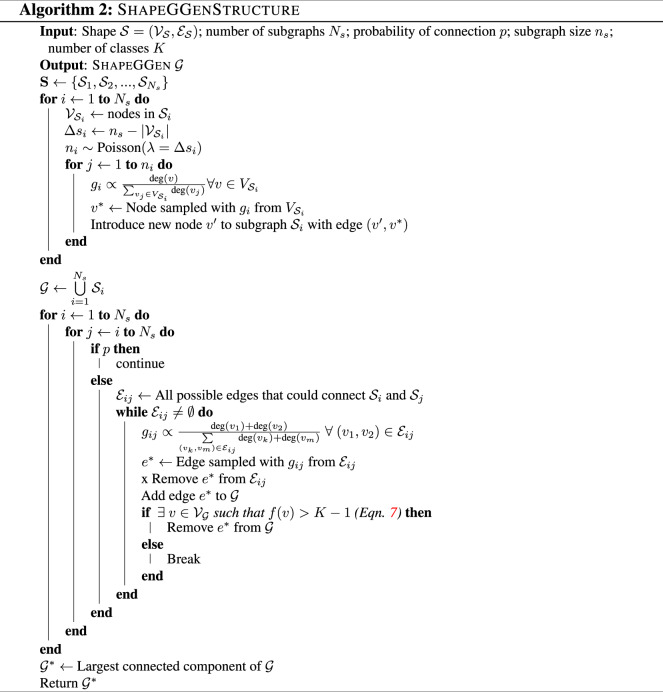

Generating graph structure

Figure 2 summarizes the process to generate a graph . ShapeGGen generates Ns subgraphs that exhibit the preferential attachment property40, which occurs in many real-world graphs. Each subgraph is first given a motif, i.e., a subgraph . A preferential attachment algorithm is then performed on base structure , adding () nodes by creating edges to nodes in . The Poisson distribution is used for determining the sizes of each subgraph used in the generation process, with , the difference between the number of nodes in the motif and the expected subgraph size . After creating a list of randomly-generated subgraphs , edges are created to connect subgraphs, creating the structure of an instance of ShapeGGen. Subgraph connections and local subgraph construction is performed in such a way that each node in the final graph has between 1 and K motifs within its neighborhood, i.e., for any v and . This naturally defines a classification task in the domain of f to {0, 1, …, K−1}. More details on the ShapeGGen structure generation can be found in Algorithm 2.

Generating labels for prediction

A motif defined as a subgraph occurs randomly throughout , with the set . The task on this graph is a motif detection problem, where each node has exactly 1, 2, or K motifs in its 1-hop neighborhood. A motif is considered to be within the neighborhood of a node if any node is also in the neighborhood of v, i.e., if . Therefore, the task that a GNN predictor needs to solve is defined by:

| 7 |

where if and 1 otherwise.

Generating node feature vectors

ShapeGGen uses a latent variable model to create node feature vectors and associate them with network structure. This latent variable model is based on that developed by Guyon41 for the MADELON dataset and implemented in Scikit-Learn’s make_classification function42. The latent variable model creates ni informative features for each node based on the node’s generated label and also creates non-informative features as noise. Having non-informative/redundant features allows us to evaluate GNN explainers, such as GNNExplainer14, that formulate explanations as node feature masks. More detail on node feature generation is given in Algorithm 1.

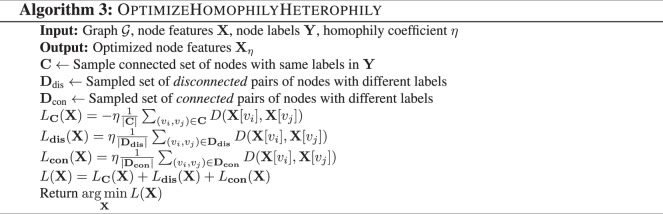

ShapeGGen can generate graphs with both homophilic and heterophilic ground-truth explanations. We optimize between homophily vs. heterophily by taking advantage of redundant node features, i.e., features that do not associate with the generated labels, and manipulate them appropriately based on a user-specified homophily parameter η. The magnitude of the η parameter determines the degree of homophily/heterophily in the generated graph. The algorithm for node features is given in Algorithm 3. While not every node feature in the feature vector is optimized for homophily/heterophily indication, we experimentally verified the cosine similarity between node feature vectors produced by Algorithm 3 reveals a strong homophily/heterophily pattern. Finally, ShapeGGen can generate protected features to enable the study of fairness1. By controlling the value assignment for a selected discrete node feature, ShapeGGen introduces bias between the protected feature and node labels. The biased feature is a proxy for a protected feature, such as gender or race. The procedure for generating node features is outlined in NodeFeatureVectors within Algorithm 1.

Generating ground-truth explanations

In addition to generating ground-truth labels, ShapeGGen has a unique capability to generate unique ground-truth explanations. To accommodate diverse types of GNN explainers, every ground-truth explanation in ShapeGGen contains information on a) the importance of nodes, b) the importance of node features, and c) the importance of edges. This information is represented by three distinct masks defined over enclosing subgraphs of nodes , i.e., the L-hop neighborhood around the node. We denote the enclosing subgraph of node for a given GNN predictor with L layers as: . Let motifs within this enclosing subgraph be: . Using this notation, we define ground-truth explanation masks:

Node explanation mask. Nodes in Sub(v) are assigned a value of 0 or 1 based on whether they are located within a motif or not. For any node , we set if and 0 otherwise. This function is applied to all nodes in the enclosing subgraph of v to produce an importance score for each node, yielding the final mask as: .

Node feature explanation mask. Each feature in v’s feature vector is labeled as 1 if it represents an informative feature and 0 if it is a random feature. This procedure produces a binary node feature mask for node v as: .

Edge explanation mask. To each we assign a value of either 0 or 1 based on whether e connects any two nodes in Sub(v). The masking function is defined as follows:

| 8 |

This function is applied to all edges to produce ground-truth edge explanation as: . The procedure to generate these ground-truth explanations is thoroughly described in Algorithm 1.

Datasets in GraphXAI

We proceed with a detailed description of synthetic and real-world graph data resources included in GraphXAI.

Synthetic graphs

The ShapeGGen generator outlined in the Methods section is a dataset generator that can be used to generate any number of user-specified graphs. In GraphXAI, we provide a collection of pre-generated XAI-ready graphs with diverse properties that are readily available for analysis and experimentation.

Base ShapeGGen graphs (SG-Base)

We provide a base version of ShapeGGen graphs. This instance of ShapeGGen is homophilic, large, and contains house-shaped motifs for ground-truth explanations, formally described as = ShapeGGen ( = ‘house’, Ns = 1200, p = 0.006, ns = 11, K = 2, nf = 11, ni = 4, sf = 0.6, cf = 2, ϕ = 0.5, η = 1, L = 3). The node features in this graph exhibit homophily, a property commonly found in social networks. With over 10,000 nodes, this graph also provides enough examples of ground-truth explanations for rigorous statistical evaluation of explainer performance. The house-shaped motifs follow one of the earliest synthetic graphs used to evaluate GNN explainers14.

Homophilic and heterophilic ShapeGGen graphs

GNN explainers are evaluated on homophilic graphs1,43–45 as low homophily levels in graphs can degrade the performance of GNN predictors46,47. To this end, there are no heterophilic graphs with ground-truth explanations in current GNN XAI literature despite such graphs being abundant in real-world applications46. To demonstrate the flexibility of the ShapeGGen data generator, we use it to generate graphs with: i) homophilic ground-truth explanations (SG-Base) and ii) heterophilic ground-truth explanations (SG-Heterophilic), i.e., = ShapeGGen ( = ‘house’, Ns = 1200, p = 0.006, ns = 11, K = 2, nf = 11, ni = 4, sf = 0.6, cf = 2, ϕ = 0.5, η = −1, L = 3).

Weakly and strongly unfair ShapeGGen graphs

We utilize the ShapeGGen data generator to generate graphs with controllable fairness properties, i.e., leverage ShapeGGen to generate synthetic graphs with real-world fairness properties where we can enforce unfairness w.r.t. a given protected attribute. We use the ShapeGGen to generate graphs with: i) weakly-unfair ground-truth explanations (SG-Base) and ii) strongly-unfair ground-truth explanations (SG-Unfair) = ShapeGGen ( = ‘house’, Ns = 1200, p = 0.006, ns = 11, K = 2, nf = 11, ni = 4, sf = 0.6, cf = 2, ϕ = 0.75, η = 1, L = 3). Here, for the first time, we generated unfair synthetic graphs that can serve as pseudo-ground-truth for quantifying whether current GNN explainers preserve counterfactual fairness.

Small and large ShapeGGen explanations

We explore the faithfulness of explanations w.r.t. different ground-truth explanation sizes. This is important because a reliable explanation should identify important features independent of the explanation size. However, current data resources only provide graphs with smaller-size ground-truth explanations. Here, we use the ShapeGGen data generator to generate graphs having (i) smaller ground-truth explanations size (SG-SmallEx) = ShapeGGen ( = ‘triangle’, Ns = 1200, p = 0.006, ns = 12, K = 2, nf = 11, ni = 4, sf = 0.5, cf = 2, ϕ = 0.5, η = 1, L = 3) and (ii) larger ground-truth explanations size using house motifs.

Low and high proportions of salient features

We examine the faithfulness of node feature masks produced by explainers under different levels of sparsity for class-associated signal in the node features. The feature generation procedure in ShapeGGen specifies node feature parameters ni, the number of informative features that are generated to correlate with node labels, and nf, number of total node features. The remaining nf−ni features are redundant features that are randomly distributed and have no correlation to the node label. Using an equivalent graph topology to SG-Base, we change the relative proportion of node features which are attributed to the label by adjusting ni and nf. We create SG-MoreInform, a ShapeGGen instance with a high proportion of ground-truth features to total features (8:11). Likewise, we create SG-LessInform, a ShapeGGen instance with a low proportion of ground-truth features to total features (4:21). This proportion in SG-Base falls in the middle of SG-MoreInform and SG-LessInform instances with a proportion of ground-truth to total features of 4:11. Formally, we define SG-MoreInform as = ShapeGGen ( = ‘house’, Ns = 1200, p = 0.006, ns = 11, K = 2, nf = 11, ni = 8, sf = 0.6, cf = 2, ϕ = 0.5, η = 1, L = 3) and SG-LessInform as = ShapeGGen ( = ‘house’, Ns = 1200, p = 0.006, ns = 11, K = 2, nf = 21, ni = 4, sf = 0.6, cf = 2, ϕ = 0.5, η = 1, L = 3).

BA-Shapes

In addition to ShapeGGen, we provide a version of BA-Shapes14, a synthetic graph data generator for node classification tasks. We start with a base Barabasi-Albert (BA)48 graph using N nodes and a set of five-node “house”-structured motifs K randomly attached to nodes of the base graph. The final graph is perturbed by adding random edges. The nodes in the output graph are categorized into two classes corresponding to whether the node is in a house (1) or not in a house (0).

Real-world graphs

We describe the real-world graph datasets with and without ground-truth explanations provided in GraphXAI. To this end, we provide data resources from crime forecasting, financial lending, and molecular chemistry and biology1,2,35. We consider these datasets as they are used to train GNNs for generating predictions in high-stakes downstream applications. In particular, we include chemical and biological datasets because they are used to identify whether an input graph (i.e., a molecular graph) contains a specific pattern (i.e., a chemical group with a specific property in the molecule). Knowledge about such patterns, which determine molecular properties, represents ground-truth explanations2. We provide a statistical description of real-world graphs in Tables 6–8. Below, we discuss the details of each of the real-world datasets that we employ:

Table 7.

Statistics of real-world graph classification datasets in GraphXAI with ground-truth (GT) explanations.

| Dataset | MUTAG | Benzene | Fluoride-Carbonyl | Alkane-Carbonyl |

|---|---|---|---|---|

| Graphs | 1,768 | 12,000 | 8,671 | 4,326 |

| Average nodes | 29.15 ± 0.35 | 20.58 ± 0.04 | 21.36 ± 0.04 | 21.13 ± 0.05 |

| Average edges | 60.83 ± 0.75 | 43.65 ± 0.08 | 45.37 ± 0.09 | 44.95 ± 0.12 |

| Node features | 14 | 14 | 14 | 14 |

| GT Explanation | NH2, NO2 chemical group | Benzene Ring | F− and C = O chemical group | Alkane and C = O chemical group |

Table 8.

Statistics of real-world node classification datasets in GraphXAI without ground-truth (GT) explanations.

| Dataset | German credit graph | Recidivism graph | Credit defaulter graph |

|---|---|---|---|

| Nodes | 1,000 | 18,876 | 30,000 |

| Edges | 22,242 | 321,308 | 1,436,858 |

| Node features | 27 | 18 | 13 |

| Average node degree | 44.48 ± 26.51 | 34.04 ± 46.65 | 95.79 ± 85.88 |

MUTAG

The MUTAG35 dataset contains 1,768 graph molecules labeled into two different classes according to their mutagenic properties, i.e., effect on the Gram-negative bacterium S. Typhimuriuma. Kazius et al.35 identifies several toxicophores - motifs in the molecular graph - that correlate with mutagenicity. The dataset is trimmed from its original 4,337 graphs to 1,768, based on those whose labels directly correspond to the presence or absence of our chosen toxicophores: NH2, NO2, aliphatic halide, nitroso, and azo-type (terminology, as referred to in Kazius et al.35).

Alkane carbonyl

The Alkane Carbonyl2 dataset contains 1,125 molecular graphs labeled into two classes where a positive sample indicates a molecule that contains an unbranched alkane and a carbonyl (C = O) functional group. The ground-truth explanations include any combinations of alkane and carbonyl functional groups within a given molecule.

Benzene

The Benzene2 dataset contains 12,000 molecular graphs extracted from the ZINC1549 database and labeled into two classes where the task is to identify whether a given molecule has a benzene ring or not. Naturally, the ground truth explanations are the nodes (atoms) comprising the benzene rings, and in the case of multiple benzenes, each of these benzene rings forms an explanation.

Fluoride carbonyl

The Fluoride Carbonyl2 dataset contains 8,671 molecular graphs labeled into two classes where a positive sample indicates a molecule that contains a fluoride (F−) and a carbonyl (C = O) functional group. The ground-truth explanations consist of combinations of fluoride atoms and carbonyl functional groups within a given molecule.

German credit

The German Credit1 graph dataset contains 1,000 nodes representing clients in a German bank connected based on the similarity of their credit accounts. The dataset includes demographic and financial features like gender, residence, age, marital status, loan amount, credit history, and loan duration. The goal is to associate clients with credit risk.

Recidivism

The Recidivism1 dataset includes samples of bail outcomes collected from multiple state courts in the USA between 1990–2009. It contains past criminal records, demographic attributes, and other demographic details of 18,876 defendants (nodes) who got released on bail at the U.S. state courts. Defendants are connected based on the similarity of past criminal records and demographics, and the goal is to classify defendants into bail vs. no bail.

Credit defaulter

The Credit defaulter1 graph has 30,000 nodes representing individuals that we connected based on the similarity of their spending and payment patterns. The dataset contains applicant features like education, credit history, age, and features derived from their spending and payment patterns. The task is to predict whether an applicant will default on an upcoming credit card payment.

Acknowledgements

C.A., O.Q., and M.Z. gratefully acknowledge the support by NSF under Nos. IIS-2030459 and IIS-2033384, US Air Force Contract No. FA8702-15-D-0001, and awards from Harvard Data Science Initiative, Amazon Research, Bayer Early Excellence in Science, AstraZeneca Research, and Roche Alliance with Distinguished Scientists. H.L. was supported in part by NSF under Nos IIS-2008461 and IIS-2040989, and research awards from Google, JP Morgan, Amazon, Bayer, Harvard Data Science Initiative, and D3 Institute at Harvard. O.Q. was supported, in part, by Harvard Summer Institute in Biomedical Informatics. Any opinions, findings, conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funders.

Author contributions

C.A., O.Q., H.L. and M.Z. contributed new analytic tools and wrote the manuscript. C.A. and O.Q. retrieved, processed, and harmonized datasets. C.A. and O.Q. implemented the synthetic dataset generator and performed the analyses for technical validation of the new resource. M.Z. conceived the study.

Data availability

GraphXAI data resource28 is hosted on Harvard Dataverse under a persistent identifier 10.7910/DVN/KULOS8. We have deposited different a number of ShapeGGen -generated datasets and real-world graphs at this repository.

Code availability

Project website for GraphXAI is at https://zitniklab.hms.harvard.edu/projects/GraphXAI. The code to reproduce results, documentation, and tutorials are available in GraphXAI ‘s Github repository at https://github.com/mims-harvard/GraphXAI. The repository contains Python scripts to generate and evaluate explanations using performance metrics and also visualize explanationa. In addition, the repository contains information and Python scripts to build new versions of GraphXAI as the underlying primary resources get updated and new data become available.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Chirag Agarwal, Owen Queen.

References

- 1.Agarwal, C., Lakkaraju, H. & Zitnik, M. Towards a unified framework for fair and stable graph representation learning. In UAI (2021).

- 2.Sanchez-Lengeling et al. Evaluating attribution for graph neural networks. NeurIPS (2020).

- 3.Giunchiglia, V., Shukla, C. V., Gonzalez, G. & Agarwal, C. Towards training GNNs using explanation directed message passing. In The First Learning on Graphs Conference (2022).

- 4.Morselli Gysi, D. et al. Network medicine framework for identifying drug-repurposing opportunities for covid-19. Proceedings of the National Academy of Sciences (2021). [DOI] [PMC free article] [PubMed]

- 5.Zitnik, M., Agrawal, M. & Leskovec, J. Modeling polypharmacy side effects with graph convolutional networks. In Bioinformatics (2018). [DOI] [PMC free article] [PubMed]

- 6.Baldassarre, F. & Azizpour, H. Explainability techniques for graph convolutional networks. In ICML Workshop on LRGR (2019).

- 7.Faber, L. et al. Contrastive graph neural network explanation. In ICML Workshop on Graph Representation Learning and Beyond (2020).

- 8.Huang, Q., Yamada, M., Tian, Y., Singh, D. & Chang, Y. Graphlime: Local interpretable model explanations for graph neural networks. IEEE Transactions on Knowledge and Data Engineering (2022).

- 9.Lucic, A., Ter Hoeve, M. A., Tolomei, G., De Rijke, M. & Silvestri, F. Cf-gnnexplainer: Counterfactual explanations for graph neural networks. In AISTATS (PMLR, 2022).

- 10.Luo, D. et al. Parameterized explainer for graph neural network. In NeurIPS (2020).

- 11.Pope, P. E., Kolouri, S., Rostami, M., Martin, C. E. & Hoffmann, H. Explainability methods for graph convolutional neural networks. In CVPR (2019).

- 12.Schlichtkrull, M. S., De Cao, N. & Titov, I. Interpreting graph neural networks for nlp with differentiable edge masking. In ICLR (2021).

- 13.Vu, M. N. & Thai, M. T. PGM-Explainer: probabilistic graphical model explanations for graph neural networks. In NeurIPS (2020).

- 14.Ying, R., Bourgeois, D., You, J., Zitnik, M. & Leskovec, J. GNNExplainer: generating explanations for graph neural networks. In NeurIPS (2019). [PMC free article] [PubMed]

- 15.Agarwal, C. et al. Probing GNN explainers: A rigorous theoretical and empirical analysis of GNN explanation methods. In AISTATS (2022).

- 16.Faber, L., K. Moghaddam, A. & Wattenhofer, R. When comparing to ground truth is wrong: On evaluating GNN explanation methods. In KDD (2021).

- 17.Hu, W. et al. Open Graph Benchmark: datasets for machine learning on graphs. In NeurIPS (2020).

- 18.Baruah, T. et al. GNNMark: a benchmark suite to characterize graph neural network training on gpus. In ISPASS (2021).

- 19.Du, Y. et al. GraphGT: machine learning datasets for graph generation and transformation. In NeurIPS Datasets and Benchmarks (2021).

- 20.Freitas, S. et al. A large-scale database for graph representation learning. In NeurIPS Datasets and Benchmarks (2021).

- 21.Zheng, Q. et al. Graph robustness benchmark: Benchmarking the adversarial robustness of graph machine learning. In NeurIPS Datasets and Benchmarks (2021).

- 22.Huang, K. et al. Therapeutics Data Commons: Machine learning datasets and tasks for drug discovery and development. In NeurIPS Datasets and Benchmarks (2021).

- 23.Huang K, et al. Artificial intelligence foundation for therapeutic science. Nature Chemical Biology. 2022;18:1033–1036. doi: 10.1038/s41589-022-01131-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Wang, Z., Yin, H. & Song, Y. Benchmarking the combinatorial generalizability of complex query answering on knowledge graphs. In NeurIPS Datasets and Benchmarks (2021).

- 25.Liu, M. et al. DIG: A turnkey library for diving into graph deep learning research. JMLR (2021).

- 26.Fey, M. & Lenssen, J. E. Fast graph representation learning with PyTorch Geometric. ICLR 2019 (RLGM Workshop) (2019).

- 27.Wang, M. et al. Deep Graph Library: Towards efficient and scalable deep learning on graphs. In ICLR workshop on representation learning on graphs and manifolds (2019).

- 28.Agarwal C, Queen O, Lakkaraju H, Zitnik M. 2022. Evaluating explainability for graph neural networks. Harvard Dataverse. [DOI] [PMC free article] [PubMed]

- 29.Simonyan, K. et al. Deep inside convolutional networks: Visualising image classification models and saliency maps. In ICLR (2014).

- 30.Sundararajan, M., Taly, A. & Yan, Q. Axiomatic attribution for deep networks. In ICML (2017).

- 31.Yuan, H., Yu, H., Wang, J., Li, K. & Ji, S. On explainability of graph neural networks via subgraph explorations. In ICML (2021).

- 32.Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? In ICLR (2019).

- 33.Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In ICLR (2017).

- 34.Taha, A. A. & Hanbury, A. Metrics for evaluating 3d medical image segmentation: analysis, selection, and tool. In BMC Medical Imaging (2015). [DOI] [PMC free article] [PubMed]

- 35.Kazius, J. et al. Derivation and validation of toxicophores for mutagenicity prediction. In Journal of Medicinal Chemistry (2005). [DOI] [PubMed]

- 36.Yuan, H., Yu, H., Gui, S. & Ji, S. Explainability in graph neural networks: A taxonomic survey. IEEE Transactions on Pattern Analysis and Machine Intelligence (2022). [DOI] [PubMed]

- 37.Hunter, J. D. Matplotlib: A 2d graphics environment. Computing in Science & Engineering (2007).

- 38.Hagberg, A. A., Schult, D. A. & Swart, P. J. Exploring network structure, dynamics, and function using networkx. In Proceedings of the 7th Python in Science Conference (2008).

- 39.Wu, Z. et al. A comprehensive survey on graph neural networks. In IEEE Transactions on Neural Networks and Learning Systems (2020). [DOI] [PubMed]

- 40.Barabási, A.-L. & Albert, R. Emergence of scaling in random networks. In Science (1999). [DOI] [PubMed]

- 41.Guyon, I. Design of experiments of the nips 2003 variable selection benchmark. In NIPS 2003 workshop on feature extraction and feature selection (2003).

- 42.Pedregosa, F. et al. Scikit-learn: Machine learning in Python. In JMLR (2011).

- 43.McCallum, A. K. et al. Automating the construction of internet portals with machine learning. In Information Retrieval (2000).

- 44.Sen, P. et al. Collective classification in network data. In AI magazine (2008).

- 45.Wang, K. et al. Microsoft academic graph: When experts are not enough. In Quantitative Science Studies (2020).

- 46.Zhu, J. et al. Beyond homophily in graph neural networks: Current limitations and effective designs. In NeurIPS (2020).

- 47.Jin, W. et al. Node similarity preserving graph convolutional networks. In WSDM (2021).

- 48.Albert, R. & Barabási, A.-L. Statistical mechanics of complex networks. Reviews of modern physics (2002).

- 49.Sterling, T. & Irwin, J. J. Zinc 15–ligand discovery for everyone. Journal of Chemical Information and Modeling (2015). [DOI] [PMC free article] [PubMed]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Citations

- Agarwal C, Queen O, Lakkaraju H, Zitnik M. 2022. Evaluating explainability for graph neural networks. Harvard Dataverse. [DOI] [PMC free article] [PubMed]

Data Availability Statement

GraphXAI data resource28 is hosted on Harvard Dataverse under a persistent identifier 10.7910/DVN/KULOS8. We have deposited different a number of ShapeGGen -generated datasets and real-world graphs at this repository.

Project website for GraphXAI is at https://zitniklab.hms.harvard.edu/projects/GraphXAI. The code to reproduce results, documentation, and tutorials are available in GraphXAI ‘s Github repository at https://github.com/mims-harvard/GraphXAI. The repository contains Python scripts to generate and evaluate explanations using performance metrics and also visualize explanationa. In addition, the repository contains information and Python scripts to build new versions of GraphXAI as the underlying primary resources get updated and new data become available.