Abstract

Facial expression and body posture recognition have protracted developmental trajectories. Interactions between face and body perception, such as the influence of body posture on facial expression perception, also change with development. While the brain regions underpinning face and body processing are well‐defined, little is known about how white‐matter tracts linking these regions relate to perceptual development. Here, we obtained complementary diffusion magnetic resonance imaging (MRI) measures (fractional anisotropy [FA] and spherical mean [Ṧ μ]) and a quantitative MRI myelin‐proxy measure (R1) within white‐matter tracts of face‐ and body‐selective networks in children and adolescents, and related these measures to perceptual development. In tracts linking occipital and fusiform face areas, facial expression perception was predicted by age‐related maturation, as measured by Ṧ μ and R1, as well as by age‐independent individual differences in microstructure, captured by FA and R1. Microstructural measures of tracts linking the posterior superior temporal sulcus body region with the anterior temporal lobe (ATL) were related to the influence of body posture on facial expression perception, supporting ATL as a site of face and body network convergence. Overall, our results highlight age‐dependent and age‐independent constraints that white‐matter microstructure places on perceptual abilities during development, and the importance of complementary microstructural measures in linking brain structure and behaviour.

Keywords: body posture, development, facial expression, fusiform face area, tractography, white matter

Measures of white matter microstructure in tracts linking face‐ and body‐specific regions in the brain were obtained in children and adolescents, and related to their perceptual abilities. Through development, children become better at discriminating facial expressions and body postures, and their improved perceptual abilities are mirrored in changes to white matter microstructure. The results suggest that white matter microstructure poses both age‐dependent and age‐independent constraints on perception.

1. INTRODUCTION

The ability to recognize other people's facial expressions, a critical skill for everyday social interactions, has a protracted development that extends well into adolescence (Calder, 2011; Gao & Maurer, 2009). While functional and structural changes (Gomez et al., 2017; Natu et al., 2016) in face‐selective brain regions have been linked to developmental improvements in the perception of facial characteristics such as face identity, our understanding of how brain development relates to facial expression perception remains limited. Expression recognition is subserved by a network of regions in lateral occipitotemporal and ventral temporal cortex (Duchaine & Yovel, 2015), but the developmental changes in white‐matter connectivity between these regions and their relation to perceptual development are unknown. Moreover, facial expression perception has to be considered within the context of other cues that are used to recognise emotions. Most prominently, another person's body posture provides a context that exerts a powerful influence on how facial expressions are perceived (Aviezer et al., 2008; Meeren et al., 2005; Teufel et al., 2019), suggesting a possible interaction between face and body networks. In the current study, we therefore investigated the development of perception of facial expressions, body postures, and the influence of body context on expression perception through childhood and adolescence. Additionally, we investigated microstructural changes within white matter connections along face and body processing pathways in the brain that may underpin this perceptual development.

Between infancy and adolescence, humans become increasingly accurate at perceiving the subtle changes in facial expressions that are associated with different emotions (Herba et al., 2006; Thomas et al., 2007). The development of emotion recognition from body posture has been studied far less, but the available evidence suggests a developmental trajectory that is similarly protracted to that of facial expression perception (Heck et al., 2018; Ross et al., 2012; Vieillard & Guidetti, 2009). At the cortical level, faces and bodies are thought to be processed along largely distinct cortical pathways in lateral occipitotemporal and ventral temporal cortex (VTC). “Core” face‐selective regions have been shown to include the occipital face area (OFA), fusiform face area (FFA), posterior superior temporal sulcus (pSTS‐face), and anterior temporal lobe (ATL) (Duchaine & Yovel, 2015; Haxby et al., 2000). Similarly, and in close proximity to face‐specific regions, a set of body‐selective regions has also been described, including the extrastriate body area (EBA), fusiform body area (FBA), and pSTS (pSTS‐body) (de Gelder, 2006; Peelen & Downing, 2005).

A limited number of studies have shown that the selectivity, sensitivity, and/or size of face‐selective regions increase during development and are related to facial identity perception (Golarai et al., 2010; Gomez et al., 2017; Natu et al., 2019). For instance, the activity of face‐selective regions as measured by fMRI was found to be more strongly modulated by subtle differences in face identity in adults compared to 5‐ to 12‐year‐old children (Natu et al., 2016), and these differences in neural sensitivity were related to perceptual performance in discriminating facial identities, even when controlling for age. These findings are consistent with the idea that within‐region developmental changes, such as a sharpening of the tuning functions of face‐selective populations of neurons, might be directly related to perceptual improvements across development (Natu et al., 2016). While it is currently unknown whether similar developmental changes within face‐selective areas underpin improved perceptual ability for other face characteristics, including facial expression, it seems plausible that they do.

A complementary process that likely shapes the development of facial identity and facial expression perception is change in structural connectivity between cortical regions. The core face network is underpinned by direct white matter connections between face‐selective regions along the occipital and ventral temporal cortex (Gschwind et al., 2012; Pyles et al., 2013; Wang et al., 2020). In adults, there is some evidence linking the structural properties of white matter at the grey‐white matter boundary close to fusiform‐face regions to identity recognition ability from faces (Gomez et al., 2015; Song, Zhu, et al., 2015). However, the extent to which the microstructure of tracts between face‐selective regions relates to perceptual ability, in particular facial expression perception, and how developing microstructure constrains this ability have not been addressed to date.

Research in adults has demonstrated that the body context within which a face is perceived can have dramatic effects on facial expression perception (Aviezer et al., 2008, 2012; Hassin et al., 2013; Meeren et al., 2005; Teufel et al., 2019), suggesting some interplay between face‐ and body‐selective pathways. For example, observers are more likely to perceive a disgusted face as angry when it is presented in an angry body context. Developmental research suggests that children's facial expression perception is even more strongly influenced by body context than that of adults (Leitzke & Pollak, 2016; Mondloch, 2012; Mondloch et al., 2013; Nelson & Mondloch, 2017; Rajhans et al., 2016). A changing structural connectivity profile between face‐ and body‐selective areas is one potential substrate underpinning these developmental changes in contextual influence on facial expression perception. Work in both adult humans and non‐human primates (fMRI and electrophysiology) suggests that non‐emotional face and body information is processed largely independently in the early visual system, with influences of the body network on face processing emerging later in the face‐selective hierarchy in ATL (Fisher & Freiwald, 2015; Harry et al., 2016). A recent psychophysical study points to the existence of a similar processing hierarchy for the integration of emotion signals from face and body (Teufel et al., 2019). Microstructural properties of connections from face‐ and body‐selective areas to ATL are therefore of particular interest for understanding the development of facial expression perception in the context of an expressive body.

In the current study, using the precision afforded by psychophysical measures, we explored the developmental improvements of facial expression and body posture discrimination in 8‐ to 18‐year‐olds, and their relation to the development of contextual influences of body posture on facial expression perception. Using functionally localised face‐ and body‐selective seed regions for fibre‐tracking in the same individuals, we isolated white matter connections within the face and body networks and their convergence onto candidate regions integrating face and body information, notably ATL. We extracted multiple microstructural measures from these tracts to specifically address the component processes of microstructural change across development and their relation to perception. In particular, we obtained a diffusion magnetic resonance imaging (dMRI) measure sensitive to diffusion within the intracellular (e.g., neuronal/axonal and glial) space, the spherical mean (Ṧ μ); a quantitative MRI measure, the longitudinal relaxation rate R1 as a proxy for myelination; and a more widely used general dMRI measure of “tract integrity,” the fractional anisotropy (FA), believed to reflect density of axonal packing, orientation of axons within a voxel and membrane permeability. Previous evidence suggests that changes to tuning functions within face‐selective areas are linked to the development of face perception. Here, we used our data to test the hypothesis that, complementing the perceptual consequences of functional selectivity, microstructural changes in white matter tracts between areas in the face and body processing streams influence the development of facial expression and body posture perception, as well as the influence of body posture on facial expression perception.

2. METHODS

2.1. Participants

A total of 45 typically developing children (22 females) aged between 8 and 18 years (mean age ± SD = 12.96 ± 3.1 years) were recruited from the local community. The study took place over two visits and was part of a larger study focussing on brain development. Children underwent cognitive testing and a 3 T MRI scan on their first visit, and a 7 T scan on their second visit (n = 44). All children had normal or corrected‐to‐normal vision and were screened to exclude major neurological disorders. All children had IQ scores of 86 or above (mean ± SD = 107 ± 14.26, range = 86–145) (Wechsler Abbreviated Scale of Intelligence II; The Psychological Corporation, 1999). Pubertal stage was determined using parental report on the Pubertal Development Scale (PDS) (Petersen et al., 1988). Age and PDS score were strongly positively correlated (r s = .85, p < .0001); therefore, subsequent analyses adjusted for age only. Primary caregivers provided written informed consent prior to participation, and participants aged over 16 also provided their own written consent. Experimental protocols were approved by Cardiff University School of Psychology Ethics Committee and were in line with the Declaration of Helsinki. Participants were reimbursed with a voucher.

2.2. Psychophysical testing

We focussed on face and body morphs between the emotions of anger and disgust. These emotions were chosen for several reasons. Negative emotions, particularly disgust, are recognised at adult‐like levels later in development than other emotions (Herba et al., 2006), enabling us to quantify perceptual changes across childhood and adolescence. Additionally, previous research has shown a larger, more robust effect of body context with anger and disgust than with other emotions (Aviezer et al., 2008), and we wanted to ensure that we had the sensitivity to detect individual differences in this effect across development. Finally, the emotions of disgust and anger are associated with body postures that are clearly identifiable.

2.3. Stimuli

For our face stimuli, we used images of facial expressions from the Radboud and Karolinska Directed Emotional Faces validated sets of facial expressions (Langner et al., 2010; Lundqvist et al., 1998). Angry and disgusted facial expressions of four male identities were morphed separately for each identity using FantaMorph (FantaMorph Pro, Version 5). Morph levels were spaced in 5% increments, yielding 21 morph levels per identity, from a fully angry to a fully disgusted face (Figure 1).

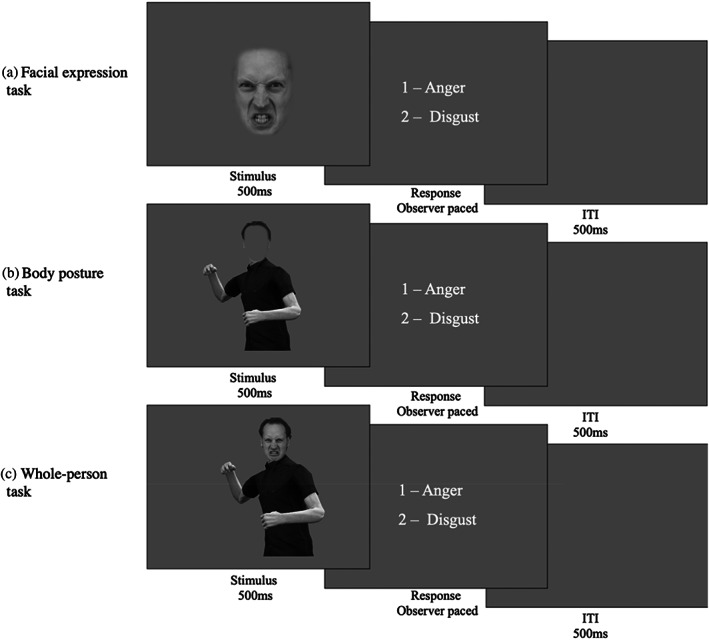

FIGURE 1.

Psychophysical task design. The figure illustrates one trial for each of the three experimental tasks. The facial expression (a) and body posture (b) tasks were used to index the precision of facial expression and body posture representations, respectively. The whole‐person task was used to measure the influence of body posture on facial expression perception. In the facial expression (a) and body posture (b) tasks, isolated faces or bodies were presented and participants were asked to categorize them as angry or disgusted. In the whole‐person task (c), they were asked to base their categorization on the face and ignore the body. On each trial, the stimulus was presented centrally for 500 ms. Following presentation of the stimulus, response options appeared on screen and remained until the observer made a response. The next trial commenced following a 500 ms inter‐trial interval.

In contrast to facial expressions, body postures have to be morphed in 3D rather than in 2D image space. To create these stimuli, an actor dynamically posed emotional body postures while the 3D locations of 22 body joints were recorded using a motion capture suit with motion trackers (Fedorov et al., 2018). The actor's postures were modelled on typical body postures from the literature (e.g., Aviezer et al., 2008). From the actor's dynamically recorded behaviour, we chose four slightly different angry and disgusted body postures to create four identities. These postures were visualised using the Unity 3D game engine (Unity Technologies, San Francisco, version Unity 2018). Each posture was given a different identity by varying body composition and clothing. For each body identity, morphs between anger and disgust were generated via weighted averages of corresponding joint angles of the recorded postures. As with the face morphs, body morphs changed in increments of 5%, resulting in 21 body morph levels per identity, ranging from fully angry to fully disgusted. Each body posture was combined with a facial identity to create a photorealistic “whole‐person” stimulus. For the body posture categorisation task, a mean grey oval was placed centrally over the face to conceal the distinguishing features of the facial expression (Figure 1). For the whole‐person task, facial expression morphs were presented on a fully angry or a fully disgusted body (Figure 1).
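The joint‐angle morphing described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline. It assumes each posture is stored as a hypothetical mapping from joint name to an (x, y, z) triple of Euler angles in degrees, and it uses the same 5% step (21 levels) reported for both faces and bodies. Face morphs in FantaMorph operate on images, so only the weighting scheme carries over to them.

```python
"""Sketch: 21-level anger-to-disgust posture continua from joint angles.

Assumption (hypothetical data layout): a posture is a dict mapping a joint
name to an (x, y, z) tuple of Euler angles in degrees.
"""

N_LEVELS = 21  # 0%, 5%, ..., 100% disgust


def morph_weights(n_levels=N_LEVELS):
    """Weight on the disgusted posture for each level (0.0 = fully angry)."""
    step = 1.0 / (n_levels - 1)  # 0.05 for 21 levels
    return [round(i * step, 4) for i in range(n_levels)]


def morph_posture(angry, disgusted, w):
    """Weighted average of corresponding joint angles.

    w weights the disgusted posture and (1 - w) the angry one. Plain linear
    averaging of Euler angles is only reasonable when the two postures'
    angles are close and away from the +/-180 degree wrap-around.
    """
    if angry.keys() != disgusted.keys():
        raise ValueError("postures must define the same joints")
    return {
        joint: tuple((1 - w) * a + w * d for a, d in zip(angry[joint], disgusted[joint]))
        for joint in angry
    }


def morph_continuum(angry, disgusted):
    """All morph levels, ordered from fully angry to fully disgusted."""
    return [morph_posture(angry, disgusted, w) for w in morph_weights()]
```

For example, with a single hypothetical "elbow" joint at (90, 0, 10) degrees when angry and (30, 20, 10) when disgusted, the 50% morph places the elbow at (60, 10, 10).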

2.4. Stimulus presentation

We indexed the precision of (i) facial expression and (ii) body posture representations, and (iii) the influence of body posture on expression perception, by estimating psychometric functions (PFs) in three separate psychophysical tasks (see Figure 1 for details). Each task consisted of a 1‐Alternative‐Forced‐Choice paradigm, in which participants viewed a single stimulus and were asked to categorize it as anger or disgust. In the Facial Expression and the Body Posture tasks, the stimulus was an isolated face or body morph. In the whole‐person task, face morphs were presented in the context of either fully angry or fully disgusted bodies, and participants were instructed to categorize the facial expression and ignore the body posture. To optimize testing efficiency, the morph level presented on each trial was selected by the Psi method (Kontsevich & Tyler, 1999), a Bayesian adaptive procedure. Stimuli were presented centrally on the screen for 500 ms and subtended approximately 15° (vertical) × 10° (horizontal) of visual angle. Following stimulus presentation, response options appeared and remained on screen until a response was recorded via a button press. A small number of 8‐ to 10‐year‐old participants indicated their response verbally, and the experimenter pressed the button. Following each response, there was a 500 ms inter‐trial interval before the next stimulus appeared.
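The Psi method's trial‐selection loop can be sketched as below. This is a minimal illustration, not the Palamedes implementation the study used. It assumes a cumulative‐Gaussian PF with the guess (0) and lapse (0.03) rates reported in Section 2.5, a Palamedes‐style slope parameter β = 1/σ, and illustrative parameter grids; the grids and the simulated observer are hypothetical. On each trial, the procedure picks the morph level whose two possible responses minimise the expected entropy of the posterior over (α, β), then updates the posterior with the observed response.

```python
import math
import random

# Guess and lapse rates as reported for the PF fits in this study.
GUESS, LAPSE = 0.0, 0.03


def pf(x, alpha, beta):
    """Cumulative-Gaussian PF: P('disgust' | morph level x); beta = 1/sigma."""
    f = 0.5 * (1.0 + math.erf((x - alpha) * beta / math.sqrt(2.0)))
    return GUESS + (1.0 - GUESS - LAPSE) * f


def entropy(p):
    return -sum(v * math.log(v) for v in p if v > 0)


class Psi:
    """Minimal Psi method (Kontsevich & Tyler, 1999) over a parameter grid."""

    def __init__(self, stimuli, alphas, betas):
        self.stimuli = list(stimuli)
        self.params = [(a, b) for a in alphas for b in betas]
        self.post = [1.0 / len(self.params)] * len(self.params)  # uniform prior

    def _updated(self, x, r):
        """Posterior after response r (1 = 'disgust') at x, and P(r | x)."""
        lik = [pf(x, a, b) if r else 1.0 - pf(x, a, b) for a, b in self.params]
        joint = [p * l for p, l in zip(self.post, lik)]
        p_r = sum(joint)
        if p_r == 0.0:  # response impossible under every remaining hypothesis
            return list(self.post), 0.0
        return [j / p_r for j in joint], p_r

    def next_stimulus(self):
        """Morph level minimising the expected posterior entropy."""
        def expected_entropy(x):
            total = 0.0
            for r in (0, 1):
                post, p_r = self._updated(x, r)
                total += p_r * entropy(post)
            return total
        return min(self.stimuli, key=expected_entropy)

    def update(self, x, r):
        self.post, _ = self._updated(x, r)

    def estimate(self):
        """Posterior means of alpha (PSE) and beta (slope)."""
        alpha_hat = sum(p * a for p, (a, _) in zip(self.post, self.params))
        beta_hat = sum(p * b for p, (_, b) in zip(self.post, self.params))
        return alpha_hat, beta_hat


def simulate(n_trials=60, true_alpha=50.0, true_beta=0.1, seed=1):
    """Run Psi against a simulated observer (all values hypothetical)."""
    rng = random.Random(seed)
    levels = range(0, 101, 5)  # 21 morph levels, 0 = fully angry
    psi = Psi(levels, levels, [0.02, 0.04, 0.07, 0.1, 0.15, 0.2, 0.3, 0.5])
    for _ in range(n_trials):
        x = psi.next_stimulus()
        r = 1 if rng.random() < pf(x, true_alpha, true_beta) else 0
        psi.update(x, r)
    return psi.estimate()
```

Calling `simulate()` returns posterior‐mean estimates that should lie close to the simulated observer's α = 50. A finer grid trades speed for resolution; the one‐step look‐ahead over a discrete grid is the core idea of the Psi method.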

Presentation of the tasks was controlled by custom‐written MATLAB (Version 2016b, The MathWorks, Natick, MA) code using the Psychophysics Toolbox (Version 3.0.14) (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997) and the Palamedes Toolbox (Kingdom & Prins, 2010). The facial expression and body posture tasks each consisted of 100 trials. The whole‐person task consisted of 496 trials: half presented facial expression morphs on a fully angry body, and the other half on a fully disgusted body posture. Each of the four identities was displayed an equal number of times in each task. The order of tasks was fully counterbalanced across participants, and each task began with a short training phase. Together, all three tasks took approximately 30 min to complete.

2.5. Analysis

Using custom‐written MATLAB code with the Palamedes toolbox (Kingdom & Prins, 2010), PFs based on a cumulative Gaussian were fitted to each observer's data in each task to estimate the slope and point‐of‐subjective‐equality (PSE) parameters. The lapse rate was fixed at 0.03; the guess rate was determined by the experimental procedure and fixed at 0. Two PFs were fitted to the two conditions within the whole‐person task: one to the data in which facial expression morphs were presented on an angry body, and the other to the data in which they were presented on a disgusted body. Goodness‐of‐fit of the PFs was assessed visually by two independent researchers, in order to exclude participants whose PF slopes were close to or equal to 0 (i.e., whose responses did not change with morph level). Because of poor PF fits, 12 children were excluded from the facial expression task, 6 from the body posture task, and 17 from the whole‐person task.
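The fitting step can be approximated with a brute‐force maximum‐likelihood search, shown here as a hedged sketch. It assumes data aggregated as counts of "disgust" responses per morph level (the layout Palamedes fitting routines take), a cumulative‐Gaussian PF with guess = 0 and lapse = 0.03, slope expressed as β = 1/σ, and the PSE read off as the location parameter α. Palamedes refines a grid search with a simplex optimiser; the grid‐only search and the grids themselves are simplifications chosen for illustration.

```python
import math

GUESS, LAPSE = 0.0, 0.03  # fixed guess and lapse rates, as in the text


def pf(x, alpha, beta):
    """Cumulative-Gaussian PF with slope beta = 1/sigma (Palamedes-style)."""
    f = 0.5 * (1.0 + math.erf((x - alpha) * beta / math.sqrt(2.0)))
    return GUESS + (1.0 - GUESS - LAPSE) * f


def neg_log_lik(alpha, beta, levels, num_pos, out_of):
    """Binomial negative log-likelihood of the response counts."""
    eps = 1e-12  # guards log(0) where the PF saturates at 0
    nll = 0.0
    for x, k, n in zip(levels, num_pos, out_of):
        p = min(max(pf(x, alpha, beta), eps), 1.0 - eps)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_pf(levels, num_pos, out_of, alphas=None, betas=None):
    """Brute-force ML estimates of alpha (PSE) and beta (slope)."""
    alphas = list(range(0, 101)) if alphas is None else alphas  # illustrative grid
    betas = [k / 100 for k in range(1, 51)] if betas is None else betas  # 0.01..0.50
    _, alpha, beta = min(
        (neg_log_lik(a, b, levels, num_pos, out_of), a, b)
        for a in alphas for b in betas
    )
    return alpha, beta
```

For the whole‐person task, the same fit is run once per body condition, and the PSE change is the difference between the two α estimates. With morph level 0 = fully angry and a "disgust" response coded as 1, a disgusted body should shift the PF towards angrier morph levels, so α(angry body) − α(disgusted body) would be positive under a body‐congruent bias.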

For the facial expression and body posture tasks, the slope parameter was the key measure of interest. It provides an index for the precision of the perceptual representations underlying performance, with steeper slopes indicating greater ability to distinguish between subtle differences in the morphs. For the whole‐person task, the key measure was the difference in PSE between the two conditions, that is, when the same face morphs were presented on a fully angry or fully disgusted body. The PSE is the point at which the observer is equally likely to perceive the stimulus as either disgust or anger. The PSE change between the two conditions in the whole‐person task reflects the contextual influence of body posture on facial expression judgements.

2.6. Neuroimaging

On the participant's first visit, dMRI data were acquired on a 3 T Connectom scanner (Siemens Healthcare, Erlangen, Germany) with 300 mT/m gradients and a 32‐channel radiofrequency coil (Nova Medical, Wilmington, MA), using a multi‐shell diffusion‐weighted echo‐planar imaging (EPI) sequence with anterior‐to‐posterior phase encoding (TR/TE = 2600/48 ms; resolution = 2 × 2 × 2 mm; 66 slices; b = 0 s/mm² (14 volumes), b = 500 and 1200 s/mm² (30 directions each), and b = 2400, 4000, and 6000 s/mm² (60 directions each); TA = 16 min 14 s). One additional volume was acquired with posterior‐to‐anterior phase encoding for EPI distortion correction. T1‐weighted anatomical images were acquired using a 3D Magnetization Prepared Rapid Gradient Echo (MP‐RAGE) sequence (TR/TE = 2300/2 ms; resolution = 1 × 1 × 1 mm; 192 slices; TA = 5 min 32 s). On the second visit, participants underwent structural and functional scans on a 7 T Siemens Magnetom (Siemens Healthcare). Whole‐brain gradient‐echo EPI data were acquired (TR/TE = 2000/30 ms; resolution = 1.5 × 1.5 × 1.5 mm; 87 slices; multi‐band factor = 3; TA = 9 min 18 s), with slices angled along the anterior commissure–posterior commissure line to minimise signal dropout in the temporal regions. In addition, a B0 field map (TR/TE1/TE2 = 560/5.1/6.12 ms; resolution = 3 × 3 × 3 mm; 44 slices; TA = 1 min 7 s) was acquired to unwarp the high‐field EPI data. A high‐resolution MP2RAGE structural scan (TR/TE = 6000/2.7 ms; resolution = 0.65 × 0.65 × 0.65 mm; TA = 10 min 46 s) was also acquired (Marques et al., 2010), together with an SA2RAGE B1 map (TR/TE = 2400/0.72 ms; resolution = 3.25 × 3.25 × 6 mm; TA = 1 min 26 s) used to bias‐field correct the MP2RAGE (Eggenschwiler et al., 2012). Retrospective correction of head movement was used to mitigate blurring of the high‐resolution MP2RAGE data at 7 T (Gallichan et al., 2016).

For the functional localiser task, which was used to identify each individual's face‐ and body‐selective cortical regions, participants viewed grey‐scale images of faces, houses, bodies, and chairs. Images were shown in blocks by stimulus category, and block order was pseudorandomised across participants. Within each block, 15 images were displayed for 800 ms each with a 200 ms inter‐stimulus interval. Each stimulus block was followed by a fixation block (15 s), during which participants were asked to focus on a fixation cross displayed centrally on a mean grey screen. For the last second of each fixation block, the fixation cross turned red to indicate that the next block of images would commence. In total, there were four blocks of each stimulus category, and 64 trials per stimulus category were presented overall. To ensure that participants attended to the images, they were instructed to press a key on an MR‐compatible button box whenever the same image was presented twice in succession (1‐back task). The number of repeated images per block varied between 0 and 3. Average accuracy on the 1‐back task was 90% (SD = 18%), indicating a high degree of attention to the stimuli. Participants completed a short practice version of the task prior to the scan.
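To make the block timing concrete, the following sketch builds HRF‐convolved regressors for the four localiser categories, sampled at the 2 s TR, together with contrast vectors for the Faces > Houses and Bodies > Chairs comparisons used to define the ROIs. Assumptions: a 15 s image block followed by a 15 s fixation block, a hypothetical block order (the real order was pseudorandomised), and an SPM‐style canonical double‐gamma HRF; FEAT's built‐in HRF may differ. It illustrates the design only and is not the FEAT model itself.

```python
import math

TR = 2.0      # s, 7 T EPI repetition time
BLOCK = 15.0  # s, 15 images x (800 ms on + 200 ms ISI)
FIX = 15.0    # s, fixation block after each image block (assumed fixed)
DT = 0.5      # s, fine time grid used before downsampling to the TR
CATEGORIES = ["faces", "houses", "bodies", "chairs"]

# Contrast weights over the category regressors, in CATEGORIES order.
CONTRASTS = {
    "faces>houses": [1, -1, 0, 0],
    "bodies>chairs": [0, 0, 1, -1],
}


def hrf(t, a1=6.0, a2=16.0, c=1.0 / 6.0):
    """SPM-style canonical double-gamma HRF (assumed; FEAT's may differ)."""
    if t <= 0:
        return 0.0

    def gamma_pdf(a):
        return t ** (a - 1) * math.exp(-t) / math.gamma(a)

    return gamma_pdf(a1) - c * gamma_pdf(a2)


def design(order):
    """One HRF-convolved regressor per category, sampled once per TR."""
    cycle = BLOCK + FIX
    n_fine = int(round(len(order) * cycle / DT))
    kernel = [hrf(i * DT) for i in range(int(32.0 / DT))]  # 32 s HRF window
    step = int(round(TR / DT))
    regressors = {}
    for cat in CATEGORIES:
        # Boxcar: 1 during this category's image blocks, 0 elsewhere.
        box = [0.0] * n_fine
        for k, block_cat in enumerate(order):
            if block_cat == cat:
                start = int(round(k * cycle / DT))
                for i in range(start, start + int(round(BLOCK / DT))):
                    box[i] = 1.0
        # Discrete causal convolution of the boxcar with the HRF.
        conv = [
            DT * sum(box[i - j] * kernel[j] for j in range(min(i + 1, len(kernel))))
            for i in range(n_fine)
        ]
        regressors[cat] = conv[::step]
    return regressors
```

For instance, `design(CATEGORIES * 4)` yields 240 samples per regressor (16 blocks × 30 s / 2 s). After fitting the GLM, each contrast vector weights the category betas, so "faces>houses" tests β_faces − β_houses.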

2.7. Functional MRI processing

Functional MRI data processing was carried out using FEAT (FMRI Expert Analysis Tool, Version 6.0, FMRIB Functional MRI of the Brain Software Library FSL; Jenkinson et al., 2012). High‐field functional data were unwarped using the B0 field map generated from brain‐extracted magnitude and phase images in FSL (Woolrich et al., 2001). The 7 T functional data were then registered into 3 T diffusion space to allow for tractography in native diffusion space. First, the pre‐processed high‐resolution MP2RAGE image (skull‐stripped, bias‐corrected, neck‐cropped) was registered to the diffusion data using Advanced Normalization Tools (Avants et al., 2011). The T1w contrast from the MP2RAGE was selected as the best intermediary between the functional and diffusion data sets. Next, the 7 T functional data were registered to the diffusion‐aligned T1w image. In addition, functional data were transformed to Montreal Neurological Institute (MNI) space using FMRIB's Linear Image Registration Tool (FLIRT) (Jenkinson et al., 2002; Jenkinson & Smith, 2001).

Motion correction of fMRI data was performed with FSL's Motion Correction using FLIRT (MCFLIRT); one participant was excluded from the analysis because of motion exceeding two voxels (3 mm) during the fMRI task. Average absolute motion across participants was 0.74 ± 0.70 mm (mean ± SD). To mitigate the effects of motion in the functional imaging analysis, the estimated motion traces from MCFLIRT were added to the GLM as nuisance regressors (Jenkinson et al., 2002). Skull‐stripping and removal of non‐brain tissue were performed using the Brain Extraction Tool (BET; Smith, 2002). All data were high‐pass temporally filtered using Gaussian‐weighted least‐squares straight‐line fitting (sigma = 50.0 s). On an individual‐subject basis, spatial smoothing was applied using a Gaussian kernel with a full width at half maximum (FWHM) of 4 mm to preserve high spatial resolution (Woolrich et al., 2001). Time‐series statistical analysis was carried out using FMRIB's Improved Linear Model with local autocorrelation correction (Woolrich et al., 2001).
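For reference, the smoothing and filtering parameters above convert as follows. The first relation is the standard link between a Gaussian's FWHM and its standard deviation; the conversions to voxels and volumes assume the 1.5 mm isotropic voxels and 2 s TR of the 7 T EPI data.

$$
\sigma_{\text{spatial}} = \frac{\mathrm{FWHM}}{2\sqrt{2\ln 2}} = \frac{4\ \text{mm}}{2.355} \approx 1.70\ \text{mm} \approx 1.13\ \text{voxels},
\qquad
\sigma_{\text{temporal}} = \frac{50\ \text{s}}{2\ \text{s per volume}} = 25\ \text{volumes}.
$$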

A univariate GLM was implemented to examine the BOLD response associated with face and body stimuli. The contrasts Faces > Houses and Bodies > Chairs were used to localise cortical regions involved in face and body processing, respectively. Functionally defined regions‐of‐interest (ROIs) involved in face processing (FFA, OFA, and pSTS‐face) and body processing (FBA, EBA, and pSTS‐body) were identified on a subject‐by‐subject basis in individual subject space. Z‐statistic images were thresholded at p = .1, uncorrected. ROIs could be identified in the following number of subjects: OFA (n = 37), FFA (n = 43), pSTS‐face (n = 41), EBA (n = 39), FBA (n = 40), and pSTS‐body (n = 39). ROI analyses (and therefore all subsequent white matter tractography and analyses) were restricted to the right hemisphere, as overall functional activation was much weaker in the left hemisphere and fewer ROIs could be reliably identified across participants. As a validation step, the individual ROIs were transformed into MNI space, and an average coordinate across participants was determined for each ROI (Table 1). For each participant, the Euclidean distance between each ROI and the corresponding average coordinate in MNI space was calculated to provide a measure of how variable the locations were across participants. The average coordinates were comparable to those of other studies in which these regions have been extracted in MNI space (Bona et al., 2015; Harry et al., 2016; Schobert et al., 2018; Vocks et al., 2010). ROIs were inflated to 10 mm in diameter into the surrounding white matter to be used as seed regions for functionally defined white matter tractography. In addition, an ROI in the right ATL was manually drawn based on anatomical landmarks as follows: a coronal plane in the right temporal lobe extending from the lateral fissure to the ventral surface of the brain (Hodgetts et al., 2015), positioned just anterior to where the central sulcus meets the lateral fissure.
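The ROI‐variability measure in Table 1 can be sketched as below: average an ROI's MNI coordinates across participants, compute each participant's Euclidean distance to that centroid, and take the standard deviation of those distances. The source does not state whether the sample (n − 1) or population SD was used; this sketch assumes the sample SD.

```python
import math
import statistics


def centroid(coords):
    """Group-average (x, y, z) of one ROI across participants."""
    n = len(coords)
    return tuple(sum(c[i] for c in coords) / n for i in range(3))


def roi_variability(coords):
    """Centroid plus SD of each participant's Euclidean distance to it.

    Uses the sample SD (n - 1); which SD the original analysis used is an
    assumption here. Requires at least two participants.
    """
    mean = centroid(coords)
    distances = [math.dist(c, mean) for c in coords]  # Python 3.8+
    return mean, statistics.stdev(distances)
```

With three made‐up FFA peaks at x = 36, 38, and 40 mm (y = −43, z = −20), the centroid is (38, −43, −20), the distances are 2, 0, and 2 mm, and the SD is about 1.15 mm.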

TABLE 1.

Average MNI coordinates for right‐hemisphere ROIs

| ROI | x | y | z | SD of distance (mm) |
|---|---|---|---|---|
| FFA | 38 | −43 | −20 | 7.1 |
| OFA | 38 | −76 | −7 | 11.24 |
| pSTS‐face | 46 | −57 | 12 | 6.04 |
| FBA | 38 | −43 | −18 | 7.6 |
| EBA | 41 | −78 | 0 | 9.17 |
| pSTS‐body | 44 | −59 | 11 | 8.87 |

Note: The coordinates reported are the average coordinates of all individual participant ROIs registered to MNI space, to allow comparison with coordinates reported in the literature. For each observer, the Euclidean distance between each ROI and the group average coordinate in MNI space was calculated. The standard deviation (SD) of the Euclidean distance between individual ROIs and group average coordinates is reported as a measure of how variable the ROIs were across observers.

Abbreviations: ATL, anterior temporal lobe; EBA, extrastriate body area; FBA, fusiform body area; FFA, fusiform face area; MNI, Montreal Neurological Institute; OFA, occipital face area; pSTS, posterior superior temporal sulcus; ROIs, regions‐of‐interest.

2.8. Diffusion‐weighted‐imaging pre‐processing

Diffusion‐weighted imaging (DWI) data were pre‐processed to reduce thermal noise and image artefacts. Pre‐processing included image denoising (Veraart et al., 2016), signal drift correction (Vos et al., 2017), correction for motion, eddy currents, and susceptibility‐induced distortions (Andersson & Sotiropoulos, 2016), and correction for gradient nonlinearities and Gibbs ringing (Kellner et al., 2016). The pre‐processing pipeline was implemented in MATLAB but relied on open‐source software packages from MRtrix (Tournier et al., 2019) and FSL (Jenkinson et al., 2012). DWI data quality assurance was performed on the raw diffusion volumes using slicewise outlier detection (Sairanen et al., 2018). Brain extraction was performed using FSL BET (Jenkinson et al., 2012), followed by segmentation of tissue into CSF, WM, and GM with the FSL segmentation tool.

2.9. Tractography

To identify tracts, we first obtained voxel‐wise estimates of fibre orientation distribution functions by applying multi‐shell multi‐tissue constrained spherical deconvolution (Jeurissen et al., 2014) to the pre‐processed images (Descoteaux et al., 2009; Seunarine & Alexander, 2014; Tournier et al., 2004, 2007), with a maximal spherical harmonic order of lmax = 8. Functionally defined fibre tracts (FDFTs) were generated for each participant using the b = 6000 s/mm² shell, between right‐hemisphere ROIs within the face network (OFA to FFA, Figure 2; FFA to ATL; pSTS‐face to ATL) and the body network (EBA to FBA). To assess the convergence of face‐ and body‐selective networks in ATL, we also identified FDFTs from FBA to ATL and from pSTS‐body to ATL. Streamlines were generated using a probabilistic algorithm in MRtrix, with one ROI as the seed mask and the other as an inclusion region, following the organisation of the visual processing hierarchy. No streamlines were found between OFA/FFA and pSTS‐face, nor between EBA/FBA and pSTS‐body. All identified FDFTs were visually inspected, and spurious fibres were manually removed. FDFTs with fewer than 20 streamlines were excluded from subsequent analyses (FFA‐ATL: n = 1, pSTS‐face‐ATL: n = 1, FBA‐ATL: n = 1, EBA‐FBA: n = 1). In addition to the FDFTs, two anatomically defined comparison tracts were extracted in the right hemisphere: the inferior longitudinal fasciculus (ILF) and the cortico‐spinal tract (CST). ILF was selected as a comparison tract because it traverses similar subcortical regions to the reconstructed FDFTs, thereby providing an index of specificity, and CST was selected as a tract outside the visual processing regions. Both tracts were extracted automatically using TractSeg segmentation software (Wasserthal et al., 2019).

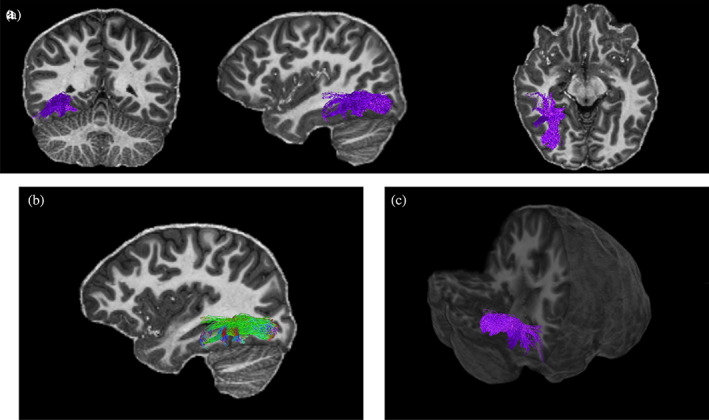

FIGURE 2.

Reconstructed functionally defined fibre tracts (FDFTs) for occipital face area–fusiform face area (OFA–FFA) for one participant. The top panel depicts the tracts in coronal, sagittal, and axial planes (a). The tracts are shown with directional colour encoding in the sagittal plane (b), where green is anterior–posterior, red is left–right, and blue is superior–inferior. A 3D rendering of the tracts is shown in the bottom right panel (c).

The following parametric maps were computed to characterise the microstructure of each fibre tract. Diffusion‐weighted data acquired across three b‐value shells (b = 500, 1200, and 2400 s/mm²) were fitted to a cumulant expansion of the signal up to the fourth‐order cumulant (i.e., kurtosis) to improve estimation of the second‐order cumulant (the diffusion tensor) and its derived measures, such as FA (Jensen et al., 2016; Veraart et al., 2011). FA has been widely interpreted in the literature as a measure of “tract integrity,” but is thought to reflect multiple aspects of the microstructure, including density of axonal packing, orientation of axons within a voxel, and membrane permeability (Jones et al., 2013). Although FA is less specific in its interpretation, it is a powerful benchmark for comparison with existing cohort studies of development (Lebel et al., 2019; Lebel & Beaulieu, 2011; Tamnes et al., 2018). We complemented FA with a second diffusion measure, the spherical mean (Ṧ μ) of the diffusion‐weighted signal at b = 6000 s/mm², which has been shown to suppress extracellular signal (Kaden et al., 2016; Veraart et al., 2011). The resulting signal reflects restricted diffusion, that is, water trapped intracellularly, such as in neuronal axons or glial cells, without relying on signal modelling. In this work, Ṧ μ was used to refine the inferences drawn from FA, because Ṧ μ is more directly influenced than FA by intracellular signal, such as increases in the number or density of neuronal and glial processes, rather than by the surrounding microstructure at large. The third measure was derived from quantitative MRI: the longitudinal relaxation rate R1 (= 1/T1), extracted from the MP2RAGE sequence (Marques et al., 2010). R1 is sensitive to myelination and has been shown to track white matter maturation across childhood and adolescence (Lutti et al., 2014).
Diffusion and relaxometry measures were projected onto the streamlines and averaged within each tract, resulting in one mean value per metric for each FDFT or comparison tract in each participant.
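The per‐tract metrics described above can be illustrated with a minimal NumPy sketch. It assumes that the spherical mean is the plain average of the diffusion‐weighted signal over all gradient directions on the b = 6000 s/mm² shell, optionally divided by the mean b = 0 signal (a common normalisation, assumed here rather than stated in the text); that R1 is obtained voxel‐wise as 1/T1 from the MP2RAGE T1 map; and that projecting a map onto a tract means nearest‐voxel sampling at every streamline point followed by a plain average. All function names are hypothetical, streamline coordinates are assumed to be in voxel space already, and the study's own software and interpolation may differ.

```python
import numpy as np

def spherical_mean(dwi, bvals, shell, tol=50.0):
    """Average the diffusion-weighted signal over all volumes whose b-value
    lies within +/- tol of `shell` (in s/mm^2).

    dwi   : array of shape (..., n_volumes); the last axis indexes volumes
    bvals : array of shape (n_volumes,)
    """
    dwi = np.asarray(dwi, dtype=float)
    bvals = np.asarray(bvals, dtype=float)
    on_shell = np.abs(bvals - shell) <= tol
    if not on_shell.any():
        raise ValueError(f"no volumes found near b = {shell}")
    return dwi[..., on_shell].mean(axis=-1)

def normalised_spherical_mean(dwi, bvals, shell=6000.0, tol=50.0):
    """Spherical mean at `shell` divided by the mean b = 0 signal (assumed normalisation)."""
    return spherical_mean(dwi, bvals, shell, tol) / spherical_mean(dwi, bvals, 0.0, tol)

def r1_from_t1(t1_seconds):
    """R1 = 1/T1 in s^-1; non-positive T1 values (e.g. background) become NaN."""
    t1 = np.asarray(t1_seconds, dtype=float)
    safe = np.where(t1 > 0, t1, 1.0)  # avoid division by zero before masking
    return np.where(t1 > 0, 1.0 / safe, np.nan)

def tract_mean(metric_map, streamlines):
    """Mean of a 3D metric map (FA, spherical mean or R1) over every point of
    every streamline in a tract, using nearest-voxel sampling."""
    upper = np.array(metric_map.shape) - 1
    values = []
    for sl in streamlines:
        # round each point to the nearest voxel and keep it inside the volume
        idx = np.clip(np.rint(np.asarray(sl, dtype=float)).astype(int), 0, upper)
        values.append(metric_map[idx[:, 0], idx[:, 1], idx[:, 2]])
    if not values:
        raise ValueError("tract contains no streamlines")
    return float(np.concatenate(values).mean())
```

Because every streamline point is weighted equally here, longer streamlines contribute more to the tract mean; averaging within each streamline first and then across streamlines is an equally plausible alternative, and the text does not say which scheme was used.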

2.10. Statistical analysis

All statistical analyses were run in R (Version 3.6.1). Because the data were not normally distributed, relationships between behavioural metrics and FDFT measures were assessed with Spearman correlations. Bonferroni correction was applied across the six FDFTs, giving a corrected significance threshold of p < .008 (.05/6). For tract–behaviour relationships that survived correction, age‐related variability was controlled for using multiple linear regression; as this was a secondary analysis, its significance threshold was set at p < .05.
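As a sketch of the correlation step, Spearman's ρ is the Pearson correlation of the ranked data, with tied values assigned their average rank, and the Bonferroni‐corrected threshold is α divided by the number of tracts tested. In R, `cor.test(x, y, method = "spearman")` returns ρ together with its p‐value; the NumPy version below, whose function names are hypothetical, computes ρ only and leaves out the p‐value.

```python
import numpy as np

def average_ranks(x):
    """Ranks 1..n; tied values receive the mean of the ranks they span."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

def bonferroni_threshold(alpha=0.05, n_tests=6):
    """Per-test significance threshold controlling the family-wise error rate."""
    return alpha / n_tests

# Six FDFTs at alpha = .05 give 0.0083..., reported in the text as p < .008.
threshold = bonferroni_threshold(0.05, 6)
```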

3. RESULTS

3.1. Behavioural results

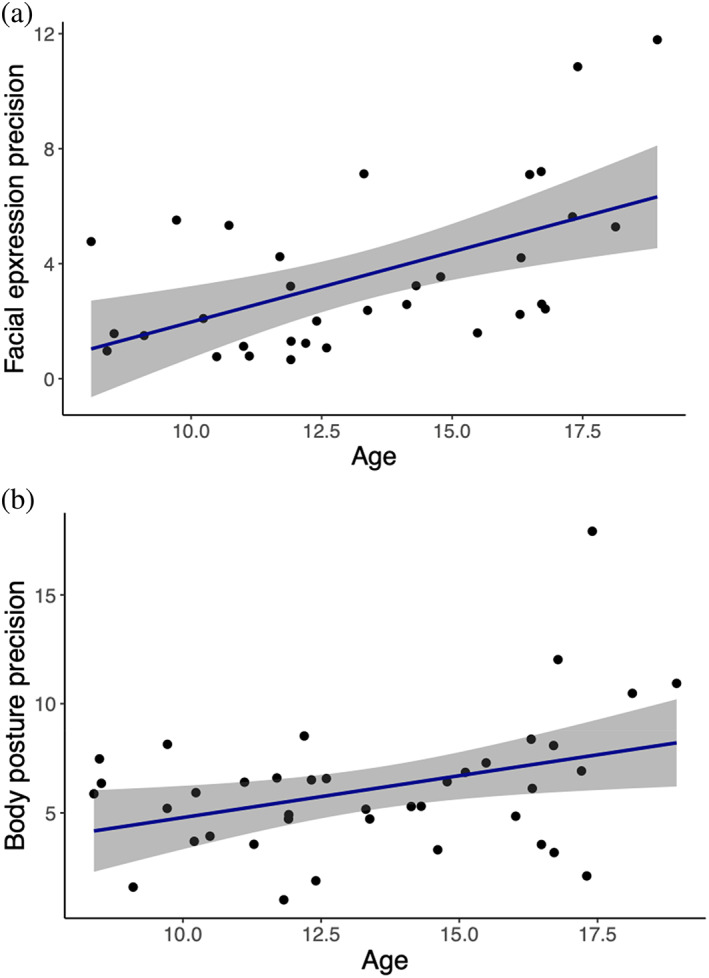

In line with previous research (Dalrymple et al., 2017; Herba et al., 2006), our data indicate that facial expression discrimination improves with age in children and throughout adolescence, as shown by a significant positive relationship between facial expression precision and age (r s = .51, p < .005) (Figure 3). Body posture discrimination also tended to improve with age, but this relationship did not reach significance (r s = .27, p = .078) (Figure 3).
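Precision here is indexed by the slope of each participant's psychometric function (PF), and the whole‐person analyses below rely on its point of subjective equality (PSE), the morph level at which both response categories are equally likely. As a minimal sketch, assuming a two‐parameter logistic PF over morph level fitted by maximum likelihood (the study's actual PF form, parameterisation, and fitting software are specified in its Methods and may differ), both quantities can be estimated with Newton–Raphson iterations on binomial response counts. The function name `fit_logistic_pf` and the morph levels in the usage lines are hypothetical, and the counts are synthetic.

```python
import numpy as np

def fit_logistic_pf(levels, n_resp, n_trials, max_iter=100, tol=1e-10):
    """Maximum-likelihood fit of P(response | x) = 1 / (1 + exp(-(b0 + b1 * x)))
    to binomial counts by Newton-Raphson.

    levels   : morph levels (e.g. % disgust in an anger-disgust morph)
    n_resp   : number of trials at each level given the counted response
    n_trials : total number of trials at each level
    Returns (pse, slope): pse = -b0 / b1 is the level where P = 0.5,
    and slope = b1 indexes discrimination precision.
    """
    x = np.asarray(levels, dtype=float)
    k = np.asarray(n_resp, dtype=float)
    n = np.asarray(n_trials, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta = np.zeros(2)
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        gradient = X.T @ (k - n * p)  # score of the binomial log-likelihood
        weights = n * p * (1.0 - p)
        information = X.T @ (X * weights[:, None])  # Fisher information matrix
        step = np.linalg.solve(information, gradient)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    b0, b1 = beta
    return -b0 / b1, b1

# Usage with synthetic counts (not study data): expected counts from a PF
# with PSE = 50 and slope = 0.1 at six morph levels, 1000 trials each.
levels = np.array([0.0, 20.0, 40.0, 60.0, 80.0, 100.0])
true_p = 1.0 / (1.0 + np.exp(-(0.1 * (levels - 50.0))))
pse, slope = fit_logistic_pf(levels, 1000.0 * true_p, np.full(6, 1000.0))
```

Under this sketch, a PSE change such as the one plotted in Figure 4 would be the difference between PSEs fitted separately to the fully angry and fully disgusted body conditions; the sign convention is again an assumption.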

FIGURE 3.

Relationship between facial expression precision and body posture precision with age. The correlation between age (years) and (a) facial expression precision (r s = .51, p < .005), and (b) body posture precision (r s = .27, p = .078), as indexed by the slope estimate of the individual's psychometric function (PF) for each task. Each point represents one observer. The 95% confidence interval is shown with grey shading.

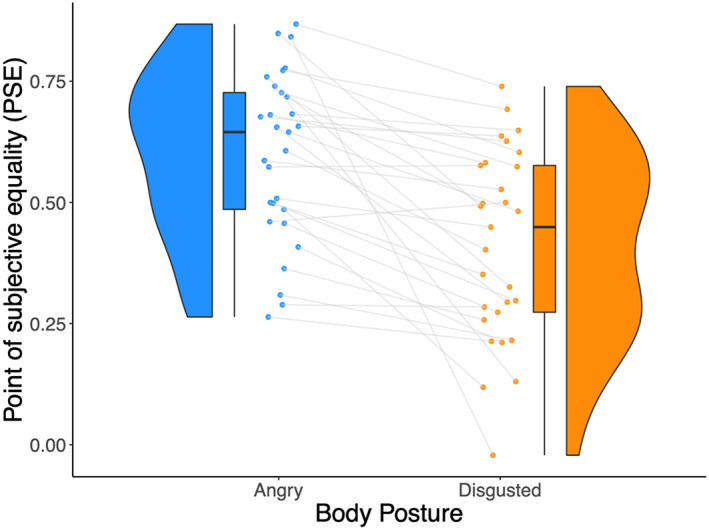

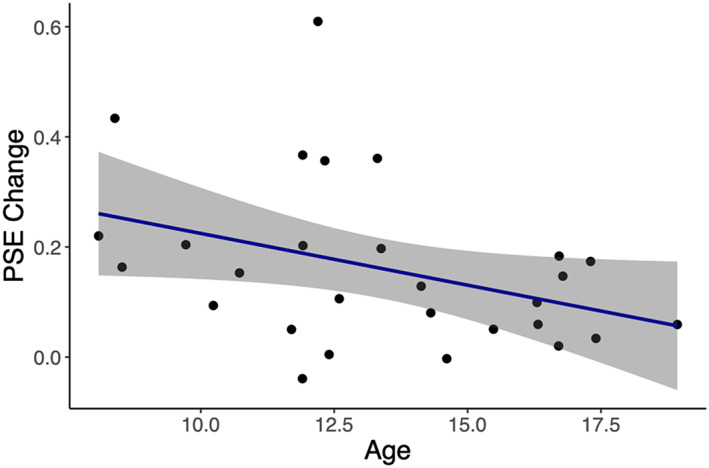

As expected, body posture clearly influenced facial expression perception in our participants (Figure 4). Specifically, perception of facial expressions was biased towards the emotion expressed by the body, as indicated by a robust difference in the PSE when the same facial expressions were presented in the context of a fully angry versus a fully disgusted body posture (t(28) = 5.10, p < .0001). There was no significant relationship between facial expression precision and PSE change (r s = −.32, p = .11; controlling for age, r s = −.32, p = .12), nor between body posture precision and PSE change (r s = −.13, p = .55; controlling for age, r s = −.13, p = .57). Interestingly, the influence of body posture on facial expression perception decreased with age in children and throughout adolescence, as indicated by a negative relationship between PSE change and age (r s = −.39, p = .0395) (Figure 5). In other words, younger children were more strongly influenced by body posture in their categorisation of facial expressions than older children and adolescents. When facial expression precision was controlled for, the relationship between PSE change and age was no longer significant (r s = −.29, p = .16), whereas controlling for body posture precision left it essentially unchanged (r s = −.44, p = .03). Together, these findings suggest that the improvement in facial expression precision is a key factor driving the reduction in the influence of body context with age, whereas improved body posture discrimination does not contribute to this relationship.
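The "controlling for" statistics above are partial correlations. The section does not state how they were computed; one standard approach, sketched here as an assumption, is to rank all three variables and apply the first‐order partial correlation formula r_xy·z = (r_xy − r_xz·r_yz) / √((1 − r_xz²)(1 − r_yz²)) to the rank correlations. The function names are hypothetical, and the reported p‐values would additionally require a t distribution with n − 3 degrees of freedom, which is omitted.

```python
import numpy as np

def average_ranks(x):
    """Ranks 1..n; tied values receive the mean of the ranks they span."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def partial_spearman(x, y, z):
    """Spearman correlation of x and y controlling for z, e.g. PSE change
    and age controlling for facial expression precision."""
    rx, ry, rz = (average_ranks(v) for v in (x, y, z))
    r = np.corrcoef(np.vstack([rx, ry, rz]))
    r_xy, r_xz, r_yz = r[0, 1], r[0, 2], r[1, 2]
    return float((r_xy - r_xz * r_yz) / np.sqrt((1.0 - r_xz**2) * (1.0 - r_yz**2)))
```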

FIGURE 4.

Point of subjective equality (PSE) change between categorisation of facial expressions on a 100% angry and a 100% disgusted body posture. The raincloud plot displays the PSE values for the whole‐person condition when facial expressions were presented with a fully angry (blue) or fully disgusted (orange) body posture. A significant difference (t(28) = 5.10, p < .0001) was observed between the PSE values when the facial expression was categorised with a 100% angry and a 100% disgusted body posture. Each line represents one observer and depicts the change in PSE. The distribution of the values is illustrated by the shaded area, with the boxplot indicating the median and the interquartile range.

FIGURE 5.

Relationship between point of subjective equality (PSE) change and age. Significant negative correlation between age (years) and PSE change (r s = −.39, p = .0395). Each point represents one observer. The 95% confidence interval is shown with grey shading.

3.2. Tractography results

To isolate different aspects of white matter microstructure across development and their relation to perception, we used Ṧ μ to index characteristics directly linked to diffusion within the intracellular (axonal, glial) space, R1 as a measure of myelination, and FA as a widely used general measure reflecting multiple aspects of microstructure. As described below, these measures related differentially to age, to perception, and to perception once age was taken into account.

3.2.1. Age and microstructural change

Ṧ μ, an index of the intra‐axonal and/or glial signal fraction, increased with age within all FDFTs of the face and body networks except the FBA to ATL tracts, as indicated by significant positive relationships between Ṧ μ and age (all ps < .006; see Supplemental Information [SI] Table S1 for details). Similarly, the myelination index R1 increased with age in all face‐ and body‐related FDFTs (all ps < .005; SI Table S1). There were no significant relationships between age and the more general FA measure in any FDFT (SI Table S1). Among the comparison tracts, R1 ILF was significantly positively correlated with age (r s = .65, p < .0001), as were Ṧ μ CST (r s = .61, p < .0001) and Ṧ μ ILF (r s = .56, p = .0001).

3.2.2. Perceptual performance and microstructure

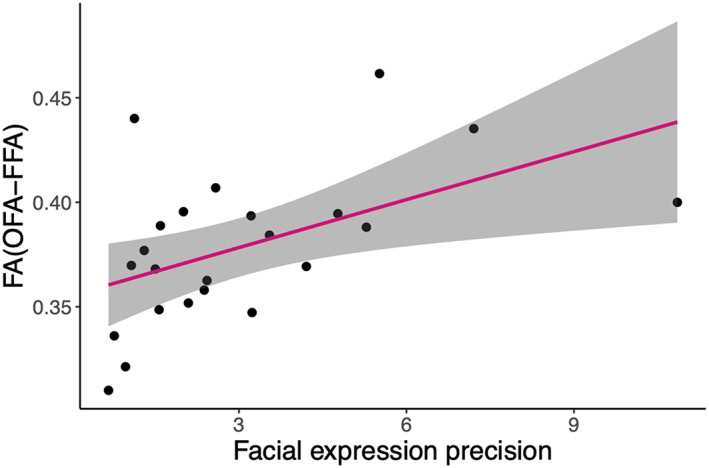

Better facial expression discrimination was associated with higher values of all three microstructural measures in the tracts linking OFA and FFA (FA OFA–FFA: r s = .56, p = .006; Figure 6; Ṧ μ OFA–FFA: r s = .55, p = .007; R1 OFA–FFA: r s = .62, p = .002). No other significant relationships were observed between facial expression precision and FDFT measures within the face and body networks (see SI Table S2 for detailed statistics). More precise body posture perception was associated with higher intracellular signal (Ṧ μ) and greater myelination (R1) of the tracts linking OFA and FFA, as indicated by positive relationships between body posture precision and both Ṧ μ (r s = .60, p = .001) and R1 (r s = .59, p = .001). No other significant relationships were found between body posture precision and FDFT measures, including FA, across all remaining tracts (SI Table S3). Finally, a greater influence of body context on facial expression perception was related to lower Ṧ μ (r s = −.61, p = .002) and lower R1 (r s = −.60, p = .002) in tracts linking pSTSbody and ATL; no other FDFTs showed significant relationships (SI Table S4).

FIGURE 6.

Relationship between facial expression precision and fractional anisotropy (FA) of occipital face area–fusiform face area (OFA–FFA) tracts. There was a significant correlation between facial expression precision and FA of the OFA–FFA tracts (r s = .5526, p = .006). Each point represents one observer. The 95% confidence interval is shown with grey shading.

To explore whether these findings were specific to FDFTs within the face and body networks, ILF tract metrics were also related to the behavioural measures; a significant relationship was found between R1 ILF and PSE change (r s = −.59, p = .002). No other significant relationships were found (SI Tables S2–S4). A comparison tract outside visual processing regions, the CST, showed no significant relationships between microstructural measures and behaviour (SI Tables S2–S4).

3.2.3. Age, perceptual performance, and microstructural change

To identify the independent contributions of microstructure and age to perceptual performance, we conducted multiple regression analyses for each tract microstructural measure that showed a significant correlation with perception, entering age and that measure as predictors of perceptual performance.
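Each of these models has the form perception = b0 + b_age · age + b_tract · tract measure + error. In R, such a model could be fitted with `summary(lm(perception ~ age + tract_measure))`; as a language‐neutral sketch, assuming ordinary least squares, the NumPy code below fits the model and computes R², adjusted R², and the overall F statistic. The variable and function names are hypothetical, the data in the check are synthetic, and per‐coefficient p‐values, which require the t distribution, are left out.

```python
import numpy as np

def two_predictor_ols(age, tract_measure, perception):
    """OLS fit of perception ~ b0 + b_age * age + b_tract * tract_measure.
    Returns the coefficients (intercept, age, tract), R^2, adjusted R^2,
    and the overall F statistic with its degrees of freedom."""
    y = np.asarray(perception, dtype=float)
    X = np.column_stack([np.ones_like(y), age, tract_measure])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, k = X.shape          # k counts the intercept column
    p = k - 1               # number of predictors (here 2)
    df_resid = n - p - 1
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / df_resid
    f_stat = (r2 / p) / ((1.0 - r2) / df_resid)
    return {"beta": beta, "r2": r2, "adj_r2": adj_r2,
            "F": f_stat, "df": (p, df_resid)}
```

Because each model has two predictors, the F statistic has (2, n − 3) degrees of freedom, matching the F(2, df) values reported for these models.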

A regression model with age and FA OFA–FFA as predictors explained 37% of the variance in facial expression perception (adjusted R 2 = .371, F(2,20) = 7.50, p = .004). Age (β = .33, p = .02) and FA OFA–FFA (β = 28.2, p = .02) each independently predicted facial expression perception, suggesting that the microstructural characteristics of the OFA–FFA tracts captured by FA were associated with the ability to recognise facial expressions beyond age‐related variability. In the equivalent model, age and R1 OFA–FFA jointly predicted facial expression perception, explaining 38% of the variance (adjusted R 2 = .375, F(2,20) = 7.61, p = .003). However, only R1 OFA–FFA was a significant predictor of facial expression perception once age was accounted for (β = 39.73, p = .02); age did not significantly predict perception independently of R1 OFA–FFA (β = .089, p = .63). When Ṧ μ OFA–FFA and age were used as predictors of facial expression perception (adjusted R 2 = .245, F(2,20) = 4.56, p = .023), neither Ṧ μ OFA–FFA (β = 69.1, p = .20) nor age (β = .231, p = .25) was a significant predictor independently of the other (see SI Tables S5–S7). Together, these analyses suggest that Ṧ μ OFA–FFA does not contribute to facial expression perception independently of age. By contrast, FA OFA–FFA and R1 OFA–FFA appear to capture microstructural characteristics that relate to facial expression perception independently of age.
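Adjusted R² and the overall F statistic are linked through the degrees of freedom: with p predictors and df = n − p − 1 residual degrees of freedom, R² = 1 − (1 − adjusted R²) · df / (n − 1) and F = (R² / p) / ((1 − R²) / df). The short sketch below (function names hypothetical) applies these standard identities to the three OFA–FFA models above; the recovered F values agree with the reported ones to within the rounding of adjusted R² to three decimals.

```python
def r2_from_adjusted(adj_r2, p, df_resid):
    """Invert adjusted R^2 = 1 - (1 - R^2) * (n - 1) / df_resid,
    where df_resid = n - p - 1 for a model with p predictors."""
    n_minus_1 = df_resid + p
    return 1.0 - (1.0 - adj_r2) * df_resid / n_minus_1

def f_from_r2(r2, p, df_resid):
    """Overall F statistic of a linear model with p predictors."""
    return (r2 / p) / ((1.0 - r2) / df_resid)

# Adjusted R^2 and F(2, 20) as reported for the three OFA-FFA models.
reported = {"FA": (0.371, 7.50), "R1": (0.375, 7.61), "Smu": (0.245, 4.56)}
for measure, (adj_r2, f_reported) in reported.items():
    r2 = r2_from_adjusted(adj_r2, p=2, df_resid=20)
    f_implied = f_from_r2(r2, p=2, df_resid=20)
    print(f"{measure}: R^2 = {r2:.3f}, implied F = {f_implied:.2f}, reported F = {f_reported}")
# implied F: 7.49 (FA), 7.60 (R1), 4.57 (Smu)
```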

A regression model with age and R1 OFA–FFA as predictors of body posture perception showed that, although they jointly predicted body posture perception (adjusted R 2 = .295, F(2,25) = 6.64, p = .005), neither age (β = .446, p = .09) nor R1 OFA–FFA (β = 23.8, p = .28) was a significant predictor independently of the other. Similarly, neither age (β = .435, p = .08) nor Ṧ μ OFA–FFA (β = 81.96, p = .17) significantly predicted body posture perception independently of the other (see SI Tables S8 and S9 for full model details).

Within the pSTSbody to ATL tracts, age and R1 together significantly predicted the influence of body posture on facial expression perception (PSE change) (adjusted R 2 = .290, F(2,21) = 5.69, p = .011). R1 remained a significant predictor of PSE change after accounting for age‐related variability (β = −1.92, p = .045), whereas age did not (β = −.01, p = .57). Similarly, age and Ṧ μ for the same tracts together significantly predicted PSE change (adjusted R 2 = .221, F(2,21) = 4.27, p = .028), but neither predicted it independently of the other (Ṧ μ β = −7.71, p = .15; age β = −.007, p = .59). Finally, when R1 ILF and age were used as predictors of PSE change (adjusted R 2 = .271, F(2,22) = 5.46, p = .01), R1 ILF showed a trend towards predicting PSE change after accounting for age‐related variability that fell just short of significance (β = −2.15, p = .054), whereas age did not (β = −.006, p = .54).

Taken together, the regression analyses suggest that FA and R1 of the relevant face‐ and body‐network tracts predict variability in facial expression perception, and in the influence of body context on it, beyond the variability associated with age. By contrast, the relationship between Ṧ μ and perception within these tracts appears to be largely age related.

4. DISCUSSION

In the current study, we measured facial expression and body posture perception in children and adolescents aged 8 to 18 years, as well as the influence of body context on facial expression perception. We directly linked perceptual performance to microstructural changes within white matter tracts along face‐ and body‐selective processing pathways. We show that, using multiple complementary microstructural measures, we can identify both age‐related developmental changes in microstructure that relate to behaviour and age‐independent microstructural variability that does so. Ṧ μ and R1, specific measures based on intracellular diffusion‐weighted signal and myelination, respectively, increased with age along face‐ and body‐selective pathways. This increase was not seen in FA, a more general, composite measure that indexes several aspects of the microstructure. Higher values of all three measures in the tracts linking OFA and FFA were related to better facial expression recognition. Importantly, however, once age was controlled for, only R1 and the composite measure FA of the OFA–FFA tracts predicted perceptual performance, while Ṧ μ was not related to behaviour beyond the variability linked to age. In addition to improvements in facial expression perception with age, we found that children and adolescents are less influenced by body posture when perceiving facial expressions as they get older. Our data suggest that this decreasing influence of body posture is driven by improvements in facial expression discrimination ability. The influence of body context on facial expression perception was related to Ṧ μ and R1 of the tract between pSTSbody and ATL, the measures based on intracellular diffusion‐weighted signal and myelination, respectively. The myelination measure R1 also predicted this bias towards body posture beyond age‐related variability.
Taken together, our results show that behavioural variability in face and body perception across development is associated with both age‐related maturation of white matter in face and body networks and age‐independent variation in white matter structure.

The importance of functional characteristics of face‐selective regions for social perception has been well studied in adults and, to a lesser extent, in children and adolescents. Specifically, development of the ability to recognise facial identity is linked to increases in the size of face‐selective FFA, as well as increased response amplitude and selectivity to faces in this region (Golarai et al., 2009, 2010; Gomez et al., 2017; Natu et al., 2016). However, the role of white matter connections along the processing hierarchy in constraining function and behaviour is less well understood. In adults, the properties of tracts at the boundary between white and grey matter close to FFA have been linked to face recognition ability (Gomez et al., 2015; Song, Garrido, et al., 2015). Here, we show that the structure of tracts linking OFA to FFA is related to facial expression recognition ability during development. This relationship was specific to tracts linking face‐selective regions and was not found for tracts linking body‐selective EBA and FBA, despite the latter being in close proximity to FFA. Similarly, the absence of a relationship between facial expression discrimination ability and the microstructure of large occipitotemporal association tracts such as the ILF suggests that behavioural specificity exists within smaller fibre bundles linking face‐specific regions. This finding supports previous suggestions that tracts linking face‐specific regions along VTC are distinct from the ILF (Gomez et al., 2015; Wang et al., 2020).

Microstructural MRI measurements are made at the level of imaging voxels (mm scale), but inferences are drawn about the microstructure within each voxel (μm scale). This mismatch can make it difficult to identify which elements of the microstructure underlie changes in the observed metrics. We aimed to address this issue by using, within the same sample, multiple microstructural measures that are each optimised to detect signals arising from different components of the microstructure. In particular, the ultra‐strong gradients of the Connectom scanner (300 mT/m) allowed us to obtain diffusion data at high b‐values (b = 6000 s/mm²) while maintaining a reasonable signal‐to‐noise ratio. At these b‐values, the signal is weighted more heavily towards the diffusion of water molecules in the intracellular space, giving the rotationally invariant spherical mean Ṧ μ greater sensitivity to the intracellular components of the microstructure. An increased Ṧ μ could, for instance, reflect reduced axon diameter or a greater number and/or density of neuronal and/or glial processes. By contrast, FA, arguably the most widely used diffusion MRI measure of white matter microstructure, is highly sensitive to a range of microstructural changes, but it cannot disentangle the microstructural components driving these changes (Afzali et al., 2021; Jones et al., 2013). FA reflects the (normalised) standard deviation of the diffusivities along three orthogonal axes: the principal diffusion axis and the two axes perpendicular to it. When diffusion is the same along all three axes, FA is zero; when water is constrained to move along just one axis, FA is one. FA can therefore reflect multiple aspects of the microstructure, including the orientation of axons within a voxel, axonal packing density, and membrane permeability, as well as combinations of these factors.
To address myelination more directly, given its important role in white matter development, we also obtained measures of the longitudinal relaxation rate (R1), which comparative ex‐vivo studies of MR measurements and histology have previously shown to map myelin content closely (Lutti et al., 2014).
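The verbal definition of FA given above corresponds to the standard formula in terms of the diffusion tensor eigenvalues λ1, λ2, λ3 with mean λ̄: FA = √(3/2) · √(Σ(λi − λ̄)²) / √(Σλi²). A minimal NumPy sketch follows; the function names are hypothetical, and it assumes the tensor has already been estimated (in this study, as the second‐order term of the cumulant fit), so it does not reproduce the study's fitting pipeline.

```python
import numpy as np

def fractional_anisotropy(eigenvalues):
    """FA = sqrt(3/2) * ||lambda - mean(lambda)|| / ||lambda||,
    computed over the last axis (one set of three eigenvalues per voxel)."""
    lam = np.asarray(eigenvalues, dtype=float)
    norm = np.sqrt((lam ** 2).sum(axis=-1))
    dev = np.sqrt(((lam - lam.mean(axis=-1, keepdims=True)) ** 2).sum(axis=-1))
    return np.sqrt(1.5) * dev / norm

def fa_from_tensor(D):
    """FA of a symmetric 3x3 diffusion tensor via its eigenvalues."""
    return float(fractional_anisotropy(np.linalg.eigvalsh(D)))
```

The two limiting cases in the text follow directly: equal eigenvalues, as in `fractional_anisotropy([1.0, 1.0, 1.0])`, give 0, and a single non‐zero eigenvalue, as in `fractional_anisotropy([1.0, 0.0, 0.0])`, gives 1.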

What can these measures tell us about the link between behaviour and white matter microstructure during development? Our findings suggest that the more specific measures Ṧ μ and R1, sensitive to signal from the intracellular space and from myelin, respectively, are strongly age dependent. These measures increased with age across almost all of the tracts studied here. This finding is consistent with previous research showing that Ṧ μ is sensitive to age‐related maturation of white matter across the developing brain (Raven et al., 2020), where age‐related increases in Ṧ μ were suggested to reflect reduced axonal diameter and greater complexity of neuronal and glial processes at more advanced developmental stages. Similarly, R1 has previously been shown to increase with age across the large fascicles of the brain (Yeatman et al., 2014). The positive relationship between facial expression discrimination ability and Ṧ μ of tracts linking OFA to FFA suggests that changes in the number, density, and/or complexity of neuronal and glial processes with general development contribute to the improvement in facial expression discrimination with age. Importantly, these structural changes did not predict perception independently of age. Similarly, some of the variability in myelination measured by R1 relates to perception in an age‐dependent manner. Interestingly, however, myelination measured by R1 is also related to perception beyond age‐related changes. This age‐independent effect was even clearer for FA, which was relatively insensitive to age across all tracts identified here yet predicted facial expression discrimination ability even after accounting for age‐related variability. These results suggest that differences in microstructure explain behavioural variability beyond age‐related changes in neuronal and glial processes and myelination.
While myelination as measured by R1 appears to have both an age‐related and an age‐independent influence on behaviour, FA is a composite measure, and it is therefore difficult to pinpoint exactly which microstructural characteristics underlie changes in FA. However, previous research has suggested that variability in FA in white matter close to FFA, linked to facial identity processing ability in adults, may be due to an increase in the number and density of connections to neurons in FFA (Gomez et al., 2015). A similar account of the current data is supported by previous findings of large variability in FA along tracts during development, which dwarfs any age‐related change in FA across tracts (Yeatman et al., 2014). Whether our findings of age‐dependent and age‐independent microstructural variability contributing to behaviour reflect a general organising principle for the constraints that white matter structure places on behaviour during development, or are specific to the structure–behaviour relationship within these particular networks, remains an open question. To disentangle the characteristics of white matter structure driving behavioural changes during development from those underpinning naturally occurring behavioural differences within the population, it will be important for future research to directly compare multiple microstructural measures and their contribution to perceptual ability across children and adults.

Although white matter tracts linking regions of the face perception network have previously been identified (Gschwind et al., 2012; Pyles et al., 2013; Wang et al., 2020), much less is known about white matter connections linking body‐specific cortical regions. To our knowledge, our study was the first to use body posture morphs to investigate the development of body posture discrimination ability. The results tentatively suggest that body posture perception follows a similarly protracted developmental trajectory to facial expression perception, reaching far into adolescence, although its correlation with age did not reach significance. To relate this perceptual development to brain structure, we mirrored the anatomical and hierarchical organisation of the face network when extracting tracts for the body network. Interestingly, we found no evidence for a relationship between body posture discrimination ability and the structure of tracts linking EBA to FBA, but instead found evidence for a relationship with the structure of tracts linking OFA to FFA. This relationship was limited to the Ṧ μ and R1 measures, however, and neither was a significant predictor of body posture perception independently of age, suggesting that the variability in these tracts related to body posture discrimination is limited to age‐related maturation of white matter. Nevertheless, the notion that tracts linking face‐specific processing regions also contribute to elements of body posture discrimination is consistent with previous research showing similarly robust FFA activation in response to blurred faces on bodies as to faces alone (Cox, 2004). Even so, it is surprising that we find no association between body posture perception and the characteristics of tracts linking body‐selective areas, while we do find such a link for face‐selective areas.
Importantly, the relationship between facial expression discrimination ability and the microstructure of OFA–FFA tracts remained significant even after removing variability associated with body posture discrimination, highlighting that facial expression perception ability is related to tract microstructure independently of body posture perception ability. The lack of significant associations between body posture perception and tracts linking body‐specific regions may reflect gaps in our knowledge of the white matter connections and pathways important for body perception.

A key aim of our study was to identify the relationship between brain development and perception not only of isolated faces and bodies, but also of integrated facial expression and body posture signals. In everyday life, facial expressions are seen within the context of other socially relevant signals, most importantly the other person's body posture. It is well established that body context has an important influence on facial expression perception in adults, and that this effect is larger in children. Our data suggest that the influence of body context on facial expression perception continues to decrease well into adolescence. Moreover, our results point towards a potential mechanism for this decreasing reliance on body posture with age: the improvement in facial expression discrimination ability appears to drive the decrease in the body context effect across development. It is tempting to speculate that a similar mechanism might drive differences observed across the lifespan. For instance, older adults rely more strongly on body context in facial expression perception than younger adults (Abo Foul et al., 2018; Kumfor et al., 2018). Given that older adults also show a reduced ability to discriminate between facial expressions, their increased reliance on body posture may be a direct result of this reduced discrimination ability, mirroring what we found here in children and adolescents.

It is important to highlight that our results cannot be explained by children simply ignoring the instructions and judging body context instead of facial expression in the whole‐person task. Within participants, precision in discriminating facial expressions, as measured by the slope of the PF, was positively correlated across the conditions with and without body context (r s = .36, p = .07); although this correlation fell short of significance, it is consistent with children following task instructions and discriminating facial expressions in the whole‐person task rather than simply judging the body posture. Additionally, under our exclusion criteria, any participant who simply responded according to the body posture would have been removed from the analysis, because a PF could not have been fitted to their data.

Linking the body‐context effect to brain development, we found that the extent to which body context influenced facial expression perception was significantly related to the microstructure of fibre tracts between pSTSbody and ATL. Specifically, greater Ṧ μ and R1 in this tract were associated with a smaller influence of body posture on facial expression perception. Importantly, myelination of this tract, as measured by R1, predicted the influence of body context even after removing the variability associated with age, suggesting that the structure–behaviour association reflects factors related to general brain maturation as well as age‐independent variability in myelination. While it is unclear how the microstructural characteristics indexed by Ṧ μ and R1 in body‐specific tracts linking pSTSbody and ATL lead to less influence of the body on facial expression perception, the location of these tracts lends support to previous findings in humans and monkeys pointing towards ATL as a potential site of face and body integration (Fisher & Freiwald, 2015; Harry et al., 2016; Teufel et al., 2019). Indeed, within the context of recent proposals put forward by Taubert et al. (2021), our microstructural results provide some support for face and body networks being weakly integrated at early stages of processing, insofar as the link between OFA–FFA tract microstructure and body posture perception indicates some processing of body posture within face pathways, with face and body processing becoming more integrated downstream of FFA, particularly towards ATL.

More generally, our findings speak to the organisation of face processing in the visual system by supporting revised face perception models (Duchaine & Yovel, 2015; Grill‐Spector et al., 2018). While older models argued for separate pathways for facial identity and facial expression (Bruce & Young, 1986; Haxby et al., 2000), with FFA and STS thought to be specific to identity and expression processing, respectively, our results support more recent findings suggesting that FFA plays a role in the processing of facial expression (Bernstein & Yovel, 2015). Specifically, we show that the microstructure of tracts linking OFA to FFA is related to the development of facial expression discrimination ability, whereas none of the STS‐related tracts shows this association. It is worth highlighting that our analyses were restricted to core face and body processing regions, as we were particularly interested in the integration of face and body signals, with ATL as a likely site of integration. However, brain areas beyond core face perception regions, such as the amygdala, clearly play a critical role in emotion perception (Phelps & LeDoux, 2005). A deeper understanding of the relationship between facial expression perception and brain structure during development will therefore require future exploration of the wider face and body perception networks.

A key limitation of our study is that we focussed on only two emotional expressions: anger and disgust. The decision to focus on a limited number of emotions was partly based on feasibility constraints dictated by the use of psychophysical methods. We chose these two emotions for several reasons. First, disgust is one of the last emotions to be reliably recognised during development, maximising the likelihood of observing substantial developmental change across both our child and adolescent participants. Second, a key aim of our study was to examine emotional face and body signals as well as their integration. Previous research has shown a more robust effect of body context with anger and disgust than with other emotions (Aviezer et al., 2008), and we wanted to maximise the potential influence of body context in order to be sensitive to individual differences across development. Finally, disgust and anger are associated with clearly identifiable body postures, whereas emotions such as sadness and happiness are less easily conveyed through a simple posture (Lopez et al., 2017). While the restriction to these two emotions is important to keep in mind, we see no reason to expect that the key principles identified here, namely that facial expression precision drives the influence of body posture, and the relationships with microstructure, would not also apply to other emotions.

In summary, we focussed on the well‐defined face and body networks to study the interplay between the development of brain structure and perception in children and adolescents. We show that facial expression perception has a protracted developmental trajectory, extending far into adolescence, with a similar trend for body posture perception. We find a similar developmental profile for the integration of face and body signals and demonstrate that the changing influence of body posture on facial expression perception can largely be explained by improvements in facial expression discrimination. We demonstrate that different microstructural characteristics of tracts within face and body pathways differentially relate to variability in facial expression discrimination in an age‐dependent or age‐independent manner. In particular, changes to intracellular signal‐dominated microstructure, for example the complexity of neuronal and glial processes, were predominantly associated with age‐dependent improvements in facial expression and body posture perception. By contrast, myelination measured by R1 and the more general, composite measure FA were also associated with age‐independent differences in perceptual performance. Overall, our results highlight the protracted development of facial expression perception and of context effects on facial expression perception, and point to a similar trajectory for body posture perception. Moreover, our results shed light on the constraints that white matter microstructure imposes on behaviour and highlight the value of complementary measures of microstructure for studying the links between brain structure, function, and perception.

FUNDING INFORMATION

ER was supported by the Marshall‐Sherfield Postdoctoral Fellowship during this work and is now supported by an NIH Fellowship (NICHD/1F32HD103313‐01). DKJ is supported by a Wellcome Trust Investigator Award (096646/Z/11/Z) and a Wellcome Trust Strategic Award (104943/Z/14/Z).

CONFLICT OF INTEREST

The authors declare no conflict of interest.

Supporting information

DATA S1 Supporting Information

ACKNOWLEDGEMENTS

The authors would like to thank John Evans, Slawomir Kusmia, Allison Cooper, and Sila Genc for their support with data acquisition. The authors would also like to thank the participants and their families for taking part in the study.

Ward, I. L., Raven, E. P., de la Rosa, S., Jones, D. K., Teufel, C., & von dem Hagen, E. (2023). White matter microstructure in face and body networks predicts facial expression and body posture perception across development. Human Brain Mapping, 44(6), 2307–2322. 10.1002/hbm.26211

Funding information: Marshall Aid Commemoration Commission; National Institute of Child Health and Human Development, Grant/Award Number: 1F32HD103313‐01; Wellcome Trust, Grant/Award Numbers: 096646/Z/11/Z, 104943/Z/14/Z

Contributor Information

Isobel L. Ward, Email: wardi@cardiff.ac.uk.

Elisabeth von dem Hagen, Email: vondemhagene@cardiff.ac.uk.

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

REFERENCES

- Abo Foul, Y., Eitan, R., & Aviezer, H. (2018). Perceiving emotionally incongruent cues from faces and bodies: Older adults get the whole picture. Psychology and Aging, 33(4), 660–666. 10.1037/pag0000255

- Afzali, M., Pieciak, T., Newman, S., Garyfallidis, E., Özarslan, E., Cheng, H., & Jones, D. K. (2021). The sensitivity of diffusion MRI to microstructural properties and experimental factors. Journal of Neuroscience Methods, 347, 108951. 10.1016/j.jneumeth.2020.108951

- Andersson, J. L. R., & Sotiropoulos, S. N. (2016). An integrated approach to correction for off‐resonance effects and subject movement in diffusion MR imaging. NeuroImage, 125, 1063–1078. 10.1016/j.neuroimage.2015.10.019

- Avants, B. B., Tustison, N. J., Song, G., Cook, P. A., Klein, A., & Gee, J. C. (2011). A reproducible evaluation of ANTs similarity metric performance in brain image registration. NeuroImage, 54(3), 2033–2044. 10.1016/j.neuroimage.2010.09.025

- Aviezer, H., Hassin, R. R., Ryan, J., Grady, C., Susskind, J., Anderson, A., Moscovitch, M., & Bentin, S. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science, 19(7), 724–732.

- Aviezer, H., Trope, Y., & Todorov, A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science, 338(6111), 1225–1229. 10.1126/science.1224313

- Bernstein, M., & Yovel, G. (2015). Two neural pathways of face processing: A critical evaluation of current models. Neuroscience & Biobehavioral Reviews, 55, 536–546. 10.1016/j.neubiorev.2015.06.010

- Bona, S., Cattaneo, Z., & Silvanto, J. (2015). The causal role of the occipital face area (OFA) and lateral occipital (LO) cortex in symmetry perception. The Journal of Neuroscience, 35(2), 731–738. 10.1523/JNEUROSCI.3733-14.2015

- Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10, 433–436.

- Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of Psychology, 77(3), 305–327. 10.1111/j.2044-8295.1986.tb02199.x

- Calder, A. J. (Ed.). (2011). The Oxford handbook of face perception. Oxford University Press.

- Cox, D. (2004). Contextually evoked object‐specific responses in human visual cortex. Science, 304(5667), 115–117. 10.1126/science.1093110

- Dalrymple, K. A., Visconti di Oleggio Castello, M., Elison, J. T., & Gobbini, M. I. (2017). Concurrent development of facial identity and expression discrimination. PLoS One, 12(6), e0179458. 10.1371/journal.pone.0179458

- de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nature Reviews Neuroscience, 7(3), 242–249. 10.1038/nrn1872

- Descoteaux, M., Deriche, R., Knösche, T. R., & Anwander, A. (2009). Deterministic and probabilistic tractography based on complex fibre orientation distributions. IEEE Transactions on Medical Imaging, 28(2), 269–286. 10.1109/TMI.2008.2004424

- Duchaine, B., & Yovel, G. (2015). A revised neural framework for face processing. Annual Review of Vision Science, 1(1), 393–416. 10.1146/annurev-vision-082114-035518

- Eggenschwiler, F., Kober, T., Magill, A. W., Gruetter, R., & Marques, J. P. (2012). SA2RAGE: A new sequence for fast B1+‐mapping. Magnetic Resonance in Medicine, 67, 1609–1619.

- Fedorov, L. A., Chang, D.‐S., Giese, M. A., Bülthoff, H. H., & de la Rosa, S. (2018). Adaptation aftereffects reveal representations for encoding of contingent social actions. Proceedings of the National Academy of Sciences of the United States of America, 115(29), 7515–7520. 10.1073/pnas.1801364115

- Fisher, C., & Freiwald, W. A. (2015). Whole‐agent selectivity within the macaque face‐processing system. Proceedings of the National Academy of Sciences of the United States of America, 112(47), 14717–14722. 10.1073/pnas.1512378112

- Gallichan, D., Marques, J. P., & Gruetter, R. (2016). Retrospective correction of involuntary microscopic head movement using highly accelerated fat image navigators (3D FatNavs) at 7T. Magnetic Resonance in Medicine, 75(3), 1030–1039. 10.1002/mrm.25670

- Gao, X., & Maurer, D. (2009). Influence of intensity on children's sensitivity to happy, sad, and fearful facial expressions. Journal of Experimental Child Psychology, 102(4), 503–521. 10.1016/j.jecp.2008.11.002

- Golarai, G., Ghahremani, D. G., Grill‐Spector, K., & Gabrieli, J. D. E. (2010). Evidence for maturation of the fusiform face area (FFA) in 7 to 16 year old children. Journal of Vision, 5(8), 634. 10.1167/5.8.634

- Golarai, G., Liberman, A., Yoon, J. M. D., & Grill‐Spector, K. (2009). Differential development of the ventral visual cortex extends through adolescence. Frontiers in Human Neuroscience, 3, 80. 10.3389/neuro.09.080.2009

- Gomez, J., Barnett, M. A., Natu, V., Mezer, A., Palomero‐Gallagher, N., Weiner, K. S., Amunts, K., Zilles, K., & Grill‐Spector, K. (2017). Microstructural proliferation in human cortex is coupled with the development of face processing. Science, 355(6320), 68–71. 10.1126/science.aag0311

- Gomez, J., Pestilli, F., Witthoft, N., Golarai, G., Liberman, A., Poltoratski, S., Yoon, J., & Grill‐Spector, K. (2015). Functionally defined white matter reveals segregated pathways in human ventral temporal cortex associated with category‐specific processing. Neuron, 85(1), 216–227. 10.1016/j.neuron.2014.12.027

- Grill‐Spector, K., Weiner, K. S., Gomez, J., Stigliani, A., & Natu, V. S. (2018). The functional neuroanatomy of face perception: From brain measurements to deep neural networks. Interface Focus, 8(4), 20180013. 10.1098/rsfs.2018.0013

- Gschwind, M., Pourtois, G., Schwartz, S., Van De Ville, D., & Vuilleumier, P. (2012). White‐matter connectivity between face‐responsive regions in the human brain. Cerebral Cortex, 22(7), 1564–1576. 10.1093/cercor/bhr226

- Harry, B. B., Umla‐Runge, K., Lawrence, A. D., Graham, K. S., & Downing, P. E. (2016). Evidence for integrated visual face and body representations in the anterior temporal lobes. Journal of Cognitive Neuroscience, 28(8), 1178–1193. 10.1162/jocn_a_00966

- Hassin, R. R., Aviezer, H., & Bentin, S. (2013). Inherently ambiguous: Facial expressions of emotions, in context. Emotion Review, 5(1), 60–65. 10.1177/1754073912451331

- Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–233. 10.1016/S1364-6613(00)01482-0

- Heck, A., Chroust, A., White, H., Jubran, R., & Bhatt, R. S. (2018). Development of body emotion perception in infancy: From discrimination to recognition. Infant Behavior and Development, 50, 42–51. 10.1016/j.infbeh.2017.10.007

- Herba, C. M., Landau, S., Russell, T., Ecker, C., & Phillips, M. L. (2006). The development of emotion‐processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry, 47(11), 1098–1106. 10.1111/j.1469-7610.2006.01652.x

- Hodgetts, C. J., Postans, M., Shine, J. P., Jones, D. K., Lawrence, A. D., & Graham, K. S. (2015). Dissociable roles of the inferior longitudinal fasciculus and fornix in face and place perception. eLife, 4, e07902. 10.7554/eLife.07902

- Jenkinson, M., Bannister, P., Brady, M., & Smith, S. (2002). Improved optimization for the robust and accurate linear registration and motion correction of brain images. NeuroImage, 17(2), 825–841. 10.1006/nimg.2002.1132

- Jenkinson, M., Beckmann, C. F., Behrens, T. E. J., Woolrich, M. W., & Smith, S. M. (2012). FSL. NeuroImage, 62(2), 782–790. 10.1016/j.neuroimage.2011.09.015

- Jenkinson, M., & Smith, S. (2001). A global optimisation method for robust affine registration of brain images. Medical Image Analysis, 5(2), 143–156. 10.1016/S1361-8415(01)00036-6

- Jensen, C. D., Duraccio, K. M., Carbine, K. M., & Kirwan, C. B. (2016). Topical review: Unique contributions of magnetic resonance imaging to pediatric psychology research. Journal of Pediatric Psychology, 41(2), 204–209. 10.1093/jpepsy/jsv065

- Jeurissen, B., Tournier, J.‐D., Dhollander, T., Connelly, A., & Sijbers, J. (2014). Multi‐tissue constrained spherical deconvolution for improved analysis of multi‐shell diffusion MRI data. NeuroImage, 103, 411–426. 10.1016/j.neuroimage.2014.07.061

- Jones, D. K., Knösche, T. R., & Turner, R. (2013). White matter integrity, fiber count, and other fallacies: The do's and don'ts of diffusion MRI. NeuroImage, 73, 239–254. 10.1016/j.neuroimage.2012.06.081

- Kaden, E., Kruggel, F., & Alexander, D. C. (2016). Quantitative mapping of the per‐axon diffusion coefficients in brain white matter. Magnetic Resonance in Medicine, 75(4), 1752–1763. 10.1002/mrm.25734

- Kellner, E., Dhital, B., Kiselev, V. G., & Reisert, M. (2016). Gibbs‐ringing artifact removal based on local subvoxel‐shifts. Magnetic Resonance in Medicine, 76(5), 1574–1581. 10.1002/mrm.26054

- Kingdom, F. A. A., & Prins, N. (2010). Psychophysics: A practical introduction (1st ed.). Elsevier.

- Kleiner, M., Brainard, D., & Pelli, D. (2007). What's new in Psychtoolbox‐3? Perception, 36, 1–16.

- Kontsevich, L. L., & Tyler, C. W. (1999). Bayesian adaptive estimation of psychometric slope and threshold. Vision Research, 39(16), 2729–2737. 10.1016/S0042-6989(98)00285-5

- Kumfor, F., Ibañez, A., Hutchings, R., Hazelton, J. L., Hodges, J. R., & Piguet, O. (2018). Beyond the face: How context modulates emotion processing in frontotemporal dementia subtypes. Brain, 141(4), 1172–1185. 10.1093/brain/awy002

- Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., & van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition & Emotion, 24(8), 1377–1388. 10.1080/02699930903485076

- Lebel, C., & Beaulieu, C. (2011). Longitudinal development of human brain wiring continues from childhood into adulthood. Journal of Neuroscience, 31(30), 10937–10947. 10.1523/JNEUROSCI.5302-10.2011

- Lebel, C., Treit, S., & Beaulieu, C. (2019). A review of diffusion MRI of typical white matter development from early childhood to young adulthood. NMR in Biomedicine, 32(4), e3778. 10.1002/nbm.3778

- Leitzke, B. T., & Pollak, S. D. (2016). Developmental changes in the primacy of facial cues for emotion recognition. Developmental Psychology, 52(4), 572–581. 10.1037/a0040067

- Lopez, L. D., Reschke, P. J., Knothe, J. M., & Walle, E. A. (2017). Postural communication of emotion: Perception of distinct poses of five discrete emotions. Frontiers in Psychology, 8, 710. 10.3389/fpsyg.2017.00710

- Lundqvist, D., Flykt, A., & Öhman, A. (1998). The Karolinska Directed Emotional Faces. Department of Clinical Neuroscience, Psychology section, Karolinska Institutet.