Abstract

Background:

We performed a multistep quality improvement project related to neuromuscular blockade and monitoring to evaluate the effectiveness of a comprehensive quality improvement program based upon the Multi-institutional Perioperative Outcomes Group (MPOG) Anesthesiology Performance Improvement and Reporting Exchange (ASPIRE) metrics targeted specifically at improving train of four (TOF) monitoring rates.

Methods:

We adapted the plan-do-study-act (PDSA) framework and implemented 2 PDSA cycles between January 2021 and December 2021. PDSA Cycle 1 (Phase I) and PDSA Cycle 2 (Phase II) included a multipart program consisting of (1) a departmental survey assessing attitudes toward intended results, outcomes, and barriers for TOF monitoring, (2) personalized MPOG ASPIRE quality performance reports displaying provider performance, (3) access to a dashboard to help providers complete a case-by-case review, and (4) a spaced-education module, delivered through a web-based app, concerning TOF monitoring and residual neuromuscular blockade. Our primary outcome was to identify the facilitators of and barriers to implementation of our intervention aimed at increasing TOF monitoring.

Results:

In Phase I, 25 anesthesia providers participated in the preintervention and postintervention needs assessment surveys and received personalized quality metric reports. In Phase II, 222 providers participated in the preintervention needs assessment survey and 201 participated in the postintervention survey. Thematic analysis of Phase I survey data revealed that intended results were centered on quality of patient care, barriers to implementation largely encompassed issues with technology/equipment and the increased burden placed on providers, and important outcomes were focused on patient outcomes and improving provider knowledge. Results of the Phase II survey were similar to those of Phase I. Notably, in Phase II, respondents mentioned a few additional barriers to implementation, including fear of loss of individualization due to standardization of the patient care plan, differences between the attending overseeing the case and the in-room provider who is making decisions/completing documentation, and the frequency of intraoperative handovers. Compared with preintervention, postintervention compliance with TOF monitoring increased from 42% to 70% (28% absolute difference across N = 10 169 cases; P < .001).

Conclusions:

Implementation of a structured quality improvement program using a novel educational intervention was associated with improvements in process metrics regarding neuromuscular monitoring and gave us a better understanding of how best to implement improvements in this metric at this scale.

Keywords: Neuromuscular monitoring, plan-do-study-act, quality improvement

Introduction

Train of four (TOF) monitoring plays an important role in assessing the depth of neuromuscular blockade (NMB), in guiding appropriate dosing of neuromuscular blocking agents and their antagonists, and in assessing the adequacy of NMB reversal prior to extubation.1,2 Appropriate use of TOF monitoring helps prevent residual neuromuscular blockade (RNMB), which can lead to significant respiratory complications, including hypoxemia, airway obstruction, and need for reintubation, as well as an increased risk of prolonged postanesthesia care unit length of stay and critical care admission.3–5 Neuromuscular monitoring (NMM) by TOF ratio, a quantitative measure of NMB, along with appropriate dosing of neuromuscular reversal, has been shown to reduce the incidence of RNMB and its associated complications when compared with clinical tests and qualitative measures.1,6–8

However, despite the large number of publications on this topic, including numerous consensus guidelines recommending qualitative TOF monitoring as a minimum requirement to guide and assess the adequacy of reversal,9–11 routine application of evidence-concordant NMM remains low, which represents a significant practice gap in the dissemination and implementation of published research. There is also concern that the introduction of sugammadex may be associated with reduced use of evidence-based NMM, yet the rate of RNMB after sugammadex administration can be as high as 9.4%.12 Therefore, based on current evidence, the optimal approach to reducing RNMB is either graduated dosing of the reversal agent when qualitative monitoring (ie, a peripheral nerve stimulator) is used or quantitative NMM with a goal TOF ratio of at least 0.9 in all cases in which an NMB agent is used, regardless of which reversal agent is given to the patient.10
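The quantitative goal above can be made concrete. The TOF ratio is conventionally the amplitude of the fourth twitch divided by that of the first (T4/T1). The minimal sketch below, with hypothetical function names, computes that ratio and checks it against the 0.9 goal; it illustrates the arithmetic only and is not a clinical decision tool or a description of any monitor's software.

```python
def tof_ratio(t1_amplitude: float, t4_amplitude: float) -> float:
    """Return the train-of-four ratio T4/T1 from two twitch amplitudes."""
    if t1_amplitude <= 0:
        raise ValueError("T1 amplitude must be positive to form a ratio")
    return t4_amplitude / t1_amplitude


def meets_recovery_goal(ratio: float, threshold: float = 0.9) -> bool:
    """True when the measured TOF ratio reaches the goal of at least 0.9."""
    return ratio >= threshold


# Illustrative twitch amplitudes in arbitrary units, not patient data.
print(meets_recovery_goal(tof_ratio(100.0, 93.0)))
print(meets_recovery_goal(tof_ratio(100.0, 70.0)))
```

A ratio is used rather than a raw T4 amplitude because it is independent of the absolute twitch height, which varies with electrode placement and stimulus.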

In light of this evidence, and with a concern that our own practices were not in line with current recommendations, we undertook a multistep quality improvement (QI) project to evaluate and address our current performance in relation to NMM in our department. We designed and tested the use of a novel framework that included the Anesthesiology Performance Improvement and Reporting Exchange (ASPIRE) QI program, a targeted learning module, and a series of surveys to identify facilitators and barriers to department-wide implementation. The aim of this study was to improve our understanding of the facilitators and barriers to increasing the evidence-based use of TOF monitoring in routine clinical practice at our institution.

Materials and Methods

Study Setup

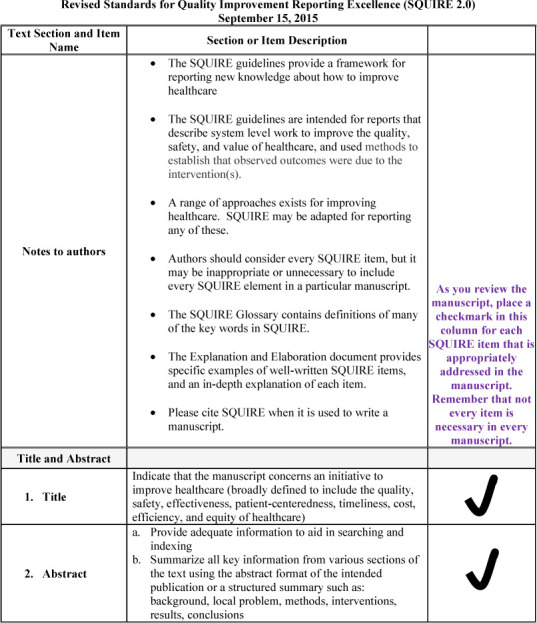

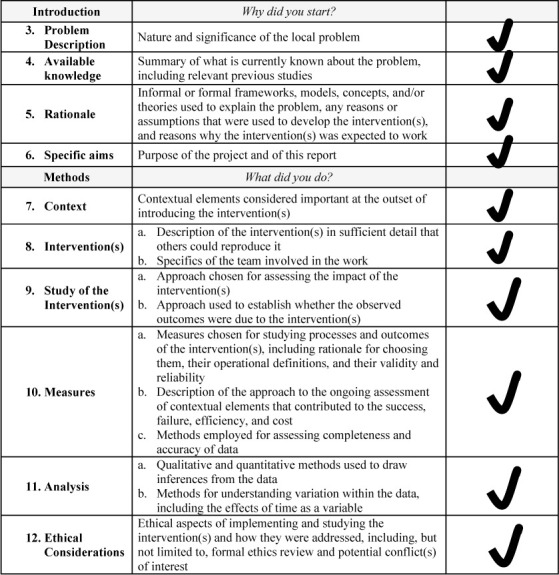

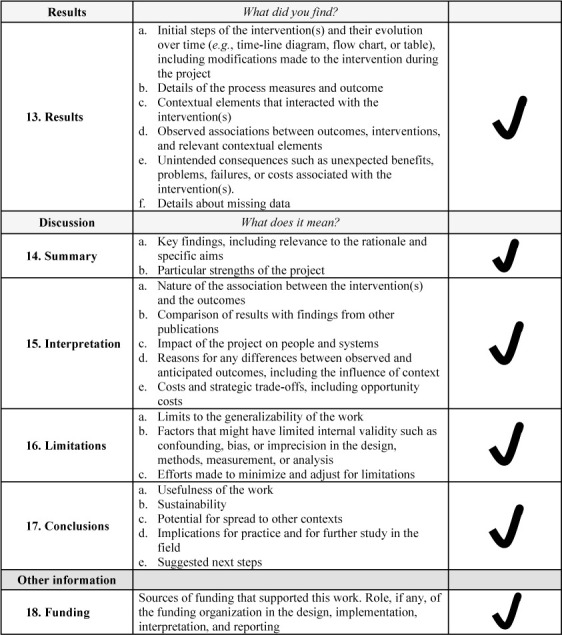

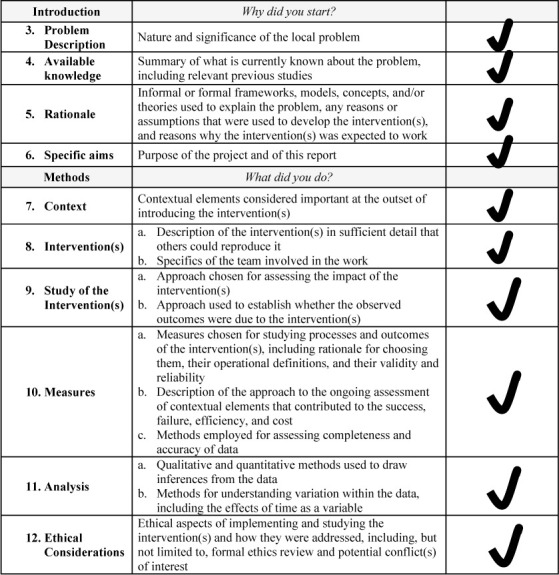

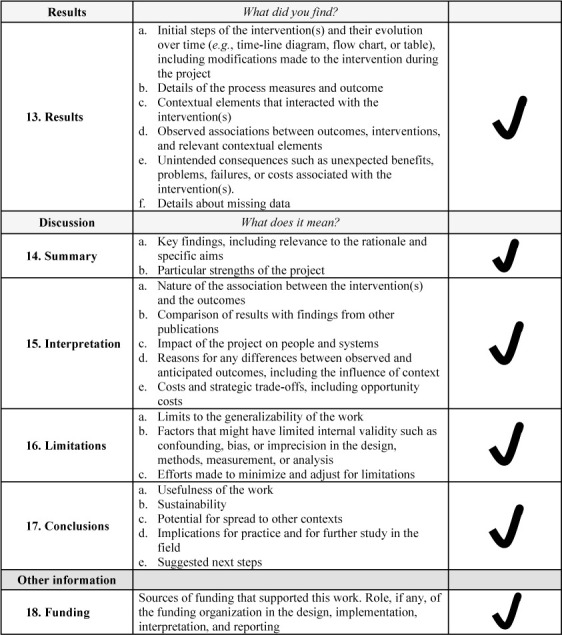

This quasi-experimental QI study was conducted at Vanderbilt University Medical Center and approved by the Vanderbilt University Institutional Review Board (IRB 210530; Nashville, Tennessee, USA). The requirement for informed consent was waived. This report adheres to the applicable Standards for Quality Improvement Reporting Excellence (SQUIRE) 2.0 reporting guidelines.13 See Supplemental Online Material, Supplemental Figure 1, for a completed SQUIRE 2.0 checklist. Before initiating this study, we reviewed data from the institutional electronic health record, which indicated that our department's NMM practices were concordant with published guidelines only 42% of the time. As a next step, we applied the structured, multistep process for QI initiatives that our department had defined, which follows best practices of a learning health care system.14 For this study, we chose the topic of NMB monitoring and its associated Multi-institutional Perioperative Outcomes Group (MPOG) ASPIRE program metric (NMB-01). Our institutional performance on NMB reversal, as measured by its associated MPOG ASPIRE metric (NMB-02), was adequate; thus, this study did not focus on identifying facilitators of and barriers to improving that metric. The departmental anesthesia providers included in the QI program were attending physicians, residents, and certified registered nurse anesthetists (CRNAs).15

Supplemental Figure 1.

SQUIRE checklist.

MPOG ASPIRE QI Program

Participating in the MPOG ASPIRE program provides departmental and provider-level quality performance reports (QPRs) that allow providers to review and improve their performance on quality measures relevant to anesthesia practice.

The MPOG ASPIRE QI initiative has developed more than 30 measures to track both the processes and outcomes associated with procedures requiring anesthesia. Information on TOF monitoring of neuromuscular blockade (NMB-01), a process measure, is extracted from the anesthesia electronic health record and regularly uploaded to the MPOG ASPIRE QI Coordinating Center Database. NMB-01 measures the percentage of operative cases using a nondepolarizing neuromuscular blocker that have a TOF documented after the last dose of neuromuscular blocker and before the earliest extubation.16 Each case is classified under this definition as passed, flagged (measure failed), or excluded, and the resulting performance rates are made available through departmental-level and provider-level QPR applications and tools. Based on the definition of NMB-01 and information received from the MPOG Coordinating Center, compliance or noncompliance with the measure is attributed to all providers involved in the care of the patient. The performance target set by MPOG for this measure is ≥90% of included cases passing the metric.
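The classification and attribution rules above can be sketched as follows. This is a simplified, hypothetical model, not MPOG's implementation: the `Case` record, the time representation (minutes from anesthesia start), the strict "after"/"before" comparisons, and the exclusion rule (no nondepolarizing blocker, no recorded dose, or no recorded extubation) are assumptions, and MPOG's actual exclusion criteria are broader than shown.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

TARGET = 0.90  # MPOG performance target: at least 90% of included cases passing


@dataclass
class Case:
    """Hypothetical, simplified case record; times are minutes from anesthesia start."""
    providers: List[str]           # every provider involved in the anesthetic
    nondepolarizing_nmb: bool      # was a nondepolarizing blocker given?
    nmb_dose_times: List[float] = field(default_factory=list)
    tof_times: List[float] = field(default_factory=list)   # documented TOF entries
    extubation_time: Optional[float] = None                 # earliest extubation


def classify_nmb01(case: Case) -> str:
    """Return 'passed', 'flagged', or 'excluded' under simplified NMB-01 logic."""
    # Assumed exclusion rule; the real measure has additional exclusions.
    if not case.nondepolarizing_nmb or not case.nmb_dose_times or case.extubation_time is None:
        return "excluded"
    last_dose = max(case.nmb_dose_times)
    # Pass if any TOF is documented after the last dose and before extubation.
    if any(last_dose < t < case.extubation_time for t in case.tof_times):
        return "passed"
    return "flagged"


def provider_rates(cases: List[Case]) -> Dict[str, float]:
    """Attribute every included case to all of its providers; return pass rates."""
    passed: Dict[str, int] = {}
    included: Dict[str, int] = {}
    for case in cases:
        status = classify_nmb01(case)
        if status == "excluded":
            continue
        for provider in set(case.providers):
            included[provider] = included.get(provider, 0) + 1
            if status == "passed":
                passed[provider] = passed.get(provider, 0) + 1
    return {p: passed.get(p, 0) / n for p, n in included.items()}


cases = [
    Case(["attending_A", "crna_B"], True, [10.0, 95.0], [120.0], 130.0),  # TOF in window
    Case(["attending_A", "resident_C"], True, [15.0], [5.0], 80.0),       # TOF before last dose
    Case(["crna_B"], False),                                              # no blocker given
]
for provider, rate in sorted(provider_rates(cases).items()):
    print(provider, f"{rate:.0%}", "meets target" if rate >= TARGET else "below target")
```

Because each included case counts toward every provider on it, a single flagged case lowers the rate of the attending and the in-room provider alike, which is the shared-attribution behavior described above.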

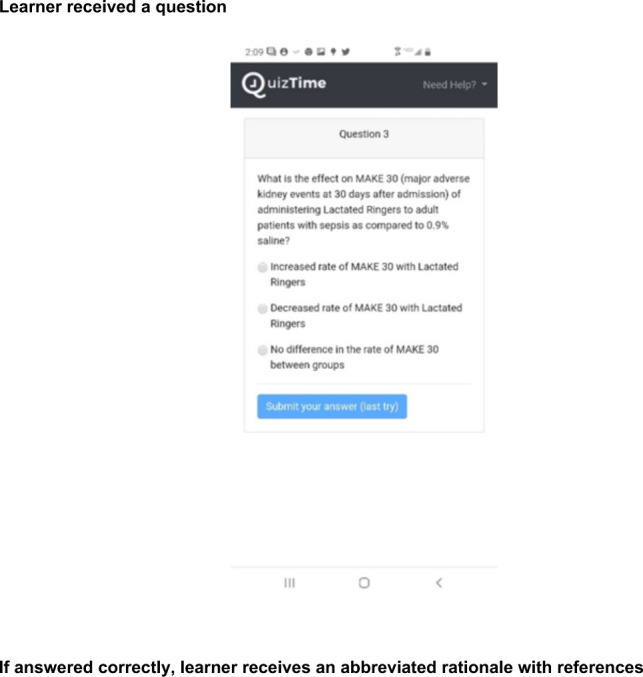

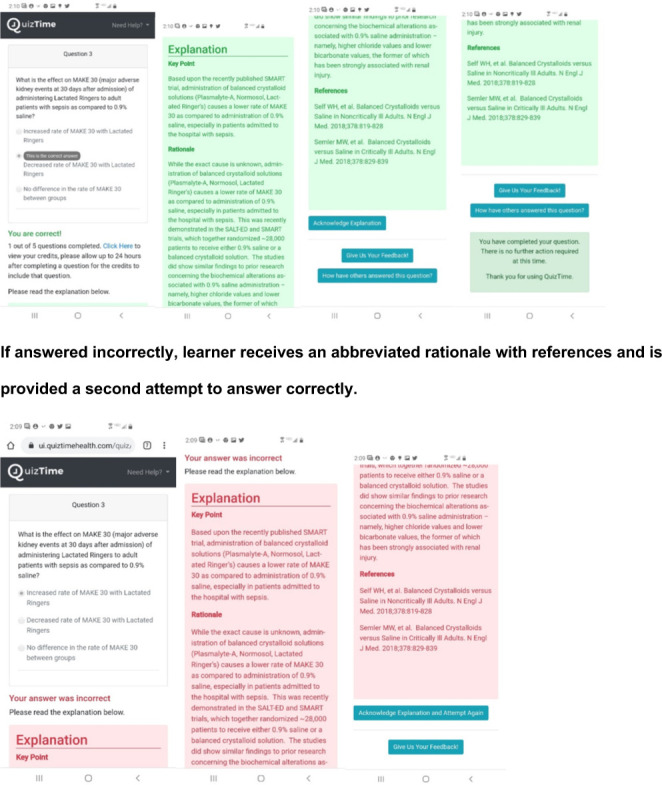

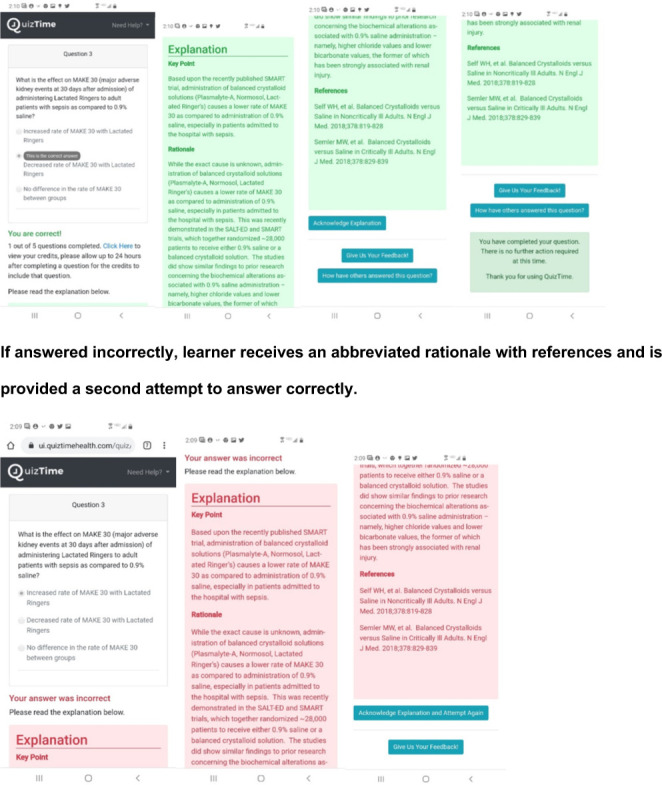

QuizTime: Webapp Educational Platform

QuizTime is a web-based quizzing application (webapp) developed in 2016 at Vanderbilt University Medical Center (Nashville, Tennessee).17 Online Supplemental Material, Supplemental Figure 2, illustrates QuizTime's learning experience design. QuizTime employs the evidence-based educational concepts of spaced learning, retrieval with feedback, and microlearning.17,18 The educational content for the MPOG ASPIRE NMB-01 QuizTime module was developed with the help of 8 anesthesia providers (residents, nurse anesthetists, and attending anesthesiologists) who were content experts in NMB agent use, NMM, and NMB agent reversal. We configured the QuizTime application for 3 quizzes and 3 populations: (1) a condensed pilot quiz of 20 questions, delivered twice each weekday for 2 weeks to 25 targeted learners for testing and feedback; (2) the study quiz, consisting of the same 20 questions delivered once each weekday over 4 weeks to a population of 400 providers; and (3) a subsequent refresher quiz of 5 questions delivered once a day for 1 week to the combined populations of the pilot and study quizzes. All 3 quizzes were configured in instructor-led mode, meaning all enrollees were placed into a quiz simultaneously so that all learners would begin and end within a specified period.
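The delivery cadence of the 3 quizzes can be sketched as a weekday schedule. This is not QuizTime's scheduling code; `weekday_schedule` is a hypothetical helper, and it assumes delivery on every Monday through Friday with no holiday skipping, which is consistent with the start and end dates reported for each phase in the Interventions section (April 12, May 10, and August 30, 2021).

```python
from datetime import date, timedelta
from typing import List, Tuple


def weekday_schedule(start: date, n_questions: int, per_day: int) -> List[Tuple[date, int]]:
    """Assign question numbers 1..n_questions to weekdays, per_day at a time."""
    schedule: List[Tuple[date, int]] = []
    day, q = start, 1
    while q <= n_questions:
        if day.weekday() < 5:  # Monday=0 ... Friday=4; weekends are skipped
            for _ in range(per_day):
                if q > n_questions:
                    break
                schedule.append((day, q))
                q += 1
        day += timedelta(days=1)
    return schedule


pilot = weekday_schedule(date(2021, 4, 12), 20, 2)     # Phase I pilot quiz
study = weekday_schedule(date(2021, 5, 10), 20, 1)     # Phase II study quiz
refresher = weekday_schedule(date(2021, 8, 30), 5, 1)  # Refresher QT
for name, sched in [("pilot", pilot), ("study", study), ("refresher", refresher)]:
    print(name, sched[0][0], "->", sched[-1][0], f"({len(sched)} questions)")
```

Doubling `per_day` in the pilot compresses the same 20 questions into 2 weeks instead of 4, which is how a condensed pilot can test the full question bank quickly.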

Supplemental Figure 2.

Learning experience design (LDX).

For each of the 3 quizzes, participants had 24 hours after delivery to answer a question. The question display showed a question stem and 4 possible answers, and participants selected an answer by choosing 1 of the unnumbered and unlettered radio buttons (see Online Supplemental Material, Supplemental Figure 2). After answering, participants saw a green background for a correct answer or a red background for an incorrect answer, together with the question's key point, rationale, and references. Learners who answered correctly on the first attempt were required to read and acknowledge this information to receive continuing medical education (CME) credit. Learners who answered incorrectly were offered an immediate second attempt after acknowledging that they had read the rationale and learner material accompanying the question; they had 24 hours to reattempt the question. After the second attempt, learners were required to reread and acknowledge the question's key point, rationale, and references (see Online Supplemental Material, Supplemental Figure 2).
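The two-attempt flow above can be summarized as a small function. This is a hypothetical sketch, not QuizTime's code: `question_outcome` and its labels are illustrative, `None` stands for a question left unanswered within the 24-hour window, and the acknowledgment steps are represented only in comments. The key property is that a second attempt exists only after an incorrect first answer, which matches the reported counts (99 and 1189 second attempts sent, equal to the incorrect first attempts in each phase).

```python
from typing import Optional

LABELS = {True: "correct", False: "incorrect", None: "unanswered"}


def question_outcome(first: Optional[bool], second: Optional[bool] = None) -> dict:
    """Summarize one delivered question under a two-attempt flow.

    True = answered correctly, False = answered incorrectly,
    None = not answered within the 24-hour window.
    """
    # A second attempt is offered only after an incorrect first answer
    # (and after the learner acknowledges the rationale and references).
    second_offered = first is False
    if not second_offered and second is not None:
        raise ValueError("a second attempt exists only after an incorrect first answer")
    return {
        "first": LABELS[first],
        "second": LABELS[second] if second_offered else "not offered",
        "second_offered": second_offered,
        "submitted": first is not None,  # any submission counts toward credit
    }


print(question_outcome(True))
print(question_outcome(False, True))
print(question_outcome(None))
```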

For the pilot quiz and the study quiz, each question that was submitted, regardless of correctness, counted toward the possibility of continuing education credit. If learners attempted at least 80% of the questions (16 of 20), they could claim 4 credits of either American Medical Association Physician’s Recognition Award (AMA PRA) Category 1 Credits (physicians) or Non-Physician Attendance credits (CRNAs) within Vanderbilt University Medical Center’s Cloud CME system. The subsequent refresher quiz did not offer the possibility of continuing education credit. Of note, no other educational intervention on NMB use/reversal and TOF monitoring was implemented during the study period and no other incentives or remediations took place regarding this metric over the course of the study period.
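The credit rule above reduces to a threshold check. In the sketch below, `credit_claim` and the role strings `"physician"` and `"crna"` are hypothetical; the 80% threshold, the 4-credit award, the credit types, and the absence of credit for the refresher quiz come from the text. Integer arithmetic is used so that exactly 16 of 20 counts as 80% without floating-point rounding concerns.

```python
from typing import Optional


def credit_claim(role: str, attempted: int, total: int = 20,
                 is_refresher: bool = False) -> Optional[str]:
    """Return the credit a learner could claim, or None if not eligible."""
    if is_refresher:
        return None  # the refresher quiz offered no continuing education credit
    # Require at least 80% of questions attempted (5 * attempted >= 4 * total).
    if 5 * attempted < 4 * total:
        return None
    if role == "physician":
        return "4 AMA PRA Category 1 Credits"
    if role == "crna":
        return "4 Non-Physician Attendance credits"
    return None  # no credit type is described for other roles


print(credit_claim("physician", 16))
print(credit_claim("crna", 15))
```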

Interventions

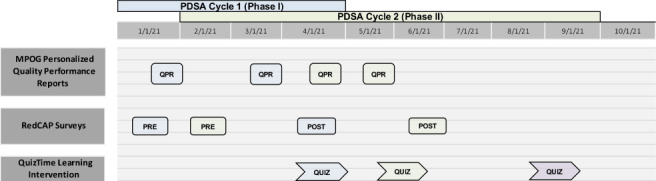

For the design of this QI study, we adapted the plan-do-study-act (PDSA) framework and implemented 2 PDSA cycles.19–21 The project timeline is depicted in Figure 1. We combined several pedagogical approaches, described below, to test their impact on improving TOF monitoring.

Figure 1.

Project timeline. This project timeline outlines plan-do-study-act (PDSA) Cycle I (Phase I) and PDSA Cycle II (Phase II) over the course of January 2021 to June 2021. Over the course of each cycle, 2 Multi-institutional Perioperative Outcomes Group (MPOG) personalized quality performance reports were sent to participants. A REDCap preintervention survey and a REDCap postintervention survey were sent to participants to complete during both Phase I and Phase II. Lastly, the month-long QuizTime Learning Intervention was implemented for participants in Phase I and Phase II, with an additional 5-question QuizTime Refresher set sent to Phase II participants in September 2021.

Before Phase I (January 29, 2021), we presented a departmental Grand Rounds on the importance and value of TOF monitoring and the relevant MPOG ASPIRE quality metrics. In Phase I, we then enrolled 25 anesthesia providers with a variety of clinical experience to participate in a PDSA cycle. The primary interventions in Phase I were conducted between January 29, 2021, and April 23, 2021, and included (1) familiarizing participants with the MPOG ASPIRE system by sending them MPOG personalized QPRs displaying their performance on NMB-01 compared with that of their peers, (2) access to the ASPIRE dashboard to help them complete a case-by-case review of flagged and excluded cases, and (3) a targeted educational module through QuizTime. The MPOG QPRs were sent via email in the fourth week of January and March 2021 to Phase I providers who had treated patients in the prior month. These participants were also given access to the MPOG ASPIRE dashboard, which allowed them to review each case along with an indication of whether it had been coded as passed, flagged (measure failed), or excluded for the NMB-01 measure. The targeted educational module in Phase I was the QuizTime pilot quiz outlined above.22 In this phase, 2 multiple-choice questions (MCQs) were sent to participants each weekday over a 2-week period (April 12–23, 2021).

In Phase II, a second PDSA cycle, we enrolled 400 anesthesia providers from the department who were recipients of the monthly QPR. These providers were given the opportunity to opt out of participating in QuizTime. Phase II began with a preintervention survey delivered via email on February 19, 2021. The survey was sent this early in Phase II to capture as many responses as possible from our large number of participating providers. The first MPOG QPR was then sent on April 28, 2021. Subsequently, from May 10 through June 4, 2021, a single MCQ was sent each weekday to each anesthesia provider in a similar manner (Figure 1). Because Phase II was followed by the start of a new academic year with a new set of anesthesia learners (residents and fellows) and providers, a shorter version of our original QuizTime module with 5 MCQs (termed Refresher QT) was administered from August 30, 2021, through September 3, 2021, to all anesthesia providers (Figure 1).

Outcomes

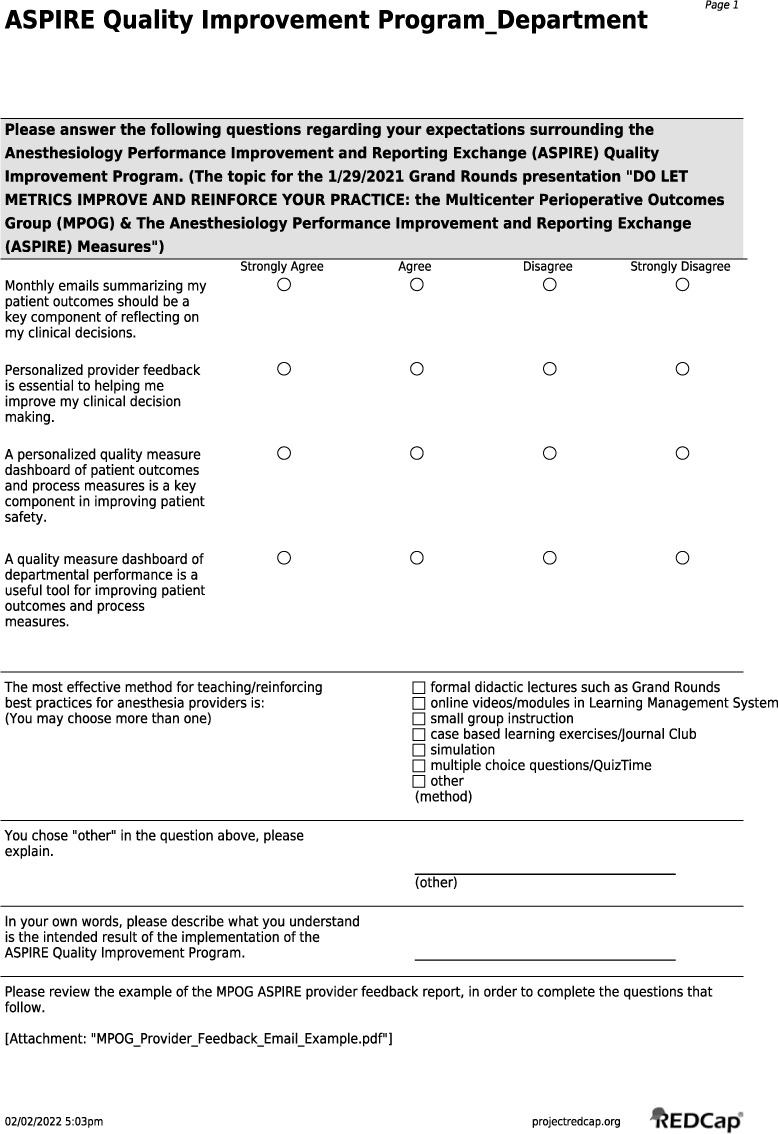

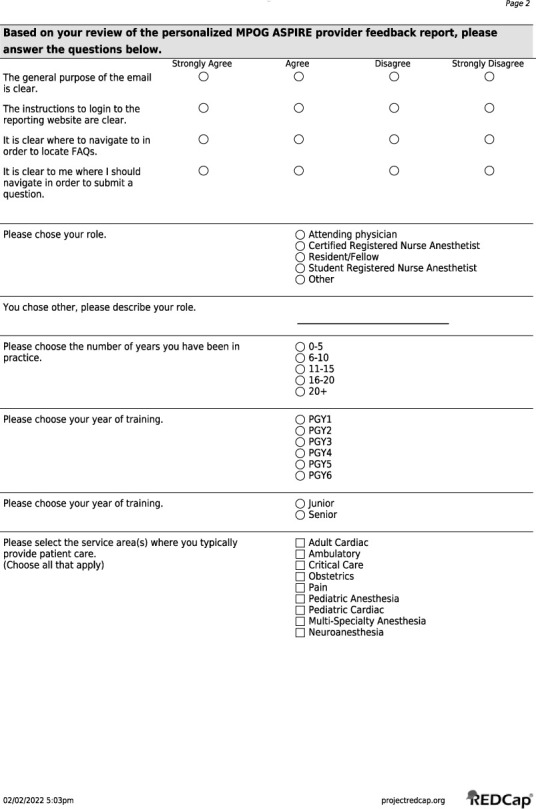

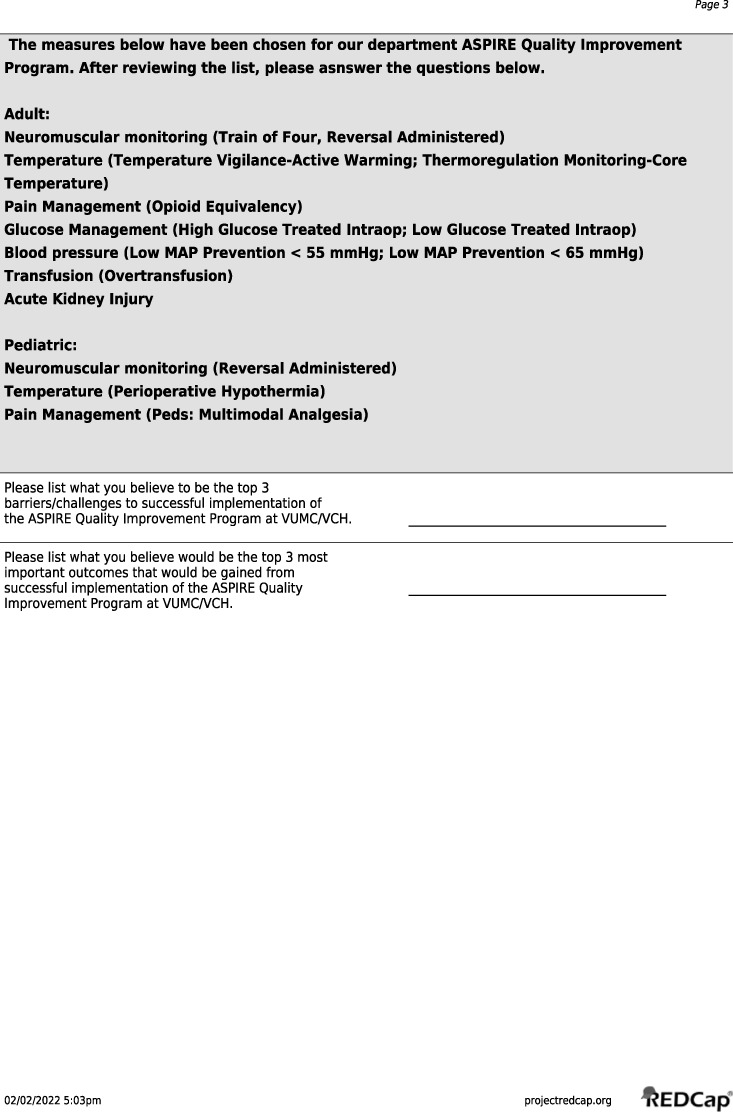

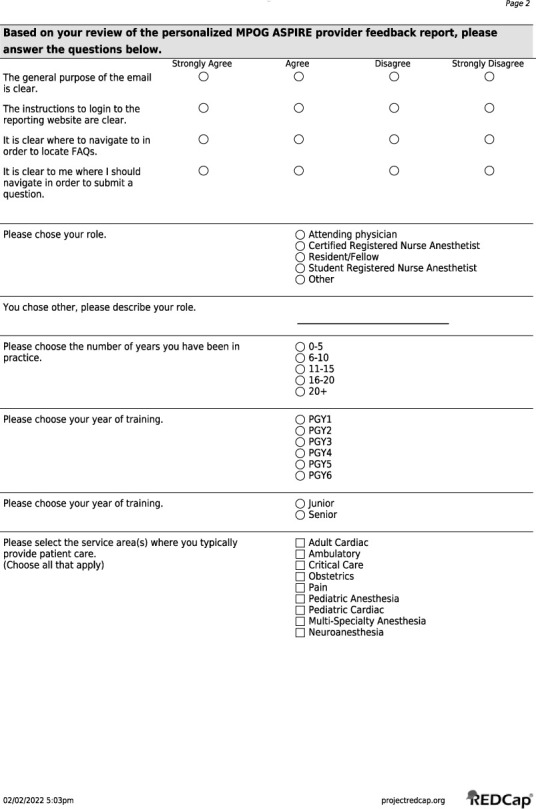

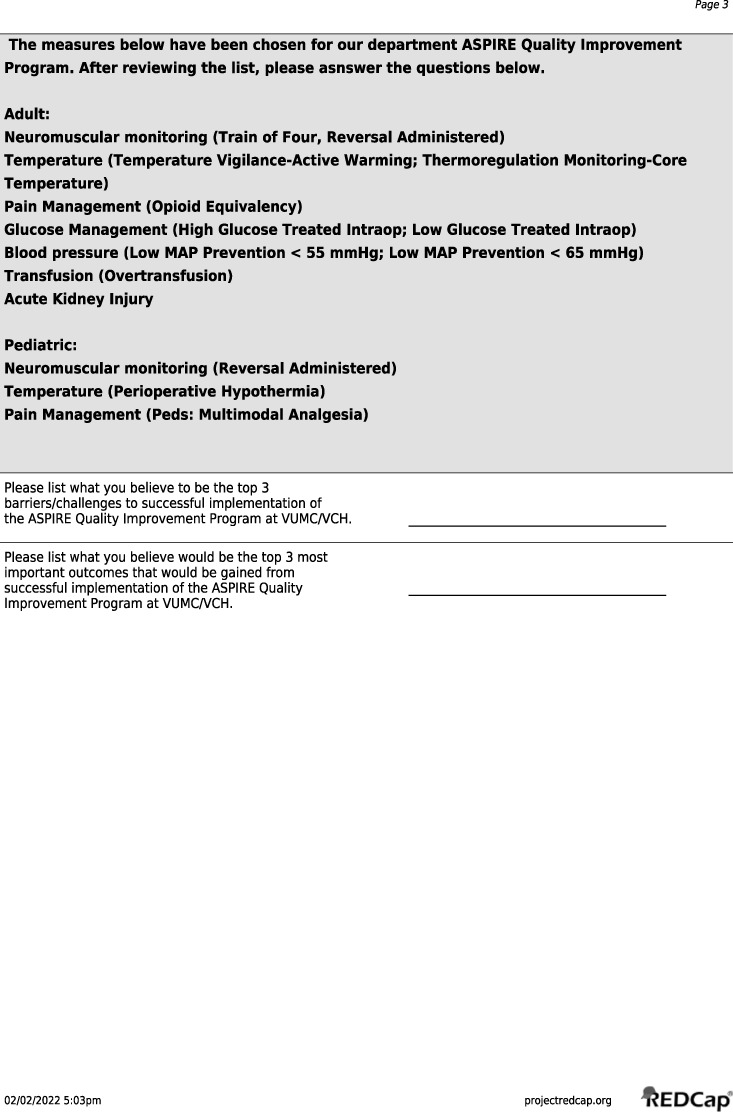

Our primary outcome was the identification of facilitators of and barriers to implementation of our intervention aimed at increasing TOF monitoring. Data for the primary outcome were gathered via REDCap survey (Vanderbilt University),23 an approach similar to that used in several other publications identifying facilitators and barriers.24–26 The survey was distributed both before and after the initial set of interventions. It included a set of Likert-scale questions on a 4-point scale about perceptions of the ASPIRE QI system, as well as open-ended questions designed to capture providers' perceived barriers and what they believed were the intended results and outcomes of the study (Online Supplemental Material, Supplemental Figure 3). Our secondary outcome was the proportion of patient cases that passed the NMB-01 measure (a documented TOF after the last dose of neuromuscular blocker and before the earliest extubation) before and after implementation of Phase I and Phase II of the PDSA cycle framework.

Supplemental Figure 3.

REDCap Preintervention Survey and Postintervention Survey.

Qualitative Analysis

Data from the Phase I and Phase II REDCap surveys were analyzed by inductive thematic analysis following the steps outlined by Braun and Clarke.27 Briefly, these steps are becoming familiar with the data, systematically generating initial codes based on the most salient features of the data, identifying themes among the codes, reviewing the themes, defining and naming the themes, and finally, reporting the findings.27 Survey responses were systematically coded for features relevant to the question being asked. These codes were then collated into overarching themes, which were named clearly and concisely.
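Coding is interpretive work done by the analysts, so it cannot be automated; only the mechanical step of collating codes into themes and tallying them can be sketched. In the example below, the codebook, code phrases, and respondents are all hypothetical and are not the study's data. It shows that one respondent can contribute to several themes, so theme mentions need not equal the number of respondents.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical codebook entries, for illustration only (not the study's codebook).
CODE_TO_THEME: Dict[str, str] = {
    "monitor not available": "Technology/equipment",
    "monitor unreliable": "Technology/equipment",
    "extra documentation": "Burden on providers",
    "more steps at emergence": "Burden on providers",
    "fewer complications": "Quality of patient care",
}


def tally_themes(coded: Dict[str, List[str]]) -> Counter:
    """Count respondents per theme; each respondent counts once per theme."""
    counts: Counter = Counter()
    for codes in coded.values():
        counts.update({CODE_TO_THEME[c] for c in codes})  # set: dedupe within respondent
    return counts


coded_responses = {  # hypothetical respondents and their assigned codes
    "r1": ["monitor not available", "extra documentation"],
    "r2": ["monitor unreliable", "monitor not available"],
    "r3": ["fewer complications", "more steps at emergence"],
}
tally = tally_themes(coded_responses)
print(tally.most_common())
print(sum(tally.values()), "theme mentions from", len(coded_responses), "respondents")
```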

Statistical Analysis

The proportion of patient cases that passed the NMB-01 measure before and after implementation of Phase I and Phase II interventions was determined and compared using a 2-sample proportion test. All statistical tests were 2-tailed, and statistical significance was set at P ≤ .05. All analyses were performed using SPSS statistical software version 28.0 (IBM SPSS for Macintosh, version 28.0, IBM Corp., Armonk, New York).
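The analyses were run in SPSS; the Python sketch below is not the authors' code but a standard pooled 2-sample z-test for proportions, assumed to correspond to the test described. SPSS options such as pooled versus unpooled standard error or continuity correction are not stated, so results from other tools may differ slightly. The two-sided P value uses `math.erfc(|z|/√2)`, which equals 2(1 − Φ(|z|)).

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float, float]:
    """Pooled two-sample z-test for H0: p1 == p2; returns (p2 - p1, z, two-sided P)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p2 - p1, z, p_value


# Baseline vs end of Phase I, using the case counts reported in the Results.
diff, z, p = two_proportion_z_test(984, 2335, 1457, 2618)
print(f"difference = {diff:.3f}, z = {z:.2f}, P = {p:.2g}")
```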

Results

Phase 1 (PDSA Cycle 1)

This phase began with an anonymous REDCap preintervention survey, followed by the QuizTime learning module and MPOG QPR, and ended with the REDCap postintervention survey (Figure 1). All 25 providers in Phase I completed the preintervention survey. The level of training and years of practice of these 25 anesthesia providers are shown in Table 1.

Table 1.

Phase I and Phase II Demographics

| Level of Training | n (%) | Years in Practice/PGY |
|---|---|---|
| Phase I (n = 25) | | |
| Attending Anesthesiologist | 17 (68.0) | 0–5 years: 4; 6–10 years: 6; 11–15 years: 3; 16–20 years: 4; 20+ years: 4 |
| Certified Registered Nurse Anesthetist | 4 (16.0) | |
| Resident/Fellow Anesthesiologist | 4 (16.0) | PGY4: 4 |
| Phase II – Presurvey (n = 222) | | |
| Attending Anesthesiologist | 87 (39.2) | 0–5 years: 54; 6–10 years: 32; 11–15 years: 38; 16–20 years: 8; 20+ years: 36 |
| Certified Registered Nurse Anesthetist | 81 (36.5) | |
| Resident/Fellow Anesthesiologist | 50 (22.5) | PGY6: 1; PGY5: 9; PGY4: 11; PGY3: 17; PGY2: 11; PGY1: 1 |
| Nonclinical Faculty | 4 (1.8) | |
| Phase II – Postsurvey (n = 201) | | |
| Attending Anesthesiologist | 73 (36.3) | 0–5 years: 51; 6–10 years: 29; 11–15 years: 35; 16–20 years: 11; 20+ years: 29 |
| Certified Registered Nurse Anesthetist | 82 (40.8) | |
| Resident/Fellow Anesthesiologist | 43 (21.4) | PGY6: 0; PGY5: 12; PGY4: 14; PGY3: 11; PGY2: 6; PGY1: 0 |
| Nonclinical Faculty | 3 (1.5) | |

Abbreviation: PGY, postgraduate year. Years in practice are reported for attending anesthesiologists and CRNAs combined.

Thematic analysis of preintervention survey data from Phase I of anesthesia provider attitudes regarding the intended results, barriers to implementation, and important outcomes of implementation of this QI project (Table 2) indicated that intended results were centered on quality of patient care, barriers to implementation largely encompassed issues with technology/equipment and the increased burden placed on providers, and important outcomes were focused on patient outcomes and improving provider knowledge.

Table 2.

Survey Data Results a

| Intended Results | Barriers to Implementation | Intended Outcomes |
|---|---|---|
| Phase I: Preintervention Survey and Postintervention Survey Responses Based on Feedback Given by 25 Providers | | |
| Phase II: Preintervention Survey Responses Based on Feedback Given by 222 Providers | | |
| Phase II: Postintervention Survey Responses Based on Feedback Given by 201 Providers | | |

Because our approach was inductive (no existing framework was presented to respondents), themes emerged from similar responses to open-ended questions, and a single respondent could contribute to multiple themes. Therefore, the number of respondents was not equal to the number of themes produced.

All 25 anesthesia providers participated in the process of testing the learning activity provided through the QuizTime application. The delivery method (ie, text, email), quality, and functionality in addition to the content itself were tested. All participants were able to provide immediate feedback to the QuizTime office on how to improve the quality and content of the questions in the learning module. Additionally, attending anesthesiologists were eligible to claim 4 AMA PRA Category 1 Credits, and CRNAs were eligible to claim 4 Non-Physician Attendance credits within the Cloud CME system for attempting 16 or more questions.

In Phase I, the pilot quiz had a 96% active learning population, meaning all but 1 of the 25 enrollees submitted an answer to at least 1 question. At the QuizTime activity level, learners answered questions on average within 3 hours of receiving them (specifically, 2 hours and 53 minutes). Of the 500 first-attempt question instances delivered, 234 were answered correctly, 99 were answered incorrectly, and 166 were never answered. Of the 99 second-attempt questions sent, 58 were answered correctly, 7 were answered incorrectly, and 34 were never answered. Of the 24 active learners, 45.8% answered 16 or more quiz questions, making 11 learners eligible to claim continuing education credit.

All 25 providers completed the postintervention survey. Thematic analyses of preintervention and postintervention survey data from Phase I resulted in similar themes, as depicted in Table 2.

Phase 2 (PDSA Cycle 2)

As in Phase I, Phase II began with an anonymous REDCap preintervention survey followed by the QuizTime module and MPOG QPR, and then a REDCap postintervention survey. Results from the additional 5-question QuizTime module are also reported in this section. Of the 400 anesthesia providers enrolled in Phase II, we were only able to determine level of training and years in practice for the providers that completed the preintervention survey (n = 222) and postintervention survey (n = 201), shown in Table 1.

Thematic analysis of preintervention survey data from Phase II (n = 222) is summarized in Table 2. The themes from the Phase II preintervention survey differed from those of the Phase I preintervention survey for the questions on intended results of the project and barriers to implementation. Improved knowledge was emphasized as an intended result across the department. Additional potential barriers to fully implementing best practice for NMM included fear of loss of individualization due to standardization of the patient care plan, differences between the attending overseeing the case and the in-room provider who is making decisions/completing documentation, and the frequency of intraoperative handovers.

During Phase II, 400 anesthesia providers were enrolled in the QuizTime learning module. As in Phase I, attending anesthesiologist and CRNA participants were eligible to receive CME or Non-Physician Attendance credits, respectively, for opening and answering 16 or more of the 20 questions. In the Phase II quiz, 73% (n = 292) of enrollees attempted at least 1 question and 27% (n = 108) never attempted a question. On average, learners answered questions within about 4.5 hours of delivery (specifically, 4 hours and 34 minutes). Of the 8000 first-attempt question items delivered in Phase II, 2489 (31%) were answered correctly, 1189 (15%) were answered incorrectly, and 4322 (54%) were never answered. Of the 1189 second-attempt questions sent, 740 (62%) were answered correctly, 106 (9%) were answered incorrectly, and 343 (29%) were never answered. Of the 292 active learners, 45.21% answered 16 or more quiz questions, making 132 learners eligible to claim continuing education credit.
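The participation figures above can be recomputed from the reported counts as a consistency check. The helper `breakdown` is hypothetical; the sketch uses only counts stated in this paragraph and confirms that 400 enrollees × 20 questions gives the 8000 first-attempt items and that the second-attempt total equals the number of incorrect first attempts.

```python
def breakdown(correct: int, incorrect: int, unanswered: int) -> dict:
    """Total items and whole-number percentages for one round of attempts."""
    total = correct + incorrect + unanswered
    return {
        "total": total,
        "correct_pct": round(100 * correct / total),
        "incorrect_pct": round(100 * incorrect / total),
        "unanswered_pct": round(100 * unanswered / total),
    }


enrolled, active, credit_eligible, questions = 400, 292, 132, 20
first = breakdown(2489, 1189, 4322)  # first-attempt items
second = breakdown(740, 106, 343)    # second attempts, one per incorrect first answer

print(first)
print(second)
print(f"active learners: {active / enrolled:.0%}")
print(f"credit-eligible among active learners: {100 * credit_eligible / active:.2f}%")
```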

Thematic analysis of postintervention survey data from Phase II (n = 201) is summarized in Table 2. As with the preintervention surveys, the themes from the Phase II (department) postintervention survey differed from those of the Phase I (pilot) postintervention survey for the questions on intended results and barriers to implementation. As in the Phase II preintervention survey, improved knowledge received increased emphasis as an intended result. Unlike both the Phase I postintervention survey and the Phase II preintervention survey, respondents also emphasized increased awareness and identification of areas for improvement as intended results of these interventions. As in the Phase II preintervention survey, respondents noted differences between the attending overseeing the case and the in-room provider who is actually making decisions/completing documentation as a barrier to implementation. Unlike both the Phase I postintervention survey and the Phase II preintervention survey, additional barriers noted after department-wide completion of the interventions included increasing expectations placed on providers and lack of applicability of the measures to the case.

The 5-question Refresher QuizTime series implemented at the end of Phase II was used to reinforce the education previously provided. Of the 191 providers in Phase II who answered any Refresher QT questions, 16.75% answered 1 question, 13.09% answered 2 questions, 16.75% answered 3 questions, 19.90% answered 4 questions, and 33.51% answered 5 questions (Table 3).

Table 3.

QuizTime Participation Across Phase I and Phase II, including the Refresher QuizTime

| Quiz | 15 or Fewer Questions (<80%) | 16 or More Questions (≥80%) |
|---|---|---|
| QuizTime Phase I (n = 25) | 54.17% | 45.83% |
| QuizTime Phase II (n = 400) | 54.79% | 45.21% |

| Quiz | 1 Question | 2 Questions | 3 Questions | 4 Questions | 5 Questions |
|---|---|---|---|---|---|
| Refresher QuizTime (n = 409) | 16.75% | 13.09% | 16.75% | 19.90% | 33.51% |

Percentages are of active learners, that is, enrollees who answered at least 1 question (24 in Phase I, 292 in Phase II, and 191 for the Refresher QuizTime).

Frequency of TOF Monitoring

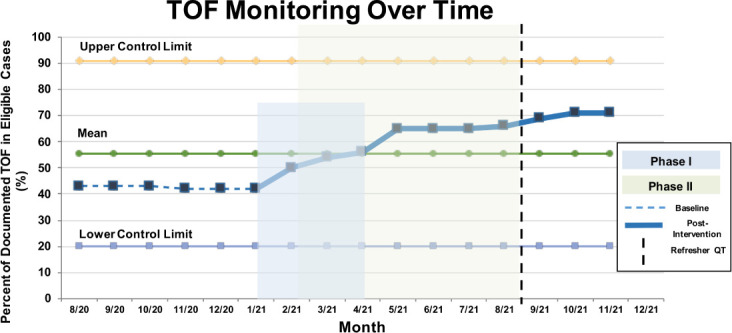

As seen in Figure 2 and based on the information presented in the MPOG ASPIRE dashboard, use of the ASPIRE QI Program framework in combination with a targeted learning module was associated with an improvement in TOF monitoring, with the performance rate increasing from 42% (984/2335) to 56% (1457/2618) of eligible patient cases after completion of Phase I (14% absolute difference; P < .001). The performance rate continued to improve over the course of Phase II, from 56% (1457/2618) to 65% (1663/2550) before implementation of the 5-question Refresher QuizTime series (9% absolute difference; P < .001). By December 2021, the performance rate had increased further, from 65% (1663/2550) to 70% (1853/2666) (5% absolute difference; P < .001) (Figure 2). Overall, evidence-concordant TOF monitoring increased from 42% to 70% over the course of the program (28% absolute difference; P < .001).
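The period-by-period rates can be recomputed from the reported numerators and denominators. The sketch below uses only those counts; the period labels are descriptive names chosen here. It also confirms that the 4 periods together contain the 10 169 cases cited in the Abstract.

```python
# (label, cases passing NMB-01, eligible cases), as reported in the Results
periods = [
    ("Baseline", 984, 2335),
    ("After Phase I", 1457, 2618),
    ("Before Refresher QT", 1663, 2550),
    ("December 2021", 1853, 2666),
]
period_rates = [(label, passed / n) for label, passed, n in periods]

# Compare each period with the one before it.
for (label, rate), (_, previous) in zip(period_rates[1:], period_rates[:-1]):
    change = 100 * (rate - previous)
    print(f"{label}: {rate:.1%} ({change:+.1f} percentage points)")

overall_change = period_rates[-1][1] - period_rates[0][1]
total_cases = sum(n for _, _, n in periods)
print(f"Overall: {100 * overall_change:+.1f} points across {total_cases} cases")
```

Differences computed from unrounded rates (about +13.5, +9.6, and +4.3 percentage points for the 3 steps) can differ slightly from differences between rounded percentages.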

Figure 2.

Train of Four (TOF) monitoring rate over time. TOF monitoring rate increased from baseline (shown as the horizontal dashed line) over the course of Phase I (blue box) and Phase II (green box). The vertical dashed line depicts the time at which the Refresher QuizTime was implemented. The solid line depicts postintervention improvement in TOF monitoring, which continued to increase after Phase II was completed.

Discussion

Lack of evidence-based TOF monitoring can increase the risk for RNMB and postoperative respiratory complications even in patients reversed with sugammadex.12 A gap analysis and needs assessment performed at our institution found that TOF monitoring was evidence-concordant only 42% of the time. Therefore, we sought to identify methods for improving TOF monitoring through a structured QI process. Our major finding is that this structured QI program was associated with a significant increase in the delivery of guideline-concordant patient care across a large anesthesia practice at a quaternary medical center.

To place our findings within prior research in this domain, the studies of Todd et al and Weigel et al are particularly relevant. Similar to our study, Todd et al found that iterative departmental PDSA cycles that included feedback to the faculty led to an improvement in evidence-based care and patient safety.28 They concluded that their educational and QI initiatives, which spanned a 2-year period, resulted in a significant increase in TOF monitoring and a reduction in the incidence of RNMB in the postanesthesia care unit. Our study builds upon theirs in 2 ways. First, it shows that 10 years after their publication, routine care regarding NMM and reversal still needs to be improved. Second, their study included observations from roughly 400 patients over a 2-year period, whereas our study included information on clinician behaviors from over 10 000 patients over a shorter period. We describe QI processes that can be used at scale to improve NMM through the steps of a learning health care system across a large academic practice.14 These included leveraging the MPOG ASPIRE system and the QuizTime webapp, approaches that could be employed regardless of practice size because of their automated nature. More recently, Weigel et al implemented a single-institution professional practice change initiative that used many interventions, including monitoring equipment trials, educational videos, placement of quantitative monitors in all anesthetizing locations, electronic clinical decision support with real-time alerts, and an ongoing professional practice metric, all with the goal of attaining documented TOF ratios greater than or equal to 0.9.29 The combined effect of implementing these interventions was a decrease in postanesthesia care unit length of stay, postoperative pulmonary complications, and hospital length of stay. Similarly, our study combined multiple interventions, although its primary aim focused on gathering feedback from our providers on these interventions rather than directly assessing short-term and long-term outcomes.

An additional publication from Todd et al describing 2 cases with severe postoperative pulmonary complications found that failures in NMM likely led to the untoward outcomes.30 This is in line with the report from Fuchs-Buder et al related to the POPULAR study: having recommendations in place is not enough; evidence-based guidelines for NMM and reversal must actually be followed to improve patient safety and reduce harm.2 These observations highlight the risks of continued failure by some providers to use the available technology and to change long-held and dangerous beliefs that such monitoring is unnecessary. Our findings are consistent with this: even after significant improvement in compliance with the ASPIRE NMB-01 measure, we reached only approximately 70% compliance, short of the MPOG target of ≥90%. These studies and our own findings highlight ongoing challenges in overcoming barriers and creating true organizational learning, and these QI and educational efforts will need to continue until all patients receive evidence-based care.

There are several limitations to our study. First, we were not able to determine which of the interventions contributed most to the observed increase in TOF monitoring. That is, we did not test multiple pedagogical approaches against one another and therefore cannot know at this time whether traditional approaches (ie, grand rounds presentations), the QuizTime modules, or the combination thereof are needed to realize these changes. In addition, because this was a single-institution study, we did not have a continuous control group.

Second, our results may be subject to the Hawthorne effect.31 Providers were notified in advance of the QI program implementation, its purpose, and the ensuing interventions. Third, some of the observed improvement in TOF monitoring may reflect improved documentation of the process measure rather than a change in actual clinical performance; providers may have understood the importance of monitoring TOF without grasping the importance of documenting it. Finally, although we observed significant performance improvement over time, we do not know the educational dose (ie, number of MCQs), frequency of feedback, or frequency and dose of refresher training needed to optimize uptake and change practice.

Future studies will investigate factors associated with sustained adherence to MPOG ASPIRE process metrics for NMM after implementation of department-wide QI programs, determine the optimal methods of delivering workplace education with QuizTime, and identify best practices for soliciting engagement and promoting buy-in from a majority of our providers. Follow-up studies will also need to assess the impact of these interventions on the rate of postoperative respiratory complications.

In conclusion, our study showed an association between the implementation of a structured QI program using a novel educational intervention and improvements in process metrics regarding NMM. However, perceived barriers to implementation persisted despite our interventions, and these barriers identify the primary areas on which future QI efforts should focus.

Acknowledgments

We would like to acknowledge the Multicenter Perioperative Outcomes Group (MPOG) for its ongoing support of our participation in the MPOG ASPIRE QI Program, although the current project did not receive external support from the MPOG ASPIRE QI Program.

Funding Statement

Funding: This manuscript was supported in part by NHLBI K23HL148640 (PI: Freundlich) from the National Institutes of Health (NIH). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Footnotes

Previous Presentations: Preliminary results from this study were previously presented at the 2021 American Society of Anesthesiologists Annual Meeting.

Supplemental Online Material

Supplemental Figure 1. SQUIRE checklist.

Supplemental Figure 2. Learning experience design (LDX).

Supplemental Figure 3. REDCap Preintervention Survey and Postintervention Survey.

References

- 1. Carvalho H, Verdonck M, Cools W, et al. Forty years of neuromuscular monitoring and postoperative residual curarisation: a meta-analysis and evaluation of confidence in network meta-analysis. Br J Anaesth. 2020;125(4):466–82. doi: 10.1016/j.bja.2020.05.063.

- 2. Fuchs-Buder T, Brull SJ. Is less really more? A critical appraisal of a POPULAR study reanalysis. Br J Anaesth. 2020;124(1):12–4. doi: 10.1016/j.bja.2019.09.038.

- 3. Grabitz SD, Rajaratnam N, Chhagani K, et al. The effects of postoperative residual neuromuscular blockade on hospital costs and intensive care unit admission: a population-based cohort study. Anesth Analg. 2019;128(6):1129–36. doi: 10.1213/ANE.0000000000004028.

- 4. Murphy GS, Brull SJ. Residual neuromuscular block: lessons unlearned. Part I: definitions, incidence, and adverse physiologic effects of residual neuromuscular block. Anesth Analg. 2010;111(1):120–8. doi: 10.1213/ANE.0b013e3181da832d.

- 5. Cammu G. Residual neuromuscular blockade and postoperative pulmonary complications: what does the recent evidence demonstrate. Curr Anesthesiol Rep. 2020;10(2):131–6. doi: 10.1007/s40140-020-00388-4.

- 6. Lien CA, Kopman AF. Current recommendations for monitoring depth of neuromuscular blockade. Curr Opin Anaesthesiol. 2014;27(6):616–22. doi: 10.1097/ACO.0000000000000132.

- 7. Brull SJ, Murphy GS. Residual neuromuscular block: lessons unlearned. Part II: methods to reduce the risk of residual weakness. Anesth Analg. 2010;111(1):129–40. doi: 10.1213/ANE.0b013e3181da8312.

- 8. Murphy GS, Szokol JW, Avram MJ, et al. Intraoperative acceleromyography monitoring reduces symptoms of muscle weakness and improves quality of recovery in the early postoperative period. Anesthesiology. 2011;115(5):946–54. doi: 10.1097/ALN.0b013e3182342840.

- 9. Plaud B, Baillard C, Bourgain JL, et al. Guidelines on muscle relaxants and reversal in anaesthesia. Anaesth Crit Care Pain Med. 2020;39(1):125–42. doi: 10.1016/j.accpm.2020.01.005.

- 10. Naguib M, Brull SJ, Kopman AF, et al. Consensus statement on perioperative use of neuromuscular monitoring. Anesth Analg. 2018;127(1):71–80. doi: 10.1213/ANE.0000000000002670.

- 11. Klein AA, Meek T, Allcock E, et al. Recommendations for standards of monitoring during anaesthesia and recovery 2021: guideline from the Association of Anaesthetists. Anaesthesia. 2021;76(9):1212–23. doi: 10.1111/anae.15501.

- 12. Kotake Y, Ochiai R, Suzuki T, et al. Reversal with sugammadex in the absence of monitoring did not preclude residual neuromuscular block. Anesth Analg. 2013;117(2):345–51. doi: 10.1213/ANE.0b013e3182999672.

- 13. Ogrinc G, Davies L, Goodman D, et al. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Qual Saf. 2016;25(12):986–92. doi: 10.1136/bmjqs-2015-004411.

- 14. McEvoy MD, Dear ML, Buie R, et al. Embedding learning in a learning health care system to improve clinical practice. Acad Med. 2021;96(9):1311–4. doi: 10.1097/ACM.0000000000003969.

- 15. Colquhoun DA, Shanks AM, Kapeles SR, et al. Considerations for integration of perioperative electronic health records across institutions for research and quality improvement: the approach taken by the Multicenter Perioperative Outcomes Group. Anesth Analg. 2020;130(5):1133. doi: 10.1213/ANE.0000000000004489.

- 16. Shah N, Bailey M. ASPIRE featured measure: NMB 01. Midwest J Anesth Qual Saf. 2019;1(1).

- 17. Magarik M, Fowler LC, Robertson A, et al. There’s an app for that: a case study on the impact of spaced education on ordering CT examinations. J Am Coll Radiol. 2019;16(3):360–4. doi: 10.1016/j.jacr.2018.10.024.

- 18. Triana AJ, White-Dzuro CG, Siktberg J, Fowler BD, Miller B. Quiz-based microlearning at scale: a rapid educational response to COVID-19. Med Sci Educ. 2021;31(6):1–3. doi: 10.1007/s40670-021-01406-8.

- 19. Varkey P, Reller MK, Resar RK. Basics of quality improvement in health care. Mayo Clin Proc. 2007;82(6):735–9. doi: 10.4065/82.6.735.

- 20. Langley GJ, Moen RD, Nolan KM, et al. The improvement guide: a practical approach to enhancing organizational performance. San Francisco, CA: John Wiley & Sons; 2009.

- 21. Christoff P. Running PDSA cycles. Curr Probl Pediatr Adolesc Health Care. 2018;48(8):198–201. doi: 10.1016/j.cppeds.2018.08.006.

- 22. McEvoy MD, Fowler LC, Robertson A, et al. Comparison of two learning modalities on continuing medical education consumption and knowledge acquisition: a pilot randomized controlled trial. J Educ Perioper Med. 2021;23(3):E668. doi: 10.46374/volxxiii_issue3_mcevoy.

- 23. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

- 24. McArthur C, Bai Y, Hewston P, et al. Barriers and facilitators to implementing evidence-based guidelines in long-term care: a qualitative evidence synthesis. Implement Sci. 2021;16(1):70. doi: 10.1186/s13012-021-01140-0.

- 25. Hanbury A, Farley K, Thompson C, Wilson P, Chambers D. Challenges in identifying barriers to adoption in a theory-based implementation study: lessons for future implementation studies. BMC Health Serv Res. 2012;12(1):422. doi: 10.1186/1472-6963-12-422.

- 26. Safdar N, Abbo LM, Knobloch MJ, Seo SK. Research methods in healthcare epidemiology: survey and qualitative research. Infect Control Hosp Epidemiol. 2016;37(11):1272–7. doi: 10.1017/ice.2016.171.

- 27. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101.

- 28. Todd MM, Hindman BJ, King BJ. The implementation of quantitative electromyographic neuromuscular monitoring in an academic anesthesia department. Anesth Analg. 2014;119(2):323–31. doi: 10.1213/ANE.0000000000000261.

- 29. Weigel WA, Williams BL, Hanson NA, et al. Quantitative neuromuscular monitoring in clinical practice: a professional practice change initiative. Anesthesiology. 2022;136(6):901–15. doi: 10.1097/ALN.0000000000004174.

- 30. Todd MM, Hindman BJ. The implementation of quantitative electromyographic neuromuscular monitoring in an academic anesthesia department: follow-up observations. Anesth Analg. 2015;121(3):836–8. doi: 10.1213/ANE.0000000000000760.

- 31. Demetriou C, Hu L, Smith TO, Hing CB. Hawthorne effect on surgical studies. ANZ J Surg. 2019;89(12):1567–76. doi: 10.1111/ans.15475.
