Abstract

During the COVID-19-related school closures teachers and students were challenged to suddenly switch to digital teaching at a distance. In particular, the challenge was to organize high-quality teaching in which students stay on task. Familiarity with technology may have helped to master the situation. However, only few studies so far have examined the quality of digital distance teaching (e.g., cognitive activation) during school closures and its relation to students' learning (e.g., effort investment). Moreover, systematic research concerning the role of familiarity with technology-enhanced teaching and learning acquired during face-to-face teaching is yet lacking. In our study, we used data from 729 ninth graders to investigate how student-observed learning activities when using technology at a distance were related to students' effort in learning in two subjects (mathematics, German). In addition, we examined whether student-perceived cognitive activation mediated this relation. Finally, the sample provides the unique opportunity to examine the role of familiarity, as some of the classes had been randomly equipped with tablet computers one year before the school closures and thus had the opportunity to gain familiarity with using technology in the classroom. Results from structural equation models showed that student-observed learning activities were associated with students’ learning effort in both subjects. Student-perceived cognitive activation mediated this association. Familiarity with face-to-face technology-enhanced teaching gained before the COVID-19 pandemic appeared to be less important for high-quality digital distance teaching. Thus, infrastructural measures, such as equipping schools with digital devices so that teachers and students can familiarize themselves with technology, do not seem to be decisive for high-quality digital (distance) teaching—at least in the case of short-term change from face-to-face to digital distance teaching, as was necessary during the COVID-19 pandemic.

Keywords: Digital distance teaching, Familiarity with technology, Effort, Cognitive activation, ICAP

1. Introduction

During the COVID-19 pandemic, schools were closed worldwide as a measure to prevent the spread of infections. During school closures, the use of technology represented one of the few ways to implement teaching. Thus, teachers and students who were used to face-to-face teaching in the classroom were challenged to suddenly organize digital teaching and learning at a distance. Reports show that there was quite some variability in the learning opportunities with the technology offered to students at that time (e.g., Jaekel, Scheiter, & Göllner, 2021; Pelikan et al., 2021; Robert Bosch Stiftung, 2020; Steinmayr, Lazarides, Weidinger, & Christiansen, 2021; Vodafone Stiftung Deutschland gGmbH, 2020)—ranging from students being completely left to their own devices or receiving lectures via videoconference tools as passive listeners to students whose teachers did their best to involve them actively into learning using diverse methods and tools. Accordingly, it can be assumed that students were engaged in different learning activities with varying potentials for stimulating their effort in learning. Helping students stay on task by providing instruction that encourages them to be cognitively activated and to accomplish higher-order learning is seen as a core element of teaching quality (Bransford, Brown, & Cocking, 2000; see also the concept of cognitive activation, i.e., Praetorius, Klieme, Herbert, & Pinger, 2018). In this vein, cognitive activation has been shown to be an important prerequisite not only for cognitive but also for motivational aspects of student learning (such as their willingness to invest effort) in face-to-face teaching (Fauth, Decristan, Rieser, Klieme, & Büttner, 2014; Lazarides & Buchholz, 2019; Seidel & Shavelson, 2007). To date, only few studies have examined the quality of distance teaching during school closures (e.g., Jaekel et al., 2021; Steinmayr et al., 2021). However, these studies do not distinguish between digital or other forms of distance teaching. Also, they do not consider how technology (e.g., applications) was used to instruct learning activities that are related to higher-order learning processes and whether these relate to students’ perception of high-quality instruction. It remains thus an open question what student-observed learning activities in digital distance teaching relate to whether students perceived the instruction as being of high-quality (i.e., cognitively activating) and ultimately to their effort in learning, which presumably is particularly important in distance teaching and learning.

Overall, studies have shown learning losses related to distance learning during the COVID-19-related school closures (e.g., Betthäuser, Bach-Mortensen, & Engzell, 2023; Donnelly & Patrinos, 2021; Hammerstein, König, Dreisörner, & Frey, 2021; Schult, Mahler, Fauth, & Lindner, 2022; Zierer, 2021); however, due to a lack of data, the role of cognitive activation in this development is unclear—especially with regard to the use of technology. Nevertheless, students' and teachers’ familiarity with technology has been suggested to mitigate this effect (Betthäuser et al., 2023; Hammerstein, König, Dreisörner, & Frey, 2021; Spitzer & Musslick, 2021). Although those studies use rather distal operationalizations of familiarity with technology (e.g., country-level variation in the frequency of teachers using technology for teaching three years prior to the pandemic), they consistently base their operationalizations on the assumption that access to and the use of technology in schools are considered important prerequisites for the acquisition of familiarity in technology-enhanced teaching.

Given that schools in Germany, compared to other countries, were not yet well equipped with digital devices or did not have the necessary technical infrastructure at the time of the school closures (Eickelmann, Bos, & Labusch, 2019; Huber & Helm, 2020), it can be assumed that on average familiarity with technology for teaching and learning was rather low and the preconditions for high-quality digital distance teaching and learning were particularly challenging (Huber & Helm, 2020). However, there are exceptions to this overall trend, as prior to the pandemic, there had been various initiatives to equip designated schools with technology such as tablet computers. The data set used in the present study comes from such an initiative and offers a unique opportunity to examine, under controlled conditions, whether the familiarity of using technology acquired prior to the COVID-19 pandemic was conducive for high-quality digital distance teaching. Participating classes had received or not received tablet computers in 2019 so that some teachers and students did have the opportunity to familiarize themselves with their use during face-to-face teaching in the months preceding the school closures.

The aim of our study was to investigate what constitutes high-quality digital distance teaching and the role that familiarity with technology plays in that context. Using students' self-reports, we analyzed (a) whether student-observed learning activities while working with different technologies were associated with students' willingness to engage in learning and to invest effort in learning, (b) whether these associations were mediated by the students' perception of cognitive activation during digital distance teaching, and (c) how the instructed learning activities, perceived cognitive activation, and students' effort were affected by teachers' and students’ familiarity with technology acquired during previous face-to-face teaching.

2. Theoretical background

2.1. Students’ effort in learning

A high level of effort in learning is demonstrated by learners who are willing to do their best, work diligently and perseveringly, do well on a task, improve their understanding, and acquire sustained knowledge (Fredricks, 2015; Fredricks, Blumenfeld, & Paris, 2004; Fredricks & McColskey, 2012; Trautwein et al., 2015; Wang & Eccles, 2012; Wong & Liem, 2021). More precisely, following Fütterer, Scheiter, Cheng, and Stürmer (2022), students’ effort in learning encompasses academic effort (i.e., willingness to work hard and persist at challenging tasks; e.g., Trautwein et al., 2015) and cognitive engagement (i.e., willingness to invest cognitive resources to gain a deeper understanding of the learning content; e.g., Fredricks, 2015). Academic effort and cognitive engagement are separable constructs but are closely related (Fütterer et al., 2022).

Whereas many studies have examined the impact of COVID-19-related school closures on student achievement, some studies focused on students' motivational orientations (e.g., Steinmayr et al., 2021; Zaccoletti et al., 2020). This is surprising for two reasons: First, from face-to-face teaching it is well known that students' effort is an important aspect in learning, as it is positively related to achievement in an academic context; that is, the more effort students invest into their learning, the better the learning outcome (Finn & Zimmer, 2012; Fredricks, 2015; Marsh et al., 2016; Reeve & Tseng, 2011; Trautwein, Lüdtke, Roberts, Schnyder, & Niggli, 2009, 2015). Second, students' motivational orientations such as staying engaged in learning and willing to invest effort in learning are especially important for effective distance teaching and learning. This is because in distance teaching students are more challenged to regulate their motivation during learning due to the greater flexibility (e.g., scheduling) in organizing learning processes (see Pelikan et al., 2021). For instance, in a retrospective pretest–posttest, Zaccoletti et al. (2020) found that parents reported a decrease in students' academic motivation during distance teaching and learning during the school closures in spring 2020. Furthermore, for the same period, Steinmayr et al., 2021 found school engagement (e.g., “My child always takes an effort to do her/his homework well”) as one of two variables that explained most variance in students' academic outcomes. Lastly, the US-American National Standards for Quality Online Teaching (NSQ, 2019) highlights students’ engagement in learning activities as one of eight standard categories to improve digital education.

2.2. Student-observed learning activities and students’ effort in learning

Students' effortful learning can be promoted by encouraging them to perform specific learning activities that are presumably connected to higher student engagement. Learning activities are observable learning behaviors like making notes or discussing with a peer that students perform while learning. In line with this, specific teaching activities were shown to be related to students' outcomes (e.g., motivation, learning progress) in distance teaching during the school closures (Steinmayr et al., 2021). One approach to describe learning activities during technology-enhanced teaching draws on the Interactive Constructive Active Passive (ICAP) hypothesis (Chi & Wylie, 2014; for its application to technology-enhanced teaching see Sailer, Murböck, & Fischer, 2021; Sailer, Stadler, et al., 2021; Stegmann, 2020). The ICAP hypothesis specifically links learning activities (i.e., observable learning behaviors) to learning processes of students and the engagement exhibited therein (i.e., non-observable learning processes). “The ICAP hypothesis predicts that as students become more engaged with the learning materials, from passive to active to constructive to interactive, their learning will increase” (Chi & Wylie, 2014, p. 219). Passive learning activities involve merely receiving information without doing anything else (e.g., listening in a lecture). Active learning activities involve some sort of action or manipulation of the information to-be-learned (e.g., taking notes in a lecture). Constructive learning activities involve generating or producing output that adds to the information provided in the learning materials (e.g., comparing or contrasting information with prior knowledge). Interactive learning activities involve dialoguing and turn-taking with a partner, whereby both partners need to primarily show constructive learning activities (e.g., asking and answering comprehension questions with a peer, a teacher, or computer agent). Several assumptions underlie the ICAP hypothesis. First, observable learning activities (e.g., listening, discussing) are linked to underlying cognitive learning processes. This is in line with the idea that “mental processes […] can be inferred from behavioral indices” postulated in cognitive psychology (Shuell, 1986, p. 414). Second, it is assumed that regarding students' effort in learning, interactive activities are better than constructive, which are better than active, which are better than passive (Chi, 2009). Therefore, it is assumed that, for instance, constructive—compared with merely active—learning activities are associated with higher levels of students’ effort in learning. Third, higher levels subsume lower levels of learning activities. This means, for example, that interactive learning activities are active at the same time (Chi, 2009). The ICAP hypothesis has been shown to be viable not only in laboratory studies but also in classroom settings (Chi & Wylie, 2014). Most importantly, it is assumed to be valid for both analogous as well as technology-enhanced teaching (Wekerle, Daumiller, & Kollar, 2020).

2.3. Student-perceived cognitive activation

Cognitive activation is a key dimension of teaching quality (Klieme, Pauli, & Reusser, 2009; Praetorius et al., 2018) and commonly used in the tradition of teaching quality research (e.g., Brophy, 2000; Klieme et al., 2009; Kunter et al., 2013; Seidel & Shavelson, 2007). Cognitive activation refers to the degree to which a teacher's instruction has the potential to promote students' active engagement and higher-order thinking. In this vein, it is considered a requirement for students to acquire knowledge (Praetorius et al., 2018). Students' cognitive activation is not observable (deep-structure of classroom learning) but can be stimulated by observable teacher instructions (surface-structure of classroom learning; Klieme et al., 2009), like asking open-ended questions, providing opportunities for problem-solving and critical thinking, and promoting discussions and debates. In general, it is assumed that learning environments that encourage students to think, solve problems, make decisions, select, or integrate knowledge, and thus to develop an elaborated, content-related knowledge base are more likely cognitively activating (Baumert et al., 2010; Brophy, 2000; Klieme et al., 2009; Lipowsky et al., 2009). The ICAP hypothesis—that draws on observable learning activities—has already been used to operationalize higher-order learning processes (e.g., Sailer, Murböck, & Fischer, 2021; Wekerle et al., 2020). Although the observable learning activities of the ICAP framework cannot be equated with higher-order learning activities, these learning activities are assumed to be associated with varying degrees of potential cognitive activation, which then again presumably provokes higher-order learning (Chi & Wylie, 2014).

The potential of technology to promote such valuable cognitive processes in learning is also emphasized in discussions about integrating technology into the classroom (Ainley, Enger, & Searle, 2008; Blumenfeld, Kempler, & Krajcik, 2006; Lai, 2008; Mayer, 2019; Zhu & Urhahne, 2018). A first study in the field investigated the relations between cognitively activating technology integration and students’ effort in face-to-face classroom teaching in a longitudinal design (Fütterer et al., 2022). In math classes, the frequency of technology use was unrelated to the development of effort; rather, it was the quality of technology integration—operationalized as to whether students perceived the technology-enhanced teaching as cognitively activating—that made a difference; that is, students who perceived high cognitive activation when learning with technology were better able to sustain their effort over time. However, this study researched technology-enhanced teaching in face-to-face classrooms. Thus, it is yet an open question whether these findings generalize to digital distance teaching during the COVID-19 pandemic.

In general, it has been discussed that stimulating learners to actively and deeply engage with learning content in terms of cognitive activation poses a significant challenge for distance teaching (Klieme, 2020; Voss & Wittwer, 2020). However, the importance of cognitive activation in (digital) distance teaching has also been emphasized. For instance, findings from a study focusing on teaching quality in digital distance teaching during school closures show that the use of certain technology (e.g., teacher-generated learning videos) relates positively to students' perception of teaching quality (e.g., cognitive activation) as well as to their effort in learning (Jaekel et al., 2021). However, how the technology was used (e.g., the instructed learning activities conducted with a specific technology) was not investigated.

2.4. Familiarity with technology

Familiarity with technology refers to the level of knowledge and experience that students and teachers have with various forms of technology, such as tablet computers or educational software. It includes the ability to use these tools for academic purposes and understanding how to integrate them into instruction and learning. Familiarity with technology is likely related to the digital infrastructure of schools, as students and teachers can only gain experience with and knowledge of technology for academic purposes if the technology is available for teaching. Available digital infrastructure and the accompanying familiarity of teachers and students with technology-enhanced teaching were suggested as important aspects for students’ academic achievement in digital distance teaching during COVID-19-related school closures (Betthäuser et al., 2023; Hammerstein et al., 2021). In a review of studies on academic achievement during school closures, lower learning loss was attributed to the fact that students in such studies had experience using the technology in question prior to the school closures (Hammerstein et al., 2021). It should be noted, however, that these studies focused on experience and performance regarding specific online programs (e.g., learning programs) but not on the students' experience with technology-enhanced teaching or academic achievement in general. Regarding the experience of technology-enhanced teaching in general, a meta-analysis has shown that learning loss due to school closures is lower in countries that generally have a well-developed digital infrastructure for learning (Betthäuser et al., 2023). In line with this, a representative survey of teachers in Germany showed that schools that had already successfully implemented such technology before the first school closures were able to organize more effective digital distance teaching and were more reliably in contact with their students (Vodafone Stiftung Deutschland gGmbH, 2020). Thus, digitization of schools that comes together with more familiarity with technology-enhanced teaching seems to be an important prerequisite for effective distance teaching.

In Germany, however, the digital infrastructure of schools lags behind other countries. While in 2018, the ratio of students to digital devices in the U.S., for example, was 1.6 to 1; in Germany nearly 10 students shared a single device (9.7–1; Eickelmann et al., 2019; Fraillon, Ainley, Schulz, Friedman, & Duckworth, 2020). A comparison of Germany, Austria, and Switzerland from the early days of school closures also showed that Germany had comparatively fewer resources and technical capacity available for digital distance teaching and learning (Huber & Helm, 2020). It is further reported that most teachers did not feel well prepared for distance teaching in Germany (cf. Steinmayr et al., 2021; Vodafone Stiftung Deutschland gGmbH, 2020).

Regarding teachers' familiarity, König, Jäger-Biela, and Glutsch, 2020 found that those teachers who were more familiar with technology or learning tools (e.g., tutorial programs) were at an advantage when mastering the challenges of digital distance teaching. On the one hand, this seems plausible because teachers can draw on familiar teaching practices from technology-enhanced, face-to-face instruction for implementing digital distance teaching lessons. It also appeared that teachers were more likely to use tools in digital distance teaching that they were already familiar with (Jaekel et al., 2021). On the other hand, teachers' professional competencies interplay with the situational demands of teaching (Blömeke, Gustafsson, & Shavelson, 2015), thus being highly contextualized and probably not easily transferable to new situations with unpredictable demands (e.g., using video conferencing tools). In line with this, findings of a study of 1719 mathematics teachers showed that subject-specific tools that presumably have the potential to initiate cognitively activating instruction (e.g., activating prior knowledge with audience response systems), even in digital distance teaching, were used less in digital distance teaching than in face-to-face instruction prior to the school closures (Drijvers et al., 2021). Students were also faced with a new learning environment that was crucially different from traditional classroom instruction (e.g., organizing learning materials mainly online, providing feedback remotely). Therefore, it can be questioned whether students' and teachers’ familiarity with face-to-face technology-enhanced teaching was helpful to orchestrate high-quality (i.e., cognitively activating) digital distance teaching given the vast differences between the two learning scenarios.

2.5. Research questions

The aim of this study was to investigate how student-observed learning activities related to students' effort in learning when teaching and learning was performed at a distance during the COVID-19-related school closures. Therefore, we aimed to gain insight into the relationship of student-observed learning activities while working with different digital tools and student-perceived cognitive activation—traditionally assessed in teaching quality research. Lastly, we aimed to investigate how these constructs (i.e., student-observed learning activities, student-perceived cognitive activation, and students' effort in learning) were affected by teachers' and students’ familiarity with technology acquired during previous face-to-face teaching. Therefore, we examined the following three research questions (RQ):

(RQ1) How are the observable student-observed learning activities that we operationalize along the ICAP framework related to students’ effort in learning?

Based on the ICAP hypothesis, associations between student-observed learning activities and students' effort in learning were expected to be more positive for interactive than constructive than active than passive student-observed learning activities (I>C>A>P).

(RQ2) How does student-perceived cognitive activation contribute to the relation of student-observed learning activities and students’ effort in learning?

We expected that the association of student-observed learning activities and students' effort in learning was mediated by student-perceived cognitive activation. Therefore, we explored whether student-observed learning activities operationalized along the ICAP framework relate to an instrument traditionally used in teaching quality research to assess student-perceived cognitive activation. In addition, considering previous findings on the effects of teaching perceived to be cognitively activating on student learning (e.g., motivation, achievement), we expected a positive association between student-perceived cognitive activation and students’ effort in learning.

(RQ3) How does digital distance teaching and learning differ as a function of teachers and students being either more or less familiar with technology-enhanced face-to-face teaching, and more specifically, how does familiarity explain differences in student-observed learning activities, student-perceived cognitive activation, and students’ effort in learning?

We based our analyses on data from a school trial in which classes from secondary schools were randomly equipped with tablet computers on a one-to-one basis (i.e., tablet condition; referred to as high familiarity group) more than one year before school closures or received no tablets (non-tablet condition; referred to as low familiarity group). Thus, those teachers and students that had been equipped with tablet computers could work with these devices, and we assume that they gained familiarity with technology-enhanced teaching and learning in the classroom for more than one year. Therefore, by familiarity with technology, we mean both the use of tablet computers (hardware component) and the tools available for tablet computers (software component) for academic purposes. This study context allowed us to systematically investigate the role of familiarity with digital face-to-face classroom teaching in the context of digital distance teaching and learning. First, we explored characteristics of teaching separately for low and high familiarity, for instance, the time students had spent on learning with technology, which tools they had used, how satisfied they were with the instruction, and specifically which student-observed learning activities were implemented. Second, we tested for differences as a function of familiarity for all relevant constructs used in the previous research questions, as well as the mediation itself, because previous findings regarding digital distance teaching suggest a positive association of familiarity with technology and digital infrastructure in teaching and learning during the COVID-19 pandemic.

3. Method

3.1. Context of the study

Data was collected as part of a larger research project which investigated the effects of tablet use on students’ learning (e.g., Fütterer et al., 2022; Hammer, Göllner, Scheiter, Fauth, & Stürmer, 2021). This project was connected to the ministerially funded tabletBW school initiative in the federal state of Baden-Württemberg, Germany. All academic track secondary schools of the state of Baden-Württemberg were invited to apply to participate in the school initiative, which required a media concept for integrating digital media in school. From all applicants, 28 schools were randomly chosen to participate as either tablet or control schools with stratified sampling to ensure that schools from all four districts were represented in the study. Thus, the sample was drawn from the same pool (applicants to the school initiative) and included schools from both rural and urban regions. Each school chose two 7th-grade classes to participate in the first cohort (starting in February 2018) and again two 7th-grade classes to participate in the second cohort (starting in February 2019). Classes from schools in the tablet group were then equipped with tablet computers on a one-to-one basis (high familiarity group), allowing teachers and students to gain familiarity with technology in face-to-face classroom situations. Neither students nor teachers received any special training in connection with the research project. Although they were not obliged, all subject teachers in the selected classes were asked to integrate the tablets into their daily teaching routine. Given that German schools have been rather poorly equipped and were unprepared for digital distance learning, one-to-one equipment in this context represented an exceptional opportunity to gain familiarity with technology. Classes from schools in the non-tablet control group had not been chosen to be equipped within the initiative and participated as control classes in the research project (low familiarity group). The project was designed as a longitudinal study intended to investigate conditions for the use of tablets in the classroom over a period of three years (7th to 9th grade). However, the originally planned longitudinal data collections at schools were interrupted when schools were closed due to the COVID-19 pandemic. Data collection for the current study took place online in June and July of 2021. Only students from the second cohort were consulted (as the research project had already been completed in cohort 1), who—in the tablet classes—had received their tablets in February 2019. There was no systematic training on using technology at the beginning of distance teaching. However, students and their teachers in the high familiarity group had the chance to familiarize themselves with the technology for about 12 months prior to switching to distance teaching for more than a whole school year (with only some weeks of face-to-face teaching in between when the students were taught in smaller groups, alternating in school and from a distance).

3.2. Sample and study design

All classes from the second cohort were asked to participate in the COVID-19 online questionnaire. It was conducted after they had used the tablets in the classroom for 12 months and after switching to distance teaching for an additional 18 months due to the COVID-19 pandemic.a Participation was voluntary. Students and their legal guardians gave informed consent to participate. In total N = 729 students of 9th grade participated in the data collection (517 high familiarity group, 212 low familiarity group; M age = 15.02, SD = 0.80; 54% female). Teachers' and students’ familiarity using one-to-one tablet computers in face-to-face classroom situations was operationalized as a dichotomous variable (0 = low familiarity group, 1 = high familiarity group). Data for two school subjects were included in the analyses, that is, mathematics and German. We focused on these subjects because, first, the German federal state of Baden-Württemberg had required schools to administer these two subjects during school closures. Second, these subjects are different in terms of their domain (linguistic vs. mathematical) and thus provide a good insight into different disciplines. Third, differences regarding distance teaching and learning between these subjects have already been shown in previous studies (e.g., regarding technology used; Jaekel et al., 2021).

3.3. Procedure

Teachers were asked to manage the data collection. Students were able to access the online questionnaire via an URL or QR code. Students were instructed that all questions related to the whole period of digital distance teaching. The entire self-report questionnaire used in the overall research project included comprehensive questions about the students’ general orientation regarding technology (e.g., perception of technology), their experience with digital distance teaching (e.g., frequency of distance teaching, tools used, and student-observed learning activities), how they perceived their learning environment (e.g., student-perceived cognitive activation), how they engaged in learning during digital distance teaching (e.g., effort in learning), and their background (e.g., demographic data). Students needed about 35 min to fill in the questionnaire.

3.4. Measures

In a cross-sectional-design, student-observed learning activities were assessed to predict students' effort in learning. Student-perceived cognitive activation was investigated as a mediator of this relation. All constructs were measured separately for mathematics and German, using parallel item formulations. As subjective perceptions of learning environments are important for learning (Hammer et al., 2021) and the ICAP hypothesis focuses on learning from the learners' perspective (Chi, 2009), we used students' self-reports to gain insight into students’ learning which have been widely used for this purpose in previous research (Fauth et al., 2014; Göllner, Wagner, Eccles, & Trautwein, 2018; for instance, for cognitive engagement see Fredricks & McColskey, 2012).

3.4.1. Student-observed learning activities

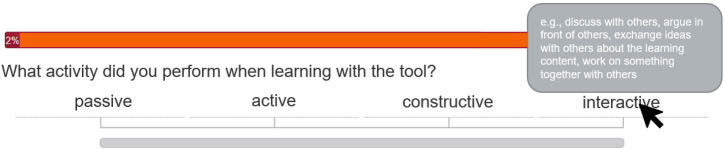

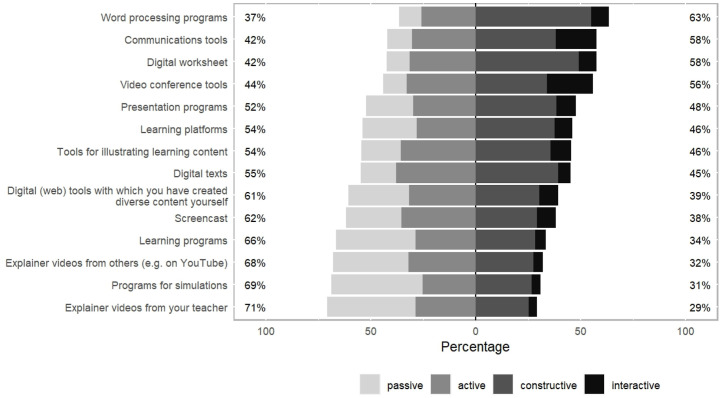

Student-observed learning activities were assessed separately for 14 technology types (e.g., video teleconferencing software programs, programs for simulations; see Fig. 2, Fig. 3). Thus, by the term technology, we refer to both hardware and software components (see Hamilton, Rosenberg, & Akcaoglu, 2016; Koehler, Mishra, & Cain, 2013). We captured 14 different technologies that have also been captured in other studies (e.g., Jaekel et al., 2021; Fraillon et al., 2020), which we believe are representative and also applicable to digital distance learning. Students first rated how often they had worked with the respective technology in digital distance teaching on a 4-point scale from 1 very rarely to 4 very often (fifth category: I don't know the tool). Afterwards, similar to, for instance, Antonietti et al. (2023), they were asked to indicate which observable learning activities they engaged in with that technology. A slider was used with four anchor points that corresponded to the ICAP framework (Chi & Wylie, 2014): passive (e.g., listening to someone, looking at, or reading something), active (e.g., taking notes, underlining/copying/organizing content), constructive (e.g., finding explanations or arguments, thinking about solutions, developing something), and interactive (e.g., discussing with or arguing in front of others, exchanging ideas, developing something together). The scale was introduced and explained to the students with these examples. In addition, the examples were shown in each item when the students moved their mouse over the anchor points (see Fig. 1 for an example).

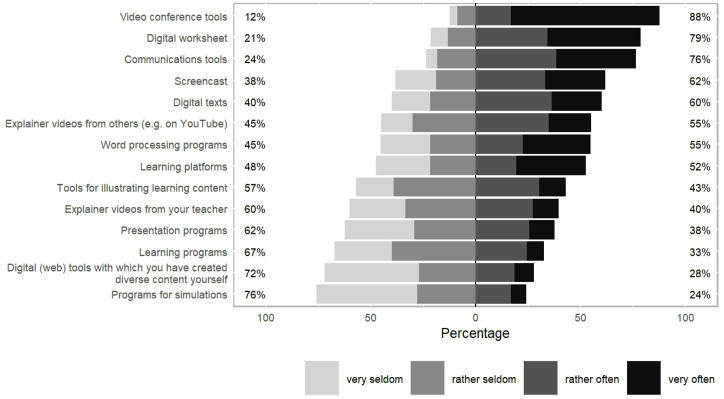

Fig. 2.

Frequency of the use of technology in mathematics.

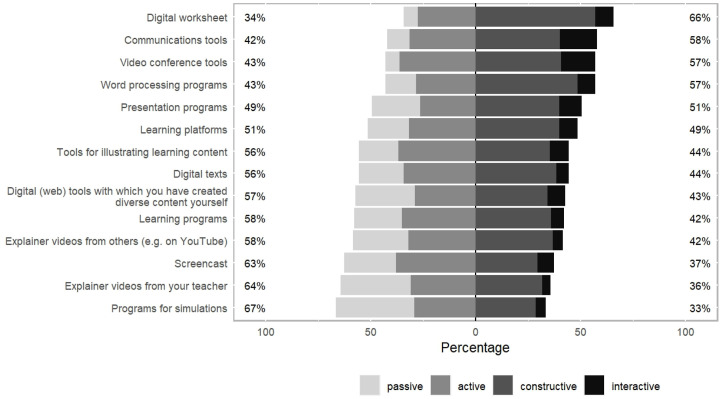

Fig. 3.

Student-observed learning activities with technology in mathematics.

Fig. 1.

Example item used to build an ICAP-Indicator.

As we coded the fifth category “I don't know the tool” as a missing value, only valid information was included in the operationalization of student-observed learning activities. To assess the student-observed learning activities across all 14 technology types, we used the arithmetic means separately for mathematics and German. Doing this, we weighted the responses on student-observed learning activities of the 14 different technology types using the responses on the frequency of use of these 14 different technology types (i.e., we multiplied the value of student-observed learning activities by the frequency of use divided by four); that is, a technology that was used more frequently was considered to a greater extent across all 14 technology types (arithmetic means) than a technology that was used less frequently. The arithmetic means are reported as global ICAP-indicators.

3.4.2. Student-perceived cognitive activation

Student-perceived cognitive activation was measured with the frequently used scale challenging practice (adapted from Baumert et al., 2008), consisting of five items describing sophisticated teaching practices to be answered on a 4-point scale from 1 completely disagree to 4 completely agree (e.g., ‘Among the questions and tasks, there were always some that really required you to think’, mathematics: Cronbach's α = 0.82; German: α = 0.85).

3.4.3. Students’ effort in learning

Following Fütterer et al. (2022), students' effort in learning was operationalized via academic effort (i.e., willingness to perform in instructional settings) and cognitive engagement (e.g., desire to invest effort in understanding [complicated] ideas). Academic effort (adapted from Gaspard et al., 2016) in learning was measured with four items (e.g., ‘In the lessons that took place digitally in distance learning, I really made an effort’, mathematics: α = 0.94; German: α = 0.95) and cognitive engagement (adapted from Rimm-Kaufman, Baroody, Larsen, Curby, & Abry, 2015) was measured with three items (e.g., ‘In the lessons that took place digitally in distance learning, it was important for me to understand things really well’, mathematics: α = 0.89; German: α = 0.90) both on a 4-point scale from 1 completely disagree to 4 completely agree.

3.4.4. Context of digital distance teaching for students high and low in familiarity

To obtain an idea of how much time students had spent on average on learning, they were asked to estimate the hours they had spent on average in learning in an average school week during the COVID-19 related school closures. Furthermore, students indicated the percentage of digital teaching and the percentage of synchronous phases that they had had during that time. To obtain an idea of the general availability of technology to use for digital distance teaching, students reported whether they were able to use technological devices at home (e.g., computer, laptop, tablet, smartphone). Students of the high familiarity group were additionally asked whether they could take home the tablets that they had received in the context of the school trial during school closures. Furthermore, we assessed students' satisfaction with digital distance teaching in mathematics and German with scales consisting of three items each (e.g., ‘I was satisfied with the mathematics/German teaching’) on a 4-point scale from 1 completely disagree to 4 completely agree. The scales showed high internal consistency (mathematics: α = 0.87; German: α = 0.90).

3.4.5. Covariates

We assessed students’ age in years and gender (0 = male, 1 = female). Corresponding to the German grading system, school grades in mathematics and German were measured on a scale from 1 very good to 6 insufficient.

3.5. Data handling

3.5.1. Statistical analyses

Descriptive statistics were calculated using R 4.0.2 (R Core Team, 2020), the statistical software RStudio (RStudio Team, 2020), and the psych package (v2.0.7; Revelle, 2020). All further analyses to answer the research questions were conducted using Mplus 8 (Muthén & Muthén, 1998–2017), separately for the two subjects. To account for alpha error cumulation due to multiple testing, Benjamini-Hochberg (1995) corrections were applied to p-values.

Regarding RQ1, we used a structural equation model (SEM) approach (Geiser, 2013) to calculate the correlation between student-observed learning activities (i.e., global ICAP-indicators; manifest) and students' effort in learning (i.e., academic effort and cognitive engagement; latent). To analyze RQ2 regarding the relation of student-observed learning activities, student-perceived cognitive activation, and students' effort in learning during digital distance teaching, we specified latent path analyses (i.e., mediation models), utilizing the model indirect command as implemented in Mplus 8. In these mediation models, student-perceived cognitive activation functioned as a mediator. We used covariates by regressing students' effort in learning (i.e., students' cognitive engagement and students' willingness to invest effort) on students' age, gender, and grades in the corresponding subject. As the mediation effects are interactions between two regression coefficients, and thus the assumption of a normal distribution of the effect in the population is easily violated, we used the bias-corrected bootstrap as a method for significance testing using asymmetric confidence intervals as robustness analyses (MacKinnon, Lockwood, & Williams, 2004). To investigate associations of familiarity with digital teaching gained in face-to-face classroom situations, with student-observed learning activities, student-perceived cognitive activation, and students' effort in learning (RQ3), we extended the mediation models by integrating teachers' and students' familiarity as a predictor of student-observed learning activities, student-perceived cognitive activation, and students’ effort in learning. Additionally, we tested mean differences of these constructs using a multiple group model approach utilizing the knownclass command and tested group differences in means using the delta-method as implemented in the model constraint option in Mplus 8.

3.5.1. Missing values

In all analyses, missing values existed due to item nonresponse (e.g., students had not used some of the tools). For instance, in the correlation model approximately 6.31% (mathematics) and 9.47% (German) of the values were missing. To address the missing data, we employed full information maximum likelihood (FIML) estimation in all analyses, as research shows that such model-based estimation procedures typically outperform traditional missing data methods such as listwise or pairwise deletion (Graham, 2012, van Buuren, 2018). Except for the mediation model that included students' age as a control variable, the age of the students was used as an auxiliary variable utilizing the Mplus 8 command auxiliary.

3.5.2. Nested data

As the multi-level structure (i.e., students nested in classes) was merely a nuisance factor, we estimated cluster-robust standard errors, utilizing the Mplus 8 command Type = complex to account for the nesting in our models (McNeish, Stapleton, & Silverman, 2017).

4. Results

Descriptive values (means and standard deviations) of student-observed learning activities, student-perceived cognitive activation, and students’ effort in learning as a function of low and high familiarity with technology-enhanced teaching are reported in Table 1 .

Table 1.

Means and standard deviations of investigated constructs as a function of familiarity with technology.

| Mathematics |

German |

|||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| HFG |

LFG |

total |

HFG |

LFG |

total |

|||||||||||||

| N | M | SD | N | M | SD | N | M | SD | N | M | SD | N | M | SD | N | M | SD | |

| Learning activities | 481 | 1.62 | 0.54 | 204 | 1.67 | 0.53 | 685 | 1.63 | 0.53 | 461 | 1.53 | 0.59 | 200 | 1.57 | 0.62 | 661 | 1.54 | 0.60 |

| Perceived cognitive activation | 511 | 2.99 | 0.62 | 207 | 3.14 | 0.57 | 718 | 3.04 | 0.61 | 512 | 2.88 | 0.70 | 207 | 2.80 | 0.59 | 719 | 2.86 | 0.67 |

| Academic effort | 495 | 2.71 | 0.90 | 205 | 2.79 | 0.86 | 700 | 2.74 | 0.89 | 496 | 2.79 | 0.86 | 205 | 2.72 | 0.85 | 701 | 2.77 | 0.86 |

| Cognitive engagement | 493 | 2.74 | 0.90 | 205 | 2.80 | 0.84 | 698 | 2.76 | 0.88 | 494 | 2.70 | 0.88 | 206 | 2.66 | 0.81 | 700 | 2.69 | 0.86 |

Note. Statistics refer to scales and not to latent constructs. HFG = high familiarity group, LFG = low familiarity group.

4.1. Description of digital distance teaching and how technology was used

On average, students spent M =25.75 (SD = 12.43) hours per week on learning during the time of school closures. They indicated that most of the distance teaching was organized digitally (M =83.79%, SD = 15.71) and synchronous phases were predominant (M = 74.16%, SD = 21.76). During digital distance teaching, almost all students (98%) were able to use technological devices (e.g., computer, laptop, tablet, smartphone). Almost all students (99%) from the high familiarity group were able to take home and use their one-to-one tablet computer they had received in the school trial. On average, students were neither particularly dissatisfied nor particularly satisfied with their digital distance teaching (mathematics: M = 2.49, SD = 0.83; German: M = 2.49, SD = 0.81).

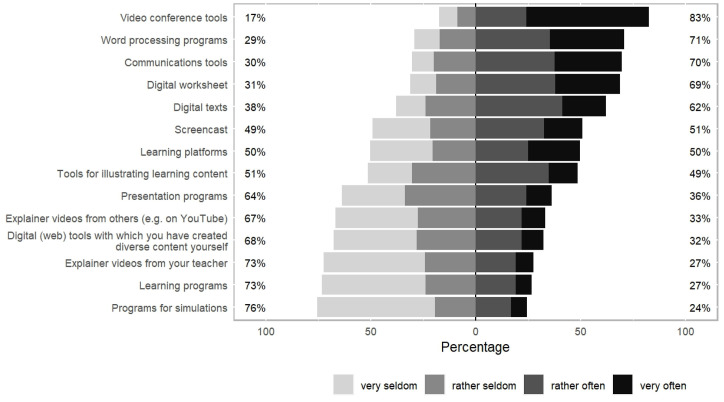

For both subjects, we found that video conference tools such as WebEx or Zoom were most frequently used. This finding is not surprising and also in line with previous research (Drijvers et al., 2021), as through such technology in distance teaching, contact with learners is maintained. In addition to video conference tools, digital distance teaching in mathematics (see Fig. 2) was conducted predominantly with digital worksheets and communications tools (e.g., forums, chats). As can be seen in Fig. 3, technologies in mathematics were rarely used to implement interactive learning activities (e.g., discussing with others, developing something together). For instance, simulations were instead used for initiating passive (e.g., watching) or active (e.g., taking notes) learning activities, even though they presumably are particularly suitable instructing constructive learning activities (e.g., finding explanations for task solutions). Digital distance teaching in German (see Fig. 4 ) was conducted predominantly using word processing programs (e.g., Word) and communications tools in addition to video conferencing tools, which is in line with the nature of the subject. Visible in Fig. 5 , word processing programs were also most likely to be used to implement active and constructive learning activities. However, it is noticeable that technology was hardly used for interactive learning activities.

Fig. 4.

Frequency of the use of technology in German.

Fig. 5.

Student-observed learning activities with technology in German.

4.2. Relation of student-observed learning activities and students’ effort in learning (RQ1)

To evaluate associations between student-observed learning activities and students' effort in learning, we examined correlations between the two constructs separately for mathematics and German. The fit of both correlation models was good (Schermelleh-Engel, Moosbrugger, & Müller, 2003): χ2(18, N = 703) = 62.555, p < .001, standardized root mean square residual (SRMR) = 0.019, comparative fit index (CFI) = 0.986, and root mean square error of approximation (RMSEA) = 0.059 (90% CI [0.044, 0.076]) for mathematics; χ2(18, N = 704) = 52.141, p < .001, SRMR = 0.019, CFI = 0.989, and RMSEA = 0.052 (90% CI [0.036, 0.069]) for German. A comparable pattern of findings emerged for mathematics and German. First, academic effort and cognitive engagement—both constructs used to assess students’ effort in learning—correlated statistically significant positive and strongly, mathematics: r = 0.893, SE = 0.021, p < .001, German: r = 0.869, SE = 0.018, p < .001. These correlations show that the two measures assessed different facets of students' effort in learning during digital distance teaching. Second, as expected, student-observed learning activities operationalized along the ICAP framework (i.e., global ICAP-indicator) were statistically significantly and positively related to the measures of students' effort in learning, mathematics academic effort: r = .232, SE = 0.034, p < .001, mathematics cognitive engagement: r = 0.174, SE = 0.042, p < .001, German academic effort: r = 0.198, SE = 0.043, p < .001, German cognitive engagement: r = 0.165, SE = 0.040, p < .001; that is, the more technology was used to initiate higher levels of student-observed learning activities (e.g., rather interactive than passive) the more effort students invested in learning during digital distance teaching—thus, supporting the ICAP hypothesis.

In sum, the key finding from RQ1 is that, consistently for both subjects, student-observed learning activities related to students’ effort in learning.

4.3. Relation of student-observed learning activities, student-perceived cognitive activation, and students’ effort in learning (RQ2)

First, we investigated whether constructs of student-perceived cognitive activation, academic effort, and cognitive engagement are distinct. The model fit indices of a three-factor model (confirmatory factor analysis) and the corresponding correlations illustrate that cognitive activation is distinct from academic effort and cognitive engagement (see Table A1). In addition, as expected, academic effort and cognitive engagement were closely related but are separable constructs (this was also shown by additional confirmatory factor analyses).

Second, to evaluate the hypothesis that associations of higher levels of student-observed learning activities and students' effort in learning were mediated by student-perceived cognitive activation in digital distance teaching, we looked at the results revealed from the mediation models (mathematics: Table 2 ; German: Table 3 ). Again, a comparable pattern of findings emerged for mathematics and German. First, as can be seen from the first row of the two tables, the degree of student-observed learning activities with technology in digital distance teaching (global ICAP-indicators) predicted statistically significant students' effort in learning (i.e., ICAP hypothesis). Second, the degree of student-observed learning activities with technology in digital distance teaching also predicted statistically significant student-perceived cognitive activation in their lessons—namely, the higher levels of student-observed learning activities the more students perceived their teaching as cognitively activating. Third, as can be seen from the second row of the two tables, student-perceived cognitive activation was statistically significant associated with students' effort in learning (i.e., academic effort, students' engagement)—thus, confirming a common finding in instructional research (cf. Praetorius et al., 2018); that is, if digital distance teaching was perceived as cognitively activating, then students more likely reported that they invested more effort in learning. Fourth, as can be seen from row six and seven in the two tables, a partial mediation was evident (Baron & Kenny, 1986; Rucker, Preacher, Tormala, & Petty, 2011), which is in line with expectations. This means that the prediction of students' effort by student-observed learning activities (direct path) remained statistically significant even when considering the statistically significant indirect paths. Furthermore, part of the prediction of students’ effort by student-observed learning activities (i.e., regarding the ICAP framework) can be explained by student-perceived cognitive activation, namely, that students perceived higher levels of student-observed learning activities as more cognitively activating, which in turn led students to invest more effort (indirect path). The results were robust when using the bias-corrected bootstrap. Finally, it should be mentioned that among the covariates, students' gender was statistically significant and positively related to students' effort in learning, and school grades were statistically significant and negatively related to students' effort in learning (note that German school grades are reverse coded; that is, the higher the grade, the lower the assessed performance). This means that girls compared to boys and students with better grades compared to students with lower grades invested more effort in learning.

Table 2.

Mediation model mathematics.

| Dependent Variable |

|||||||||

|---|---|---|---|---|---|---|---|---|---|

| Academic effort |

Cognitive engagement |

PCA |

|||||||

| Predictor | B | SE | p | B | SE | p | B | SE | p |

| Learning activities (LA) | .183 | .034 | <.001 | .118 | .037 | .002 | .164 | .057 | .004 |

| Perceived cognitive activation (PCA) | .372 | .060 | <.001 | .401 | .056 | <.001 | |||

| Gender | .169 | .033 | <.001 | .149 | .042 | <.001 | .073 | .045 | .105 |

| Age | −.015 | .039 | .695 | .012 | .040 | .771 | −.062 | .055 | .255 |

| Grade | −.227 | .039 | <.001 | −.165 | .047 | <.001 | −.096 | .047 | .041 |

| Mediation model | |||||||||

| Direct path: LA | .183 | .034 | <.001 | .118 | .037 | .002 | |||

| Indirect path: LA*PCA | .061 | .026 | .018 | .066 | .028 | .017 | |||

| Total | .244 | .033 | <.001 | .184 | .042 | <.001 | |||

| R2 | .296, p < .001 | .257, p < .001 | .045, p = .044 | ||||||

| Inferential statistical safeguarding of the model quality | |||||||||

| χ2 | 346.004, p < .001 | ||||||||

| df | 87 | ||||||||

| SRMR | .036 | ||||||||

| CFI | .954 | ||||||||

| RMSEA | .064 (90%-CI [.057, .071]) | ||||||||

Note. N = 721. χ2 = Chi-Square Test of Model Fit, SRMR = standardized root mean square residual, CFI = comparative fit index, RMSEA = root mean square error of approximation. Grades are reverse coded in Germany (i.e., the higher the grade, the lower the assessed performance).

Table 3.

Mediation model German.

| Predictor | Dependent Variable |

||||||||

|---|---|---|---|---|---|---|---|---|---|

| Academic effort |

Cognitive engagement |

PCA |

|||||||

| B | SE | p | B | SE | p | B | SE | p | |

| Learning activities (LA) | .113 | .035 | .001 | .081 | .040 | .044 | .197 | .054 | <.001 |

| Perceived cognitive activation (PCA) | .398 | .037 | <.001 | .391 | .042 | <.001 | |||

| Gender | .139 | .041 | .001 | .132 | .039 | .001 | .051 | .060 | .393 |

| Age | .013 | .048 | .791 | .062 | .045 | .174 | −.068 | .063 | .281 |

| Grade | −.201 | .045 | <.001 | −.180 | .052 | <.001 | −.047 | .055 | .396 |

| Mediation model | |||||||||

| Direct path: LA | .113 | .035 | .001 | .081 | .040 | .044 | |||

| Indirect path: LA*PCA | . 078 | .020 | <.001 | .077 | .023 | .001 | |||

| Total | .191 | .040 | <.001 | .158 | .040 | <.001 | |||

| R2 | .278, p < .001 | .246, p < .001 | .050, p = .039 | ||||||

| Inferential statistical safeguarding of the model quality | |||||||||

| χ2 | 235.368, p < .001 | ||||||||

| df | 87 | ||||||||

| SRMR | .030 | ||||||||

| CFI | .972 | ||||||||

| RMSEA | .049 (90%-CI [.041, .056]) | ||||||||

Note. N = 722. χ2 = Chi-Square Test of Model Fit, SRMR = standardized root mean square residual, CFI = comparative fit index, RMSEA = root mean square error of approximation. Grades are reverse coded in Germany (i.e., the higher the grade, the lower the assessed performance).

Overall, the statistical models were able to explain approximately 30% of the variance in academic effort in mathematics, approximately 28% of the variance in academic effort in German, approximately 26% of the variance in cognitive engagement in mathematics, and approximately 25% of the variance in cognitive engagement in German.

In sum, the key finding from RQ2 is that consistently for both subjects, the relation between student-observed learning activities and students’ effort in learning was mediated by student-perceived cognitive activation.

4.4. The role of teachers' and students’ familiarity with technology-enhanced teaching (RQ3)

First, as a manipulation check regarding familiarity with technology, we tested whether students in the tablet group used tablets in the classroom more than students in the non-tablet group before the COVID-19-related school closures. Four months after students had been equipped with tablet computers, we asked students in the non-tablet group and in the tablet group for each subject how often they had used tablets in class in those four months on a scale from 0 (= 0 times) to 20 (= 20 times). t-test showed statistically significant differences in the frequency of tablet use in class (mathematics: M non-tablet_group = 0.30, M tablet_group = 12.69, t(646) = −40.96, p < .001, d = 2.29; German: M non-tablet_group = 0.20, M tablet_group = 6.72, t(644) = −22.60, p < .001, d = 1.26).

Second, we tested for differences in how digital distance teaching was organized as a function of teachers and students being less or more familiar with technology-enhanced teaching in the classroom. Overall, students in the high familiarity group reported a statistically significantly higher percentage of digital distance teaching (M =85.73%, SD = 14.96) than students in the low familiarity group (M =79.02%, SD = 16.52), t(354) = −5.08, p < .001, d = 0.44. Furthermore, students in the high familiarity group reported a statistically significantly higher percentage of synchronous phases (M =76.38%, SD = 20.30) than the low familiarity group (M =68.87%, SD = 24.15), t(332) = −3.92, p < .001, d = 0.35. While students in the high familiarity group were less satisfied with their digital distance teaching in mathematics (M = 2.42, SD = 0.82) than students in the low familiarity group (M = 2.66, SD = 0.83), t(382) = 3.47, p < .001, d = 0.29, students in both groups were similarly satisfied with their digital distance teaching in German (high familiarity group: M = 2.52, SD = 0.83, low familiarity group: M = 2.42, SD = 0.76), t(421) = −1.63, p = .10, d = 0.12.

Third, to further explore how familiarity with technology gained in face-to-face classroom situations contributes to student-observed learning activities, student-perceived cognitive activation, and students' effort in learning in digital distance teaching, we analyzed regression weights of teachers' and students' familiarity implemented in the mediation models. We found that teachers' and students' familiarity was not statistically significant for any construct used (see Table A2 and A3 in the appendix). Furthermore, we looked at group mean differences between teachers and students who were more familiar with using one-to-one technology for teaching and learning and teachers and students who were not (Table 4 ). The mean differences (ΔM) for student-observed learning activities (manifest) in digital distance teaching showed no statistically significant difference between the high and the low familiarity group as did the means for student-perceived cognitive activation and students’ effort in learning (i.e., academic effort, and cognitive engagement; latent). Notably, in mathematics the students in the high familiarity group showed descriptively higher mean scores on all four variables, and in German (except for student-observed learning activities), the students in the low familiarity group showed descriptively higher mean scores.

Table 4.

Mean differences in investigated constructs as a function of the familiarity with technology.

| Mathematics |

German |

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| M | SE | N | Δp | |d| | M | SE | N | Δp | |d| | ||

| Learning activitiesa | HFG | 1.62 | 0.04 | 513 | .470 | 0.10 | 1.53 | 0.45 | 513 | .671 | 0.06 |

| LFG | 1.67 | 0.06 | 208 | 1.57 | 0.06 | 209 | |||||

| Perceived cognitive activationb | HFG | 2.75 | 0.08 | 513 | .181 | 0.28 | 2.71 | 0.09 | 513 | .515 | 0.12 |

| LFG | 2.90 | 0.12 | 208 | 2.64 | 0.09 | 209 | |||||

| Academic effortb | HFG | 2.61 | 0.07 | 513 | .517 | 0.17 | 2.80 | 0.06 | 513 | .894 | 0.14 |

| LFG | 2.70 | 0.14 | 208 | 2.72 | 0.13 | 209 | |||||

| Cognitive engagementb | HFG | 2.64 | 0.08 | 513 | .576 | 0.09 | 2.64 | 0.07 | 513 | .448 | 0.02 |

| LFG | 2.72 | 0.14 | 208 | 2.62 | 0.13 | 209 | |||||

Note. HFG = high familiarity group, LFG = low familiarity group. The statistical significances do not change after Benjamini and Hochberg (1995) correction.

manifest.

latent.

In sum, the key findings from RQ3 are that familiarity with technology seems to be important for how distance teaching was organized (e.g., percentage of digital distance teaching) but, consistently for both subjects, did not contribute to student-observed learning activities, student-perceived cognitive activation, and students’ effort in learning in digital distance teaching.

5. Discussion

During the COVID-19 pandemic, distance teaching and learning was foremost conducted digitally (Vodafone Stiftung Deutschland gGmbH, 2020). The way schools implemented digital distance teaching varied widely from school to school, both in terms of the tools used and teaching methods (Jaekel et al., 2021; Steinmayr et al., 2021; Vodafone Stiftung Deutschland gGmbH, 2020). Some teaching methods (e.g., teacher-generated videos, feedback) were found to be related to both perceived teaching quality (Jaekel et al., 2021) and students' motivation (Steinmayr et al., 2021). Furthermore, pre-existing familiarity with technology has been shown to positively impact learning performance (Betthäuser et al., 2023; Hammerstein et al., 2021). In this study, we addressed the key questions of how technology was used in digital distance teaching and learning during COVID-19-related school closures and whether high-quality implementation was related to students' effort in learning. Moreover, we investigated whether familiarity with technology gained in face-to-face teaching before school closures helped teachers and students to conduct high-quality digital distance teaching and learning. To this aim, we studied self-reports of 729 ninth graders in two subjects (mathematics and German) during digital distance teaching that had taken part in a school initiative concerned with equipping schools with technology. Consistently for both subjects, we found that student-observed learning activities related to students' effort in learning and that this relation was mediated by student-perceived cognitive activation. Furthermore, by explicitly connecting tools with student-observed learning activities, we could see that students used tools mostly for passive or active learning activities, as known from studies for face-to-face teaching (Sailer, Murböck, & Fischer, 2021; Wekerle & Kollar, 2022). However, students used tools only rarely for constructive or interactive learning activities, thus not fully exploiting the potentials that are offered in the sense of the ICAP framework. Other than expected, teachers' and students’ familiarity with technology gained in face-to-face classroom situations seemed to play a less important role.

The relationship of perceived cognitive activation and students' effort is in line with the few findings of previous research about (digital) distance teaching (Jaekel, Fütterer, & Göllner, 2023; Praetorius et al., 2018). Thus, our findings confirm that concepts from teaching quality research (i.e., cognitive activation) can be applied to the context of digital distance teaching and that cognitive activation plays an important role in this context as well. Our findings support the operationalization of student-observed learning activities along the ICAP framework, as we found positive relations to students’ effort in learning.

Students' and teachers' familiarity using technology (i.e., tablet computers) in face-to-face teaching prior to COVID-19 school closures was not found to relate to instructed learning activities, perceived teaching quality, or students' learning effort. One explanation might be that merely equipping teachers and students with digital devices might not sufficiently help to prepare them for implementing high-quality digital distance teaching and learning. Familiarity with technology was generally assumed to be important for teachers. However, studies from face-to-face teaching suggest that, for instance, professional knowledge about teaching and pedagogical experience (Lachner, Backfisch, & Stürmer, 2019) or teachers’ technology-related utility beliefs (Backfisch, Lachner, Stürmer, & Scheiter, 2021) also play a crucial role in technology-enhanced teaching. Thus, our results might show that it is not enough to be familiar with technological tools, but it is necessary to understand the added value of technology to improve pedagogy in the virtual classroom—like face-to-face teaching. Several frameworks, like the Replacement, Amplification, and Transformation (RAT; Hughes, Thomas, & Scharber, 2006) or the Substitution Augmentation Modification Redefinition (SAMR; Puentedura, 2006; 2013) framework, refer to this important connection. However, as our results show regarding initiated learning activities when using different digital applications, the added value that the specific technology would provide is rarely addressed. Another explanation for this finding is that technology use in digital distance teaching crucially differs from how technology is used in face-to-face classroom teaching. Thus, teachers and students cannot draw on routines that they might have had from face-to-face classroom settings. However, it should be considered that at the time of data collection all classes—also those from the low familiarity group—had already gained some experience with digital distance teaching. Although we asked students about the entire period with digital distance teaching, it may be that the ratings were influenced by more recent experiences. Therefore, effects would have been expected to be stronger at the beginning of the first school closures.

Still, our results on familiarity being less important somewhat contradict findings on students’ outcomes that suggested familiarity with technology as an important moderator in digital distance teaching (Betthäuser et al., 2023; Hammerstein et al., 2021; Spitzer & Musslick, 2021). However, the way familiarity was looked at differed considerably. For example, other research classified samples of studies to have different levels of familiarity depending on which country the study was conducted in and how advanced digitization was in that country (Betthäuser et al., 2023), or if the sample of a study had already worked with the learning program before the change to digital distance teaching, it was assumed that they were more familiar with the program (Hammerstein et al., 2021). To the best of our knowledge, there is no study that has researched familiarity with technology in a systematic way. Our sample from the school trial allowed us to systematically investigate the effect of familiarity with digital face-to-face teaching on teaching quality in digital distance teaching, thus contributing to the understanding of preconditions for digital distance teaching.

Finally, we found that girls, compared to boys, and students with better than students with lower grades, invested more effort in learning. The gender-specific findings are in line with findings from previous studies (e.g., Wang & Eccles, 2013; Yeung, 2011). The relationship between grades and students' effort in learning must be interpreted against the background that we use cross-sectional data (see limitations). That is, it is also plausible to assume that students who show more effort will also receive better grades.

5.1. Limitations

The findings of this study must be interpreted against the background of at least the following limitations. The analyses refer exclusively to students from academic track secondary schools (i.e., Gymnasiums, the highest track in Germany). This means that the analyses were conducted with a selected sample (e.g., distribution of SES among academic track secondary school students typically in Germany is rather homogeneous), and thus the generalizability of the results to students in other types of schools in Germany should be investigated in future studies. The selectivity of the sample is particularly important in the setting of this study because school success is strongly related to the socioeconomic status (SES) of students (Holzberger, Reinhold, Lüdtke, & Seidel, 2020; Broer, Bai, & Fonseca, 2019; for Germany see for instance: Maaz & Nagy, 2010; Scharf, Becker, Stallasch, Neumann, & Maaz, 2020). Particularly for distance learning during COVID-19-related school closures, recent findings show that students with higher SES enjoyed learning environments conducive to learning (e.g., technology equipment, space to work, more educationally engaged parents) and had fewer learning losses than students with lower SES (Betthäuser et al., 2023; Hammerstein et al., 2021). Thus, it is to be investigated whether the findings generalize to students from other school types.

Our research is limited to investigating cognitive activation as one key dimension of instructional quality. There are, however, other dimensions of instructional quality, such as classroom management or supportive climate (e.g., Praetorius et al., 2018), which should be considered in more detail in future research. Furthermore, our research relies solely on student ratings, which is a commonly used approach to assess teaching quality as well as determining students’ learning outcomes (Göllner et al., 2018; Herbert, Fischer, & Klieme, 2022). Nevertheless, student-observed learning activities are observable, which is why it seems reasonable to additionally observe data externally. Future research should investigate whether the instrument developed to capture ICAP learning activities can be validated by linking it to objective measures of student learning from classroom observations.

Furthermore, we used cross-sectional data. This means that all associations found in this study must be interpreted as correlational and not causal (e.g., the associations between cognitive activation and students' effort could also be reverse effects). Further, the statistically significant mediations should rather be understood to mean that these mediation patterns fit the data well. However, whether the assumed directional paths within the mediations are valid needs to be investigated in future studies using longitudinal data.

5.2. Implications for theory and practice and future research directions

To ensure that students benefit from digital distance teaching, it is important that technology is implemented in a high-quality manner (e.g., cognitively activating) in digital teaching (Fütterer et al., 2022; Lei & Zhao, 2007; Petko, Cantieni, & Prasse, 2017). As an appropriate approach to describe the quality of the use of technology, the ICAP hypothesis is discussed (Fütterer et al., 2022; Sailer, Stadler, et al., 2021). An implication for theory thus arises from the results of this study by showing that the ICAP hypothesis for digital distance teaching is viable. Particularly, the student-observed learning activities as operationalized in this study (i.e., linking technology with learning activities along the ICAP framework) were appropriate to predict the quality of digital distance teaching (i.e., assessed with a traditional measure used in teaching quality research). Thus, it could be shown that observable learning activities can be related to cognitive activation, a not observable construct, which is frequently used in teaching-quality research.

Teachers seem to need support in using technology in the classroom because it has been shown that the potentials associated with the use of technology (e.g., stimulating interactive learning activities) have hardly been exploited. Thus, one implication that can be derived for practice is that teachers can use the student-observed learning activities as a guide to organizing cognitively activating digital (distance) teaching. Solely equipping teachers and students with technology to better prepare them for an unforeseen switch to digital distance teaching—as was the case with the COVID-19 pandemic—does not constitute a benefit in high-quality teaching. Future research should build on this and explore how teachers could be prepared, for instance, in professional development (see Fütterer, Scherer, Scheiter, Stürmer, & Lachner, 2023), to promote high-quality (e.g., cognitively activating) use of technology in the classroom (even independent of COVID-19 school closures) in both digital distance and face-to-face teaching (for instance, by using the ICAP framework as a basis for training).

Credit author statement

Tim Fütterer: Conceptualization, Methodology, Formal analysis, Data Curation, Visualization, Writing - Original Draft, Project administration, Writing - Review & Editing, Emely Hoch: Conceptualization, Methodology, Data Curation, Visualization, Writing - Original Draft, Project administration, Writing - Review & Editing, Andreas Lachner: Conceptualization, Writing - Review & Editing, Katharina Scheiter: Conceptualization, Writing - Review & Editing, Kathleen Stürmer: Conceptualization, Writing - Original Draft, Writing - Review & Editing.

Declaration

This paper uses data from the research project tabletBW meets science. The research project is connected to the tabletBW school trial. The school trial was initiated by the Ministry of Education, Youth and Sports Baden Württemberg. The research was carried out by the Hector Research Institute of Education Sciences and Psychology (HIB) at the University of Tübingen in cooperation with the Leibniz-Institut für Wissensmedien (IWM).

We have no known conflicts of interest to disclose. The authors are responsible for the content of this publication.

Acknowledgements

All authors are members of the LEAD Graduate School & Research Network [GSC1028], which was funded within the framework of the Excellence Initiative of the German federal and state governments.

This research was supported by the Postdoctoral Academy of Education Sciences and Psychology of the Hector Research Institute of Education Sciences and Psychology, Tübingen, funded by the Baden-Württemberg Ministry of Science, Research, and the Arts.

This project is part of the “Qualitätsoffensive Lehrerbildung”, a joint initiative of the Federal Government and the Länder which aims to improve the quality of teacher training. The programme is funded by the Federal Ministry of Education and Research. The authors are responsible for the content of this publication.

Footnotes

The two classes each of seven schools of the low familiarity group were not included in this study due to internal school decisions (i.e., they mixed students between classes). Classes that dropped out did not differ significantly from classes that participated in the COVID-19 data collection in terms of cognitive ability, math skills, or reading skills at the time of the baseline measurement in the longitudinal study.

Supplementary data to this article can be found online at https://doi.org/10.1016/j.compedu.2023.104788.

Appendix A. Supplementary data

The following is the Supplementary data to this article:

Data availability

Data will be made available on request.

References

- Ainley J., Enger L., Searle D. In: International handbook of information technology in primary and secondary education. Voogt J., Knezek G., editors. Springer; 2008. Students in a digital age: Implications of ICT for teaching and learning; pp. 63–80.http://link.springer.com/10.1007/978-0-387-73315-9_4 [Google Scholar]

- Antonietti C., Schmitz M.-L., Consoli T., Cattaneo A., Gonon P., Petko D. Development and validation of the ICAP Technology Scale to measure how teachers integrate technology into learning activities. Computers & Education. 2023;192:104648. doi: 10.1016/j.compedu.2022.104648. [DOI] [Google Scholar]

- Backfisch I., Lachner A., Stürmer K., Scheiter K. Variability of teachers’ technology integration in the classroom: A matter of utility! Computers & Education. 2021;166:104159. doi: 10.1016/j.compedu.2021.104159. [DOI] [Google Scholar]

- Baron R.M., Kenny D.A. The moderator–mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology. 1986;51(6):1173–1182. doi: 10.1037/0022-3514.51.6.1173. [DOI] [PubMed] [Google Scholar]

- Baumert J., Blum W., Brunner M., Dubberke T., Jordan A., Klusmann U., et al. Professionswissen von Lehrkräften, kognitiv aktivierender Mathematikunterricht und die Entwicklung von mathematischer Kompetenz (COACTIV). Dokumentation der Erhebungsinstrumente. Max-Planck-Institut für Bildungsforschung. 2008 http://library.mpib-berlin.mpg.de/dl/Materialien/Materialien_083/pdf/Materialien_Bildungsforschung_MPIB_083.pdf [Google Scholar]

- Baumert J., Kunter M., Blum W., Brunner M., Voss T., Jordan A.…Tsai Y.-M. Teachers’ mathematical knowledge, cognitive activation in the classroom, and student progress. American Educational Research Journal. 2010;47(1):133–180. doi: 10.3102/0002831209345157. [DOI] [Google Scholar]

- Benjamini Y., Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. 1995;57(1):289–300. doi: 10.2307/2346101. [DOI] [Google Scholar]

- Betthäuser B.A., Bach-Mortensen A.M., Engzell P. A systematic review and meta-analysis of the evidence on learning during the COVID-19 pandemic. Nature Human Behaviour. 2023 doi: 10.1038/s41562-022-01506-4. [DOI] [PubMed] [Google Scholar]

- Blömeke S., Gustafsson J.-E., Shavelson R.J. Beyond dichotomies. Competence viewed as a continuum. Zeitschrift Für Psychologie. 2015;223(1):3–13. doi: 10.1027/2151-2604/a000194. [DOI] [Google Scholar]

- Blumenfeld P.C., Kempler T.M., Krajcik J.S. In: The Cambridge handbook of the learning sciences. Sawyer R.K., editor. Cambridge University Press; 2006. Motivation and cognitive engagement in learning environments; pp. 475–488. [DOI] [Google Scholar]

- Bosch Stiftung R. Das Deutsche Schulbarometer Spezial: Ergebnisse einer Befragung von Lehrerinnen und Lehrern an allgemeinbildenden Schulen im Auftrag der Robert Bosch Stiftung in Kooperation mit der ZEIT. Durchgeführt von forsa Politik- und Sozialforschung GmbH. Robert Bosch Stiftung. 2020 [Google Scholar]

- Bransford J.D., Brown A.L., Cocking R.R. How people learn: Brain, mind, experience, and school. National Academies Press; 2000. [DOI] [Google Scholar]

- Broer M., Bai Y., Fonseca F. (Vol. 5). Springer International Publishing; 2019. (Socioeconomic inequality and educational outcomes). [DOI] [Google Scholar]

- Brophy J. International Academy of Education (IAE); 2000. Teaching. Educational practices series 1. [Google Scholar]

- van Buuren S. Flexible imputation of missing data. 2nd ed. CRC Press; 2018. [Google Scholar]

- Chi M.T.H. Active-constructive-interactive: A conceptual framework for differentiating learning activities. Topics in Cognitive Science. 2009;1(1):73–105. doi: 10.1111/j.1756-8765.2008.01005.x. [DOI] [PubMed] [Google Scholar]

- Chi M.T.H., Wylie R. The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist. 2014;49(4):219–243. doi: 10.1080/00461520.2014.965823. [DOI] [Google Scholar]

- Donnelly R., Patrinos H.A. Learning loss during Covid-19: An early systematic review. PROSPECTS. 2021 doi: 10.1007/s11125-021-09582-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Drijvers P., Thurm D., Vandervieren E., Klinger M., Moons F., van der Ree H.…Doorman M. Distance mathematics teaching in Flanders, Germany, and the Netherlands during COVID-19 lockdown. Educational Studies in Mathematics. 2021;108(1–2):35–64. doi: 10.1007/s10649-021-10094-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eickelmann B., Bos W., Labusch A. In: ICILS 2018 #Deutschland Computer- und informationsbezogene Kompetenzen von Schülerinnen und Schülern im zweiten internationalen Vergleich und Kompetenzen im Bereich Computational Thinking. Eickelmann B., Bos W., Gerick J., Goldhammer F., Schaumburg H., Schwippert K., et al., editors. Waxmann; 2019. Die Studie ICILS 2018 im Überblick – zentrale Ergebnisse und mögliche Entwicklungsperspektiven; pp. 7–32. [Google Scholar]