Abstract

ChatGPT has recently been shown to pass the United States Medical Licensing Examination (USMLE). We tested ChatGPT (Feb 13, 2023 release) using a typical clinical toxicology case of acute organophosphate poisoning. ChatGPT fared well in answering all of our queries regarding it.

Keywords: ChatGPT, clinical toxicology, organophosphates, artificial intelligence, AI, medical education

Since its public launch on November 30, 2022, ChatGPT, which ironically has not been specifically trained in medicine, has been taking the medical world by storm [1-3]. Developed by the San Francisco–based OpenAI Inc/LP, ChatGPT is a very large language model that uses deep learning artificial intelligence (AI) techniques to generate human-like responses to natural language queries. It is based on the Generative Pre-trained Transformer 3 (GPT-3.x) architecture, which has been trained on very large amounts of data. ChatGPT is currently being integrated into the Microsoft Bing search engine, which will soon make it readily accessible to hundreds of millions of online users worldwide, including patients, medical and nursing students, and clinicians [4].

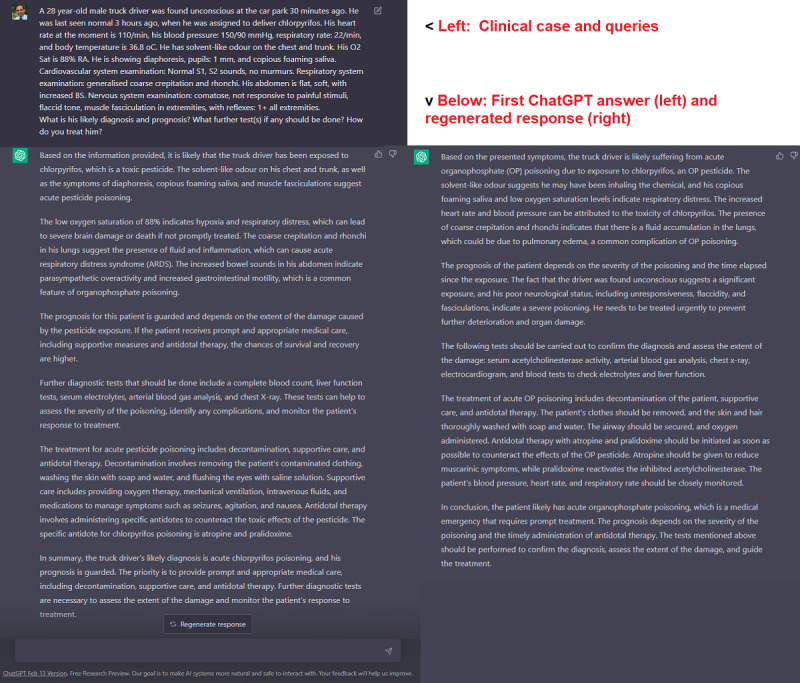

We tested ChatGPT (Feb 13, 2023, release; standalone, available via OpenAI [5]) using a typical clinical toxicology vignette (a case of acute organophosphate poisoning) retrieved from an online presentation [6]. The case, as we modified it and typed it into ChatGPT, and ChatGPT’s answer (which followed only a couple of seconds after the case was entered) are shown in Figure 1. The figure also shows a regenerated ChatGPT response, obtained by pressing the “Regenerate response” button at the bottom of the first ChatGPT answer.

Figure 1. Diagnosing a case of acute organophosphate poisoning in ChatGPT.

The clinical case example we used is a very straightforward one, unlikely to be missed by any practitioner in the field, and ChatGPT fared well in answering all of our queries regarding it. Both the first ChatGPT response and the regenerated one were satisfactory and offered good explanations of the underlying reasoning. However, the pressing problem in real life is not finding the correct diagnosis but taking an appropriate history and being able to elicit and ascertain the correct signs. In real life, junior clinicians may arrive at the wrong diagnosis because they missed or confused the signs. As ChatGPT is further developed and specifically adapted for medicine, it could one day be useful in less common clinical cases (ie, cases that experts sometimes miss). Rather than AI replacing humans (clinicians), we see “clinicians using AI” replacing “clinicians who do not use AI” in the coming years.

Abbreviations

- AI: artificial intelligence

- GPT-3.x: Generative Pre-trained Transformer 3

Footnotes

Conflicts of Interest: None declared.

References

- 1. Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, Chartash D. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2023 Feb 08;9:e45312. doi: 10.2196/45312. https://mededu.jmir.org/2023//e45312/

- 2. Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño Camille, Madriaga M, Aggabao R, Diaz-Candido G, Maningo J, Tseng V. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023 Feb 9;2(2):e0000198. doi: 10.1371/journal.pdig.0000198. https://europepmc.org/abstract/MED/36812645

- 3. Hailu R, Beam A, Mehrotra A. ChatGPT-assisted diagnosis: is the future suddenly here? STAT. 2023 Feb 13. [2023-03-06]. https://www.statnews.com/2023/02/13/chatgpt-assisted-diagnosis/

- 4. Mehdi Y. Reinventing search with a new AI-powered Microsoft Bing and Edge, your copilot for the web. Official Microsoft Blog. 2023 Feb 07. [2023-03-06]. https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web/

- 5. ChatGPT (Feb 13, 2023, release). OpenAI. [2023-03-06]. https://chat.openai.com/

- 6. Jiranantakan T. Toxicology case studies: medical preparedness & responses for chemical and radiological emergencies (slide 11). Mahidol University. 2018 Jan 21. [2023-03-06]. http://envocc.ddc.moph.go.th/uploads/OEHA2/ELM/Module%204/3.2%206P_Handout_MOPH-ExecutiveLevel_ToxicologyandOEM_2018_Thanjira_Jiranantakan.pdf